|

libfoedus-core

FOEDUS Core Library

|


Assorted Methods/Classes that are too subtle to have their own packages.

Do NOT use this package to hold hundreds of classes/methods. That's a class design failure. This package should contain only a few methods and classes, each of which should be extremely simple and unrelated to the others. Otherwise, make a package for them. Learn from the stupid history of java.util.

|

Files | |

| file | atomic_fences.hpp |

| Atomic fence methods and load/store with fences that work for both C++11/non-C++11 code. | |

| file | cacheline.hpp |

| Constants and methods related to CPU cacheline and its prefetching. | |

| file | endianness.hpp |

| A few macros and helper methods related to byte endian-ness. | |

| file | raw_atomics.hpp |

| Raw atomic operations that work for both C++11 and non-C++11 code. | |

Classes | |

| struct | foedus::assorted::Hex |

| Convenient way of writing hex integers to stream. More... | |

| struct | foedus::assorted::HexString |

| Equivalent to std::hex in case the stream doesn't support it. More... | |

| struct | foedus::assorted::Top |

| Write only first few bytes to stream. More... | |

| struct | foedus::assorted::ConstDiv |

| The pre-calculated p-m pair for optimized integer division by constant. More... | |

| class | foedus::assorted::DumbSpinlock |

| A simple spinlock using a boolean field. More... | |

| class | foedus::assorted::FixedString< MAXLEN, CHAR > |

| An embedded string object of fixed max-length, which uses no external memory. More... | |

| struct | foedus::assorted::ProbCounter |

| Implements a probabilistic counter [Morris 1978]. More... | |

| struct | foedus::assorted::ProtectedBoundary |

| A 4kb dummy data placed between separate memory regions so that we can check if/where a bogus memory access happens. More... | |

| class | foedus::assorted::UniformRandom |

| A very simple and deterministic random generator that is more aligned with standard benchmarks such as TPC-C. More... | |

| class | foedus::assorted::ZipfianRandom |

| A simple zipfian generator based off of YCSB's Java implementation. More... | |

Macros | |

| #define | SPINLOCK_WHILE(x) for (foedus::assorted::SpinlockStat __spins; (x); __spins.yield_backoff()) |

| A macro to busy-wait (spinlock) with occasional pause. More... | |

| #define | INSTANTIATE_ALL_TYPES(M) |

| A macro to explicitly instantiate the given template for all types we care about. More... | |

| #define | INSTANTIATE_ALL_NUMERIC_TYPES(M) |

| INSTANTIATE_ALL_TYPES minus std::string. More... | |

| #define | INSTANTIATE_ALL_INTEGER_PLUS_BOOL_TYPES(M) |

| INSTANTIATE_ALL_TYPES minus std::string/float/double. More... | |

| #define | INSTANTIATE_ALL_INTEGER_TYPES(M) |

| INSTANTIATE_ALL_NUMERIC_TYPES minus bool/double/float. More... | |

Functions | |

| template<typename T , uint64_t ALIGNMENT> | |

| T | foedus::assorted::align (T value) |

| Returns the smallest multiple of ALIGNMENT that is equal to or larger than the given number. More... | |

| template<typename T > | |

| T | foedus::assorted::align8 (T value) |

| 8-alignment. More... | |

| template<typename T > | |

| T | foedus::assorted::align16 (T value) |

| 16-alignment. More... | |

| template<typename T > | |

| T | foedus::assorted::align64 (T value) |

| 64-alignment. More... | |

| int64_t | foedus::assorted::int_div_ceil (int64_t dividee, int64_t dividor) |

| Efficient ceil(dividee/dividor) for integer. More... | |

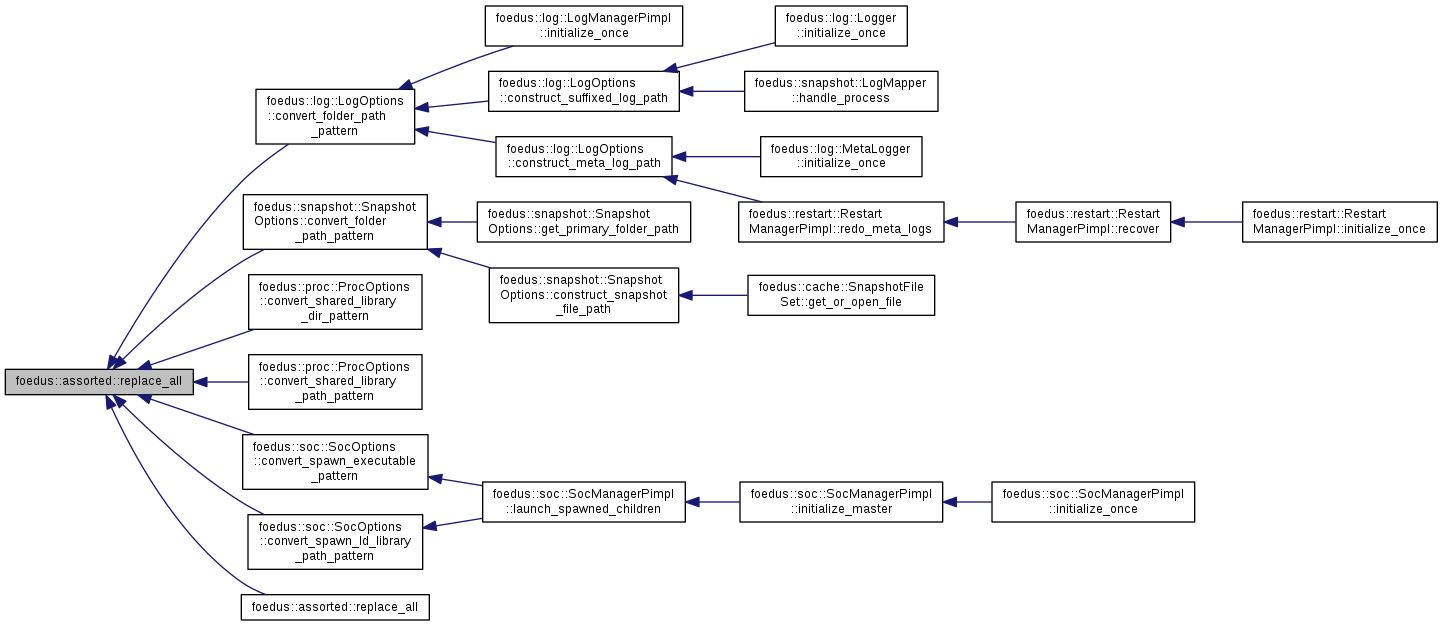

| std::string | foedus::assorted::replace_all (const std::string &target, const std::string &search, const std::string &replacement) |

| target.replaceAll(search, replacement). More... | |

| std::string | foedus::assorted::replace_all (const std::string &target, const std::string &search, int replacement) |

| target.replaceAll(search, String.valueOf(replacement)). More... | |

| std::string | foedus::assorted::os_error () |

| Thread-safe strerror(errno). More... | |

| std::string | foedus::assorted::os_error (int error_number) |

| This version receives errno. More... | |

| std::string | foedus::assorted::get_current_executable_path () |

| Returns the full path of current executable. More... | |

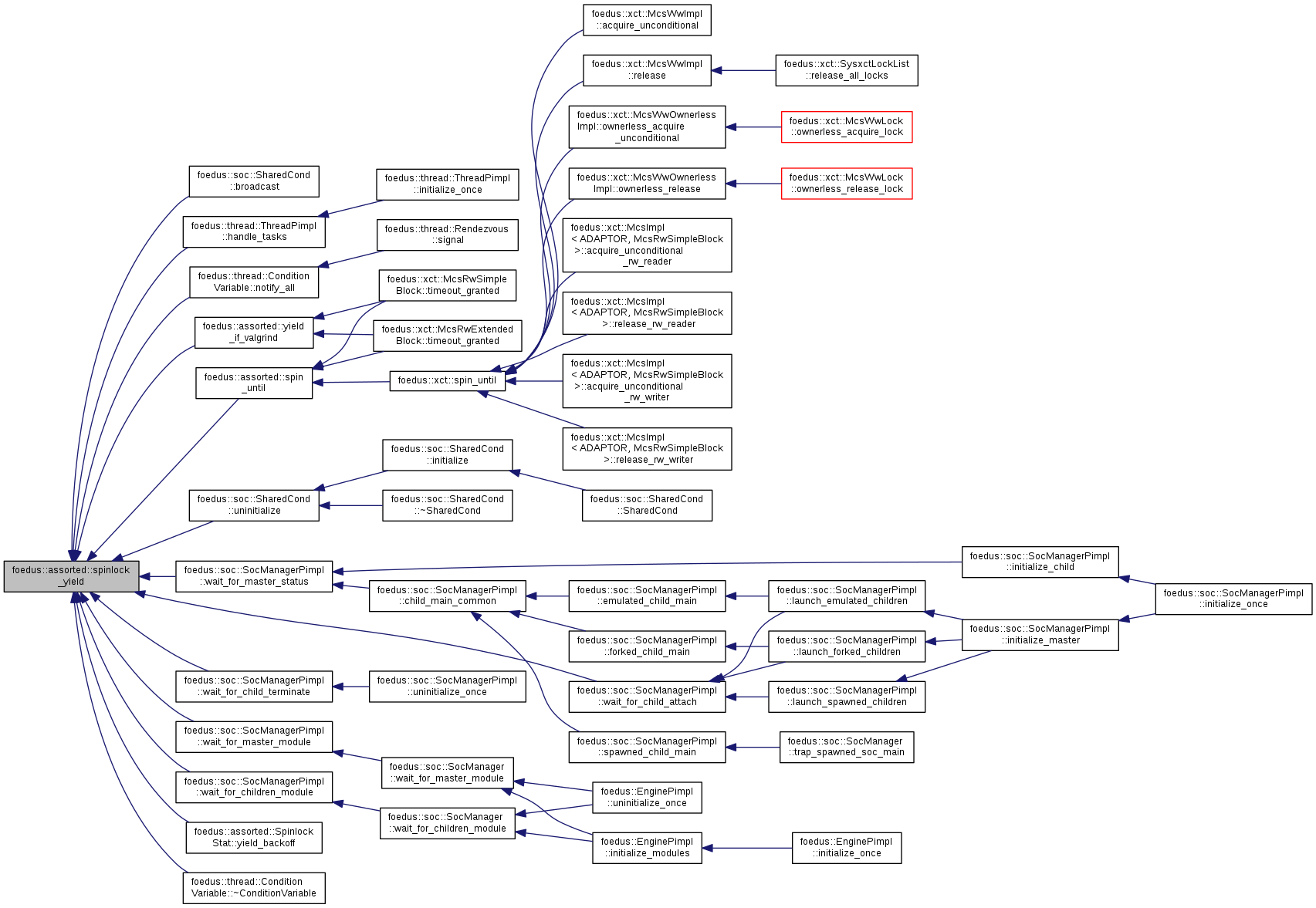

| void | foedus::assorted::spinlock_yield () |

| Invoke _mm_pause(), x86 PAUSE instruction, or something equivalent in the env. More... | |

| template<uint64_t SIZE1, uint64_t SIZE2> | |

| int | foedus::assorted::static_size_check () |

| Alternative for static_assert(sizeof(foo) == sizeof(bar), "oh crap") to display sizeof(foo). More... | |

| std::string | foedus::assorted::demangle_type_name (const char *mangled_name) |

| Demangle the given C++ type name if possible (otherwise the original string). More... | |

| template<typename T > | |

| std::string | foedus::assorted::get_pretty_type_name () |

| Returns the name of the C++ type as readable as possible. More... | |

| uint64_t | foedus::assorted::generate_almost_prime_below (uint64_t threshold) |

| Generate a prime or some number that is almost prime less than the given number. More... | |

| void | foedus::assorted::memory_fence_acquire () |

| Equivalent to std::atomic_thread_fence(std::memory_order_acquire). More... | |

| void | foedus::assorted::memory_fence_release () |

| Equivalent to std::atomic_thread_fence(std::memory_order_release). More... | |

| void | foedus::assorted::memory_fence_acq_rel () |

| Equivalent to std::atomic_thread_fence(std::memory_order_acq_rel). More... | |

| void | foedus::assorted::memory_fence_consume () |

| Equivalent to std::atomic_thread_fence(std::memory_order_consume). More... | |

| void | foedus::assorted::memory_fence_seq_cst () |

| Equivalent to std::atomic_thread_fence(std::memory_order_seq_cst). More... | |

| template<typename T > | |

| T | foedus::assorted::atomic_load_seq_cst (const T *target) |

| Atomic load with a seq_cst barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::atomic_load_acquire (const T *target) |

| Atomic load with an acquire barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::atomic_load_consume (const T *target) |

| Atomic load with a consume barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| void | foedus::assorted::atomic_store_seq_cst (T *target, T value) |

| Atomic store with a seq_cst barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| void | foedus::assorted::atomic_store_release (T *target, T value) |

| Atomic store with a release barrier for raw primitive types rather than std::atomic<T>. More... | |

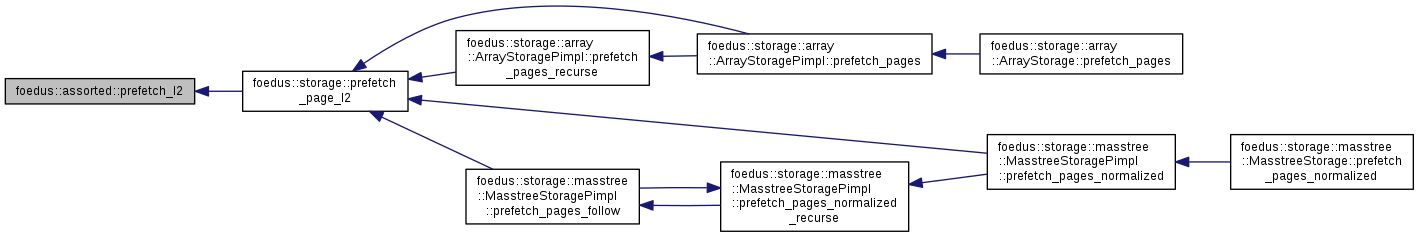

| void | foedus::assorted::prefetch_cacheline (const void *address) |

| Prefetch one cacheline to L1 cache. More... | |

| void | foedus::assorted::prefetch_cachelines (const void *address, int cacheline_count) |

| Prefetch multiple contiguous cachelines to L1 cache. More... | |

| void | foedus::assorted::prefetch_l2 (const void *address, int cacheline_count) |

| Prefetch multiple contiguous cachelines to L2/L3 cache. More... | |

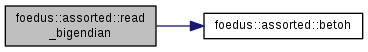

| template<typename T > | |

| T | foedus::assorted::read_bigendian (const void *be_bytes) |

| Convert a big-endian byte array to a native integer. More... | |

| template<typename T > | |

| void | foedus::assorted::write_bigendian (T host_value, void *be_bytes) |

| Convert a native integer to big-endian bytes and write them to the given address. More... | |

| int | foedus::assorted::mod_numa_node (int numa_node) |

| In order to run even on a non-numa machine or a machine with fewer sockets, we allow specifying arbitrary numa_node. More... | |

| template<typename T > | |

| bool | foedus::assorted::raw_atomic_compare_exchange_strong (T *target, T *expected, T desired) |

| Atomic CAS. More... | |

| template<typename T > | |

| bool | foedus::assorted::raw_atomic_compare_exchange_weak (T *target, T *expected, T desired) |

| Weak version of raw_atomic_compare_exchange_strong(). More... | |

| bool | foedus::assorted::raw_atomic_compare_exchange_strong_uint128 (uint64_t *ptr, const uint64_t *old_value, const uint64_t *new_value) |

| Atomic 128-bit CAS, which is not in the standard yet. More... | |

| bool | foedus::assorted::raw_atomic_compare_exchange_weak_uint128 (uint64_t *ptr, const uint64_t *old_value, const uint64_t *new_value) |

| Weak version of raw_atomic_compare_exchange_strong_uint128(). More... | |

| template<typename T > | |

| T | foedus::assorted::raw_atomic_exchange (T *target, T desired) |

| Atomic Swap for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::raw_atomic_fetch_add (T *target, T addendum) |

| Atomic fetch-add for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::raw_atomic_fetch_and_bitwise_and (T *target, T operand) |

| Atomic fetch-bitwise-and for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::raw_atomic_fetch_and_bitwise_or (T *target, T operand) |

| Atomic fetch-bitwise-or for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | foedus::assorted::raw_atomic_fetch_and_bitwise_xor (T *target, T operand) |

| Atomic fetch-bitwise-xor for raw primitive types rather than std::atomic<T> More... | |

Variables | |

| const uint16_t | foedus::assorted::kCachelineSize = 64 |

| Byte count of one cache line. More... | |

| const bool | foedus::assorted::kIsLittleEndian = false |

| A handy const boolean to tell if it's little endian. More... | |

| #define INSTANTIATE_ALL_INTEGER_PLUS_BOOL_TYPES | ( | M | ) |

INSTANTIATE_ALL_TYPES minus std::string/float/double.

Definition at line 317 of file assorted_func.hpp.

| #define INSTANTIATE_ALL_INTEGER_TYPES | ( | M | ) |

INSTANTIATE_ALL_NUMERIC_TYPES minus bool/double/float.

Definition at line 313 of file assorted_func.hpp.

| #define INSTANTIATE_ALL_NUMERIC_TYPES | ( | M | ) |

INSTANTIATE_ALL_TYPES minus std::string.

Definition at line 320 of file assorted_func.hpp.

| #define INSTANTIATE_ALL_TYPES | ( | M | ) |

A macro to explicitly instantiate the given template for all types we care about.

M is the macro to explicitly instantiate a template for the given type. This macro explicitly instantiates the template for bool, float, double, all integers (signed/unsigned), and std::string. This is useful when the definition of the template class/method involves too many details and you would rather give only its declaration in the header.

Use this as follows. In header file.

Then, in cpp file.

Remember, you should invoke this macro in cpp, not header, otherwise you will get multiple-definition errors.
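The header and cpp snippets are not reproduced on this page. As a minimal sketch of the pattern described above, the following single block shows the declaration that would live in a header, followed by the definition and explicit instantiation that would live in a cpp file. `SKETCH_INSTANTIATE_SOME_TYPES`, `EXPLICIT_INSTANTIATION_TO_TEXT`, and `to_text` are hypothetical names for illustration; the stand-in macro covers only a handful of types, whereas the real INSTANTIATE_ALL_TYPES covers all of the types listed above.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// --- What would go in the header: declaration only. ---
template <typename T>
std::string to_text(const T& value);

// --- What would go in the cpp file: definition plus explicit instantiation. ---
template <typename T>
std::string to_text(const T& value) {
  std::ostringstream out;
  out << value;
  return out.str();
}

// Hypothetical stand-in for INSTANTIATE_ALL_TYPES (assumption: the real macro
// has the same "apply M to each type" shape, but a longer type list).
#define SKETCH_INSTANTIATE_SOME_TYPES(M) \
  M(bool) M(float) M(double) M(int32_t) M(uint64_t) M(std::string)

// M receives one type and emits one explicit instantiation for it.
#define EXPLICIT_INSTANTIATION_TO_TEXT(x) \
  template std::string to_text<x>(const x& value);
SKETCH_INSTANTIATE_SOME_TYPES(EXPLICIT_INSTANTIATION_TO_TEXT)
```

Callers that include only the declaration can then link against `to_text<int32_t>`, `to_text<std::string>`, and so on, without ever seeing the template body.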

Definition at line 323 of file assorted_func.hpp.

| #define SPINLOCK_WHILE | ( | x | ) | for (foedus::assorted::SpinlockStat __spins; (x); __spins.yield_backoff()) |

A macro to busy-wait (spinlock) with occasional pause.

Use this as follows.
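The usage snippet is not reproduced on this page. The sketch below shows the general shape with a hypothetical stand-in, `SKETCH_SPINLOCK_WHILE`, built on a hypothetical `SketchSpinStat` that yields the thread every 256 spins. The real `SpinlockStat::yield_backoff()` may back off differently, and the lock functions here are illustrative, not the `DumbSpinlock` implementation.

```cpp
#include <atomic>
#include <thread>

// Hypothetical stand-in for foedus::assorted::SpinlockStat.
struct SketchSpinStat {
  unsigned spins = 0;
  void yield_backoff() {
    // Assumption: yield to the scheduler once every 256 spins.
    if ((++spins & 0xFFu) == 0) {
      std::this_thread::yield();
    }
  }
};

// Same for-loop shape as SPINLOCK_WHILE: spin while (x) is true.
#define SKETCH_SPINLOCK_WHILE(x) \
  for (SketchSpinStat sketch_spins; (x); sketch_spins.yield_backoff())

// Spins until the previous value of *locked was false, i.e. we took the lock.
inline void sketch_lock(std::atomic<bool>* locked) {
  SKETCH_SPINLOCK_WHILE(locked->exchange(true, std::memory_order_acquire)) {
    // Loop body runs once per failed attempt; usually empty.
  }
}

inline void sketch_unlock(std::atomic<bool>* locked) {
  locked->store(false, std::memory_order_release);
}
```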

Definition at line 256 of file assorted_func.hpp.

Referenced by foedus::snapshot::LogGleaner::execute(), foedus::xct::XctManagerPimpl::handle_epoch_chime(), foedus::xct::XctManagerPimpl::handle_epoch_chime_wait_grace_period(), foedus::snapshot::SnapshotManagerPimpl::handle_snapshot(), foedus::assorted::DumbSpinlock::lock(), foedus::thread::ThreadPimpl::set_thread_schedule(), and foedus::log::LogManagerPimpl::wait_until_durable().

|

inline |

Returns the smallest multiple of ALIGNMENT that is equal to or larger than the given number.

| T | integer type |

| ALIGNMENT | alignment size. must be power of two |

In other words, round up. For example, with 8-alignment, 7 becomes 8, 8 becomes 8, and 9 becomes 16.
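A minimal sketch of power-of-two round-up, assuming the usual add-then-mask technique. `align_sketch` is a hypothetical name, and it checks the power-of-two requirement with a `static_assert`, whereas the documented function references ASSERT_ND.

```cpp
#include <cstdint>

template <typename T, uint64_t ALIGNMENT>
inline T align_sketch(T value) {
  static_assert(ALIGNMENT != 0 && (ALIGNMENT & (ALIGNMENT - 1)) == 0,
                "ALIGNMENT must be a power of two");
  // Adding ALIGNMENT - 1 pushes any non-multiple past the next boundary;
  // masking the low bits then snaps the result down onto that boundary.
  return static_cast<T>((value + ALIGNMENT - 1) & ~(ALIGNMENT - 1));
}
```

With `ALIGNMENT = 8`, the mask clears the low three bits, which reproduces the 7, 8, 9 example above.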

Definition at line 44 of file assorted_func.hpp.

References ASSERT_ND.

|

inline |

|

inline |

|

inline |

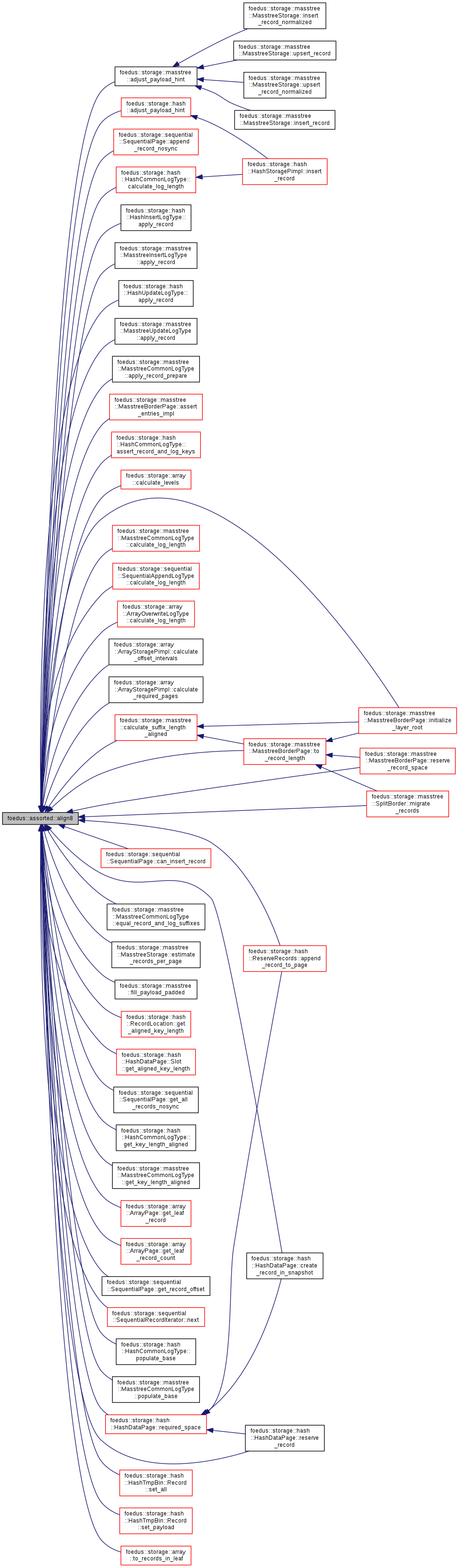

8-alignment.

Definition at line 58 of file assorted_func.hpp.

Referenced by foedus::storage::masstree::adjust_payload_hint(), foedus::storage::hash::adjust_payload_hint(), foedus::storage::sequential::SequentialPage::append_record_nosync(), foedus::storage::hash::ReserveRecords::append_record_to_page(), foedus::storage::hash::HashInsertLogType::apply_record(), foedus::storage::masstree::MasstreeInsertLogType::apply_record(), foedus::storage::hash::HashUpdateLogType::apply_record(), foedus::storage::masstree::MasstreeUpdateLogType::apply_record(), foedus::storage::masstree::MasstreeCommonLogType::apply_record_prepare(), foedus::storage::masstree::MasstreeBorderPage::assert_entries_impl(), foedus::storage::hash::HashCommonLogType::assert_record_and_log_keys(), foedus::storage::array::calculate_levels(), foedus::storage::hash::HashCommonLogType::calculate_log_length(), foedus::storage::masstree::MasstreeCommonLogType::calculate_log_length(), foedus::storage::sequential::SequentialAppendLogType::calculate_log_length(), foedus::storage::array::ArrayOverwriteLogType::calculate_log_length(), foedus::storage::array::ArrayStoragePimpl::calculate_offset_intervals(), foedus::storage::array::ArrayStoragePimpl::calculate_required_pages(), foedus::storage::masstree::calculate_suffix_length_aligned(), foedus::storage::sequential::SequentialPage::can_insert_record(), foedus::storage::hash::HashDataPage::create_record_in_snapshot(), foedus::storage::masstree::MasstreeCommonLogType::equal_record_and_log_suffixes(), foedus::storage::masstree::MasstreeStorage::estimate_records_per_page(), foedus::storage::masstree::fill_payload_padded(), foedus::storage::hash::RecordLocation::get_aligned_key_length(), foedus::storage::hash::HashDataPage::Slot::get_aligned_key_length(), foedus::storage::sequential::SequentialPage::get_all_records_nosync(), foedus::storage::hash::HashCommonLogType::get_key_length_aligned(), foedus::storage::masstree::MasstreeCommonLogType::get_key_length_aligned(), foedus::storage::array::ArrayPage::get_leaf_record(), 
foedus::storage::array::ArrayPage::get_leaf_record_count(), foedus::storage::sequential::SequentialPage::get_record_offset(), foedus::storage::masstree::MasstreeBorderPage::initialize_layer_root(), foedus::storage::masstree::SplitBorder::migrate_records(), foedus::storage::sequential::SequentialRecordIterator::next(), foedus::storage::hash::HashCommonLogType::populate_base(), foedus::storage::masstree::MasstreeCommonLogType::populate_base(), foedus::storage::hash::HashDataPage::required_space(), foedus::storage::hash::HashDataPage::reserve_record(), foedus::storage::masstree::MasstreeBorderPage::reserve_record_space(), foedus::storage::hash::HashTmpBin::Record::set_all(), foedus::storage::hash::HashTmpBin::Record::set_payload(), foedus::storage::masstree::MasstreeBorderPage::to_record_length(), and foedus::storage::array::to_records_in_leaf().

|

inline |

Atomic load with an acquire barrier for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 114 of file atomic_fences.hpp.

|

inline |

Atomic load with a consume barrier for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 125 of file atomic_fences.hpp.

|

inline |

Atomic load with a seq_cst barrier for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 103 of file atomic_fences.hpp.

|

inline |

Atomic store with a release barrier for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 145 of file atomic_fences.hpp.

|

inline |

Atomic store with a seq_cst barrier for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 135 of file atomic_fences.hpp.

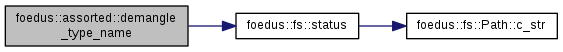

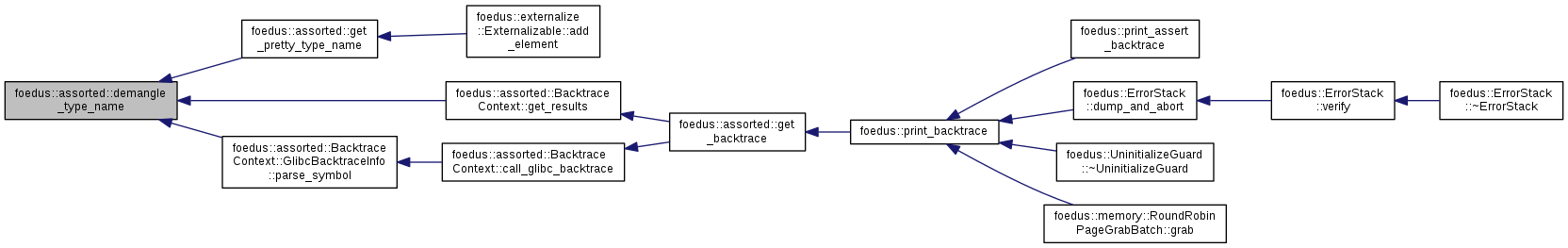

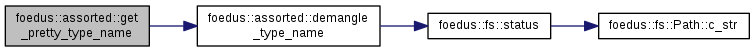

| std::string foedus::assorted::demangle_type_name | ( | const char * | mangled_name | ) |

Demangle the given C++ type name if possible (otherwise the original string).

Definition at line 151 of file assorted_func.cpp.

References foedus::fs::status().

Referenced by foedus::assorted::get_pretty_type_name(), foedus::assorted::BacktraceContext::get_results(), and foedus::assorted::BacktraceContext::GlibcBacktraceInfo::parse_symbol().

| uint64_t foedus::assorted::generate_almost_prime_below | ( | uint64_t | threshold | ) |

Generate a prime or some number that is almost prime less than the given number.

| [in] | threshold | Returns a number less than this number |

In a few places, we need a number that is a prime or at least not divisible by many numbers, for example in hashing. It doesn't have to be a real prime. Instead, we want to calculate such a number cheaply. This method uses a complex polynomial to generate a number that looks like a prime.

Definition at line 164 of file assorted_func.cpp.

Referenced by foedus::cache::determine_logical_buckets().

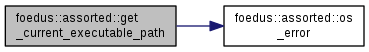

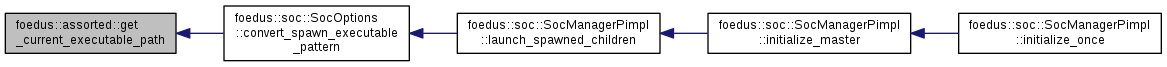

| std::string foedus::assorted::get_current_executable_path | ( | ) |

Returns the full path of current executable.

This relies on Linux /proc/self/exe. Not sure how to port it to Windows.
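A minimal Linux-only sketch of the /proc/self/exe approach, using POSIX `readlink`. `current_executable_path_sketch` is a hypothetical name. The documented function references os_error(), presumably for reporting failures; this sketch simply returns an empty string instead.

```cpp
#include <limits.h>
#include <unistd.h>

#include <string>

inline std::string current_executable_path_sketch() {
  char buf[PATH_MAX];
  // readlink() does not null-terminate, so use the returned length.
  // A path longer than PATH_MAX would be silently truncated here.
  ssize_t len = ::readlink("/proc/self/exe", buf, sizeof(buf));
  if (len <= 0) {
    return std::string();  // /proc not available or not readable
  }
  return std::string(buf, static_cast<std::string::size_type>(len));
}
```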

Definition at line 81 of file assorted_func.cpp.

References foedus::assorted::os_error().

Referenced by foedus::soc::SocOptions::convert_spawn_executable_pattern().

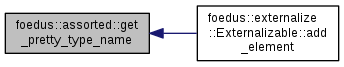

| std::string foedus::assorted::get_pretty_type_name | ( | ) |

Returns the name of the C++ type as readable as possible.

| T | the type |

Definition at line 215 of file assorted_func.hpp.

References foedus::assorted::demangle_type_name().

Referenced by foedus::externalize::Externalizable::add_element().

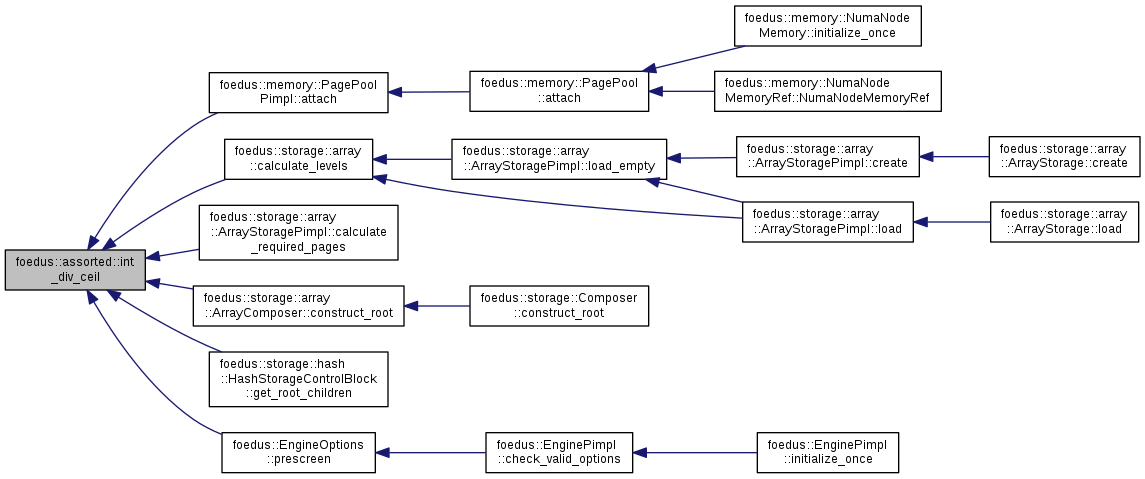

| int64_t foedus::assorted::int_div_ceil | ( | int64_t | dividee, |

| int64_t | dividor | ||

| ) |

Efficient ceil(dividee/dividor) for integer.
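A minimal sketch of integer ceiling division without floating point, assuming a non-negative `dividee` and a positive `dividor`. `int_div_ceil_sketch` is a hypothetical name and not necessarily how the library computes it.

```cpp
#include <cstdint>

inline int64_t int_div_ceil_sketch(int64_t dividee, int64_t dividor) {
  // Integer division truncates; add one when there is a remainder.
  int64_t quotient = dividee / dividor;
  return quotient + ((dividee % dividor != 0) ? 1 : 0);
}
```

A typical use is sizing: storing 10 records at 3 records per page needs `int_div_ceil_sketch(10, 3)` pages, which is 4.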

Definition at line 40 of file assorted_func.cpp.

Referenced by foedus::memory::PagePoolPimpl::attach(), foedus::storage::array::calculate_levels(), foedus::storage::array::ArrayStoragePimpl::calculate_required_pages(), foedus::storage::array::ArrayComposer::construct_root(), foedus::storage::hash::HashStorageControlBlock::get_root_children(), and foedus::EngineOptions::prescreen().

|

inline |

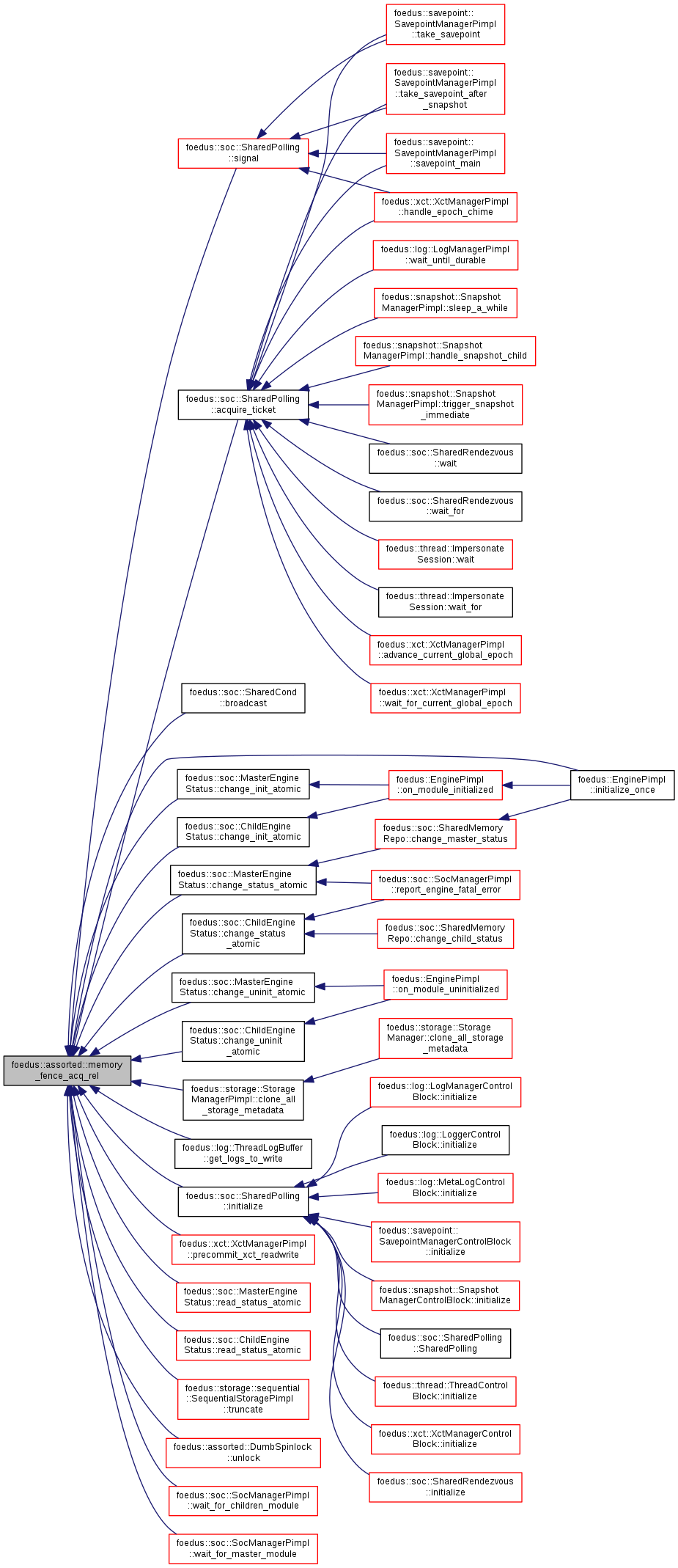

Equivalent to std::atomic_thread_fence(std::memory_order_acq_rel).

A load operation with this memory order performs the acquire operation on the affected memory location and a store operation with this memory order performs the release operation.

Definition at line 69 of file atomic_fences.hpp.

Referenced by foedus::soc::SharedPolling::acquire_ticket(), foedus::soc::SharedCond::broadcast(), foedus::soc::MasterEngineStatus::change_init_atomic(), foedus::soc::ChildEngineStatus::change_init_atomic(), foedus::soc::MasterEngineStatus::change_status_atomic(), foedus::soc::ChildEngineStatus::change_status_atomic(), foedus::soc::MasterEngineStatus::change_uninit_atomic(), foedus::soc::ChildEngineStatus::change_uninit_atomic(), foedus::storage::StorageManagerPimpl::clone_all_storage_metadata(), foedus::log::ThreadLogBuffer::get_logs_to_write(), foedus::soc::SharedPolling::initialize(), foedus::EnginePimpl::initialize_once(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), foedus::soc::MasterEngineStatus::read_status_atomic(), foedus::soc::ChildEngineStatus::read_status_atomic(), foedus::soc::SharedPolling::signal(), foedus::storage::sequential::SequentialStoragePimpl::truncate(), foedus::assorted::DumbSpinlock::unlock(), foedus::soc::SocManagerPimpl::wait_for_children_module(), and foedus::soc::SocManagerPimpl::wait_for_master_module().

|

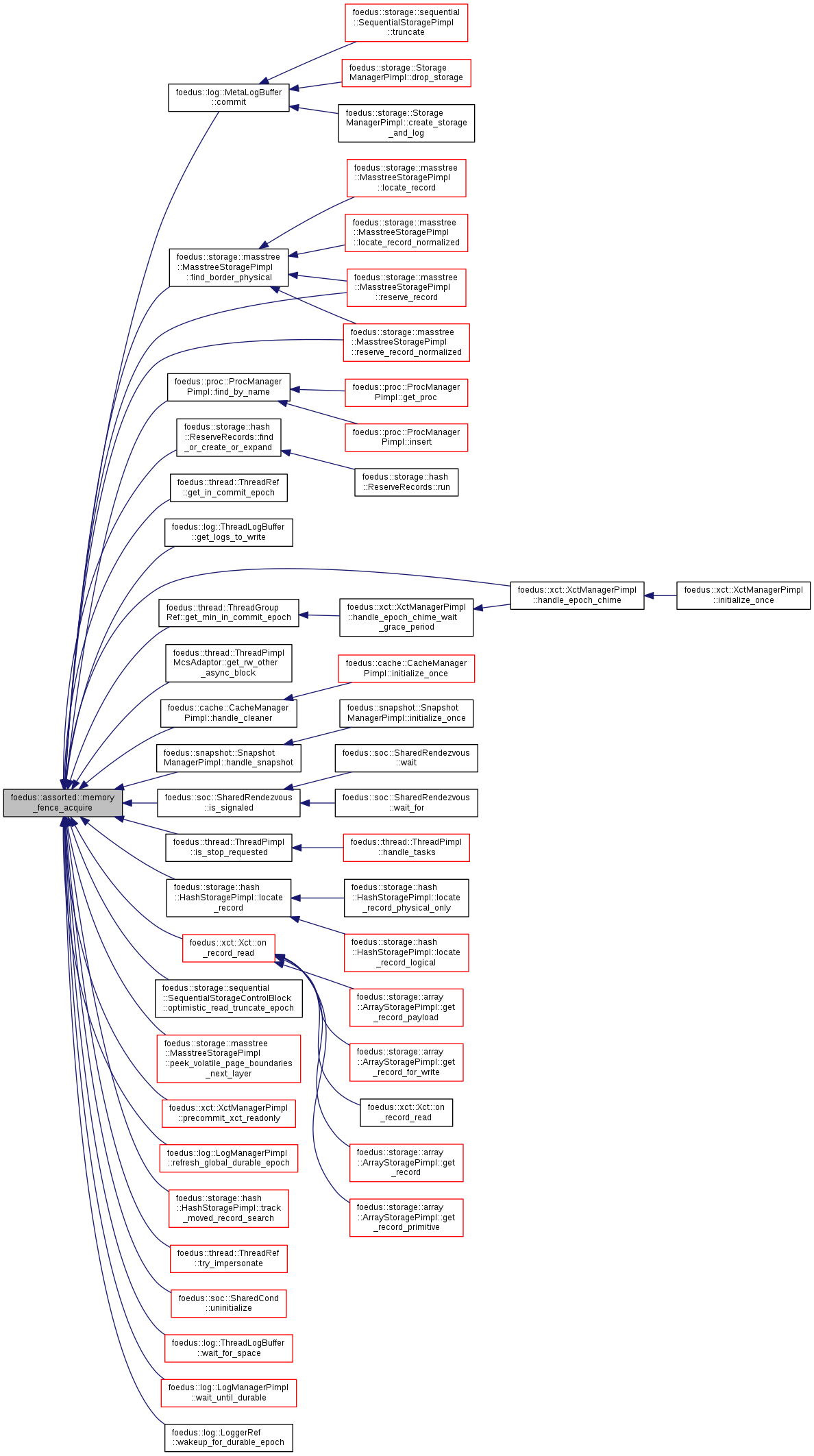

inline |

Equivalent to std::atomic_thread_fence(std::memory_order_acquire).

A load operation with this memory order performs the acquire operation on the affected memory location: prior writes made to other memory locations by the thread that did the release become visible in this thread.

Definition at line 46 of file atomic_fences.hpp.

Referenced by foedus::log::MetaLogBuffer::commit(), foedus::storage::masstree::MasstreeStoragePimpl::find_border_physical(), foedus::proc::ProcManagerPimpl::find_by_name(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::thread::ThreadRef::get_in_commit_epoch(), foedus::log::ThreadLogBuffer::get_logs_to_write(), foedus::thread::ThreadGroupRef::get_min_in_commit_epoch(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_other_async_block(), foedus::cache::CacheManagerPimpl::handle_cleaner(), foedus::xct::XctManagerPimpl::handle_epoch_chime(), foedus::snapshot::SnapshotManagerPimpl::handle_snapshot(), foedus::soc::SharedRendezvous::is_signaled(), foedus::thread::ThreadPimpl::is_stop_requested(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::xct::Xct::on_record_read(), foedus::storage::sequential::SequentialStorageControlBlock::optimistic_read_truncate_epoch(), foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer(), foedus::xct::XctManagerPimpl::precommit_xct_readonly(), foedus::log::LogManagerPimpl::refresh_global_durable_epoch(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized(), foedus::storage::hash::HashStoragePimpl::track_moved_record_search(), foedus::thread::ThreadRef::try_impersonate(), foedus::soc::SharedCond::uninitialize(), foedus::log::ThreadLogBuffer::wait_for_space(), foedus::log::LogManagerPimpl::wait_until_durable(), and foedus::log::LoggerRef::wakeup_for_durable_epoch().

|

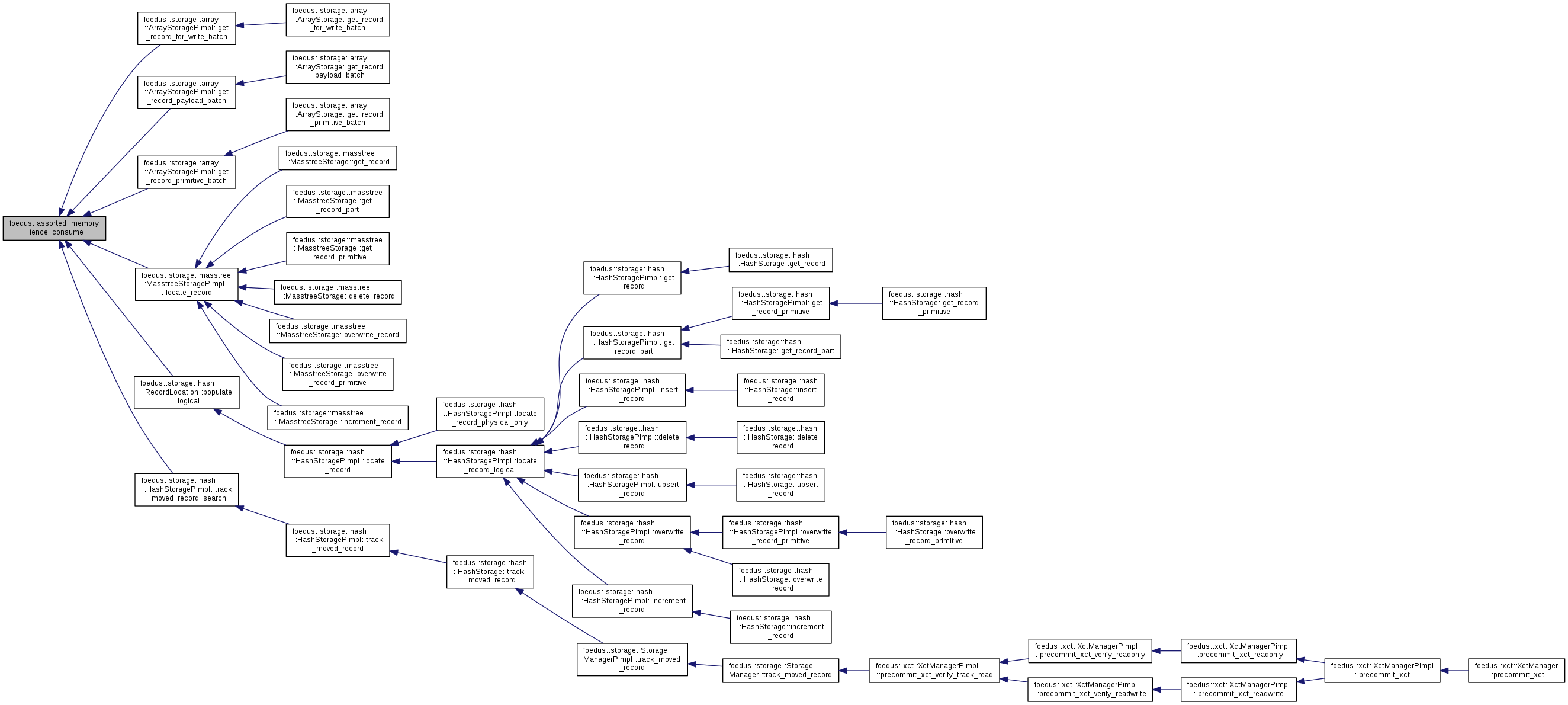

inline |

Equivalent to std::atomic_thread_fence(std::memory_order_consume).

A load operation with this memory order performs a consume operation on the affected memory location: prior writes to data-dependent memory locations made by the thread that did a release operation become visible to this thread.

Definition at line 81 of file atomic_fences.hpp.

Referenced by foedus::storage::array::ArrayStoragePimpl::get_record_for_write_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_payload_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive_batch(), foedus::storage::masstree::MasstreeStoragePimpl::locate_record(), foedus::storage::hash::RecordLocation::populate_logical(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().

|

inline |

Equivalent to std::atomic_thread_fence(std::memory_order_release).

A store operation with this memory order performs the release operation: prior writes to other memory locations become visible to the threads that do a consume or an acquire on the same location.
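As an illustration of how a release fence pairs with an acquire fence, here is a minimal sketch written directly against `std::atomic_thread_fence`, which these functions are documented as equivalent to. `Mailbox`, `publish`, and `try_consume` are hypothetical names.

```cpp
#include <atomic>

struct Mailbox {
  int payload = 0;                  // plain, non-atomic data
  std::atomic<bool> ready{false};   // flag that publishes the payload
};

// Writer: fill the payload, then fence, then raise the flag.
inline void publish(Mailbox* box, int value) {
  box->payload = value;
  std::atomic_thread_fence(std::memory_order_release);
  box->ready.store(true, std::memory_order_relaxed);
}

// Reader: observe the flag, then fence, then read the payload.
inline bool try_consume(Mailbox* box, int* out) {
  if (!box->ready.load(std::memory_order_relaxed)) {
    return false;
  }
  std::atomic_thread_fence(std::memory_order_acquire);
  *out = box->payload;
  return true;
}
```

If a reader sees `ready == true`, the two fences guarantee that it also sees the payload written before the release fence.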

Definition at line 58 of file atomic_fences.hpp.

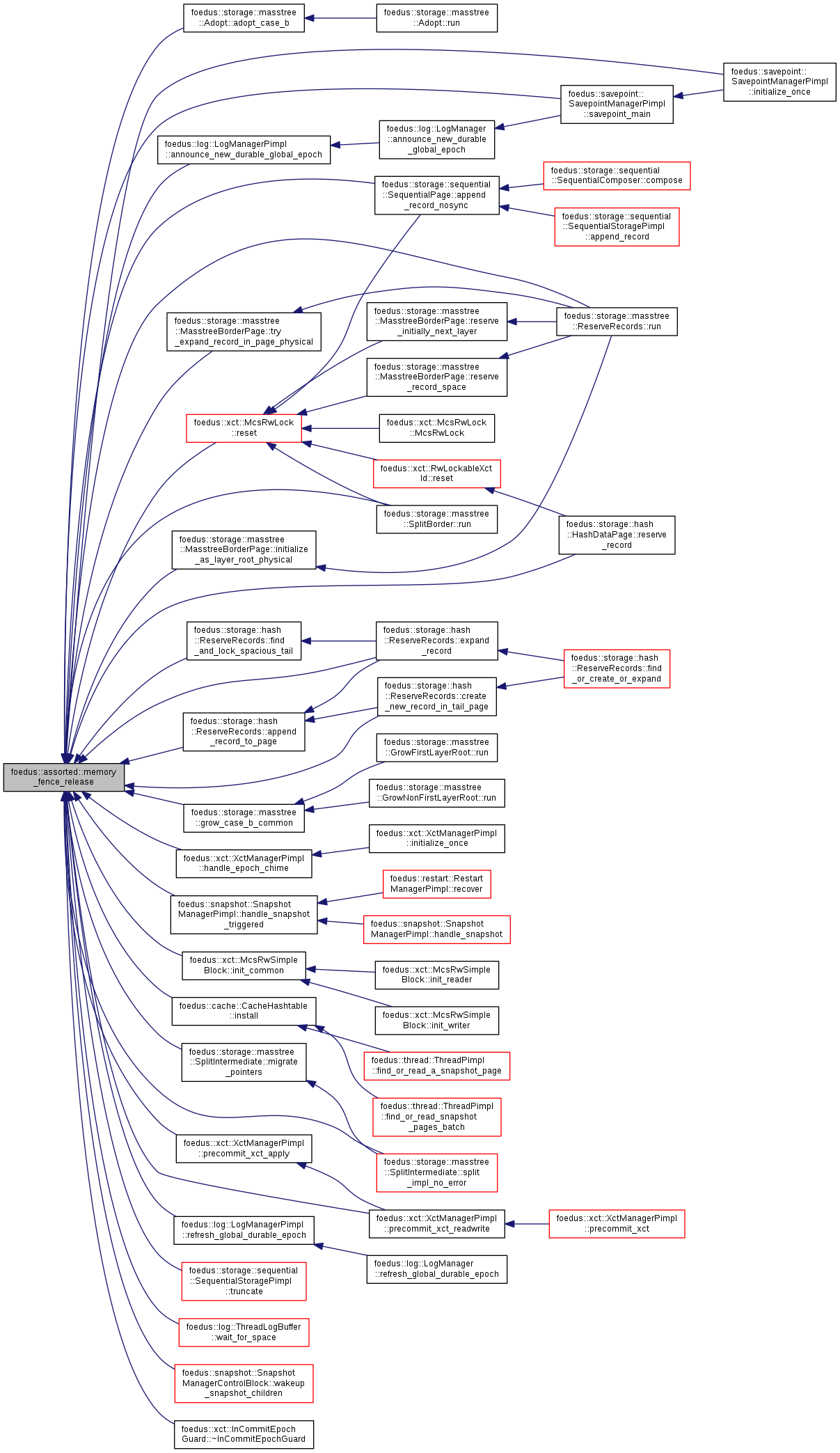

Referenced by foedus::storage::masstree::Adopt::adopt_case_b(), foedus::log::LogManagerPimpl::announce_new_durable_global_epoch(), foedus::storage::sequential::SequentialPage::append_record_nosync(), foedus::storage::hash::ReserveRecords::append_record_to_page(), foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), foedus::storage::hash::ReserveRecords::expand_record(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), foedus::storage::masstree::grow_case_b_common(), foedus::xct::XctManagerPimpl::handle_epoch_chime(), foedus::snapshot::SnapshotManagerPimpl::handle_snapshot_triggered(), foedus::xct::McsRwSimpleBlock::init_common(), foedus::storage::masstree::MasstreeBorderPage::initialize_as_layer_root_physical(), foedus::savepoint::SavepointManagerPimpl::initialize_once(), foedus::cache::CacheHashtable::install(), foedus::storage::masstree::SplitIntermediate::migrate_pointers(), foedus::xct::XctManagerPimpl::precommit_xct_apply(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), foedus::log::LogManagerPimpl::refresh_global_durable_epoch(), foedus::storage::hash::HashDataPage::reserve_record(), foedus::xct::McsRwLock::reset(), foedus::storage::masstree::SplitBorder::run(), foedus::storage::masstree::ReserveRecords::run(), foedus::savepoint::SavepointManagerPimpl::savepoint_main(), foedus::storage::masstree::SplitIntermediate::split_impl_no_error(), foedus::storage::sequential::SequentialStoragePimpl::truncate(), foedus::storage::masstree::MasstreeBorderPage::try_expand_record_in_page_physical(), foedus::log::ThreadLogBuffer::wait_for_space(), foedus::snapshot::SnapshotManagerControlBlock::wakeup_snapshot_children(), and foedus::xct::InCommitEpochGuard::~InCommitEpochGuard().

|

inline |

Equivalent to std::atomic_thread_fence(std::memory_order_seq_cst).

Same as memory_order_acq_rel, plus a single total order exists in which all threads observe all modifications in the same order.

Definition at line 92 of file atomic_fences.hpp.

|

inline |

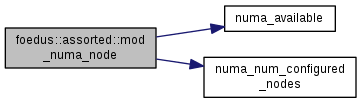

In order to run even on a non-numa machine or a machine with fewer sockets, we allow specifying arbitrary numa_node.

We just take the modulo.
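A minimal sketch of the idea, assuming a non-negative `numa_node`. The documented function queries numa_available() and numa_num_configured_nodes(); to stay self-contained, this hypothetical `mod_numa_node_sketch` takes the node count as a parameter instead, and treating "no NUMA" as node 0 is an assumption.

```cpp
inline int mod_numa_node_sketch(int numa_node, int configured_nodes) {
  if (configured_nodes <= 0) {
    return 0;  // assumption: without NUMA, everything maps to node 0
  }
  // Wrap the requested node onto the nodes that actually exist.
  return numa_node % configured_nodes;
}
```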

Definition at line 31 of file mod_numa_node.hpp.

References numa_available(), and numa_num_configured_nodes().

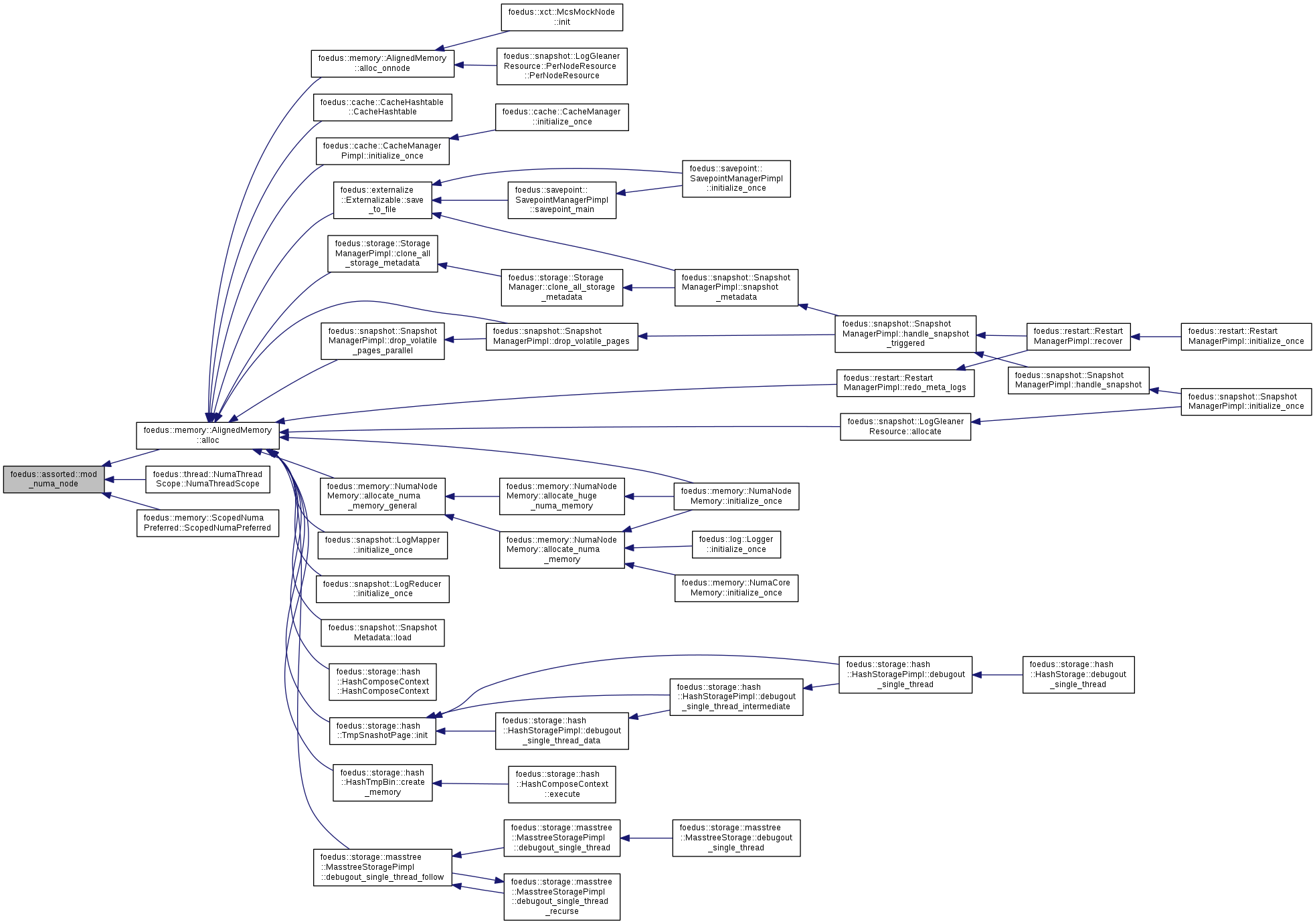

Referenced by foedus::memory::AlignedMemory::alloc(), foedus::thread::NumaThreadScope::NumaThreadScope(), and foedus::memory::ScopedNumaPreferred::ScopedNumaPreferred().

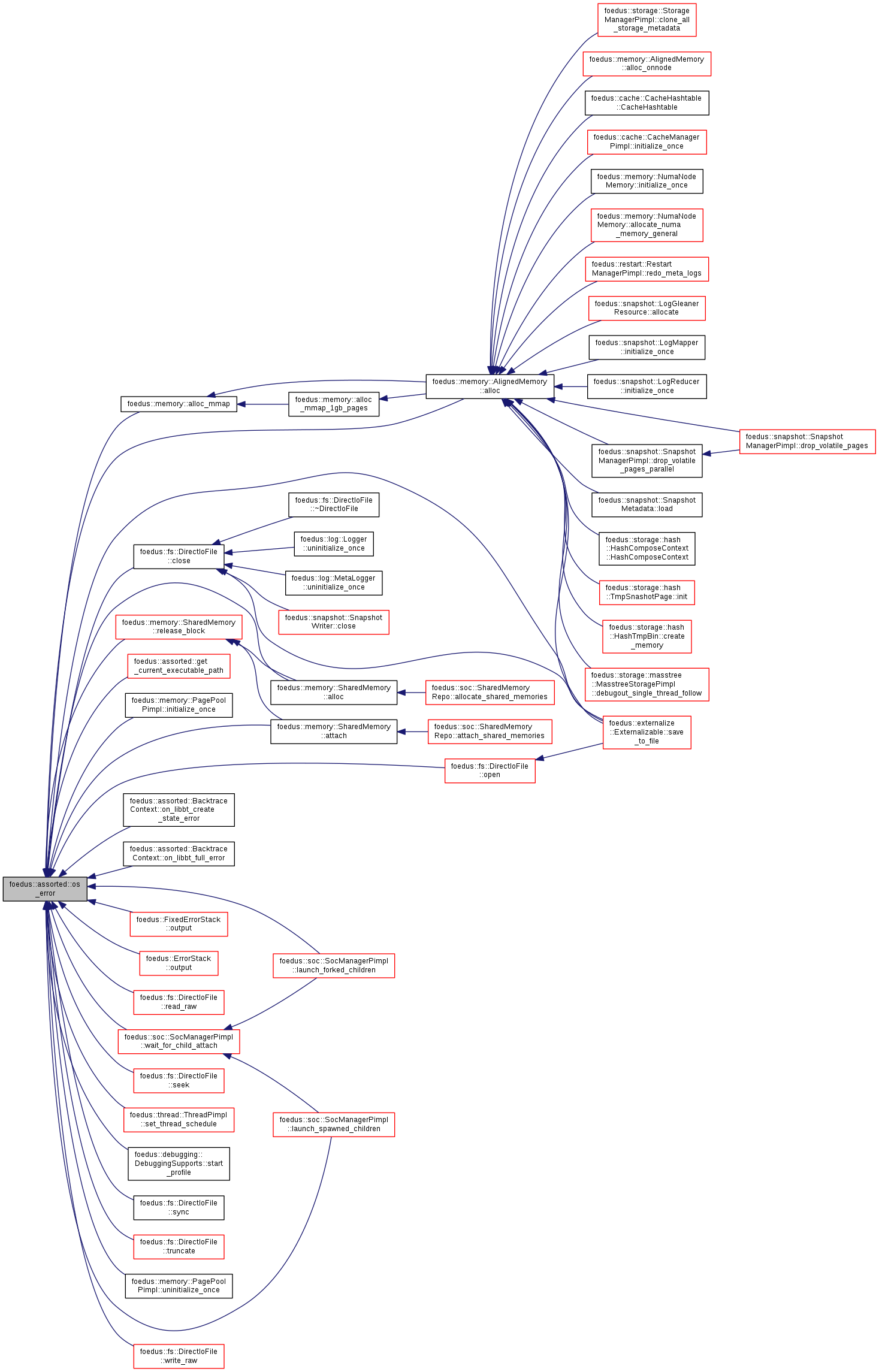

| std::string foedus::assorted::os_error | ( | ) |

Thread-safe strerror(errno).

We might do some trick here for portability, too.

Definition at line 67 of file assorted_func.cpp.

Referenced by foedus::memory::SharedMemory::alloc(), foedus::memory::AlignedMemory::alloc(), foedus::memory::alloc_mmap(), foedus::memory::SharedMemory::attach(), foedus::fs::DirectIoFile::close(), foedus::assorted::get_current_executable_path(), foedus::memory::PagePoolPimpl::initialize_once(), foedus::soc::SocManagerPimpl::launch_forked_children(), foedus::soc::SocManagerPimpl::launch_spawned_children(), foedus::assorted::BacktraceContext::on_libbt_create_state_error(), foedus::assorted::BacktraceContext::on_libbt_full_error(), foedus::fs::DirectIoFile::open(), foedus::FixedErrorStack::output(), foedus::ErrorStack::output(), foedus::fs::DirectIoFile::read_raw(), foedus::memory::SharedMemory::release_block(), foedus::externalize::Externalizable::save_to_file(), foedus::fs::DirectIoFile::seek(), foedus::thread::ThreadPimpl::set_thread_schedule(), foedus::debugging::DebuggingSupports::start_profile(), foedus::fs::DirectIoFile::sync(), foedus::fs::DirectIoFile::truncate(), foedus::memory::PagePoolPimpl::uninitialize_once(), foedus::soc::SocManagerPimpl::wait_for_child_attach(), and foedus::fs::DirectIoFile::write_raw().

| std::string foedus::assorted::os_error | ( | int | error_number | ) |

This version receives errno.

Definition at line 71 of file assorted_func.cpp.

|

inline |

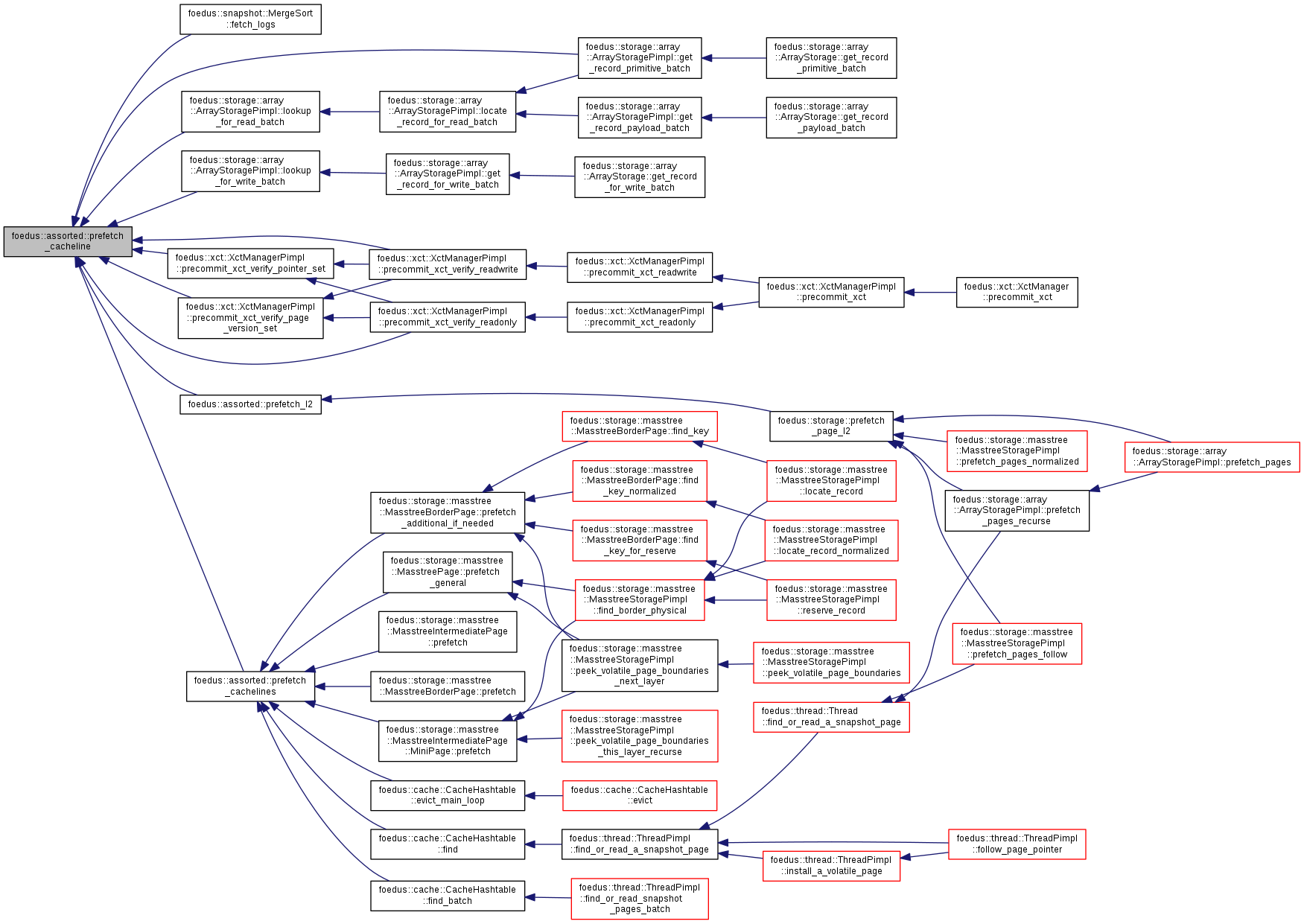

Prefetch one cacheline to L1 cache.

| [in] | address | memory address to prefetch. |

Definition at line 49 of file cacheline.hpp.

Referenced by foedus::snapshot::MergeSort::fetch_logs(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive_batch(), foedus::storage::array::ArrayStoragePimpl::lookup_for_read_batch(), foedus::storage::array::ArrayStoragePimpl::lookup_for_write_batch(), foedus::xct::XctManagerPimpl::precommit_xct_verify_page_version_set(), foedus::xct::XctManagerPimpl::precommit_xct_verify_pointer_set(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite(), foedus::assorted::prefetch_cachelines(), and foedus::assorted::prefetch_l2().

|

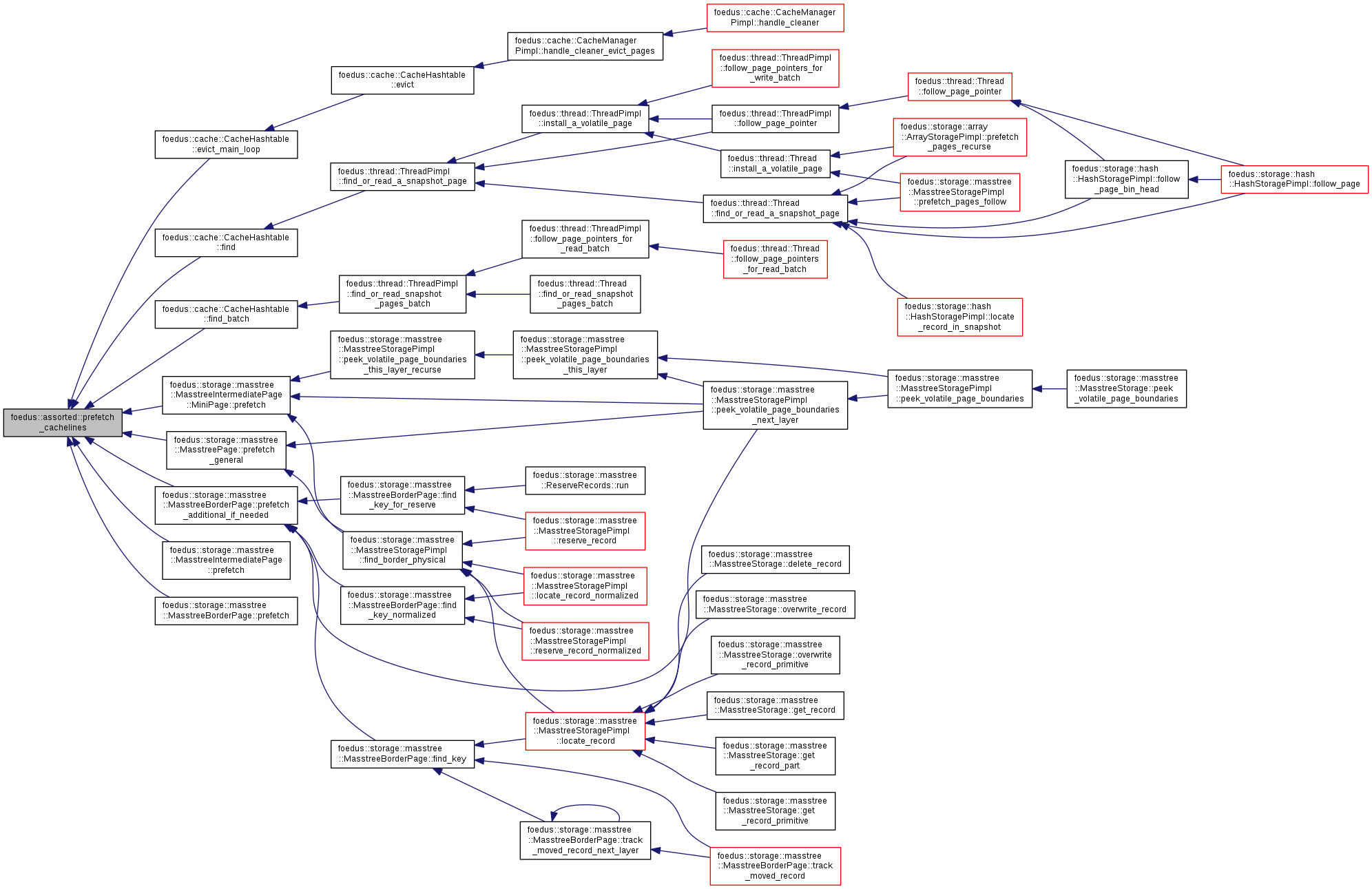

inline |

Prefetch multiple contiguous cachelines to L1 cache.

| [in] | address | memory address to prefetch. |

| [in] | cacheline_count | count of cachelines to prefetch. |

Definition at line 66 of file cacheline.hpp.

References foedus::assorted::prefetch_cacheline().

Referenced by foedus::cache::CacheHashtable::evict_main_loop(), foedus::cache::CacheHashtable::find(), foedus::cache::CacheHashtable::find_batch(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::prefetch(), foedus::storage::masstree::MasstreeIntermediatePage::prefetch(), foedus::storage::masstree::MasstreeBorderPage::prefetch(), foedus::storage::masstree::MasstreeBorderPage::prefetch_additional_if_needed(), and foedus::storage::masstree::MasstreePage::prefetch_general().

|

inline |

Prefetch multiple contiguous cachelines to L2/L3 cache.

| [in] | address | memory address to prefetch. |

| [in] | cacheline_count | count of cachelines to prefetch. |

Definition at line 79 of file cacheline.hpp.

References foedus::assorted::prefetch_cacheline().

Referenced by foedus::storage::prefetch_page_l2().

|

inline |

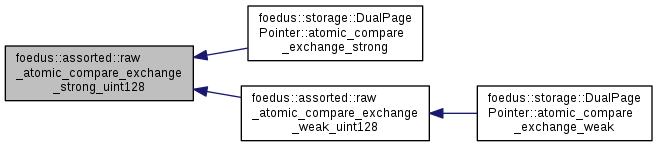

| bool foedus::assorted::raw_atomic_compare_exchange_strong_uint128 | ( | uint64_t * | ptr, |

| const uint64_t * | old_value, | ||

| const uint64_t * | new_value | ||

| ) |

Atomic 128-bit CAS, which is not in the standard yet.

| [in,out] | ptr | Points to 128-bit data. MUST BE 128-bit ALIGNED. |

| [in] | old_value | Points to 128-bit data. If ptr holds this value, we swap. Unlike std::atomic_compare_exchange_strong, this arg is const. |

| [in] | new_value | Points to 128-bit data. We change the ptr to hold this value. |

We shouldn't rely on it too much, as double-word CAS is not provided on older CPUs. Once the C++ standard adopts it, this method should go away. I will be a graybeard by then, tho.

Definition at line 25 of file raw_atomics.cpp.

Referenced by foedus::storage::DualPagePointer::atomic_compare_exchange_strong(), and foedus::assorted::raw_atomic_compare_exchange_weak_uint128().

|

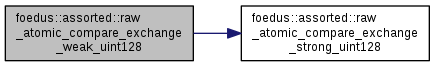

inline |

Weak version of raw_atomic_compare_exchange_strong().

| T | integer type |

Definition at line 74 of file raw_atomics.hpp.

|

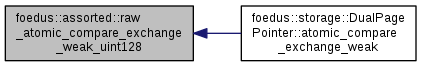

inline |

Weak version of raw_atomic_compare_exchange_strong_uint128().

Definition at line 108 of file raw_atomics.hpp.

References foedus::assorted::raw_atomic_compare_exchange_strong_uint128().

Referenced by foedus::storage::DualPagePointer::atomic_compare_exchange_weak().

|

inline |

Atomic Swap for raw primitive types rather than std::atomic<T>.

| T | integer type |

| [in,out] | target | Points to the data to be swapped. |

| [in] | desired | This value will be installed. |

This is a non-conditional swap, which always succeeds.
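A minimal sketch of an unconditional swap on a raw primitive, assuming the GCC/Clang `__atomic_exchange_n` builtin and sequentially consistent ordering. `exchange_sketch` is a hypothetical name; the library's actual implementation and memory order may differ.

```cpp
#include <cstdint>

template <typename T>
inline T exchange_sketch(T* target, T desired) {
  // Installs 'desired' and returns whatever was stored before, atomically.
  return __atomic_exchange_n(target, desired, __ATOMIC_SEQ_CST);
}
```

Unlike compare-and-swap, there is no expected value and no failure path; the caller only learns what was overwritten.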

Definition at line 130 of file raw_atomics.hpp.

|

inline |

Atomic fetch-add for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 150 of file raw_atomics.hpp.

|

inline |

Atomic fetch-bitwise-and for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 164 of file raw_atomics.hpp.

|

inline |

Atomic fetch-bitwise-or for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 174 of file raw_atomics.hpp.

|

inline |

Atomic fetch-bitwise-xor for raw primitive types rather than std::atomic<T>.

| T | integer type |

Definition at line 184 of file raw_atomics.hpp.

|

inline |

Convert a big-endian byte array to a native integer.

| [in] | be_bytes | a big-endian byte array. MUST BE ALIGNED. |

| T | type of native integer |

Almost the same as bexxtoh in endian.h, except that this is a single template function that also supports signed integers.
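A portable, byte-by-byte sketch of the conversion. `read_bigendian_sketch` is a hypothetical name. Unlike the documented function, which references ASSUME_ALIGNED and betoh(), this version does not require alignment and is not tuned for speed.

```cpp
#include <cstddef>
#include <cstdint>
#include <type_traits>

template <typename T>
inline T read_bigendian_sketch(const void* be_bytes) {
  typedef typename std::make_unsigned<T>::type U;
  const unsigned char* bytes = static_cast<const unsigned char*>(be_bytes);
  U value = 0;
  // The first byte is the most significant one in big-endian order.
  for (std::size_t i = 0; i < sizeof(T); ++i) {
    value = static_cast<U>((value << 8) | bytes[i]);
  }
  // Reinterpret as signed if T is signed (two's complement assumed).
  return static_cast<T>(value);
}
```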

Definition at line 116 of file endianness.hpp.

References ASSUME_ALIGNED, and foedus::assorted::betoh().

| std::string foedus::assorted::replace_all | ( | const std::string & | target, |

| const std::string & | search, | ||

| const std::string & | replacement | ||

| ) |

target.replaceAll(search, replacement).

Sad that std C++ doesn't provide such basic stuff. regex is overkill for this purpose.
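A minimal sketch of non-regex replace-all using `std::string::find` and `std::string::replace`, together with an int-replacement overload that delegates to it via `std::to_string`. `replace_all_sketch` is a hypothetical name, and returning the input unchanged for an empty search string is an assumption.

```cpp
#include <string>

inline std::string replace_all_sketch(const std::string& target,
                                      const std::string& search,
                                      const std::string& replacement) {
  if (search.empty()) {
    return target;  // assumption: nothing to replace
  }
  std::string result = target;
  std::string::size_type pos = 0;
  while ((pos = result.find(search, pos)) != std::string::npos) {
    result.replace(pos, search.size(), replacement);
    // Skip past the inserted text so it is never re-scanned.
    pos += replacement.size();
  }
  return result;
}

// Overload taking an integer replacement.
inline std::string replace_all_sketch(const std::string& target,
                                      const std::string& search,
                                      int replacement) {
  return replace_all_sketch(target, search, std::to_string(replacement));
}
```

The callers listed below are all path-pattern converters, so a call shaped like `replace_all_sketch("log_$NODE$", "$NODE$", 3)` is the kind of use this serves; the `$NODE$` placeholder here is only illustrative.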

Definition at line 45 of file assorted_func.cpp.

Referenced by foedus::log::LogOptions::convert_folder_path_pattern(), foedus::snapshot::SnapshotOptions::convert_folder_path_pattern(), foedus::proc::ProcOptions::convert_shared_library_dir_pattern(), foedus::proc::ProcOptions::convert_shared_library_path_pattern(), foedus::soc::SocOptions::convert_spawn_executable_pattern(), foedus::soc::SocOptions::convert_spawn_ld_library_path_pattern(), and foedus::assorted::replace_all().

| std::string foedus::assorted::replace_all | ( | const std::string & | target, |

| const std::string & | search, | ||

| int | replacement | ||

| ) |

target.replaceAll(search, String.valueOf(replacement)).

Definition at line 59 of file assorted_func.cpp.

References foedus::assorted::replace_all().

| void foedus::assorted::spinlock_yield | ( | ) |

Invoke _mm_pause(), x86 PAUSE instruction, or something equivalent in the env.

Invoke this where you do a spinlock. It especially helps valgrind. Probably you should invoke this after a few spins.

Definition at line 193 of file assorted_func.cpp.

Referenced by foedus::soc::SharedCond::broadcast(), foedus::thread::ThreadPimpl::handle_tasks(), foedus::thread::ConditionVariable::notify_all(), foedus::assorted::spin_until(), foedus::soc::SharedCond::uninitialize(), foedus::soc::SocManagerPimpl::wait_for_child_attach(), foedus::soc::SocManagerPimpl::wait_for_child_terminate(), foedus::soc::SocManagerPimpl::wait_for_children_module(), foedus::soc::SocManagerPimpl::wait_for_master_module(), foedus::soc::SocManagerPimpl::wait_for_master_status(), foedus::assorted::SpinlockStat::yield_backoff(), foedus::assorted::yield_if_valgrind(), and foedus::thread::ConditionVariable::~ConditionVariable().

|

inline |

Alternative for static_assert(sizeof(foo) == sizeof(bar), "oh crap") to display sizeof(foo).

Use it like this:
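The usage snippet is not reproduced on this page. As a minimal sketch of the idea, the two sizes are passed as template arguments, so when the check fails the compiler diagnostic names the instantiation, for example `static_size_check_sketch<12, 8>`, and thereby reveals the actual `sizeof`. `static_size_check_sketch`, `PairOfInts`, and `kPairSizeCheck` are hypothetical names.

```cpp
#include <cstdint>

template <uint64_t SIZE1, uint64_t SIZE2>
inline int static_size_check_sketch() {
  static_assert(SIZE1 == SIZE2,
                "Static size check failed. See the template arguments in the "
                "compiler error for the actual sizes.");
  return 0;
}

struct PairOfInts {
  int32_t a;
  int32_t b;
};

// Instantiating the template at namespace scope triggers the check at
// compile time; the returned value itself is irrelevant.
const int kPairSizeCheck = static_size_check_sketch<sizeof(PairOfInts), 8>();
```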

Definition at line 195 of file assorted_func.hpp.

References CXX11_STATIC_ASSERT.

|

inline |

Convert a native integer to big-endian bytes and write them to the given address.

| [in] | host_value | a native integer. |

| [out] | be_bytes | address to write out big endian bytes. MUST BE ALIGNED. |

| T | type of native integer |
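A portable, byte-by-byte sketch of the reverse conversion. `write_bigendian_sketch` is a hypothetical name. Unlike the documented function, it does not require the destination to be aligned and is not tuned for speed.

```cpp
#include <cstddef>
#include <cstdint>
#include <type_traits>

template <typename T>
inline void write_bigendian_sketch(T host_value, void* be_bytes) {
  typedef typename std::make_unsigned<T>::type U;
  unsigned char* bytes = static_cast<unsigned char*>(be_bytes);
  U value = static_cast<U>(host_value);
  // Emit the least significant byte last, so bytes[0] is most significant.
  for (std::size_t i = 0; i < sizeof(T); ++i) {
    bytes[sizeof(T) - 1 - i] = static_cast<unsigned char>(value & 0xFF);
    value = static_cast<U>(value >> 8);
  }
}
```

One property worth noting: for unsigned integers of the same width, comparing the big-endian byte strings with `memcmp` gives the same order as comparing the numbers.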

Definition at line 131 of file endianness.hpp.

References ASSUME_ALIGNED.

| const uint16_t foedus::assorted::kCachelineSize = 64 |

Byte count of one cache line.

Several places use this to avoid false sharing of cache lines, for example separating two variables that are frequently accessed with atomic requirements.
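A minimal sketch of the false-sharing use case, assuming C++11 `alignas`. `kCachelineSizeSketch` mirrors the documented value of 64, and `PaddedCounters` is a hypothetical struct, not one taken from the library.

```cpp
#include <cstddef>
#include <cstdint>

const uint16_t kCachelineSizeSketch = 64;  // mirrors kCachelineSize

// Each counter starts on its own cache line, so a thread hammering
// 'produced' does not invalidate the line that holds 'consumed'.
struct PaddedCounters {
  alignas(kCachelineSizeSketch) uint64_t produced;
  alignas(kCachelineSizeSketch) uint64_t consumed;
};

static_assert(offsetof(PaddedCounters, consumed) == 64,
              "the two counters must sit on different cache lines");
static_assert(sizeof(PaddedCounters) == 128,
              "the struct occupies two full cache lines");
```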

Definition at line 42 of file cacheline.hpp.

Referenced by foedus::cache::CacheHashtable::evict_main_loop(), foedus::storage::masstree::MasstreeBorderPage::prefetch_additional_if_needed(), and foedus::storage::prefetch_page_l2().

| const bool foedus::assorted::kIsLittleEndian = false |

A handy const boolean to tell if it's little endian.

Most compilers would resolve "if (kIsLittleEndian) ..." at compile time, so no overhead. Compared to writing ifdef each time, this is handier. However, there are a few cases where we have to write ifdef (class definition etc).

Definition at line 54 of file endianness.hpp.