libfoedus-core

FOEDUS Core Library

Assorted methods and classes that are too small to warrant their own packages.

Do NOT use this package to hold hundreds of classes/methods. That's a class design failure. This package should contain only a few methods and classes, each of which should be extremely simple and unrelated to each other. Otherwise, make a package for them. Learn from the stupid history of java.util.

Classes

| Kind | Name | Description |
|---|---|---|
| struct | BacktraceContext | |
| struct | ConstDiv | The pre-calculated p-m pair for optimized integer division by a constant. |
| class | DumbSpinlock | A simple spinlock using a boolean field. |
| class | FixedString | An embedded string object of fixed max-length, which uses no external memory. |
| struct | Hex | Convenient way of writing hex integers to a stream. |
| struct | HexString | Equivalent to std::hex in case the stream doesn't support it. |
| struct | ProbCounter | Implements a probabilistic counter [Morris 1978]. |
| struct | ProtectedBoundary | A 4 KB dummy block placed between separate memory regions so that we can check if/where a bogus memory access happens. |
| struct | SpinlockStat | Helper for SPINLOCK_WHILE. |
| struct | Top | Writes only the first few bytes to a stream. |
| class | UniformRandom | A very simple and deterministic random generator that is more aligned with standard benchmarks such as TPC-C. |
| class | ZipfianRandom | A simple Zipfian generator based on YCSB's Java implementation. |
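DumbSpinlock is described only as "a simple spinlock using a boolean field." The following is a minimal sketch of that idea, not the FOEDUS class: `BoolSpinlockSketch` and `pause_hint()` are hypothetical names, the flag is a `std::atomic<bool>` with acquire/release ordering, and `pause_hint()` stands in for spinlock_yield() by issuing `_mm_pause()` on x86 and `std::this_thread::yield()` elsewhere.

```cpp
#include <atomic>
#include <thread>
#include <vector>
#if defined(__x86_64__) || defined(__i386__)
#include <immintrin.h>
#endif

// Hypothetical stand-in for spinlock_yield(): PAUSE on x86, otherwise yield the thread.
inline void pause_hint() {
#if defined(__x86_64__) || defined(__i386__)
  _mm_pause();
#else
  std::this_thread::yield();
#endif
}

// Hypothetical sketch of a spinlock built on a single boolean field.
class BoolSpinlockSketch {
 public:
  void lock() {
    while (true) {
      // Try to flip false -> true; acquire ordering when we win the lock.
      bool expected = false;
      if (locked_.compare_exchange_weak(expected, true, std::memory_order_acquire,
                                        std::memory_order_relaxed)) {
        return;
      }
      // Wait with plain loads until the lock looks free, so we do not
      // hammer the cacheline with CAS attempts (test-and-test-and-set).
      while (locked_.load(std::memory_order_relaxed)) {
        pause_hint();
      }
    }
  }

  // Release ordering publishes writes made inside the critical section.
  void unlock() { locked_.store(false, std::memory_order_release); }

 private:
  std::atomic<bool> locked_{false};
};
```

Spinning on a relaxed load before retrying the CAS keeps contending threads reading a shared cacheline instead of repeatedly acquiring it exclusively; whether DumbSpinlock does the same is not stated on this page.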

Functions | |

| template<typename T , uint64_t ALIGNMENT> | |

| T | align (T value) |

| Returns the smallest multiply of ALIGNMENT that is equal or larger than the given number. More... | |

| template<typename T > | |

| T | align8 (T value) |

| 8-alignment. More... | |

| template<typename T > | |

| T | align16 (T value) |

| 16-alignment. More... | |

| template<typename T > | |

| T | align64 (T value) |

| 64-alignment. More... | |

| int64_t | int_div_ceil (int64_t dividee, int64_t dividor) |

| Efficient ceil(dividee/dividor) for integer. More... | |

| std::string | replace_all (const std::string &target, const std::string &search, const std::string &replacement) |

| target.replaceAll(search, replacement). More... | |

| std::string | replace_all (const std::string &target, const std::string &search, int replacement) |

| target.replaceAll(search, String.valueOf(replacement)). More... | |

| std::string | os_error () |

| Thread-safe strerror(errno). More... | |

| std::string | os_error (int error_number) |

| This version receives errno. More... | |

| std::string | get_current_executable_path () |

| Returns the full path of current executable. More... | |

| void | spinlock_yield () |

| Invoke _mm_pause(), x86 PAUSE instruction, or something equivalent in the env. More... | |

| template<uint64_t SIZE1, uint64_t SIZE2> | |

| int | static_size_check () |

| Alternative for static_assert(sizeof(foo) == sizeof(bar), "oh crap") to display sizeof(foo). More... | |

| std::string | demangle_type_name (const char *mangled_name) |

| Demangle the given C++ type name if possible (otherwise the original string). More... | |

| template<typename T > | |

| std::string | get_pretty_type_name () |

| Returns the name of the C++ type as readable as possible. More... | |

| uint64_t | generate_almost_prime_below (uint64_t threshold) |

| Generate a prime or some number that is almost prime less than the given number. More... | |

| void | memory_fence_acquire () |

| Equivalent to std::atomic_thread_fence(std::memory_order_acquire). More... | |

| void | memory_fence_release () |

| Equivalent to std::atomic_thread_fence(std::memory_order_release). More... | |

| void | memory_fence_acq_rel () |

| Equivalent to std::atomic_thread_fence(std::memory_order_acq_rel). More... | |

| void | memory_fence_consume () |

| Equivalent to std::atomic_thread_fence(std::memory_order_consume). More... | |

| void | memory_fence_seq_cst () |

| Equivalent to std::atomic_thread_fence(std::memory_order_seq_cst). More... | |

| template<typename T > | |

| T | atomic_load_seq_cst (const T *target) |

| Atomic load with a seq_cst barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | atomic_load_acquire (const T *target) |

| Atomic load with an acquire barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | atomic_load_consume (const T *target) |

| Atomic load with a consume barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| void | atomic_store_seq_cst (T *target, T value) |

| Atomic store with a seq_cst barrier for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| void | atomic_store_release (T *target, T value) |

| Atomic store with a release barrier for raw primitive types rather than std::atomic<T>. More... | |

| void | prefetch_cacheline (const void *address) |

| Prefetch one cacheline to L1 cache. More... | |

| void | prefetch_cachelines (const void *address, int cacheline_count) |

| Prefetch multiple contiguous cachelines to L1 cache. More... | |

| void | prefetch_l2 (const void *address, int cacheline_count) |

| Prefetch multiple contiguous cachelines to L2/L3 cache. More... | |

| template<typename T > | |

| T | betoh (T be_value) |

| template<> | |

| uint64_t | betoh< uint64_t > (uint64_t be_value) |

| template<> | |

| uint32_t | betoh< uint32_t > (uint32_t be_value) |

| template<> | |

| uint16_t | betoh< uint16_t > (uint16_t be_value) |

| template<> | |

| uint8_t | betoh< uint8_t > (uint8_t be_value) |

| template<> | |

| int64_t | betoh< int64_t > (int64_t be_value) |

| template<> | |

| int32_t | betoh< int32_t > (int32_t be_value) |

| template<> | |

| int16_t | betoh< int16_t > (int16_t be_value) |

| template<> | |

| int8_t | betoh< int8_t > (int8_t be_value) |

| template<typename T > | |

| T | htobe (T host_value) |

| template<> | |

| uint64_t | htobe< uint64_t > (uint64_t host_value) |

| template<> | |

| uint32_t | htobe< uint32_t > (uint32_t host_value) |

| template<> | |

| uint16_t | htobe< uint16_t > (uint16_t host_value) |

| template<> | |

| uint8_t | htobe< uint8_t > (uint8_t host_value) |

| template<> | |

| int64_t | htobe< int64_t > (int64_t host_value) |

| template<> | |

| int32_t | htobe< int32_t > (int32_t host_value) |

| template<> | |

| int16_t | htobe< int16_t > (int16_t host_value) |

| template<> | |

| int8_t | htobe< int8_t > (int8_t host_value) |

| template<typename T > | |

| T | read_bigendian (const void *be_bytes) |

| Convert a big-endian byte array to a native integer. More... | |

| template<typename T > | |

| void | write_bigendian (T host_value, void *be_bytes) |

| Convert a native integer to big-endian bytes and write them to the given address. More... | |

| int | mod_numa_node (int numa_node) |

| In order to run even on a non-numa machine or a machine with fewer sockets, we allow specifying arbitrary numa_node. More... | |

| template<typename T > | |

| bool | raw_atomic_compare_exchange_strong (T *target, T *expected, T desired) |

| Atomic CAS. More... | |

| template<typename T > | |

| bool | raw_atomic_compare_exchange_weak (T *target, T *expected, T desired) |

| Weak version of raw_atomic_compare_exchange_strong(). More... | |

| bool | raw_atomic_compare_exchange_strong_uint128 (uint64_t *ptr, const uint64_t *old_value, const uint64_t *new_value) |

| Atomic 128-bit CAS, which is not in the standard yet. More... | |

| bool | raw_atomic_compare_exchange_weak_uint128 (uint64_t *ptr, const uint64_t *old_value, const uint64_t *new_value) |

| Weak version of raw_atomic_compare_exchange_strong_uint128(). More... | |

| template<typename T > | |

| T | raw_atomic_exchange (T *target, T desired) |

| Atomic Swap for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | raw_atomic_fetch_add (T *target, T addendum) |

| Atomic fetch-add for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | raw_atomic_fetch_and_bitwise_and (T *target, T operand) |

| Atomic fetch-bitwise-and for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | raw_atomic_fetch_and_bitwise_or (T *target, T operand) |

| Atomic fetch-bitwise-or for raw primitive types rather than std::atomic<T>. More... | |

| template<typename T > | |

| T | raw_atomic_fetch_and_bitwise_xor (T *target, T operand) |

| Atomic fetch-bitwise-xor for raw primitive types rather than std::atomic<T> More... | |

| std::vector< std::string > | get_backtrace (bool rich=true) |

| Returns the backtrace information of the current stack. More... | |

| bool | is_running_on_valgrind () |

| template<typename COND > | |

| uint64_t | spin_until (COND spin_until_cond) |

| Spin locally until the given condition returns true. More... | |

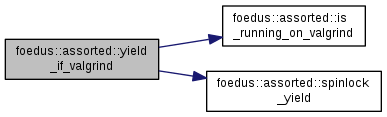

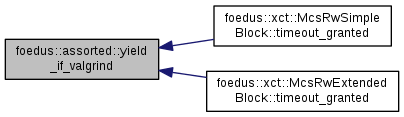

| void | yield_if_valgrind () |

| Use this in your while as a stop-gap before switching to spin_until(). More... | |

| std::ostream & | operator<< (std::ostream &o, const Hex &v) |

| std::ostream & | operator<< (std::ostream &o, const HexString &v) |

| std::ostream & | operator<< (std::ostream &o, const Top &v) |

| void | libbt_create_state_error (void *data, const char *msg, int errnum) |

| void | libbt_full_error (void *data, const char *msg, int errnum) |

| int | libbt_full (void *data, uintptr_t pc, const char *filename, int lineno, const char *function) |
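The table gives only one-line summaries of align() and int_div_ceil(). As a sketch of what they compute, here is one common way to implement them, assuming ALIGNMENT is a power of two and that int_div_ceil() is called with a non-negative dividee and a positive dividor (this page does not say how other inputs are handled). The `*_sketch` names are hypothetical and are not the FOEDUS implementations.

```cpp
#include <cstdint>

// Hypothetical sketch: smallest multiple of ALIGNMENT that is >= value.
// Assumes ALIGNMENT is a power of two, so rounding up is "add ALIGNMENT-1, clear low bits".
template <typename T, uint64_t ALIGNMENT>
T align_sketch(T value) {
  static_assert(ALIGNMENT != 0 && (ALIGNMENT & (ALIGNMENT - 1)) == 0,
                "ALIGNMENT must be a power of two");
  return static_cast<T>((value + static_cast<T>(ALIGNMENT - 1)) &
                        ~static_cast<T>(ALIGNMENT - 1));
}

// Hypothetical counterparts of align8() and align64().
template <typename T>
T align8_sketch(T value) { return align_sketch<T, 8>(value); }
template <typename T>
T align64_sketch(T value) { return align_sketch<T, 64>(value); }

// Hypothetical sketch of ceil(dividee / dividor) without floating point.
// Assumes dividee >= 0 and dividor > 0; avoids the overflow of (dividee + dividor - 1).
inline int64_t int_div_ceil_sketch(int64_t dividee, int64_t dividor) {
  return dividee / dividor + (dividee % dividor != 0 ? 1 : 0);
}
```

For example, `align8_sketch<uint32_t>(13)` rounds up to 16, while values already on the boundary are returned unchanged.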

Variables

| Type | Name | Value | Description |
|---|---|---|---|
| `const uint16_t` | `kCachelineSize` | `64` | Byte count of one cache line. |
| `const uint32_t` | `kPower2To31` | `1U << 31` | |
| `const uint64_t` | `kPower2To63` | `1ULL << 63` | |
| `const uint32_t` | `kFull32Bits` | `0xFFFFFFFF` | |
| `const uint32_t` | `kFull31Bits` | `0x7FFFFFFF` | |
| `const uint64_t` | `kFull64Bits` | `0xFFFFFFFFFFFFFFFFULL` | |
| `const uint64_t` | `kFull63Bits` | `0x7FFFFFFFFFFFFFFFULL` | |
| `const bool` | `kIsLittleEndian` | `false` | A handy constant telling whether the host is little-endian. |
| `const uint64_t` | `kProtectedBoundaryMagicWord` | `0x42a6292680d7ce36ULL` | |
| `const char *` | `kUpperHexChars` | `"0123456789ABCDEF"` | |
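The raw_atomic_* and atomic_load_*/atomic_store_* functions in the Functions table operate on plain primitives rather than `std::atomic<T>`. This page does not show how they are implemented. One way to get that behavior on GCC and Clang is the `__atomic` builtins, as in the sketch below; the `*_sketch` names are hypothetical, and the seq_cst ordering chosen for the CAS and fetch-add is an assumption.

```cpp
#include <cstdint>

// Hypothetical sketches using GCC/Clang __atomic builtins on plain (non-std::atomic) memory.
template <typename T>
T atomic_load_acquire_sketch(const T* target) {
  return __atomic_load_n(target, __ATOMIC_ACQUIRE);
}

template <typename T>
void atomic_store_release_sketch(T* target, T value) {
  __atomic_store_n(target, value, __ATOMIC_RELEASE);
}

// Strong CAS (assumed seq_cst). With this builtin, on failure *expected is
// overwritten with the value currently stored in *target.
template <typename T>
bool raw_atomic_compare_exchange_strong_sketch(T* target, T* expected, T desired) {
  return __atomic_compare_exchange_n(target, expected, desired, /*weak=*/false,
                                     __ATOMIC_SEQ_CST, __ATOMIC_SEQ_CST);
}

// Fetch-add (assumed seq_cst): returns the value held before the addition.
template <typename T>
T raw_atomic_fetch_add_sketch(T* target, T addendum) {
  return __atomic_fetch_add(target, addendum, __ATOMIC_SEQ_CST);
}
```

Because the operands are plain `T*`, these helpers can be applied to fields inside packed, memory-mapped structures where wrapping each field in `std::atomic<T>` is not an option.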

`T foedus::assorted::betoh(T be_value)`

The eight inline specializations of betoh listed above are defined at lines 65, 66, 67, 68, 73, 76, 79, and 82 of file endianness.hpp.
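betoh and htobe convert between big-endian and host byte order, and read_bigendian()/write_bigendian() apply that to byte arrays. As a hedged sketch (not the contents of endianness.hpp), a portable way to get the same effect is to assemble the value byte by byte, which works on any host endianness without compiler builtins. The `*_sketch` names are hypothetical, and the sketch handles unsigned types only.

```cpp
#include <cstddef>
#include <cstdint>
#include <type_traits>

// Hypothetical sketch: write an unsigned integer as big-endian bytes (most significant first).
template <typename T>
void write_bigendian_sketch(T host_value, void* be_bytes) {
  static_assert(std::is_unsigned<T>::value, "sketch handles unsigned types only");
  unsigned char* out = static_cast<unsigned char*>(be_bytes);
  for (std::size_t i = 0; i < sizeof(T); ++i) {
    // Byte 0 receives the highest 8 bits, the last byte the lowest 8 bits.
    out[i] = static_cast<unsigned char>(host_value >> (8 * (sizeof(T) - 1 - i)));
  }
}

// Hypothetical sketch: read big-endian bytes back into a native unsigned integer.
template <typename T>
T read_bigendian_sketch(const void* be_bytes) {
  static_assert(std::is_unsigned<T>::value, "sketch handles unsigned types only");
  const unsigned char* in = static_cast<const unsigned char*>(be_bytes);
  T value = 0;
  for (std::size_t i = 0; i < sizeof(T); ++i) {
    value = static_cast<T>((value << 8) | in[i]);  // shift in the next lower byte
  }
  return value;
}
```

On a big-endian host these loops are equivalent to a plain copy. On a little-endian host they perform the byte swap that betoh/htobe exist for.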

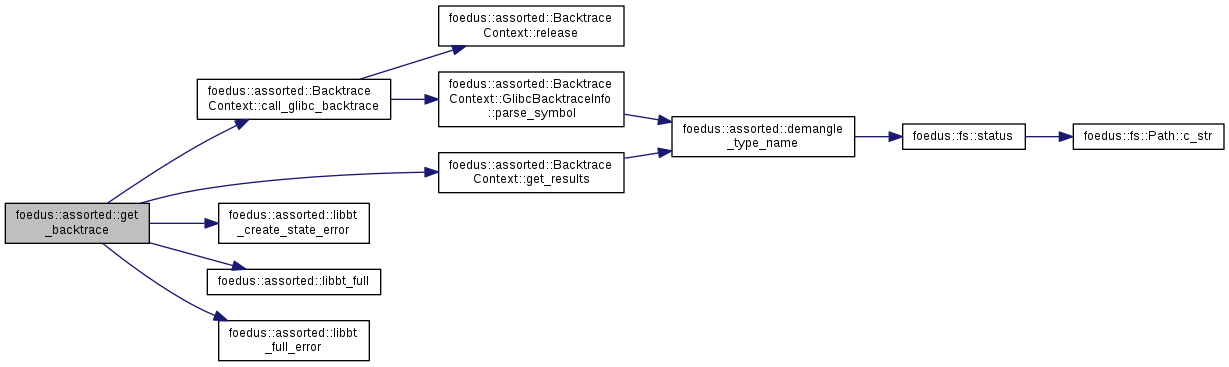

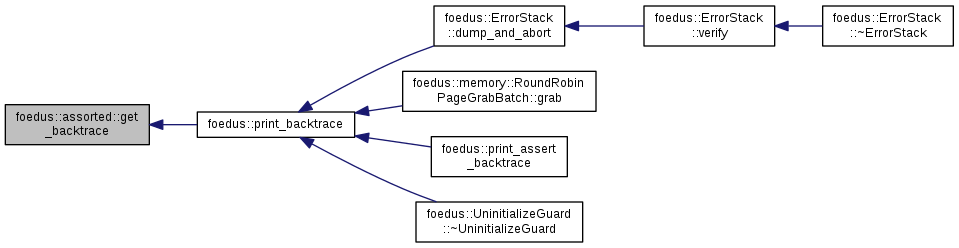

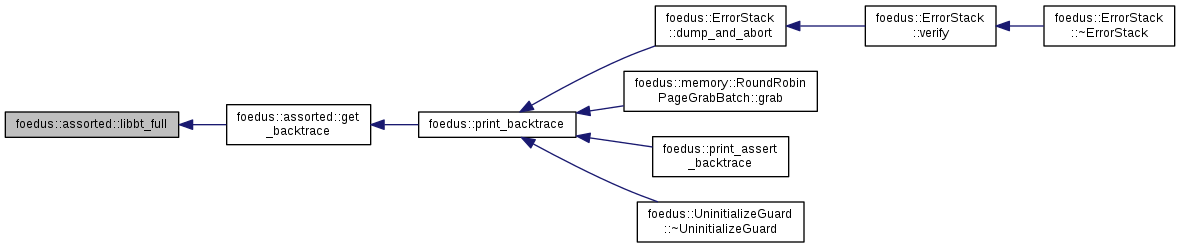

`std::vector<std::string> foedus::assorted::get_backtrace(bool rich = true)`

Returns the backtrace information of the current stack.

If the rich flag is set, the backtrace information is converted to a human-readable format as far as possible via addr2line (which is Linux-only). This method does not handle out-of-memory situations; if that is a real concern, use backtrace(), backtrace_symbols_fd(), etc. directly.

Definition at line 263 of file rich_backtrace.cpp.

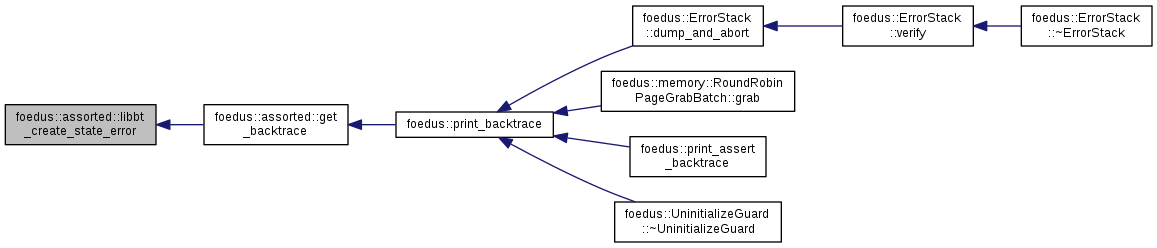

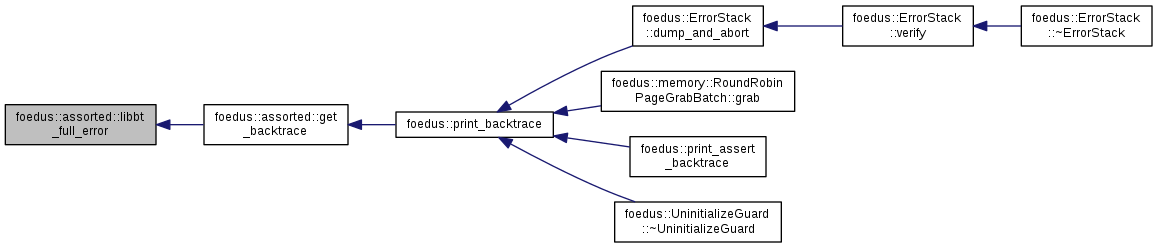

References foedus::assorted::BacktraceContext::call_glibc_backtrace(), foedus::assorted::BacktraceContext::get_results(), libbt_create_state_error(), libbt_full(), and libbt_full_error().

Referenced by foedus::print_backtrace().
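For comparison with the rich path, here is a minimal non-rich sketch built directly on glibc's `<execinfo.h>`, which the description above points to as the fallback. It is Linux/glibc only. `raw_backtrace_sketch` is a hypothetical name, and because it returns `std::string`s and calls backtrace_symbols() (which allocates with malloc), it does not avoid allocation. backtrace_symbols_fd(), which writes straight to a file descriptor, is the variant to use when that matters.

```cpp
#include <execinfo.h>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical sketch: capture up to max_frames return addresses of the current
// stack and turn them into symbol strings with glibc's backtrace_symbols().
inline std::vector<std::string> raw_backtrace_sketch(int max_frames = 64) {
  std::vector<void*> addresses(static_cast<std::size_t>(max_frames));
  int count = backtrace(addresses.data(), max_frames);
  std::vector<std::string> results;
  char** symbols = backtrace_symbols(addresses.data(), count);
  if (symbols == nullptr) {
    return results;  // backtrace_symbols() allocates and can fail
  }
  for (int i = 0; i < count; ++i) {
    results.emplace_back(symbols[i]);
  }
  std::free(symbols);  // a single free() releases the whole array
  return results;
}
```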

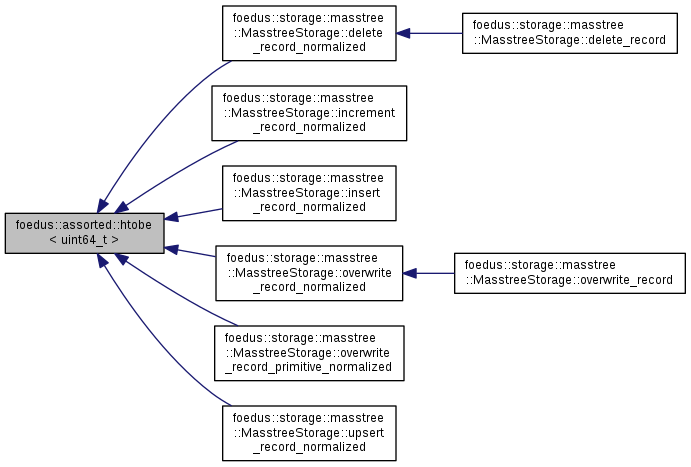

`T foedus::assorted::htobe(T host_value)`

The eight inline specializations of htobe listed above are defined at lines 87, 88, 89, 90, 92, 95, 98, and 101 of file endianness.hpp.

The specialization defined at line 87 is referenced by foedus::storage::masstree::MasstreeStorage::delete_record_normalized(), foedus::storage::masstree::MasstreeStorage::increment_record_normalized(), foedus::storage::masstree::MasstreeStorage::insert_record_normalized(), foedus::storage::masstree::MasstreeStorage::overwrite_record_normalized(), foedus::storage::masstree::MasstreeStorage::overwrite_record_primitive_normalized(), and foedus::storage::masstree::MasstreeStorage::upsert_record_normalized().
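The htobe specialization at line 87 is used by the MasstreeStorage *_normalized() methods. This page does not say why. One plausible reason (an assumption here) is that a uint64_t key stored in big-endian order compares the same way byte by byte (as with memcmp()) as it does numerically, which is not true of little-endian storage. The sketch below checks that property with hypothetical helpers `to_bigendian_bytes` and `bytewise_order_matches`.

```cpp
#include <array>
#include <cstdint>
#include <cstring>

// Hypothetical helper: encode a uint64_t key most-significant byte first.
inline std::array<unsigned char, 8> to_bigendian_bytes(uint64_t key) {
  std::array<unsigned char, 8> bytes{};
  for (int i = 0; i < 8; ++i) {
    bytes[i] = static_cast<unsigned char>(key >> (8 * (7 - i)));
  }
  return bytes;
}

// Returns true if memcmp() order of the big-endian encodings agrees with numeric order.
inline bool bytewise_order_matches(uint64_t a, uint64_t b) {
  std::array<unsigned char, 8> ea = to_bigendian_bytes(a);
  std::array<unsigned char, 8> eb = to_bigendian_bytes(b);
  int cmp = std::memcmp(ea.data(), eb.data(), ea.size());
  int numeric = (a < b) ? -1 : (a > b ? 1 : 0);
  return (cmp < 0) == (numeric < 0) && (cmp > 0) == (numeric > 0);
}
```

With a little-endian encoding, 1 would be stored as `01 00 ... 00` and 256 as `00 01 ... 00`, so memcmp() would place 256 before 1. The big-endian encoding avoids that.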

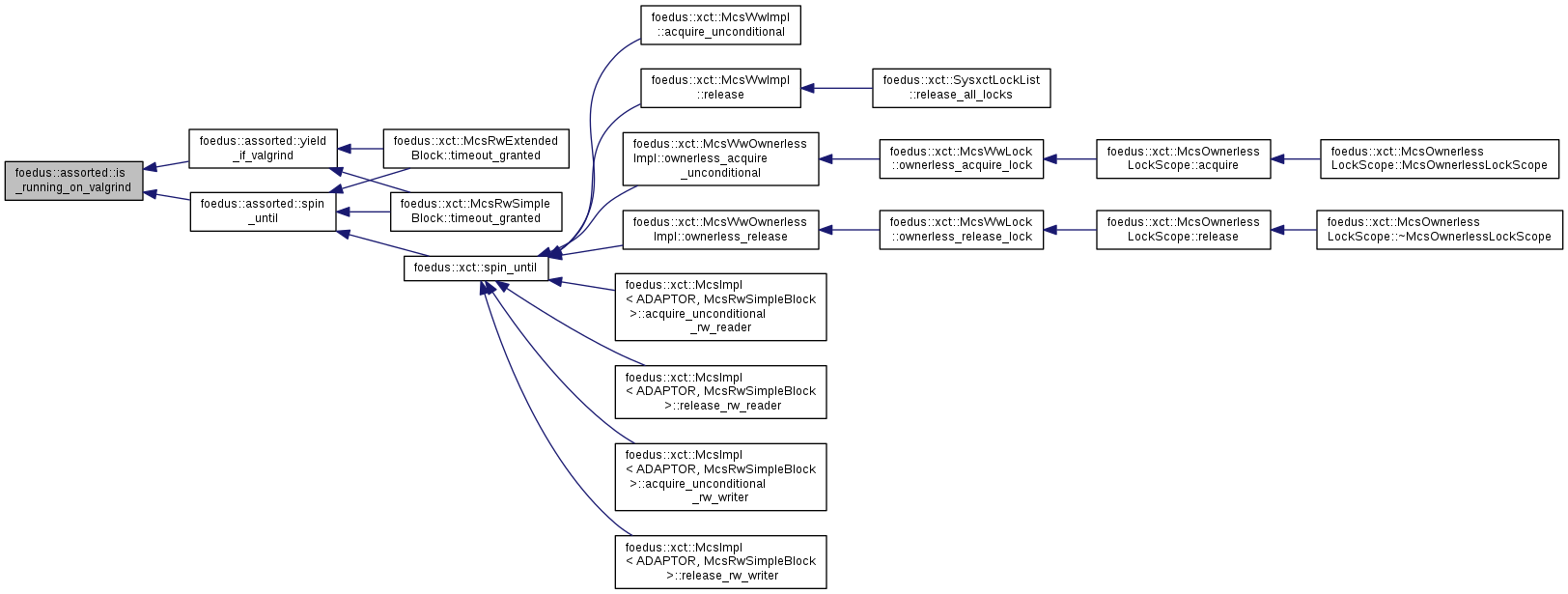

`bool foedus::assorted::is_running_on_valgrind()`

Definition at line 24 of file spin_until_impl.cpp.

Referenced by spin_until(), and yield_if_valgrind().

`void foedus::assorted::libbt_create_state_error(void *data, const char *msg, int errnum)`

Definition at line 117 of file rich_backtrace.cpp.

Referenced by get_backtrace().

`int foedus::assorted::libbt_full(void *data, uintptr_t pc, const char *filename, int lineno, const char *function)`

Definition at line 131 of file rich_backtrace.cpp.

Referenced by get_backtrace().

`void foedus::assorted::libbt_full_error(void *data, const char *msg, int errnum)`

Definition at line 124 of file rich_backtrace.cpp.

Referenced by get_backtrace().

`std::ostream& foedus::assorted::operator<<(std::ostream &o, const Hex &v)`

Definition at line 92 of file assorted_func.cpp.

References foedus::assorted::Hex::fix_digits_, and foedus::assorted::Hex::val_.
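operator<< for Hex reads Hex::val_ and Hex::fix_digits_, and kUpperHexChars holds "0123456789ABCDEF". The exact output format is not documented here (for example, whether a "0x" prefix is written). The sketch below assumes fix_digits_ is a minimum, zero-padded digit count. `HexSketch` and `kUpperHexCharsSketch` are hypothetical names.

```cpp
#include <cstdint>
#include <ostream>
#include <sstream>
#include <string>

// Same digit table as kUpperHexChars on this page.
static const char* const kUpperHexCharsSketch = "0123456789ABCDEF";

// Hypothetical wrapper: a value plus a minimum number of hex digits.
struct HexSketch {
  uint64_t val_;
  int fix_digits_;
};

inline std::ostream& operator<<(std::ostream& o, const HexSketch& v) {
  std::string digits;
  uint64_t rest = v.val_;
  do {
    // Take the lowest nibble and prepend its digit, so digits end up most significant first.
    digits.insert(digits.begin(), kUpperHexCharsSketch[rest & 0xF]);
    rest >>= 4;
  } while (rest != 0);
  while (static_cast<int>(digits.size()) < v.fix_digits_) {
    digits.insert(digits.begin(), '0');  // left-pad with zeros up to fix_digits_
  }
  return o << digits;
}
```

Writing `HexSketch{255, 4}` to a `std::ostringstream` yields "00FF"; the stream's own std::hex state is not consulted.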

`std::ostream& foedus::assorted::operator<<(std::ostream &o, const HexString &v)`

Definition at line 123 of file assorted_func.cpp.

References foedus::assorted::HexString::max_bytes_, and foedus::assorted::HexString::str_.

`std::ostream& foedus::assorted::operator<<(std::ostream &o, const Top &v)`

Definition at line 138 of file assorted_func.cpp.

References foedus::assorted::Top::data_, foedus::assorted::Top::data_len_, and foedus::assorted::Top::max_bytes_.

|

inline |

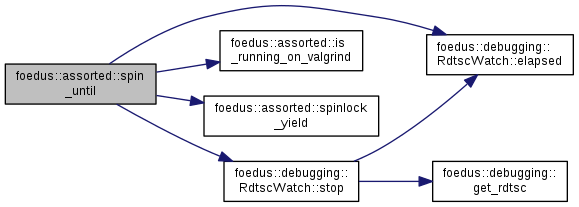

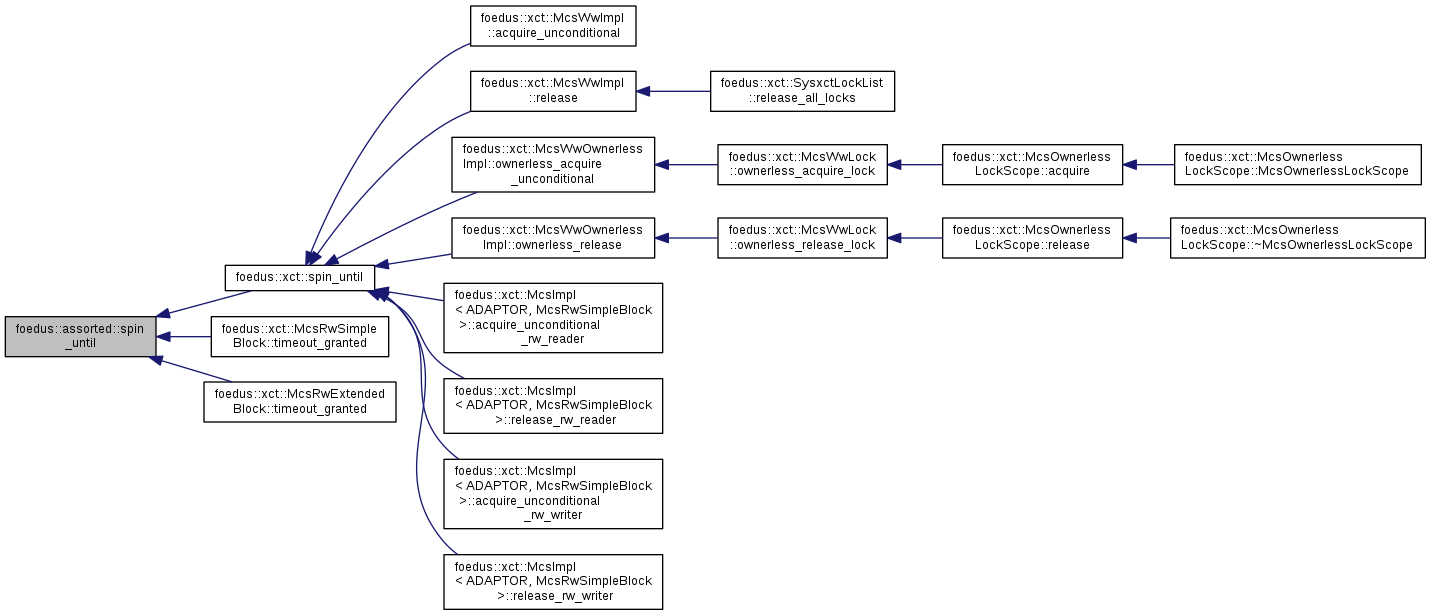

Spin locally until the given condition returns true.

Even if you think your while-loop is trivial, make sure you use this. The yield is necessary for valgrind runs. This template has negligible overhead in non-valgrind release builds.

This pattern appears frequently; without it, valgrind runs would go into an infinite loop. Unfortunately it needs a lambda, so it lives in an _impl file.

In general:

Notice the `!`: this is spin_"until", so its condition is the opposite of a "while" condition.

Definition at line 61 of file spin_until_impl.hpp.

References foedus::debugging::RdtscWatch::elapsed(), is_running_on_valgrind(), spinlock_yield(), and foedus::debugging::RdtscWatch::stop().

Referenced by foedus::xct::spin_until(), foedus::xct::McsRwSimpleBlock::timeout_granted(), and foedus::xct::McsRwExtendedBlock::timeout_granted().
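To illustrate the inversion that the note about `!` refers to, here is a sketch of a spin_until-style helper and its use in place of a while-loop. `spin_until_sketch` and `wait_for` are hypothetical. Unlike the real function, which references RdtscWatch and is_running_on_valgrind(), the sketch always calls `std::this_thread::yield()` and returns a count of failed checks; what the real `uint64_t` return value measures is not documented on this page.

```cpp
#include <atomic>
#include <cstdint>
#include <thread>

// Hypothetical sketch: spin until cond() returns true, yielding between checks.
// Returns the number of failed checks (a simplification, see the lead-in).
template <typename COND>
uint64_t spin_until_sketch(COND cond) {
  uint64_t spins = 0;
  while (!cond()) {
    ++spins;
    // Give other threads a chance to run; under valgrind, which runs one
    // thread at a time, this is what lets the loop terminate.
    std::this_thread::yield();
  }
  return spins;
}

// Usage: a loop written as `while (!flag.load()) {}` becomes the call below.
// Note that the condition passed to spin_until is NOT negated.
inline void wait_for(const std::atomic<bool>& flag) {
  spin_until_sketch([&] { return flag.load(std::memory_order_acquire); });
}
```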

`void foedus::assorted::yield_if_valgrind()` (inline)

Use this in your while as a stop-gap before switching to spin_until().

Definition at line 81 of file spin_until_impl.hpp.

References is_running_on_valgrind(), and spinlock_yield().

Referenced by foedus::xct::McsRwSimpleBlock::timeout_granted(), and foedus::xct::McsRwExtendedBlock::timeout_granted().

`const uint32_t foedus::assorted::kFull31Bits = 0x7FFFFFFF`

Definition at line 30 of file const_div.hpp.

`const uint32_t foedus::assorted::kFull32Bits = 0xFFFFFFFF`

Definition at line 29 of file const_div.hpp.

`const uint64_t foedus::assorted::kFull63Bits = 0x7FFFFFFFFFFFFFFFULL`

Definition at line 32 of file const_div.hpp.

`const uint64_t foedus::assorted::kFull64Bits = 0xFFFFFFFFFFFFFFFFULL`

Definition at line 31 of file const_div.hpp.

`const uint32_t foedus::assorted::kPower2To31 = 1U << 31`

Definition at line 27 of file const_div.hpp.

`const uint64_t foedus::assorted::kPower2To63 = 1ULL << 63`

`const uint64_t foedus::assorted::kProtectedBoundaryMagicWord = 0x42a6292680d7ce36ULL`

Definition at line 33 of file protected_boundary.hpp.

Referenced by foedus::assorted::ProtectedBoundary::reset().
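ProtectedBoundary is described as 4 KB of dummy data placed between memory regions, and its reset() references kProtectedBoundaryMagicWord. The sketch below shows the idea under the assumption that the block is simply filled with the magic word and later scanned for overwritten words. The real class may also rely on page protection, which this page does not show. `BoundarySketch`, `kMagicWordSketch`, and `find_corruption()` are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

// Same value as kProtectedBoundaryMagicWord on this page.
const uint64_t kMagicWordSketch = 0x42a6292680d7ce36ULL;

// Hypothetical 4 KB guard block placed between two memory regions.
struct BoundarySketch {
  static constexpr std::size_t kWords = 4096 / sizeof(uint64_t);  // 512 words
  uint64_t words_[kWords];

  // Fill every word with the magic value.
  void reset() {
    for (std::size_t i = 0; i < kWords; ++i) words_[i] = kMagicWordSketch;
  }

  // Returns the index of the first overwritten word, or kWords if the block is intact.
  std::size_t find_corruption() const {
    for (std::size_t i = 0; i < kWords; ++i) {
      if (words_[i] != kMagicWordSketch) return i;
    }
    return kWords;
  }
};

static_assert(sizeof(BoundarySketch) == 4096, "the boundary must be exactly 4 KB");
```

Returning the index of the first damaged word answers the "where" part of the description: an overrun from the preceding region typically corrupts the low indices first.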

`const char* foedus::assorted::kUpperHexChars = "0123456789ABCDEF"`

Definition at line 91 of file assorted_func.cpp.