
libfoedus-core

FOEDUS Core Library



The Simple MCS-RW lock.

These member functions need to be partially specialized on `RW_BLOCK = McsRwSimpleBlock`. However, the C++ standard does not allow partial specialization of function templates, so we partially specialize the class template instead, as below. Stupid? Totally agree!

Definition at line 348 of file xct_mcs_impl.cpp.

Public Member Functions

| Return type | Member function |
|---|---|
| `McsBlockIndex` | `acquire_try_rw_writer (McsRwLock *lock)` |
| `McsBlockIndex` | `acquire_try_rw_reader (McsRwLock *lock)` |
| `McsBlockIndex` | `acquire_unconditional_rw_reader (McsRwLock *mcs_rw_lock)` |
| `void` | `release_rw_reader (McsRwLock *mcs_rw_lock, McsBlockIndex block_index)` |
| `McsBlockIndex` | `acquire_unconditional_rw_writer (McsRwLock *mcs_rw_lock)` |
| `void` | `release_rw_writer (McsRwLock *mcs_rw_lock, McsBlockIndex block_index)` |
| `AcquireAsyncRet` | `acquire_async_rw_reader (McsRwLock *lock)` |
| `AcquireAsyncRet` | `acquire_async_rw_writer (McsRwLock *lock)` |
| `bool` | `retry_async_rw_reader (McsRwLock *lock, McsBlockIndex block_index)` |
| `bool` | `retry_async_rw_writer (McsRwLock *lock, McsBlockIndex block_index)` |
| `void` | `cancel_async_rw_reader (McsRwLock *, McsBlockIndex)` |
| `void` | `cancel_async_rw_writer (McsRwLock *, McsBlockIndex)` |

Static Public Member Functions

| Return type | Member function |
|---|---|
| `static bool` | `does_support_try_rw_reader ()` |
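The Simple MCS-RW lock is a reader-writer variant of the MCS queue lock, and the `McsBlockIndex` values returned by the acquire functions and passed back to the release functions presumably identify the caller's queue node ("block"). To illustrate that underlying queue mechanism, here is a minimal sketch of the plain exclusive MCS lock. It is not the reader-writer variant and not FOEDUS's code: the reference lists below show that the real implementation also tracks reader counts (`increment_nreaders()`, `decrement_nreaders()`) and a pending writer (`next_writer_`). Assumptions: blocks live in a fixed array, block index 0 means "none", and each thread passes in its own distinct index; `McsSketchLock`, `QueueNode`, and `BlockIndex` are hypothetical names.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <thread>

// Hypothetical block indexing: 0 means "no block"; valid indexes are 1..kMaxBlocks.
using BlockIndex = std::uint32_t;
constexpr BlockIndex kNoBlock = 0;
constexpr BlockIndex kMaxBlocks = 64;

// One queue node per waiter; each waiter spins only on its own node.
struct QueueNode {
  std::atomic<bool> waiting{false};
  std::atomic<BlockIndex> next{kNoBlock};
};

// Exclusive MCS queue lock (sketch; not the reader-writer variant).
class McsSketchLock {
 public:
  // Enqueues block `me` and waits until the predecessor hands over the lock.
  // Returns the block index so the caller can pass it back to release().
  BlockIndex acquire(BlockIndex me) {
    QueueNode& node = nodes_[me];
    node.next.store(kNoBlock, std::memory_order_relaxed);
    node.waiting.store(true, std::memory_order_relaxed);
    // Atomically append ourselves to the tail of the queue.
    BlockIndex pred = tail_.exchange(me, std::memory_order_acq_rel);
    if (pred != kNoBlock) {
      // Link behind the predecessor, then spin locally on our own flag.
      nodes_[pred].next.store(me, std::memory_order_release);
      while (node.waiting.load(std::memory_order_acquire)) {
        std::this_thread::yield();
      }
    }
    return me;
  }

  // Hands the lock to the successor, or empties the queue if there is none.
  void release(BlockIndex me) {
    QueueNode& node = nodes_[me];
    BlockIndex succ = node.next.load(std::memory_order_acquire);
    if (succ == kNoBlock) {
      BlockIndex expected = me;
      if (tail_.compare_exchange_strong(expected, kNoBlock,
                                        std::memory_order_acq_rel)) {
        return;  // No one was waiting.
      }
      // A successor already swapped tail_ but has not linked itself yet.
      while ((succ = node.next.load(std::memory_order_acquire)) == kNoBlock) {
        std::this_thread::yield();
      }
    }
    nodes_[succ].waiting.store(false, std::memory_order_release);
  }

 private:
  std::atomic<BlockIndex> tail_{kNoBlock};
  QueueNode nodes_[kMaxBlocks + 1];
};
```

The design choice that makes MCS locks scale is visible here: every waiter spins on the `waiting` flag in its own block rather than on a shared word, and `release` passes ownership directly to the next block in the queue.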

Member Function Documentation

`AcquireAsyncRet acquire_async_rw_reader (McsRwLock *lock)` [inline]

Definition at line 544 of file xct_mcs_impl.cpp.

References foedus::xct::McsImpl< ADAPTOR, RW_BLOCK >::retry_async_rw_reader().

`AcquireAsyncRet acquire_async_rw_writer (McsRwLock *lock)` [inline]

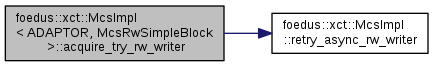

Definition at line 550 of file xct_mcs_impl.cpp.

References foedus::xct::McsImpl< ADAPTOR, RW_BLOCK >::retry_async_rw_writer().

`McsBlockIndex acquire_try_rw_reader (McsRwLock *lock)` [inline]

Definition at line 363 of file xct_mcs_impl.cpp.

References ASSERT_ND, and foedus::xct::McsImpl< ADAPTOR, RW_BLOCK >::retry_async_rw_reader().

`McsBlockIndex acquire_try_rw_writer (McsRwLock *lock)` [inline]

Definition at line 350 of file xct_mcs_impl.cpp.

References foedus::xct::McsImpl< ADAPTOR, RW_BLOCK >::retry_async_rw_writer().

|

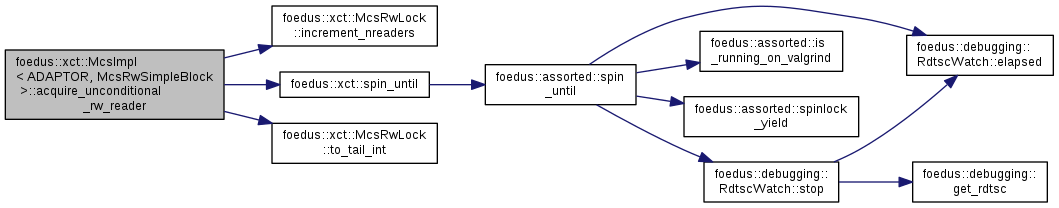

inline |

Definition at line 380 of file xct_mcs_impl.cpp.

References ASSERT_ND, foedus::xct::McsRwLock::increment_nreaders(), foedus::xct::spin_until(), foedus::xct::McsRwLock::tail_, and foedus::xct::McsRwLock::to_tail_int().

|

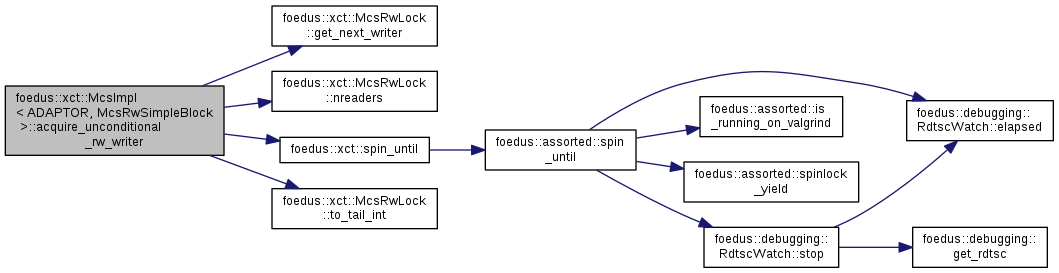

inline |

Definition at line 476 of file xct_mcs_impl.cpp.

References ASSERT_ND, foedus::xct::McsRwLock::get_next_writer(), foedus::xct::McsRwLock::kNextWriterNone, foedus::xct::McsRwLock::next_writer_, foedus::xct::McsRwLock::nreaders(), foedus::xct::spin_until(), foedus::xct::McsRwLock::tail_, and foedus::xct::McsRwLock::to_tail_int().

`void cancel_async_rw_reader (McsRwLock *, McsBlockIndex)` [inline]

Definition at line 643 of file xct_mcs_impl.cpp.

`void cancel_async_rw_writer (McsRwLock *, McsBlockIndex)` [inline]

Definition at line 647 of file xct_mcs_impl.cpp.

`static bool does_support_try_rw_reader ()` [inline, static]

Definition at line 362 of file xct_mcs_impl.cpp.

|

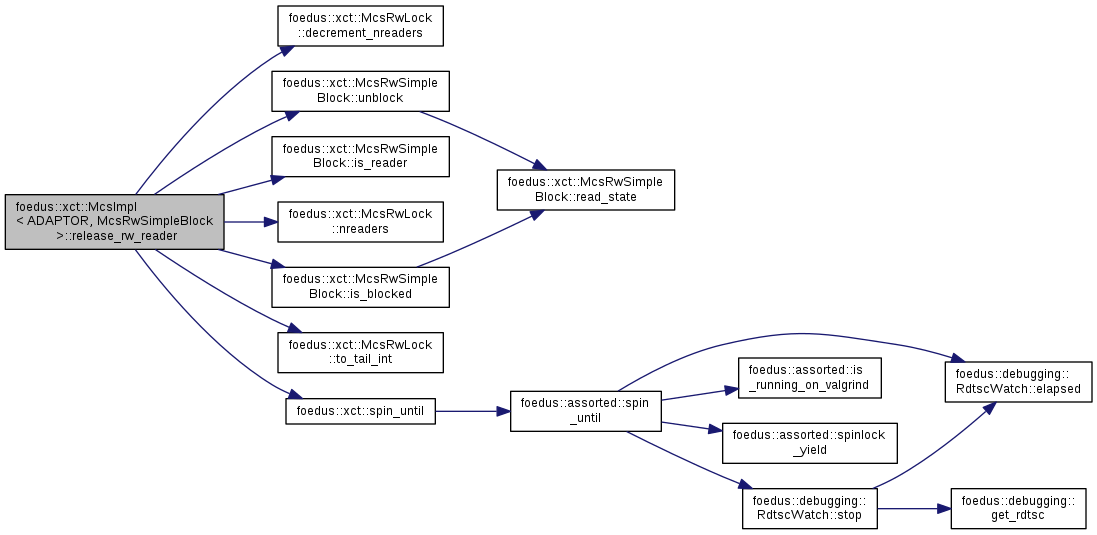

inline |

Definition at line 429 of file xct_mcs_impl.cpp.

References ASSERT_ND, foedus::xct::McsRwLock::decrement_nreaders(), foedus::xct::McsRwSimpleBlock::is_blocked(), foedus::xct::McsRwSimpleBlock::is_reader(), foedus::xct::McsRwLock::kNextWriterNone, foedus::xct::McsRwLock::next_writer_, foedus::xct::McsRwLock::nreaders(), foedus::xct::spin_until(), foedus::xct::McsRwLock::tail_, foedus::xct::McsRwLock::to_tail_int(), and foedus::xct::McsRwSimpleBlock::unblock().

`void release_rw_writer (McsRwLock *mcs_rw_lock, McsBlockIndex block_index)` [inline]

Definition at line 517 of file xct_mcs_impl.cpp.

References ASSERT_ND, foedus::xct::McsRwLock::increment_nreaders(), foedus::xct::spin_until(), foedus::xct::McsRwLock::tail_, foedus::xct::McsRwLock::to_tail_int(), and UNLIKELY.

`bool retry_async_rw_reader (McsRwLock *lock, McsBlockIndex block_index)` [inline]

Definition at line 556 of file xct_mcs_impl.cpp.

References foedus::xct::McsRwLock::increment_nreaders(), foedus::xct::McsRwLock::tail_, and foedus::xct::McsRwLock::to_tail_int().

`bool retry_async_rw_writer (McsRwLock *lock, McsBlockIndex block_index)` [inline]

Definition at line 628 of file xct_mcs_impl.cpp.

References foedus::xct::McsRwLock::tail_, and foedus::xct::McsRwLock::to_tail_int().
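The asynchronous members (`acquire_async_rw_*`, `retry_async_rw_*`, `cancel_async_rw_*`) suggest a non-blocking protocol: start an acquisition, poll it with retries, and cancel if the caller gives up. The sketch below shows only that calling pattern, under loudly stated assumptions: `AsyncSketchLock`, `AsyncRet`, and `lock_with_budget` are hypothetical, `AsyncRet` merely imitates the `AcquireAsyncRet` name, and the lock is a plain test-and-set flag rather than an MCS queue. Its cancel step is therefore trivial, whereas a queue-based lock typically has to deal with a block that is already linked into the queue.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical result type: whether the lock was granted immediately,
// plus the block index to pass to later retry/cancel/release calls.
struct AsyncRet {
  bool acquired;
  std::uint32_t block_index;
};

// Hypothetical exclusive lock with acquire/retry/cancel entry points.
// It is a test-and-set flag, NOT an MCS queue; it only models the protocol.
class AsyncSketchLock {
 public:
  AsyncRet acquire_async(std::uint32_t my_block) {
    return AsyncRet{try_take(), my_block};
  }
  bool retry_async(std::uint32_t /*block_index*/) { return try_take(); }
  // Nothing was enqueued, so there is nothing to undo in this sketch.
  void cancel_async(std::uint32_t /*block_index*/) {}
  void release(std::uint32_t /*block_index*/) {
    held_.store(false, std::memory_order_release);
  }

 private:
  bool try_take() {
    bool expected = false;
    return held_.compare_exchange_strong(expected, true,
                                         std::memory_order_acquire);
  }
  std::atomic<bool> held_{false};
};

// Caller pattern: start asynchronously, retry up to `retries` times,
// and cancel if the lock still has not been granted.
bool lock_with_budget(AsyncSketchLock& lock, std::uint32_t my_block,
                      int retries) {
  AsyncRet ret = lock.acquire_async(my_block);
  if (ret.acquired) return true;
  for (int i = 0; i < retries; ++i) {
    if (lock.retry_async(ret.block_index)) return true;
  }
  lock.cancel_async(ret.block_index);
  return false;
}
```

For example, a first caller on a free lock succeeds immediately; a second caller then exhausts its retry budget, cancels, and gets `false` until the first caller releases.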