|

libfoedus-core

FOEDUS Core Library

|


Transaction Manager, which provides APIs to begin/commit/abort transactions.

This package implements the commit protocol, the core of concurrency control.

First things first. Here's a minimal example to start one transaction in the engine.
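The begin, pre-commit, wait sequence can be sketched as follows. This is a minimal sketch against a hypothetical in-memory stand-in (`MockXctManager`), not the engine itself: only the method names begin_xct, precommit_xct and wait_for_commit come from this page, while the signatures, the integer epochs and the inline epoch advance are assumptions made so the snippet is self-contained.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical in-memory stand-in for the transaction manager.
// It is NOT the FOEDUS API; signatures and epoch handling are assumptions.
struct MockXctManager {
  uint32_t current_epoch = 1;  // epoch stamped on transactions that pre-commit now
  uint32_t durable_epoch = 0;  // logs up to this epoch are assumed to be durable
  bool in_xct = false;

  bool begin_xct() {
    if (in_xct) return false;  // this mock allows one transaction at a time
    in_xct = true;
    return true;
  }

  // Pre-commit: the transaction is serialized, but its log may not be durable yet.
  bool precommit_xct(uint32_t* commit_epoch) {
    if (!in_xct) return false;
    in_xct = false;
    *commit_epoch = current_epoch;
    return true;
  }

  // A real engine would block until a background logger catches up.
  // This mock advances both epochs inline so that the example terminates.
  void wait_for_commit(uint32_t commit_epoch) {
    while (durable_epoch < commit_epoch) {
      durable_epoch = current_epoch;
      ++current_epoch;
    }
  }
};

// Runs one transaction and returns its commit epoch (0 on failure).
uint32_t run_one_transaction(MockXctManager* mgr) {
  if (!mgr->begin_xct()) return 0;
  // ... read and modify data here ...
  uint32_t commit_epoch = 0;
  if (!mgr->precommit_xct(&commit_epoch)) return 0;
  // Only after this call returns may the caller treat the transaction as committed.
  mgr->wait_for_commit(commit_epoch);
  return commit_epoch;
}
```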

Notice the wait_for_commit(commit_epoch) call. Until it returns, you should not consider your transaction committed, which is why the method that ends the transaction is named "precommit_xct".

Here's a minimal example to start several transactions and commit them together (group commit), which is the primary use case our engine is optimized for.

In this case, we invoke wait_for_commit() for the largest commit epoch just once at the end. This dramatically improves the throughput at the cost of latency of individual transactions.
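The group-commit pattern described here might look like the sketch below. `MockGroupCommitManager` is a hypothetical stand-in, not the engine API: it advances its epoch every few pre-commits to imitate a periodic epoch advance, and it counts how often wait_for_commit is called so the single wait is observable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical mock, not the FOEDUS API. The epoch advances every
// xcts_per_epoch pre-commits to imitate a periodic epoch advance.
struct MockGroupCommitManager {
  uint32_t current_epoch = 1;
  uint32_t durable_epoch = 0;
  uint32_t xcts_per_epoch = 4;
  uint32_t precommits_in_epoch = 0;
  uint32_t wait_calls = 0;

  // Returns the commit epoch assigned to the transaction.
  uint32_t precommit_xct() {
    const uint32_t commit_epoch = current_epoch;
    if (++precommits_in_epoch == xcts_per_epoch) {
      precommits_in_epoch = 0;
      ++current_epoch;
    }
    return commit_epoch;
  }

  // Stand-in for blocking on the logger: everything up to commit_epoch becomes durable.
  void wait_for_commit(uint32_t commit_epoch) {
    ++wait_calls;
    durable_epoch = std::max(durable_epoch, commit_epoch);
    current_epoch = std::max(current_epoch, durable_epoch + 1);
  }
};

// Pre-commits `count` transactions, then waits once for the largest commit epoch.
uint32_t run_group_commit(MockGroupCommitManager* mgr, uint32_t count) {
  uint32_t max_epoch = 0;
  for (uint32_t i = 0; i < count; ++i) {
    // ... begin the transaction and read/modify data here ...
    max_epoch = std::max(max_epoch, mgr->precommit_xct());
  }
  if (count > 0) mgr->wait_for_commit(max_epoch);
  return max_epoch;
}
```

Because durability is tracked per epoch, waiting for the largest epoch also covers every transaction that pre-committed in an earlier one.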

Our commit protocol is based on [TU13], except that we also handle non-volatile pages. [TU13]'s commit protocol completely avoids writes to shared memory by read operations. [LARSON11] is also an optimistic concurrency control scheme, but it still has "read-lock" bits that read operations must write. In a many-core NUMA environment this might cause scalability issues, so we employ [TU13]'s approach.

The engine maintains two global foedus::Epoch values: the current epoch and the durable epoch. foedus::xct::XctManager advances the current epoch periodically, while the log module advances the durable epoch once it confirms that all log entries up to that epoch are durable and that it has durably written a savepoint file (see Savepoint Manager).

Epoch Chime advances the current global epoch when a configured interval elapses or the user explicitly requests it. The chime checks whether it can safely advance an epoch so that the following invariant always holds.

In many cases, the invariants are trivially achieved. However, there are a few tricky cases.

Whenever the chime advances the epoch, we have to safely detect whether there is any transaction that might violate the invariant without causing expensive synchronization. This is done via the in-commit epoch guard. For more details, see the following class.
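To illustrate the idea of an in-commit epoch guard, here is a minimal sketch under stated assumptions. The class name `InCommitGuardSketch`, the `kNoEpoch` marker, the per-thread slot array and the chime-side check are all hypothetical; the exact invariant and fences used by the engine are not reproduced here, and epoch wrap-around is ignored.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

constexpr uint32_t kNoEpoch = 0;  // hypothetical marker: thread is not committing

// RAII guard: announces the epoch a thread is committing in, clears it on exit.
class InCommitGuardSketch {
 public:
  InCommitGuardSketch(std::atomic<uint32_t>* slot, uint32_t epoch) : slot_(slot) {
    // Publish the epoch, then issue a full fence before the commit protocol proceeds.
    slot_->store(epoch, std::memory_order_relaxed);
    std::atomic_thread_fence(std::memory_order_seq_cst);
  }
  ~InCommitGuardSketch() {
    // Release store: writes made during pre-commit are ordered before the clear.
    slot_->store(kNoEpoch, std::memory_order_release);
  }
  InCommitGuardSketch(const InCommitGuardSketch&) = delete;
  InCommitGuardSketch& operator=(const InCommitGuardSketch&) = delete;

 private:
  std::atomic<uint32_t>* slot_;
};

// Chime-side check (assumed simplification): true if no thread has announced
// that it is still committing in `epoch` or an earlier epoch.
bool no_commit_in_flight(const std::atomic<uint32_t>* slots, int count, uint32_t epoch) {
  for (int i = 0; i < count; ++i) {
    const uint32_t observed = slots[i].load(std::memory_order_acquire);
    if (observed != kNoEpoch && observed <= epoch) return false;
  }
  return true;
}
```

The point of the design is that the chime only reads one word per thread, so committing threads never take a shared lock to coordinate with it.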

See foedus::xct::IsolationLevel


| Classes | | |
|---|---|---|
| struct | AcquireAsyncRet | Return value of acquire_async_rw. |
| class | CurrentLockList | Sorted list of all locks, either read-lock or write-lock, taken in the current run. |
| struct | CurrentLockListIteratorForWriteSet | An iterator over CurrentLockList to find entries along with sorted write-set. |
| struct | InCommitEpochGuard | Automatically sets in-commit-epoch with appropriate fence during pre-commit protocol. |
| struct | LockableXctId | Transaction ID, 128-bit data that manages record versions and provides a locking mechanism. |
| struct | LockEntry | An entry in CLL and RLL, representing a lock that is taken or will be taken. |
| struct | LockFreeReadXctAccess | Represents a record of special read-access during a transaction without any need for locking. |
| struct | LockFreeWriteXctAccess | Represents a record of special write-access during a transaction without any need for locking. |
| class | McsAdaptorConcept | Defines an adapter template interface for our MCS lock classes. |
| class | McsImpl | Implements an MCS-locking Algorithm. |
| class | McsImpl< ADAPTOR, McsRwExtendedBlock > | The Extended MCS-RW lock. |
| class | McsImpl< ADAPTOR, McsRwSimpleBlock > | The Simple MCS-RW lock. |
| class | McsMockAdaptor | Implements McsAdaptorConcept. |
| struct | McsMockContext | Analogous to the entire engine. |
| struct | McsMockDataPage | A dummy page layout to store RwLockableXctId. |
| struct | McsMockNode | Analogous to one thread-group/socket/node. |
| struct | McsMockThread | A dummy implementation that provides McsAdaptorConcept for testing. |
| class | McsOwnerlessLockScope | |
| struct | McsRwAsyncMapping | |
| struct | McsRwExtendedBlock | Pre-allocated MCS block for extended version of RW-locks. |
| struct | McsRwLock | An MCS reader-writer lock data structure. |
| struct | McsRwSimpleBlock | Reader-writer (RW) MCS lock classes. |
| struct | McsWwBlock | Pre-allocated MCS block for WW-locks. |
| struct | McsWwBlockData | Exclusive-only (WW) MCS lock classes. |
| class | McsWwImpl | A specialized/simplified implementation of an MCS-locking Algorithm for exclusive-only (WW) locks. |
| struct | McsWwLock | An exclusive-only (WW) MCS lock data structure. |
| class | McsWwOwnerlessImpl | An ownerless (contextless) interface for McsWwImpl. |
| struct | PageComparator | |
| struct | PageVersionAccess | Represents a record of reading a page during a transaction. |
| struct | PointerAccess | Represents a record of following a page pointer during a transaction. |
| struct | ReadXctAccess | Represents a record of read-access during a transaction. |
| struct | RecordXctAccess | Base of ReadXctAccess and WriteXctAccess. |
| class | RetrospectiveLockList | Retrospective lock list. |
| struct | RwLockableXctId | The MCS reader-writer lock variant of LockableXctId. |
| struct | SysxctFunctor | A functor representing the logic in a system transaction via virtual-function. |
| struct | SysxctLockEntry | An entry in CLL/RLL for system transactions. |
| class | SysxctLockList | RLL/CLL of a system transaction. |
| struct | SysxctWorkspace | Per-thread reused work memory for system transactions. |
| struct | TrackMovedRecordResult | Result of track_moved_record(). |
| struct | WriteXctAccess | Represents a record of write-access during a transaction. |
| class | Xct | Represents a user transaction. |
| struct | XctId | Persistent status part of Transaction ID. |
| class | XctManager | Xct Manager class that provides API to begin/abort/commit transaction. |
| struct | XctManagerControlBlock | Shared data in XctManagerPimpl. |
| class | XctManagerPimpl | Pimpl object of XctManager. |
| struct | XctOptions | Set of options for xct manager. |

| Typedefs | | |
|---|---|---|
| typedef uintptr_t | UniversalLockId | Universally ordered identifier of each lock. |
| typedef uint32_t | LockListPosition | Index in a lock-list, either RLL or CLL. |
| typedef uint32_t | McsBlockIndex | Index in thread-local MCS block. |

| Enumerations | | |
|---|---|---|
| enum | IsolationLevel { kDirtyRead, kSnapshot, kSerializable } | Specifies the level of isolation during transaction processing. |
| enum | LockMode { kNoLock = 0, kReadLock, kWriteLock } | Represents a mode of lock. |

| Functions | | |
|---|---|---|
| template<typename LOCK_LIST , typename LOCK_ENTRY > LockListPosition | lock_lower_bound (const LOCK_LIST &list, UniversalLockId lock) | General lower_bound/binary_search logic for any kind of LockList/LockEntry. |
| template<typename LOCK_LIST , typename LOCK_ENTRY > LockListPosition | lock_binary_search (const LOCK_LIST &list, UniversalLockId lock) | |
| UniversalLockId | to_universal_lock_id (storage::VolatilePagePointer page_id, uintptr_t addr) | |
| template<typename MCS_ADAPTOR , typename ENCLOSURE_RELEASE_ALL_LOCKS_FUNCTOR > ErrorCode | run_nested_sysxct_impl (SysxctFunctor *functor, MCS_ADAPTOR mcs_adaptor, uint32_t max_retries, SysxctWorkspace *workspace, UniversalLockId enclosing_max_lock_id, ENCLOSURE_RELEASE_ALL_LOCKS_FUNCTOR enclosure_release_all_locks_functor) | Runs a system transaction nested in a user transaction. |
| UniversalLockId | to_universal_lock_id (const memory::GlobalVolatilePageResolver &resolver, uintptr_t lock_ptr) | Always use this method rather than doing the conversion yourself. |
| UniversalLockId | to_universal_lock_id (uint64_t numa_node, uint64_t local_page_index, uintptr_t lock_ptr) | If you already have the numa_node, local_page_index, prefer this one. |
| UniversalLockId | xct_id_to_universal_lock_id (const memory::GlobalVolatilePageResolver &resolver, RwLockableXctId *lock) | |
| UniversalLockId | rw_lock_to_universal_lock_id (const memory::GlobalVolatilePageResolver &resolver, McsRwLock *lock) | |
| RwLockableXctId * | from_universal_lock_id (const memory::GlobalVolatilePageResolver &resolver, const UniversalLockId universal_lock_id) | Always use this method rather than doing the conversion yourself. |
| void | _dummy_static_size_check__COUNTER__ () | |
| std::ostream & | operator<< (std::ostream &o, const LockEntry &v) | Debugging. |
| std::ostream & | operator<< (std::ostream &o, const CurrentLockList &v) | |
| std::ostream & | operator<< (std::ostream &o, const RetrospectiveLockList &v) | |
| template<typename LOCK_LIST > void | lock_assert_sorted (const LOCK_LIST &list) | |
| std::ostream & | operator<< (std::ostream &o, const SysxctLockEntry &v) | Debugging. |
| std::ostream & | operator<< (std::ostream &o, const SysxctLockList &v) | |
| std::ostream & | operator<< (std::ostream &o, const SysxctWorkspace &v) | |
| std::ostream & | operator<< (std::ostream &o, const Xct &v) | |
| std::ostream & | operator<< (std::ostream &o, const PointerAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const PageVersionAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const ReadXctAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const WriteXctAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const LockFreeReadXctAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const LockFreeWriteXctAccess &v) | |
| std::ostream & | operator<< (std::ostream &o, const McsWwLock &v) | Debug out operators. |
| std::ostream & | operator<< (std::ostream &o, const XctId &v) | |
| std::ostream & | operator<< (std::ostream &o, const LockableXctId &v) | |
| std::ostream & | operator<< (std::ostream &o, const McsRwLock &v) | |
| std::ostream & | operator<< (std::ostream &o, const RwLockableXctId &v) | |
| void | assert_mcs_aligned (const void *address) | |
| template<typename COND > void | spin_until (COND spin_until_cond) | |

| Variables | | |
|---|---|---|
| const UniversalLockId | kNullUniversalLockId = 0 | This never points to a valid lock, and also compares less than any valid lock. |
| const LockListPosition | kLockListPositionInvalid = 0 | |
| const uint64_t | kMcsGuestId = -1 | A special value meaning the lock is held by a non-regular guest that doesn't have a context. |
| const uint64_t | kXctIdDeletedBit = 1ULL << 63 | |
| const uint64_t | kXctIdMovedBit = 1ULL << 62 | |
| const uint64_t | kXctIdBeingWrittenBit = 1ULL << 61 | |
| const uint64_t | kXctIdNextLayerBit = 1ULL << 60 | |
| const uint64_t | kXctIdMaskSerializer = 0x0FFFFFFFFFFFFFFFULL | |
| const uint64_t | kXctIdMaskEpoch = 0x0FFFFFFF00000000ULL | |
| const uint64_t | kXctIdMaskOrdinal = 0x00000000FFFFFFFFULL | |
| const uint64_t | kMaxXctOrdinal = (1ULL << 24) - 1U | Maximum value of in-epoch ordinal. |
| const uint64_t | kLockPageSize = 1 << 12 | Must be same as storage::kPageSize. |
| constexpr uint32_t | kMcsMockDataPageHeaderSize = 128U | |
| constexpr uint32_t | kMcsMockDataPageHeaderPad = kMcsMockDataPageHeaderSize - sizeof(storage::PageHeader) | |
| constexpr uint32_t | kMcsMockDataPageLocksPerPage | |
| constexpr uint32_t | kMcsMockDataPageFiller | |
| const uint16_t | kReadsetPrefetchBatch = 16 | |

| struct foedus::xct::AcquireAsyncRet |

Return value of acquire_async_rw.

Definition at line 161 of file xct_id.hpp.

| Class Members | | |
|---|---|---|
| bool | acquired_ | whether we immediately acquired the lock or not |
| McsBlockIndex | block_index_ | the queue node we pushed. It is always set whether acquired_ or not, whether simple or extended. However, in simple case when !acquired_, the block is not used and nothing sticks to the queue. We just skip the index next time. |

| typedef uint32_t foedus::xct::McsBlockIndex |

|

inline |

Definition at line 1246 of file xct_id.hpp.

|

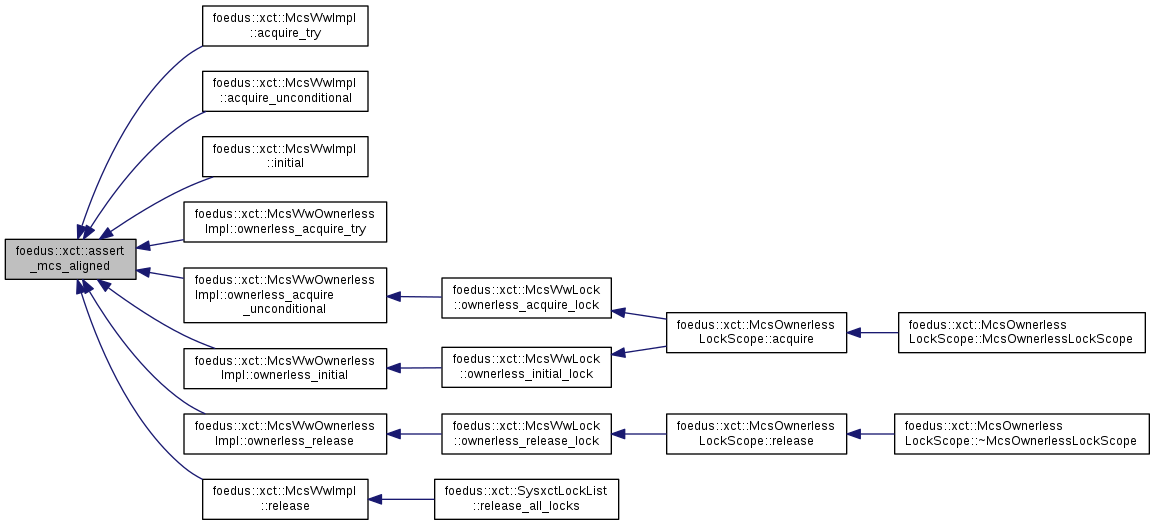

inline |

Definition at line 34 of file xct_mcs_impl.cpp.

References ASSERT_ND.

Referenced by foedus::xct::McsWwImpl< ADAPTOR >::acquire_try(), foedus::xct::McsWwImpl< ADAPTOR >::acquire_unconditional(), foedus::xct::McsWwImpl< ADAPTOR >::initial(), foedus::xct::McsWwOwnerlessImpl::ownerless_acquire_try(), foedus::xct::McsWwOwnerlessImpl::ownerless_acquire_unconditional(), foedus::xct::McsWwOwnerlessImpl::ownerless_initial(), foedus::xct::McsWwOwnerlessImpl::ownerless_release(), and foedus::xct::McsWwImpl< ADAPTOR >::release().

| RwLockableXctId * foedus::xct::from_universal_lock_id (const memory::GlobalVolatilePageResolver &resolver, const UniversalLockId universal_lock_id) |

Always use this method rather than doing the conversion yourself.

Definition at line 61 of file xct_id.cpp.

References foedus::memory::GlobalVolatilePageResolver::bases_.

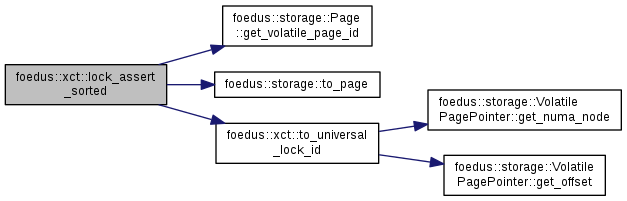

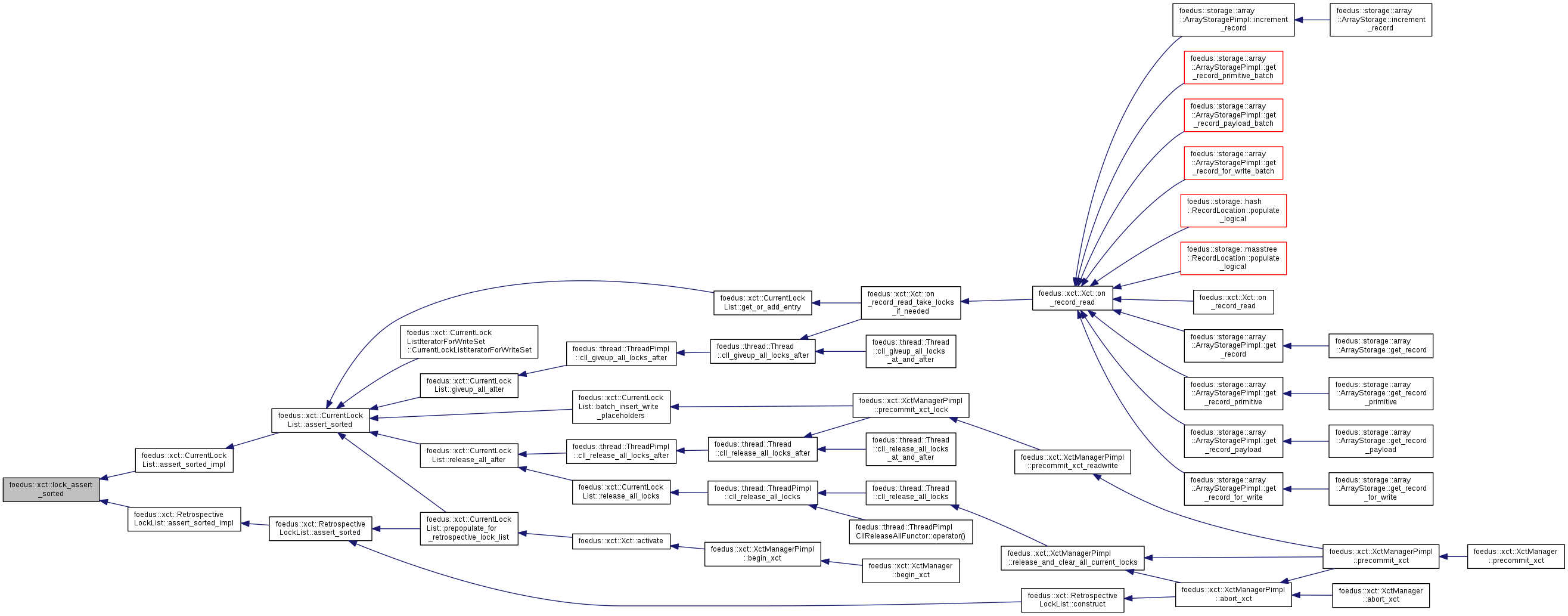

| void foedus::xct::lock_assert_sorted (const LOCK_LIST &list) |

Definition at line 159 of file retrospective_lock_list.cpp.

References ASSERT_ND, foedus::storage::Page::get_volatile_page_id(), kLockListPositionInvalid, kNoLock, foedus::xct::LockEntry::lock_, foedus::storage::to_page(), and to_universal_lock_id().

Referenced by foedus::xct::RetrospectiveLockList::assert_sorted_impl(), and foedus::xct::CurrentLockList::assert_sorted_impl().

|

inline |

Definition at line 936 of file retrospective_lock_list.hpp.

References kLockListPositionInvalid.

|

inline |

General lower_bound/binary_search logic for any kind of LockList/LockEntry.

Used from retrospective_lock_list.cpp and sysxct_impl.cpp. These implementations are skewed towards sorted cases, meaning they run faster when accesses arrive in sorted order.

Definition at line 903 of file retrospective_lock_list.hpp.

References ASSERT_ND, and kLockListPositionInvalid.
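A lower-bound search that favors sorted access could be sketched as below. `SketchLockList` and the `sketch_*` functions are hypothetical simplifications (the real lists hold richer entries); the 1-based positions with 0 as the invalid position follow kLockListPositionInvalid = 0 from this page, and the tail fast path is one assumed way to be "skewed towards sorted cases".

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

using UniversalLockId = uintptr_t;
using LockListPosition = uint32_t;
const LockListPosition kLockListPositionInvalid = 0;

// Hypothetical minimal lock list: entries[0] is an unused dummy so that
// position 0 can mean "invalid"; valid positions are 1..last_active().
struct SketchLockList {
  std::vector<UniversalLockId> entries{0};
  LockListPosition last_active() const {
    return static_cast<LockListPosition>(entries.size() - 1);
  }
};

// Returns the first position whose id is >= lock, or last_active() + 1 if none.
LockListPosition sketch_lower_bound(const SketchLockList& list, UniversalLockId lock) {
  const LockListPosition last = list.last_active();
  if (last == kLockListPositionInvalid) return kLockListPositionInvalid + 1U;
  // Fast path for sorted access patterns: the key is at or past the tail.
  if (list.entries[last] == lock) return last;
  if (list.entries[last] < lock) return last + 1U;
  // General case: binary search over positions 1..last.
  auto it = std::lower_bound(list.entries.begin() + 1, list.entries.end(), lock);
  return static_cast<LockListPosition>(it - list.entries.begin());
}

// Returns the position holding exactly `lock`, or kLockListPositionInvalid.
LockListPosition sketch_binary_search(const SketchLockList& list, UniversalLockId lock) {
  const LockListPosition pos = sketch_lower_bound(list, lock);
  if (pos <= list.last_active() && list.entries[pos] == lock) return pos;
  return kLockListPositionInvalid;
}
```

When locks are requested in ascending order, every lookup hits one of the two tail checks and never reaches the binary search.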

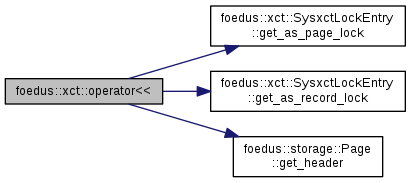

| std::ostream& foedus::xct::operator<< (std::ostream &o, const PointerAccess &v) |

Definition at line 32 of file xct_access.cpp.

References foedus::xct::PointerAccess::address_, foedus::xct::PointerAccess::observed_, and foedus::storage::VolatilePagePointer::word.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const SysxctLockEntry &v) |

Debugging.

Definition at line 35 of file sysxct_impl.cpp.

References foedus::xct::SysxctLockEntry::get_as_page_lock(), foedus::xct::SysxctLockEntry::get_as_record_lock(), foedus::storage::Page::get_header(), foedus::xct::SysxctLockEntry::mcs_block_, foedus::xct::SysxctLockEntry::page_lock_, foedus::xct::SysxctLockEntry::universal_lock_id_, and foedus::xct::SysxctLockEntry::used_in_this_run_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const PageVersionAccess &v) |

Definition at line 38 of file xct_access.cpp.

References foedus::xct::PageVersionAccess::address_, and foedus::xct::PageVersionAccess::observed_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const ReadXctAccess &v) |

Definition at line 44 of file xct_access.cpp.

References foedus::xct::ReadXctAccess::observed_owner_id_, foedus::xct::RecordXctAccess::ordinal_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::RecordXctAccess::owner_lock_id_, foedus::xct::ReadXctAccess::related_write_, and foedus::xct::RecordXctAccess::storage_id_.

| std::ostream& foedus::xct::operator<< | ( | std::ostream & | o, |

| const SysxctLockList & | v | ||

| ) |

Definition at line 53 of file sysxct_impl.cpp.

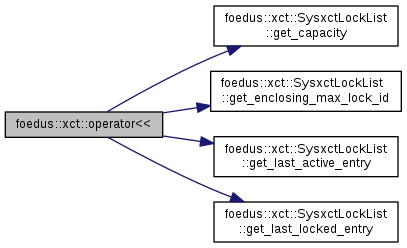

References foedus::xct::SysxctLockList::get_capacity(), foedus::xct::SysxctLockList::get_enclosing_max_lock_id(), foedus::xct::SysxctLockList::get_last_active_entry(), and foedus::xct::SysxctLockList::get_last_locked_entry().

| std::ostream& foedus::xct::operator<< | ( | std::ostream & | o, |

| const WriteXctAccess & | v | ||

| ) |

Definition at line 59 of file xct_access.cpp.

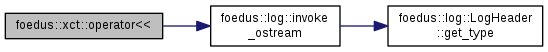

References foedus::log::invoke_ostream(), foedus::xct::WriteXctAccess::log_entry_, foedus::xct::RecordXctAccess::ordinal_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::RecordXctAccess::owner_lock_id_, foedus::xct::WriteXctAccess::related_read_, and foedus::xct::RecordXctAccess::storage_id_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const SysxctWorkspace &v) |

Definition at line 70 of file sysxct_impl.cpp.

References foedus::xct::SysxctWorkspace::lock_list_, and foedus::xct::SysxctWorkspace::running_sysxct_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const LockFreeReadXctAccess &v) |

Definition at line 75 of file xct_access.cpp.

References foedus::xct::LockFreeReadXctAccess::observed_owner_id_, foedus::xct::LockFreeReadXctAccess::owner_id_address_, and foedus::xct::LockFreeReadXctAccess::storage_id_.

| std::ostream& foedus::xct::operator<< | ( | std::ostream & | o, |

| const LockFreeWriteXctAccess & | v | ||

| ) |

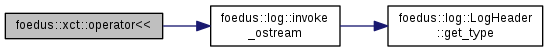

Definition at line 85 of file xct_access.cpp.

References foedus::log::invoke_ostream(), foedus::xct::LockFreeWriteXctAccess::log_entry_, and foedus::xct::LockFreeWriteXctAccess::storage_id_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const LockEntry &v) |

Debugging.

Definition at line 118 of file retrospective_lock_list.cpp.

References foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::taken_mode_, and foedus::xct::LockEntry::universal_lock_id_.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const CurrentLockList &v) |

Definition at line 132 of file retrospective_lock_list.cpp.

| std::ostream& foedus::xct::operator<< (std::ostream &o, const RetrospectiveLockList &v) |

Definition at line 145 of file retrospective_lock_list.cpp.

| std::ostream& foedus::xct::operator<< | ( | std::ostream & | o, |

| const Xct & | v | ||

| ) |

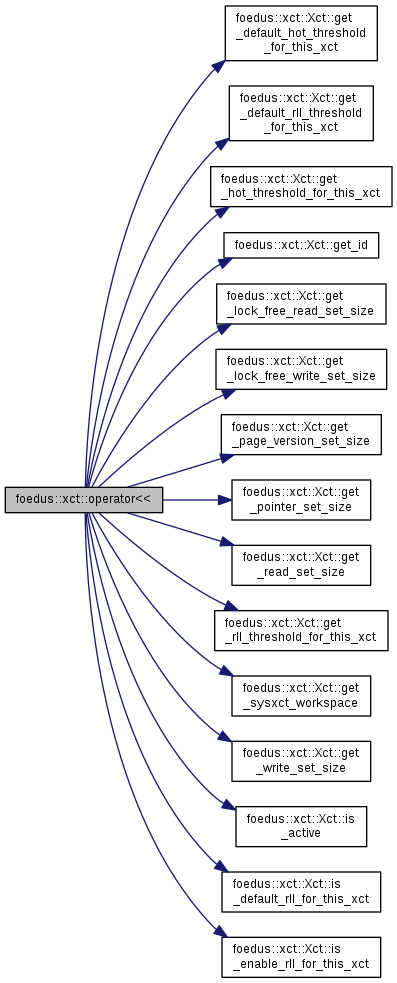

Definition at line 168 of file xct.cpp.

References foedus::xct::Xct::get_default_hot_threshold_for_this_xct(), foedus::xct::Xct::get_default_rll_threshold_for_this_xct(), foedus::xct::Xct::get_hot_threshold_for_this_xct(), foedus::xct::Xct::get_id(), foedus::xct::Xct::get_lock_free_read_set_size(), foedus::xct::Xct::get_lock_free_write_set_size(), foedus::xct::Xct::get_page_version_set_size(), foedus::xct::Xct::get_pointer_set_size(), foedus::xct::Xct::get_read_set_size(), foedus::xct::Xct::get_rll_threshold_for_this_xct(), foedus::xct::Xct::get_sysxct_workspace(), foedus::xct::Xct::get_write_set_size(), foedus::xct::Xct::is_active(), foedus::xct::Xct::is_default_rll_for_this_xct(), and foedus::xct::Xct::is_enable_rll_for_this_xct().

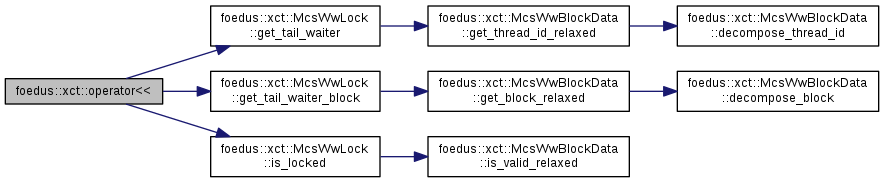

| std::ostream& foedus::xct::operator<< (std::ostream &o, const McsWwLock &v) |

Debug out operators.

Definition at line 171 of file xct_id.cpp.

References foedus::xct::McsWwLock::get_tail_waiter(), foedus::xct::McsWwLock::get_tail_waiter_block(), and foedus::xct::McsWwLock::is_locked().

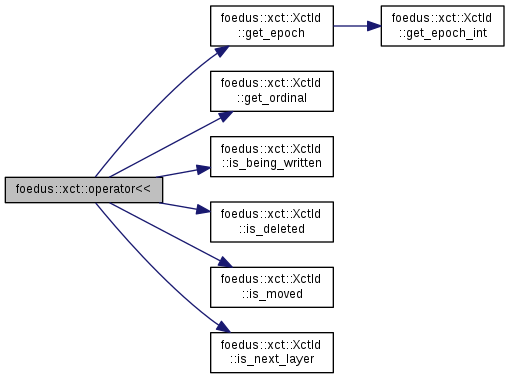

| std::ostream& foedus::xct::operator<< (std::ostream &o, const XctId &v) |

Definition at line 178 of file xct_id.cpp.

References foedus::xct::XctId::get_epoch(), foedus::xct::XctId::get_ordinal(), foedus::xct::XctId::is_being_written(), foedus::xct::XctId::is_deleted(), foedus::xct::XctId::is_moved(), and foedus::xct::XctId::is_next_layer().

| std::ostream& foedus::xct::operator<< (std::ostream &o, const LockableXctId &v) |

Definition at line 190 of file xct_id.cpp.

References foedus::xct::LockableXctId::lock_, and foedus::xct::LockableXctId::xct_id_.

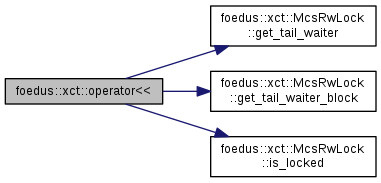

| std::ostream& foedus::xct::operator<< (std::ostream &o, const McsRwLock &v) |

Definition at line 195 of file xct_id.cpp.

References foedus::xct::McsRwLock::get_tail_waiter(), foedus::xct::McsRwLock::get_tail_waiter_block(), and foedus::xct::McsRwLock::is_locked().

| std::ostream& foedus::xct::operator<< (std::ostream &o, const RwLockableXctId &v) |

Definition at line 202 of file xct_id.cpp.

References foedus::xct::RwLockableXctId::lock_, and foedus::xct::RwLockableXctId::xct_id_.

|

inline |

Definition at line 1231 of file xct_id.hpp.

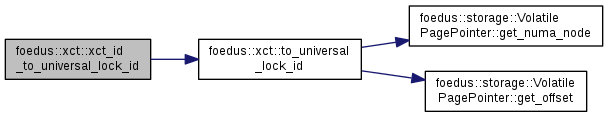

References to_universal_lock_id().

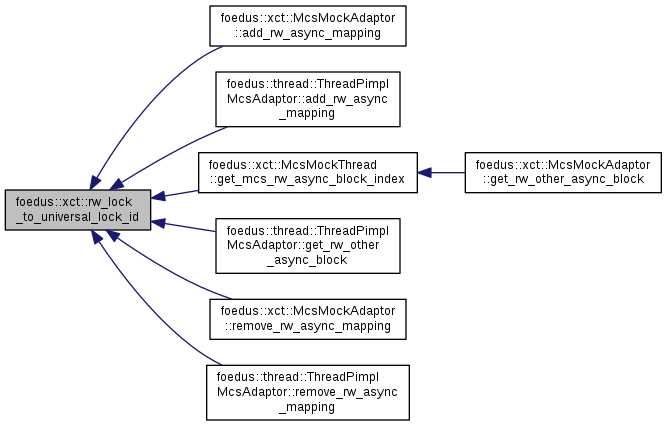

Referenced by foedus::xct::McsMockAdaptor< RW_BLOCK >::add_rw_async_mapping(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::add_rw_async_mapping(), foedus::xct::McsMockThread< RW_BLOCK >::get_mcs_rw_async_block_index(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_other_async_block(), foedus::xct::McsMockAdaptor< RW_BLOCK >::remove_rw_async_mapping(), and foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::remove_rw_async_mapping().

| void foedus::xct::spin_until | ( | COND | spin_until_cond | ) |

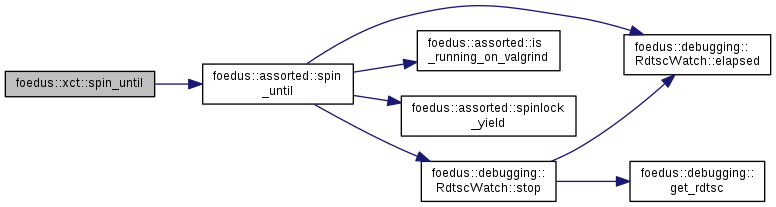

Definition at line 41 of file xct_mcs_impl.cpp.

References foedus::assorted::spin_until().

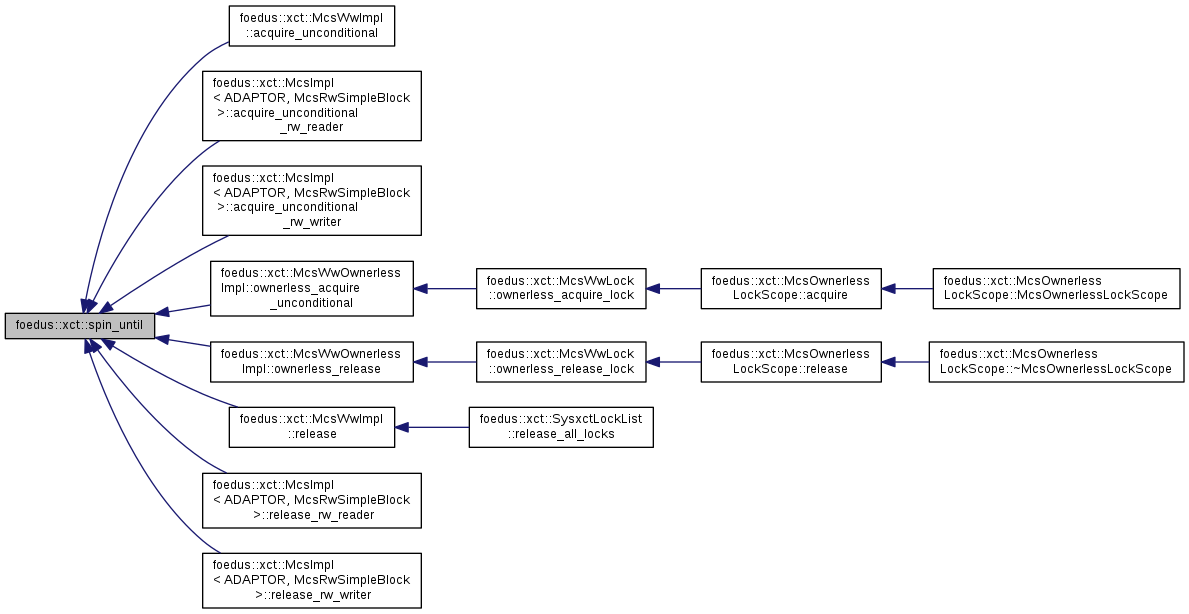

Referenced by foedus::xct::McsWwImpl< ADAPTOR >::acquire_unconditional(), foedus::xct::McsImpl< ADAPTOR, McsRwSimpleBlock >::acquire_unconditional_rw_reader(), foedus::xct::McsImpl< ADAPTOR, McsRwSimpleBlock >::acquire_unconditional_rw_writer(), foedus::xct::McsWwOwnerlessImpl::ownerless_acquire_unconditional(), foedus::xct::McsWwOwnerlessImpl::ownerless_release(), foedus::xct::McsWwImpl< ADAPTOR >::release(), foedus::xct::McsImpl< ADAPTOR, McsRwSimpleBlock >::release_rw_reader(), and foedus::xct::McsImpl< ADAPTOR, McsRwSimpleBlock >::release_rw_writer().

|

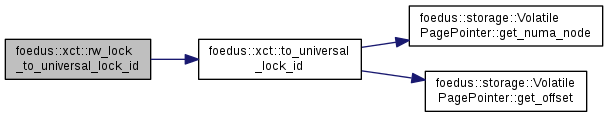

inline |

Definition at line 63 of file sysxct_impl.hpp.

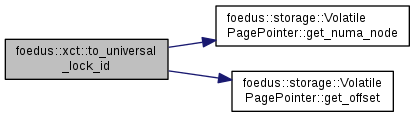

References foedus::storage::VolatilePagePointer::get_numa_node(), and foedus::storage::VolatilePagePointer::get_offset().

Referenced by foedus::xct::SysxctLockList::assert_sorted_impl(), foedus::xct::SysxctLockList::batch_get_or_add_entries(), foedus::xct::SysxctLockList::get_or_add_entry(), lock_assert_sorted(), foedus::xct::Xct::on_record_read(), foedus::xct::PageComparator::operator()(), rw_lock_to_universal_lock_id(), to_universal_lock_id(), and xct_id_to_universal_lock_id().

| UniversalLockId foedus::xct::to_universal_lock_id | ( | const memory::GlobalVolatilePageResolver & | resolver, |

| uintptr_t | lock_ptr | ||

| ) |

Always use this method rather than doing the conversion yourself.

Definition at line 36 of file xct_id.cpp.

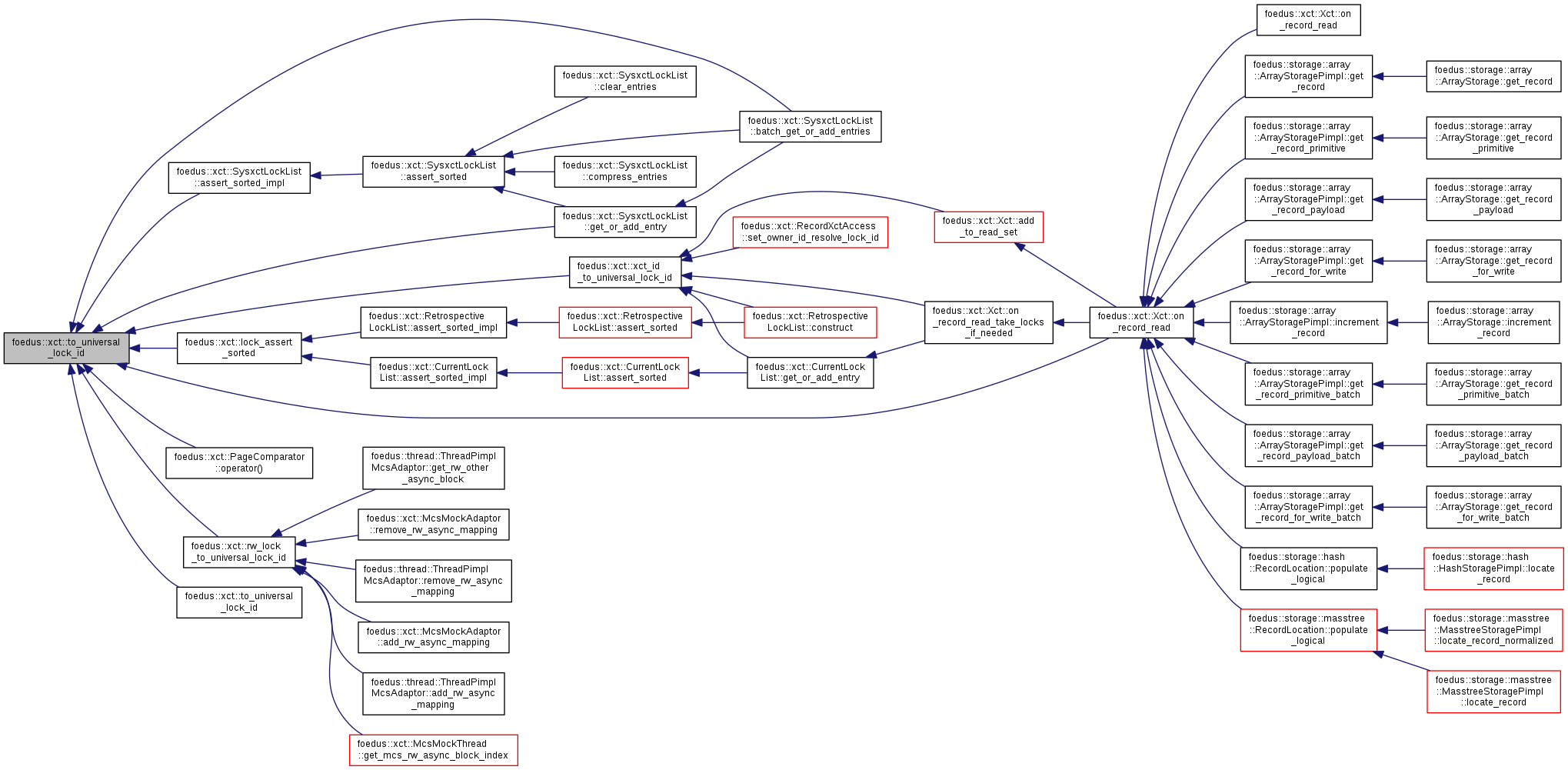

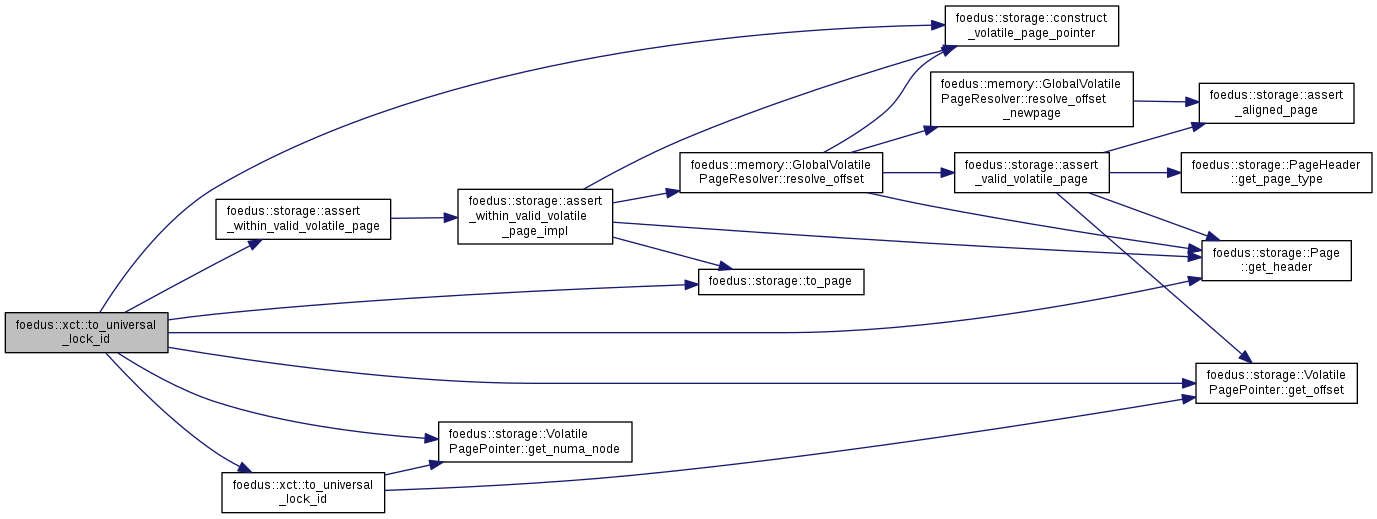

References ASSERT_ND, foedus::storage::assert_within_valid_volatile_page(), foedus::memory::GlobalVolatilePageResolver::begin_, foedus::storage::construct_volatile_page_pointer(), foedus::memory::GlobalVolatilePageResolver::end_, foedus::storage::Page::get_header(), foedus::storage::VolatilePagePointer::get_numa_node(), foedus::storage::VolatilePagePointer::get_offset(), foedus::memory::GlobalVolatilePageResolver::numa_node_count_, foedus::storage::to_page(), and to_universal_lock_id().

|

inline |

If you already have the numa_node, local_page_index, prefer this one.

Definition at line 1217 of file xct_id.hpp.

References kLockPageSize.
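One way such a conversion could produce a universally ordered ID is sketched below. The page size comes from kLockPageSize on this page; the bit layout, in particular placing the NUMA node above bit 48 (`kSketchNodeShift`), is an assumption for illustration and is not stated by this page. The function name `sketch_to_universal_lock_id` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

using UniversalLockId = uintptr_t;
const uint64_t kLockPageSize = 1 << 12;

// ASSUMPTION: the NUMA node occupies the bits above bit 48.
// This page does not state the actual layout.
const int kSketchNodeShift = 48;

static_assert(sizeof(UniversalLockId) == 8, "this sketch assumes a 64-bit platform");

// Combines node, page index and in-page offset into one totally ordered value.
UniversalLockId sketch_to_universal_lock_id(
    uint64_t numa_node, uint64_t local_page_index, uintptr_t lock_ptr) {
  // Only the offset within the page matters; the page's virtual address does not.
  const uint64_t in_page_offset = lock_ptr % kLockPageSize;
  return static_cast<UniversalLockId>(
      (numa_node << kSketchNodeShift) | (local_page_index * kLockPageSize + in_page_offset));
}
```

Because the result depends only on the node, the page index and the in-page offset, every thread derives the same ordering for the same set of locks, whatever address the page happens to be mapped at.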

|

inline |

Definition at line 1226 of file xct_id.hpp.

References to_universal_lock_id().

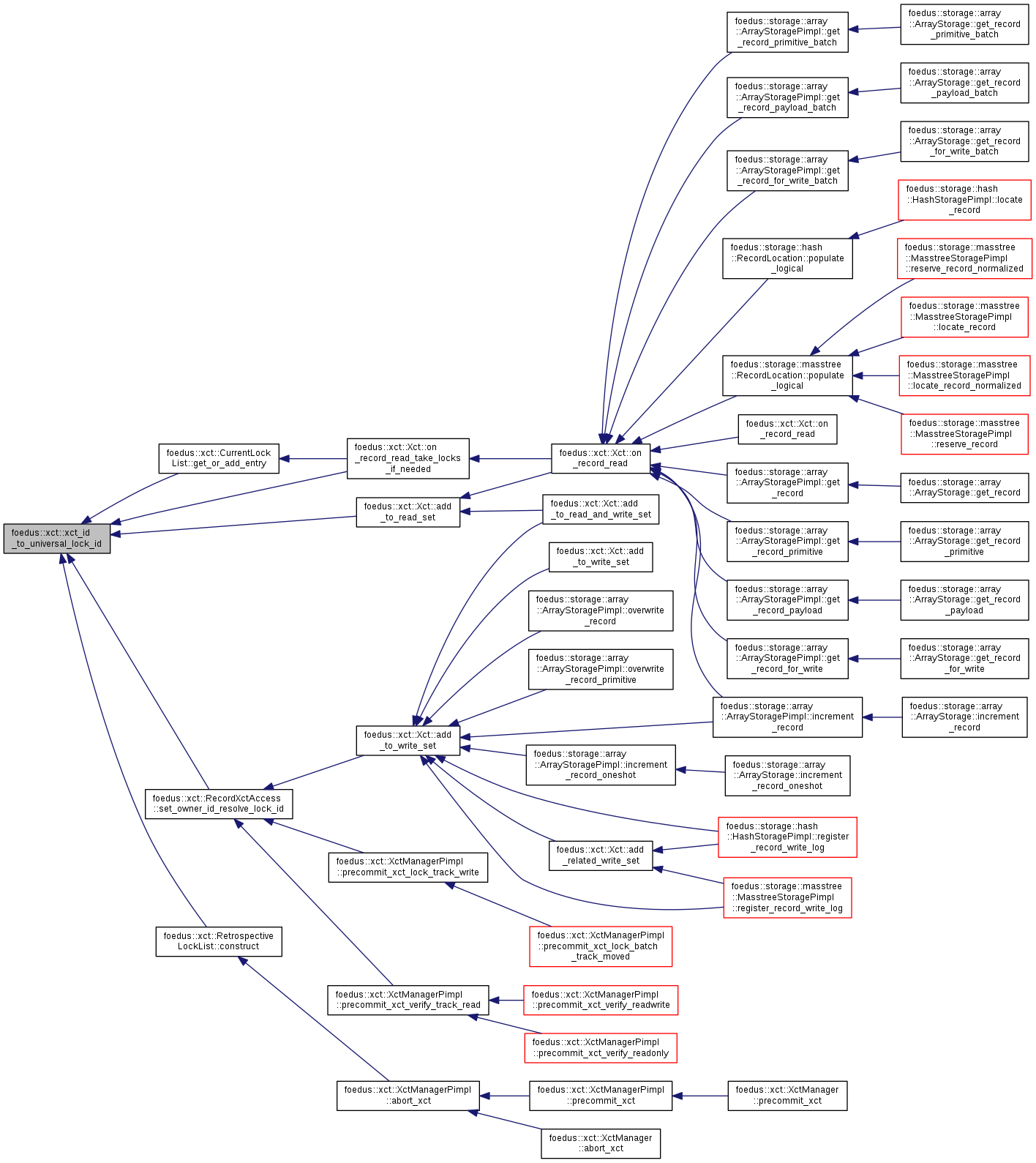

Referenced by foedus::xct::Xct::add_to_read_set(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::CurrentLockList::get_or_add_entry(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), and foedus::xct::RecordXctAccess::set_owner_id_resolve_lock_id().

| const LockListPosition foedus::xct::kLockListPositionInvalid = 0 |

Definition at line 149 of file xct_id.hpp.

Referenced by foedus::xct::SysxctLockList::assert_sorted_impl(), foedus::xct::SysxctLockList::batch_get_or_add_entries(), foedus::xct::CurrentLockList::batch_insert_write_placeholders(), foedus::xct::SysxctLockList::calculate_last_locked_entry_from(), foedus::xct::CurrentLockList::calculate_last_locked_entry_from(), foedus::xct::SysxctLockList::clear_entries(), foedus::xct::RetrospectiveLockList::clear_entries(), foedus::xct::CurrentLockList::clear_entries(), foedus::xct::SysxctLockList::compress_entries(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::CurrentLockListIteratorForWriteSet::CurrentLockListIteratorForWriteSet(), foedus::xct::CurrentLockList::get_max_locked_id(), foedus::xct::SysxctLockList::get_or_add_entry(), foedus::xct::CurrentLockList::get_or_add_entry(), foedus::xct::RetrospectiveLockList::is_empty(), foedus::xct::SysxctLockList::is_empty(), foedus::xct::CurrentLockList::is_empty(), foedus::xct::RetrospectiveLockList::is_valid_entry(), foedus::xct::SysxctLockList::is_valid_entry(), foedus::xct::CurrentLockList::is_valid_entry(), lock_assert_sorted(), lock_binary_search(), lock_lower_bound(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list(), foedus::xct::CurrentLockList::release_all_after(), foedus::xct::SysxctLockList::release_all_locks(), foedus::xct::CurrentLockList::try_async_multiple_locks(), foedus::xct::CurrentLockList::try_or_acquire_multiple_locks(), and foedus::xct::CurrentLockList::try_or_acquire_single_lock().

| const uint64_t foedus::xct::kLockPageSize = 1 << 12 |

Must be same as storage::kPageSize.

To avoid header dependencies, we declare a dedicated constant here and statically assert the equivalence in the cpp file.

Definition at line 1215 of file xct_id.hpp.

Referenced by to_universal_lock_id().

| const uint64_t foedus::xct::kMcsGuestId = -1 |

A special value meaning the lock is held by a non-regular guest that doesn't have a context.

See the MCSg paper for more details.

Definition at line 158 of file xct_id.hpp.

Referenced by foedus::xct::McsWwImpl< ADAPTOR >::acquire_unconditional(), foedus::xct::McsWwBlockData::is_guest_acquire(), foedus::xct::McsWwBlockData::is_guest_atomic(), foedus::xct::McsWwBlockData::is_guest_consume(), foedus::xct::McsWwBlockData::is_guest_relaxed(), foedus::xct::McsWwOwnerlessImpl::ownerless_acquire_try(), foedus::xct::McsWwOwnerlessImpl::ownerless_acquire_unconditional(), and foedus::xct::McsWwOwnerlessImpl::ownerless_release().

| constexpr uint32_t foedus::xct::kMcsMockDataPageFiller |

Definition at line 175 of file xct_mcs_adapter_impl.hpp.

| constexpr uint32_t foedus::xct::kMcsMockDataPageHeaderPad = kMcsMockDataPageHeaderSize - sizeof(storage::PageHeader) |

Definition at line 170 of file xct_mcs_adapter_impl.hpp.

| constexpr uint32_t foedus::xct::kMcsMockDataPageHeaderSize = 128U |

Definition at line 168 of file xct_mcs_adapter_impl.hpp.

| constexpr uint32_t foedus::xct::kMcsMockDataPageLocksPerPage |

Definition at line 172 of file xct_mcs_adapter_impl.hpp.

Referenced by foedus::xct::McsMockContext< RW_BLOCK >::get_rw_lock_address(), foedus::xct::McsMockContext< RW_BLOCK >::get_ww_lock_address(), foedus::xct::McsMockDataPage::init(), and foedus::xct::McsMockContext< RW_BLOCK >::init().

| const UniversalLockId foedus::xct::kNullUniversalLockId = 0 |

This never points to a valid lock, and also compares less than any valid lock.

Definition at line 137 of file xct_id.hpp.

Referenced by foedus::xct::McsMockAdaptor< RW_BLOCK >::add_rw_async_mapping(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::add_rw_async_mapping(), foedus::xct::SysxctLockList::assert_sorted_impl(), foedus::xct::SysxctLockEntry::clear(), foedus::thread::Thread::cll_giveup_all_locks_at_and_after(), foedus::thread::Thread::cll_release_all_locks_at_and_after(), foedus::xct::CurrentLockList::get_max_locked_id(), foedus::xct::CurrentLockList::giveup_all_at_and_after(), foedus::xct::SysxctLockList::init(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), foedus::xct::CurrentLockList::release_all_at_and_after(), foedus::xct::CurrentLockList::release_all_locks(), foedus::xct::McsMockAdaptor< RW_BLOCK >::remove_rw_async_mapping(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::remove_rw_async_mapping(), and run_nested_sysxct_impl().

| const uint16_t foedus::xct::kReadsetPrefetchBatch = 16 |

Definition at line 646 of file xct_manager_pimpl.cpp.

| const uint64_t foedus::xct::kXctIdBeingWrittenBit = 1ULL << 61 |

Definition at line 885 of file xct_id.hpp.

Referenced by foedus::xct::XctId::set_being_written().

| const uint64_t foedus::xct::kXctIdDeletedBit = 1ULL << 63 |

Definition at line 883 of file xct_id.hpp.

Referenced by foedus::xct::XctId::set_deleted().

| const uint64_t foedus::xct::kXctIdMaskEpoch = 0x0FFFFFFF00000000ULL |

Definition at line 888 of file xct_id.hpp.

| const uint64_t foedus::xct::kXctIdMaskOrdinal = 0x00000000FFFFFFFFULL |

Definition at line 889 of file xct_id.hpp.

| const uint64_t foedus::xct::kXctIdMaskSerializer = 0x0FFFFFFFFFFFFFFFULL |

Definition at line 887 of file xct_id.hpp.

Referenced by foedus::xct::XctId::clear_status_bits().

| const uint64_t foedus::xct::kXctIdMovedBit = 1ULL << 62 |

Definition at line 884 of file xct_id.hpp.

Referenced by foedus::xct::XctId::set_moved().

| const uint64_t foedus::xct::kXctIdNextLayerBit = 1ULL << 60 |

Definition at line 886 of file xct_id.hpp.

Referenced by foedus::xct::XctId::set_next_layer().
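Taken together, the kXctId* constants in this section partition a 64-bit word: the top four bits are status flags, the next 28 bits hold the epoch, and the low 32 bits hold the in-epoch ordinal (of which kMaxXctOrdinal allows values up to 2^24 - 1). The sketch below shows how those masks can be applied; the constant values are copied from this page, while the `sketch_*` helper names are hypothetical and are not the XctId member functions.

```cpp
#include <cassert>
#include <cstdint>

// Values copied from the constants documented above.
const uint64_t kXctIdDeletedBit = 1ULL << 63;
const uint64_t kXctIdMovedBit = 1ULL << 62;
const uint64_t kXctIdBeingWrittenBit = 1ULL << 61;
const uint64_t kXctIdNextLayerBit = 1ULL << 60;
const uint64_t kXctIdMaskSerializer = 0x0FFFFFFFFFFFFFFFULL;
const uint64_t kXctIdMaskEpoch = 0x0FFFFFFF00000000ULL;
const uint64_t kXctIdMaskOrdinal = 0x00000000FFFFFFFFULL;

// The epoch and ordinal masks together form the serializer mask,
// and the four status bits cover exactly the remaining top nibble.
static_assert((kXctIdMaskEpoch | kXctIdMaskOrdinal) == kXctIdMaskSerializer, "mask layout");
static_assert((kXctIdDeletedBit | kXctIdMovedBit | kXctIdBeingWrittenBit | kXctIdNextLayerBit)
                  == ~kXctIdMaskSerializer, "status bits");

// Hypothetical helpers; the names are illustrative only.
uint64_t sketch_make_word(uint32_t epoch, uint32_t ordinal) {
  return ((static_cast<uint64_t>(epoch) << 32) & kXctIdMaskEpoch) | ordinal;
}
uint32_t sketch_epoch(uint64_t word) {
  return static_cast<uint32_t>((word & kXctIdMaskEpoch) >> 32);
}
uint32_t sketch_ordinal(uint64_t word) {
  return static_cast<uint32_t>(word & kXctIdMaskOrdinal);
}
bool sketch_is_deleted(uint64_t word) { return (word & kXctIdDeletedBit) != 0; }
uint64_t sketch_clear_status_bits(uint64_t word) { return word & kXctIdMaskSerializer; }
```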