|

libfoedus-core

FOEDUS Core Library

|

RLL/CLL of a system transaction.

Unlike in a normal transaction, the lock list in a sysxct is simpler. A sysxct doesn't need a read-set or asynchronous locking. It immediately takes the locks required by the logic, and aborts if it cannot. Also unlike a normal xct, a sysxct locks records only a few times (counting "lock all records in this page" as one). We thus integrate RLL and CLL into this single list in system transactions. We might follow this approach in normal xct later.

This object is a POD. We use a fixed-size array.
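As a rough illustration of the design described above, the following sketch keeps lock entries in a fixed-size array that stays sorted by lock ID and reuses an existing entry when the same lock is requested twice. The names `MiniLockList`, `MiniEntry`, `kCapacity`, and `get_or_add` are hypothetical and heavily simplified; this is not the FOEDUS implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical, simplified stand-ins; not the FOEDUS types.
struct MiniEntry {
  std::uint64_t lock_id;  // plays the role of UniversalLockId
  bool locked;            // whether the lock is currently held
};

struct MiniLockList {
  // Small capacity for the sketch; the real list uses kMaxSysxctLocks = 1 << 10.
  static constexpr std::size_t kCapacity = 8;
  MiniEntry entries[kCapacity];  // fixed-size array, no heap allocation
  std::size_t count;

  void init() { count = 0; }
  bool is_full() const { return count == kCapacity; }

  // Returns the index of the entry for lock_id, inserting it at its sorted
  // position if it is absent. Returns kCapacity if the list is full.
  std::size_t get_or_add(std::uint64_t lock_id) {
    std::size_t pos = 0;
    while (pos < count && entries[pos].lock_id < lock_id) {
      ++pos;
    }
    if (pos < count && entries[pos].lock_id == lock_id) {
      return pos;  // reuse the existing entry
    }
    if (is_full()) {
      return kCapacity;
    }
    // Shift the tail by one slot to keep the array sorted.
    for (std::size_t i = count; i > pos; --i) {
      entries[i] = entries[i - 1];
    }
    entries[pos] = MiniEntry{lock_id, false};
    ++count;
    return pos;
  }
};
```

Because the array has a fixed capacity, a caller in this sketch has to treat a full list as a hard failure rather than growing the container.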

Definition at line 166 of file sysxct_impl.hpp.

#include <sysxct_impl.hpp>

Public Types | |

| enum | Constants { kMaxSysxctLocks = 1 << 10 } |

Public Member Functions | |

| SysxctLockList () | |

| ~SysxctLockList () | |

| SysxctLockList (const SysxctLockList &other)=delete | |

| SysxctLockList & | operator= (const SysxctLockList &other)=delete |

| void | init () |

| void | clear_entries (UniversalLockId enclosing_max_lock_id) |

| Remove all entries. | |

| void | compress_entries (UniversalLockId enclosing_max_lock_id) |

| Unlike clear_entries(), this is used when a sysxct is aborted and will be retried. More... | |

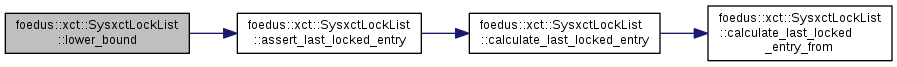

| LockListPosition | lower_bound (UniversalLockId lock) const |

| Analogous to std::lower_bound() for the given lock. | |

| template<typename MCS_ADAPTOR > | |

| ErrorCode | request_record_lock (MCS_ADAPTOR mcs_adaptor, storage::VolatilePagePointer page_id, RwLockableXctId *lock) |

| If not yet acquired, acquires a record lock and adds an entry to this list, re-sorting part of the list if necessary to keep it sorted. | |

| template<typename MCS_ADAPTOR > | |

| ErrorCode | batch_request_record_locks (MCS_ADAPTOR mcs_adaptor, storage::VolatilePagePointer page_id, uint32_t lock_count, RwLockableXctId **locks) |

| Used to acquire many locks in a page at once. | |

| template<typename MCS_ADAPTOR > | |

| ErrorCode | request_page_lock (MCS_ADAPTOR mcs_adaptor, storage::Page *page) |

| Acquires a page lock. | |

| template<typename MCS_ADAPTOR > | |

| ErrorCode | batch_request_page_locks (MCS_ADAPTOR mcs_adaptor, uint32_t lock_count, storage::Page **pages) |

| The interface is the same as the record version, but this one doesn't do much optimization. | |

| template<typename MCS_ADAPTOR > | |

| void | release_all_locks (MCS_ADAPTOR mcs_adaptor) |

| Releases all locks that were acquired. More... | |

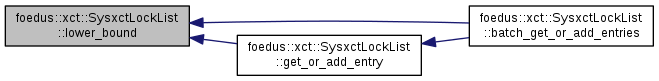

| LockListPosition | get_or_add_entry (storage::VolatilePagePointer page_id, uintptr_t lock_addr, bool page_lock) |

| LockListPosition | batch_get_or_add_entries (storage::VolatilePagePointer page_id, uint32_t lock_count, uintptr_t *lock_addr, bool page_lock) |

| Batched version of get_or_add_entry(). | |

| const SysxctLockEntry * | get_array () const |

| SysxctLockEntry * | get_array () |

| SysxctLockEntry * | get_entry (LockListPosition pos) |

| const SysxctLockEntry * | get_entry (LockListPosition pos) const |

| uint32_t | get_capacity () const |

| bool | is_full () const |

| When this returns true (the list is full), it's catastrophic. | |

| LockListPosition | get_last_active_entry () const |

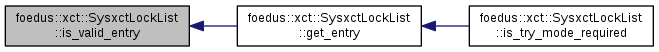

| bool | is_valid_entry (LockListPosition pos) const |

| bool | is_empty () const |

| void | assert_sorted () const __attribute__((always_inline)) |

| Inline definitions of SysxctLockList methods. | |

| void | assert_sorted_impl () const |

| SysxctLockEntry * | begin () |

| SysxctLockEntry * | end () |

| const SysxctLockEntry * | cbegin () const |

| const SysxctLockEntry * | cend () const |

| LockListPosition | to_pos (const SysxctLockEntry *entry) const |

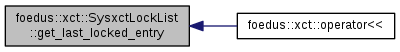

| LockListPosition | get_last_locked_entry () const |

| LockListPosition | calculate_last_locked_entry () const |

| Calculates last_locked_entry_ by actually checking the whole list. | |

| LockListPosition | calculate_last_locked_entry_from (LockListPosition from) const |

| Only searches among entries at or before "from". | |

| void | assert_last_locked_entry () const |

| UniversalLockId | get_enclosing_max_lock_id () const |

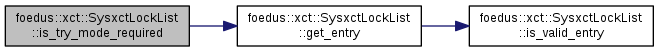

| bool | is_try_mode_required (LockListPosition pos) const |

Friends | |

| struct | TestIsTryRequired |

| std::ostream & | operator<< (std::ostream &o, const SysxctLockList &v) |

| Enumerator | |
|---|---|
| kMaxSysxctLocks | Maximum number of locks one system transaction might take. In most cases just a few. The worst case is page-split where a sysxct takes a page-full of records. |

Definition at line 170 of file sysxct_impl.hpp.

|

inline |

Definition at line 179 of file sysxct_impl.hpp.

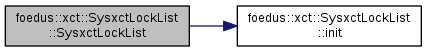

References init().

|

inline |

Definition at line 180 of file sysxct_impl.hpp.

|

delete |

|

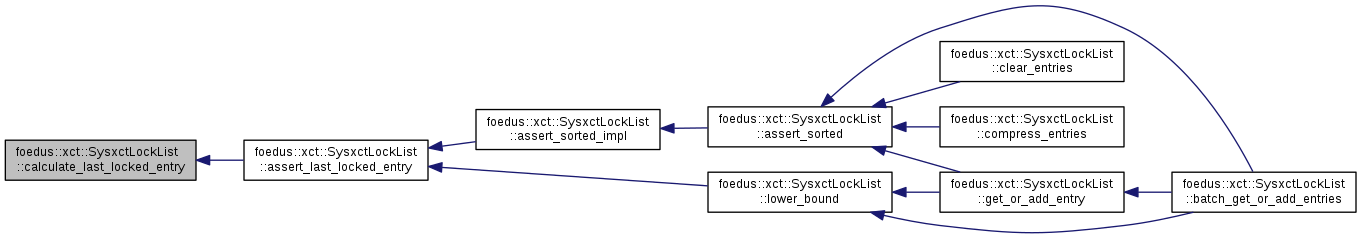

inline |

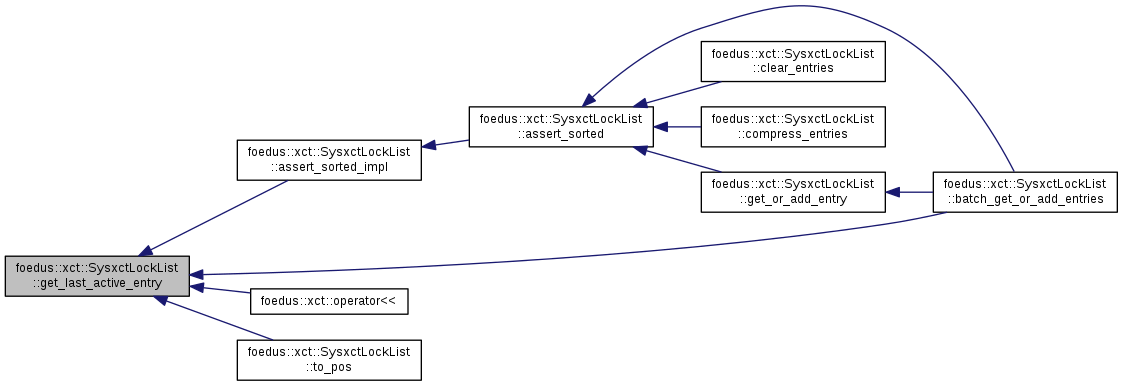

Definition at line 345 of file sysxct_impl.hpp.

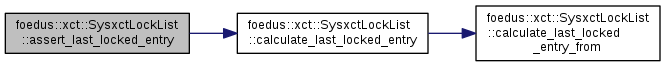

References ASSERT_ND, and calculate_last_locked_entry().

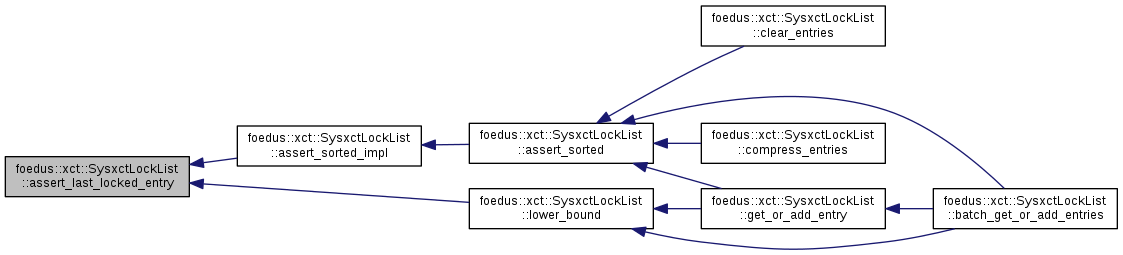

Referenced by assert_sorted_impl(), and lower_bound().

|

inline |

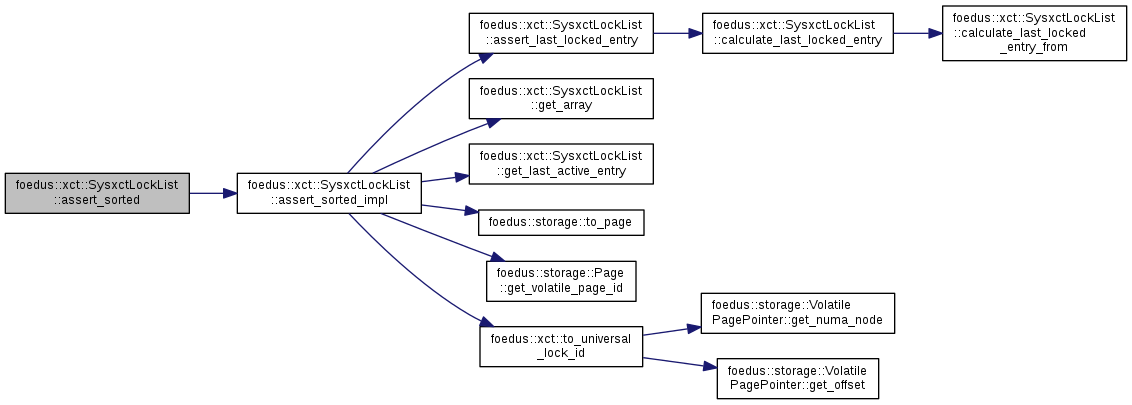

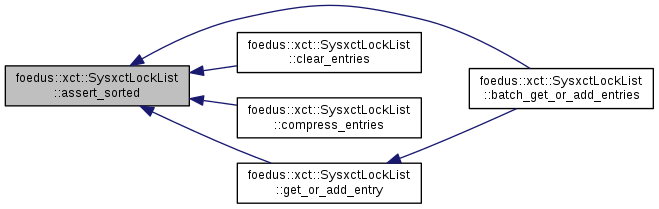

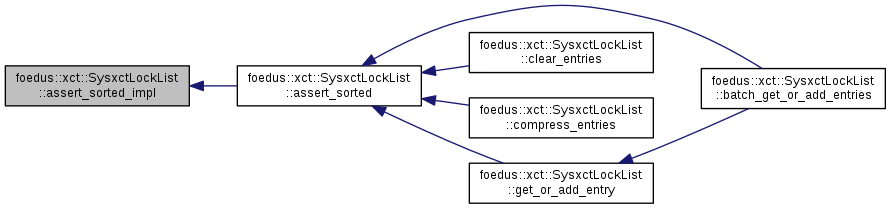

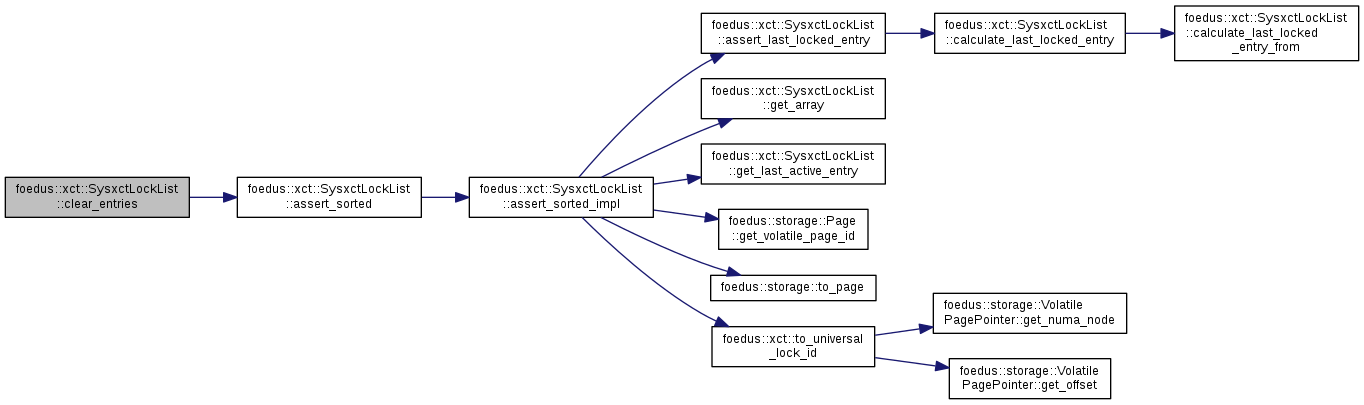

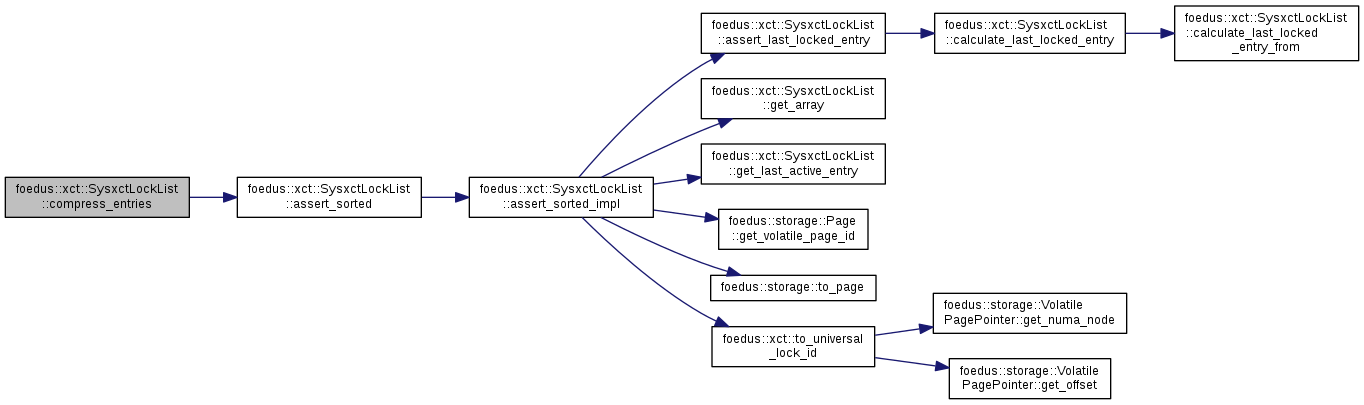

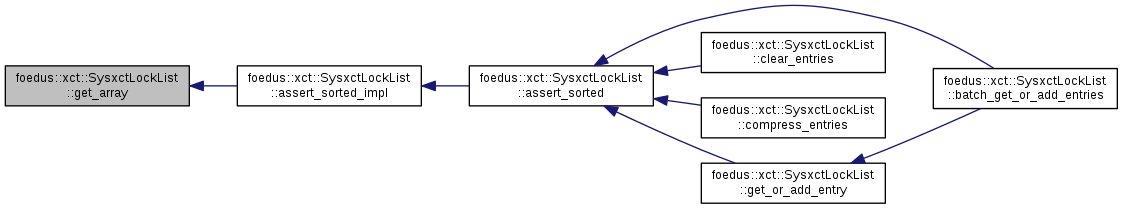

Inline definitions of SysxctLockList methods.

Definition at line 549 of file sysxct_impl.hpp.

References assert_sorted_impl().

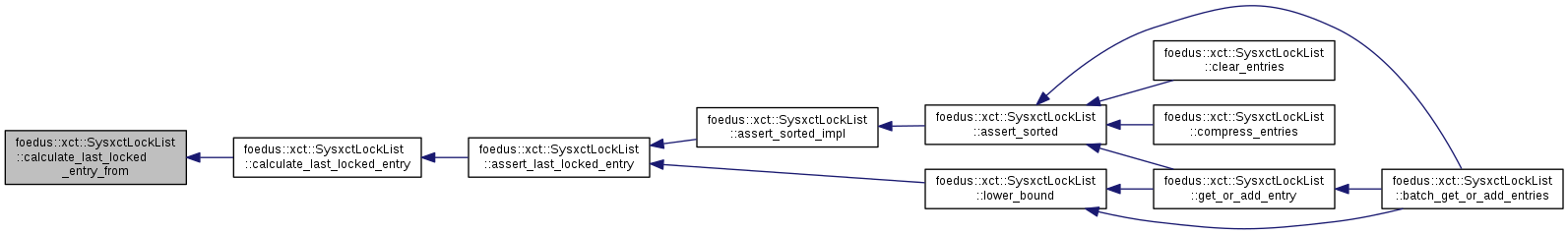

Referenced by batch_get_or_add_entries(), clear_entries(), compress_entries(), and get_or_add_entry().

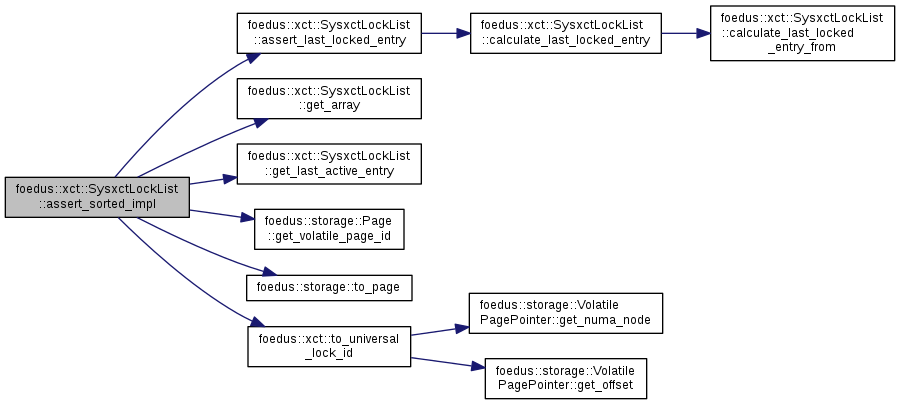

| void foedus::xct::SysxctLockList::assert_sorted_impl | ( | ) | const |

Definition at line 78 of file sysxct_impl.cpp.

References assert_last_locked_entry(), ASSERT_ND, get_array(), get_last_active_entry(), foedus::storage::Page::get_volatile_page_id(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNullUniversalLockId, foedus::storage::to_page(), and foedus::xct::to_universal_lock_id().

Referenced by assert_sorted().

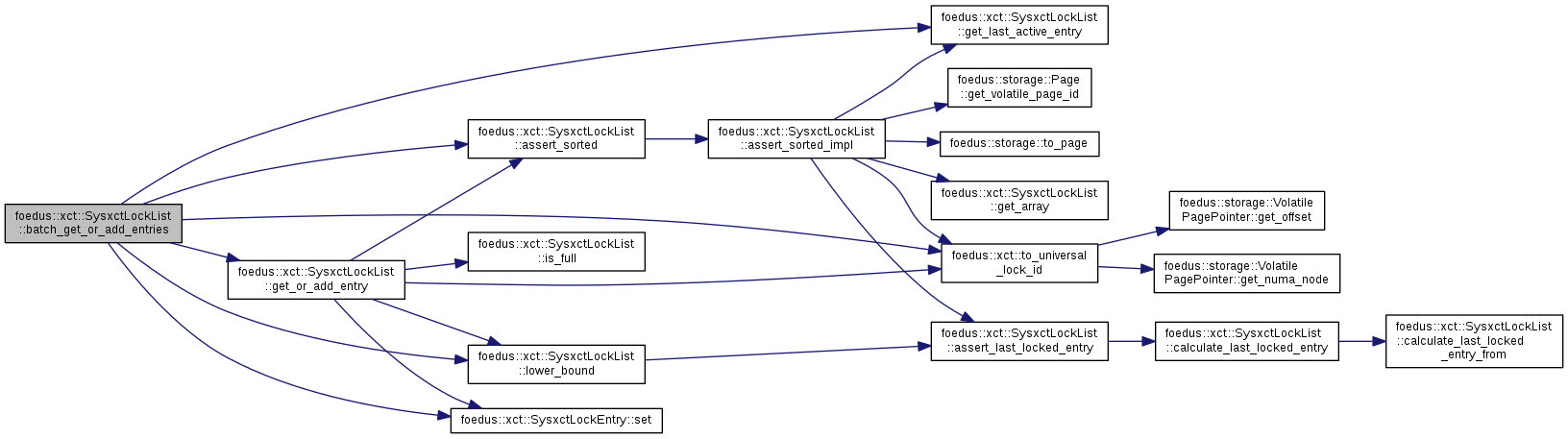

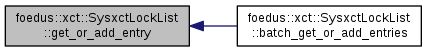

| LockListPosition foedus::xct::SysxctLockList::batch_get_or_add_entries | ( | storage::VolatilePagePointer | page_id, |

| uint32_t | lock_count, | ||

| uintptr_t * | lock_addr, | ||

| bool | page_lock | ||

| ) |

Batched version of get_or_add_entry().

Definition at line 152 of file sysxct_impl.cpp.

References ASSERT_ND, assert_sorted(), get_last_active_entry(), get_or_add_entry(), foedus::xct::kLockListPositionInvalid, kMaxSysxctLocks, lower_bound(), foedus::xct::SysxctLockEntry::set(), foedus::xct::to_universal_lock_id(), foedus::xct::SysxctLockEntry::universal_lock_id_, and UNLIKELY.

|

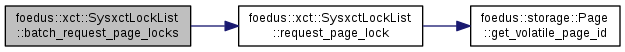

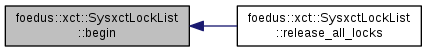

inline |

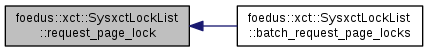

The interface is same as the record version, but this one doesn't do much optimization.

We shouldn't frequently lock many pages in sysxct. However, at least this one locks the pages in UniversalLockId order to avoid deadlocks.

Definition at line 252 of file sysxct_impl.hpp.

References CHECK_ERROR_CODE, foedus::kErrorCodeOk, and request_page_lock().
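The deadlock-avoidance idea of taking locks in one global order can be sketched as follows. This is only an illustration under stated assumptions: `MiniPage`, `lock_order_key`, `lock_pages_in_order`, and `unlock_pages` are hypothetical names, `std::mutex` stands in for the MCS page lock, and the page address stands in for UniversalLockId, which FOEDUS derives differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical stand-in for a page with an embedded lock; not a FOEDUS type.
struct MiniPage {
  std::mutex lock;
};

// Uses the page address as a simple proxy for a globally ordered lock ID.
inline std::uintptr_t lock_order_key(const MiniPage* page) {
  return reinterpret_cast<std::uintptr_t>(page);
}

// Locks every requested page in ascending key order and returns that order.
std::vector<MiniPage*> lock_pages_in_order(std::vector<MiniPage*> pages) {
  std::sort(pages.begin(), pages.end(),
            [](const MiniPage* a, const MiniPage* b) {
              return lock_order_key(a) < lock_order_key(b);
            });
  // Drop duplicates so that no page is locked twice by the same caller.
  pages.erase(std::unique(pages.begin(), pages.end()), pages.end());
  for (MiniPage* page : pages) {
    page->lock.lock();
  }
  return pages;
}

// Releases the locks in reverse order of acquisition.
void unlock_pages(const std::vector<MiniPage*>& locked) {
  for (auto it = locked.rbegin(); it != locked.rend(); ++it) {
    (*it)->lock.unlock();
  }
}
```

If every caller acquires locks in the same ascending order, two callers cannot each hold a lock that the other is waiting for, which is why a consistent order rules out this kind of deadlock.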

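| template<typename MCS_ADAPTOR > ErrorCode foedus::xct::SysxctLockList::batch_request_record_locks ( MCS_ADAPTOR mcs_adaptor, storage::VolatilePagePointer page_id, uint32_t lock_count, RwLockableXctId ** locks ) | inline |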

Used to acquire many locks in a page at once.

This reduces sorting cost.

Definition at line 230 of file sysxct_impl.hpp.
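One way a batched request can reduce sorting cost, compared with inserting entries one at a time, is to sort the new IDs once and merge them into the existing sorted list in a single pass. The sketch below shows that general technique; `batch_add_sorted` is a hypothetical helper that uses `std::vector` for brevity, whereas the real list is a fixed-size array, and the actual FOEDUS logic may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Adds a batch of lock IDs to an already-sorted list with one merge pass.
// Hypothetical helper for illustration only.
void batch_add_sorted(std::vector<std::uint64_t>* sorted_ids,
                      std::vector<std::uint64_t> batch) {
  // Sort the (small) batch once instead of shifting the list per insert.
  std::sort(batch.begin(), batch.end());
  const auto old_size = static_cast<std::ptrdiff_t>(sorted_ids->size());
  sorted_ids->insert(sorted_ids->end(), batch.begin(), batch.end());
  // Merge the two sorted runs [0, old_size) and [old_size, end).
  std::inplace_merge(sorted_ids->begin(), sorted_ids->begin() + old_size,
                     sorted_ids->end());
  // A lock that was already present must keep a single entry.
  sorted_ids->erase(std::unique(sorted_ids->begin(), sorted_ids->end()),
                    sorted_ids->end());
}
```

For example, adding the batch `{60, 20, 40, 50}` to the list `{10, 40, 70}` leaves the list as `{10, 20, 40, 50, 60, 70}`.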

|

inline |

Definition at line 316 of file sysxct_impl.hpp.

Referenced by release_all_locks().

|

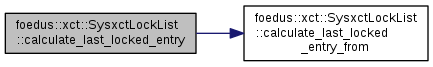

inline |

Calculate last_locked_entry_ by really checking the whole list.

Usually for sanity checks

Definition at line 333 of file sysxct_impl.hpp.

References calculate_last_locked_entry_from().

Referenced by assert_last_locked_entry().

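| LockListPosition foedus::xct::SysxctLockList::calculate_last_locked_entry_from ( LockListPosition from ) const | inline |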

Only searches among entries at or before "from".

Definition at line 337 of file sysxct_impl.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by calculate_last_locked_entry().
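A backward scan of this kind might look like the sketch below. It assumes 1-based positions with 0 meaning "invalid", so slot 0 of the array is unused; that convention, along with the names `LockedEntry`, `Position`, `kInvalidPosition`, and `last_locked_entry_from`, is an assumption made for the illustration rather than something stated on this page.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical simplified entry; not the FOEDUS SysxctLockEntry.
struct LockedEntry {
  std::uint64_t lock_id;
  bool locked;
};

using Position = std::uint32_t;
// Assumption for this sketch: position 0 is reserved to mean "no entry".
constexpr Position kInvalidPosition = 0;

// Scans backward from `from` (inclusive) and returns the last position whose
// lock is held, or kInvalidPosition if no entry at or before `from` is locked.
Position last_locked_entry_from(const LockedEntry* array, Position from) {
  for (Position pos = from; pos > kInvalidPosition; --pos) {
    if (array[pos].locked) {
      return pos;
    }
  }
  return kInvalidPosition;
}
```

Calling it with the last active position checks the whole list, which mirrors how calculate_last_locked_entry() is documented to delegate to calculate_last_locked_entry_from().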

|

inline |

Definition at line 318 of file sysxct_impl.hpp.

|

inline |

Definition at line 319 of file sysxct_impl.hpp.

| void foedus::xct::SysxctLockList::clear_entries ( UniversalLockId enclosing_max_lock_id ) | inline |

Removes all entries.

This is used when a sysxct starts a fresh new transaction, not a retry.

Definition at line 193 of file sysxct_impl.hpp.

References ASSERT_ND, assert_sorted(), and foedus::xct::kLockListPositionInvalid.

| void foedus::xct::SysxctLockList::compress_entries | ( | UniversalLockId | enclosing_max_lock_id | ) |

Unlike clear_entries(), this is used when a sysxct is aborted and will be retried.

We 'inherit' some entries (although all of them must be released) to help guide next runs.

Definition at line 237 of file sysxct_impl.cpp.

References ASSERT_ND, assert_sorted(), foedus::xct::kLockListPositionInvalid, and foedus::xct::SysxctLockEntry::used_in_this_run_.
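The following sketch shows one plausible reading of "inheriting" entries across a retry: entries that were touched in the aborted run are kept, in sorted order and unlocked, while untouched ones are dropped. The exact retention rule is an assumption inferred from the reference to `used_in_this_run_`; `RetryEntry` and `compress` are hypothetical names, not FOEDUS code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical simplified entry; not the FOEDUS SysxctLockEntry.
struct RetryEntry {
  std::uint64_t lock_id;
  bool locked;
  bool used_in_this_run;
};

// Keeps only the entries used in the aborted run, preserving their order,
// and returns the new entry count. All locks must already be released.
std::size_t compress(RetryEntry* entries, std::size_t count) {
  std::size_t kept = 0;
  for (std::size_t i = 0; i < count; ++i) {
    assert(!entries[i].locked);  // precondition: nothing is still held
    if (!entries[i].used_in_this_run) {
      continue;  // not touched in this run, so it is not inherited
    }
    entries[kept] = entries[i];
    entries[kept].used_in_this_run = false;  // reset for the next run
    ++kept;
  }
  return kept;
}
```

Because the surviving entries stay in sorted order, the retried run in this sketch starts with a list that already names the locks it is likely to need.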

|

inline |

Definition at line 317 of file sysxct_impl.hpp.

Referenced by release_all_locks().

|

inline |

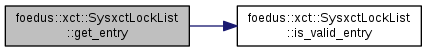

Definition at line 293 of file sysxct_impl.hpp.

Referenced by assert_sorted_impl().

|

inline |

Definition at line 294 of file sysxct_impl.hpp.

|

inline |

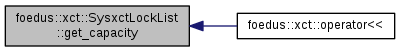

Definition at line 303 of file sysxct_impl.hpp.

References kMaxSysxctLocks.

Referenced by foedus::xct::operator<<().

|

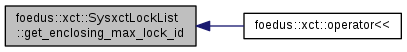

inline |

Definition at line 352 of file sysxct_impl.hpp.

Referenced by foedus::xct::operator<<().

|

inline |

Definition at line 295 of file sysxct_impl.hpp.

References ASSERT_ND, and is_valid_entry().

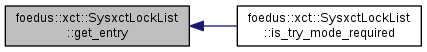

Referenced by is_try_mode_required().

|

inline |

Definition at line 299 of file sysxct_impl.hpp.

References ASSERT_ND, and is_valid_entry().

|

inline |

Definition at line 306 of file sysxct_impl.hpp.

Referenced by assert_sorted_impl(), batch_get_or_add_entries(), foedus::xct::operator<<(), and to_pos().

|

inline |

Definition at line 331 of file sysxct_impl.hpp.

Referenced by foedus::xct::operator<<().

| LockListPosition foedus::xct::SysxctLockList::get_or_add_entry | ( | storage::VolatilePagePointer | page_id, |

| uintptr_t | lock_addr, | ||

| bool | page_lock | ||

| ) |

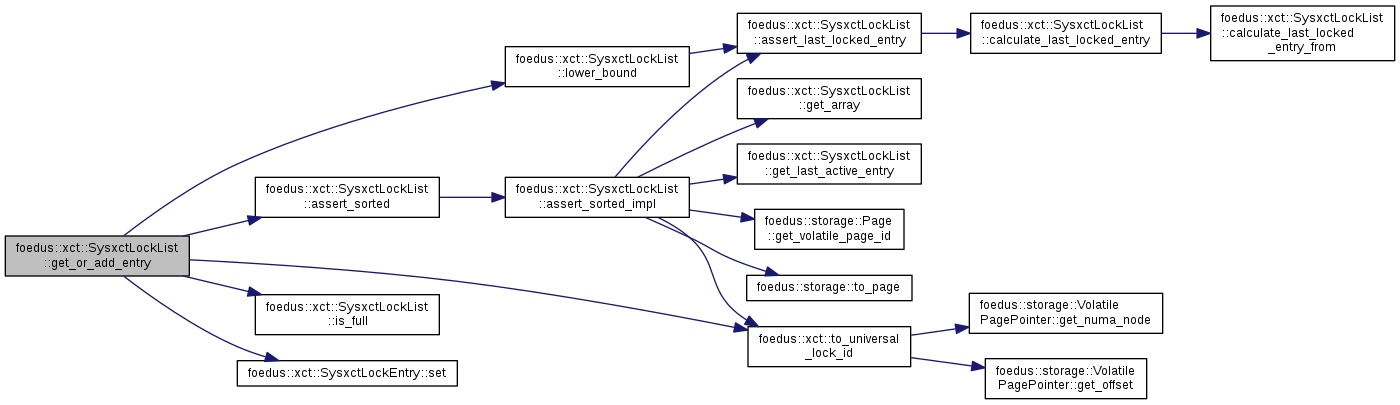

Definition at line 103 of file sysxct_impl.cpp.

References ASSERT_ND, assert_sorted(), is_full(), foedus::xct::kLockListPositionInvalid, lower_bound(), foedus::xct::SysxctLockEntry::set(), foedus::xct::to_universal_lock_id(), and UNLIKELY.

Referenced by batch_get_or_add_entries().

|

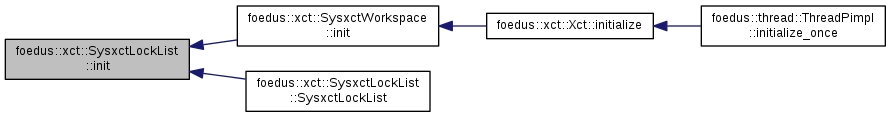

inline |

Definition at line 186 of file sysxct_impl.hpp.

References foedus::xct::kNullUniversalLockId.

Referenced by foedus::xct::SysxctWorkspace::init(), and SysxctLockList().

|

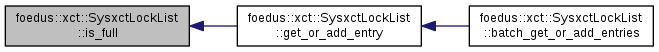

inline |

Definition at line 310 of file sysxct_impl.hpp.

References foedus::xct::kLockListPositionInvalid.

| bool foedus::xct::SysxctLockList::is_full ( ) const | inline |

When this returns true (the list is full), it's catastrophic.

Definition at line 305 of file sysxct_impl.hpp.

References kMaxSysxctLocks.

Referenced by get_or_add_entry().

|

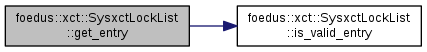

inline |

Definition at line 354 of file sysxct_impl.hpp.

References get_entry(), and foedus::xct::SysxctLockEntry::universal_lock_id_.

|

inline |

Definition at line 307 of file sysxct_impl.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by get_entry().

| LockListPosition foedus::xct::SysxctLockList::lower_bound | ( | UniversalLockId | lock | ) | const |

Analogous to std::lower_bound() for the given lock.

Data manipulation (search/add/etc)

Definition at line 98 of file sysxct_impl.cpp.

References assert_last_locked_entry().

Referenced by batch_get_or_add_entries(), and get_or_add_entry().
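For readers unfamiliar with the std::lower_bound() analogy, the sketch below applies the standard algorithm to a sorted array of entries keyed by lock ID. `SortedEntry` and `lower_bound_index` are hypothetical names, and the sketch returns a 0-based index (or `count` when no entry qualifies), which may not match the position convention or the not-found value of the real method.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical simplified entry; not the FOEDUS SysxctLockEntry.
struct SortedEntry {
  std::uint64_t lock_id;
};

// Returns the index of the first entry whose lock_id is not less than `lock`,
// or `count` if every entry is smaller. `entries` must be sorted by lock_id.
std::size_t lower_bound_index(const SortedEntry* entries, std::size_t count,
                              std::uint64_t lock) {
  const SortedEntry* it = std::lower_bound(
      entries, entries + count, lock,
      [](const SortedEntry& entry, std::uint64_t value) {
        return entry.lock_id < value;
      });
  return static_cast<std::size_t>(it - entries);
}
```

The returned index is both where an existing entry for the lock would be found and where a new entry would have to be inserted to keep the list sorted, which is presumably why get_or_add_entry() and batch_get_or_add_entries() rely on lower_bound().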

|

delete |

|

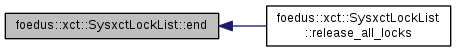

inline |

Releases all locks that were acquired.

Entries are left as they were. You should then call clear_entries() or compress_entries().

Definition at line 647 of file sysxct_impl.hpp.

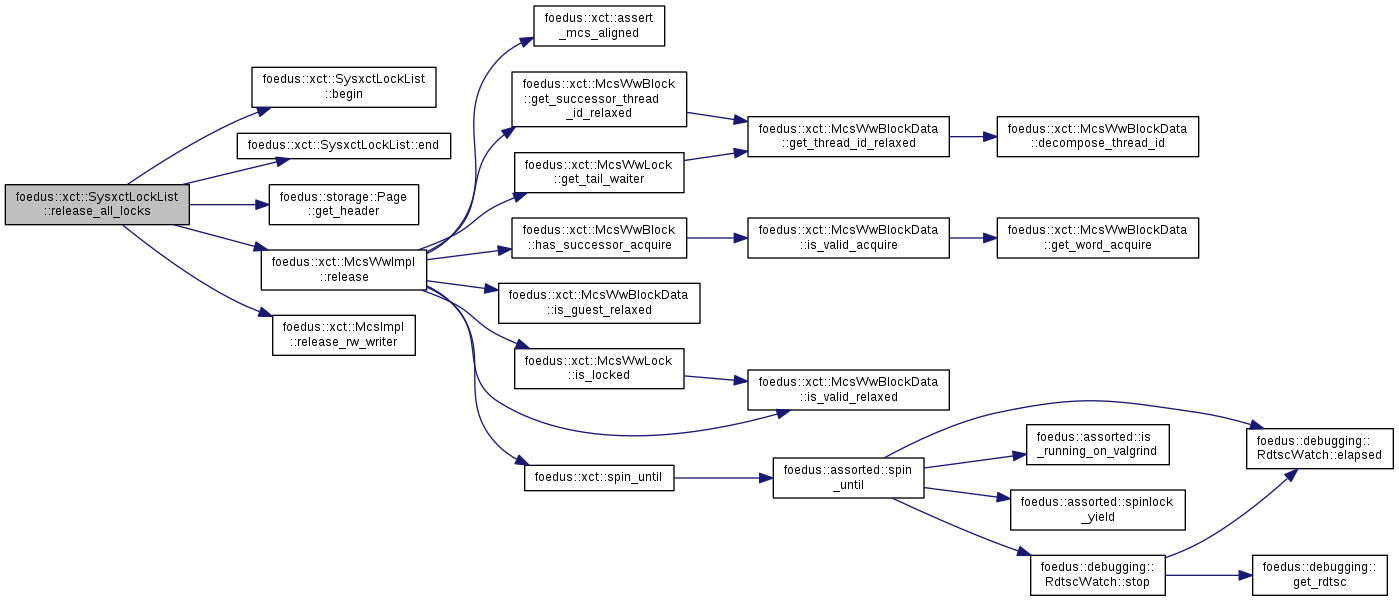

References begin(), end(), foedus::storage::Page::get_header(), foedus::xct::kLockListPositionInvalid, foedus::storage::PageVersion::lock_, foedus::storage::PageHeader::page_version_, foedus::xct::McsWwImpl< ADAPTOR >::release(), and foedus::xct::McsImpl< ADAPTOR, RW_BLOCK >::release_rw_writer().
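The call order described above (release first, then either clear or compress) can be sketched with a mock. `MockLockList` and `finish_attempt` are hypothetical; the mock omits the real parameters (`mcs_adaptor`, `enclosing_max_lock_id`) and only tracks counts, so it shows the sequencing rather than the actual behavior.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical mock that only tracks how many entries exist and are locked.
struct MockLockList {
  std::size_t entry_count = 3;
  std::size_t locked_count = 3;

  // Releases every lock but leaves the entries in place.
  void release_all_locks() { locked_count = 0; }

  // Drops all entries; used when the next transaction starts fresh.
  void clear_entries() {
    assert(locked_count == 0);
    entry_count = 0;
  }

  // Keeps entries as hints for a retry (the mock keeps all of them).
  void compress_entries() { assert(locked_count == 0); }
};

// Ends one sysxct attempt: release locks first, then tidy the entry list.
void finish_attempt(MockLockList* list, bool will_retry) {
  list->release_all_locks();
  if (will_retry) {
    list->compress_entries();
  } else {
    list->clear_entries();
  }
}
```

In this sketch both cleanup paths assert that no lock is still held, reflecting the requirement that entries handed to clear_entries() or compress_entries() have already been released.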

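| template<typename MCS_ADAPTOR > ErrorCode foedus::xct::SysxctLockList::request_page_lock ( MCS_ADAPTOR mcs_adaptor, storage::Page * page ) | inline |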

Acquires a page lock.

Definition at line 241 of file sysxct_impl.hpp.

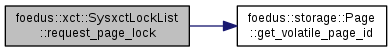

References foedus::storage::Page::get_volatile_page_id().

Referenced by batch_request_page_locks().

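| template<typename MCS_ADAPTOR > ErrorCode foedus::xct::SysxctLockList::request_record_lock ( MCS_ADAPTOR mcs_adaptor, storage::VolatilePagePointer page_id, RwLockableXctId * lock ) | inline |

If not yet acquired, acquires a record lock and adds an entry to this list, re-sorting part of the list if necessary to keep it sorted.

If there is an existing entry for the lock, it reuses the entry.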

Definition at line 220 of file sysxct_impl.hpp.

|

inline |

Definition at line 320 of file sysxct_impl.hpp.

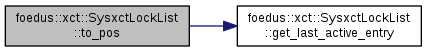

References ASSERT_ND, and get_last_active_entry().

|

friend |

Definition at line 53 of file sysxct_impl.cpp.

|

friend |

Definition at line 168 of file sysxct_impl.hpp.