libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

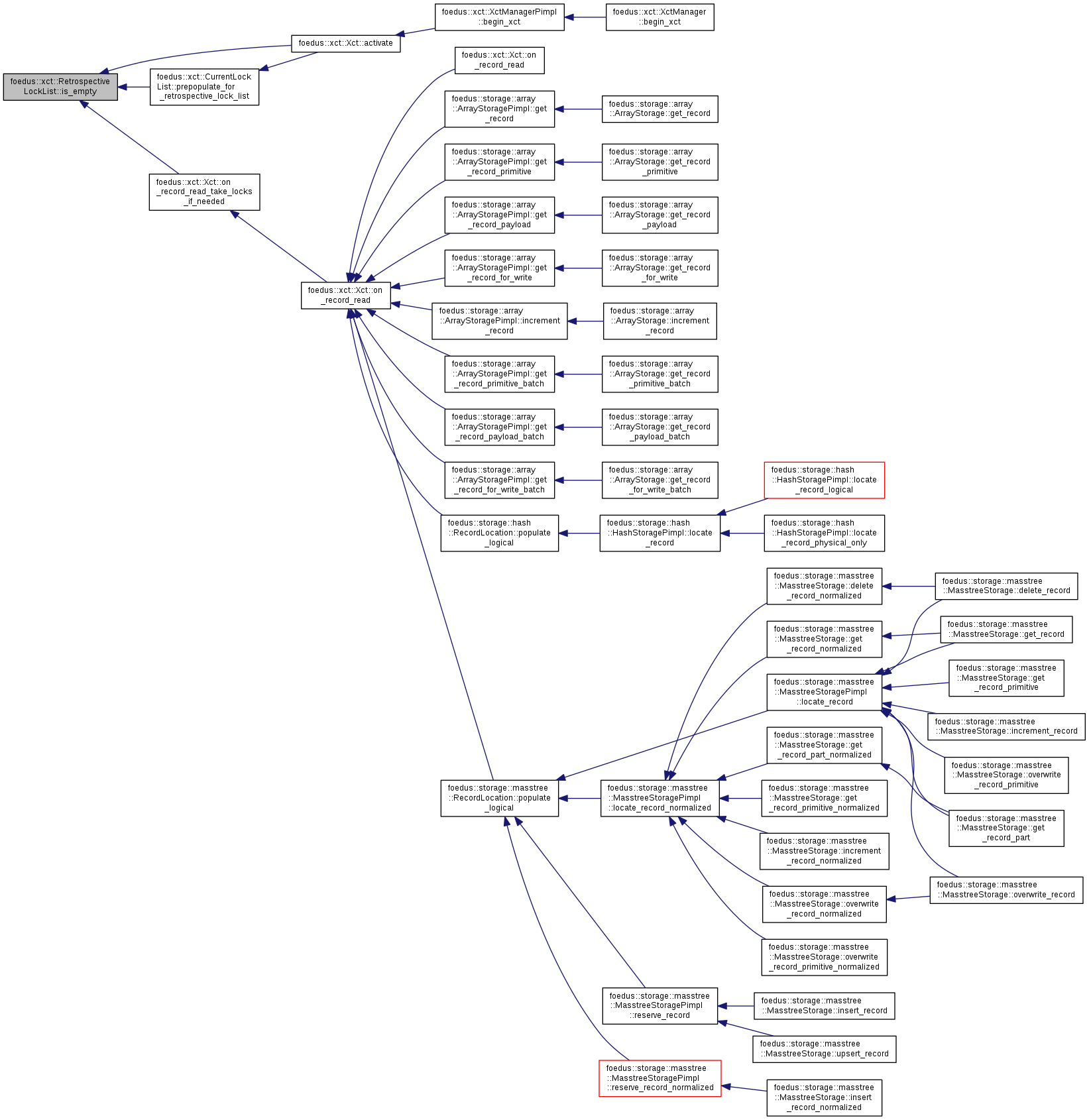

Retrospective lock list (RLL).

This is NOT a POD because we need dynamic memory for the list.

Definition at line 189 of file retrospective_lock_list.hpp.

#include <retrospective_lock_list.hpp>

Public Member Functions | |

| RetrospectiveLockList () | |

| Init/Uninit. | |

| ~RetrospectiveLockList () | |

| void | init (LockEntry *array, uint32_t capacity, const memory::GlobalVolatilePageResolver &resolver) |

| void | uninit () |

| void | clear_entries () |

| LockListPosition | binary_search (UniversalLockId lock) const |

| Analogous to std::binary_search() for the given lock. | |

| LockListPosition | lower_bound (UniversalLockId lock) const |

| Analogous to std::lower_bound() for the given lock. | |

| void | construct (thread::Thread *context, uint32_t read_lock_threshold) |

| Fill out this retrospective lock list for the next run of the given transaction. | |

| const LockEntry * | get_array () const |

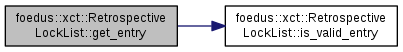

| LockEntry * | get_entry (LockListPosition pos) |

| const LockEntry * | get_entry (LockListPosition pos) const |

| uint32_t | get_capacity () const |

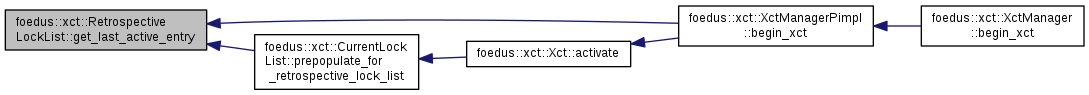

| LockListPosition | get_last_active_entry () const |

| bool | is_valid_entry (LockListPosition pos) const |

| bool | is_empty () const |

| void | assert_sorted () const __attribute__((always_inline)) |

| void | assert_sorted_impl () const |

| LockEntry * | begin () |

| LockEntry * | end () |

| const LockEntry * | cbegin () const |

| const LockEntry * | cend () const |

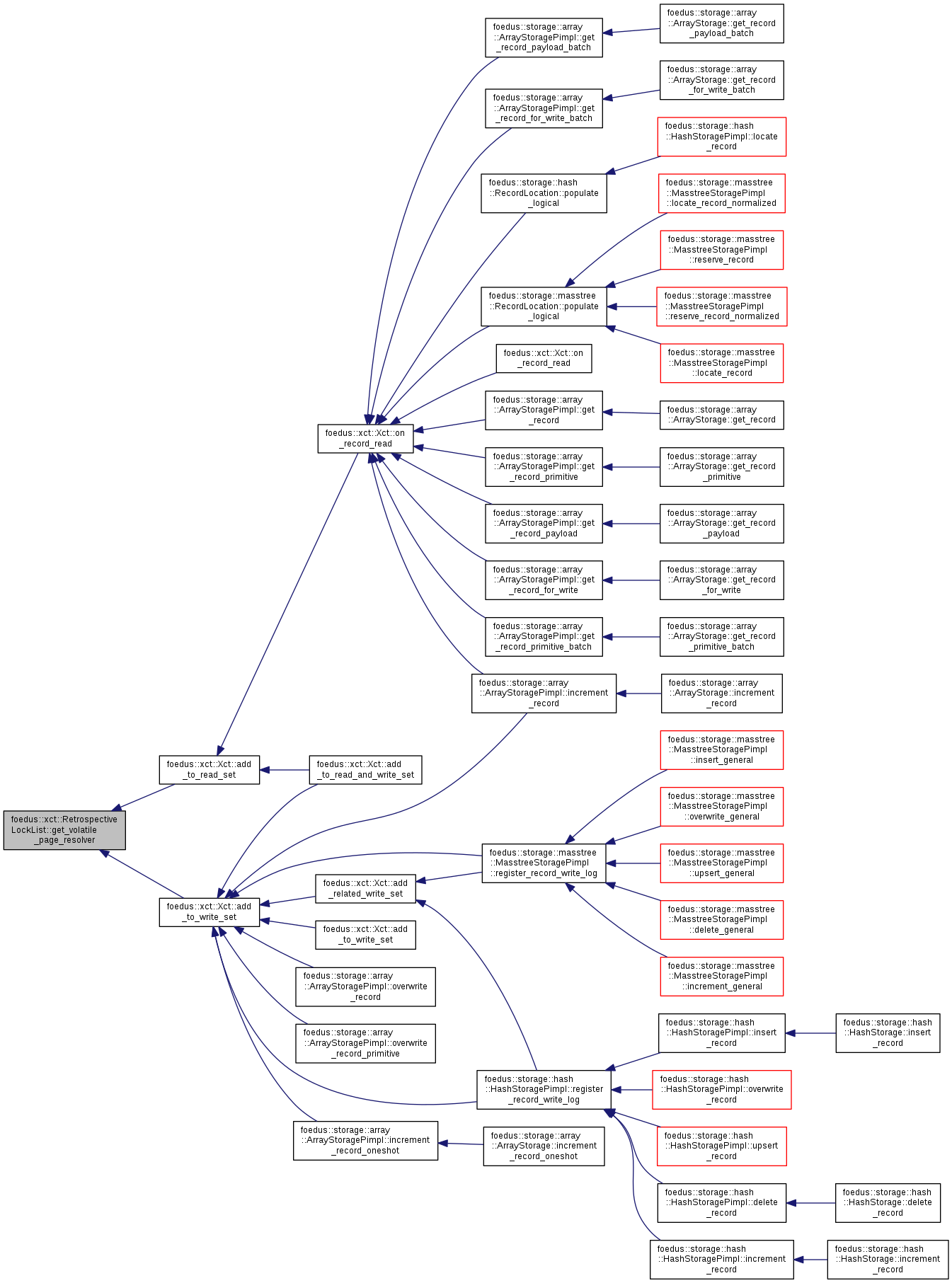

| const memory::GlobalVolatilePageResolver & | get_volatile_page_resolver () const |

Friends | |

| std::ostream & | operator<< (std::ostream &o, const RetrospectiveLockList &v) |

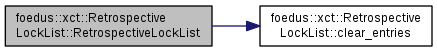

| foedus::xct::RetrospectiveLockList::RetrospectiveLockList | ( | ) |

Init/Uninit.

Definition at line 39 of file retrospective_lock_list.cpp.

References clear_entries().

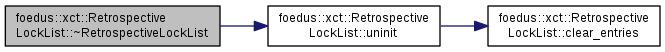

| foedus::xct::RetrospectiveLockList::~RetrospectiveLockList | ( | ) |

Definition at line 45 of file retrospective_lock_list.cpp.

References uninit().

|

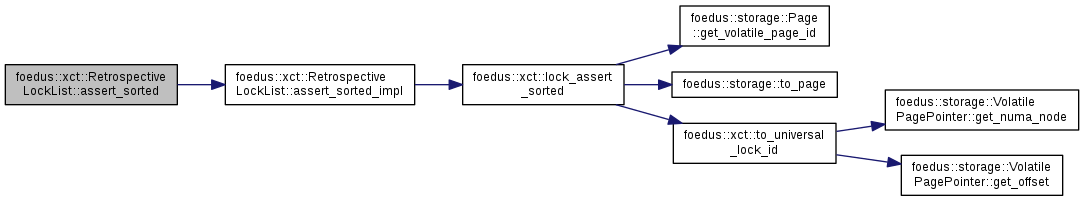

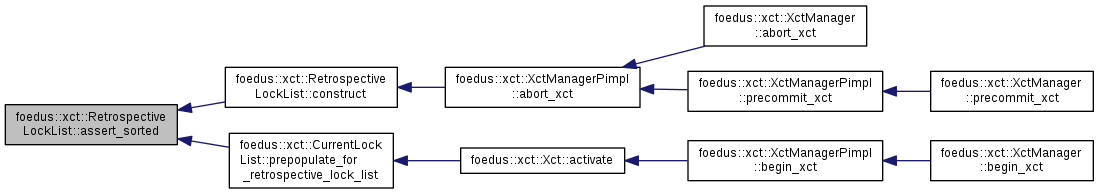

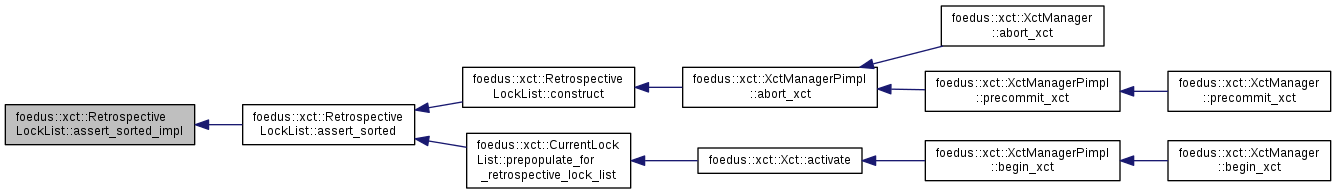

inline |

Definition at line 548 of file retrospective_lock_list.hpp.

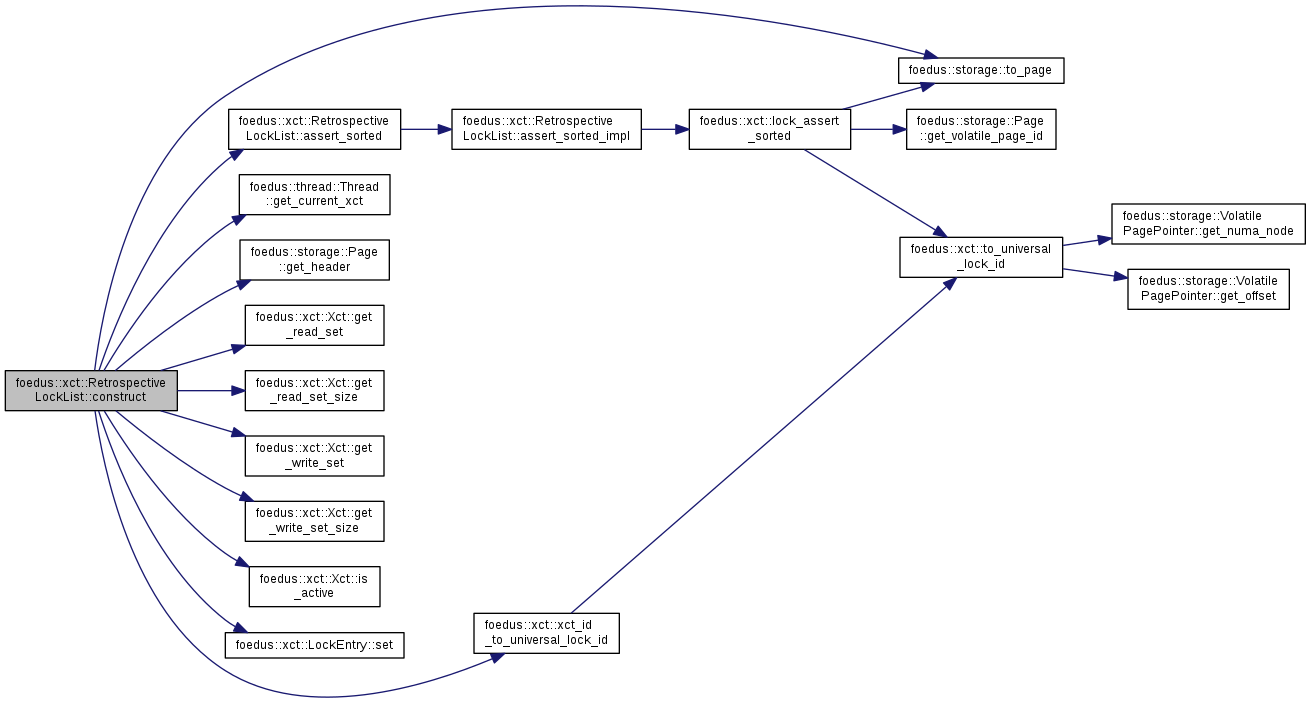

References assert_sorted_impl().

Referenced by construct(), and foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list().

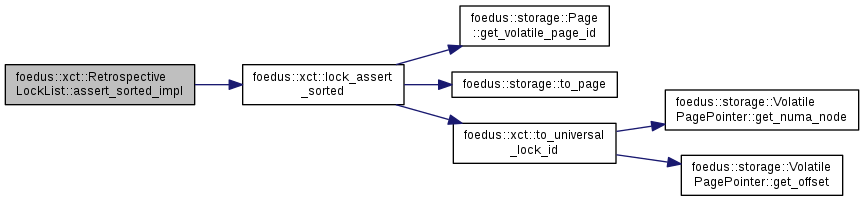

| void foedus::xct::RetrospectiveLockList::assert_sorted_impl | ( | ) | const |

Definition at line 182 of file retrospective_lock_list.cpp.

References foedus::xct::lock_assert_sorted().

Referenced by assert_sorted().

| LockEntry * foedus::xct::RetrospectiveLockList::begin | ( | ) |

inline |

Definition at line 254 of file retrospective_lock_list.hpp.

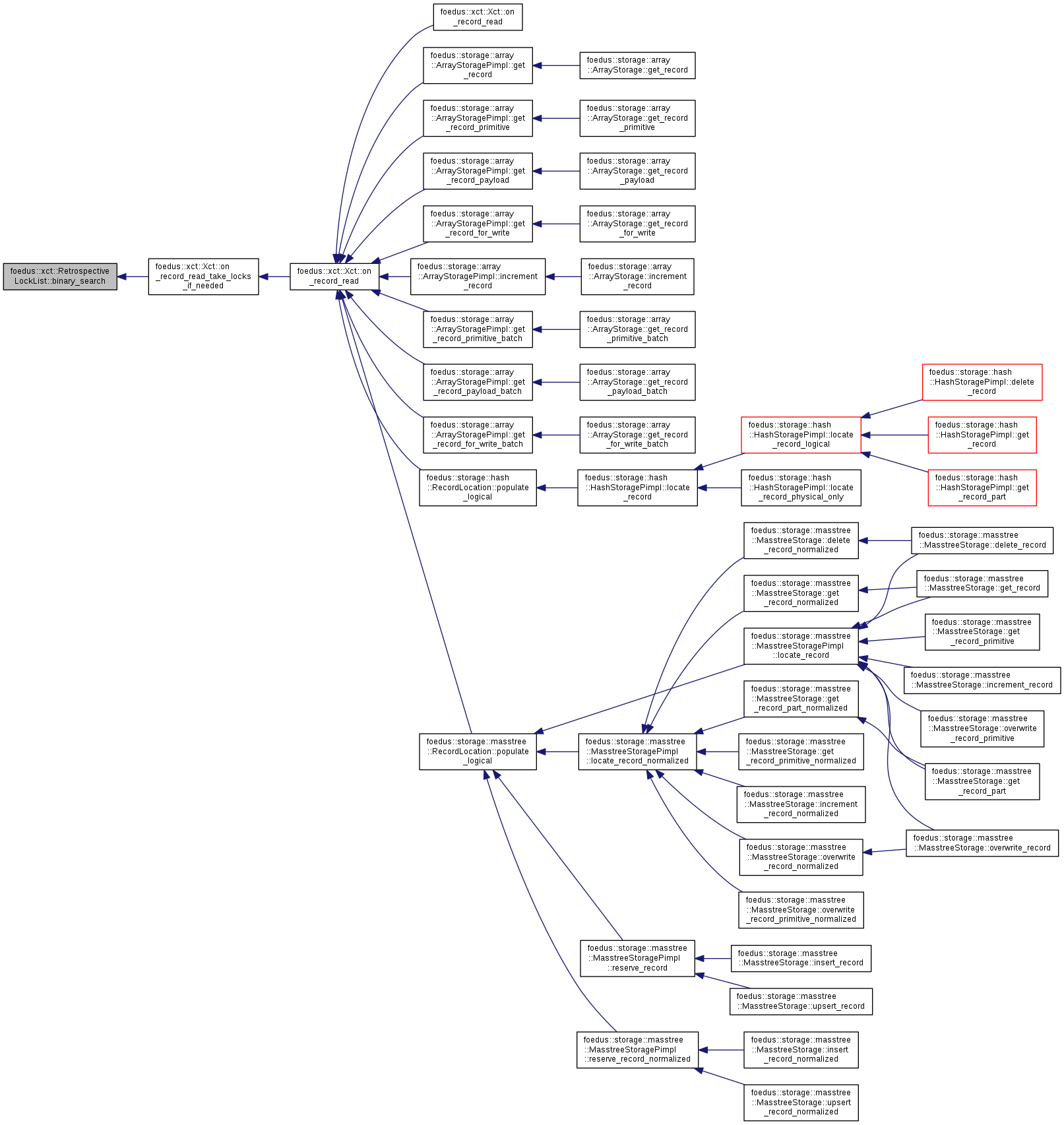

| LockListPosition foedus::xct::RetrospectiveLockList::binary_search | ( | UniversalLockId | lock | ) | const |

Analogous to std::binary_search() for the given lock.

Definition at line 193 of file retrospective_lock_list.cpp.

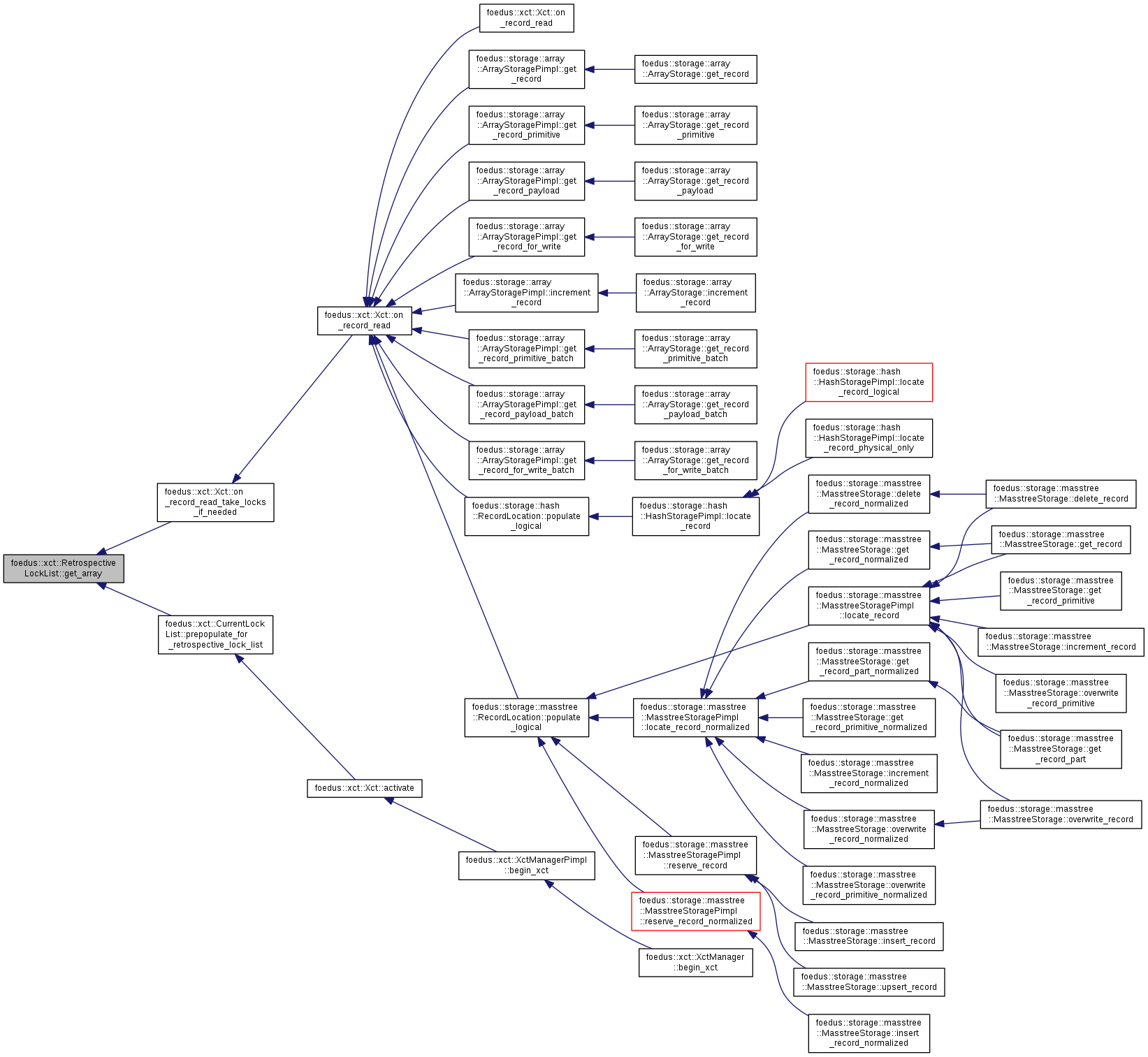

Referenced by foedus::xct::Xct::on_record_read_take_locks_if_needed().
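Unlike std::binary_search(), which returns a bool, this method returns a LockListPosition. The following is a minimal sketch of that idea on a plain sorted vector, not the FOEDUS implementation. The `Sketch*` names are hypothetical stand-ins, and both the 1-based positions and the use of 0 as the "not found" sentinel are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins; these are NOT the FOEDUS type definitions.
using SketchLockId = std::uint64_t;
using SketchPosition = std::uint32_t;
// Assumption: position 0 means "no such entry" and valid positions start at 1.
constexpr SketchPosition kSketchPositionInvalid = 0;

// Returns the 1-based position of `lock` in the sorted `ids`,
// or kSketchPositionInvalid when `lock` is not present.
inline SketchPosition sketch_binary_search(const std::vector<SketchLockId>& ids,
                                           SketchLockId lock) {
  assert(std::is_sorted(ids.begin(), ids.end()));
  // std::lower_bound finds the first element that is not less than `lock`.
  const auto it = std::lower_bound(ids.begin(), ids.end(), lock);
  if (it == ids.end() || *it != lock) {
    return kSketchPositionInvalid;
  }
  return static_cast<SketchPosition>(it - ids.begin()) + 1U;
}
```

Returning a position rather than a bool lets the caller go straight to the matching entry without a second search.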

| const LockEntry * foedus::xct::RetrospectiveLockList::cbegin | ( | ) | const |

inline |

Definition at line 256 of file retrospective_lock_list.hpp.

| const LockEntry * foedus::xct::RetrospectiveLockList::cend | ( | ) | const |

inline |

Definition at line 257 of file retrospective_lock_list.hpp.

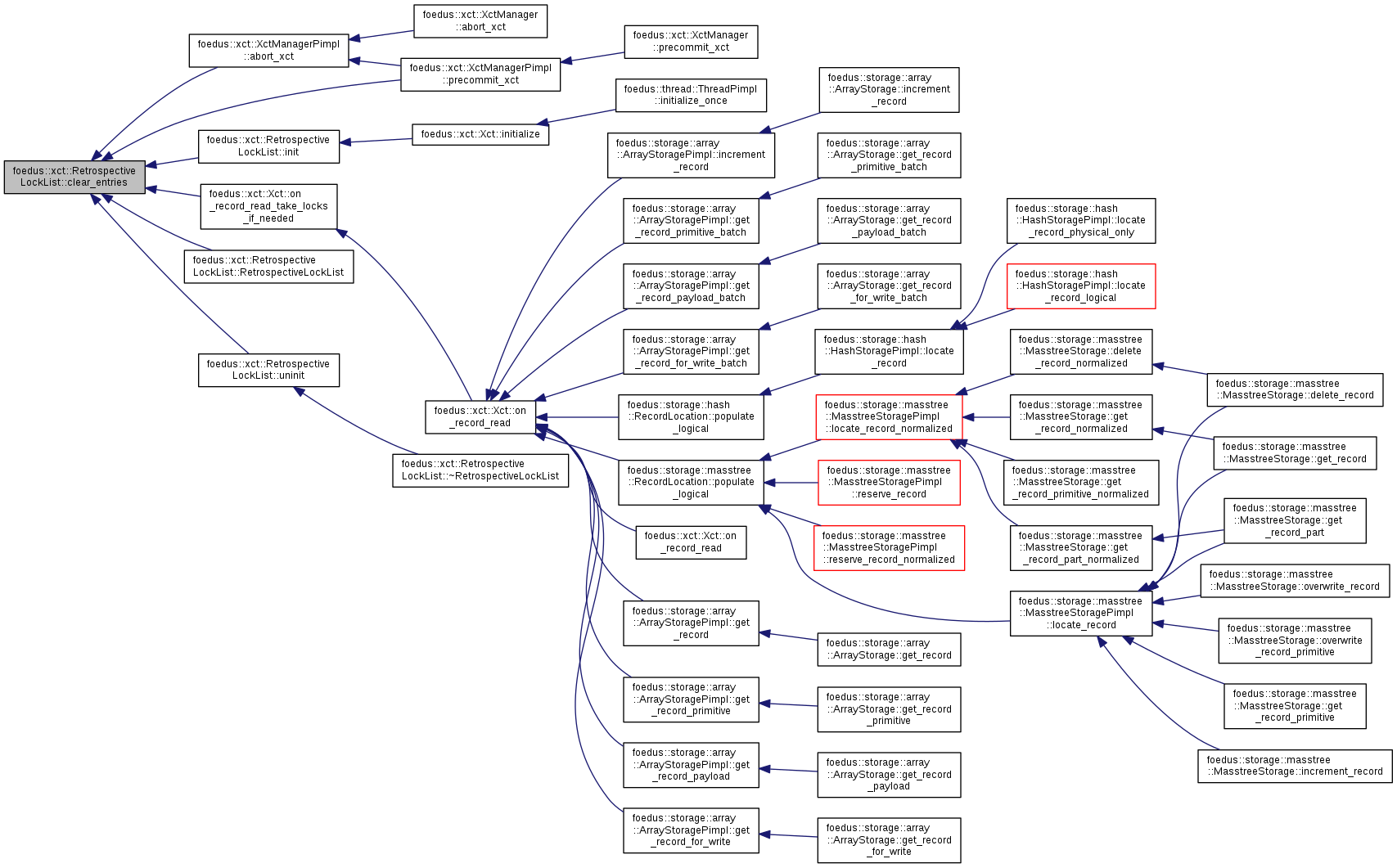

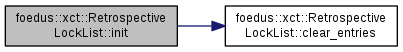

| void foedus::xct::RetrospectiveLockList::clear_entries | ( | ) |

Definition at line 59 of file retrospective_lock_list.cpp.

References foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::taken_mode_, and foedus::xct::LockEntry::universal_lock_id_.

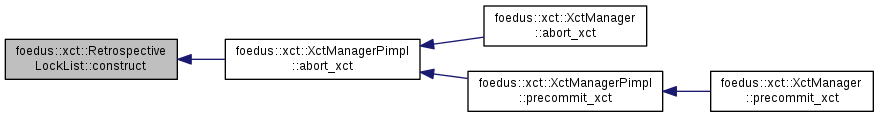

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), init(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), foedus::xct::XctManagerPimpl::precommit_xct(), RetrospectiveLockList(), and uninit().

| void foedus::xct::RetrospectiveLockList::construct | ( | thread::Thread * | context, |

| uint32_t | read_lock_threshold | ||

| ) |

Fill out this retrospective lock list for the next run of the given transaction.

| [in] | context | The thread running the transaction. It must currently be running a transaction (xct). |

| [in] | read_lock_threshold | We "recommend" a read lock in the RLL for records whose page has a temperature statistic at or above this value. This value should be slightly lower than the threshold at which read locks are triggered without an RLL. Otherwise, the next run might often take a read lock that the RLL discarded due to a concurrent abort, which might violate the canonical order. |

This method is invoked when a transaction aborts at precommit due to some conflict. Based on the current transaction's read and write sets, it builds an RLL, sorted in canonical order, that contains the locks we should probably take on the next run.

Definition at line 252 of file retrospective_lock_list.cpp.

References ASSERT_ND, assert_sorted(), foedus::thread::Thread::get_current_xct(), foedus::storage::Page::get_header(), foedus::xct::Xct::get_read_set(), foedus::xct::Xct::get_read_set_size(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::storage::PageHeader::hotness_, foedus::xct::Xct::is_active(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::kReadLock, foedus::xct::kWriteLock, foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::set(), foedus::storage::to_page(), foedus::assorted::ProbCounter::value_, foedus::xct::RwLockableXctId::xct_id_, and foedus::xct::xct_id_to_universal_lock_id().

Referenced by foedus::xct::XctManagerPimpl::abort_xct().
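The description above can be sketched roughly as follows. This is an illustrative reading, not the code in retrospective_lock_list.cpp. All `Sketch*` names are hypothetical, integer IDs stand in for universal lock IDs, and ascending ID order stands in for the canonical order. Two further assumptions: every write-set entry gets a write lock, and duplicate IDs are merged by keeping the stronger mode.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins; these are NOT the FOEDUS definitions.
enum class SketchMode : std::uint8_t { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct SketchAccess {
  std::uint64_t lock_id;  // stand-in for a universal lock ID
  std::uint32_t hotness;  // stand-in for the page's temperature statistic
};

struct SketchEntry {
  std::uint64_t lock_id;
  SketchMode preferred_mode;
};

inline std::vector<SketchEntry> sketch_construct(const std::vector<SketchAccess>& read_set,
                                                 const std::vector<SketchAccess>& write_set,
                                                 std::uint32_t read_lock_threshold) {
  std::vector<SketchEntry> list;
  // Assumption: every written record is recommended a write lock.
  for (const SketchAccess& w : write_set) {
    list.push_back(SketchEntry{w.lock_id, SketchMode::kWriteLock});
  }
  // Read records are recommended a read lock only when their page is hot enough.
  for (const SketchAccess& r : read_set) {
    if (r.hotness >= read_lock_threshold) {
      list.push_back(SketchEntry{r.lock_id, SketchMode::kReadLock});
    }
  }
  // Sort by lock ID; for equal IDs, place the stronger mode first.
  std::sort(list.begin(), list.end(), [](const SketchEntry& a, const SketchEntry& b) {
    if (a.lock_id != b.lock_id) {
      return a.lock_id < b.lock_id;
    }
    return a.preferred_mode > b.preferred_mode;
  });
  // std::unique keeps the first entry of each run, which is the stronger mode.
  const auto new_end = std::unique(
      list.begin(), list.end(),
      [](const SketchEntry& a, const SketchEntry& b) { return a.lock_id == b.lock_id; });
  list.erase(new_end, list.end());
  assert(std::is_sorted(list.begin(), list.end(),
                        [](const SketchEntry& a, const SketchEntry& b) {
                          return a.lock_id < b.lock_id;
                        }));
  return list;
}
```

Keeping the result sorted is what allows lookups by binary search and lets the next run acquire locks in one consistent order.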

| LockEntry * foedus::xct::RetrospectiveLockList::end | ( | ) |

inline |

Definition at line 255 of file retrospective_lock_list.hpp.

| const LockEntry * foedus::xct::RetrospectiveLockList::get_array | ( | ) | const |

inline |

Definition at line 234 of file retrospective_lock_list.hpp.

Referenced by foedus::xct::Xct::on_record_read_take_locks_if_needed(), and foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list().

| uint32_t foedus::xct::RetrospectiveLockList::get_capacity | ( | ) | const |

inline |

Definition at line 243 of file retrospective_lock_list.hpp.

|

inline |

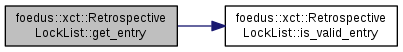

Definition at line 235 of file retrospective_lock_list.hpp.

References ASSERT_ND, and is_valid_entry().

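| const LockEntry * foedus::xct::RetrospectiveLockList::get_entry | ( | LockListPosition | pos | ) | const |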

inline |

Definition at line 239 of file retrospective_lock_list.hpp.

References ASSERT_ND, and is_valid_entry().

| LockListPosition foedus::xct::RetrospectiveLockList::get_last_active_entry | ( | ) | const |

inline |

Definition at line 244 of file retrospective_lock_list.hpp.

Referenced by foedus::xct::XctManagerPimpl::begin_xct(), and foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list().

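| const memory::GlobalVolatilePageResolver & foedus::xct::RetrospectiveLockList::get_volatile_page_resolver | ( | ) | const |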

inline |

Definition at line 258 of file retrospective_lock_list.hpp.

Referenced by foedus::xct::Xct::add_to_read_set(), and foedus::xct::Xct::add_to_write_set().

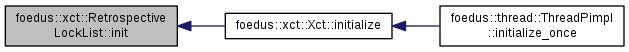

| void foedus::xct::RetrospectiveLockList::init | ( | LockEntry * | array, |

| uint32_t | capacity, | ||

| const memory::GlobalVolatilePageResolver & | resolver | ||

| ) |

Definition at line 49 of file retrospective_lock_list.cpp.

References clear_entries().

Referenced by foedus::xct::Xct::initialize().

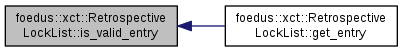

| bool foedus::xct::RetrospectiveLockList::is_empty | ( | ) | const |

inline |

Definition at line 248 of file retrospective_lock_list.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by foedus::xct::Xct::activate(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), and foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list().

| bool foedus::xct::RetrospectiveLockList::is_valid_entry | ( | LockListPosition | pos | ) | const |

inline |

Definition at line 245 of file retrospective_lock_list.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by get_entry().
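Both is_empty() and is_valid_entry() reference kLockListPositionInvalid. One plausible reading, sketched below, is that positions are 1-based and a single sentinel value marks both "no entry" and "empty list". The `SketchList` type, the sentinel value 0, and the `last_active_entry` field are hypothetical names and assumptions for illustration, not the library's definitions.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins; these are NOT the FOEDUS definitions.
using SketchPosition = std::uint32_t;
// Assumption: 0 is the invalid position, so valid positions are 1..last_active_entry.
constexpr SketchPosition kSketchPositionInvalid = 0;

struct SketchList {
  // Position of the last entry in use, or the sentinel when the list is empty.
  SketchPosition last_active_entry = kSketchPositionInvalid;

  bool is_empty() const { return last_active_entry == kSketchPositionInvalid; }

  bool is_valid_entry(SketchPosition pos) const {
    return pos != kSketchPositionInvalid && pos <= last_active_entry;
  }
};
```

Under this reading, an accessor such as get_entry() can assert is_valid_entry(pos) before indexing, which matches the ASSERT_ND and is_valid_entry() references listed for get_entry().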

| LockListPosition foedus::xct::RetrospectiveLockList::lower_bound | ( | UniversalLockId | lock | ) | const |

Analogous to std::lower_bound() for the given lock.

Definition at line 200 of file retrospective_lock_list.cpp.

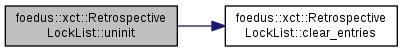

| void foedus::xct::RetrospectiveLockList::uninit | ( | ) |

Definition at line 70 of file retrospective_lock_list.cpp.

References clear_entries().

Referenced by ~RetrospectiveLockList().

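| std::ostream & operator<< | ( | std::ostream & | o, |

| const RetrospectiveLockList & | v | ||

| ) |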

friend |

Definition at line 145 of file retrospective_lock_list.cpp.