|

libfoedus-core

FOEDUS Core Library

|

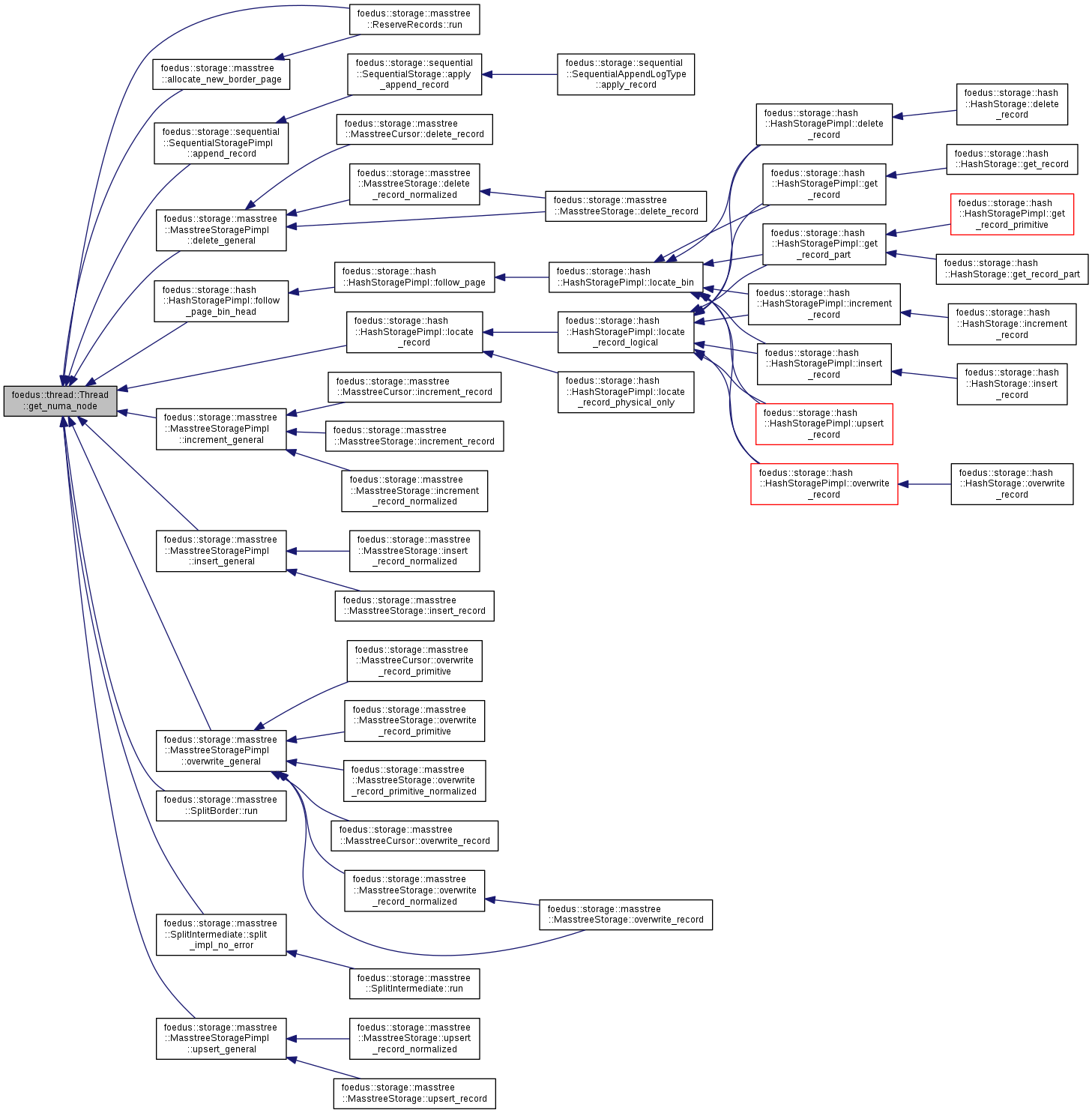

Represents one thread running on one NUMA core.

SILO uses a simple spin lock with atomic CAS, but we observed a huge bottleneck with it on big machines (8 or 16 sockets), while it was totally fine up to 4 sockets. It causes a cache invalidation storm even with exponential backoff. The best solution is MCS locking with local spins. We implemented it with advice from the HLINUX team.
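To illustrate why local spinning avoids the cache invalidation storm, here is a minimal textbook-style MCS queue lock. It is only a sketch and not the FOEDUS implementation: `McsNode`, `McsLock`, and `run_counter_demo` are hypothetical names, and the real code (in xct_mcs_impl.cpp/hpp) also supports reader-writer modes. Each waiter polls a flag in its own node, so a release touches one waiter's cache line rather than a line shared by every contender.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical queue node: one per thread per lock acquisition.
struct McsNode {
  std::atomic<McsNode*> next{nullptr};
  std::atomic<bool> waiting{false};
};

// Illustrative MCS lock; not the FOEDUS implementation.
class McsLock {
 public:
  void lock(McsNode* me) {
    me->next.store(nullptr, std::memory_order_relaxed);
    me->waiting.store(true, std::memory_order_relaxed);
    // Append ourselves to the queue tail.
    McsNode* pred = tail_.exchange(me, std::memory_order_acq_rel);
    if (pred == nullptr) return;  // queue was empty: lock acquired
    pred->next.store(me, std::memory_order_release);
    // Local spin: only our own node's flag is polled.
    while (me->waiting.load(std::memory_order_acquire)) {
      std::this_thread::yield();
    }
  }

  void unlock(McsNode* me) {
    McsNode* succ = me->next.load(std::memory_order_acquire);
    if (succ == nullptr) {
      McsNode* expected = me;
      // No visible successor: try to reset the tail to "empty".
      if (tail_.compare_exchange_strong(expected, nullptr,
                                        std::memory_order_acq_rel)) {
        return;
      }
      // A successor is enqueueing; wait until it links itself.
      while ((succ = me->next.load(std::memory_order_acquire)) == nullptr) {
        std::this_thread::yield();
      }
    }
    succ->waiting.store(false, std::memory_order_release);  // hand over
  }

 private:
  std::atomic<McsNode*> tail_{nullptr};
};

// Increments a shared counter from several threads under the lock.
long run_counter_demo(int threads, int iterations) {
  McsLock lock;
  long counter = 0;
  std::vector<std::thread> workers;
  for (int t = 0; t < threads; ++t) {
    workers.emplace_back([&] {
      McsNode node;
      for (int i = 0; i < iterations; ++i) {
        lock.lock(&node);
        ++counter;
        lock.unlock(&node);
      }
    });
  }
  for (auto& w : workers) w.join();
  return counter;
}
```

Calling `run_counter_demo(4, 2000)` should return the full count of increments if mutual exclusion holds. A plain CAS spin lock would instead have every waiter hammering the same lock word.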

Definition at line 48 of file thread.hpp.

#include <thread.hpp>

Public Types | |

| enum | Constants { kMaxFindPagesBatch = 32 } |

Public Member Functions | |

| Thread ()=delete | |

| Thread (Engine *engine, ThreadId id, ThreadGlobalOrdinal global_ordinal) | |

| ~Thread () | |

| ErrorStack | initialize () override |

| Acquires resources in this object, usually called right after constructor. | |

| bool | is_initialized () const override |

| Returns whether the object has been already initialized or not. | |

| ErrorStack | uninitialize () override |

| An idempotent method to release all resources of this object, if any. | |

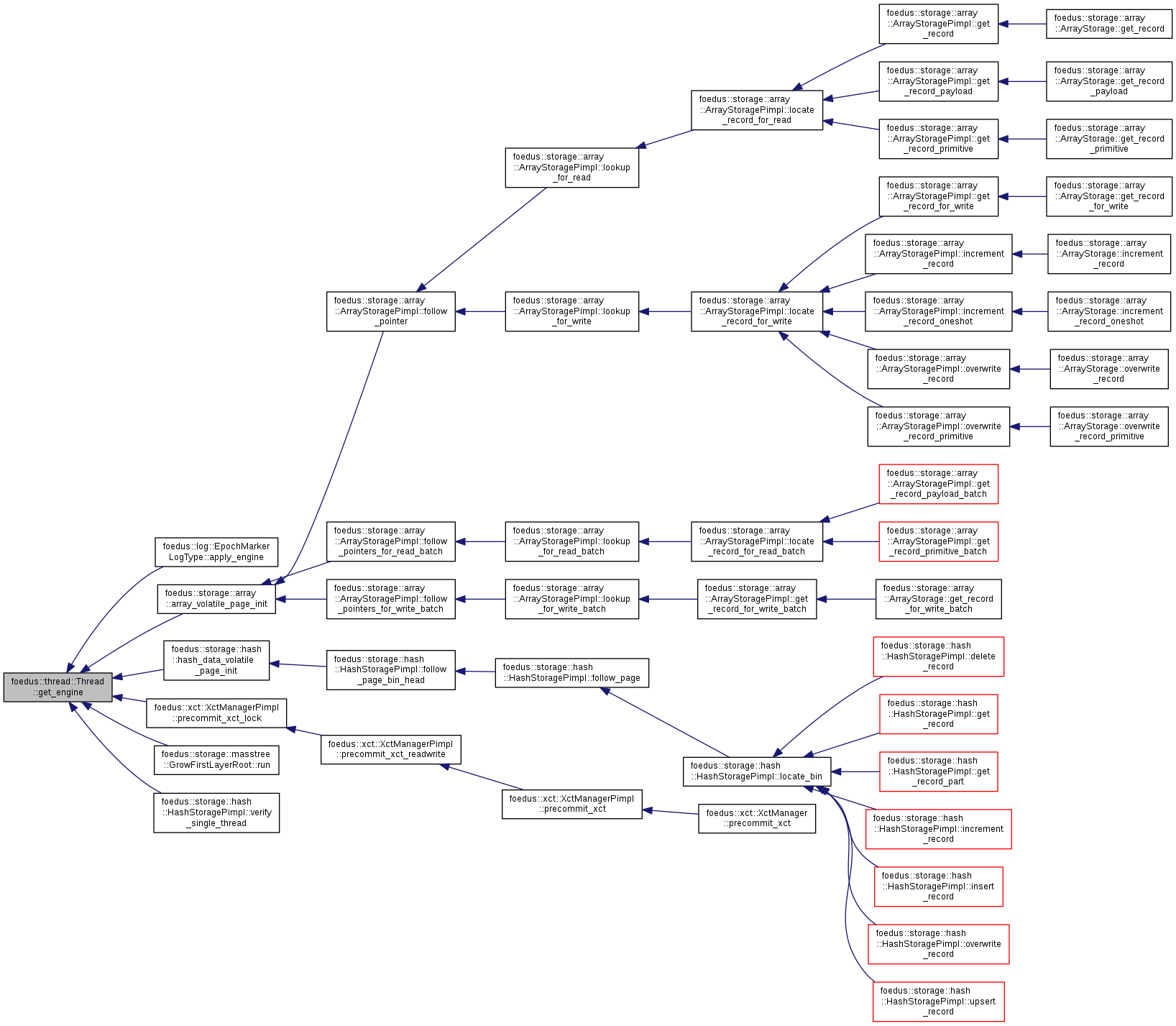

| Engine * | get_engine () const |

| ThreadId | get_thread_id () const |

| ThreadGroupId | get_numa_node () const |

| ThreadGlobalOrdinal | get_thread_global_ordinal () const |

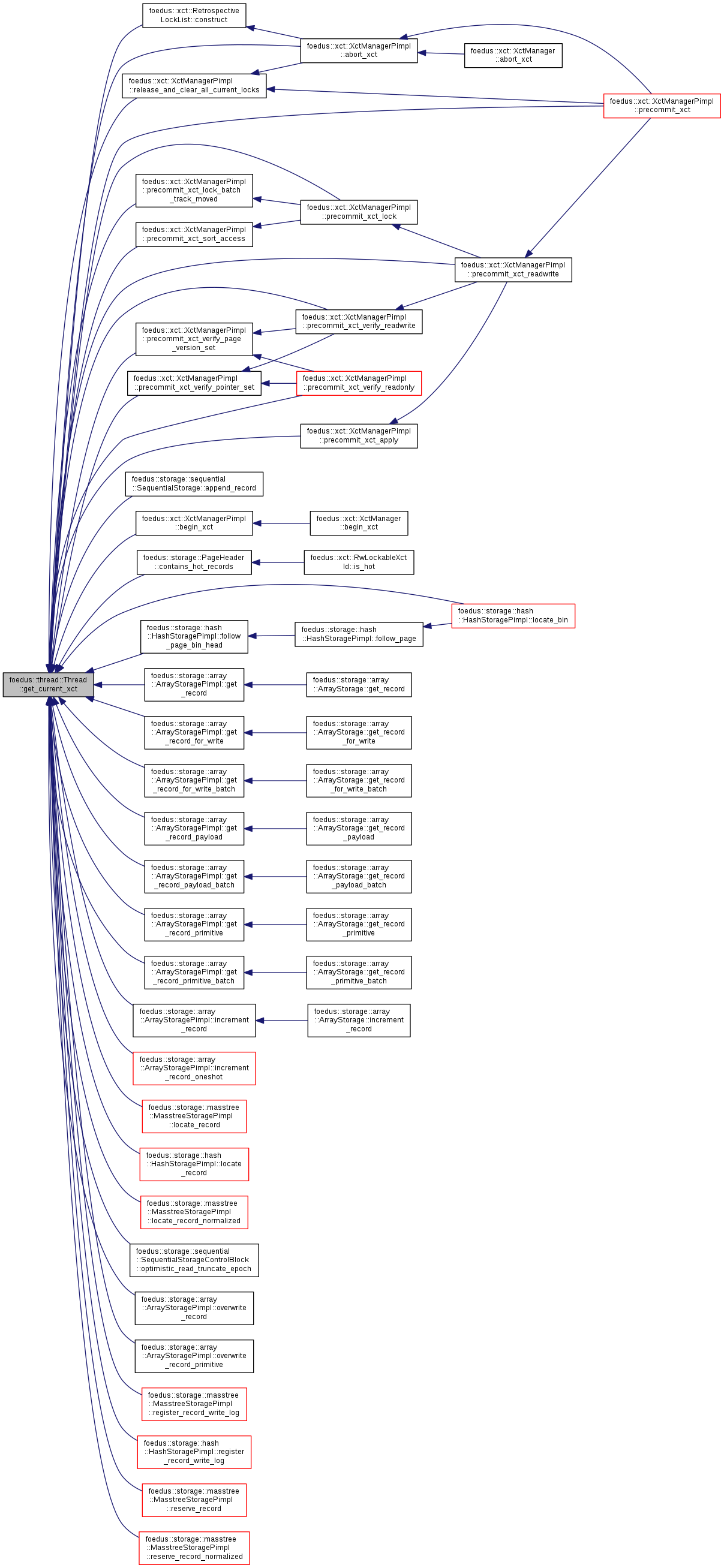

| xct::Xct & | get_current_xct () |

| Returns the transaction that is currently running on this thread. | |

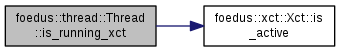

| bool | is_running_xct () const |

| Returns if this thread is running an active transaction. | |

| memory::NumaCoreMemory * | get_thread_memory () const |

| Returns the private memory repository of this thread. | |

| memory::NumaNodeMemory * | get_node_memory () const |

| Returns the node-shared memory repository of the NUMA node this thread belongs to. | |

| log::ThreadLogBuffer & | get_thread_log_buffer () |

| Returns the private log buffer for this thread. | |

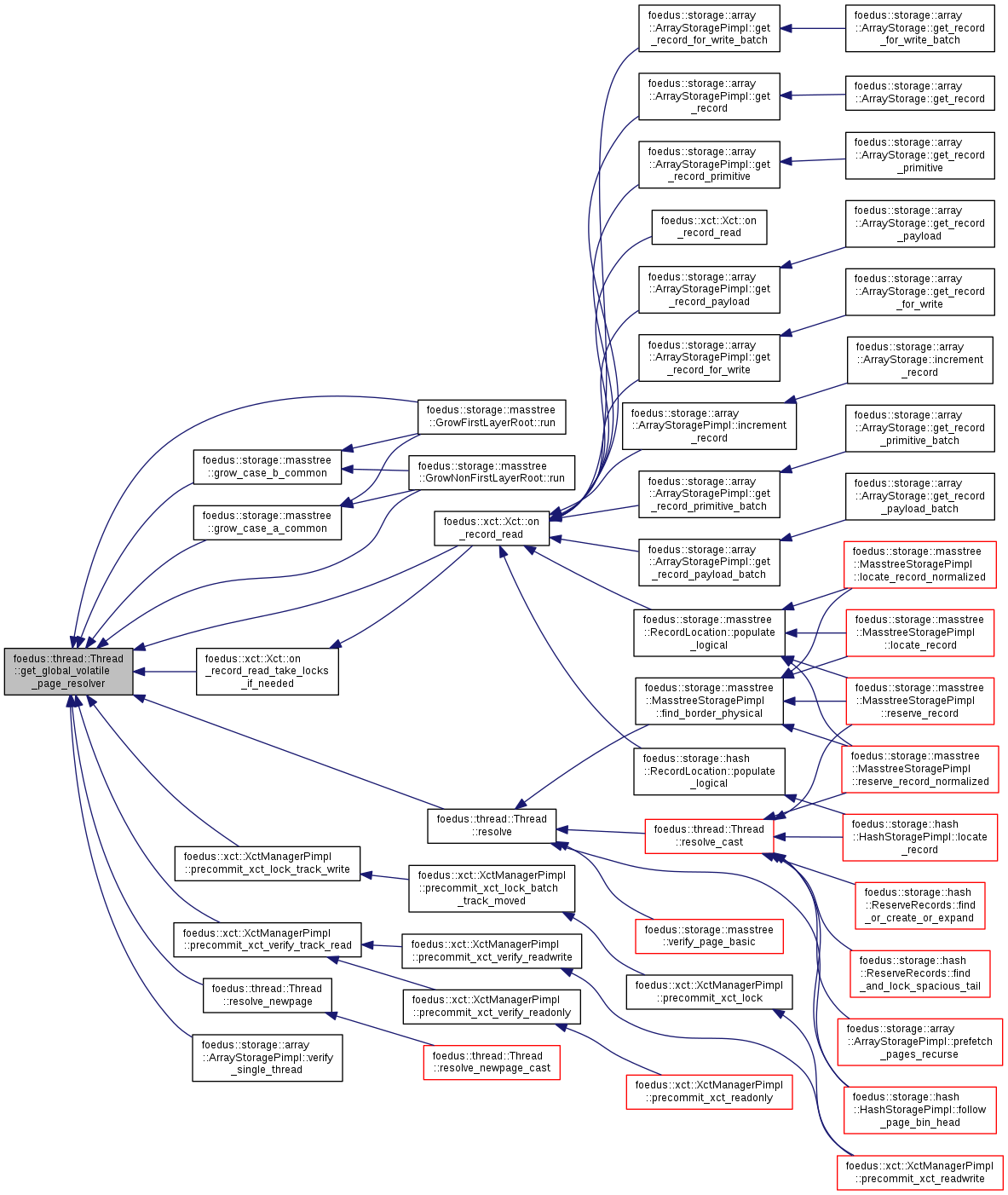

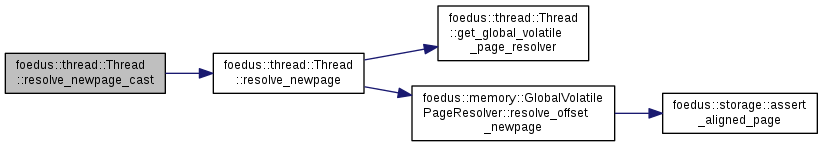

| const memory::GlobalVolatilePageResolver & | get_global_volatile_page_resolver () const |

| Returns the page resolver to convert page ID to page pointer. | |

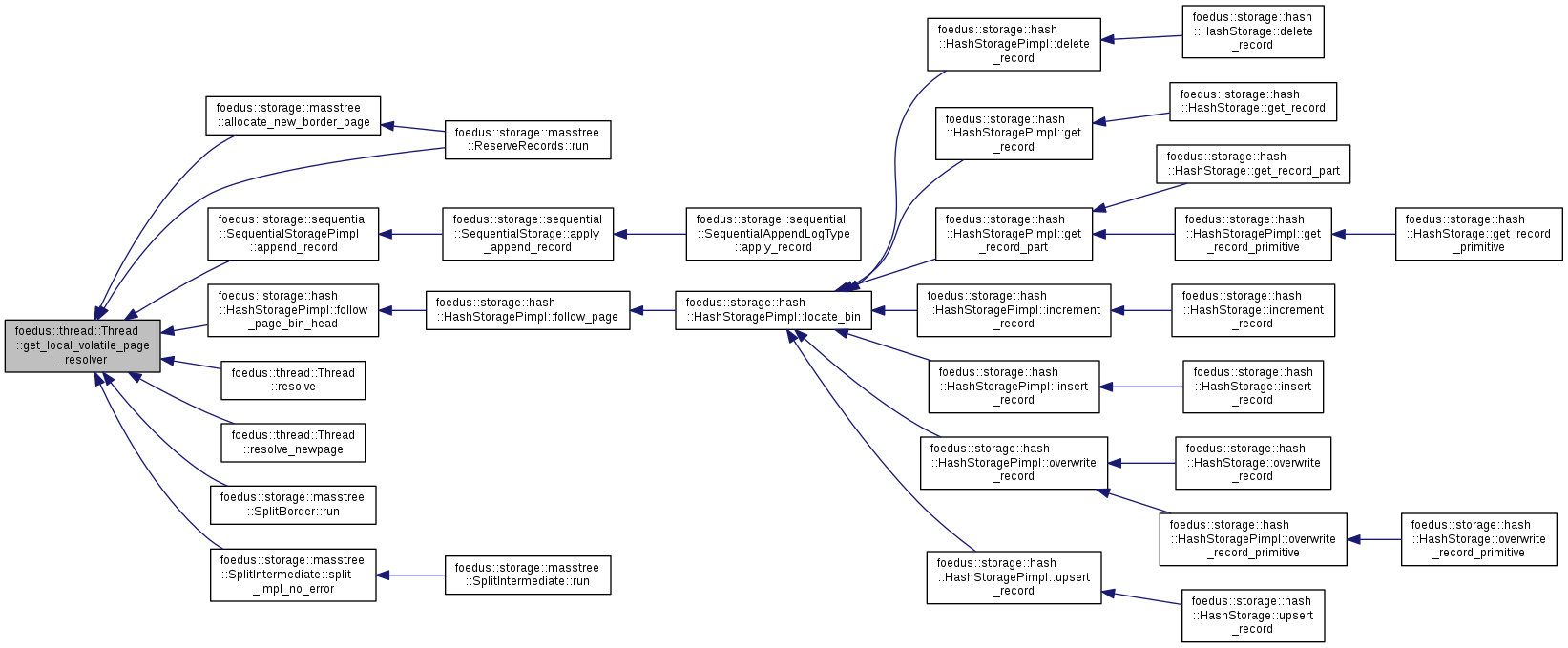

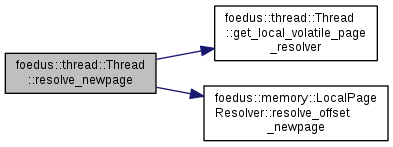

| const memory::LocalPageResolver & | get_local_volatile_page_resolver () const |

| Returns page resolver to convert only local page ID to page pointer. | |

| uint64_t | get_snapshot_cache_hits () const |

| [statistics] count of cache hits in snapshot caches | |

| uint64_t | get_snapshot_cache_misses () const |

| [statistics] count of cache misses in snapshot caches | |

| void | reset_snapshot_cache_counts () const |

| [statistics] resets the above two | |

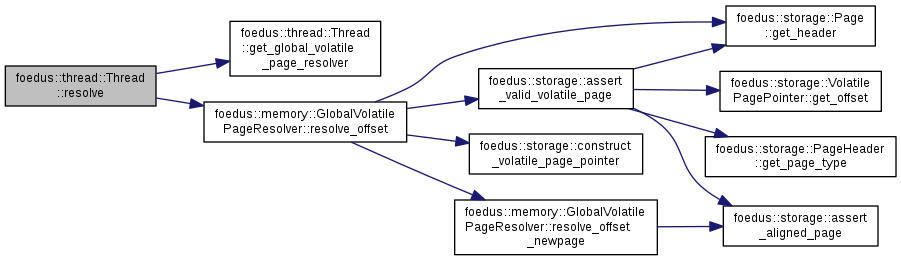

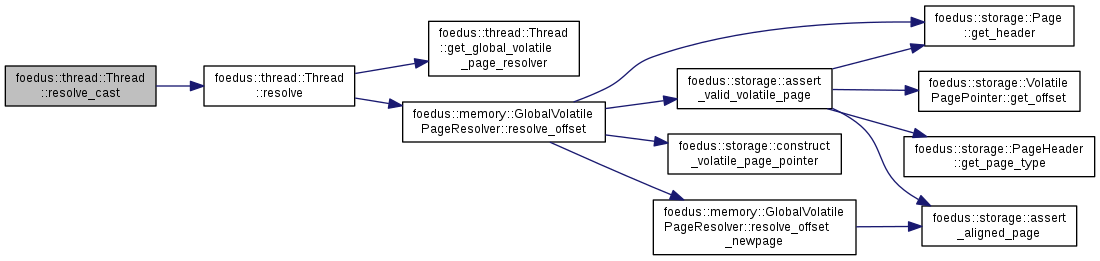

| storage::Page * | resolve (storage::VolatilePagePointer ptr) const |

| Shorthand for get_global_volatile_page_resolver.resolve_offset() | |

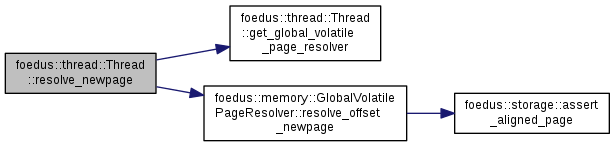

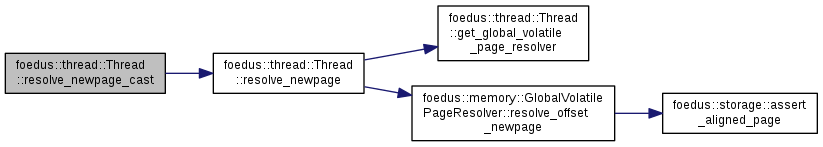

| storage::Page * | resolve_newpage (storage::VolatilePagePointer ptr) const |

| Shorthand for get_global_volatile_page_resolver.resolve_offset_newpage() | |

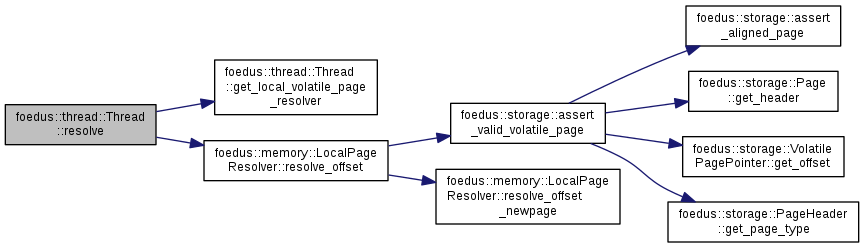

| storage::Page * | resolve (memory::PagePoolOffset offset) const |

| Shorthand for get_local_volatile_page_resolver.resolve_offset() | |

| storage::Page * | resolve_newpage (memory::PagePoolOffset offset) const |

| Shorthand for get_local_volatile_page_resolver.resolve_offset_newpage() | |

| template<typename P > | |

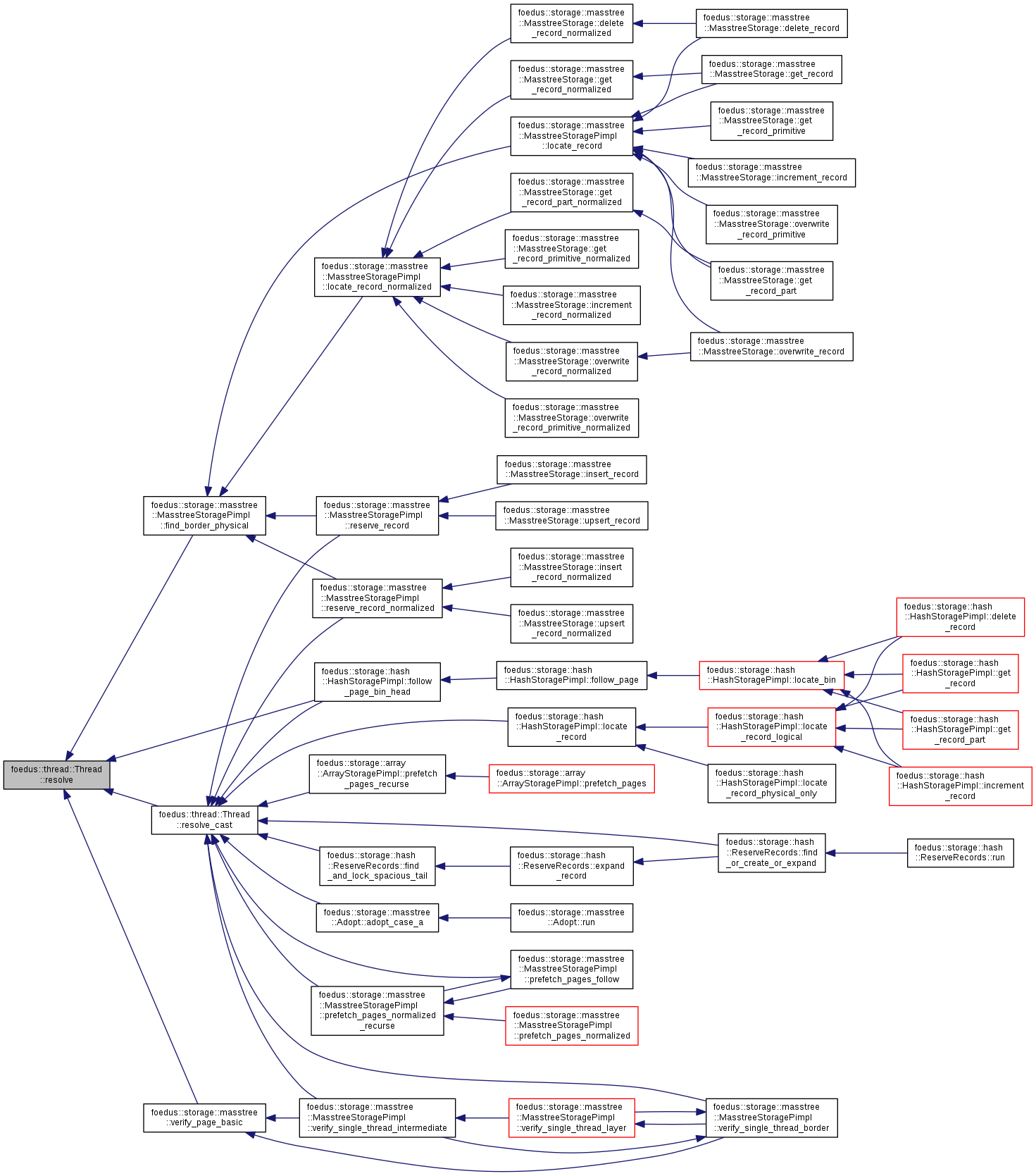

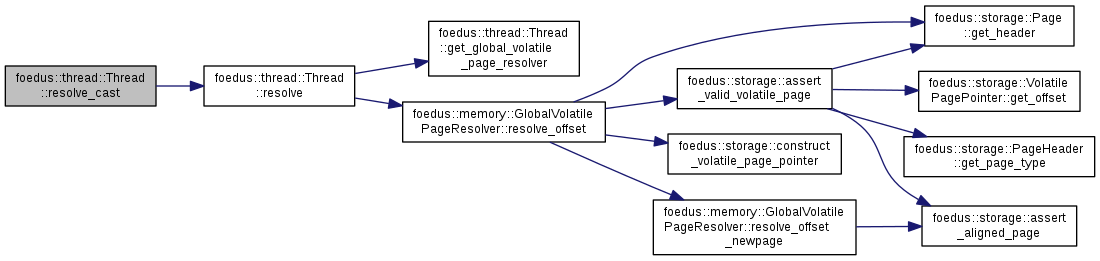

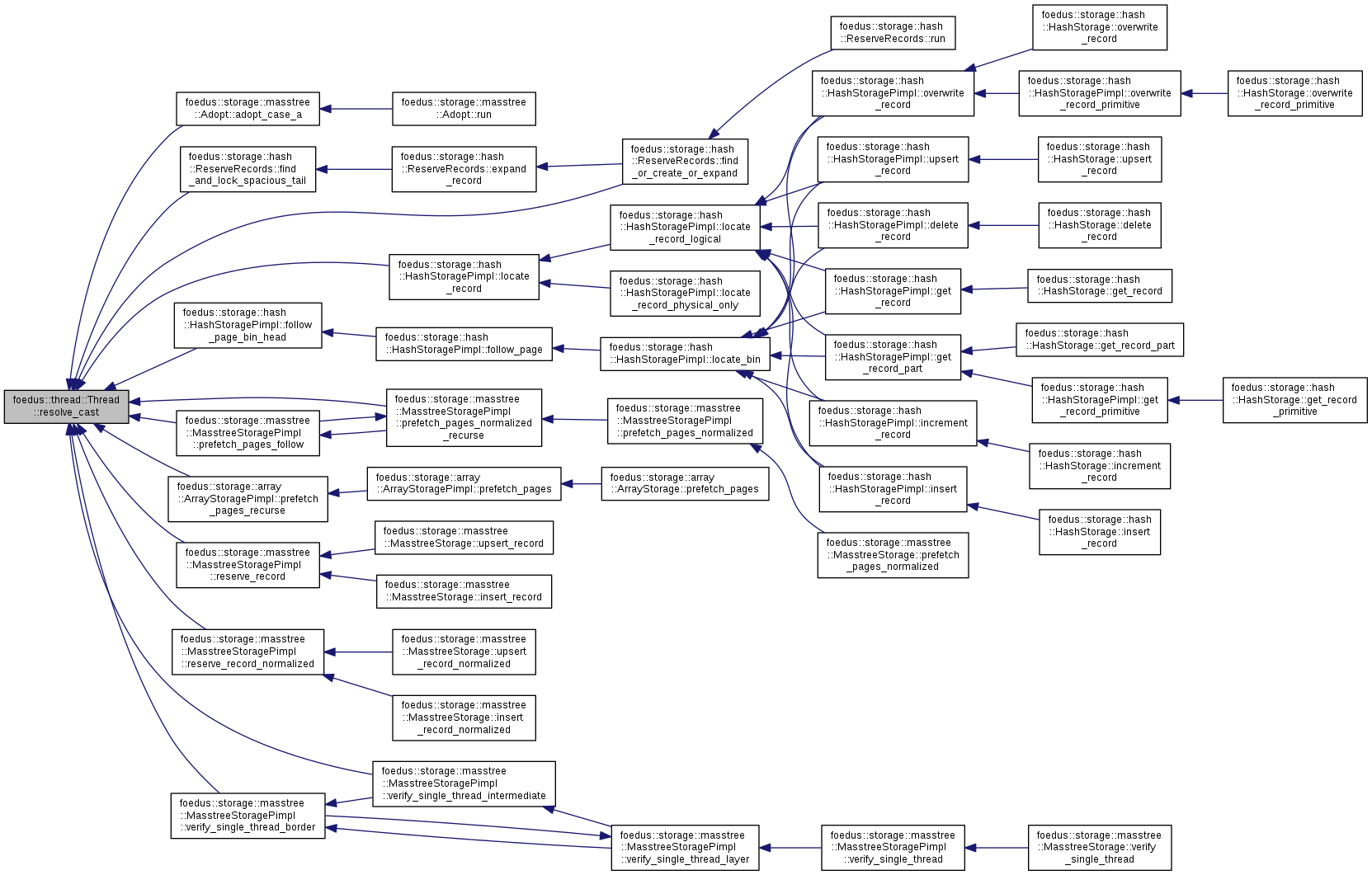

| P * | resolve_cast (storage::VolatilePagePointer ptr) const |

| resolve() plus reinterpret_cast | |

| template<typename P > | |

| P * | resolve_newpage_cast (storage::VolatilePagePointer ptr) const |

| template<typename P > | |

| P * | resolve_cast (memory::PagePoolOffset offset) const |

| template<typename P > | |

| P * | resolve_newpage_cast (memory::PagePoolOffset offset) const |

| ErrorCode | find_or_read_a_snapshot_page (storage::SnapshotPagePointer page_id, storage::Page **out) |

| Find the given page in snapshot cache, reading it if not found. | |

| ErrorCode | find_or_read_snapshot_pages_batch (uint16_t batch_size, const storage::SnapshotPagePointer *page_ids, storage::Page **out) |

| Batched version of find_or_read_a_snapshot_page(). | |

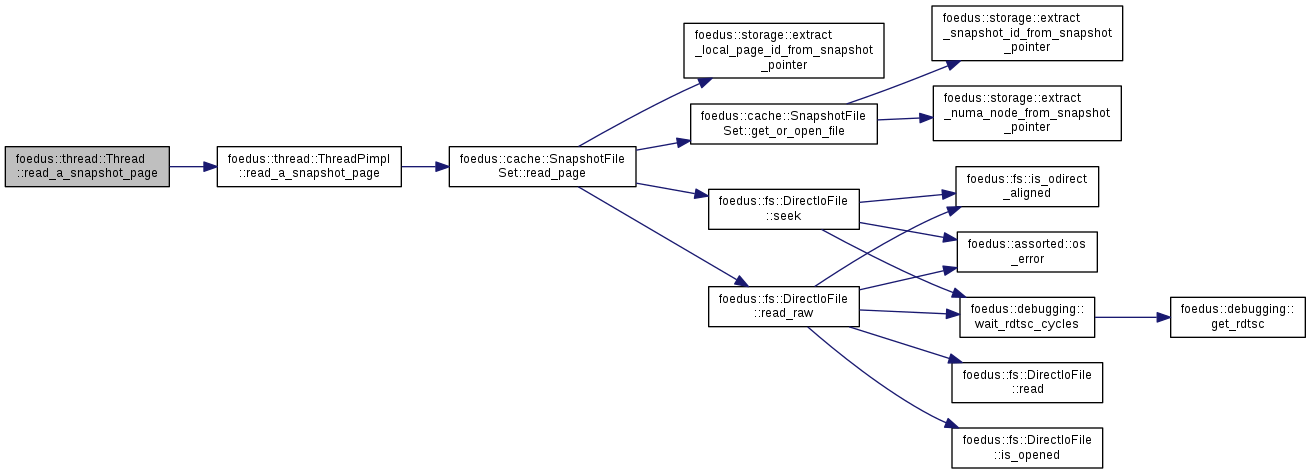

| ErrorCode | read_a_snapshot_page (storage::SnapshotPagePointer page_id, storage::Page *buffer) |

| Read a snapshot page using the thread-local file descriptor set. | |

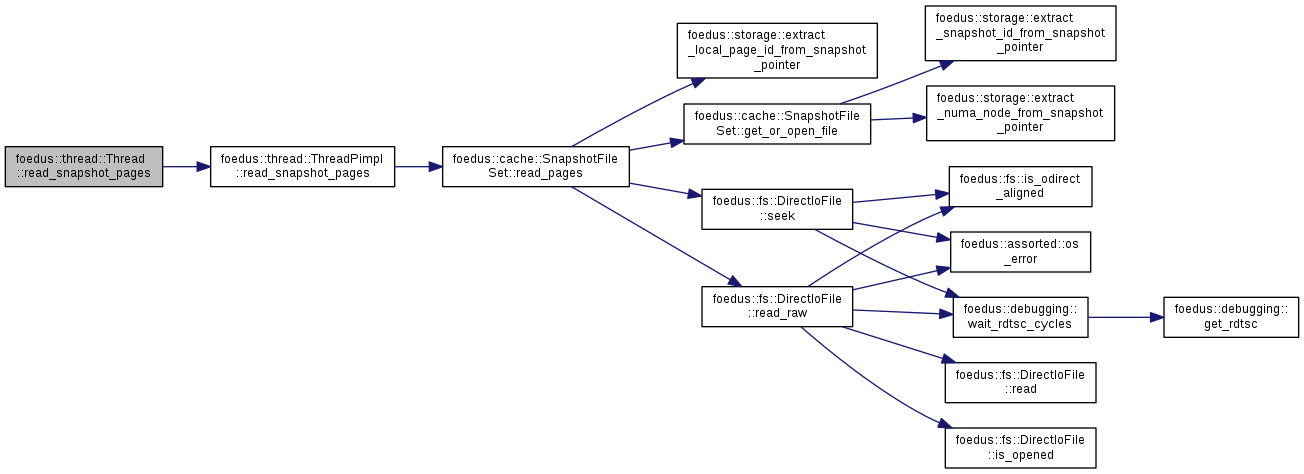

| ErrorCode | read_snapshot_pages (storage::SnapshotPagePointer page_id_begin, uint32_t page_count, storage::Page *buffer) |

| Read contiguous pages in one shot. | |

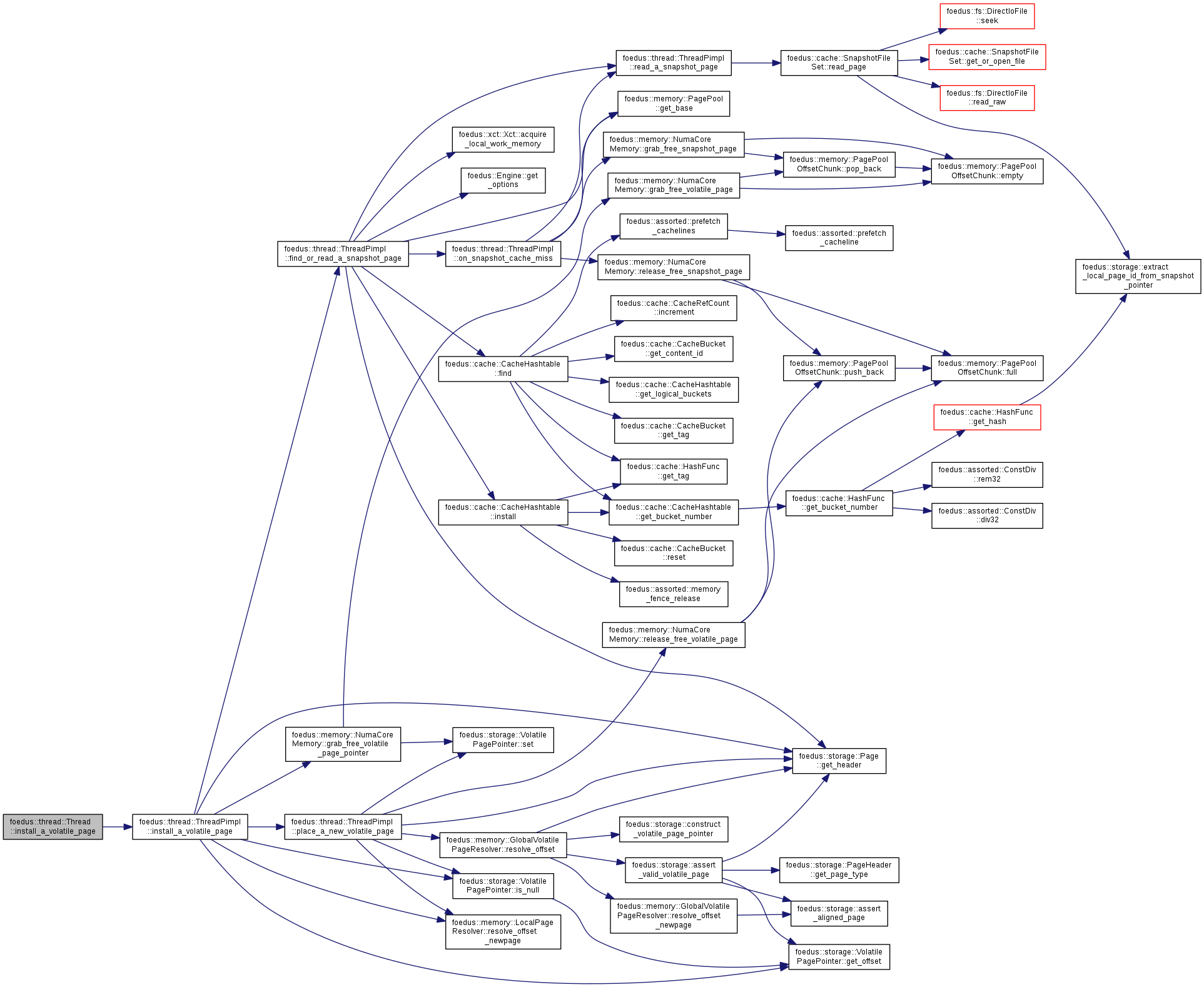

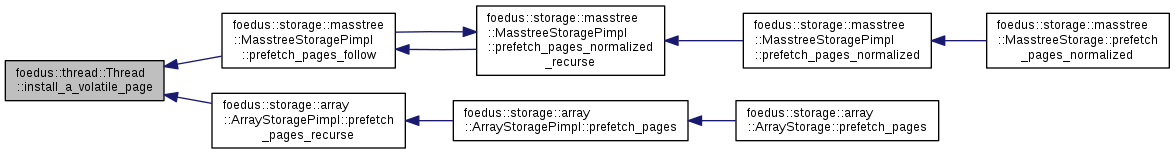

| ErrorCode | install_a_volatile_page (storage::DualPagePointer *pointer, storage::Page **installed_page) |

| Installs a volatile page to the given dual pointer as a copy of the snapshot page. | |

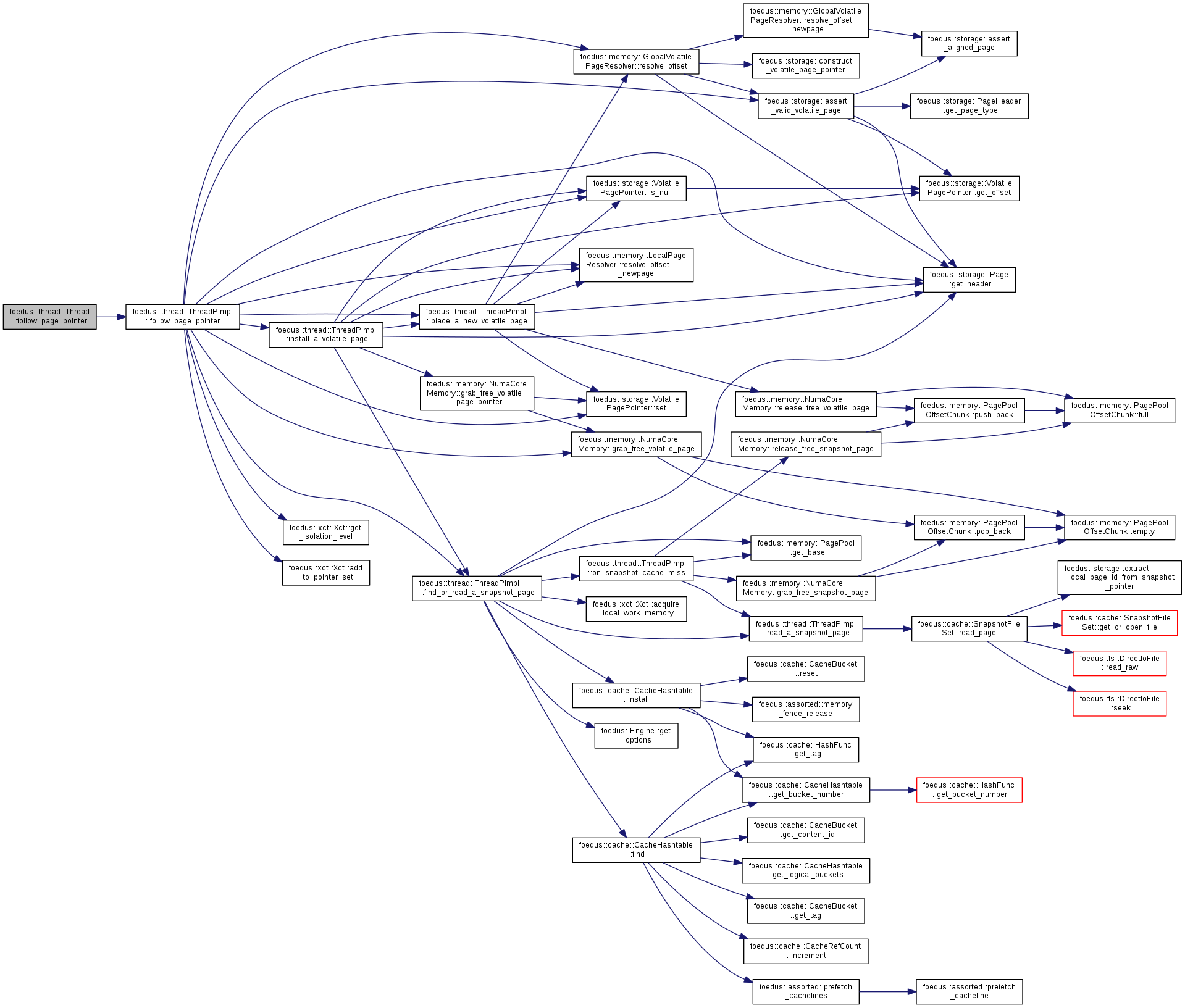

| ErrorCode | follow_page_pointer (storage::VolatilePageInit page_initializer, bool tolerate_null_pointer, bool will_modify, bool take_ptr_set_snapshot, storage::DualPagePointer *pointer, storage::Page **page, const storage::Page *parent, uint16_t index_in_parent) |

| A general method to follow (read) a page pointer. | |

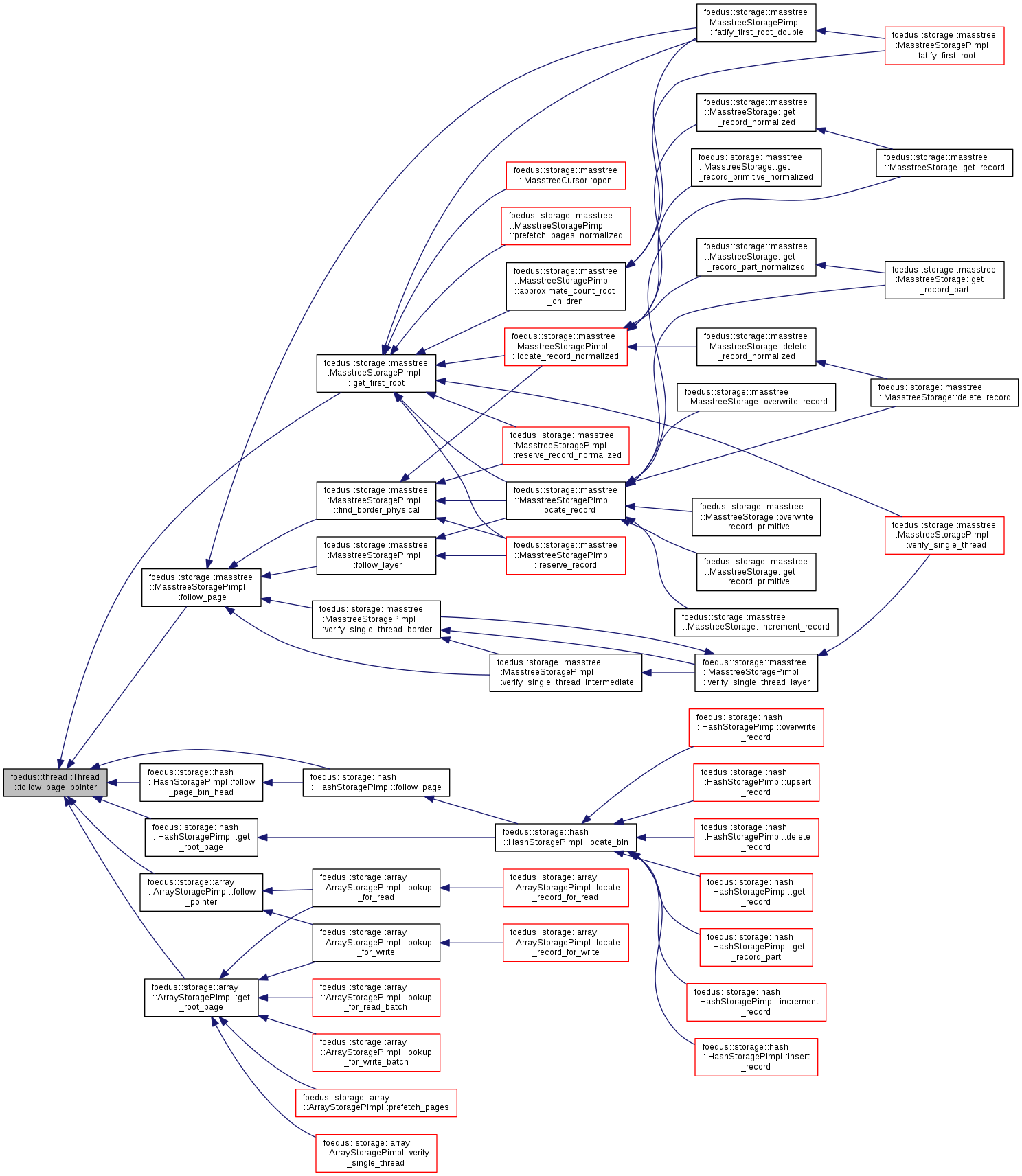

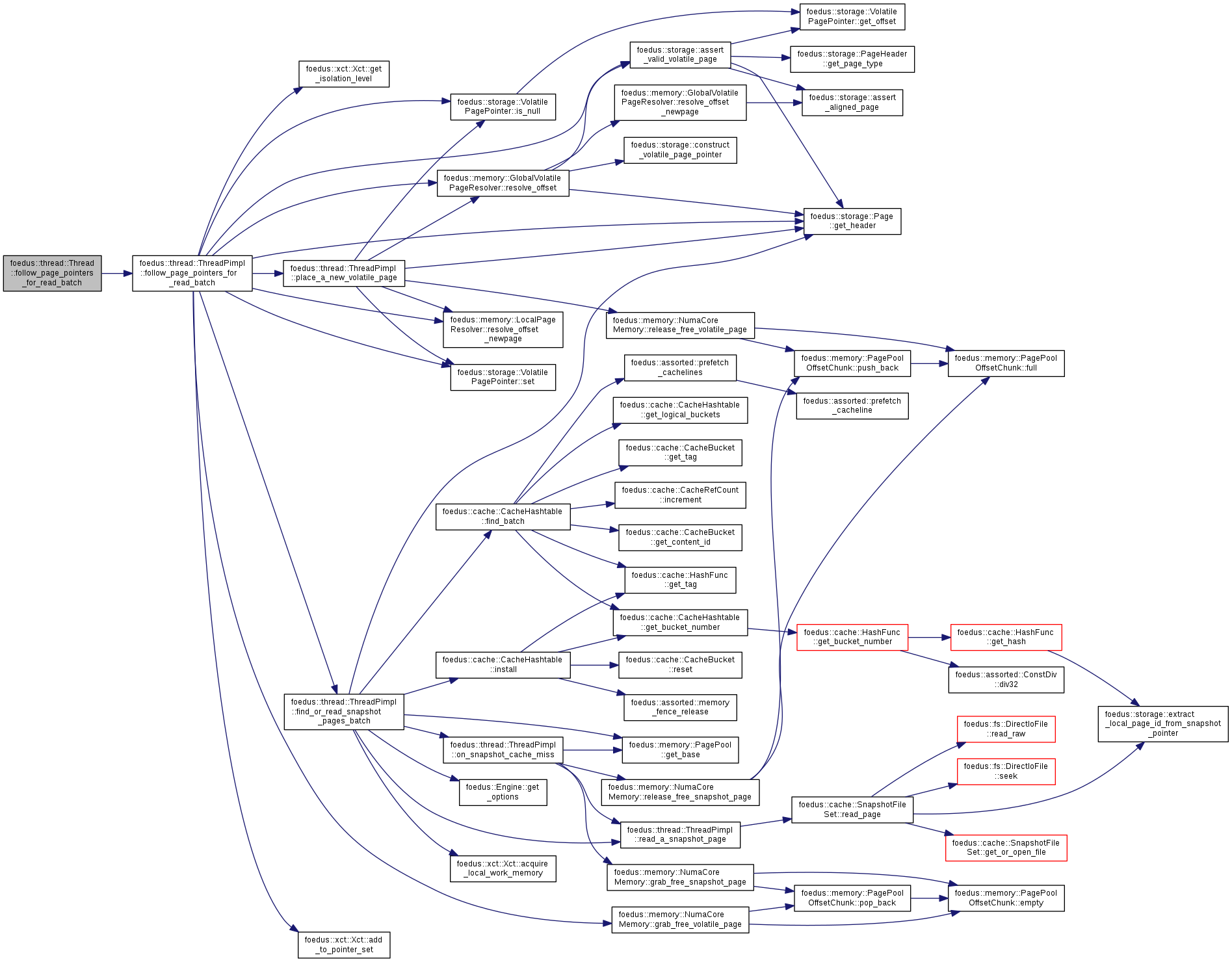

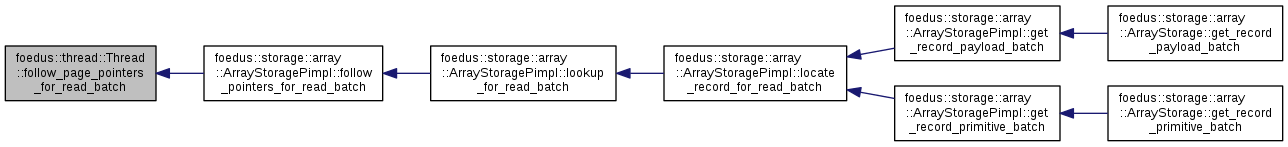

| ErrorCode | follow_page_pointers_for_read_batch (uint16_t batch_size, storage::VolatilePageInit page_initializer, bool tolerate_null_pointer, bool take_ptr_set_snapshot, storage::DualPagePointer **pointers, storage::Page **parents, const uint16_t *index_in_parents, bool *followed_snapshots, storage::Page **out) |

| Batched version of follow_page_pointer with will_modify==false. | |

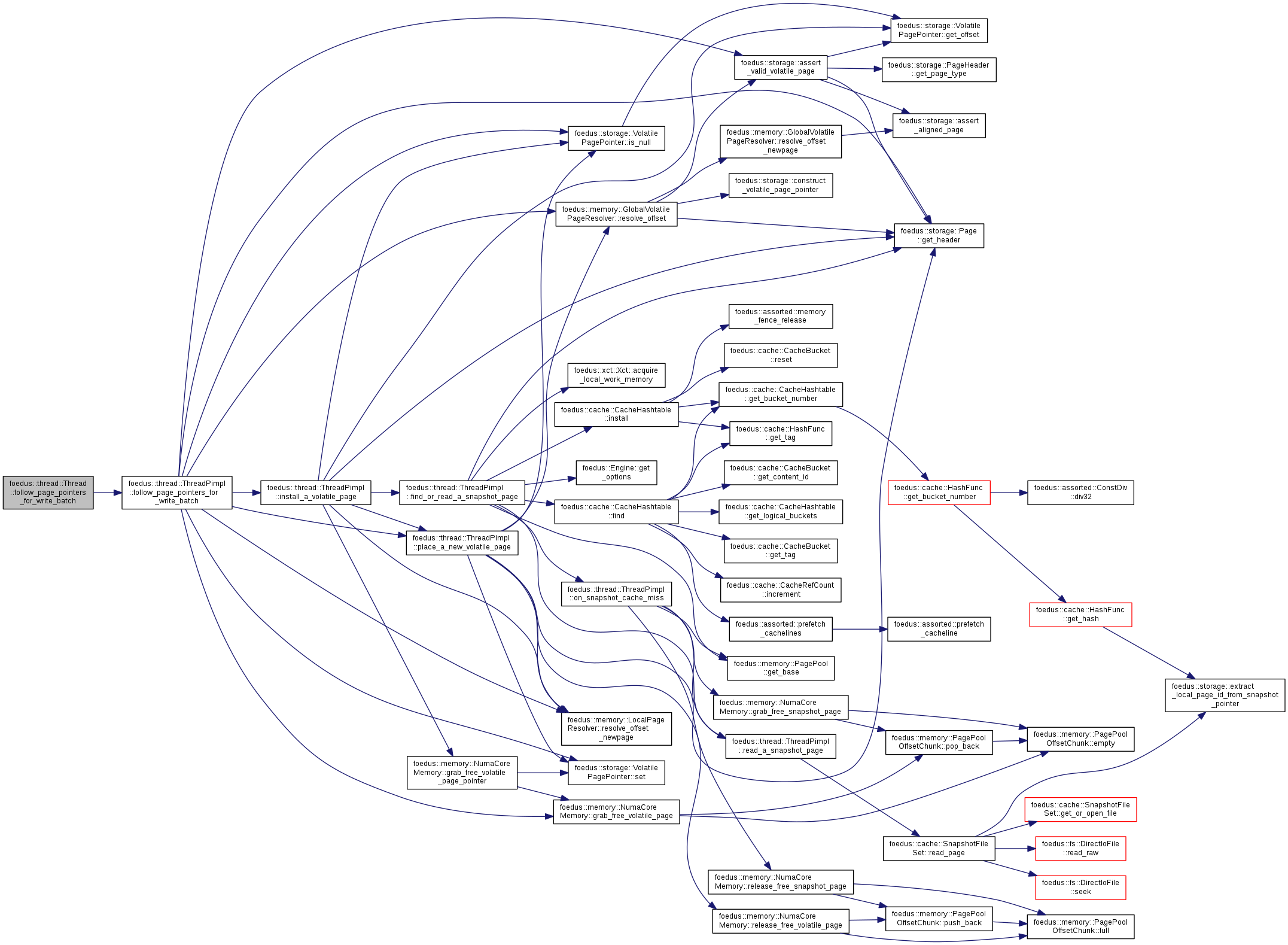

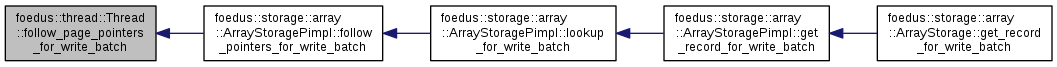

| ErrorCode | follow_page_pointers_for_write_batch (uint16_t batch_size, storage::VolatilePageInit page_initializer, storage::DualPagePointer **pointers, storage::Page **parents, const uint16_t *index_in_parents, storage::Page **out) |

| Batched version of follow_page_pointer with will_modify==true and tolerate_null_pointer==true. | |

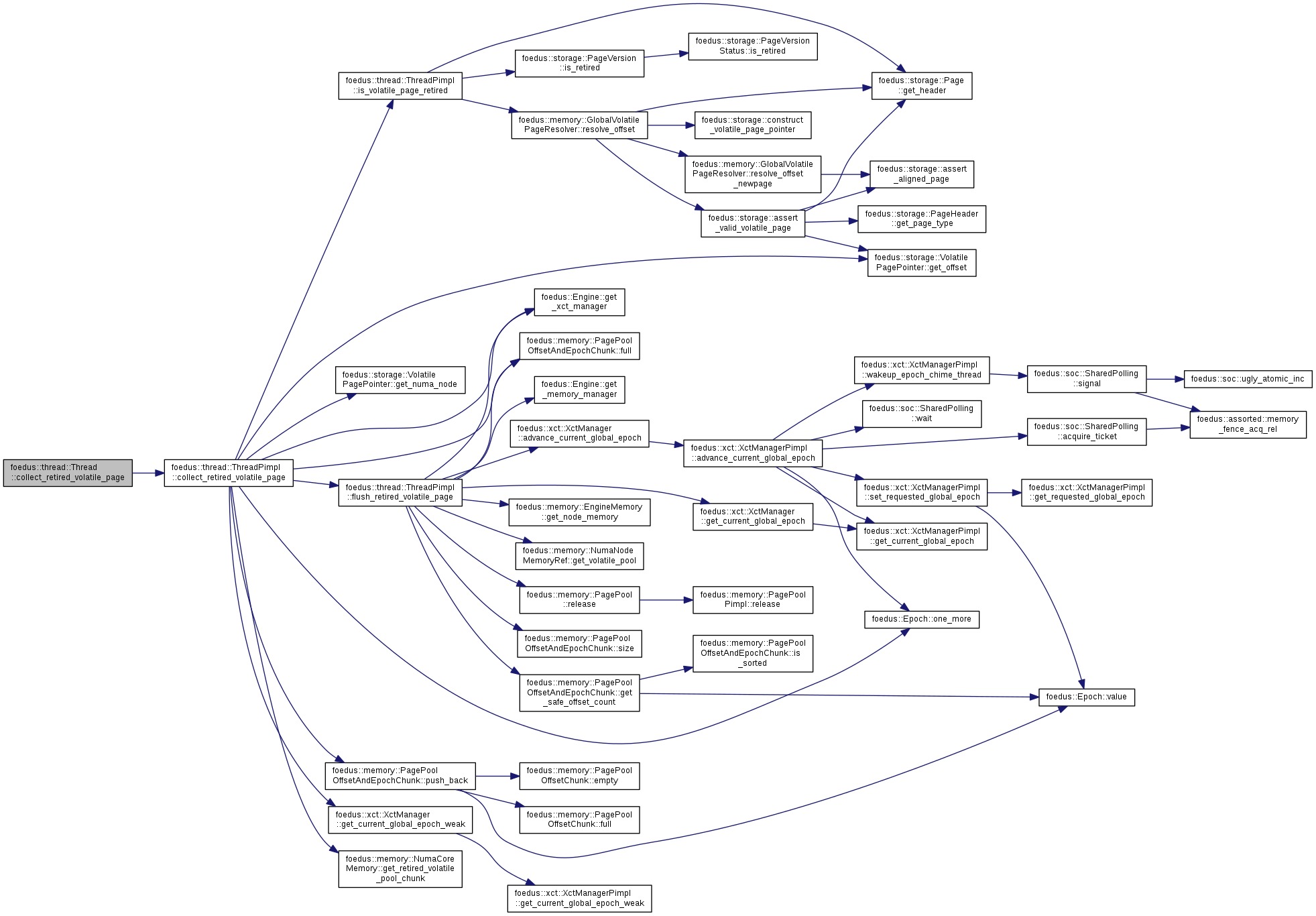

| void | collect_retired_volatile_page (storage::VolatilePagePointer ptr) |

| Keeps the specified volatile page as retired as of the current epoch. | |

| xct::McsRwSimpleBlock * | get_mcs_rw_simple_blocks () |

| Unconditionally takes MCS lock on the given mcs_lock. | |

| xct::McsRwExtendedBlock * | get_mcs_rw_extended_blocks () |

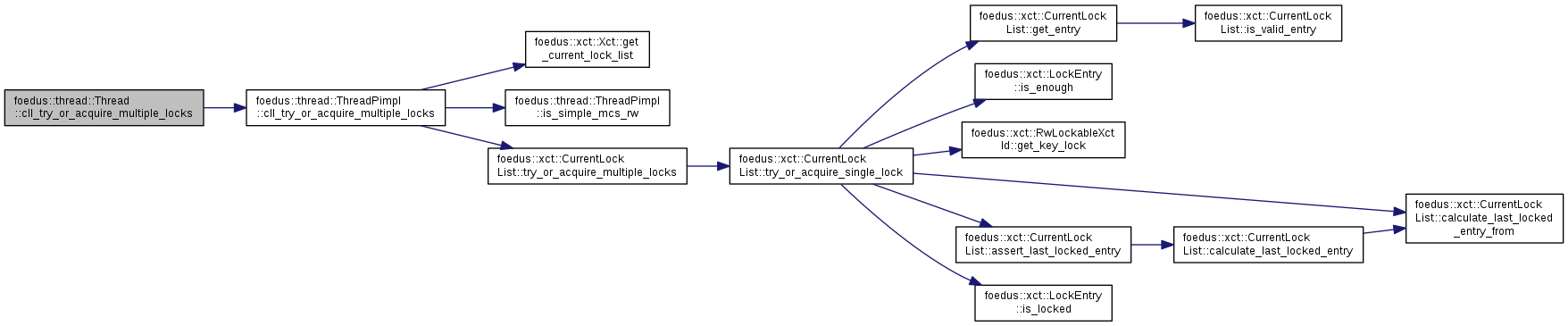

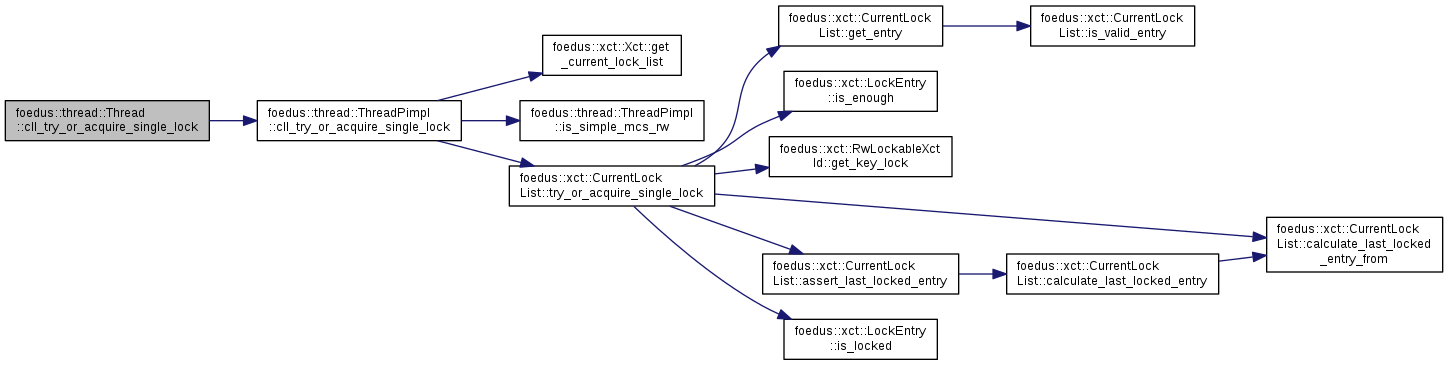

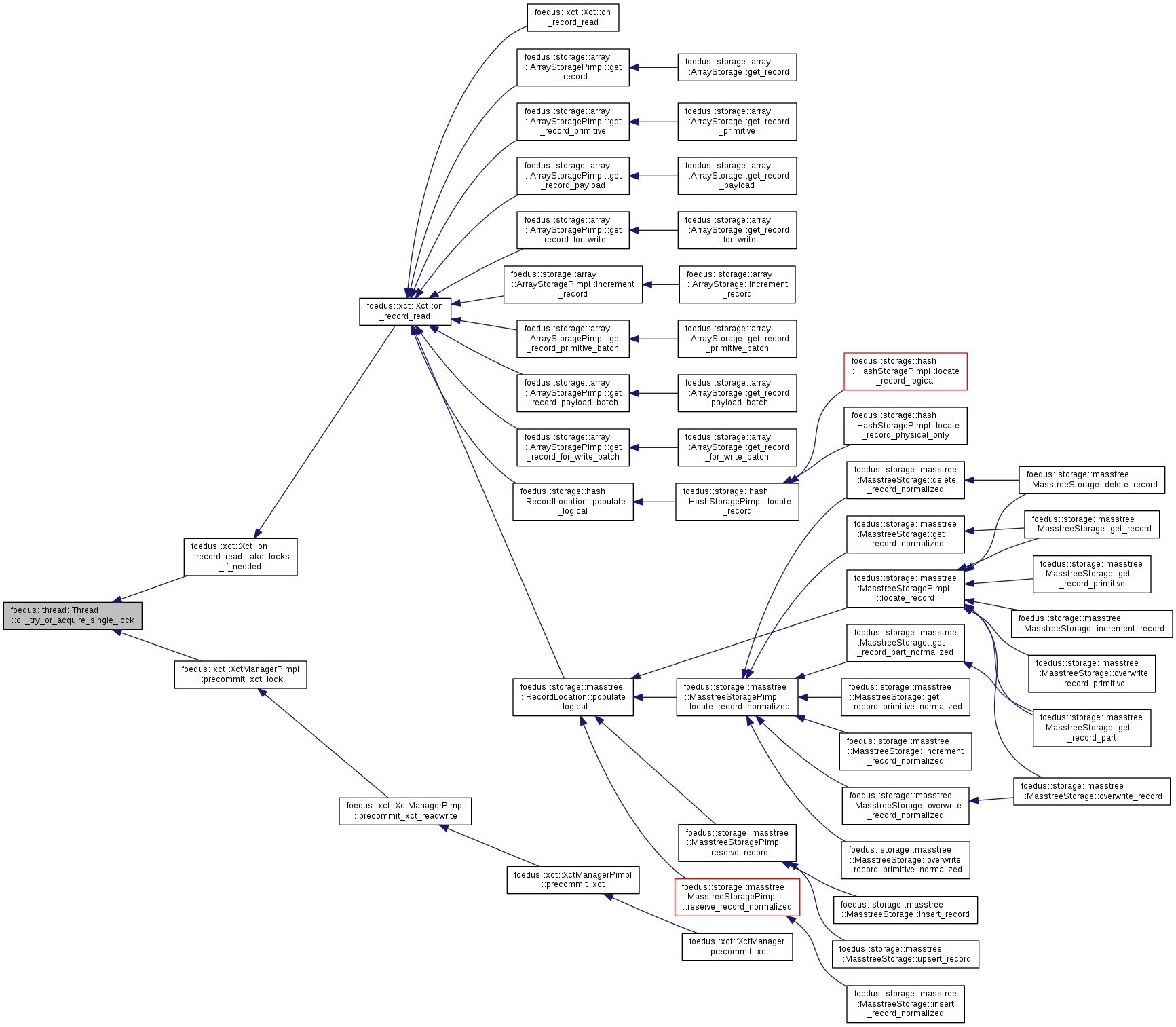

| ErrorCode | cll_try_or_acquire_single_lock (xct::LockListPosition pos) |

| Methods related to the Current Lock List (CLL). These are the only interface in Thread to lock records. | |

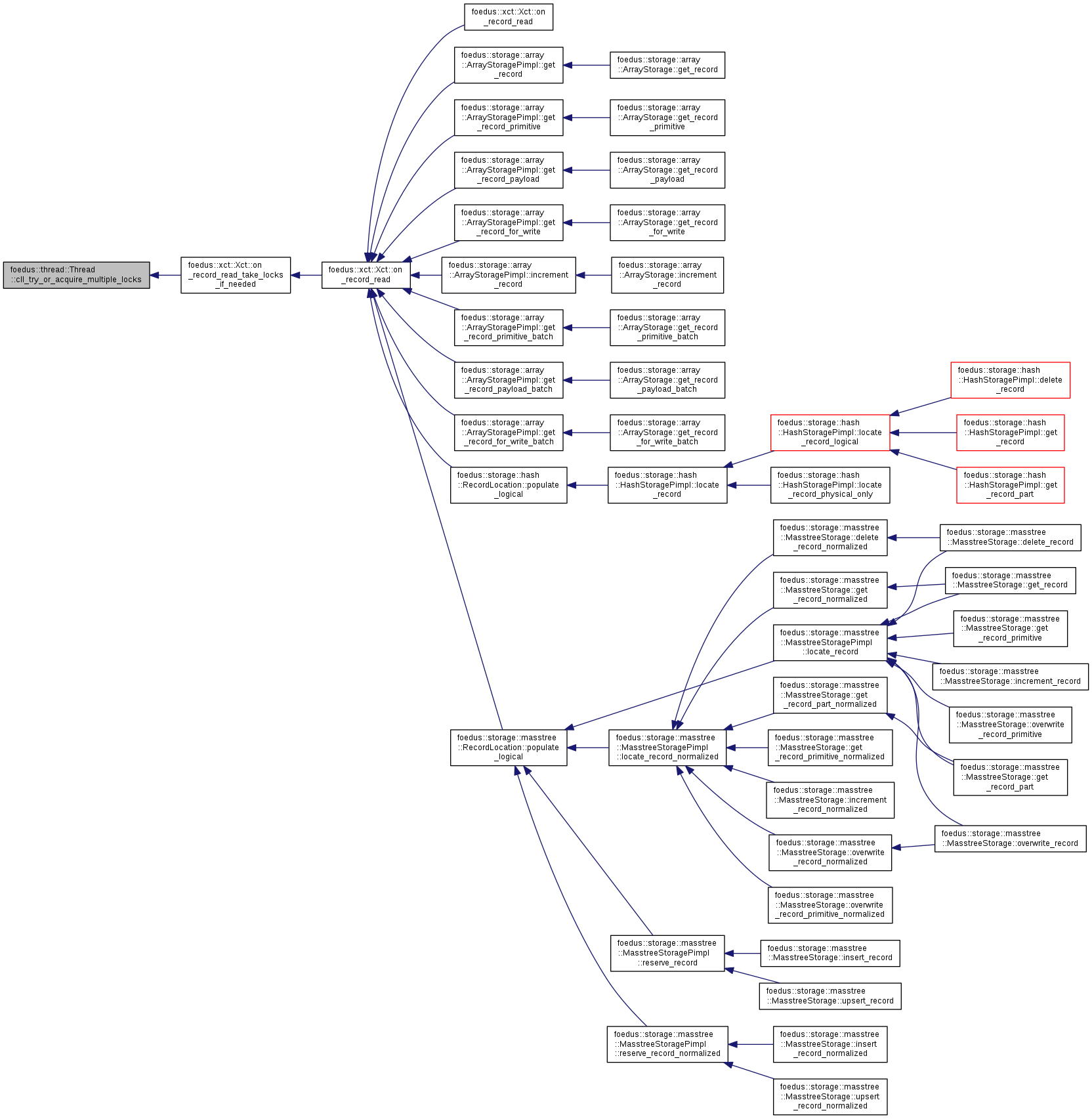

| ErrorCode | cll_try_or_acquire_multiple_locks (xct::LockListPosition upto_pos) |

| Acquire multiple locks up to the given position in canonical order. | |

| void | cll_giveup_all_locks_after (xct::UniversalLockId address) |

| This gives-up locks in CLL that are not yet taken. | |

| void | cll_giveup_all_locks_at_and_after (xct::UniversalLockId address) |

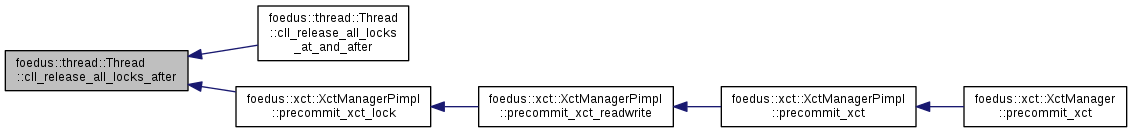

| void | cll_release_all_locks_after (xct::UniversalLockId address) |

| Release all locks in CLL of this thread whose addresses are canonically ordered after the parameter. | |

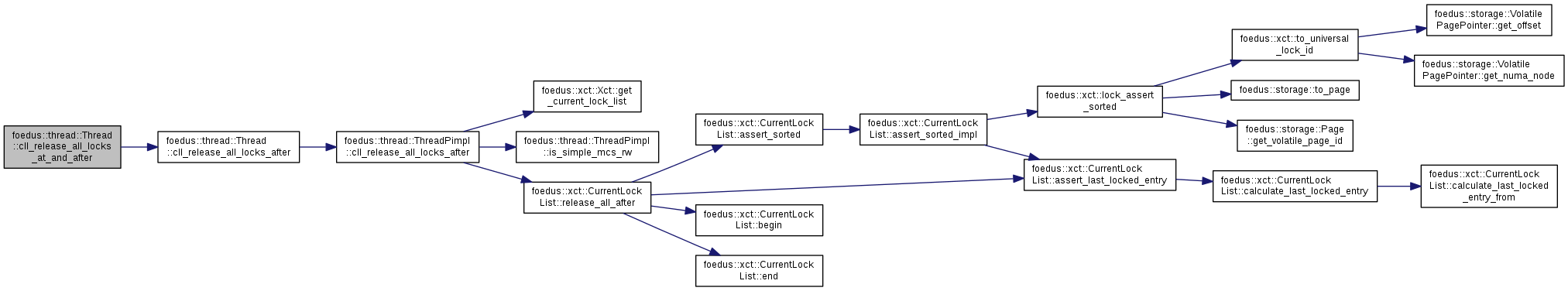

| void | cll_release_all_locks_at_and_after (xct::UniversalLockId address) |

| Same as cll_release_all_locks_after(address - 1). | |

| void | cll_release_all_locks () |

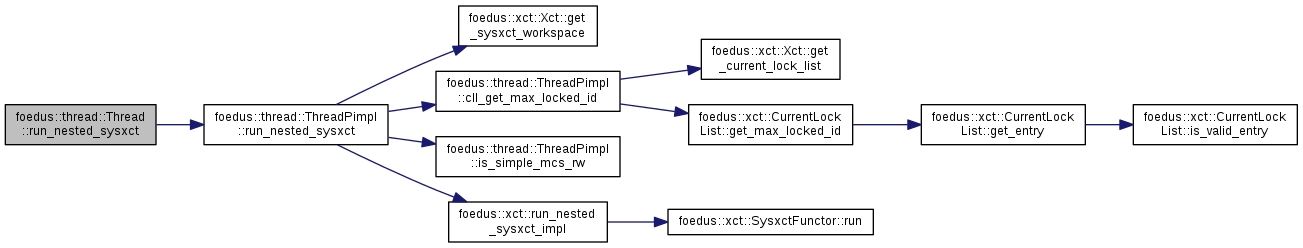

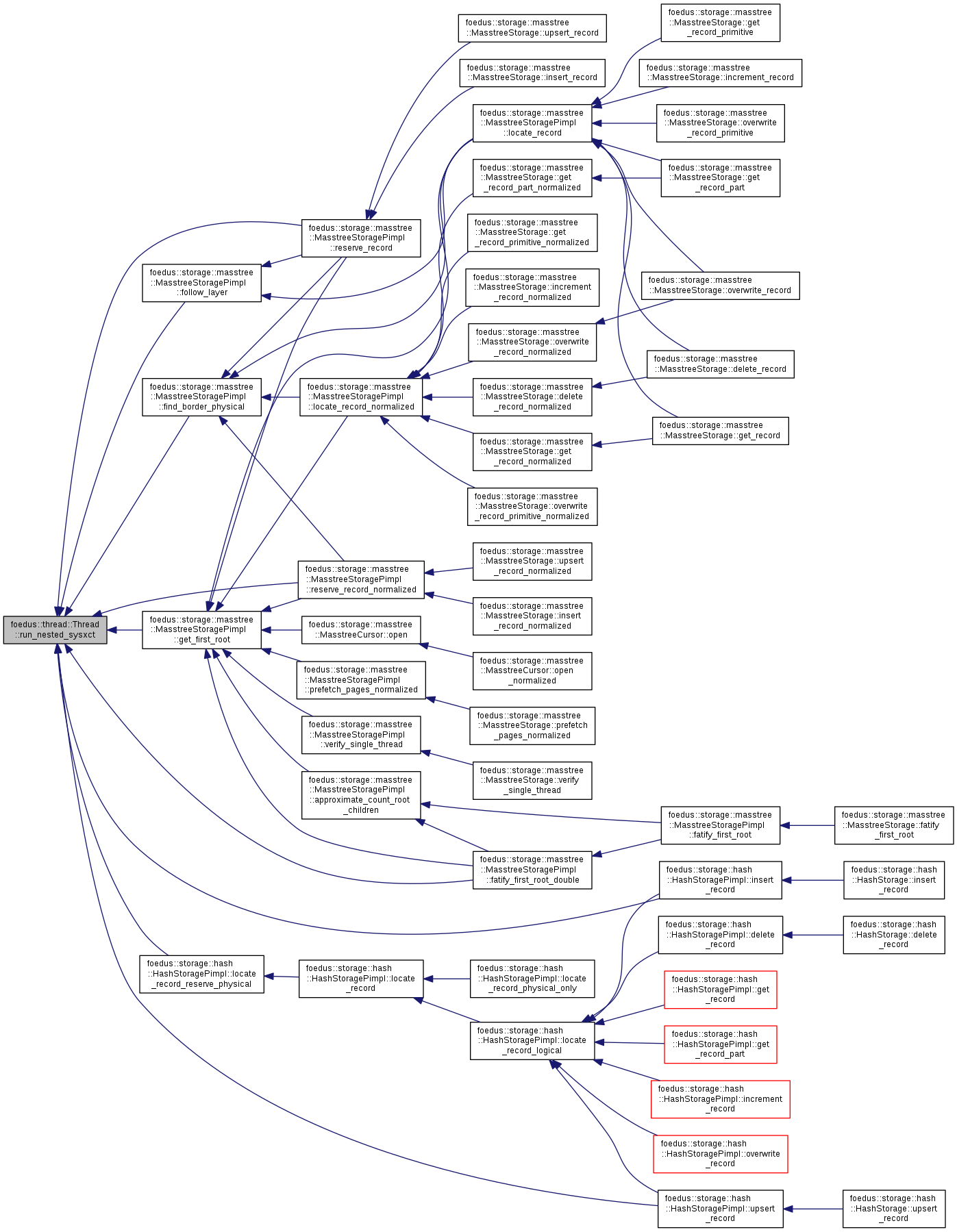

| ErrorCode | run_nested_sysxct (xct::SysxctFunctor *functor, uint32_t max_retries=0) |

| Methods related to System transactions (sysxct) nested under this thread. | |

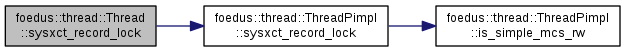

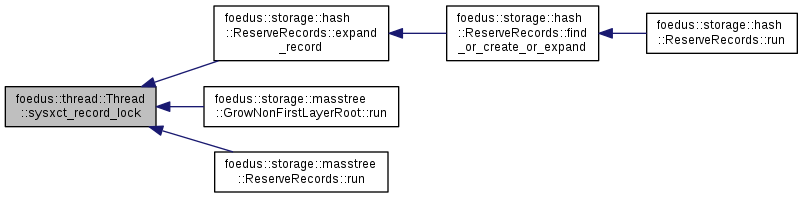

| ErrorCode | sysxct_record_lock (xct::SysxctWorkspace *sysxct_workspace, storage::VolatilePagePointer page_id, xct::RwLockableXctId *lock) |

| Takes a lock for a sysxct running under this thread. | |

| ErrorCode | sysxct_batch_record_locks (xct::SysxctWorkspace *sysxct_workspace, storage::VolatilePagePointer page_id, uint32_t lock_count, xct::RwLockableXctId **locks) |

| Takes a bunch of locks in the same page for a sysxct running under this thread. | |

| ErrorCode | sysxct_page_lock (xct::SysxctWorkspace *sysxct_workspace, storage::Page *page) |

| Takes a page lock in the same page for a sysxct running under this thread. | |

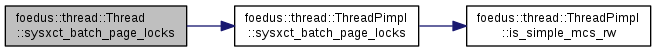

| ErrorCode | sysxct_batch_page_locks (xct::SysxctWorkspace *sysxct_workspace, uint32_t lock_count, storage::Page **pages) |

| Takes a bunch of page locks for a sysxct running under this thread. | |

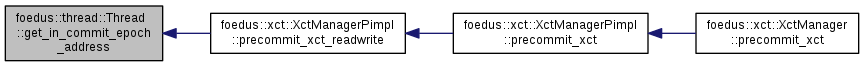

| Epoch * | get_in_commit_epoch_address () |

| Currently we don't have sysxct_release_locks() etc. | |

| ThreadPimpl * | get_pimpl () const |

| Returns the pimpl of this object. | |

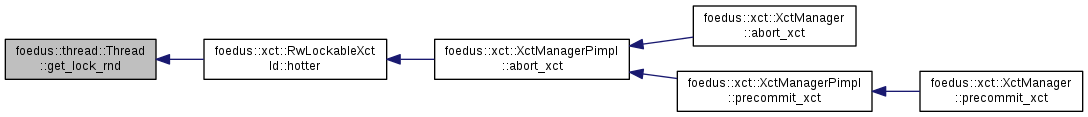

| assorted::UniformRandom & | get_lock_rnd () |

| bool | is_hot_page (const storage::Page *page) const |

Public Member Functions inherited from foedus::Initializable | |

| virtual | ~Initializable () |

Friends | |

| std::ostream & | operator<< (std::ostream &o, const Thread &v) |

| Enumerator | |
|---|---|
| kMaxFindPagesBatch | Max size for find_or_read_snapshot_pages_batch() etc. This must be the same as or less than CacheHashtable::kMaxFindBatchSize. |

Definition at line 50 of file thread.hpp.

| foedus::thread::Thread::Thread | ( | ) | delete |

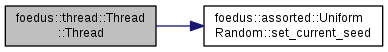

| foedus::thread::Thread::Thread | ( | Engine * | engine, |

| ThreadId | id, | ||

| ThreadGlobalOrdinal | global_ordinal | ||

| ) |

Definition at line 32 of file thread.cpp.

References foedus::assorted::UniformRandom::set_current_seed().

| foedus::thread::Thread::~Thread | ( | ) |

Definition at line 40 of file thread.cpp.

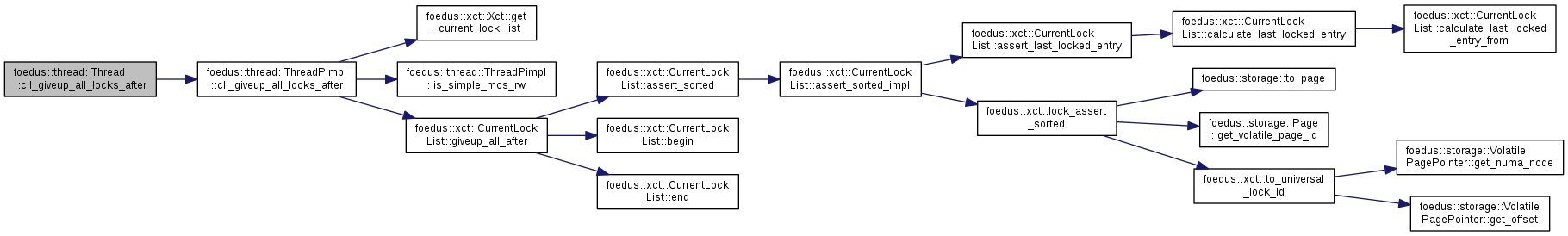

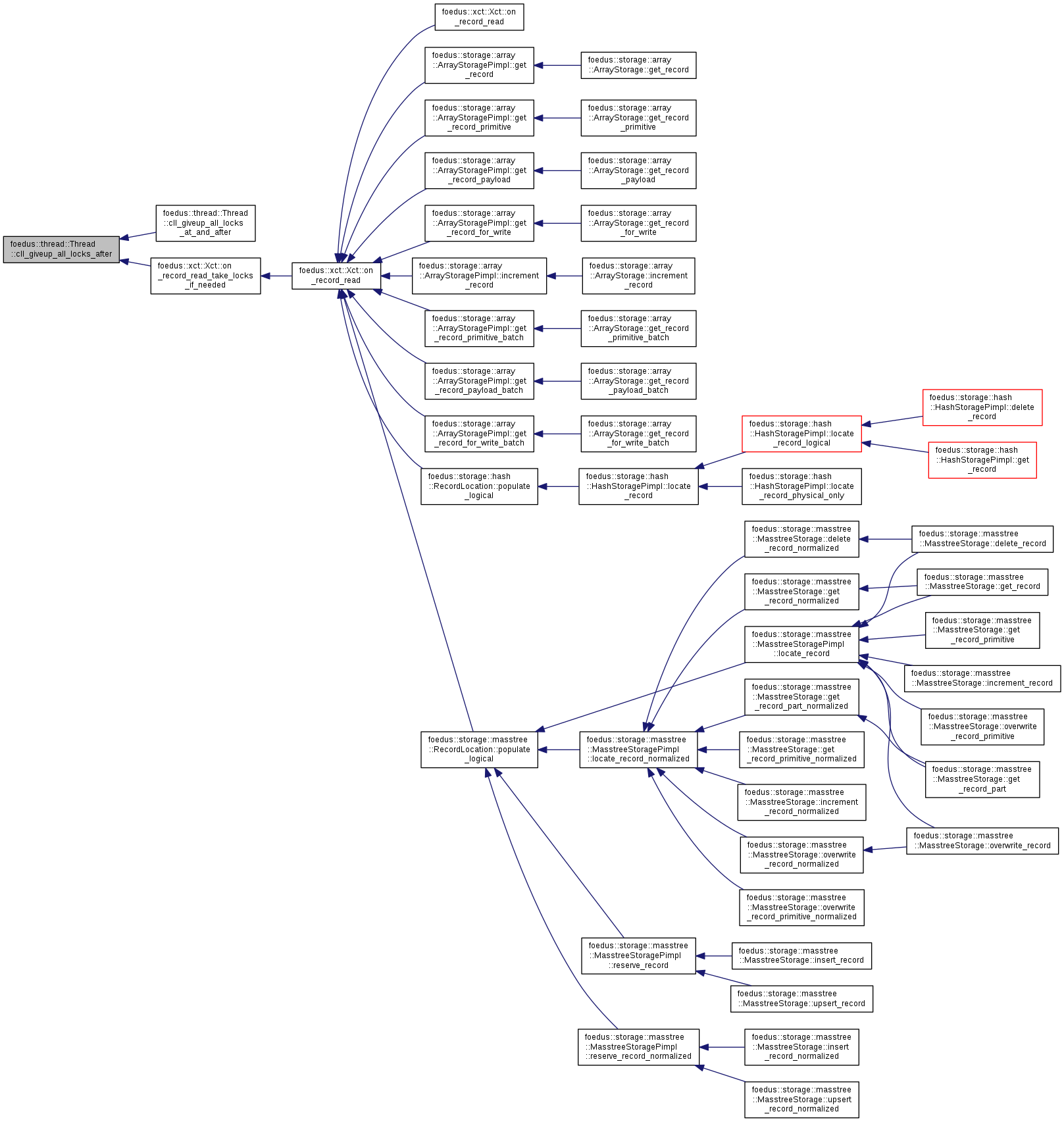

| void foedus::thread::Thread::cll_giveup_all_locks_after | ( | xct::UniversalLockId | address | ) |

This gives-up locks in CLL that are not yet taken.

The preferred mode will be set to either NoLock or the same as taken_mode, and all incomplete async locks will be cancelled.

Definition at line 847 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::cll_giveup_all_locks_after().

Referenced by cll_giveup_all_locks_at_and_after(), and foedus::xct::Xct::on_record_read_take_locks_if_needed().

|

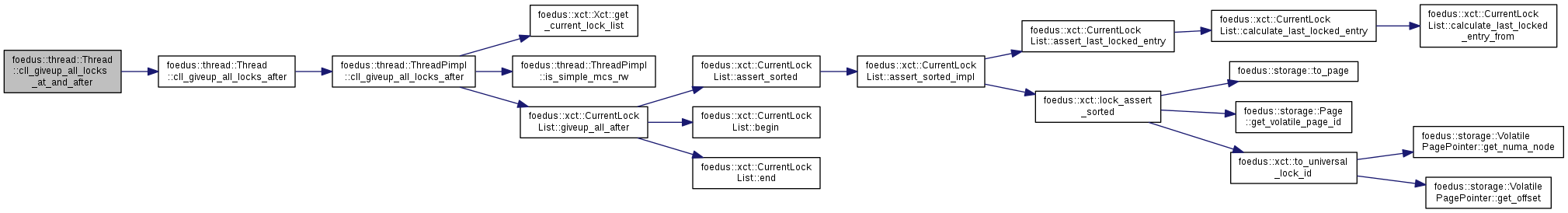

inline |

Definition at line 297 of file thread.hpp.

References cll_giveup_all_locks_after(), and foedus::xct::kNullUniversalLockId.

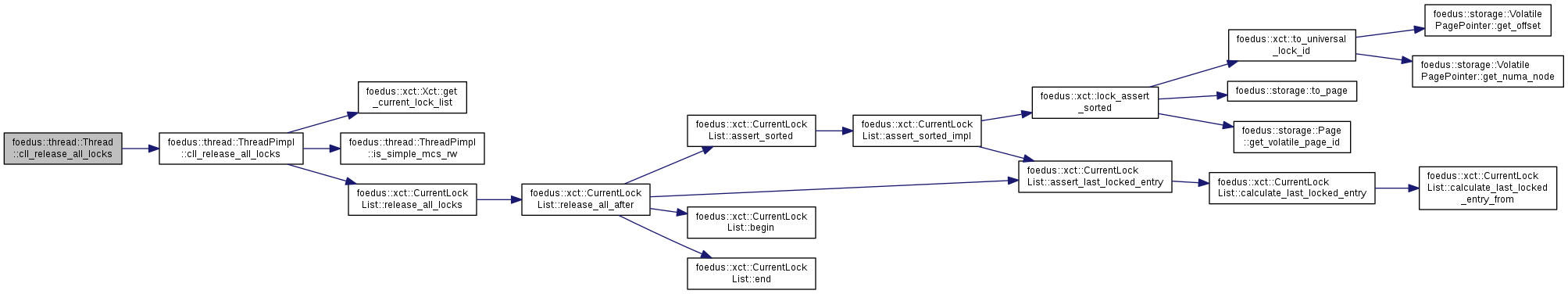

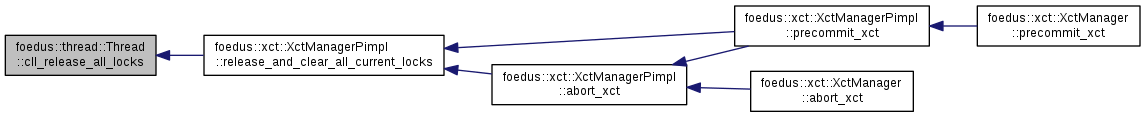

| void foedus::thread::Thread::cll_release_all_locks | ( | ) |

Definition at line 841 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::cll_release_all_locks().

Referenced by foedus::xct::XctManagerPimpl::release_and_clear_all_current_locks().

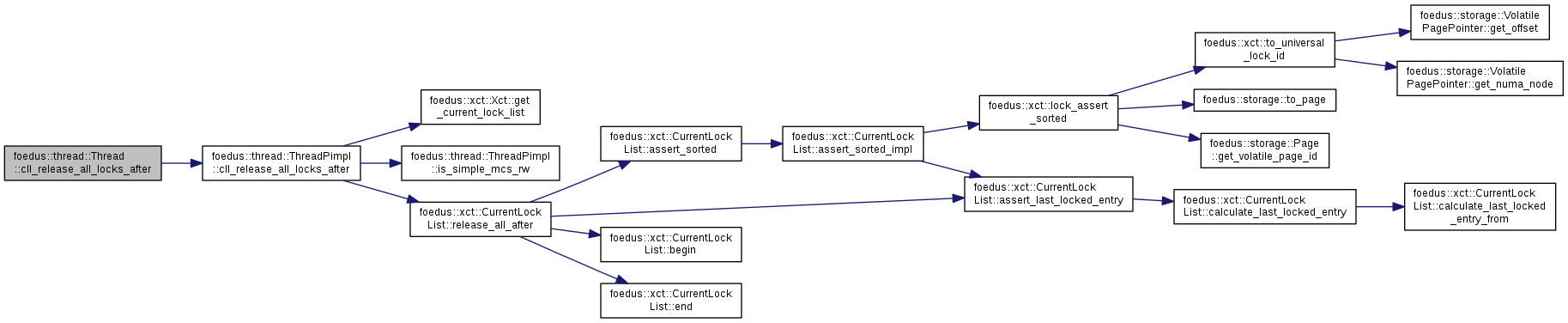

| void foedus::thread::Thread::cll_release_all_locks_after | ( | xct::UniversalLockId | address | ) |

Release all locks in CLL of this thread whose addresses are canonically ordered after the parameter.

This is used where we need to rule out the risk of deadlock.

Definition at line 844 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::cll_release_all_locks_after().

Referenced by cll_release_all_locks_at_and_after(), and foedus::xct::XctManagerPimpl::precommit_xct_lock().

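| void foedus::thread::Thread::cll_release_all_locks_at_and_after | ( | xct::UniversalLockId | address | ) | inline |

Same as cll_release_all_locks_after(address - 1).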

Definition at line 310 of file thread.hpp.

References cll_release_all_locks_after(), and foedus::xct::kNullUniversalLockId.

| ErrorCode foedus::thread::Thread::cll_try_or_acquire_multiple_locks | ( | xct::LockListPosition | upto_pos | ) |

Acquire multiple locks up to the given position in canonical order.

This is invoked by the thread to keep itself in canonical mode. This method is unconditional, meaning it waits forever until we acquire the locks. Hence, this method must be invoked when the thread is still in canonical mode. Otherwise, it risks deadlock.

Definition at line 853 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::cll_try_or_acquire_multiple_locks().

Referenced by foedus::xct::Xct::on_record_read_take_locks_if_needed().

| ErrorCode foedus::thread::Thread::cll_try_or_acquire_single_lock | ( | xct::LockListPosition | pos | ) |

Methods related to the Current Lock List (CLL). These are the only interface in Thread to lock records.

We previously had methods to lock directly without the CLL, but we now prohibit bypassing it. The CLL guarantees deadlock-free lock handling. The CLL handles only record locks. In FOEDUS, normal transactions never take page locks; only system transactions are allowed to take them.

Methods below take or release locks, so they receive MCS_RW_IMPL, a template param. Inline definitions of CurrentLockList methods are below. To avoid vtable and allow inlining, we define them at the bottom of this file.

Acquire one lock in this CLL.

This method automatically checks whether we are following canonical mode. In canonical mode it acquires the lock unconditionally (it never returns until the lock is acquired). When not in canonical mode it tries the lock instantaneously (it returns RaceAbort immediately rather than waiting).

These are inlined primarily because they receive a template param, not because we want to inline for performance. We could do explicit instantiations, but not that lengthy, either. Just inlining them is easier in this case.
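The canonical-mode rule can be sketched with a toy lock list. This is an illustration under simplifying assumptions, not the FOEDUS CurrentLockList: `ToyLock`, `ToyLockList`, and `Result` are hypothetical, lock IDs are plain integers standing in for xct::UniversalLockId, and read/write modes are ignored. A request is treated as canonical when its ID is greater than every ID already held, so waiting on it cannot form a cycle.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <set>
#include <thread>
#include <vector>

enum class Result { kOk, kRaceAbort };  // stand-in for ErrorCode values

// Hypothetical test-and-set lock used only for this sketch.
struct ToyLock {
  std::atomic<bool> taken{false};
  bool try_lock() { return !taken.exchange(true, std::memory_order_acquire); }
  void lock() { while (!try_lock()) std::this_thread::yield(); }
  void unlock() { taken.store(false, std::memory_order_release); }
};

class ToyLockList {
 public:
  explicit ToyLockList(std::vector<ToyLock>* locks) : locks_(locks) {}
  ~ToyLockList() { release_all(); }

  Result try_or_acquire(uint32_t id) {
    if (held_.count(id) != 0) return Result::kOk;  // already ours
    // Canonical mode: the new lock is ordered after everything we hold.
    const bool canonical = held_.empty() || *held_.rbegin() < id;
    if (canonical) {
      (*locks_)[id].lock();  // safe to wait unconditionally
    } else if (!(*locks_)[id].try_lock()) {
      return Result::kRaceAbort;  // out of order: never wait
    }
    held_.insert(id);
    return Result::kOk;
  }

  void release_all() {
    for (uint32_t id : held_) (*locks_)[id].unlock();
    held_.clear();
  }

 private:
  std::vector<ToyLock>* locks_;
  std::set<uint32_t> held_;  // sorted, so rbegin() is the largest held ID
};
```

With two lists sharing the same locks, a list that holds lock 3 and then asks for lock 2 gets `kRaceAbort` if another list holds lock 2, instead of blocking. That refusal to wait out of order is what rules out deadlock in this model.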

Definition at line 850 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::cll_try_or_acquire_single_lock().

Referenced by foedus::xct::Xct::on_record_read_take_locks_if_needed(), and foedus::xct::XctManagerPimpl::precommit_xct_lock().

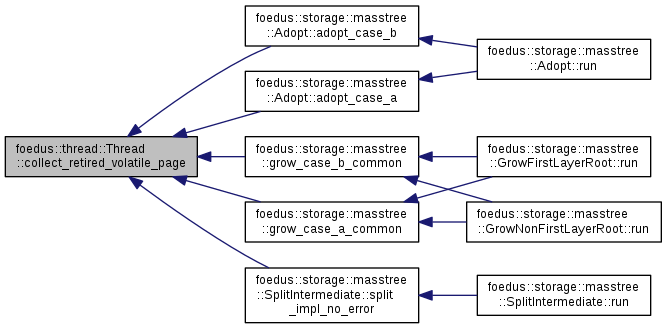

| void foedus::thread::Thread::collect_retired_volatile_page | ( | storage::VolatilePagePointer | ptr | ) |

Keeps the specified volatile page as retired as of the current epoch.

| [in] | ptr | the volatile page that has been retired |

This thread buffers such pages and returns them to the volatile page pool when it is safe to do so.
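A rough model of this buffering is sketched below. Everything in it is an assumption made for illustration: `RetiredPageBuffer` is a hypothetical class, pages and epochs are plain integers, and "safe" is modeled as "retired in an epoch strictly older than some `safe_epoch`". The source does not state the actual safety condition.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical per-thread buffer of retired pages, tagged with the epoch
// in which each page was retired. Epochs are assumed to be non-decreasing.
class RetiredPageBuffer {
 public:
  void retire(uint64_t page, uint32_t current_epoch) {
    buffer_.push_back({page, current_epoch});
  }

  // Removes and returns pages retired before safe_epoch; in this model
  // these are the pages that may go back to the page pool.
  std::vector<uint64_t> drain_older_than(uint32_t safe_epoch) {
    std::vector<uint64_t> reclaimable;
    while (!buffer_.empty() && buffer_.front().epoch < safe_epoch) {
      reclaimable.push_back(buffer_.front().page);
      buffer_.pop_front();
    }
    return reclaimable;
  }

  std::size_t pending() const { return buffer_.size(); }

 private:
  struct Entry {
    uint64_t page;
    uint32_t epoch;
  };
  std::deque<Entry> buffer_;
};
```

Deferring the return this way keeps a retired page from being reused while a concurrent reader might still hold a pointer to it.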

Definition at line 113 of file thread.cpp.

References foedus::thread::ThreadPimpl::collect_retired_volatile_page().

Referenced by foedus::storage::masstree::Adopt::adopt_case_a(), foedus::storage::masstree::Adopt::adopt_case_b(), foedus::storage::masstree::grow_case_a_common(), foedus::storage::masstree::grow_case_b_common(), and foedus::storage::masstree::SplitIntermediate::split_impl_no_error().

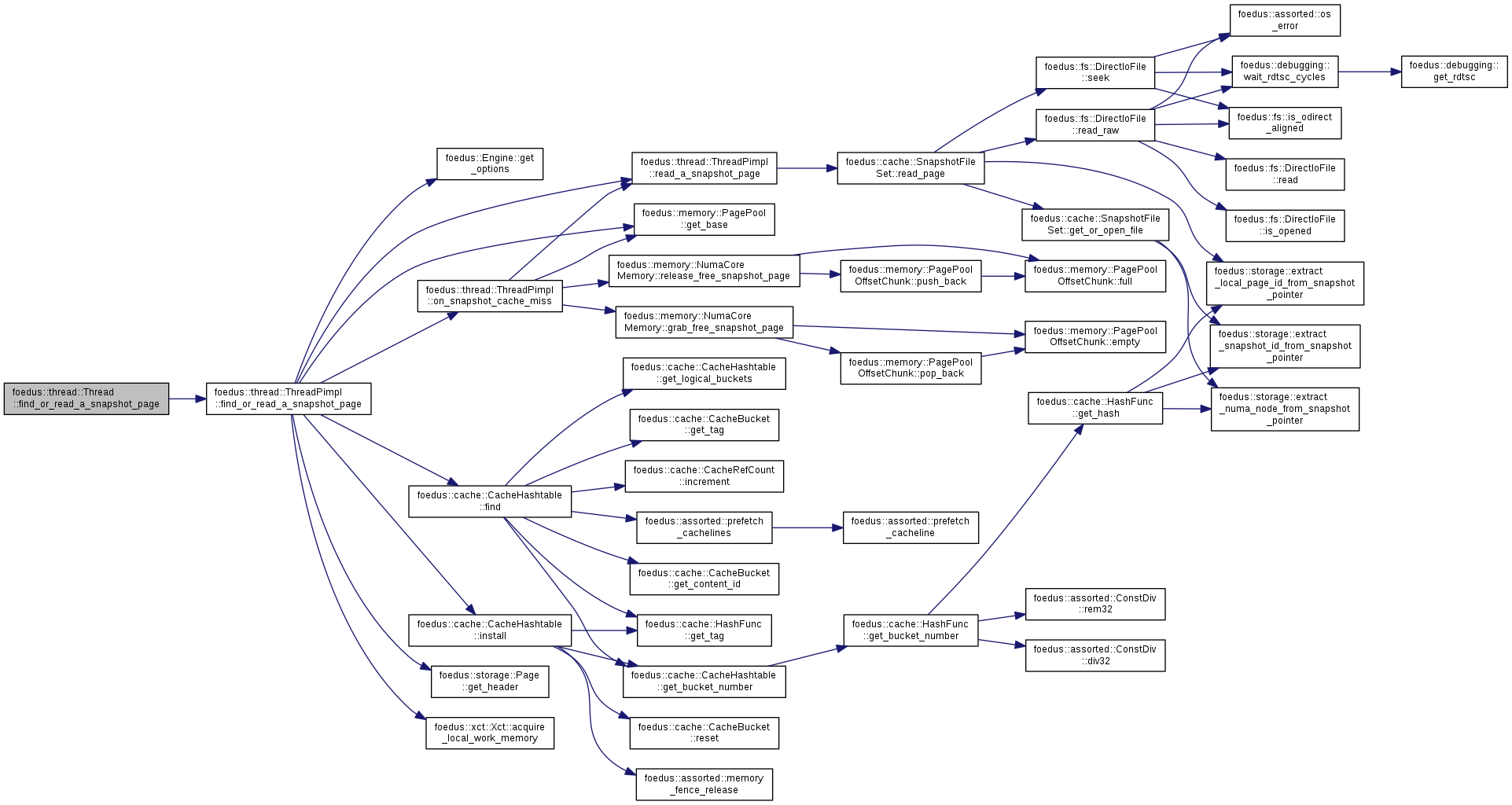

| ErrorCode foedus::thread::Thread::find_or_read_a_snapshot_page | ( | storage::SnapshotPagePointer | page_id, |

| storage::Page ** | out | ||

| ) |

Find the given page in snapshot cache, reading it if not found.

Definition at line 95 of file thread.cpp.

References foedus::thread::ThreadPimpl::find_or_read_a_snapshot_page().

Referenced by foedus::storage::hash::HashStoragePimpl::follow_page(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_follow(), and foedus::storage::array::ArrayStoragePimpl::prefetch_pages_recurse().

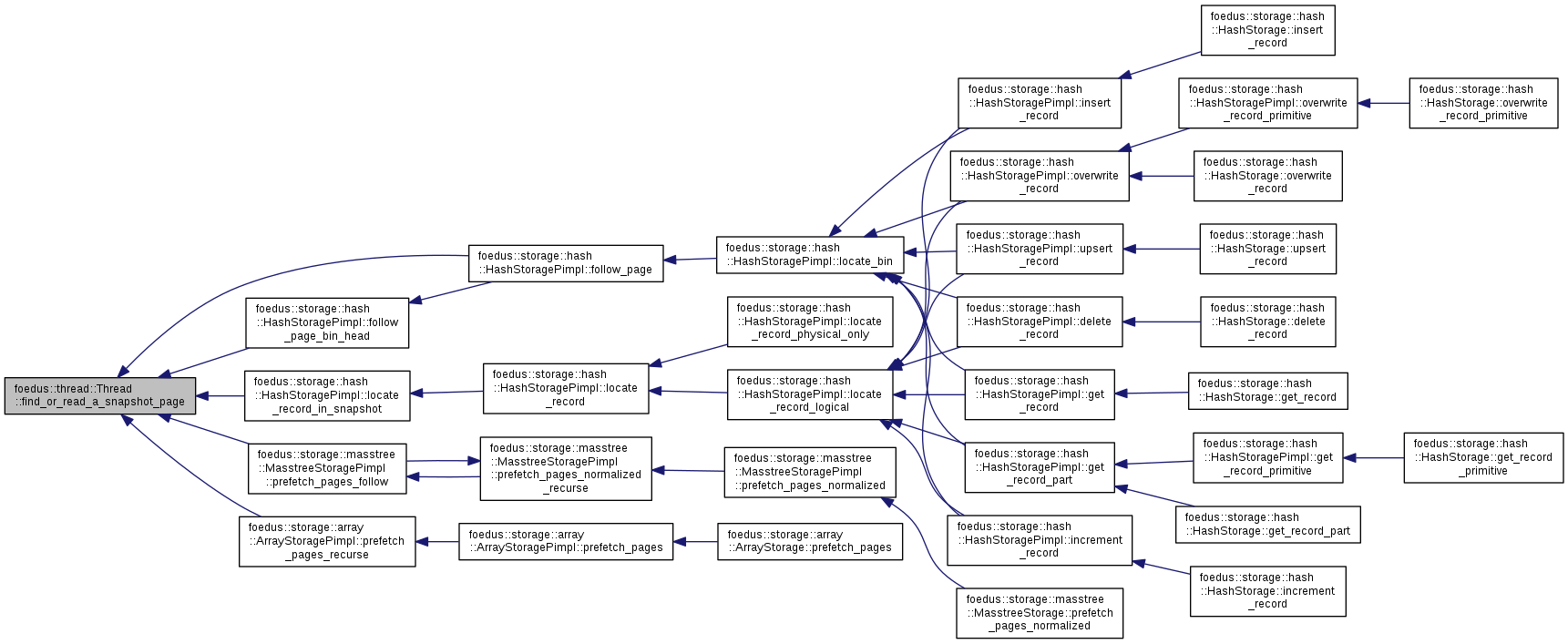

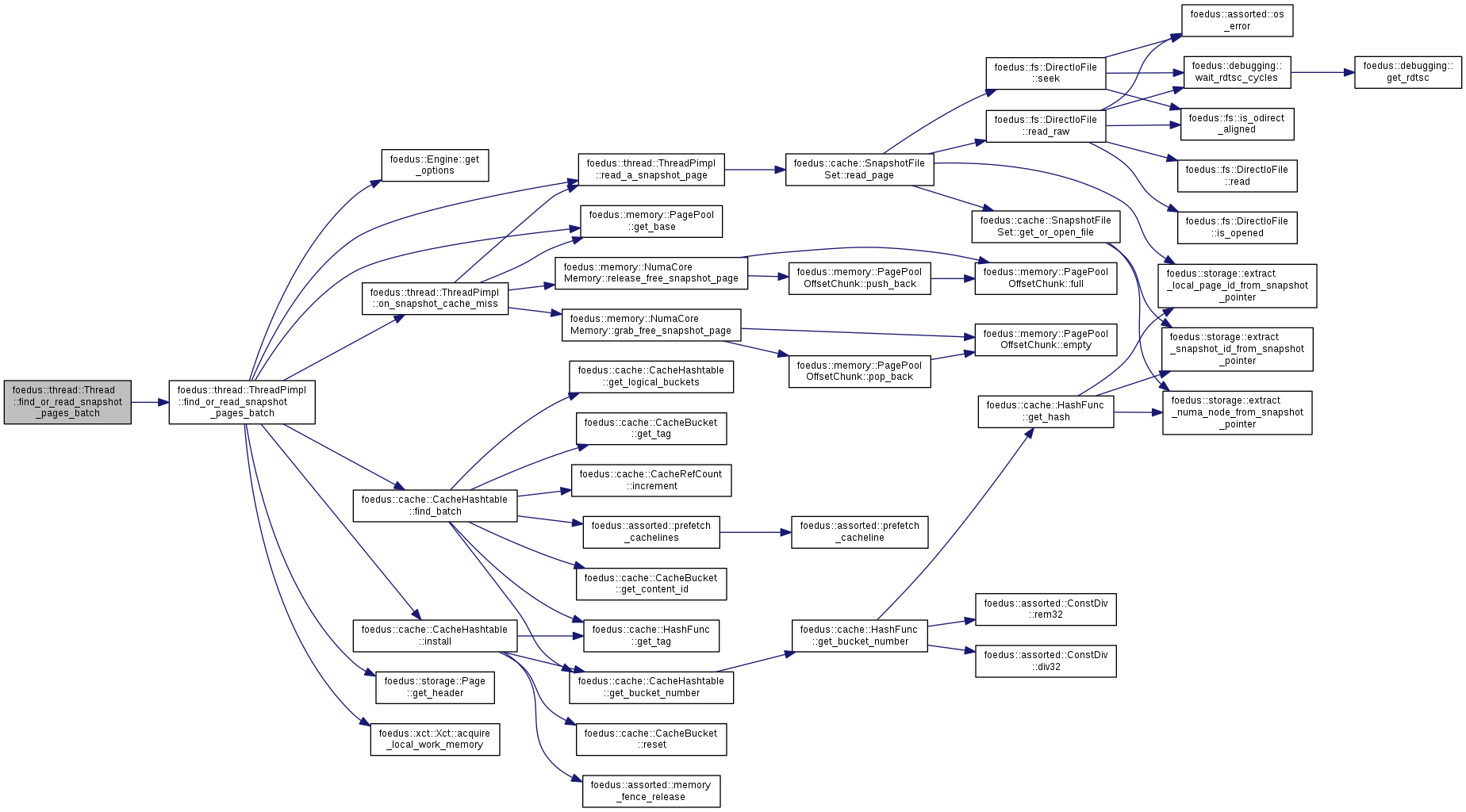

| ErrorCode foedus::thread::Thread::find_or_read_snapshot_pages_batch | ( | uint16_t | batch_size, |

| const storage::SnapshotPagePointer * | page_ids, | ||

| storage::Page ** | out | ||

| ) |

Batched version of find_or_read_a_snapshot_page().

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_ids | Array of Page IDs to look for, size=batch_size |

| [out] | out | Output |

This might perform much faster because of parallel prefetching, SIMD-ized hash calculation (planned, not implemented yet), etc.
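Because batch_size must not exceed kMaxFindPagesBatch, a caller with a longer list of page IDs has to split it into chunks. The helper below is a hypothetical sketch of that split; `split_into_batches` is not part of FOEDUS, and the constant is redeclared locally only so the example stands alone.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mirrors Thread::kMaxFindPagesBatch (32) for this standalone sketch.
constexpr uint16_t kMaxFindPagesBatch = 32;

// Hypothetical helper: returns the batch sizes needed to cover `total`
// page IDs, each size respecting the kMaxFindPagesBatch limit.
std::vector<uint16_t> split_into_batches(std::size_t total) {
  std::vector<uint16_t> sizes;
  for (std::size_t done = 0; done < total;) {
    const std::size_t n =
        std::min<std::size_t>(kMaxFindPagesBatch, total - done);
    sizes.push_back(static_cast<uint16_t>(n));
    done += n;
  }
  return sizes;
}
```

For each chunk, a caller would presumably invoke `find_or_read_snapshot_pages_batch(size, page_ids + offset, out + offset)` and stop on the first ErrorCode that signals failure.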

Definition at line 100 of file thread.cpp.

References foedus::thread::ThreadPimpl::find_or_read_snapshot_pages_batch().

| ErrorCode foedus::thread::Thread::follow_page_pointer | ( | storage::VolatilePageInit | page_initializer, |

| bool | tolerate_null_pointer, | ||

| bool | will_modify, | ||

| bool | take_ptr_set_snapshot, | ||

| storage::DualPagePointer * | pointer, | ||

| storage::Page ** | page, | ||

| const storage::Page * | parent, | ||

| uint16_t | index_in_parent | ||

| ) |

A general method to follow (read) a page pointer.

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in] | tolerate_null_pointer | when true and when both the volatile and snapshot pointers seem null, we return null page rather than creating a new volatile page. |

| [in] | will_modify | if true, we always return a non-null volatile page. This is true when we are to modify the page, such as insert/delete. |

| [in] | take_ptr_set_snapshot | if true, we add the address of volatile page pointer to ptr set when we do not follow a volatile pointer (null or volatile). This is usually true to make sure we become aware of new page installation by concurrent threads. If the isolation level is not serializable, we don't take ptr set anyway. |

| [in,out] | pointer | the page pointer. |

| [out] | page | the read page. |

| [in] | parent | the parent page that contains a pointer to the page. |

| [in] | index_in_parent | Some index (meaning depends on page type) of pointer in parent page to the page. |

This is the primary way to retrieve a page pointed to by a pointer in various places. Depending on the current transaction's isolation level and storage type (represented by the various arguments), this does a whole lot of things to comply with our commit protocol.

Remember that DualPagePointer maintains volatile and snapshot pointers. We sometimes have to install a new volatile page or add the pointer to ptr set for serializability. That logic is too lengthy to duplicate in each page type, so we generalize it here.
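The branching implied by the parameter descriptions above can be sketched as a small decision function. This is an interpretation, not the actual logic in thread_pimpl.cpp: `Action` and `decide` are hypothetical, the isolation level and ptr-set handling are left out, and giving tolerate_null_pointer precedence when both pointers are null is an assumption of this sketch.

```cpp
#include <cassert>

// Hypothetical outcome labels for this sketch; not FOEDUS types.
enum class Action {
  kFollowVolatile,
  kFollowSnapshot,
  kInstallVolatileFromSnapshot,
  kCreateNewVolatile,
  kReturnNull,
};

// has_volatile / has_snapshot: whether each half of the dual pointer is
// non-null. The two flags mirror the parameters documented above.
Action decide(bool has_volatile, bool has_snapshot,
              bool tolerate_null_pointer, bool will_modify) {
  if (has_volatile) return Action::kFollowVolatile;
  if (has_snapshot) {
    // Only a snapshot page exists: writers need a volatile copy.
    return will_modify ? Action::kInstallVolatileFromSnapshot
                       : Action::kFollowSnapshot;
  }
  // Both pointers are null (assumed precedence, see the lead-in).
  if (tolerate_null_pointer) return Action::kReturnNull;
  return Action::kCreateNewVolatile;  // page_initializer would run here
}
```

In this reading, page_initializer only matters on the `kCreateNewVolatile` path, which matches the note that it may be null when that path never happens.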

Definition at line 353 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::follow_page_pointer().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::follow_page(), foedus::storage::hash::HashStoragePimpl::follow_page(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::array::ArrayStoragePimpl::follow_pointer(), foedus::storage::masstree::MasstreeStoragePimpl::get_first_root(), foedus::storage::array::ArrayStoragePimpl::get_root_page(), and foedus::storage::hash::HashStoragePimpl::get_root_page().

| ErrorCode foedus::thread::Thread::follow_page_pointers_for_read_batch | ( | uint16_t | batch_size, |

| storage::VolatilePageInit | page_initializer, | ||

| bool | tolerate_null_pointer, | ||

| bool | take_ptr_set_snapshot, | ||

| storage::DualPagePointer ** | pointers, | ||

| storage::Page ** | parents, | ||

| const uint16_t * | index_in_parents, | ||

| bool * | followed_snapshots, | ||

| storage::Page ** | out | ||

| ) |

Batched version of follow_page_pointer with will_modify==false.

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in] | tolerate_null_pointer | when true and when both the volatile and snapshot pointers seem null, we return null page rather than creating a new volatile page. |

| [in] | take_ptr_set_snapshot | if true, we add the address of volatile page pointer to ptr set when we do not follow a volatile pointer (null or volatile). This is usually true to make sure we become aware of new page installation by concurrent threads. If the isolation level is not serializable, we don't take ptr set anyway. |

| [in,out] | pointers | the page pointers. |

| [in] | parents | the parent page that contains a pointer to the page. |

| [in] | index_in_parents | Some index (meaning depends on page type) of pointer in parent page to the page. |

| [in,out] | followed_snapshots | As input, must be same as parents[i]==followed_snapshots[i]. As output, same as out[i]->header().snapshot_. We receive/emit this to avoid accessing page header. |

| [out] | out | the read page. |

Definition at line 373 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::follow_page_pointers_for_read_batch().

Referenced by foedus::storage::array::ArrayStoragePimpl::follow_pointers_for_read_batch().

| ErrorCode foedus::thread::Thread::follow_page_pointers_for_write_batch | ( | uint16_t | batch_size, |

| storage::VolatilePageInit | page_initializer, | ||

| storage::DualPagePointer ** | pointers, | ||

| storage::Page ** | parents, | ||

| const uint16_t * | index_in_parents, | ||

| storage::Page ** | out | ||

| ) |

Batched version of follow_page_pointer with will_modify==true and tolerate_null_pointer==true.

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in,out] | pointers | the page pointers. |

| [in] | parents | the parent page that contains a pointer to the page. |

| [in] | index_in_parents | Some index (meaning depends on page type) of pointer in parent page to the page. |

| [out] | out | the read page. |

Definition at line 395 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::follow_page_pointers_for_write_batch().

Referenced by foedus::storage::array::ArrayStoragePimpl::follow_pointers_for_write_batch().

| xct::Xct & foedus::thread::Thread::get_current_xct | ( | ) |

Returns the transaction that is currently running on this thread.

Definition at line 75 of file thread.cpp.

References foedus::thread::ThreadPimpl::current_xct_.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::storage::sequential::SequentialStorage::append_record(), foedus::xct::XctManagerPimpl::begin_xct(), foedus::xct::RetrospectiveLockList::construct(), foedus::storage::PageHeader::contains_hot_records(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::array::ArrayStoragePimpl::get_record(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_payload(), foedus::storage::array::ArrayStoragePimpl::get_record_payload_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive_batch(), foedus::storage::array::ArrayStoragePimpl::increment_record(), foedus::storage::array::ArrayStoragePimpl::increment_record_oneshot(), foedus::storage::hash::HashStoragePimpl::locate_bin(), foedus::storage::masstree::MasstreeStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::masstree::MasstreeStoragePimpl::locate_record_normalized(), foedus::storage::sequential::SequentialStorageControlBlock::optimistic_read_truncate_epoch(), foedus::storage::array::ArrayStoragePimpl::overwrite_record(), foedus::storage::array::ArrayStoragePimpl::overwrite_record_primitive(), foedus::xct::XctManagerPimpl::precommit_xct(), foedus::xct::XctManagerPimpl::precommit_xct_apply(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), foedus::xct::XctManagerPimpl::precommit_xct_lock_batch_track_moved(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), foedus::xct::XctManagerPimpl::precommit_xct_sort_access(), foedus::xct::XctManagerPimpl::precommit_xct_verify_page_version_set(), foedus::xct::XctManagerPimpl::precommit_xct_verify_pointer_set(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), 
foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite(), foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log(), foedus::storage::hash::HashStoragePimpl::register_record_write_log(), foedus::xct::XctManagerPimpl::release_and_clear_all_current_locks(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), and foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized().

| Engine * foedus::thread::Thread::get_engine | ( | ) | const |

Definition at line 52 of file thread.cpp.

References foedus::thread::ThreadPimpl::engine_.

Referenced by foedus::log::EpochMarkerLogType::apply_engine(), foedus::storage::array::array_volatile_page_init(), foedus::storage::hash::hash_data_volatile_page_init(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), foedus::storage::masstree::GrowFirstLayerRoot::run(), and foedus::storage::hash::HashStoragePimpl::verify_single_thread().

| const memory::GlobalVolatilePageResolver & foedus::thread::Thread::get_global_volatile_page_resolver | ( | ) | const |

Returns the page resolver to convert page ID to page pointer.

Just a shorthand for get_engine()->get_memory_manager()->get_global_volatile_page_resolver().

Definition at line 125 of file thread.cpp.

References foedus::thread::ThreadPimpl::global_volatile_page_resolver_.

Referenced by foedus::storage::masstree::grow_case_a_common(), foedus::storage::masstree::grow_case_b_common(), foedus::xct::Xct::on_record_read(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), foedus::xct::XctManagerPimpl::precommit_xct_lock_track_write(), foedus::xct::XctManagerPimpl::precommit_xct_verify_track_read(), resolve(), resolve_newpage(), foedus::storage::masstree::GrowFirstLayerRoot::run(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), and foedus::storage::array::ArrayStoragePimpl::verify_single_thread().

| Epoch * foedus::thread::Thread::get_in_commit_epoch_address | ( | ) |

Currently we don't have sysxct_release_locks() etc.

All locks will be automatically released when the sysxct ends. Probably this is enough as a sysxct should be short-lived.

Definition at line 55 of file thread.cpp.

References foedus::thread::ThreadPimpl::control_block_, and foedus::thread::ThreadControlBlock::in_commit_epoch_.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_readwrite().

| const memory::LocalPageResolver & foedus::thread::Thread::get_local_volatile_page_resolver | ( | ) | const |

Returns page resolver to convert only local page ID to page pointer.

Definition at line 80 of file thread.cpp.

References foedus::thread::ThreadPimpl::local_volatile_page_resolver_.

Referenced by foedus::storage::masstree::allocate_new_border_page(), foedus::storage::sequential::SequentialStoragePimpl::append_record(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), resolve(), resolve_newpage(), foedus::storage::masstree::SplitBorder::run(), foedus::storage::masstree::ReserveRecords::run(), and foedus::storage::masstree::SplitIntermediate::split_impl_no_error().

| assorted::UniformRandom & foedus::thread::Thread::get_lock_rnd | ( | ) | inline |

Definition at line 368 of file thread.hpp.

Referenced by foedus::xct::RwLockableXctId::hotter().

| xct::McsRwExtendedBlock * foedus::thread::Thread::get_mcs_rw_extended_blocks | ( | ) |

Definition at line 836 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::mcs_rw_extended_blocks_.

| xct::McsRwSimpleBlock * foedus::thread::Thread::get_mcs_rw_simple_blocks | ( | ) |

Unconditionally takes MCS lock on the given mcs_lock.

MCS locking methods: we previously had the locking algorithm implemented here, but we separated it to xct_mcs_impl.cpp/hpp.

We now have only delegation here (Thread -> ThreadPimpl forwardings).

Definition at line 833 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::mcs_rw_simple_blocks_.

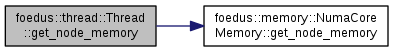

| memory::NumaNodeMemory * foedus::thread::Thread::get_node_memory | ( | ) | const |

Returns the node-shared memory repository of the NUMA node this thread belongs to.

Definition at line 58 of file thread.cpp.

References foedus::thread::ThreadPimpl::core_memory_, and foedus::memory::NumaCoreMemory::get_node_memory().

|

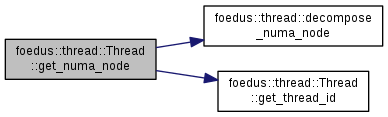

inline |

Definition at line 66 of file thread.hpp.

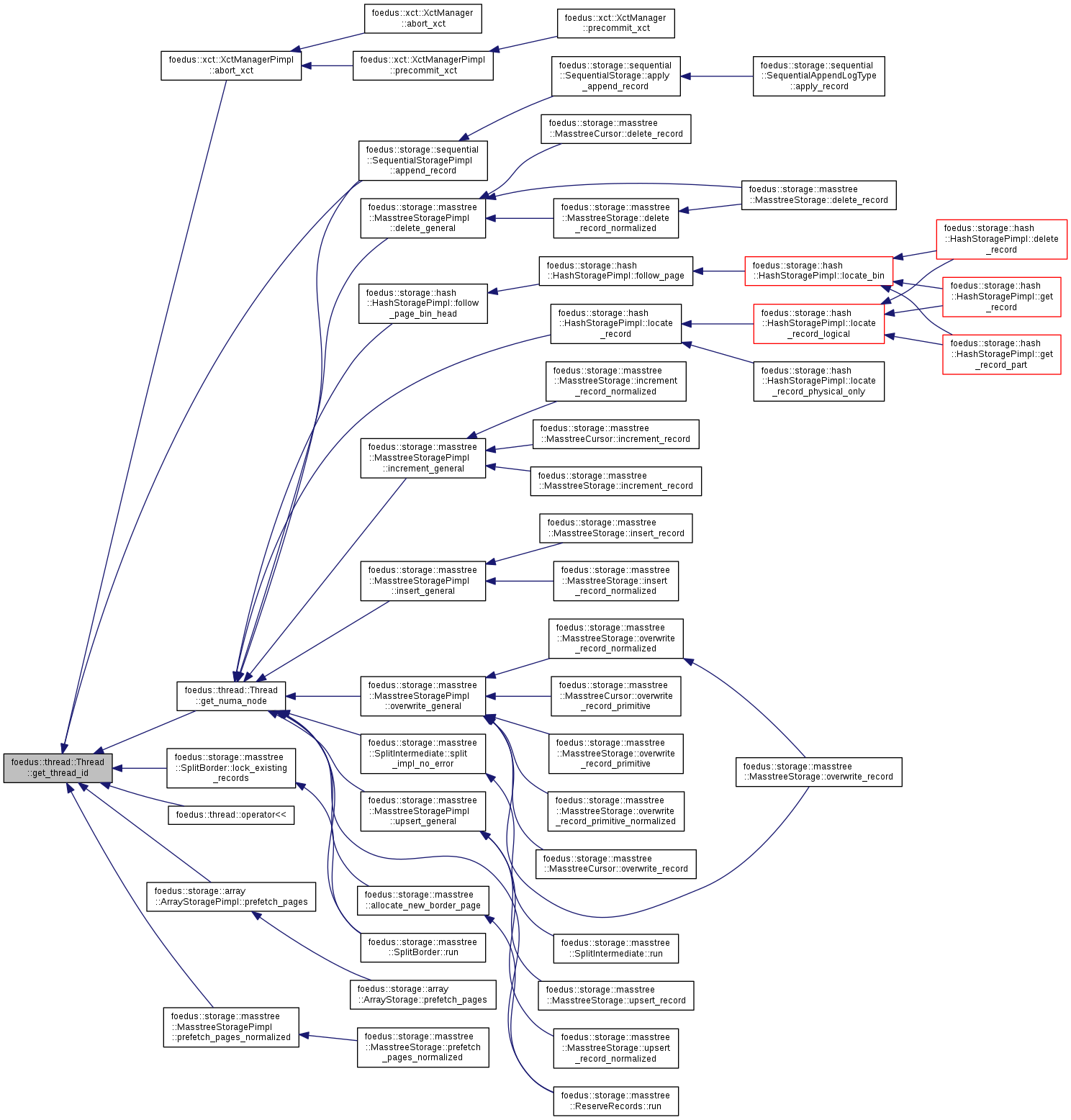

References foedus::thread::decompose_numa_node(), and get_thread_id().

Referenced by foedus::storage::masstree::allocate_new_border_page(), foedus::storage::sequential::SequentialStoragePimpl::append_record(), foedus::storage::masstree::MasstreeStoragePimpl::delete_general(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::masstree::MasstreeStoragePimpl::increment_general(), foedus::storage::masstree::MasstreeStoragePimpl::insert_general(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::masstree::MasstreeStoragePimpl::overwrite_general(), foedus::storage::masstree::SplitBorder::run(), foedus::storage::masstree::ReserveRecords::run(), foedus::storage::masstree::SplitIntermediate::split_impl_no_error(), and foedus::storage::masstree::MasstreeStoragePimpl::upsert_general().

| ThreadPimpl * foedus::thread::Thread::get_pimpl | ( | ) | const | inline |

Returns the pimpl of this object.

Use it only when you know what you are doing.

Definition at line 366 of file thread.hpp.

| uint64_t foedus::thread::Thread::get_snapshot_cache_hits | ( | ) | const |

[statistics] count of cache hits in snapshot caches

Definition at line 62 of file thread.cpp.

References foedus::thread::ThreadPimpl::control_block_, and foedus::thread::ThreadControlBlock::stat_snapshot_cache_hits_.

| uint64_t foedus::thread::Thread::get_snapshot_cache_misses | ( | ) | const |

[statistics] count of cache misses in snapshot caches

Definition at line 66 of file thread.cpp.

References foedus::thread::ThreadPimpl::control_block_, and foedus::thread::ThreadControlBlock::stat_snapshot_cache_misses_.

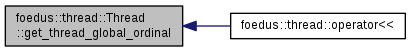

| ThreadGlobalOrdinal foedus::thread::Thread::get_thread_global_ordinal | ( | ) | const |

Definition at line 54 of file thread.cpp.

References foedus::thread::ThreadPimpl::global_ordinal_.

Referenced by foedus::thread::operator<<().

| ThreadId foedus::thread::Thread::get_thread_id | ( | ) | const |

Definition at line 53 of file thread.cpp.

References foedus::thread::ThreadPimpl::id_.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::storage::sequential::SequentialStoragePimpl::append_record(), get_numa_node(), foedus::storage::masstree::SplitBorder::lock_existing_records(), foedus::thread::operator<<(), foedus::storage::array::ArrayStoragePimpl::prefetch_pages(), and foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized().

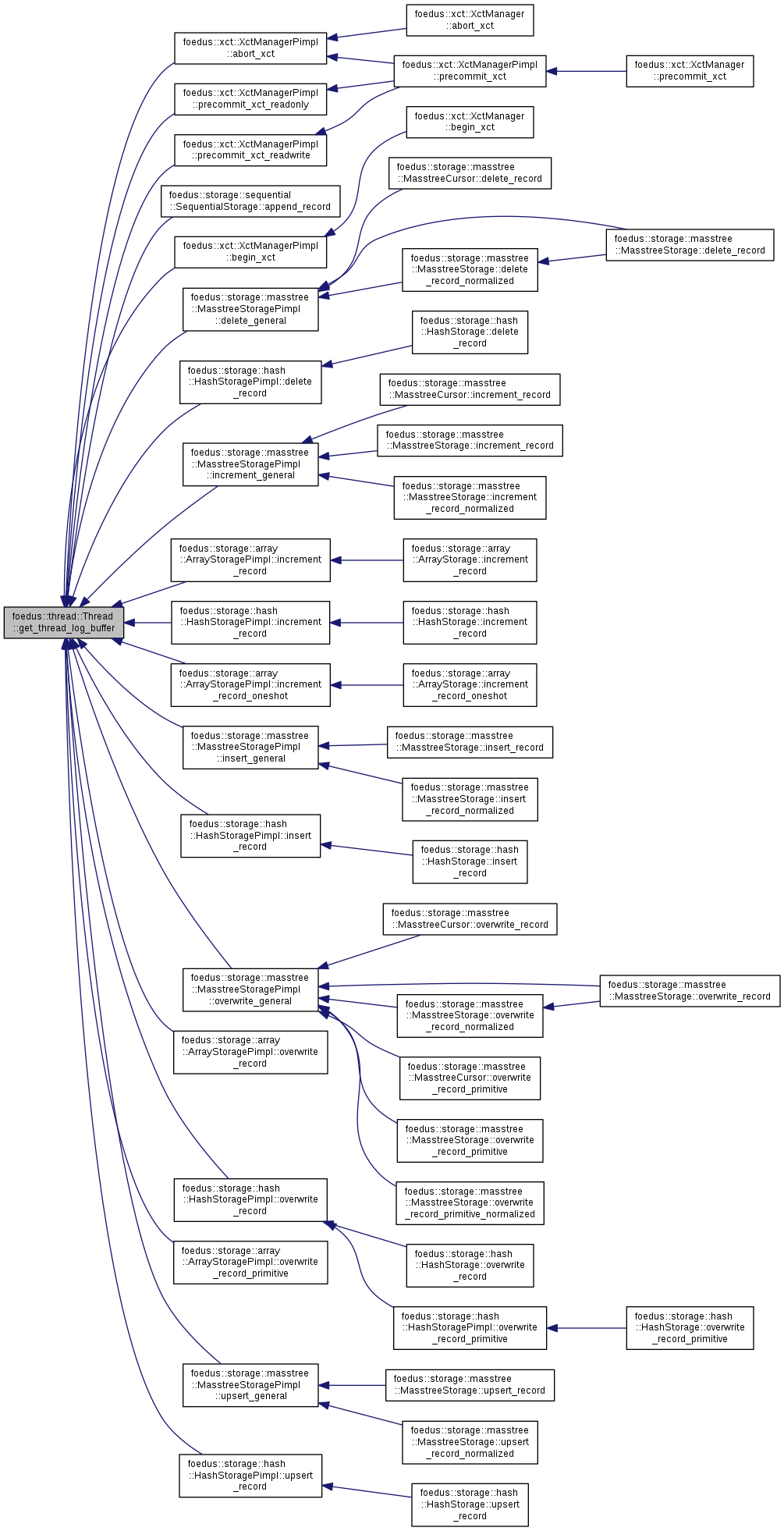

| log::ThreadLogBuffer & foedus::thread::Thread::get_thread_log_buffer | ( | ) |

Returns the private log buffer for this thread.

Definition at line 78 of file thread.cpp.

References foedus::thread::ThreadPimpl::log_buffer_.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::storage::sequential::SequentialStorage::append_record(), foedus::xct::XctManagerPimpl::begin_xct(), foedus::storage::masstree::MasstreeStoragePimpl::delete_general(), foedus::storage::hash::HashStoragePimpl::delete_record(), foedus::storage::masstree::MasstreeStoragePimpl::increment_general(), foedus::storage::array::ArrayStoragePimpl::increment_record(), foedus::storage::hash::HashStoragePimpl::increment_record(), foedus::storage::array::ArrayStoragePimpl::increment_record_oneshot(), foedus::storage::masstree::MasstreeStoragePimpl::insert_general(), foedus::storage::hash::HashStoragePimpl::insert_record(), foedus::storage::masstree::MasstreeStoragePimpl::overwrite_general(), foedus::storage::array::ArrayStoragePimpl::overwrite_record(), foedus::storage::hash::HashStoragePimpl::overwrite_record(), foedus::storage::array::ArrayStoragePimpl::overwrite_record_primitive(), foedus::xct::XctManagerPimpl::precommit_xct_readonly(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), foedus::storage::masstree::MasstreeStoragePimpl::upsert_general(), and foedus::storage::hash::HashStoragePimpl::upsert_record().

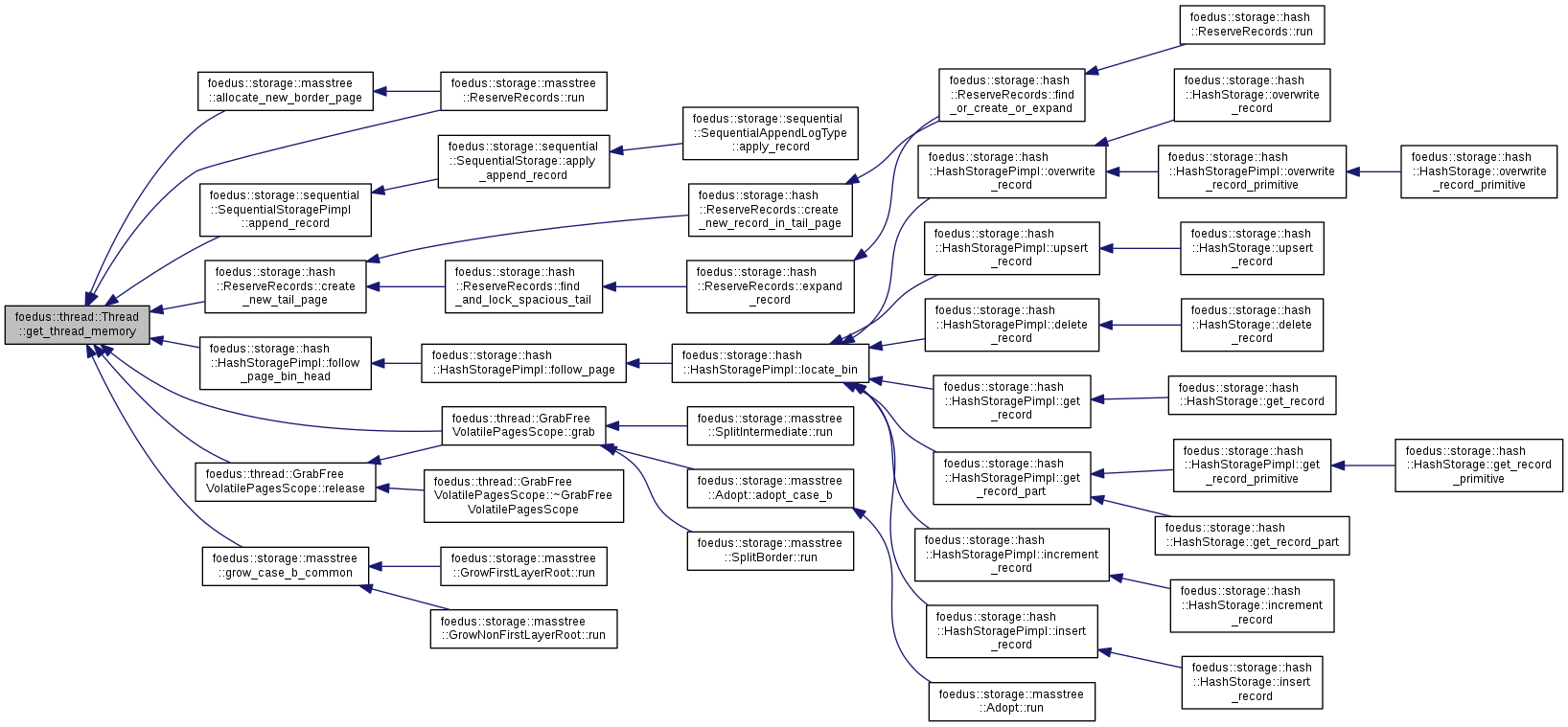

| memory::NumaCoreMemory * foedus::thread::Thread::get_thread_memory | ( | ) | const |

Returns the private memory repository of this thread.

Definition at line 57 of file thread.cpp.

References foedus::thread::ThreadPimpl::core_memory_.

Referenced by foedus::storage::masstree::allocate_new_border_page(), foedus::storage::sequential::SequentialStoragePimpl::append_record(), foedus::storage::hash::ReserveRecords::create_new_tail_page(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::thread::GrabFreeVolatilePagesScope::grab(), foedus::storage::masstree::grow_case_b_common(), foedus::thread::GrabFreeVolatilePagesScope::release(), and foedus::storage::masstree::ReserveRecords::run().

|

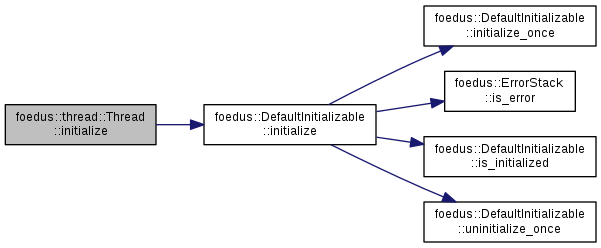

overridevirtual |

Acquires resources in this object, usually called right after constructor.

If and only if the return value is not an error, is_initialized() will return TRUE. This method is usually not idempotent, but some implementations may choose to be; in that case, the implementation class should state that it is idempotent. This method is responsible for releasing all acquired resources when initialization fails. This method itself is NOT thread-safe. Do not call it in a racy situation.

Implements foedus::Initializable.

Definition at line 45 of file thread.cpp.

References CHECK_ERROR, foedus::DefaultInitializable::initialize(), and foedus::kRetOk.

| ErrorCode foedus::thread::Thread::install_a_volatile_page | ( | storage::DualPagePointer * | pointer, |

| storage::Page ** | installed_page | ||

| ) |

Installs a volatile page to the given dual pointer as a copy of the snapshot page.

| [in,out] | pointer | dual pointer. volatile pointer will be modified. |

| [out] | installed_page | physical pointer to the installed volatile page. This might point to a page installed by a concurrent thread. |

This is called when a dual pointer has only a snapshot pointer, in other words it is "clean", to create a volatile version for modification.

Definition at line 107 of file thread.cpp.

References foedus::thread::ThreadPimpl::install_a_volatile_page().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_follow(), and foedus::storage::array::ArrayStoragePimpl::prefetch_pages_recurse().
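The note that installed_page "might point to a page installed by a concurrent thread" suggests a compare-and-swap style installation. The following is a minimal sketch of that idea, not the FOEDUS implementation: `DualPage`, `SketchDualPointer`, and `sketch_install_volatile()` are hypothetical stand-ins, and the use of `std::atomic` with heap allocation is an assumption made only to keep the example self-contained.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-ins for illustration; these are NOT the FOEDUS types.
struct DualPage { char data[64]; };

struct SketchDualPointer {
  const DualPage* snapshot = nullptr;             // read-only snapshot version
  std::atomic<DualPage*> volatile_page{nullptr};  // modifiable version, absent while "clean"
};

// Returns the volatile page that ends up installed. This is either our own
// copy of the snapshot page or the copy a concurrent thread installed first.
// Releasing the installed page is out of scope for this sketch.
inline DualPage* sketch_install_volatile(SketchDualPointer* pointer) {
  assert(pointer != nullptr && pointer->snapshot != nullptr);
  DualPage* existing = pointer->volatile_page.load(std::memory_order_acquire);
  if (existing != nullptr) {
    return existing;  // someone already installed a volatile version
  }
  DualPage* copy = new DualPage(*pointer->snapshot);  // copy of the snapshot page
  DualPage* expected = nullptr;
  if (pointer->volatile_page.compare_exchange_strong(
          expected, copy, std::memory_order_acq_rel, std::memory_order_acquire)) {
    return copy;  // we won the race
  }
  delete copy;      // we lost the race; discard our copy
  return expected;  // the winner's page, loaded by the failed CAS
}
```

Calling `sketch_install_volatile()` twice on the same pointer returns the same page both times, which mirrors how callers of the real method can use installed_page without knowing which thread installed it.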

| bool foedus::thread::Thread::is_initialized | ( | ) | const | override virtual |

Returns whether the object has been already initialized or not.

Implements foedus::Initializable.

Definition at line 49 of file thread.cpp.

References foedus::DefaultInitializable::is_initialized().

| bool foedus::thread::Thread::is_running_xct | ( | ) | const |

Returns if this thread is running an active transaction.

Definition at line 76 of file thread.cpp.

References foedus::thread::ThreadPimpl::current_xct_, and foedus::xct::Xct::is_active().

| ErrorCode foedus::thread::Thread::read_a_snapshot_page | ( | storage::SnapshotPagePointer | page_id, |

| storage::Page * | buffer | ||

| ) |

Read a snapshot page using the thread-local file descriptor set.

Definition at line 84 of file thread.cpp.

References foedus::thread::ThreadPimpl::read_a_snapshot_page().

| ErrorCode foedus::thread::Thread::read_snapshot_pages | ( | storage::SnapshotPagePointer | page_id_begin, |

| uint32_t | page_count, | ||

| storage::Page * | buffer | ||

| ) |

Read contiguous pages in one shot.

Otherwise the same as read_a_snapshot_page().

Definition at line 89 of file thread.cpp.

References foedus::thread::ThreadPimpl::read_snapshot_pages().
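To illustrate why reading contiguous pages in one shot differs from repeated single-page reads, here is a rough sketch over a standard input stream. Everything in it is an assumption for illustration: the 4096-byte page size, the mapping of a page ID to a byte offset, and the names `SnapPage`, `sketch_read_pages()`, and `sketch_read_a_page()`. The real method reads through the thread-local file descriptor set instead.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <istream>
#include <sstream>  // only for the in-memory stream mentioned in the usage note
#include <string>

constexpr std::size_t kSketchPageSize = 4096;  // assumed page size

// Hypothetical page type for this sketch only.
struct SnapPage { char data[kSketchPageSize]; };

// Reads page_count contiguous pages with a single seek and a single read.
// Assumes page N starts at byte offset N * kSketchPageSize.
inline bool sketch_read_pages(std::istream& in, std::uint64_t page_id_begin,
                              std::uint32_t page_count, SnapPage* buffer) {
  assert(buffer != nullptr);
  in.seekg(static_cast<std::streamoff>(page_id_begin * kSketchPageSize));
  in.read(reinterpret_cast<char*>(buffer),
          static_cast<std::streamsize>(page_count * kSketchPageSize));
  return static_cast<bool>(in);  // false if the range was not fully readable
}

// The single-page variant is the same operation with a count of one.
inline bool sketch_read_a_page(std::istream& in, std::uint64_t page_id,
                               SnapPage* buffer) {
  return sketch_read_pages(in, page_id, 1, buffer);
}
```

For a quick experiment, a `std::istringstream` built from a `std::string` of three pages can serve as the file; `sketch_read_pages(in, 1, 2, buf)` then fills two pages with one I/O call.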

| void foedus::thread::Thread::reset_snapshot_cache_counts | ( | ) | const |

[statistics] Resets the two snapshot cache counters, stat_snapshot_cache_hits_ and stat_snapshot_cache_misses_.

Definition at line 70 of file thread.cpp.

References foedus::thread::ThreadPimpl::control_block_, foedus::thread::ThreadControlBlock::stat_snapshot_cache_hits_, and foedus::thread::ThreadControlBlock::stat_snapshot_cache_misses_.

| storage::Page * foedus::thread::Thread::resolve | ( | storage::VolatilePagePointer | ptr | ) | const |

Shorthand for get_global_volatile_page_resolver().resolve_offset().

Definition at line 129 of file thread.cpp.

References get_global_volatile_page_resolver(), and foedus::memory::GlobalVolatilePageResolver::resolve_offset().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::find_border_physical(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), resolve_cast(), and foedus::storage::masstree::verify_page_basic().

| storage::Page * foedus::thread::Thread::resolve | ( | memory::PagePoolOffset | offset | ) | const |

Shorthand for get_local_volatile_page_resolver().resolve_offset().

Definition at line 135 of file thread.cpp.

References get_local_volatile_page_resolver(), and foedus::memory::LocalPageResolver::resolve_offset().

|

inline |

resolve() plus reinterpret_cast

Definition at line 110 of file thread.hpp.

References resolve().

Referenced by foedus::storage::masstree::Adopt::adopt_case_a(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_follow(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized_recurse(), foedus::storage::array::ArrayStoragePimpl::prefetch_pages_recurse(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized(), foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border(), and foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_intermediate().
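As a rough illustration of "resolve() plus reinterpret_cast", the sketch below resolves a pool offset to a page address and then casts it to a typed page. It is not the FOEDUS code: `RawPage`, `SketchTypedPage`, `SketchResolver`, the 4096-byte page size, and the base-plus-offset arithmetic are all assumptions chosen to keep the example self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kPageBytes = 4096;  // assumed page size

// Hypothetical untyped page, standing in for storage::Page.
struct alignas(8) RawPage { unsigned char bytes[kPageBytes]; };
// Hypothetical stand-in for memory::PagePoolOffset.
using PoolOffset = std::uint32_t;

// Hypothetical typed page layout occupying exactly one page.
struct SketchTypedPage {
  std::uint64_t header;
  unsigned char body[kPageBytes - sizeof(std::uint64_t)];
};
static_assert(sizeof(SketchTypedPage) == sizeof(RawPage), "typed page must fill one page");

class SketchResolver {
 public:
  SketchResolver(RawPage* base, PoolOffset end) : base_(base), end_(end) {}

  // Offset-to-pointer resolution: the page pool is treated as one array.
  RawPage* resolve(PoolOffset offset) const {
    assert(offset < end_);
    return base_ + offset;
  }

  // resolve() plus reinterpret_cast. The caller is responsible for knowing
  // that the page at this offset really holds a P.
  template <typename P>
  P* resolve_cast(PoolOffset offset) const {
    return reinterpret_cast<P*>(resolve(offset));
  }

 private:
  RawPage* base_;
  PoolOffset end_;
};
```

The cast variant saves a `reinterpret_cast` at every call site, which matters in storage code that resolves typed pages many times per operation.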

|

inline |

Definition at line 116 of file thread.hpp.

References resolve().

| storage::Page * foedus::thread::Thread::resolve_newpage | ( | storage::VolatilePagePointer | ptr | ) | const |

Shorthand for get_global_volatile_page_resolver().resolve_offset_newpage().

Definition at line 132 of file thread.cpp.

References get_global_volatile_page_resolver(), and foedus::memory::GlobalVolatilePageResolver::resolve_offset_newpage().

Referenced by resolve_newpage_cast().

| storage::Page * foedus::thread::Thread::resolve_newpage | ( | memory::PagePoolOffset | offset | ) | const |

Shorthand for get_local_volatile_page_resolver().resolve_offset_newpage().

Definition at line 138 of file thread.cpp.

References get_local_volatile_page_resolver(), and foedus::memory::LocalPageResolver::resolve_offset_newpage().

|

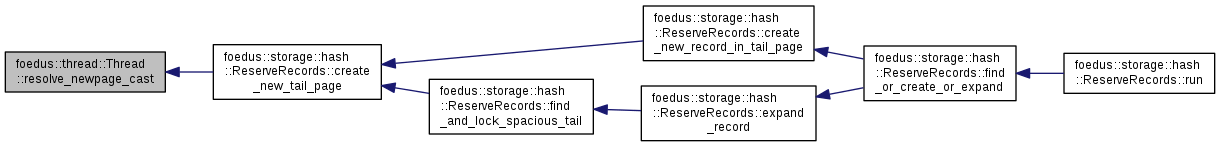

inline |

Definition at line 113 of file thread.hpp.

References resolve_newpage().

Referenced by foedus::storage::hash::ReserveRecords::create_new_tail_page().

|

inline |

Definition at line 119 of file thread.hpp.

References resolve_newpage().

| ErrorCode foedus::thread::Thread::run_nested_sysxct | ( | xct::SysxctFunctor * | functor, |

| uint32_t | max_retries = 0 | ||

| ) |

Runs a system transaction (sysxct) nested under this thread.

This is one of the sysxct-related methods, all of which forward from Thread to ThreadPimpl.

Definition at line 952 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::run_nested_sysxct().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::fatify_first_root_double(), foedus::storage::masstree::MasstreeStoragePimpl::find_border_physical(), foedus::storage::masstree::MasstreeStoragePimpl::follow_layer(), foedus::storage::masstree::MasstreeStoragePimpl::get_first_root(), foedus::storage::hash::HashStoragePimpl::insert_record(), foedus::storage::hash::HashStoragePimpl::locate_record_reserve_physical(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized(), and foedus::storage::hash::HashStoragePimpl::upsert_record().
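The max_retries parameter suggests a bounded retry loop around the functor. The sketch below shows one plausible shape for such a loop. It is an assumption, not the documented behavior: which error codes are retried and how the real implementation backs off are not stated here, and `SketchCode` and `sketch_run_with_retries()` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical result codes; the real method returns foedus::ErrorCode.
enum class SketchCode { kOk, kRaceAbort, kFatal };

// Runs the functor once, then retries up to max_retries more times, but only
// while it reports a retryable race abort (an assumption for this sketch).
inline SketchCode sketch_run_with_retries(
    const std::function<SketchCode()>& functor, std::uint32_t max_retries = 0) {
  assert(functor);  // an empty functor cannot be run
  std::uint32_t attempt = 0;
  while (true) {
    const SketchCode code = functor();
    if (code != SketchCode::kRaceAbort || attempt >= max_retries) {
      return code;  // success, non-retryable error, or retries exhausted
    }
    ++attempt;
  }
}
```

With the default of 0 the functor runs exactly once and any failure is returned to the caller, which then decides whether to abort the enclosing transaction.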

| ErrorCode foedus::thread::Thread::sysxct_batch_page_locks | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| uint32_t | lock_count, | ||

| storage::Page ** | pages | ||

| ) |

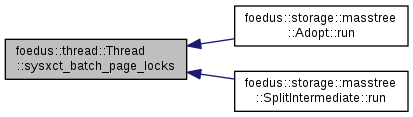

Takes a bunch of page locks for a sysxct running under this thread.

Definition at line 971 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::sysxct_batch_page_locks().

Referenced by foedus::storage::masstree::Adopt::run(), and foedus::storage::masstree::SplitIntermediate::run().
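A common way to take several locks at once without deadlocking is to acquire them in one global order, for example by address. The sketch below shows that general technique. Whether and how the real method orders its locks is not stated on this page, so treat the ordering as an assumption; `LockablePage`, `sketch_batch_page_locks()`, and `sketch_batch_page_unlocks()` are hypothetical names, and `std::mutex` stands in for the actual page lock.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <functional>
#include <mutex>
#include <vector>

// Hypothetical page with an embedded lock; NOT the FOEDUS page layout.
struct LockablePage { std::mutex lock; };

// Locks all requested pages in ascending address order and returns them in
// the order they were locked. Duplicate requests are locked only once.
inline std::vector<LockablePage*> sketch_batch_page_locks(
    LockablePage** pages, std::uint32_t lock_count) {
  assert(pages != nullptr || lock_count == 0);
  std::vector<LockablePage*> ordered(pages, pages + lock_count);
  std::sort(ordered.begin(), ordered.end(), std::less<LockablePage*>());
  ordered.erase(std::unique(ordered.begin(), ordered.end()), ordered.end());
  for (LockablePage* page : ordered) {
    page->lock.lock();
  }
  return ordered;
}

// Releases the locks in reverse acquisition order.
inline void sketch_batch_page_unlocks(const std::vector<LockablePage*>& locked) {
  for (auto it = locked.rbegin(); it != locked.rend(); ++it) {
    (*it)->lock.unlock();
  }
}
```

Because every caller sorts the same way, two threads that request overlapping page sets can never each hold a lock the other is waiting for.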

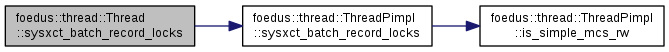

| ErrorCode foedus::thread::Thread::sysxct_batch_record_locks | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::VolatilePagePointer | page_id, | ||

| uint32_t | lock_count, | ||

| xct::RwLockableXctId ** | locks | ||

| ) |

Takes a bunch of locks in the same page for a sysxct running under this thread.

Definition at line 961 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::sysxct_batch_record_locks().

Referenced by foedus::storage::masstree::SplitBorder::lock_existing_records().

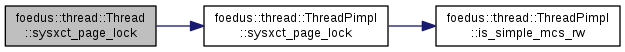

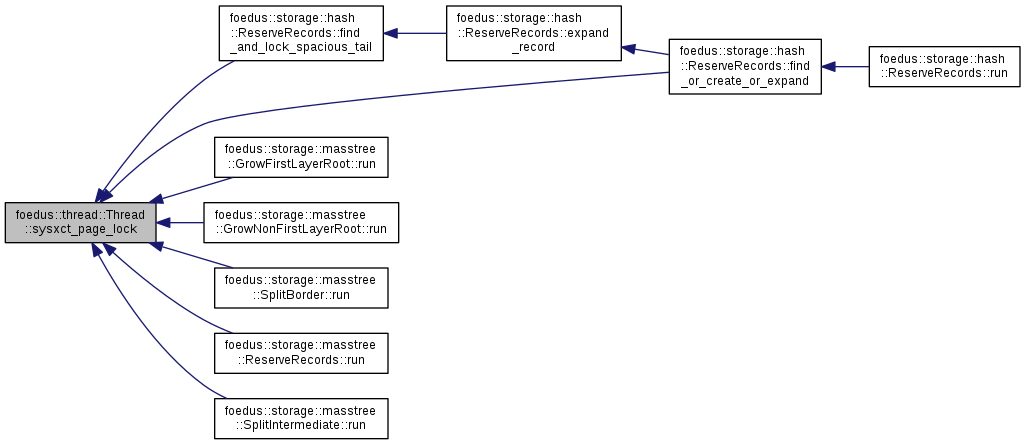

| ErrorCode foedus::thread::Thread::sysxct_page_lock | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::Page * | page | ||

| ) |

Takes a page lock for a sysxct running under this thread.

Definition at line 968 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::sysxct_page_lock().

Referenced by foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::storage::masstree::GrowFirstLayerRoot::run(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), foedus::storage::masstree::SplitBorder::run(), foedus::storage::masstree::ReserveRecords::run(), and foedus::storage::masstree::SplitIntermediate::run().

| ErrorCode foedus::thread::Thread::sysxct_record_lock | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::VolatilePagePointer | page_id, | ||

| xct::RwLockableXctId * | lock | ||

| ) |

Takes a record lock for a sysxct running under this thread.

Definition at line 955 of file thread_pimpl.cpp.

References foedus::thread::ThreadPimpl::sysxct_record_lock().

Referenced by foedus::storage::hash::ReserveRecords::expand_record(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), and foedus::storage::masstree::ReserveRecords::run().

|

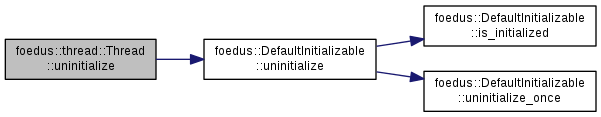

overridevirtual |

An idempotent method to release all resources of this object, if any.

After this method, is_initialized() will return FALSE. Whether this method encounters an error or not, the implementation should make the best effort to release as many resources as possible. In other words, do not leak all resources because of one issue. This method itself is NOT thread-safe. Do not call it in a racy situation.

Implements foedus::Initializable.

Definition at line 50 of file thread.cpp.

References foedus::DefaultInitializable::uninitialize().
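The contract described for initialize(), is_initialized(), and uninitialize() can be sketched with a toy class. This is only an illustration of the stated rules (release everything when initialization fails, keep uninitialize() idempotent); `SketchInitializable` and its `simulate_failure` flag are hypothetical, and the real methods return ErrorStack rather than bool.

```cpp
#include <cassert>
#include <memory>

// Hypothetical class modeling the lifecycle contract; NOT foedus::Initializable.
class SketchInitializable {
 public:
  // Not idempotent: calling it on an initialized object is a usage error.
  bool initialize(bool simulate_failure = false) {
    assert(!initialized_);
    buffer_.reset(new int[16]);  // acquire a resource
    if (simulate_failure) {
      buffer_.reset();           // release what was acquired before failing
      return false;
    }
    initialized_ = true;         // true if and only if no error occurred
    return true;
  }

  bool is_initialized() const { return initialized_; }

  // Idempotent: safe to call repeatedly, releases whatever is still held.
  void uninitialize() {
    buffer_.reset();
    initialized_ = false;
  }

  bool holds_resources() const { return buffer_ != nullptr; }

 private:
  std::unique_ptr<int[]> buffer_;
  bool initialized_ = false;
};
```

A caller would typically pair one successful `initialize()` with one `uninitialize()`, and may call `uninitialize()` again on cleanup paths without harm.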

|

friend |

Definition at line 118 of file thread.cpp.