
libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

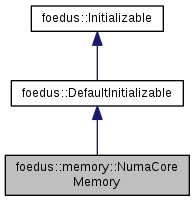

Repository of memories dynamically acquired within one CPU core (thread). More...

Repository of memories dynamically acquired within one CPU core (thread).

One NumaCoreMemory corresponds to one foedus::thread::Thread. Each Thread exclusively accesses its own NumaCoreMemory, so it needs no synchronization and causes no cache misses or cache-line ping-pong with other threads. All memory here is allocated and freed via numa_alloc_interleaved(), numa_alloc_onnode(), and numa_free(), unless the user specifies not to use them.

Definition at line 46 of file numa_core_memory.hpp.

#include <numa_core_memory.hpp>
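The ownership rule described above (one repository per thread, touched only by that thread) can be illustrated with a small standard-C++ sketch. This is not FOEDUS code: `CoreLocalScratch` and `run_workers` are hypothetical names, ordinary heap memory stands in for numa_alloc_onnode(), and a 64-byte cache line is assumed.

```cpp
#include <cassert>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical per-core repository: one instance per worker thread.
// alignas(64) assumes 64-byte cache lines and keeps neighbouring
// instances off each other's lines (avoids false sharing).
struct alignas(64) CoreLocalScratch {
  std::vector<std::uint64_t> buffer;  // private scratch space
  std::uint64_t ops = 0;              // private counter, never shared
};

// Runs `thread_count` workers; worker i touches only scratch[i], so no
// mutex or atomic is needed. Returns the sum of all per-thread counters.
std::uint64_t run_workers(unsigned thread_count, unsigned ops_per_thread) {
  std::vector<CoreLocalScratch> scratch(thread_count);
  std::vector<std::thread> workers;
  for (unsigned i = 0; i < thread_count; ++i) {
    workers.emplace_back([&scratch, i, ops_per_thread] {
      CoreLocalScratch& mine = scratch[i];  // exclusive to this thread
      for (unsigned k = 0; k < ops_per_thread; ++k) {
        mine.buffer.push_back(k);
        ++mine.ops;
      }
    });
  }
  // join() synchronizes with each worker, so reading the counters is safe.
  for (std::thread& t : workers) t.join();
  std::uint64_t total = 0;
  for (const CoreLocalScratch& s : scratch) total += s.ops;
  assert(total == std::uint64_t{thread_count} * ops_per_thread);
  return total;
}
```

The only cross-thread communication is the final join(); everything else is private to one thread, which is the property the class description relies on.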

Classes

| Type | Name | Description |
|---|---|---|
| struct | SmallThreadLocalMemoryPieces | Packs pointers to pieces of small_thread_local_memory_. |

Static Public Member Functions

| Return type | Function |
|---|---|
| static uint64_t | calculate_local_small_memory_size(const EngineOptions &options) |

|

delete |

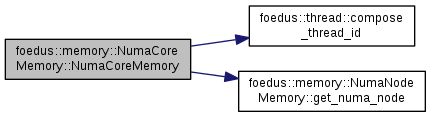

foedus::memory::NumaCoreMemory::NumaCoreMemory(Engine *engine, NumaNodeMemory *node_memory, thread::ThreadId core_id)

Definition at line 39 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::thread::compose_thread_id(), and foedus::memory::NumaNodeMemory::get_numa_node().

|

static |

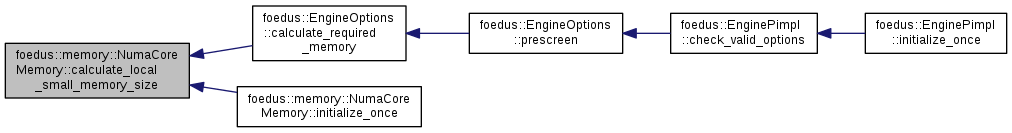

Definition at line 62 of file numa_core_memory.cpp.

References foedus::thread::ThreadOptions::group_count_, foedus::xct::Xct::kMaxPageVersionSets, foedus::xct::Xct::kMaxPointerSets, foedus::xct::XctOptions::max_lock_free_read_set_size_, foedus::xct::XctOptions::max_lock_free_write_set_size_, foedus::xct::XctOptions::max_read_set_size_, foedus::xct::XctOptions::max_write_set_size_, foedus::EngineOptions::thread_, foedus::thread::ThreadOptions::thread_count_per_group_, and foedus::EngineOptions::xct_.

Referenced by foedus::EngineOptions::calculate_required_memory(), and initialize_once().
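The References list shows that this size is derived from the transaction read/write-set limits and the thread-group options. A minimal sketch of that kind of computation follows, assuming each array is sized as capacity times entry size and padded to a 4 KiB boundary. The structs, the entry sizes, the per-node term, and the padding are all hypothetical; this is not the actual FOEDUS formula, and the fixed-size sets (kMaxPointerSets, kMaxPageVersionSets) are omitted.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical subset of the options the real function reads.
struct SmallMemoryOptionsSketch {
  std::uint32_t max_read_set_size;
  std::uint32_t max_write_set_size;
  std::uint32_t max_lock_free_read_set_size;
  std::uint32_t max_lock_free_write_set_size;
  std::uint16_t group_count;  // number of thread groups (NUMA nodes)
};

// Hypothetical per-entry sizes in bytes.
struct EntrySizesSketch {
  std::uint64_t read_access, write_access, lock_free_read, lock_free_write;
  std::uint64_t per_node;  // hypothetical fixed cost per node
};

constexpr std::uint64_t kAlign = 4096;  // assumed padding unit (power of two)

// Rounds n up to the next multiple of kAlign.
constexpr std::uint64_t align_up(std::uint64_t n) {
  return (n + kAlign - 1) & ~(kAlign - 1);
}

// Sum of per-array sizes, each padded so every piece carved from the
// single block would start on an aligned boundary.
std::uint64_t small_memory_size_sketch(const SmallMemoryOptionsSketch& o,
                                       const EntrySizesSketch& e) {
  assert(o.group_count > 0);
  std::uint64_t total = 0;
  total += align_up(std::uint64_t{o.max_read_set_size} * e.read_access);
  total += align_up(std::uint64_t{o.max_write_set_size} * e.write_access);
  total += align_up(std::uint64_t{o.max_lock_free_read_set_size} * e.lock_free_read);
  total += align_up(std::uint64_t{o.max_lock_free_write_set_size} * e.lock_free_write);
  total += align_up(std::uint64_t{o.group_count} * e.per_node);
  return total;
}
```

Because the function is static and depends only on options, a caller such as calculate_required_memory() can presumably estimate memory before any thread exists.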

|

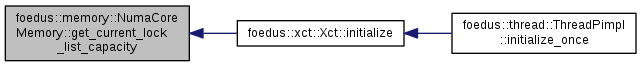

inline |

Definition at line 95 of file numa_core_memory.hpp.

Referenced by foedus::xct::Xct::initialize().

inline

Definition at line 92 of file numa_core_memory.hpp.

Referenced by foedus::xct::Xct::initialize().

|

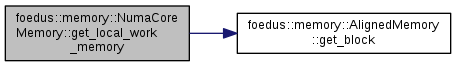

inline |

Definition at line 109 of file numa_core_memory.hpp.

References foedus::memory::AlignedMemory::get_block().

Referenced by foedus::xct::Xct::initialize().

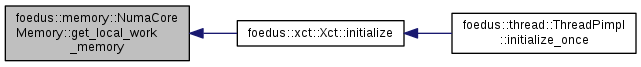

|

inline |

Definition at line 110 of file numa_core_memory.hpp.

References foedus::memory::AlignedMemory::get_size().

Referenced by foedus::xct::Xct::initialize().

inline

Definition at line 64 of file numa_core_memory.hpp.

Referenced by foedus::log::ThreadLogBuffer::initialize_once().

inline

Returns the parent memory repository.

Definition at line 67 of file numa_core_memory.hpp.

Referenced by foedus::thread::Thread::get_node_memory().

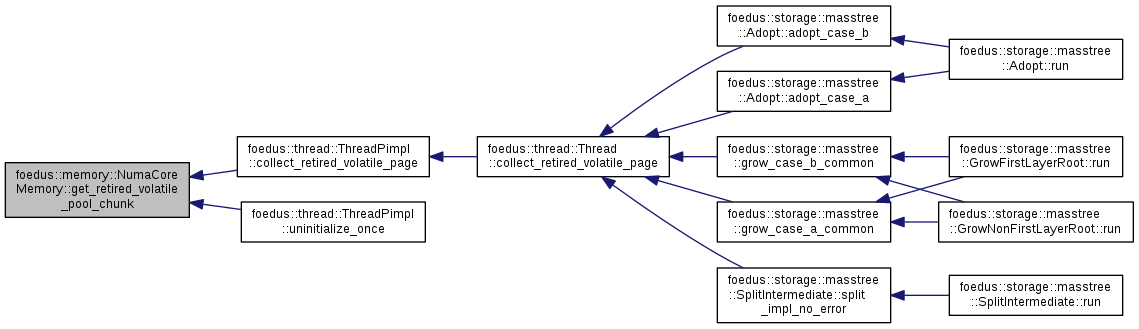

| PagePoolOffsetAndEpochChunk * foedus::memory::NumaCoreMemory::get_retired_volatile_pool_chunk | ( | uint16_t | node | ) |

Definition at line 253 of file numa_core_memory.cpp.

Referenced by foedus::thread::ThreadPimpl::collect_retired_volatile_page(), and foedus::thread::ThreadPimpl::uninitialize_once().
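The signature takes a NUMA node ID and returns a chunk of offsets paired with epochs, which suggests each thread keeps one retired-page chunk per node so that pages can later be returned to the pool of the node that owns them. A hypothetical sketch of that layout follows; the reuse rule (an entry becomes reusable once the current epoch reaches its recorded epoch) and all type names are assumptions for illustration, not taken from the FOEDUS sources.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using PageOffset = std::uint32_t;  // hypothetical stand-in for PagePoolOffset
using EpochValue = std::uint32_t;  // hypothetical stand-in for an epoch

// Hypothetical chunk of pages retired by this thread that belong to one
// NUMA node, each tagged with the epoch from which reuse is safe.
struct RetiredChunkSketch {
  struct Entry {
    PageOffset offset;
    EpochValue safe_epoch;
  };
  std::vector<Entry> entries;
};

// One chunk per NUMA node, owned by a single thread (no locking).
class RetiredPagesSketch {
 public:
  explicit RetiredPagesSketch(std::uint16_t node_count) : chunks_(node_count) {}

  // Same shape as get_retired_volatile_pool_chunk(uint16_t node).
  RetiredChunkSketch* get_chunk(std::uint16_t node) {
    assert(static_cast<std::size_t>(node) < chunks_.size());
    return &chunks_[node];
  }

  // Removes and returns the offsets on `node` whose safe_epoch <= current_epoch.
  std::vector<PageOffset> collect_reusable(std::uint16_t node, EpochValue current_epoch) {
    std::vector<PageOffset> reusable;
    std::vector<RetiredChunkSketch::Entry> still_retired;
    for (const auto& e : get_chunk(node)->entries) {
      if (e.safe_epoch <= current_epoch) {
        reusable.push_back(e.offset);
      } else {
        still_retired.push_back(e);
      }
    }
    get_chunk(node)->entries.swap(still_retired);
    return reusable;
  }

 private:
  std::vector<RetiredChunkSketch> chunks_;
};
```

Keeping the chunks per node means a collected batch can be handed back to a single node's pool in one call.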

|

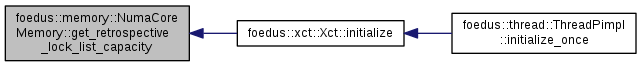

inline |

Definition at line 101 of file numa_core_memory.hpp.

Referenced by foedus::xct::Xct::initialize().

inline

Definition at line 98 of file numa_core_memory.hpp.

Referenced by foedus::xct::Xct::initialize().

inline

Definition at line 105 of file numa_core_memory.hpp.

Referenced by foedus::xct::Xct::initialize().

inline

Definition at line 89 of file numa_core_memory.hpp.

inline

Definition at line 88 of file numa_core_memory.hpp.

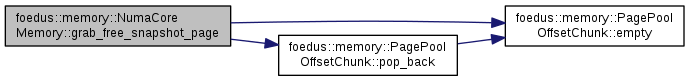

| PagePoolOffset foedus::memory::NumaCoreMemory::grab_free_snapshot_page | ( | ) |

Same as grab_free_volatile_page(), except that it acquires a snapshot page.

Definition at line 221 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::memory::PagePoolOffsetChunk::empty(), foedus::kErrorCodeOk, foedus::memory::PagePoolOffsetChunk::pop_back(), and UNLIKELY.

Referenced by foedus::thread::ThreadPimpl::on_snapshot_cache_miss().

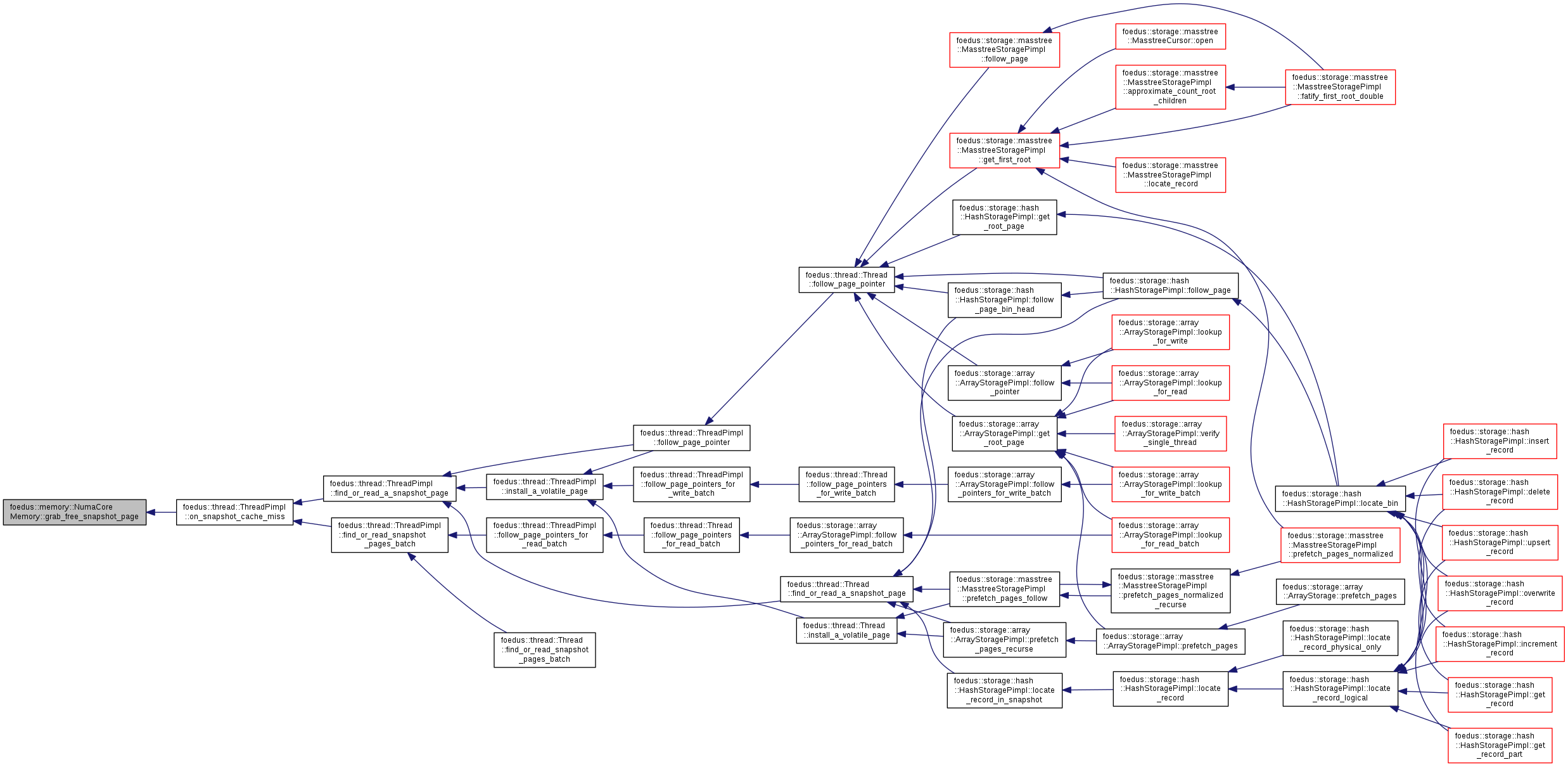

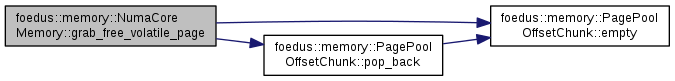

| PagePoolOffset foedus::memory::NumaCoreMemory::grab_free_volatile_page | ( | ) |

Acquires one free volatile page from the local page pool.

To stay simple and fast, this method does not return an error code. Instead, the caller MUST check whether the returned offset is zero, which means no page was acquired.

Definition at line 199 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::memory::PagePoolOffsetChunk::empty(), foedus::kErrorCodeOk, foedus::memory::PagePoolOffsetChunk::pop_back(), and UNLIKELY.

Referenced by foedus::storage::masstree::allocate_new_border_page(), foedus::storage::sequential::SequentialStoragePimpl::append_record(), foedus::thread::ThreadPimpl::follow_page_pointer(), foedus::thread::ThreadPimpl::follow_page_pointers_for_read_batch(), foedus::thread::ThreadPimpl::follow_page_pointers_for_write_batch(), foedus::thread::GrabFreeVolatilePagesScope::grab(), grab_free_volatile_page_pointer(), and foedus::storage::masstree::ReserveRecords::run().
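The description above (a local pool, no error code, zero meaning failure) fits a common pattern: a thread-private cache of free offsets that is refilled in batches from a shared pool. The sketch below assumes that pattern; `SharedPoolSketch` and `LocalPageCacheSketch` are hypothetical, single-threaded stand-ins (the shared pool here has no locking), and the batch size plays the role that PagePool::get_recommended_pages_per_grab() appears to play in the References of initialize_once().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using PageOffset = std::uint32_t;  // hypothetical; 0 means "no page"

// Hypothetical stand-in for a node-level pool (no locking in this sketch).
class SharedPoolSketch {
 public:
  explicit SharedPoolSketch(PageOffset page_count) {
    // Offsets 1..page_count are free; offset 0 is reserved as "null".
    for (PageOffset p = page_count; p >= 1; --p) free_.push_back(p);
  }
  // Moves up to `n` offsets into `out`; returns how many were moved.
  std::size_t grab(std::size_t n, std::vector<PageOffset>* out) {
    std::size_t moved = 0;
    while (moved < n && !free_.empty()) {
      out->push_back(free_.back());
      free_.pop_back();
      ++moved;
    }
    return moved;
  }

 private:
  std::vector<PageOffset> free_;
};

// Thread-private cache in front of the shared pool.
class LocalPageCacheSketch {
 public:
  LocalPageCacheSketch(SharedPoolSketch* pool, std::size_t pages_per_grab)
      : pool_(pool), pages_per_grab_(pages_per_grab) {}

  // Returns a free offset, or 0 if neither the local cache nor the shared
  // pool has a page. No error code: the caller must test for 0.
  PageOffset grab_free_page() {
    if (local_.empty()) {  // rare slow path: refill in one batch
      pool_->grab(pages_per_grab_, &local_);
      if (local_.empty()) return 0;
    }
    PageOffset offset = local_.back();
    local_.pop_back();
    assert(offset != 0);
    return offset;
  }

 private:
  SharedPoolSketch* pool_;
  std::size_t pages_per_grab_;
  std::vector<PageOffset> local_;
};
```

The common path touches only thread-private state; the shared pool is consulted once per batch, which is where any synchronization cost would be paid in a real multi-threaded pool.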

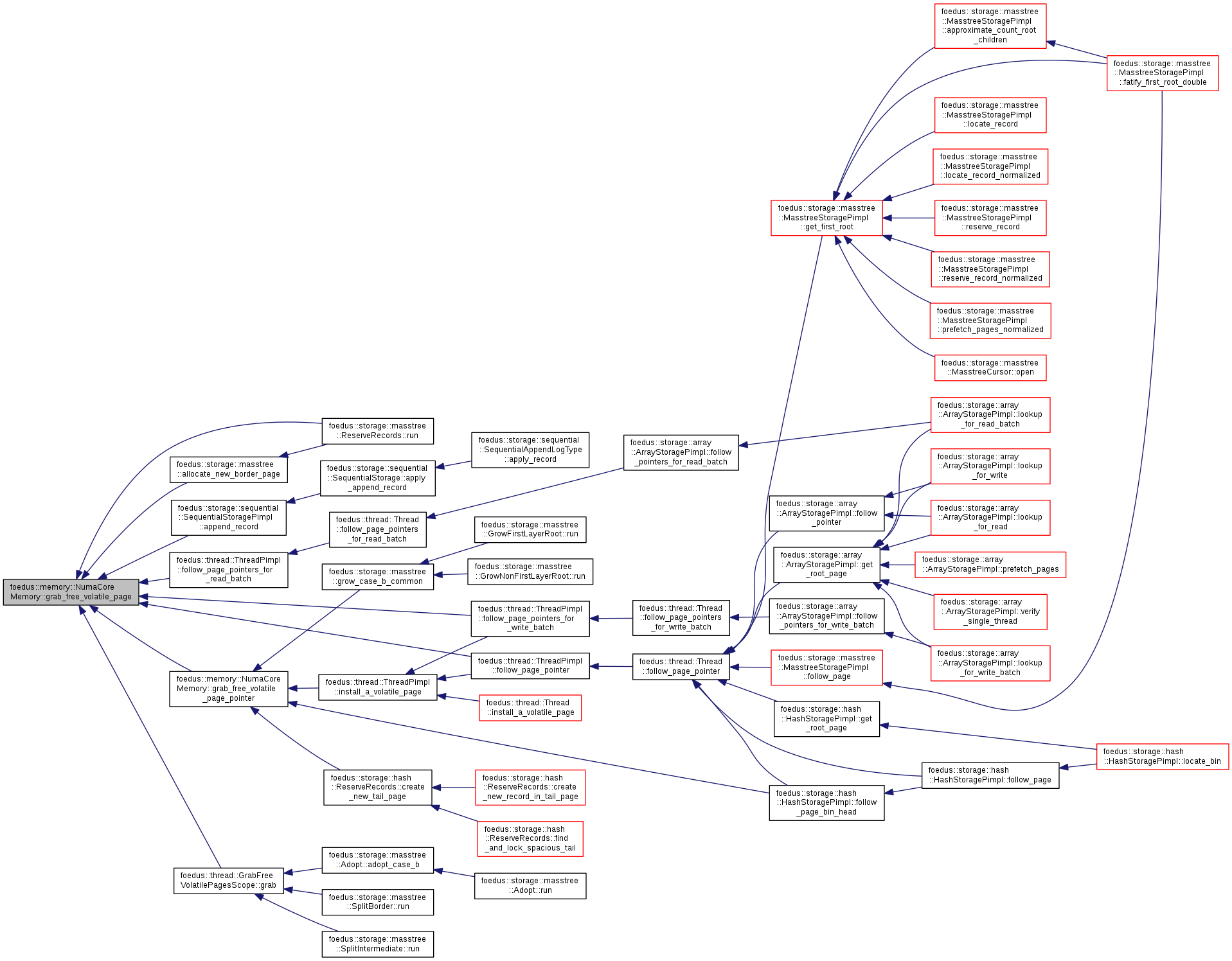

| storage::VolatilePagePointer foedus::memory::NumaCoreMemory::grab_free_volatile_page_pointer | ( | ) |

Wrapper for grab_free_volatile_page().

Definition at line 208 of file numa_core_memory.cpp.

References grab_free_volatile_page(), and foedus::storage::VolatilePagePointer::set().

Referenced by foedus::storage::hash::ReserveRecords::create_new_tail_page(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::masstree::grow_case_b_common(), and foedus::thread::ThreadPimpl::install_a_volatile_page().
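The References list shows that this wrapper calls VolatilePagePointer::set(), presumably to combine the NUMA node with the page offset so the page can later be located in the right node's pool. The sketch below uses a hypothetical 64-bit layout (node in the upper 16 bits, offset in the lower 32 bits); the real VolatilePagePointer layout may differ, and `PackedPagePointerSketch` and `wrap_offset` are illustrative names only.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical packed page pointer: node in bits 48..63, offset in bits 0..31.
struct PackedPagePointerSketch {
  std::uint64_t word = 0;

  void set(std::uint16_t numa_node, std::uint32_t offset) {
    word = (std::uint64_t{numa_node} << 48) | offset;
  }
  std::uint16_t numa_node() const { return static_cast<std::uint16_t>(word >> 48); }
  std::uint32_t offset() const { return static_cast<std::uint32_t>(word); }
  bool is_null() const { return offset() == 0; }  // offset 0 means "no page"
};

// Wraps a raw grab result. An offset of 0 is kept as-is, so is_null()
// still reports that no page was acquired.
PackedPagePointerSketch wrap_offset(std::uint16_t node, std::uint32_t offset) {
  PackedPagePointerSketch ptr;
  ptr.set(node, offset);
  assert(ptr.numa_node() == node && ptr.offset() == offset);  // round trip
  return ptr;
}
```

Callers of such a wrapper would still have to check for a null result, just as callers of grab_free_volatile_page() must check for zero.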

|

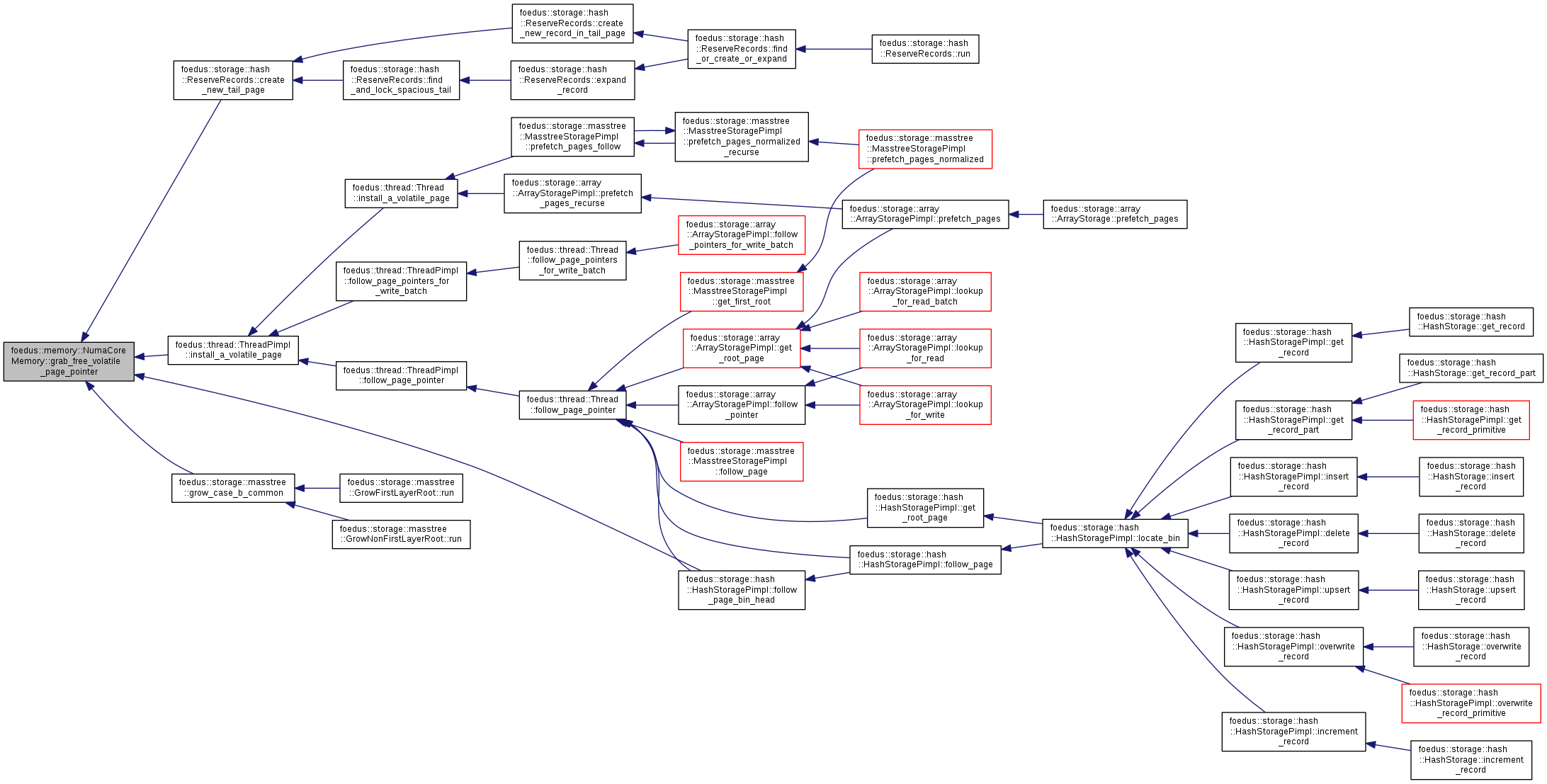

overridevirtual |

Implements foedus::DefaultInitializable.

Definition at line 88 of file numa_core_memory.cpp.

References foedus::memory::NumaNodeMemory::allocate_numa_memory(), ASSERT_ND, calculate_local_small_memory_size(), CHECK_ERROR, foedus::memory::PagePoolOffsetAndEpochChunk::clear(), foedus::memory::AlignedMemory::get_block(), foedus::memory::PagePool::get_free_pool_capacity(), foedus::memory::NumaNodeMemory::get_log_buffer_memory_piece(), foedus::Engine::get_options(), foedus::memory::PagePool::get_recommended_pages_per_grab(), foedus::memory::NumaNodeMemory::get_snapshot_offset_chunk_memory_piece(), foedus::memory::NumaNodeMemory::get_snapshot_pool(), foedus::memory::NumaNodeMemory::get_volatile_offset_chunk_memory_piece(), foedus::memory::NumaNodeMemory::get_volatile_pool(), foedus::memory::PagePool::grab(), foedus::thread::ThreadOptions::group_count_, foedus::xct::Xct::kMaxPageVersionSets, foedus::xct::Xct::kMaxPointerSets, foedus::kRetOk, foedus::EngineOptions::memory_, foedus::memory::MemoryOptions::private_page_pool_initial_grab_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::sysxct_workspace_memory_, foedus::EngineOptions::thread_, foedus::thread::ThreadOptions::thread_count_per_group_, WRAP_ERROR_CODE, foedus::EngineOptions::xct_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_lock_free_read_access_memory_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_lock_free_write_access_memory_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_page_version_memory_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_pointer_access_memory_, foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_read_access_memory_, and foedus::memory::NumaCoreMemory::SmallThreadLocalMemoryPieces::xct_write_access_memory_.
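The References list shows initialize_once() calling calculate_local_small_memory_size() and then filling SmallThreadLocalMemoryPieces fields (read/write sets, lock-free sets, pointer and page-version sets, sysxct workspace). A sketch of the general pattern, one block sliced into consecutive pieces with a cursor, is shown below under the assumption that the pieces are laid out back to back. `PiecesSketch` and `carve_pieces` are hypothetical, cover only three pieces, and omit NUMA allocation.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical pieces carved from one contiguous block, loosely modeled on
// SmallThreadLocalMemoryPieces (field names are illustrative only).
struct PiecesSketch {
  char* read_set = nullptr;
  char* write_set = nullptr;
  char* workspace = nullptr;
};

// Carves `block` into consecutive pieces of the given sizes. Returns false
// if the block is missing or too small. Sizes are assumed pre-aligned.
bool carve_pieces(char* block, std::size_t block_size,
                  std::size_t read_bytes, std::size_t write_bytes,
                  std::size_t workspace_bytes, PiecesSketch* out) {
  const std::size_t needed = read_bytes + write_bytes + workspace_bytes;
  if (block == nullptr || needed > block_size) return false;
  char* cursor = block;
  out->read_set = cursor;
  cursor += read_bytes;
  out->write_set = cursor;
  cursor += write_bytes;
  out->workspace = cursor;
  cursor += workspace_bytes;
  assert(static_cast<std::size_t>(cursor - block) == needed);
  return true;
}
```

One allocation per thread keeps the number of NUMA allocations small; the pieces are just pointers into it.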

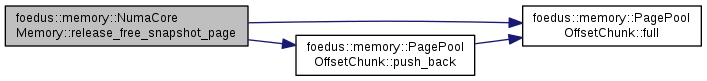

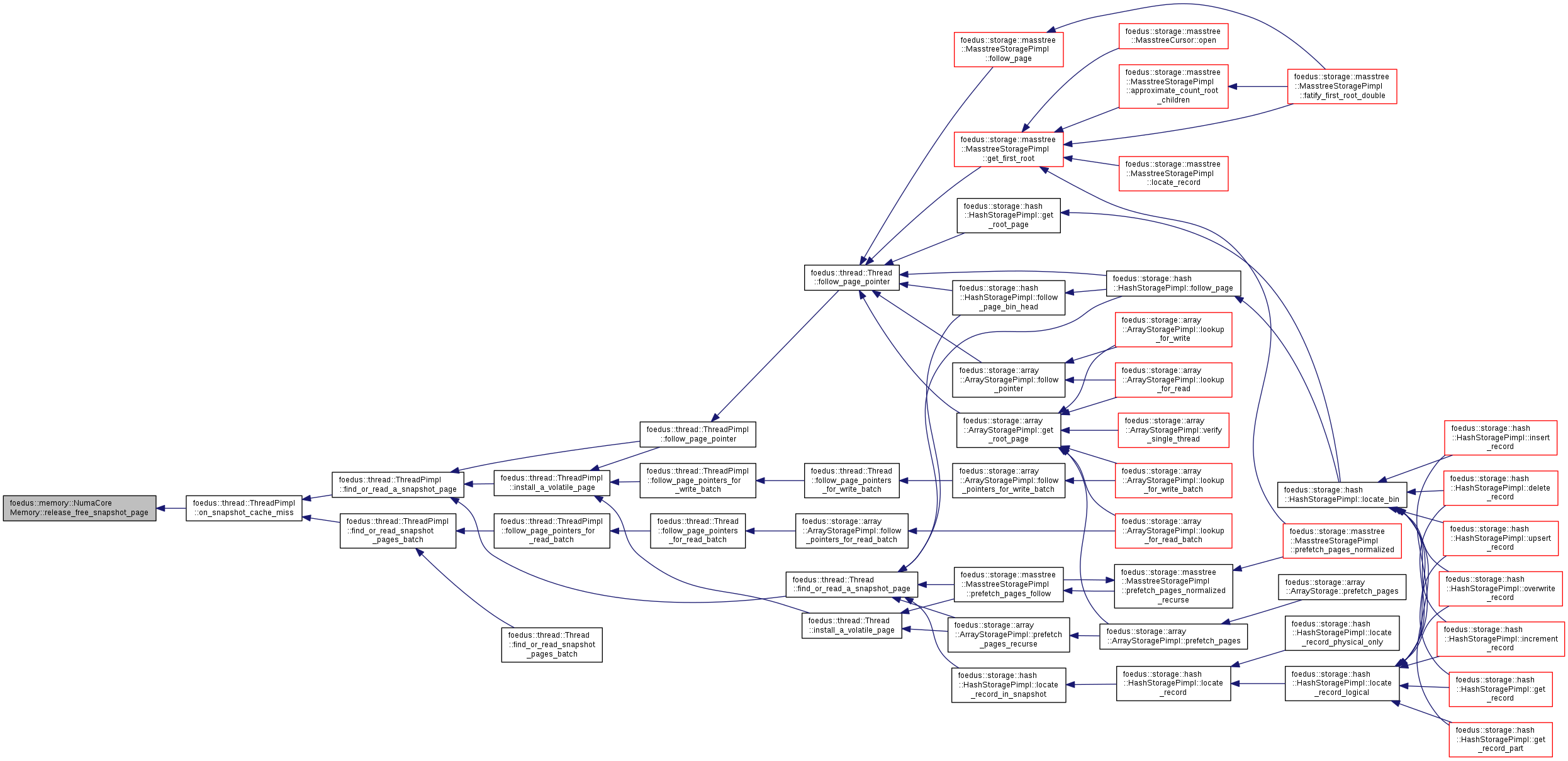

| void foedus::memory::NumaCoreMemory::release_free_snapshot_page | ( | PagePoolOffset | offset | ) |

Same as release_free_volatile_page(), except that it releases a snapshot page.

Definition at line 230 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::memory::PagePoolOffsetChunk::full(), foedus::memory::PagePoolOffsetChunk::push_back(), and UNLIKELY.

Referenced by foedus::thread::ThreadPimpl::on_snapshot_cache_miss().

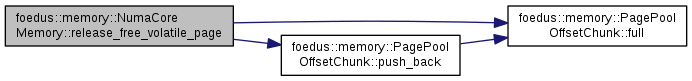

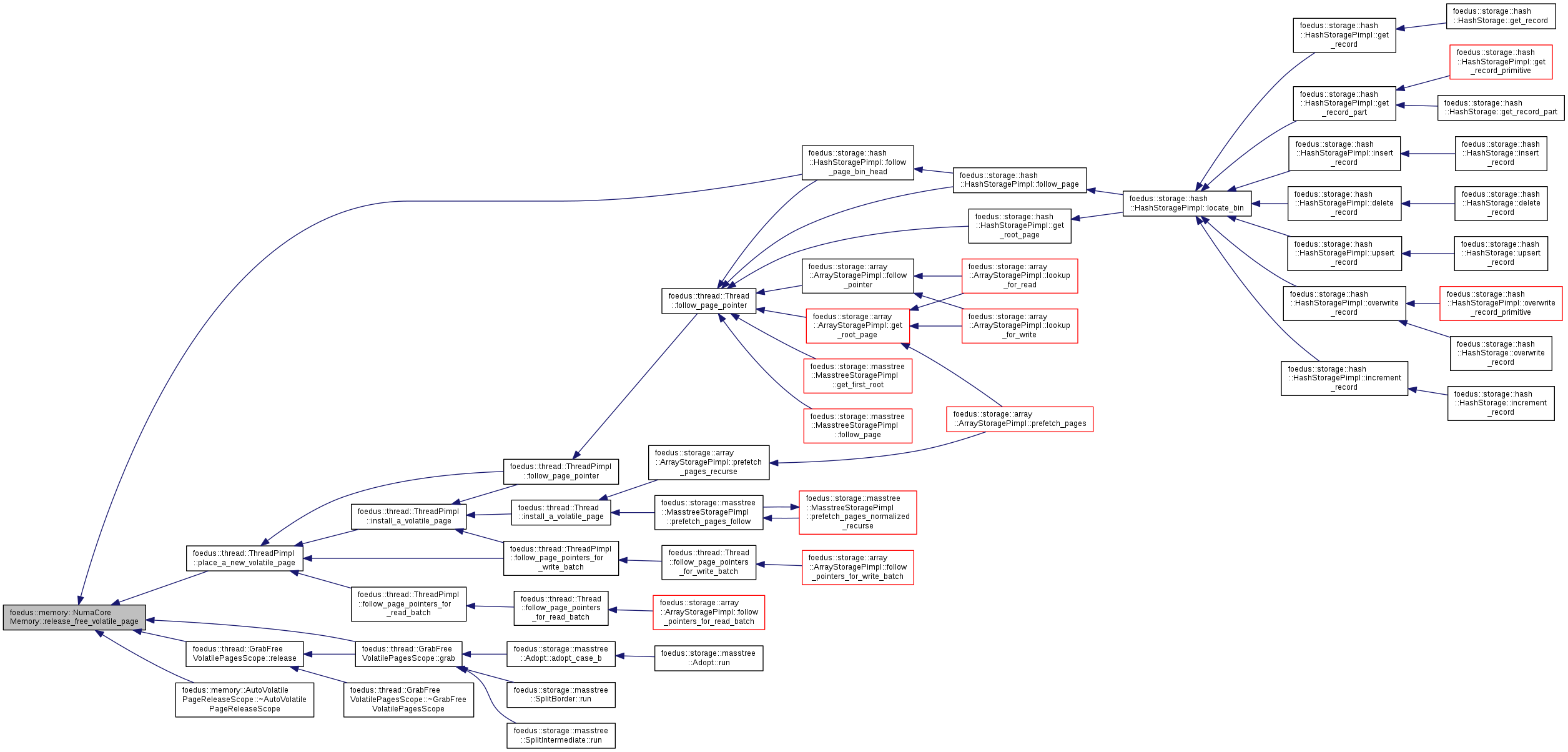

| void foedus::memory::NumaCoreMemory::release_free_volatile_page | ( | PagePoolOffset | offset | ) |

Returns one free volatile page to the local page pool.

Definition at line 213 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::memory::PagePoolOffsetChunk::full(), foedus::memory::PagePoolOffsetChunk::push_back(), and UNLIKELY.

Referenced by foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::thread::GrabFreeVolatilePagesScope::grab(), foedus::thread::ThreadPimpl::place_a_new_volatile_page(), foedus::thread::GrabFreeVolatilePagesScope::release(), and foedus::memory::AutoVolatilePageReleaseScope::~AutoVolatilePageReleaseScope().
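One caller listed above is the destructor ~AutoVolatilePageReleaseScope(), which suggests the familiar RAII pattern: a freshly grabbed page is handed back automatically unless the caller keeps it. The sketch below assumes that pattern; `LocalFreeListSketch`, `AutoReleaseScopeSketch`, and `set_installed()` are hypothetical names, and the real AutoVolatilePageReleaseScope may expose a different interface.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using PageOffset = std::uint32_t;  // hypothetical; 0 means "no page"

// Hypothetical thread-local free list standing in for NumaCoreMemory.
struct LocalFreeListSketch {
  std::vector<PageOffset> free_pages;
  void release_free_page(PageOffset offset) { free_pages.push_back(offset); }
};

// Returns the page to the free list on scope exit unless the caller
// marks it as installed (ownership transferred elsewhere).
class AutoReleaseScopeSketch {
 public:
  AutoReleaseScopeSketch(LocalFreeListSketch* list, PageOffset offset)
      : list_(list), offset_(offset) {
    assert(list_ != nullptr);
  }
  AutoReleaseScopeSketch(const AutoReleaseScopeSketch&) = delete;
  AutoReleaseScopeSketch& operator=(const AutoReleaseScopeSketch&) = delete;
  ~AutoReleaseScopeSketch() {
    if (offset_ != 0) list_->release_free_page(offset_);
  }
  void set_installed() { offset_ = 0; }  // page now owned by someone else

 private:
  LocalFreeListSketch* list_;
  PageOffset offset_;
};
```

Every early return between grabbing and installing a page then releases the page without an explicit call at each exit point.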

|

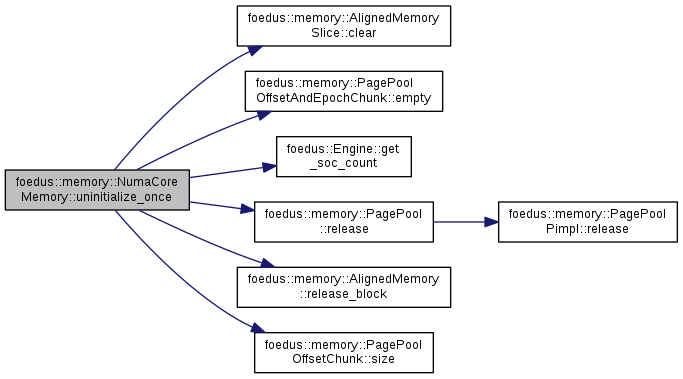

overridevirtual |

Implements foedus::DefaultInitializable.

Definition at line 170 of file numa_core_memory.cpp.

References ASSERT_ND, foedus::memory::AlignedMemorySlice::clear(), foedus::memory::PagePoolOffsetAndEpochChunk::empty(), foedus::Engine::get_soc_count(), foedus::memory::PagePool::release(), foedus::memory::AlignedMemory::release_block(), foedus::memory::PagePoolOffsetChunk::size(), and SUMMARIZE_ERROR_BATCH.