libfoedus-core

FOEDUS Core Library



Memory Manager, which controls memory allocations, deallocations, and sharing.

This package contains classes that control memory allocations, deallocations, and sharing.

Classes

| Kind | Name | Description |
|---|---|---|
| class | AlignedMemory | Represents one memory block aligned to actual OS/hardware pages. |
| struct | AlignedMemorySlice | A slice of foedus::memory::AlignedMemory. |
| struct | AutoVolatilePageReleaseScope | Automatically releases a page offset acquired for a volatile page when the scope ends. |
| class | DivvyupPageGrabBatch | A helper class to grab a bunch of pages from multiple nodes in an arbitrary fashion. |
| class | EngineMemory | Repository of all memories dynamically acquired and shared within one database engine. |
| struct | GlobalVolatilePageResolver | Resolves an offset in a volatile page pool to an actual pointer and vice versa. |
| struct | LocalPageResolver | Resolves an offset in a local (same NUMA node) page pool to a pointer and vice versa. |
| struct | MemoryOptions | Set of options for the memory manager. |
| class | NumaCoreMemory | Repository of memories dynamically acquired within one CPU core (thread). |
| class | NumaNodeMemory | Repository of memories dynamically acquired and shared within one NUMA node (socket). |
| class | NumaNodeMemoryRef | A view of NumaNodeMemory for other SOCs and the master engine. |
| class | PagePool | Page pool for the volatile read/write store (VolatilePage) and the read-only buffer pool (SnapshotPage). |
| struct | PagePoolControlBlock | Shared data in PagePoolPimpl. |
| class | PagePoolOffsetAndEpochChunk | Used to store an epoch value with each entry in PagePoolOffsetChunk. |
| class | PagePoolOffsetChunk | Packs a fixed number of page offsets into each grab/release to reduce the overhead of grabbing/releasing pages from the pool. |
| class | PagePoolOffsetDynamicChunk | Used to point to an already existing array. |
| class | PagePoolPimpl | Pimpl object of PagePool. |
| class | PageReleaseBatch | A helper class to return a bunch of pages to individual nodes. |
| class | RoundRobinPageGrabBatch | A helper class to grab a bunch of pages from multiple nodes in round-robin fashion per chunk. |
| struct | ScopedNumaPreferred | Automatically sets and resets numa_set_preferred(). |
| class | SharedMemory | Represents memory shared between processes. |
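AutoVolatilePageReleaseScope and ScopedNumaPreferred are both described as acting "automatically", which in C++ usually means RAII: the destructor undoes or finalizes something acquired in the constructor. The sketch below illustrates that pattern for returning a page offset to a pool. SketchPool, SketchPageReleaseScope and its dismiss() method are hypothetical names invented for illustration; they are not the FOEDUS API.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using SketchOffset = uint32_t;  // stands in for PagePoolOffset

// Hypothetical stand-in for a page pool: only a free list of offsets.
struct SketchPool {
  std::vector<SketchOffset> free_list;
  void release(SketchOffset offset) { free_list.push_back(offset); }
};

// RAII sketch: returns `offset` to `pool` when the scope ends, unless the
// caller has taken ownership via dismiss() (e.g., after publishing the page).
class SketchPageReleaseScope {
 public:
  SketchPageReleaseScope(SketchPool* pool, SketchOffset offset)
      : pool_(pool), offset_(offset) {
    assert(pool_ != nullptr);
  }
  ~SketchPageReleaseScope() {
    if (!dismissed_) {
      pool_->release(offset_);
    }
  }
  void dismiss() { dismissed_ = true; }

  // Non-copyable: a copy would release the same offset twice.
  SketchPageReleaseScope(const SketchPageReleaseScope&) = delete;
  SketchPageReleaseScope& operator=(const SketchPageReleaseScope&) = delete;

 private:
  SketchPool* pool_;
  SketchOffset offset_;
  bool dismissed_ = false;
};
```

Usage: a scope that exits early (error path, exception) gives the page back without any explicit cleanup code, while the success path calls dismiss() once the page has been handed off.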

Typedefs

| Type | Name | Description |
|---|---|---|
| typedef uint32_t | PagePoolOffset | Offset in PagePool that compactly represents the page address in 4 bytes (unlike an 8-byte pointer). |
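A 4-byte offset works as a page address because a resolver (see GlobalVolatilePageResolver and LocalPageResolver in the class list) can turn it back into a pointer by arithmetic on a base address. The sketch below assumes a 4KB page size, which this page does not state, and uses the hypothetical names SketchPageResolver and kSketchPageSize; it mirrors the idea, not FOEDUS's actual resolver code.

```cpp
#include <cassert>
#include <cstdint>

// Assumption: 4KB pages; the real FOEDUS page size is not documented here.
constexpr uint64_t kSketchPageSize = 4096;

// Same width as the documented typedef: uint32_t instead of an 8-byte pointer.
using SketchPagePoolOffset = uint32_t;

// 2^32 offsets * 4KB pages = 2^44 bytes (16 TiB) addressable per pool.
static_assert((uint64_t{1} << 32) * kSketchPageSize == (uint64_t{1} << 44),
              "a 32-bit offset spans 16 TiB of 4KB pages");

// Hypothetical resolver in the spirit of LocalPageResolver (not its code):
// page number `offset` lives at base + offset * page size.
struct SketchPageResolver {
  char* base;

  void* resolve_offset(SketchPagePoolOffset offset) const {
    return base + static_cast<uint64_t>(offset) * kSketchPageSize;
  }

  SketchPagePoolOffset resolve_page(const void* page) const {
    const char* p = static_cast<const char*>(page);
    assert(p >= base);
    const uint64_t diff = static_cast<uint64_t>(p - base);
    assert(diff % kSketchPageSize == 0);  // must point at a page boundary
    return static_cast<SketchPagePoolOffset>(diff / kSketchPageSize);
  }
};
```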

Functions

| Return type | Function | Description |
|---|---|---|
| bool | is_1gb_hugepage_enabled () | Returns whether 1GB hugepages are enabled. |
| void | _dummy_static_size_check__COUNTER__ () | |
| char * | alloc_mmap (uint64_t size, uint64_t alignment) | |
| void * | alloc_mmap_1gb_pages (uint64_t size) | |
| std::ostream & | operator<< (std::ostream &o, const AlignedMemory &v) | |
| std::ostream & | operator<< (std::ostream &o, const AlignedMemorySlice &v) | |
| int64_t | get_numa_node_size (int node) | |
| std::ostream & | operator<< (std::ostream &o, const PagePool &v) | |
| std::ostream & | operator<< (std::ostream &o, const PagePoolPimpl &v) | |
| std::ostream & | operator<< (std::ostream &o, const SharedMemory &v) | |

Variables

| Type | Name | Description |
|---|---|---|
| const uint64_t | kHugepageSize = 1 << 21 | 2MB (2,097,152 bytes). So far, this is the only page size available via Transparent Huge Pages (THP). |
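Since 1 << 21 bytes is exactly 2MB, sizes meant for THP-backed memory are naturally expressed in whole multiples of kHugepageSize. The sketch below re-declares the constant with the documented value and adds a hypothetical helper, round_up_to_hugepage(), that is not part of the FOEDUS API; it only illustrates the arithmetic.

```cpp
#include <cstdint>

// Same value as the documented constant: 1 << 21 = 2,097,152 bytes (2MB).
constexpr uint64_t kHugepageSize = 1 << 21;
static_assert(kHugepageSize == 2ULL * 1024 * 1024, "THP page is 2MB");

// Hypothetical helper: rounds a byte count up to a whole number of 2MB pages,
// so a THP-backed region does not end in a partially used huge page.
constexpr uint64_t round_up_to_hugepage(uint64_t bytes) {
  return (bytes + kHugepageSize - 1) / kHugepageSize * kHugepageSize;
}
```

For example, a 2,097,153-byte request rounds up to 4,194,304 bytes (two huge pages).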

void foedus::memory::_dummy_static_size_check__COUNTER__ ( ) (inline)

Definition at line 417 of file page_pool.hpp.

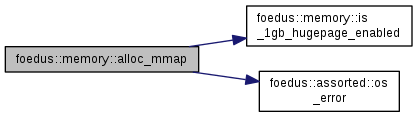

char* foedus::memory::alloc_mmap (uint64_t size, uint64_t alignment)

Definition at line 64 of file aligned_memory.cpp.

References is_1gb_hugepage_enabled(), MAP_HUGE_1GB, MAP_HUGE_2MB, and foedus::assorted::os_error().

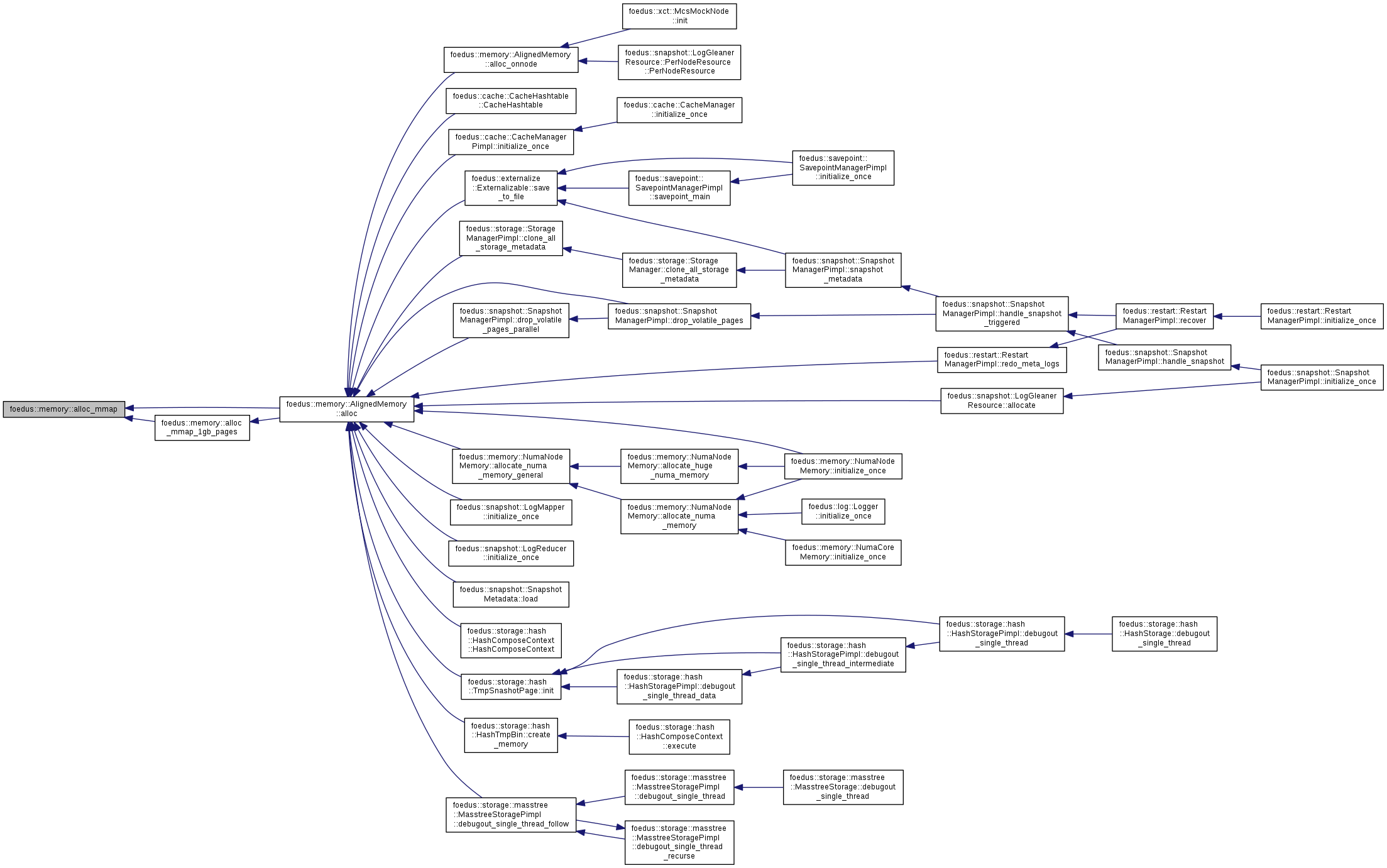

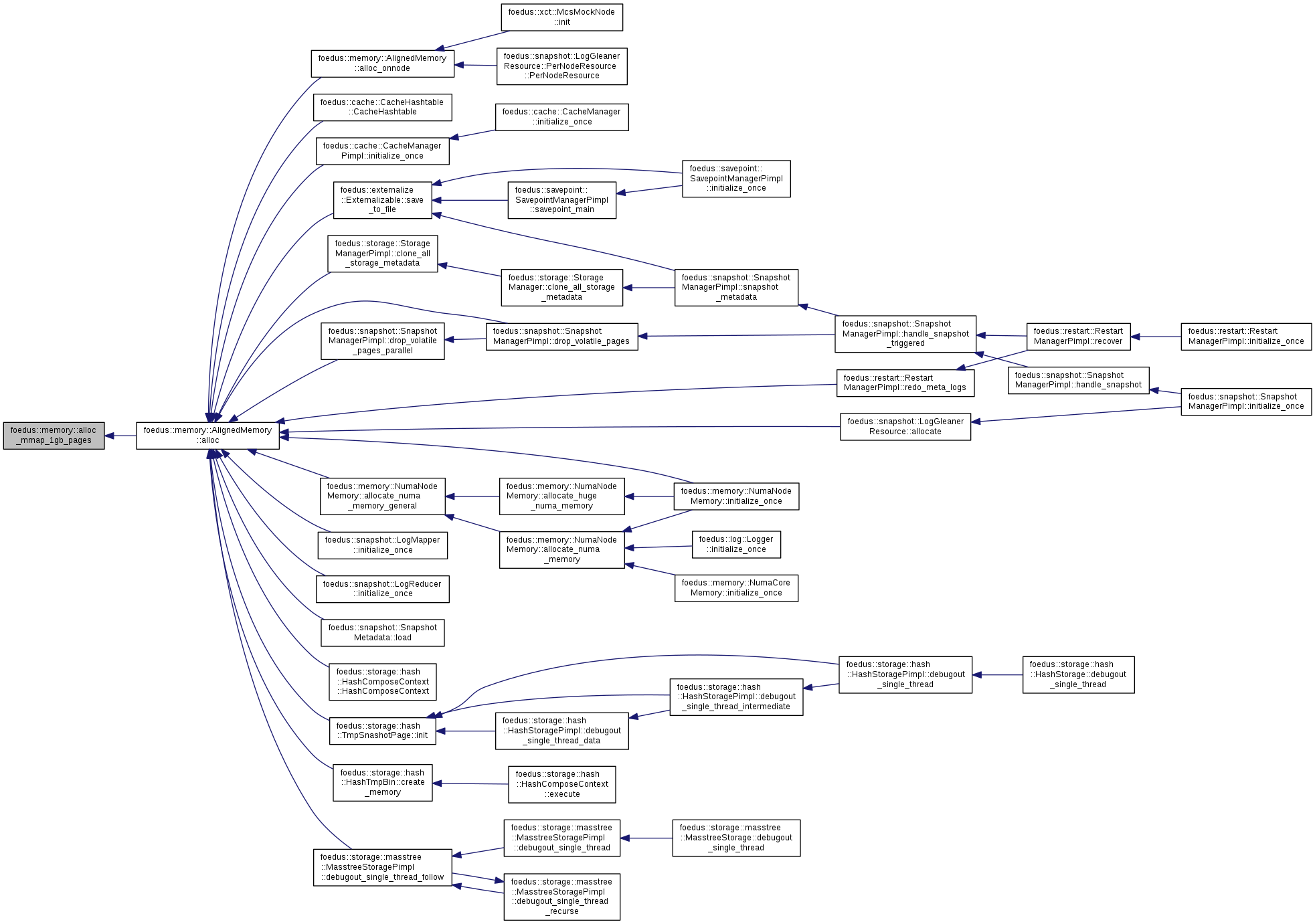

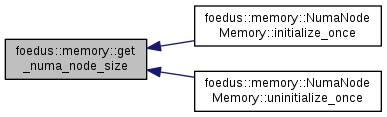

Referenced by foedus::memory::AlignedMemory::alloc(), and alloc_mmap_1gb_pages().
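alloc_mmap() takes both a size and an alignment, and its reference list (MAP_HUGE_1GB, MAP_HUGE_2MB, is_1gb_hugepage_enabled()) suggests it also chooses hugepage flags. The sketch below shows only the generic alignment part with plain POSIX mmap(): over-allocate, then unmap the unaligned head and the unused tail. It is a hypothetical helper, sketch_alloc_aligned_mmap(), not FOEDUS's implementation, and it assumes size and alignment are multiples of the OS page size and alignment is a power of two.

```cpp
#include <sys/mman.h>
#include <unistd.h>
#include <cassert>
#include <cstdint>

// Hypothetical sketch of aligned allocation via anonymous mmap (no hugepages).
// Assumes: alignment is a power of two and a multiple of the OS page size,
// and size is a non-zero multiple of the OS page size.
char* sketch_alloc_aligned_mmap(uint64_t size, uint64_t alignment) {
  const uint64_t os_page = static_cast<uint64_t>(sysconf(_SC_PAGESIZE));
  assert((alignment & (alignment - 1)) == 0 && alignment % os_page == 0);
  assert(size > 0 && size % os_page == 0);
  (void)os_page;  // only used by the assertions

  // Over-allocate so that an aligned block of `size` bytes must fit inside.
  const uint64_t padded = size + alignment;
  void* raw = mmap(nullptr, padded, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (raw == MAP_FAILED) {
    return nullptr;
  }

  const uintptr_t start = reinterpret_cast<uintptr_t>(raw);
  const uintptr_t aligned =
      (start + alignment - 1) & ~static_cast<uintptr_t>(alignment - 1);
  const uint64_t head = aligned - start;       // unaligned prefix to give back
  const uint64_t tail = padded - head - size;  // unused suffix to give back
  if (head > 0) munmap(raw, head);
  if (tail > 0) munmap(reinterpret_cast<void*>(aligned + size), tail);
  return reinterpret_cast<char*>(aligned);  // caller frees with munmap(p, size)
}
```

Trimming with munmap() keeps exactly `size` bytes mapped, so the caller can later release the block with a single munmap() call without remembering the original padded range.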

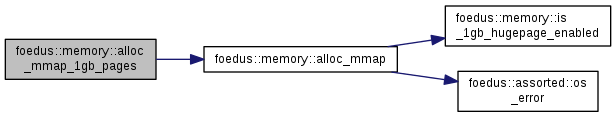

void* foedus::memory::alloc_mmap_1gb_pages (uint64_t size)

Definition at line 108 of file aligned_memory.cpp.

References alloc_mmap(), and ASSERT_ND.

Referenced by foedus::memory::AlignedMemory::alloc().

int64_t foedus::memory::get_numa_node_size (int node)

Definition at line 49 of file numa_node_memory.cpp.

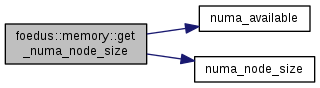

References numa_available(), and numa_node_size().

Referenced by foedus::memory::NumaNodeMemory::initialize_once(), and foedus::memory::NumaNodeMemory::uninitialize_once().

bool foedus::memory::is_1gb_hugepage_enabled ( )

Returns whether 1GB hugepages are enabled.

Definition at line 293 of file aligned_memory.cpp.

Referenced by alloc_mmap().
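One plausible way to detect 1GB hugepages on Linux is to read the kernel's per-size hugepage counter from sysfs. The sketch below does that; it is an assumption about a reasonable approach, not how FOEDUS implements is_1gb_hugepage_enabled(), and the helper names are hypothetical. It also treats "enabled" as "at least one 1GB hugepage is reserved", which is a simplifying assumption.

```cpp
#include <cassert>
#include <fstream>

// Hypothetical sketch, NOT FOEDUS's is_1gb_hugepage_enabled(): on Linux the
// kernel reports the number of reserved 1GB hugepages in this sysfs file.
// Returns -1 if the file is absent (no 1GB page support, non-Linux, etc.).
long long sketch_read_nr_1gb_hugepages() {
  std::ifstream in("/sys/kernel/mm/hugepages/hugepages-1048576kB/nr_hugepages");
  long long count = -1;
  if (!(in >> count)) {
    return -1;
  }
  return count;
}

// Treats "enabled" as "at least one 1GB hugepage is reserved" (an assumption).
bool sketch_is_1gb_hugepage_enabled() {
  return sketch_read_nr_1gb_hugepages() > 0;
}
```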

std::ostream& foedus::memory::operator<< (std::ostream & o, const PagePool & v)

Definition at line 148 of file page_pool.cpp.

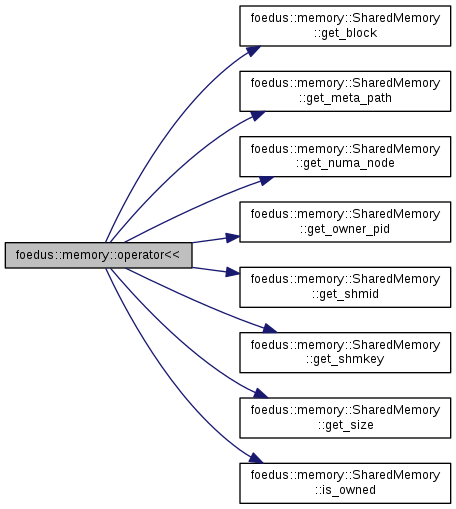

std::ostream& foedus::memory::operator<< (std::ostream & o, const SharedMemory & v)

Definition at line 245 of file shared_memory.cpp.

References foedus::memory::SharedMemory::get_block(), foedus::memory::SharedMemory::get_meta_path(), foedus::memory::SharedMemory::get_numa_node(), foedus::memory::SharedMemory::get_owner_pid(), foedus::memory::SharedMemory::get_shmid(), foedus::memory::SharedMemory::get_shmkey(), foedus::memory::SharedMemory::get_size(), and foedus::memory::SharedMemory::is_owned().

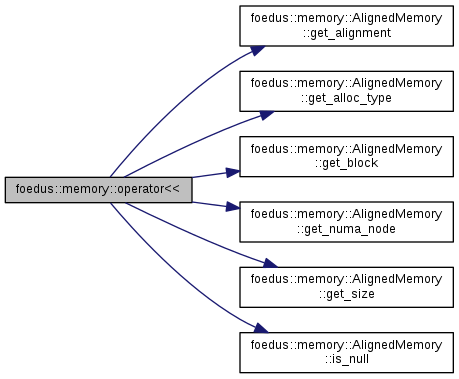

std::ostream& foedus::memory::operator<< (std::ostream & o, const AlignedMemory & v)

Definition at line 253 of file aligned_memory.cpp.

References foedus::memory::AlignedMemory::get_alignment(), foedus::memory::AlignedMemory::get_alloc_type(), foedus::memory::AlignedMemory::get_block(), foedus::memory::AlignedMemory::get_numa_node(), foedus::memory::AlignedMemory::get_size(), foedus::memory::AlignedMemory::is_null(), foedus::memory::AlignedMemory::kNumaAllocInterleaved, foedus::memory::AlignedMemory::kNumaAllocOnnode, foedus::memory::AlignedMemory::kNumaMmapOneGbPages, and foedus::memory::AlignedMemory::kPosixMemalign.

std::ostream& foedus::memory::operator<< (std::ostream & o, const AlignedMemorySlice & v)

Definition at line 282 of file aligned_memory.cpp.

References foedus::memory::AlignedMemorySlice::count_, foedus::memory::AlignedMemorySlice::memory_, and foedus::memory::AlignedMemorySlice::offset_.
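All of these stream operators share the same shape: a free function in the class's namespace that takes std::ostream& and a const reference, writes the object's fields, and returns the stream so calls can be chained. The sketch below shows that shape for a hypothetical SketchSlice holding only offset_ and count_ (the real AlignedMemorySlice also has memory_); the XML-like output layout is an assumption chosen for illustration, not FOEDUS's documented format.

```cpp
#include <cassert>
#include <cstdint>
#include <ostream>
#include <sstream>
#include <string>

// Hypothetical stand-in for AlignedMemorySlice: keeps only the two integers.
struct SketchSlice {
  uint64_t offset_;
  uint64_t count_;
};

// Free-function stream operator with the same signature shape as the
// documented overloads; returns the stream to allow `o << a << b`.
std::ostream& operator<<(std::ostream& o, const SketchSlice& v) {
  o << "<SketchSlice><offset>" << v.offset_ << "</offset><count>"
    << v.count_ << "</count></SketchSlice>";
  return o;
}

// Convenience wrapper that captures the streamed form as a string.
std::string describe(const SketchSlice& v) {
  std::ostringstream os;
  os << v;
  return os.str();
}
```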

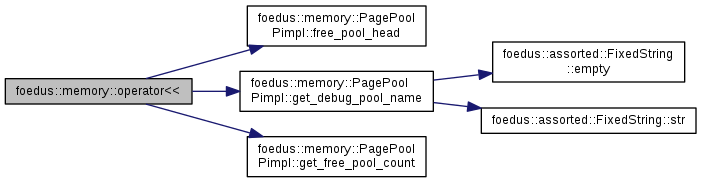

std::ostream& foedus::memory::operator<< (std::ostream & o, const PagePoolPimpl & v)

Definition at line 343 of file page_pool_pimpl.cpp.

References foedus::memory::PagePoolPimpl::free_pool_capacity_, foedus::memory::PagePoolPimpl::free_pool_head(), foedus::memory::PagePoolPimpl::get_debug_pool_name(), foedus::memory::PagePoolPimpl::get_free_pool_count(), foedus::memory::PagePoolPimpl::memory_, foedus::memory::PagePoolPimpl::memory_size_, foedus::memory::PagePoolPimpl::owns_, foedus::memory::PagePoolPimpl::pages_for_free_pool_, and foedus::memory::PagePoolPimpl::rigorous_page_boundary_check_.