
libfoedus-core

FOEDUS Core Library


Represents one memory block aligned to actual OS/hardware pages.

This class is used to allocate and hold memory blocks for objects that must be aligned.

There are a few cases where objects must be on aligned memory.

We do not explicitly use hugepages (2MB/1GB OS page sizes) in our program, but we allocate memory in a way that strongly advises Linux to use transparent hugepages (THP). posix_memalign() or numa_alloc_interleaved() with a big allocation size is a clear hint for Linux to use THP, so mmap() or madvise() is not needed.

After allocating the memory, we zero-clear the memory for two reasons.

To check whether THP is actually used, compare the AnonHugePages field of /proc/meminfo before and after engine start-up. We have at least confirmed that THP is used on Fedora 19/20. For more details, see the corresponding section in README.markdown.

Definition at line 67 of file aligned_memory.hpp.

#include <aligned_memory.hpp>
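A minimal sketch of the allocation pattern described above, assuming Linux and glibc: a large posix_memalign() allocation (the THP hint) that is zero-cleared, plus a helper that reads AnonHugePages from /proc/meminfo. The functions read_anon_huge_pages_kb() and alloc_zeroed_aligned() are hypothetical and not part of FOEDUS; the 2 MiB alignment in the usage note is an assumption chosen to match the x86-64 THP size.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <cstring>
#include <fstream>
#include <sstream>
#include <string>

// Reads the AnonHugePages field (in kB) from /proc/meminfo.
// Returns -1 if the file or the field is unavailable (e.g. not on Linux).
long read_anon_huge_pages_kb() {
  std::ifstream meminfo("/proc/meminfo");
  std::string line;
  const std::string key = "AnonHugePages:";
  while (std::getline(meminfo, line)) {
    if (line.compare(0, key.size(), key) == 0) {
      std::istringstream fields(line.substr(key.size()));
      long kb = -1;
      fields >> kb;
      return kb;
    }
  }
  return -1;
}

// Allocates `size` bytes aligned to `alignment` with posix_memalign() and
// zero-clears them. Returns nullptr on failure; release with std::free().
void* alloc_zeroed_aligned(std::size_t size, std::size_t alignment) {
  // posix_memalign() needs a power of two that is a multiple of sizeof(void*).
  assert(alignment >= sizeof(void*) && (alignment & (alignment - 1)) == 0);
  void* block = nullptr;
  if (posix_memalign(&block, alignment, size) != 0) {
    return nullptr;
  }
  std::memset(block, 0, size);  // zero-clear; this also faults in every page now
  return block;
}
```

To check for THP, call read_anon_huge_pages_kb() before and after a large allocation such as alloc_zeroed_aligned(std::size_t(1) << 30, std::size_t(1) << 21) and compare the two values. Whether the kernel honors the hint also depends on the /sys/kernel/mm/transparent_hugepage/enabled setting.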

Public Types | |

| enum | AllocType { kPosixMemalign = 0, kNumaAllocInterleaved, kNumaAllocOnnode, kNumaMmapOneGbPages } |

| Type of new/delete operation for the block. More... | |

Public Member Functions | |

| AlignedMemory () noexcept | |

| Empty constructor which allocates nothing. More... | |

| AlignedMemory (uint64_t size, uint64_t alignment, AllocType alloc_type, int numa_node) noexcept | |

| Allocate an aligned memory of given size and alignment. More... | |

| AlignedMemory (const AlignedMemory &other)=delete | |

| AlignedMemory & | operator= (const AlignedMemory &other)=delete |

| AlignedMemory (AlignedMemory &&other) noexcept | |

| Move constructor that steals the memory block from other. More... | |

| AlignedMemory & | operator= (AlignedMemory &&other) noexcept |

| Move assignment operator that steals the memory block from other. More... | |

| ~AlignedMemory () | |

| Automatically releases the memory. More... | |

| void | alloc (uint64_t size, uint64_t alignment, AllocType alloc_type, int numa_node) noexcept |

| Allocate a memory, releasing the current memory if exists. More... | |

| void | alloc_onnode (uint64_t size, uint64_t alignment, int numa_node) noexcept |

| Short for alloc(kNumaAllocOnnode) More... | |

| ErrorCode | assure_capacity (uint64_t required_size, double expand_margin=2.0, bool retain_content=false) noexcept |

| If the current size is smaller than the given size, automatically expands. More... | |

| void * | get_block () const |

| Returns the memory block. More... | |

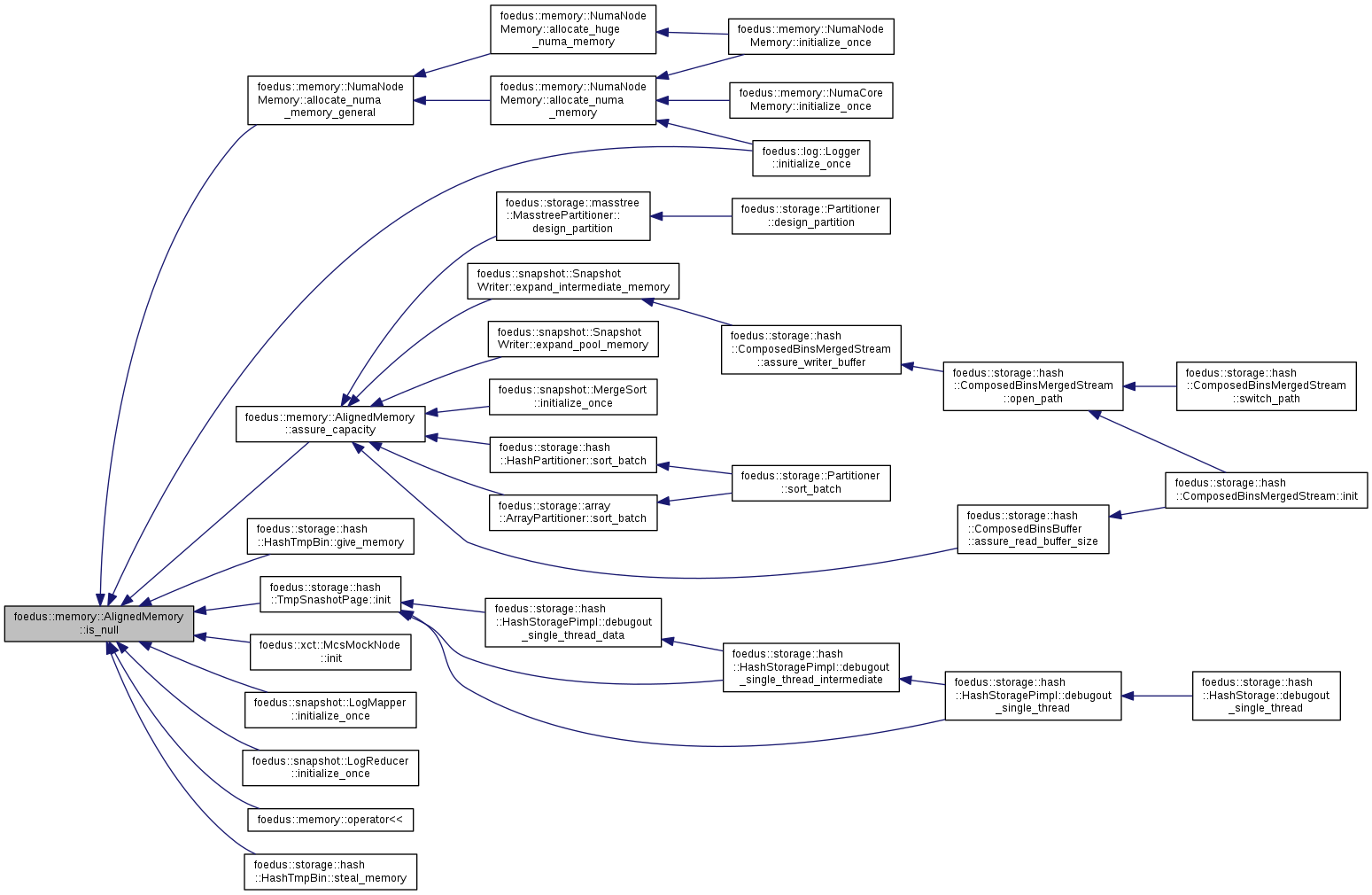

| bool | is_null () const |

| Returns if this object doesn't hold a valid memory block. More... | |

| uint64_t | get_size () const |

| Returns the byte size of the memory block. More... | |

| uint64_t | get_alignment () const |

| Returns the alignment of the memory block. More... | |

| AllocType | get_alloc_type () const |

| Returns type of new/delete operation for the block. More... | |

| int | get_numa_node () const |

| If alloc_type_ is kNumaAllocOnnode, returns the NUMA node this memory was allocated at. More... | |

| void | release_block () |

| Releases the memory block. More... | |

Friends | |

| std::ostream & | operator<< (std::ostream &o, const AlignedMemory &v) |

enum foedus::memory::AlignedMemory::AllocType

Type of new/delete operation for the block.

So far we allow posix_memalign() and numa_alloc_*(). numa_alloc_*() implicitly aligns the allocated memory, but we cannot specify the alignment size. It is usually 4096-byte aligned, which is always enough for our usage.

| Enumerator | Description |
|---|---|
| kPosixMemalign | posix_memalign() and free(). |
| kNumaAllocInterleaved | numa_alloc_interleaved() and numa_free(). Implicit 4096-byte alignment. |
| kNumaAllocOnnode | numa_alloc_onnode() and numa_free(). Implicit 4096-byte alignment. |
| kNumaMmapOneGbPages | Uses non-transparent 1 GB hugepages on a NUMA node, which requires special configuration (an OS reboot is required), so use this with care. Basically, this invokes numa_set_preferred() on the target node and then mmap() with MAP_HUGETLB and MAP_HUGE_1GB. See the README about the 1 GB hugepage setup; unfortunately, it cannot be done without rebooting Linux. |

Other allocation methods, which have no enumerator in AllocType: usual new()/delete() is not currently used for aligned memory allocation. It may be the best for portability, but it is not a strong hint for Linux to use THP. Windows's VirtualAlloc() and VirtualFree() are also not supported.

Definition at line 77 of file aligned_memory.hpp.
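The mmap() step of kNumaMmapOneGbPages can be sketched as follows, assuming Linux with 1 GB hugepages reserved at boot. map_1gb_pages() and unmap_1gb_pages() are hypothetical names, not FOEDUS functions; the numa_set_preferred() call from the enumerator description is left out so the sketch builds without libnuma.

```cpp
#include <sys/mman.h>

#include <cassert>
#include <cstddef>
#include <cstdint>

// Fallbacks in case the libc headers predate these flags (values from the Linux UAPI).
#ifndef MAP_HUGE_SHIFT
#define MAP_HUGE_SHIFT 26
#endif
#ifndef MAP_HUGE_1GB
#define MAP_HUGE_1GB (30 << MAP_HUGE_SHIFT)  // log2(1 GiB) = 30
#endif

constexpr std::size_t kOneGb = std::size_t(1) << 30;

// A hugetlb mapping length should be a whole number of 1 GiB pages.
constexpr std::size_t round_up_to_1gb(std::size_t size) {
  return (size + kOneGb - 1) / kOneGb * kOneGb;
}

// Maps at least `size` bytes backed by explicit (non-transparent) 1 GiB pages.
// Returns nullptr on failure, e.g. when no 1 GiB pages were reserved at boot.
// (The real kNumaMmapOneGbPages path first calls numa_set_preferred(); omitted.)
void* map_1gb_pages(std::size_t size) {
  void* block = mmap(nullptr, round_up_to_1gb(size), PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS | MAP_HUGETLB | MAP_HUGE_1GB, -1, 0);
  return block == MAP_FAILED ? nullptr : block;
}

// Unmaps a block returned by map_1gb_pages() for the same requested size.
void unmap_1gb_pages(void* block, std::size_t size) {
  if (block == nullptr) return;
  // hugetlb mappings start on a huge page boundary.
  assert(reinterpret_cast<std::uintptr_t>(block) % kOneGb == 0);
  munmap(block, round_up_to_1gb(size));
}
```

On a machine without reserved 1 GB pages, map_1gb_pages() simply returns nullptr, which is why this option needs the special OS configuration.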

foedus::memory::AlignedMemory::AlignedMemory () [inline, noexcept]

Empty constructor which allocates nothing.

Definition at line 104 of file aligned_memory.hpp.

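foedus::memory::AlignedMemory::AlignedMemory (uint64_t size, uint64_t alignment, AllocType alloc_type, int numa_node) [noexcept]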

Allocate an aligned memory of given size and alignment.

| Direction | Parameter | Description |
|---|---|---|
| [in] | size | Byte size of the memory block. The actual allocation is at least this size. |
| [in] | alignment | Alignment bytes of the memory block. Must be a power of two. Ignored for kNumaAllocOnnode and kNumaAllocInterleaved. |
| [in] | alloc_type | Specifies the type of new/delete. |
| [in] | numa_node | If alloc_type is kNumaAllocOnnode, the NUMA node to allocate at. Otherwise ignored. |

Definition at line 52 of file aligned_memory.cpp.
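The alignment rules for these parameters can be captured in a small compile-time helper. This is a hypothetical sketch, not FOEDUS code: AllocTypeSketch mirrors only the posix_memalign and numa_alloc enumerators, and it assumes the implicit numa_alloc alignment is exactly 4096 bytes, which the documentation only describes as the usual case.

```cpp
#include <cstdint>

// Hypothetical mirror of the first three AlignedMemory::AllocType values.
enum class AllocTypeSketch { kPosixMemalign, kNumaAllocInterleaved, kNumaAllocOnnode };

// Alignment that numa_alloc_*() usually provides; a different one cannot be requested.
constexpr std::uint64_t kNumaImplicitAlignment = 4096;

constexpr bool is_power_of_two(std::uint64_t x) { return x != 0 && (x & (x - 1)) == 0; }

// Returns the alignment the block is expected to have, or 0 when `alignment`
// is invalid (not a power of two) for a posix_memalign() allocation.
constexpr std::uint64_t effective_alignment(std::uint64_t alignment, AllocTypeSketch type) {
  return type == AllocTypeSketch::kPosixMemalign
             ? (is_power_of_two(alignment) ? alignment : 0)
             : kNumaImplicitAlignment;  // alignment argument is ignored for numa_alloc_*()
}
```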

foedus::memory::AlignedMemory::AlignedMemory (const AlignedMemory &other) [delete]

foedus::memory::AlignedMemory::AlignedMemory (AlignedMemory &&other) [noexcept]

Move constructor that steals the memory block from other.

Definition at line 222 of file aligned_memory.cpp.

|

inline |

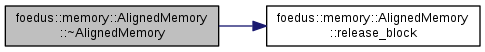

Automatically releases the memory.

Definition at line 138 of file aligned_memory.hpp.

References release_block().

|

noexcept |

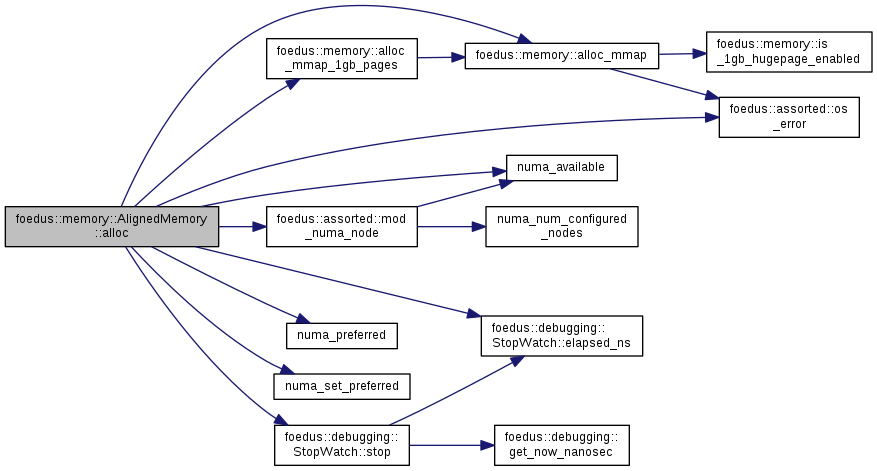

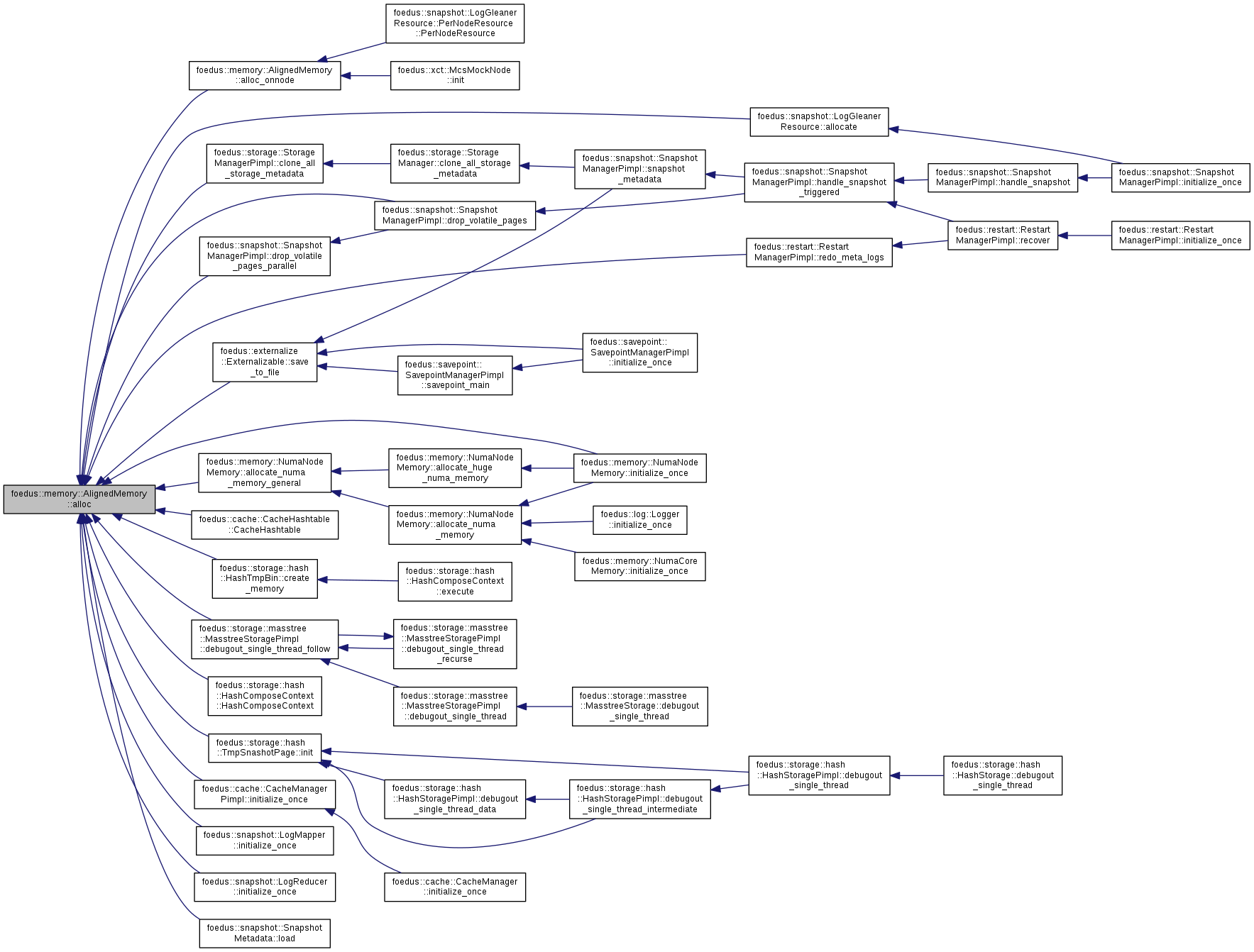

Allocate a memory, releasing the current memory if exists.

Definition at line 113 of file aligned_memory.cpp.

References foedus::memory::alloc_mmap(), foedus::memory::alloc_mmap_1gb_pages(), ASSERT_ND, foedus::debugging::StopWatch::elapsed_ns(), foedus::assorted::mod_numa_node(), numa_available(), numa_preferred(), numa_set_preferred(), foedus::assorted::os_error(), and foedus::debugging::StopWatch::stop().

Referenced by alloc_onnode(), foedus::snapshot::LogGleanerResource::allocate(), foedus::memory::NumaNodeMemory::allocate_numa_memory_general(), foedus::cache::CacheHashtable::CacheHashtable(), foedus::storage::StorageManagerPimpl::clone_all_storage_metadata(), foedus::storage::hash::HashTmpBin::create_memory(), foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_follow(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages_parallel(), foedus::storage::hash::HashComposeContext::HashComposeContext(), foedus::storage::hash::TmpSnashotPage::init(), foedus::cache::CacheManagerPimpl::initialize_once(), foedus::memory::NumaNodeMemory::initialize_once(), foedus::snapshot::LogMapper::initialize_once(), foedus::snapshot::LogReducer::initialize_once(), foedus::snapshot::SnapshotMetadata::load(), foedus::restart::RestartManagerPimpl::redo_meta_logs(), and foedus::externalize::Externalizable::save_to_file().

|

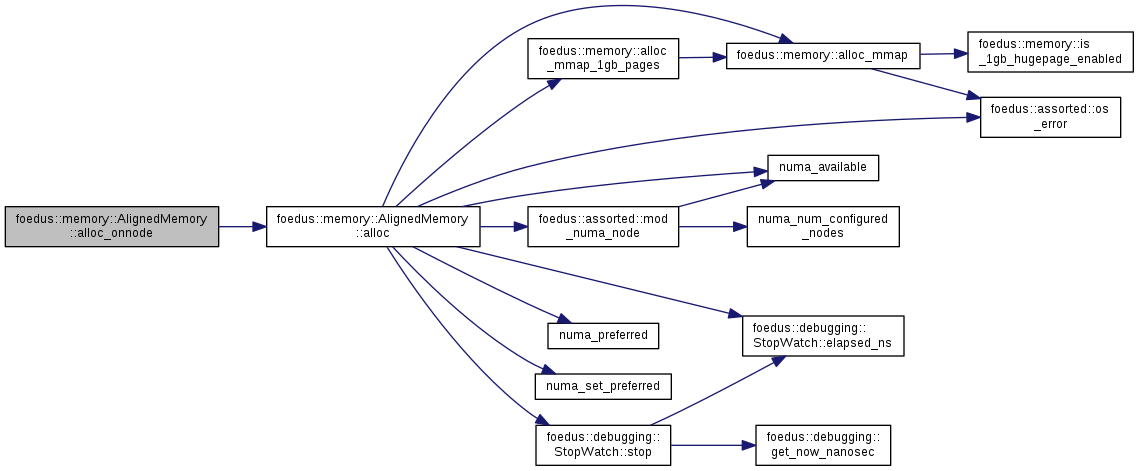

inlinenoexcept |

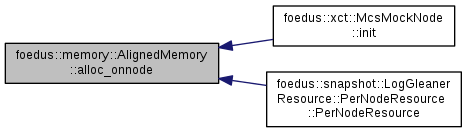

Shorthand for alloc() with alloc_type = kNumaAllocOnnode.

Definition at line 147 of file aligned_memory.hpp.

References alloc(), and kNumaAllocOnnode.

Referenced by foedus::xct::McsMockNode< RW_BLOCK >::init(), and foedus::snapshot::LogGleanerResource::PerNodeResource::PerNodeResource().

|

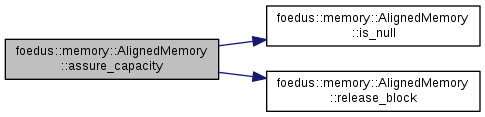

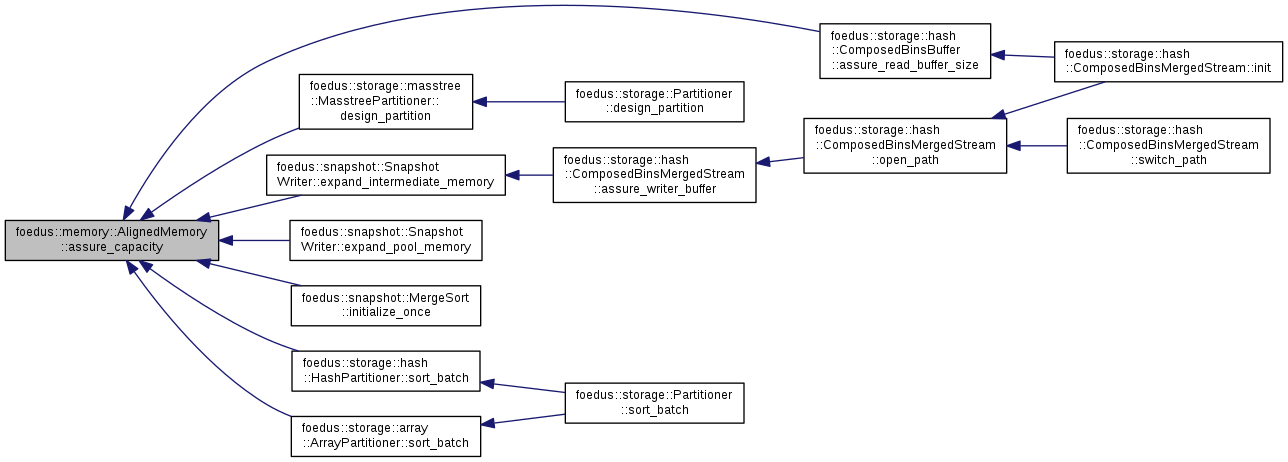

noexcept |

If the current size is smaller than the given size, automatically expands.

This is useful for temporary work buffers.

| Direction | Parameter | Description |
|---|---|---|
| [in] | required_size | The resulting memory will have at least this size. |
| [in] | expand_margin | When expanding, the new size is multiplied by this factor to avoid overly frequent expansion. |
| [in] | retain_content | If true, copies the current content to the new memory. |

Definition at line 182 of file aligned_memory.cpp.

References ASSERT_ND, is_null(), foedus::kErrorCodeInvalidParameter, foedus::kErrorCodeOk, foedus::kErrorCodeOutofmemory, and release_block().

Referenced by foedus::storage::hash::ComposedBinsBuffer::assure_read_buffer_size(), foedus::storage::masstree::MasstreePartitioner::design_partition(), foedus::snapshot::SnapshotWriter::expand_intermediate_memory(), foedus::snapshot::SnapshotWriter::expand_pool_memory(), foedus::snapshot::MergeSort::initialize_once(), foedus::storage::hash::HashPartitioner::sort_batch(), and foedus::storage::array::ArrayPartitioner::sort_batch().
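A sketch of the expand-with-margin policy that assure_capacity() describes, using a hypothetical GrowableBuffer backed by posix_memalign(). It assumes the new size is required_size * expand_margin and that a margin below 1.0 is an invalid parameter; the real member returns an ErrorCode (such as kErrorCodeInvalidParameter or kErrorCodeOutofmemory) rather than a bool, and its exact rules may differ.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <cstring>

// Hypothetical stand-alone buffer; not the FOEDUS AlignedMemory class.
struct GrowableBuffer {
  void* block = nullptr;
  std::uint64_t size = 0;
  std::uint64_t alignment = 4096;
};

// Returns false on an invalid margin or allocation failure; the old block is
// left untouched in that case.
bool assure_capacity_sketch(GrowableBuffer* buf, std::uint64_t required_size,
                            double expand_margin = 2.0, bool retain_content = false) {
  if (expand_margin < 1.0) return false;
  if (buf->size >= required_size) return true;  // already big enough: no reallocation
  std::uint64_t new_size = static_cast<std::uint64_t>(required_size * expand_margin);
  if (new_size < required_size) new_size = required_size;
  void* new_block = nullptr;
  if (posix_memalign(&new_block, buf->alignment, new_size) != 0) return false;
  std::memset(new_block, 0, new_size);
  if (retain_content && buf->block != nullptr) {
    assert(buf->size < new_size);  // the old content always fits into the new block
    std::memcpy(new_block, buf->block, buf->size);
  }
  std::free(buf->block);  // release the old block only after the new one exists
  buf->block = new_block;
  buf->size = new_size;
  return true;
}
```

With the default margin of 2.0, asking for 100 bytes allocates 200, so a following request for 150 bytes reuses the same block instead of reallocating.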

|

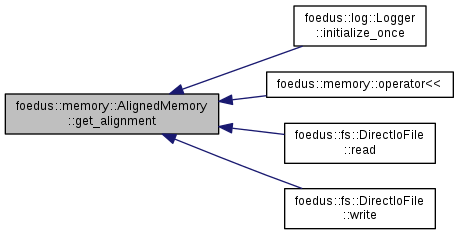

inline |

Returns the alignment of the memory block.

Definition at line 174 of file aligned_memory.hpp.

Referenced by foedus::log::Logger::initialize_once(), foedus::memory::operator<<(), foedus::fs::DirectIoFile::read(), and foedus::fs::DirectIoFile::write().

|

inline |

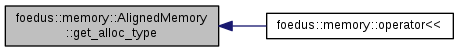

Returns type of new/delete operation for the block.

Definition at line 176 of file aligned_memory.hpp.

Referenced by foedus::memory::operator<<().

|

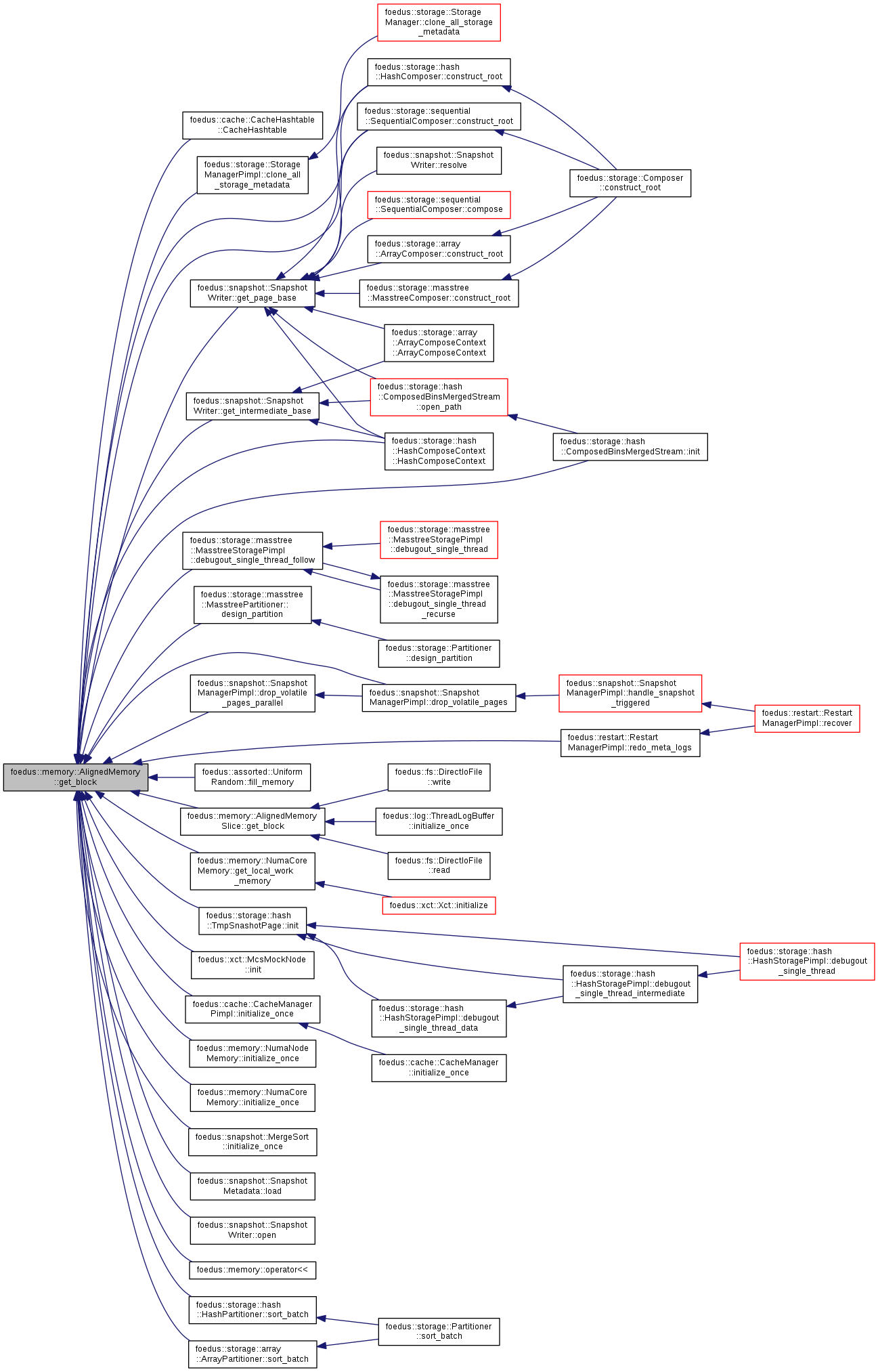

inline |

Returns the memory block.

Definition at line 168 of file aligned_memory.hpp.

Referenced by foedus::cache::CacheHashtable::CacheHashtable(), foedus::storage::StorageManagerPimpl::clone_all_storage_metadata(), foedus::storage::hash::HashComposer::construct_root(), foedus::storage::sequential::SequentialComposer::construct_root(), foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_follow(), foedus::storage::masstree::MasstreePartitioner::design_partition(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages_parallel(), foedus::assorted::UniformRandom::fill_memory(), foedus::memory::AlignedMemorySlice::get_block(), foedus::snapshot::SnapshotWriter::get_intermediate_base(), foedus::memory::NumaCoreMemory::get_local_work_memory(), foedus::snapshot::SnapshotWriter::get_page_base(), foedus::storage::hash::HashComposeContext::HashComposeContext(), foedus::storage::hash::TmpSnashotPage::init(), foedus::xct::McsMockNode< RW_BLOCK >::init(), foedus::storage::hash::ComposedBinsMergedStream::init(), foedus::cache::CacheManagerPimpl::initialize_once(), foedus::memory::NumaNodeMemory::initialize_once(), foedus::memory::NumaCoreMemory::initialize_once(), foedus::snapshot::MergeSort::initialize_once(), foedus::snapshot::SnapshotMetadata::load(), foedus::snapshot::SnapshotWriter::open(), foedus::memory::operator<<(), foedus::restart::RestartManagerPimpl::redo_meta_logs(), foedus::storage::hash::HashPartitioner::sort_batch(), and foedus::storage::array::ArrayPartitioner::sort_batch().

|

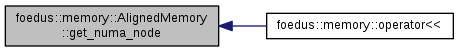

inline |

If alloc_type_ is kNumaAllocOnnode, returns the NUMA node this memory was allocated at.

Definition at line 178 of file aligned_memory.hpp.

Referenced by foedus::memory::operator<<().

|

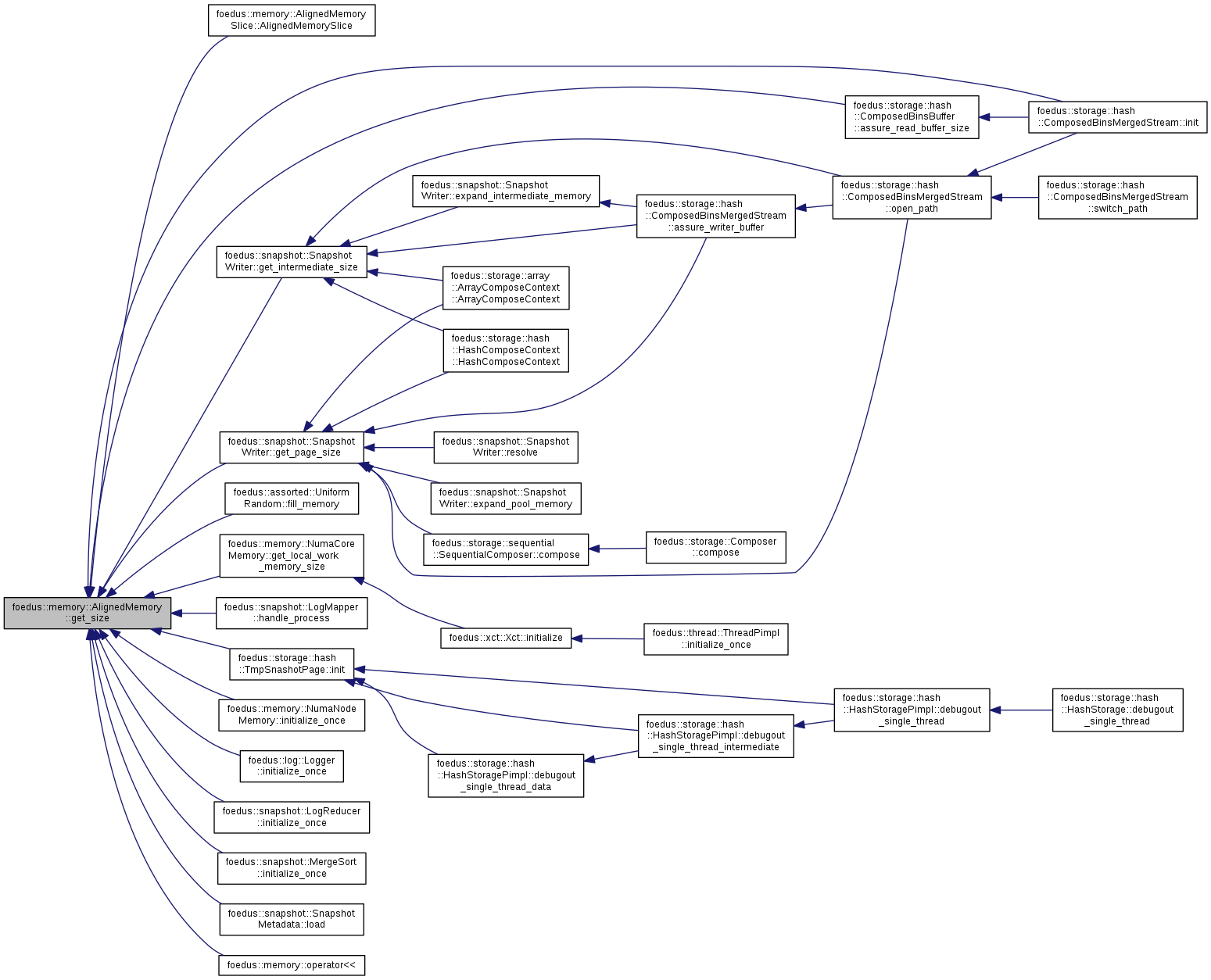

inline |

Returns the byte size of the memory block.

Definition at line 172 of file aligned_memory.hpp.

Referenced by foedus::memory::AlignedMemorySlice::AlignedMemorySlice(), foedus::storage::hash::ComposedBinsBuffer::assure_read_buffer_size(), foedus::assorted::UniformRandom::fill_memory(), foedus::snapshot::SnapshotWriter::get_intermediate_size(), foedus::memory::NumaCoreMemory::get_local_work_memory_size(), foedus::snapshot::SnapshotWriter::get_page_size(), foedus::snapshot::LogMapper::handle_process(), foedus::storage::hash::TmpSnashotPage::init(), foedus::storage::hash::ComposedBinsMergedStream::init(), foedus::memory::NumaNodeMemory::initialize_once(), foedus::log::Logger::initialize_once(), foedus::snapshot::LogReducer::initialize_once(), foedus::snapshot::MergeSort::initialize_once(), foedus::snapshot::SnapshotMetadata::load(), and foedus::memory::operator<<().

bool foedus::memory::AlignedMemory::is_null () const [inline]

Returns whether this object does not hold a valid memory block.

Definition at line 170 of file aligned_memory.hpp.

References CXX11_NULLPTR.

Referenced by foedus::memory::NumaNodeMemory::allocate_numa_memory_general(), assure_capacity(), foedus::storage::hash::HashTmpBin::give_memory(), foedus::storage::hash::TmpSnashotPage::init(), foedus::xct::McsMockNode< RW_BLOCK >::init(), foedus::snapshot::LogMapper::initialize_once(), foedus::log::Logger::initialize_once(), foedus::snapshot::LogReducer::initialize_once(), foedus::memory::operator<<(), and foedus::storage::hash::HashTmpBin::steal_memory().

AlignedMemory& foedus::memory::AlignedMemory::operator= (const AlignedMemory &other) [delete]

|

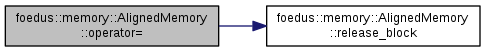

noexcept |

Move assignment operator that steals the memory block from other.

Definition at line 225 of file aligned_memory.cpp.

References release_block().
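The ownership rules of this class (no copies, moves steal the block, move assignment releases the current block first, and the destructor releases) can be sketched with a hypothetical move-only OwnedBlock that supports only posix_memalign(). It is a simplified illustration, not the FOEDUS implementation, which also tracks the allocation type, alignment, and NUMA node.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <utility>

// Hypothetical move-only owner of one posix_memalign() block.
class OwnedBlock {
 public:
  OwnedBlock() noexcept = default;  // empty: holds nothing
  OwnedBlock(std::uint64_t size, std::uint64_t alignment) noexcept {
    assert(alignment >= sizeof(void*) && (alignment & (alignment - 1)) == 0);
    if (posix_memalign(&block_, alignment, size) == 0) {
      size_ = size;
    } else {
      block_ = nullptr;  // allocation failed: stay empty
    }
  }
  OwnedBlock(const OwnedBlock&) = delete;  // exactly one owner per block
  OwnedBlock& operator=(const OwnedBlock&) = delete;
  OwnedBlock(OwnedBlock&& other) noexcept
      : block_(std::exchange(other.block_, nullptr)),  // steal and leave other empty
        size_(std::exchange(other.size_, 0)) {}
  OwnedBlock& operator=(OwnedBlock&& other) noexcept {
    if (this != &other) {
      release_block();  // free our own block before stealing
      block_ = std::exchange(other.block_, nullptr);
      size_ = std::exchange(other.size_, 0);
    }
    return *this;
  }
  ~OwnedBlock() { release_block(); }

  void release_block() noexcept {
    std::free(block_);  // free(nullptr) is a no-op
    block_ = nullptr;
    size_ = 0;
  }
  void* get_block() const { return block_; }
  std::uint64_t get_size() const { return size_; }
  bool is_null() const { return block_ == nullptr; }

 private:
  void* block_ = nullptr;
  std::uint64_t size_ = 0;
};
```

std::exchange leaves the moved-from object empty, so its destructor frees nothing and is_null() reports true, mirroring the stealing behavior described for the move constructor and move assignment operator.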

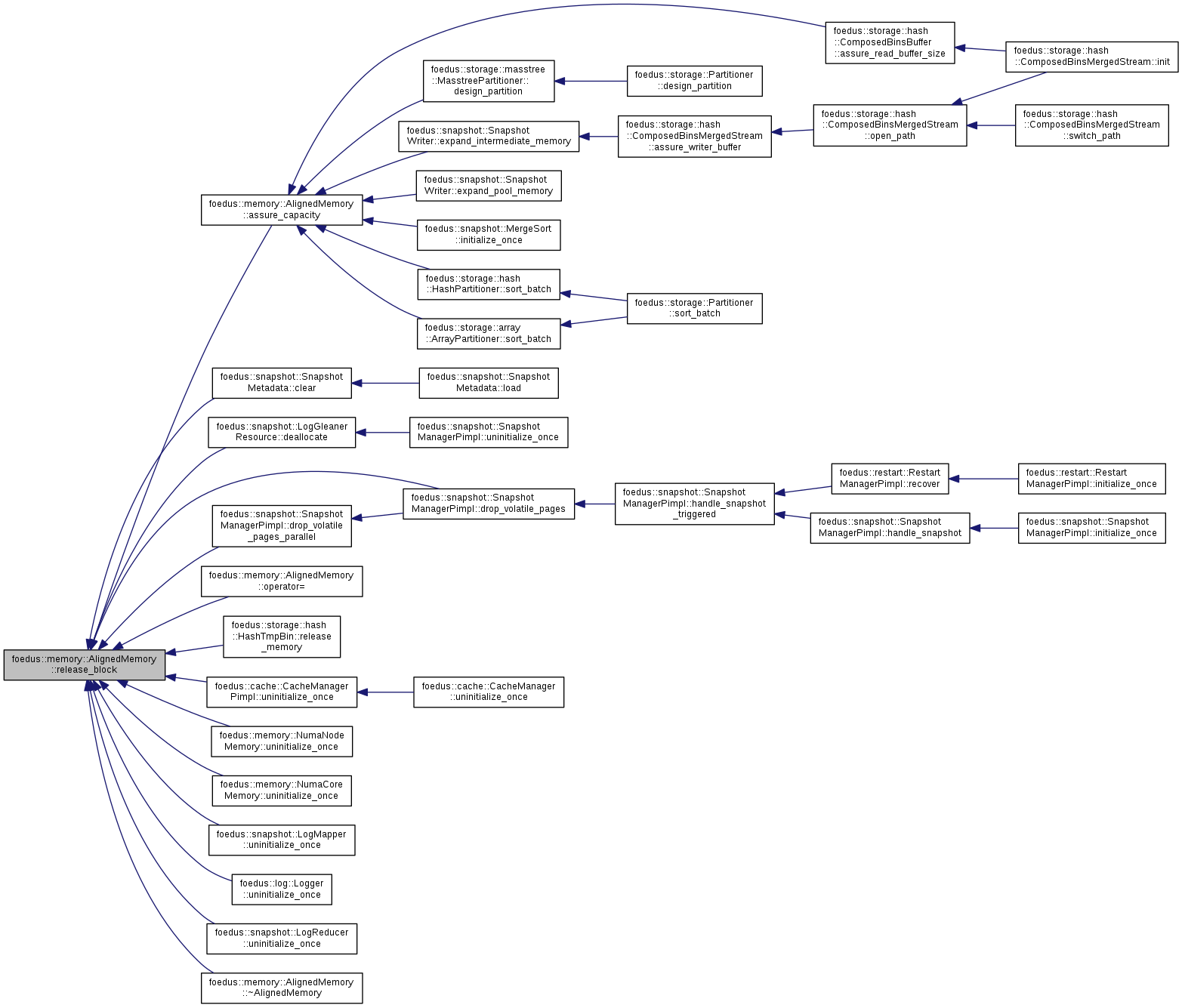

| void foedus::memory::AlignedMemory::release_block | ( | ) |

Releases the memory block.

Definition at line 235 of file aligned_memory.cpp.

References ASSERT_ND, kNumaAllocInterleaved, kNumaAllocOnnode, kNumaMmapOneGbPages, and kPosixMemalign.

Referenced by assure_capacity(), foedus::snapshot::SnapshotMetadata::clear(), foedus::snapshot::LogGleanerResource::deallocate(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages(), foedus::snapshot::SnapshotManagerPimpl::drop_volatile_pages_parallel(), operator=(), foedus::storage::hash::HashTmpBin::release_memory(), foedus::cache::CacheManagerPimpl::uninitialize_once(), foedus::memory::NumaNodeMemory::uninitialize_once(), foedus::memory::NumaCoreMemory::uninitialize_once(), foedus::snapshot::LogMapper::uninitialize_once(), foedus::log::Logger::uninitialize_once(), foedus::snapshot::LogReducer::uninitialize_once(), and ~AlignedMemory().

std::ostream& operator<< (std::ostream &o, const AlignedMemory &v) [friend]

Definition at line 253 of file aligned_memory.cpp.