libfoedus-core

FOEDUS Core Library


Used to store an epoch value with each entry in PagePoolOffsetChunk.

This is used where a page offset can be passed on only after some epoch, for example "retired" pages that must be kept intact until the next-next epoch.

Definition at line 108 of file page_pool.hpp.

#include <page_pool.hpp>

Classes

| Type | Name |
|---|---|
| struct | OffsetAndEpoch |

Public Types

| Type | Name |
|---|---|
| enum | Constants { kMaxSize = (1 << 16) - 1 } |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
|  | PagePoolOffsetAndEpochChunk () |  |
| uint32_t | capacity () const |  |
| uint32_t | size () const |  |
| bool | empty () const |  |
| bool | full () const |  |
| void | clear () |  |
| bool | is_sorted () const |  |
| void | push_back (PagePoolOffset offset, const Epoch &safe_epoch) |  |
| void | move_to (PagePoolOffset *destination, uint32_t count) | Note that the destination is PagePoolOffset* because that's the only use case. |
| uint32_t | get_safe_offset_count (const Epoch &threshold) const | Returns the number of offsets (always from index 0) whose safe_epoch_ is strictly before the given epoch. |
| uint32_t | unused_dummy_func_dummy () const |  |

| Enumerator | Description |
|---|---|
| kMaxSize | Max number of pointers to pack. We use this object to pool retired pages, and we observed many waits caused by a full pool, which forces the thread to wait for a new epoch. To avoid that, we now use a much larger kMaxSize than PagePoolOffsetChunk. Yes, this means much larger memory consumption in NumaCoreMemory, but it shouldn't be a big issue: 8 * 2^16 * nodes * threads bytes. On 16 nodes with 12 threads per node (DH), that is 96 MB per node; on 4 nodes with 12 threads per node (DL580), 24 MB per node. I'd say negligible. |

Definition at line 110 of file page_pool.hpp.
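As a rough check of the memory estimate above, the following compile-time sketch reproduces both quoted figures. It assumes each entry is 8 bytes (a 32-bit page offset plus a 32-bit epoch value) and reads "threads" as threads per node; the struct and function names are hypothetical, not FOEDUS APIs.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical entry layout: assumes a 32-bit page offset and a 32-bit epoch value.
struct OffsetAndEpochSketch {
  std::uint32_t offset_;
  std::uint32_t safe_epoch_;
};
static_assert(sizeof(OffsetAndEpochSketch) == 8, "expected two 32-bit fields, no padding");

// Same value as the kMaxSize enumerator documented above.
constexpr std::uint64_t kMaxSize = (1u << 16) - 1;

// Per-node bytes from the estimate 8 * 2^16 * nodes * threads, rounding
// kMaxSize up to 2^16 as the text does and reading "threads" as threads per node.
constexpr std::uint64_t per_node_bytes(std::uint64_t nodes, std::uint64_t threads_per_node) {
  return sizeof(OffsetAndEpochSketch) * (kMaxSize + 1) * nodes * threads_per_node;
}

constexpr std::uint64_t kMiB = 1024 * 1024;
static_assert(per_node_bytes(16, 12) == 96 * kMiB, "16 nodes, 12 threads per node (DH)");
static_assert(per_node_bytes(4, 12) == 24 * kMiB, "4 nodes, 12 threads per node (DL580)");
```

One chunk alone is 8 * 2^16 bytes, i.e. 512 KiB, so the total grows linearly with the number of (node, thread) pairs.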

foedus::memory::PagePoolOffsetAndEpochChunk::PagePoolOffsetAndEpochChunk() [inline]

Definition at line 127 of file page_pool.hpp.

uint32_t foedus::memory::PagePoolOffsetAndEpochChunk::capacity() const [inline]

Definition at line 129 of file page_pool.hpp.

References foedus::memory::PagePoolOffsetChunk::kMaxSize.

|

inline |

Definition at line 133 of file page_pool.hpp.

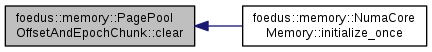

Referenced by foedus::memory::NumaCoreMemory::initialize_once().

|

inline |

Definition at line 131 of file page_pool.hpp.

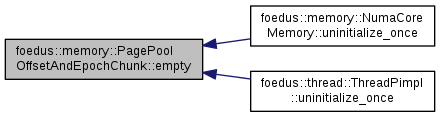

Referenced by foedus::memory::NumaCoreMemory::uninitialize_once(), and foedus::thread::ThreadPimpl::uninitialize_once().

|

inline |

Definition at line 132 of file page_pool.hpp.

References foedus::memory::PagePoolOffsetChunk::kMaxSize.

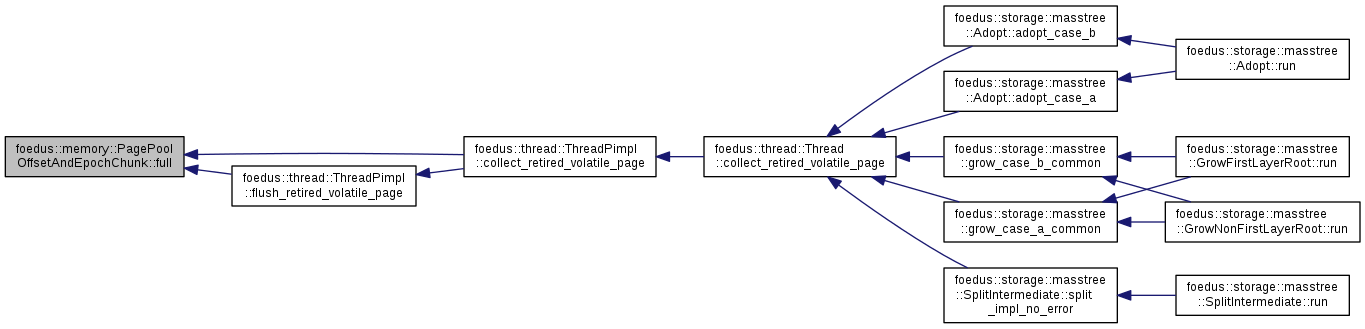

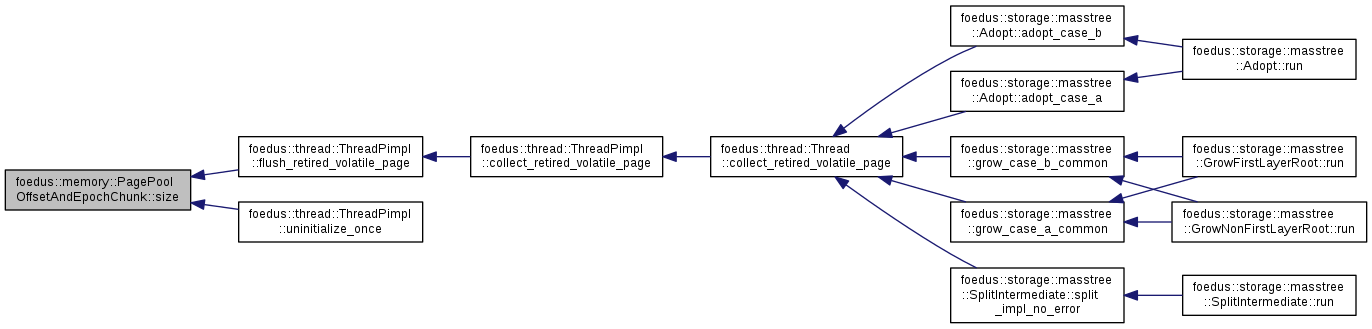

Referenced by foedus::thread::ThreadPimpl::collect_retired_volatile_page(), and foedus::thread::ThreadPimpl::flush_retired_volatile_page().

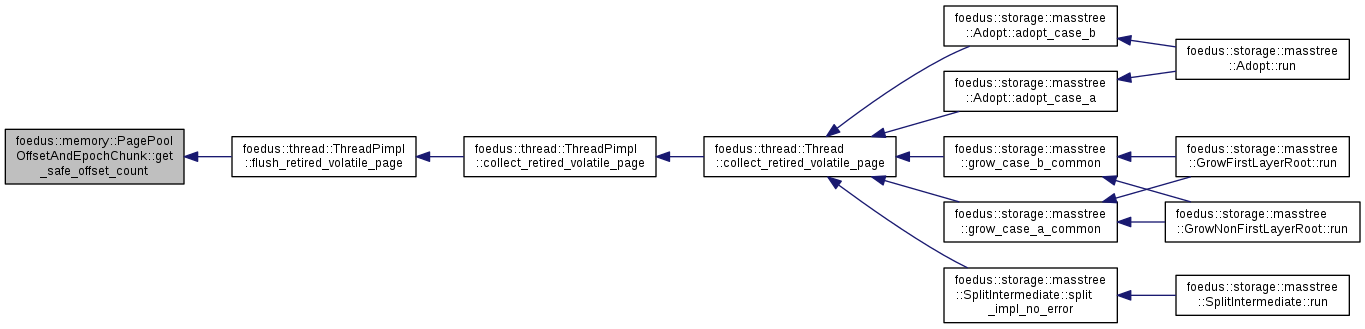

| uint32_t foedus::memory::PagePoolOffsetAndEpochChunk::get_safe_offset_count | ( | const Epoch & | threshold | ) | const |

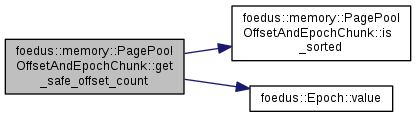

Returns the number of offsets (always counted from index 0) whose safe_epoch_ is strictly before the given epoch.

This method does a binary search, assuming that chunk_ is sorted by safe_epoch_.

Parameters:
[in] threshold: the epoch deemed unsafe to return.

Definition at line 57 of file page_pool.cpp.

References ASSERT_ND, is_sorted(), foedus::memory::PagePoolOffsetAndEpochChunk::OffsetAndEpoch::safe_epoch_, and foedus::Epoch::value().

Referenced by foedus::thread::ThreadPimpl::flush_retired_volatile_page().
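A minimal sketch of the counting described above, using std::partition_point as the binary search over entries sorted by safe_epoch_. The entry struct and function names are hypothetical, and plain uint32_t comparison stands in for foedus::Epoch ordering, ignoring any wrap-around handling the real epoch type may need.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical simplified entry: a 32-bit offset plus a 32-bit epoch value.
struct OffsetAndEpochSketch {
  std::uint32_t offset_;
  std::uint32_t safe_epoch_;
};

// True if entries are in non-decreasing safe_epoch_ order (the search precondition).
bool is_sorted_sketch(const std::vector<OffsetAndEpochSketch>& chunk) {
  return std::is_sorted(chunk.begin(), chunk.end(),
                        [](const OffsetAndEpochSketch& a, const OffsetAndEpochSketch& b) {
                          return a.safe_epoch_ < b.safe_epoch_;
                        });
}

// Number of leading entries whose safe_epoch_ is strictly before threshold.
std::uint32_t get_safe_offset_count_sketch(const std::vector<OffsetAndEpochSketch>& chunk,
                                           std::uint32_t threshold) {
  assert(is_sorted_sketch(chunk));
  // partition_point binary-searches for the first entry that fails the predicate.
  auto first_unsafe = std::partition_point(
      chunk.begin(), chunk.end(),
      [threshold](const OffsetAndEpochSketch& e) { return e.safe_epoch_ < threshold; });
  return static_cast<std::uint32_t>(first_unsafe - chunk.begin());
}
```

Because the predicate is "strictly before", an entry whose epoch equals the threshold is treated as unsafe and is not counted.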

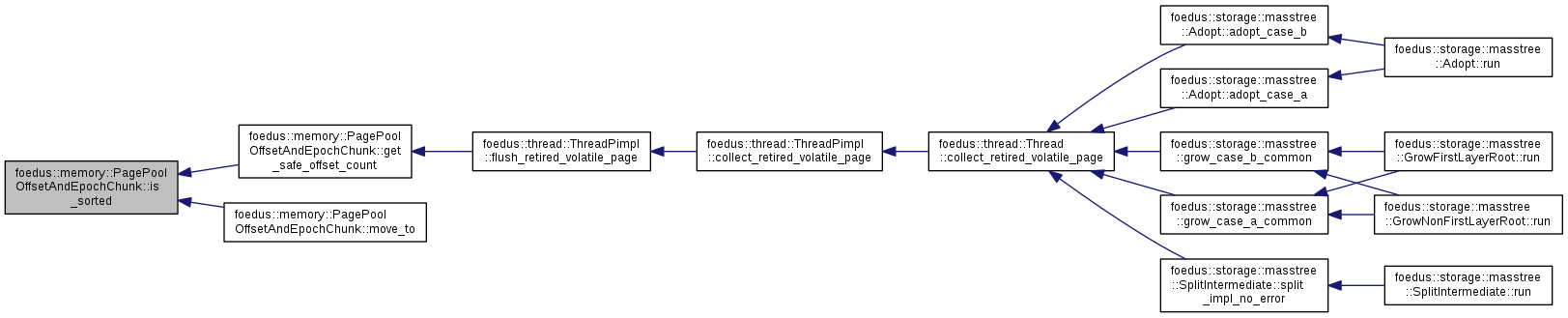

| bool foedus::memory::PagePoolOffsetAndEpochChunk::is_sorted | ( | ) | const |

Definition at line 87 of file page_pool.cpp.

References ASSERT_ND.

Referenced by get_safe_offset_count(), and move_to().

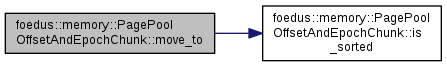

| void foedus::memory::PagePoolOffsetAndEpochChunk::move_to | ( | PagePoolOffset * | destination, |

| uint32_t | count | ||

| ) |

Note that the destination is PagePoolOffset* because that's the only use case.

Definition at line 72 of file page_pool.cpp.

References ASSERT_ND, is_sorted(), and foedus::memory::PagePoolOffsetAndEpochChunk::OffsetAndEpoch::offset_.
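A minimal sketch of what move_to might do, assuming (this page does not confirm it) that it copies the offsets of the first count entries into destination and drops those entries so the rest shift down to index 0; this would be consistent with get_safe_offset_count always counting from index 0. The struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical simplified entry: a 32-bit offset plus a 32-bit epoch value.
struct OffsetAndEpochSketch {
  std::uint32_t offset_;
  std::uint32_t safe_epoch_;
};

// Copies the offsets of the first `count` entries into destination, then
// removes those entries so the remaining ones start at index 0.
void move_to_sketch(std::vector<OffsetAndEpochSketch>& chunk,
                    std::uint32_t* destination, std::uint32_t count) {
  assert(count <= chunk.size());
  for (std::uint32_t i = 0; i < count; ++i) {
    destination[i] = chunk[i].offset_;  // only offsets are copied; epochs are dropped
  }
  chunk.erase(chunk.begin(), chunk.begin() + count);
}
```

A caller could, for example, pass the result of get_safe_offset_count as count so that only offsets that are already safe are handed back.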

|

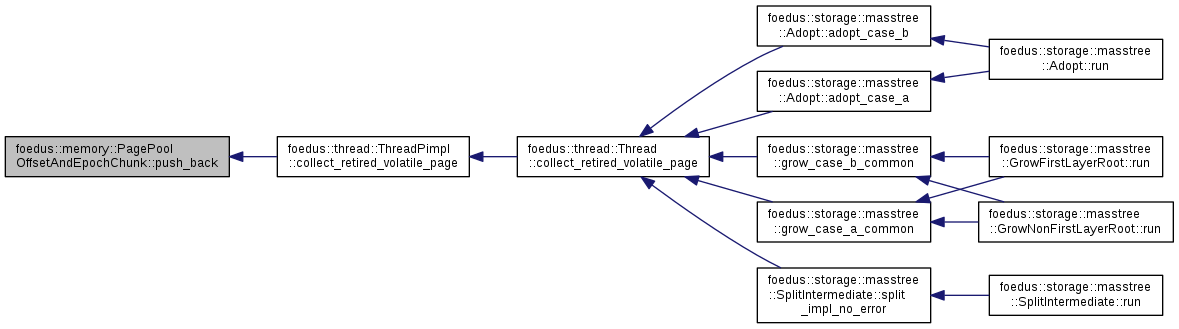

inline |

Definition at line 140 of file page_pool.hpp.

References ASSERT_ND, foedus::memory::PagePoolOffsetChunk::empty(), foedus::memory::PagePoolOffsetChunk::full(), and foedus::Epoch::value().

Referenced by foedus::thread::ThreadPimpl::collect_retired_volatile_page().
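A sketch of push_back under the assumption that callers append entries in non-decreasing epoch order, which would keep chunk_ sorted for the binary search in get_safe_offset_count. The asserts play the role of the ASSERT_ND checks referenced above, but their exact conditions here are assumptions; the names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical simplified entry: a 32-bit offset plus a 32-bit epoch value.
struct OffsetAndEpochSketch {
  std::uint32_t offset_;
  std::uint32_t safe_epoch_;
};

// Same bound as kMaxSize above.
constexpr std::uint32_t kMaxSizeSketch = (1u << 16) - 1;

// Appends one entry. Assumption: epochs arrive in non-decreasing order,
// so appending at the back preserves sortedness by safe_epoch_.
void push_back_sketch(std::vector<OffsetAndEpochSketch>& chunk,
                      std::uint32_t offset, std::uint32_t safe_epoch) {
  assert(chunk.size() < kMaxSizeSketch);                            // must not be full
  assert(chunk.empty() || chunk.back().safe_epoch_ <= safe_epoch);  // keep sorted
  chunk.push_back({offset, safe_epoch});
}
```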

uint32_t foedus::memory::PagePoolOffsetAndEpochChunk::size() const [inline]

Definition at line 130 of file page_pool.hpp.

Referenced by foedus::thread::ThreadPimpl::flush_retired_volatile_page(), and foedus::thread::ThreadPimpl::uninitialize_once().

uint32_t foedus::memory::PagePoolOffsetAndEpochChunk::unused_dummy_func_dummy() const [inline]

Definition at line 157 of file page_pool.hpp.