|

libfoedus-core

FOEDUS Core Library

|

Represents a user transaction.

To obtain this object, call Thread::get_current_xct(). This object represents a user transaction, as opposed to physical-only internal transactions (so-called system transactions; see SysxctScope).

#include <xct.hpp>

Public Types

| enum | Constants { kMaxPointerSets = 1024, kMaxPageVersionSets = 1024 } |

Public Member Functions | |

| Xct (Engine *engine, thread::Thread *context, thread::ThreadId thread_id) | |

| Xct (const Xct &other)=delete | |

| Xct & | operator= (const Xct &other)=delete |

| void | initialize (memory::NumaCoreMemory *core_memory, uint32_t *mcs_block_current, uint32_t *mcs_rw_async_mapping_current) |

| void | activate (IsolationLevel isolation_level) |

| Begins the transaction. More... | |

| void | deactivate () |

| Closes the transaction. More... | |

| uint32_t | get_mcs_block_current () const |

| uint32_t | increment_mcs_block_current () |

| void | decrement_mcs_block_current () |

| bool | is_active () const |

| Returns whether the object is an active transaction. More... | |

| bool | is_enable_rll_for_this_xct () const |

| void | set_enable_rll_for_this_xct (bool value) |

| bool | is_default_rll_for_this_xct () const |

| void | set_default_rll_for_this_xct (bool value) |

| uint16_t | get_hot_threshold_for_this_xct () const |

| void | set_hot_threshold_for_this_xct (uint16_t value) |

| uint16_t | get_default_hot_threshold_for_this_xct () const |

| void | set_default_hot_threshold_for_this_xct (uint16_t value) |

| uint16_t | get_rll_threshold_for_this_xct () const |

| void | set_rll_threshold_for_this_xct (uint16_t value) |

| uint16_t | get_default_rll_threshold_for_this_xct () const |

| void | set_default_rll_threshold_for_this_xct (uint16_t value) |

| SysxctWorkspace * | get_sysxct_workspace () const |

| bool | is_read_only () const |

| Returns if this transaction makes no writes. More... | |

| IsolationLevel | get_isolation_level () const |

| Returns the level of isolation for this transaction. More... | |

| const XctId & | get_id () const |

| Returns the ID of this transaction, but note that it is not issued until commit time! More... | |

| thread::Thread * | get_thread_context () |

| thread::ThreadId | get_thread_id () const |

| uint32_t | get_pointer_set_size () const |

| uint32_t | get_page_version_set_size () const |

| uint32_t | get_read_set_size () const |

| uint32_t | get_write_set_size () const |

| uint32_t | get_lock_free_read_set_size () const |

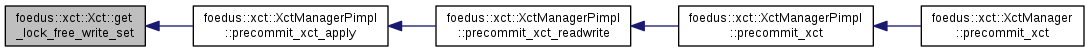

| uint32_t | get_lock_free_write_set_size () const |

| const PointerAccess * | get_pointer_set () const |

| const PageVersionAccess * | get_page_version_set () const |

| ReadXctAccess * | get_read_set () |

| WriteXctAccess * | get_write_set () |

| LockFreeReadXctAccess * | get_lock_free_read_set () |

| LockFreeWriteXctAccess * | get_lock_free_write_set () |

| void | issue_next_id (XctId max_xct_id, Epoch *epoch) |

| Called while a successful commit of xct to issue a new xct id. More... | |

| ErrorCode | add_to_pointer_set (const storage::VolatilePagePointer *pointer_address, storage::VolatilePagePointer observed) |

| Add the given page pointer to the pointer set of this transaction. More... | |

| void | overwrite_to_pointer_set (const storage::VolatilePagePointer *pointer_address, storage::VolatilePagePointer observed) |

| The transaction that has updated the volatile pointer should not abort itself. More... | |

| ErrorCode | add_to_page_version_set (const storage::PageVersion *version_address, storage::PageVersionStatus observed) |

| Add the given page version to the page version set of this transaction. More... | |

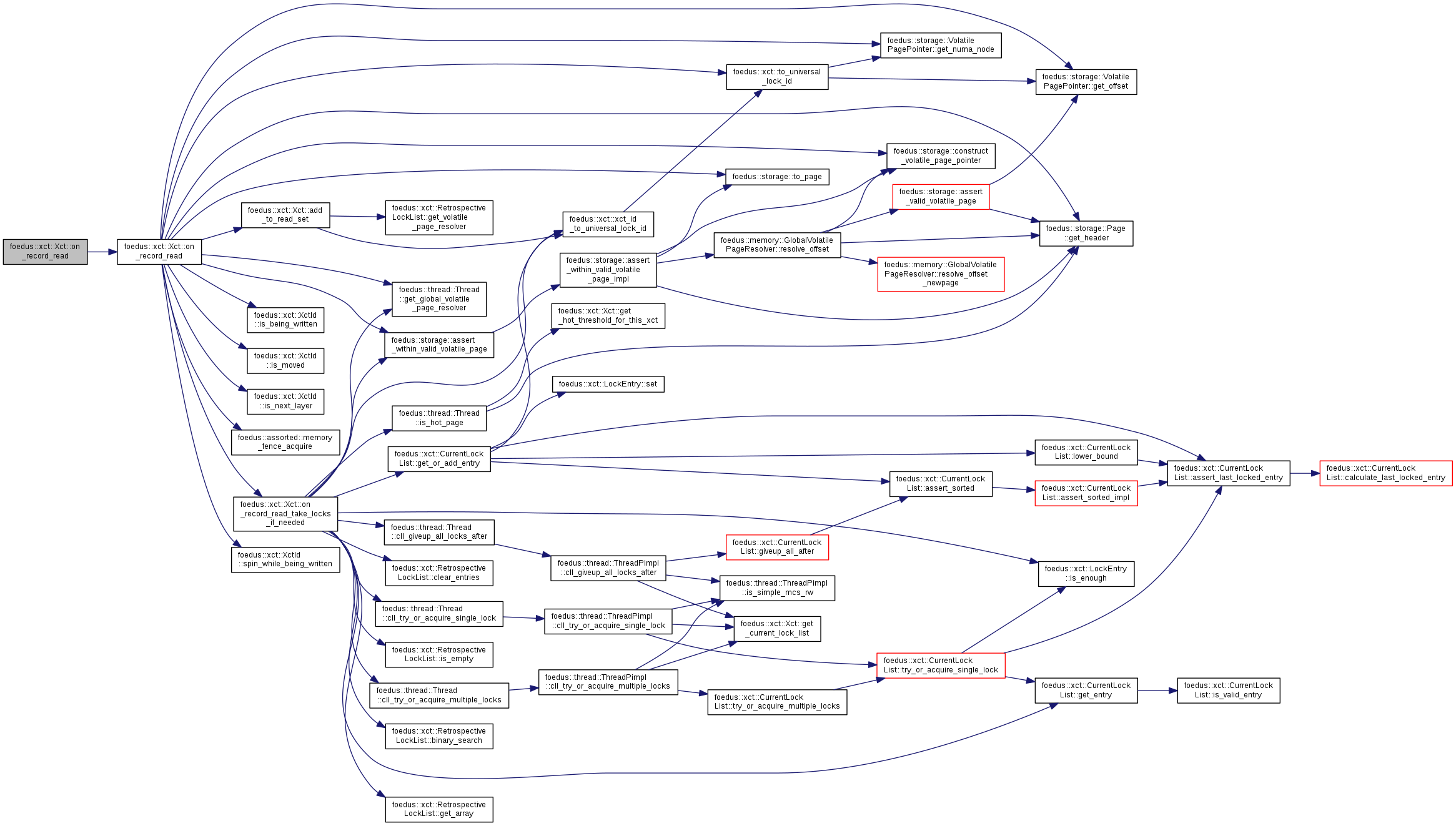

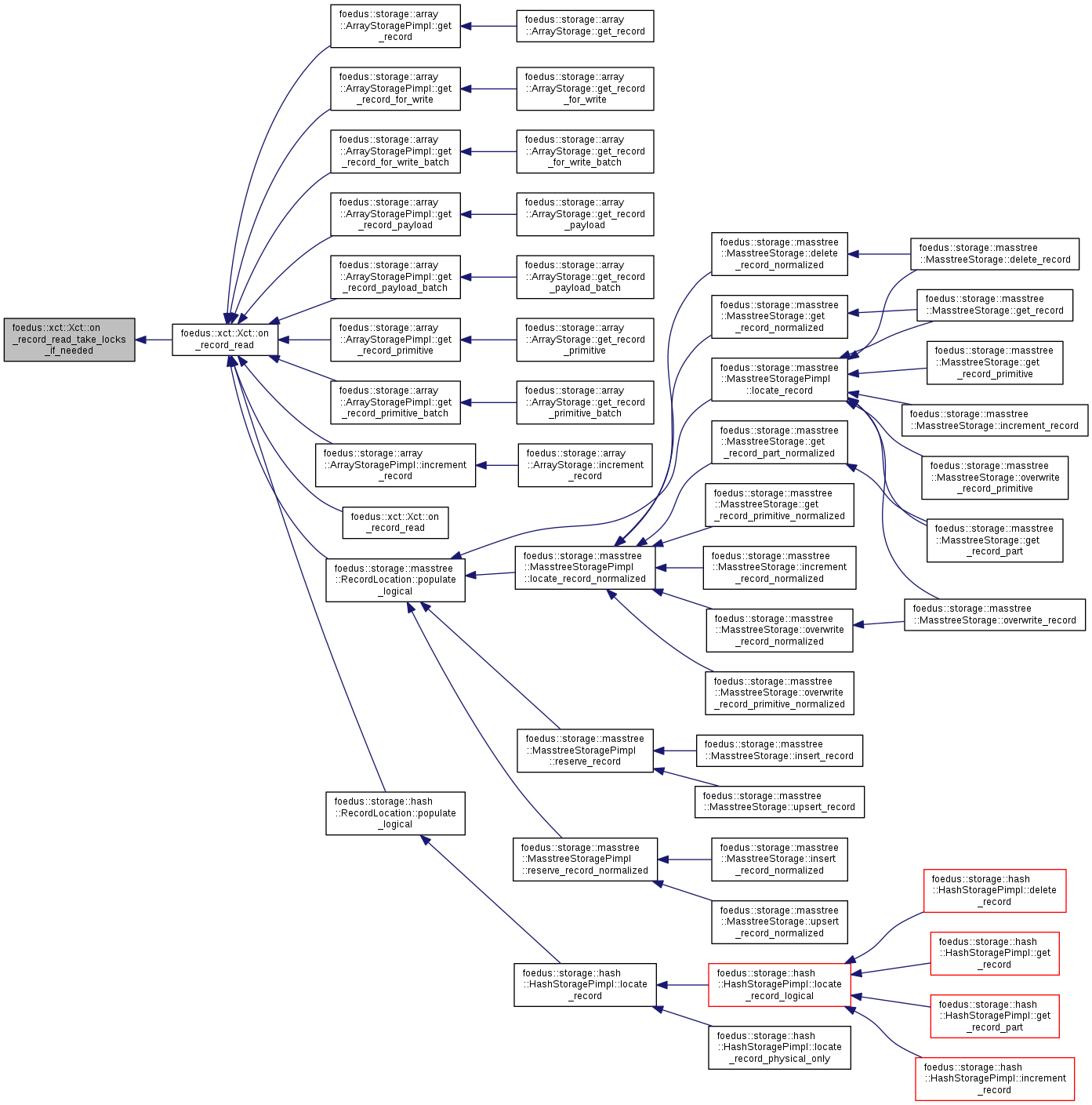

| ErrorCode | on_record_read (bool intended_for_write, RwLockableXctId *tid_address, XctId *observed_xid, ReadXctAccess **read_set_address, bool no_readset_if_moved=false, bool no_readset_if_next_layer=false) |

| The general logic invoked for every record read. More... | |

| ErrorCode | on_record_read (bool intended_for_write, RwLockableXctId *tid_address, bool no_readset_if_moved=false, bool no_readset_if_next_layer=false) |

| Shortcut for a case when you don't need observed_xid/read_set_address back. More... | |

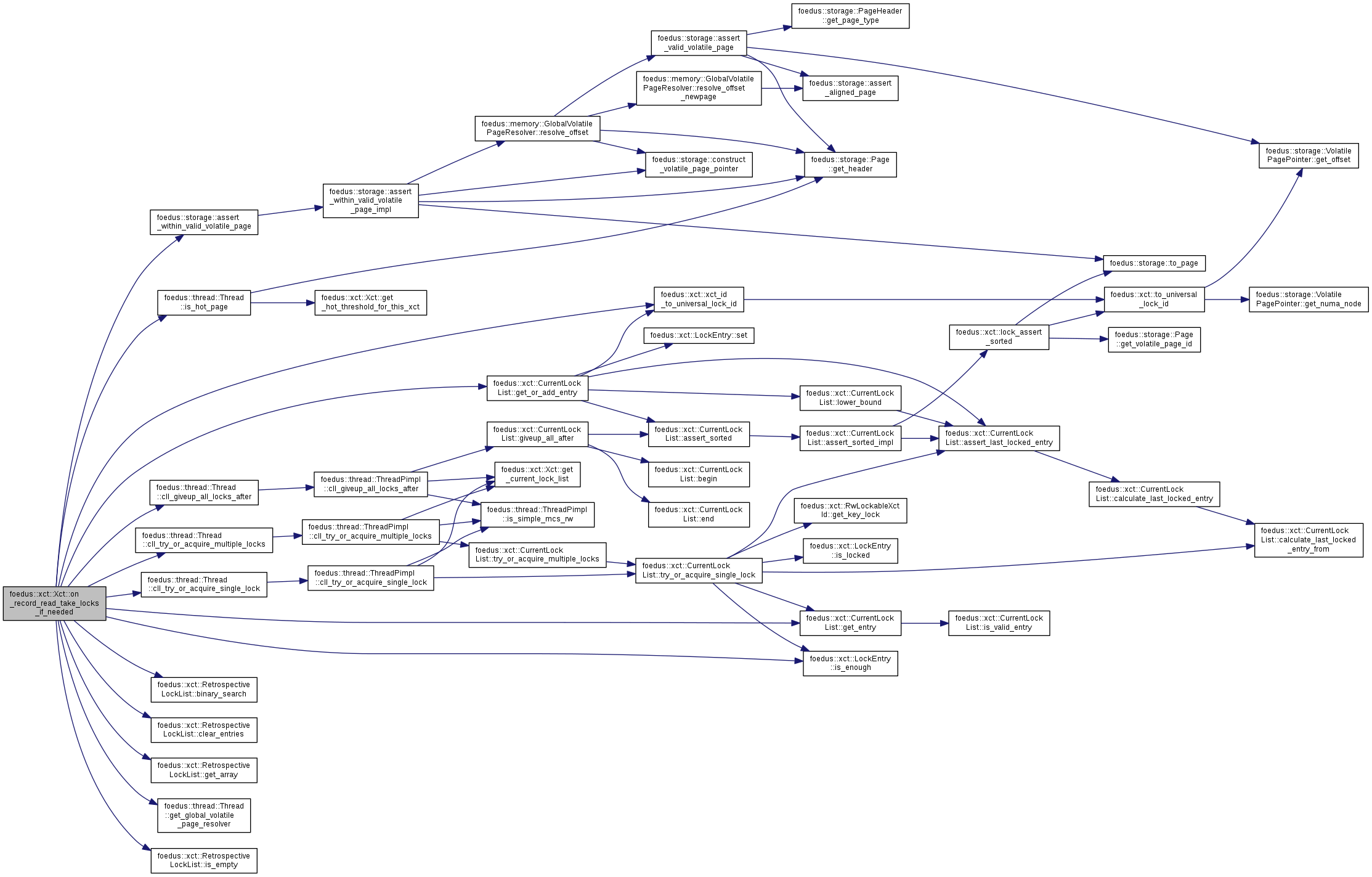

| void | on_record_read_take_locks_if_needed (bool intended_for_write, const storage::Page *page_address, UniversalLockId lock_id, RwLockableXctId *tid_address) |

| subroutine of on_record_read() to take lock(s). More... | |

| ErrorCode | add_related_write_set (ReadXctAccess *related_read_set, RwLockableXctId *tid_address, char *payload_address, log::RecordLogType *log_entry) |

| Registers a write-set related to an existing read-set. More... | |

| ErrorCode | add_to_read_set (storage::StorageId storage_id, XctId observed_owner_id, RwLockableXctId *owner_id_address, ReadXctAccess **read_set_address) |

| Add the given record to the read set of this transaction. More... | |

| ErrorCode | add_to_read_set (storage::StorageId storage_id, XctId observed_owner_id, UniversalLockId owner_lock_id, RwLockableXctId *owner_id_address, ReadXctAccess **read_set_address) |

| Use this in case you already have owner_lock_id. More... | |

| ErrorCode | add_to_write_set (storage::StorageId storage_id, RwLockableXctId *owner_id_address, char *payload_address, log::RecordLogType *log_entry) |

| Add the given record to the write set of this transaction. More... | |

| ErrorCode | add_to_write_set (storage::StorageId storage_id, storage::Record *record, log::RecordLogType *log_entry) |

| Add the given record to the write set of this transaction. More... | |

| ErrorCode | add_to_read_and_write_set (storage::StorageId storage_id, XctId observed_owner_id, RwLockableXctId *owner_id_address, char *payload_address, log::RecordLogType *log_entry) |

| Add a pair of read and write set of this transaction. More... | |

| ErrorCode | add_to_lock_free_read_set (storage::StorageId storage_id, XctId observed_owner_id, RwLockableXctId *owner_id_address) |

| Add the given record to the special read-set that is not placed in usual data pages. More... | |

| ErrorCode | add_to_lock_free_write_set (storage::StorageId storage_id, log::RecordLogType *log_entry) |

| Add the given log to the lock-free write set of this transaction. More... | |

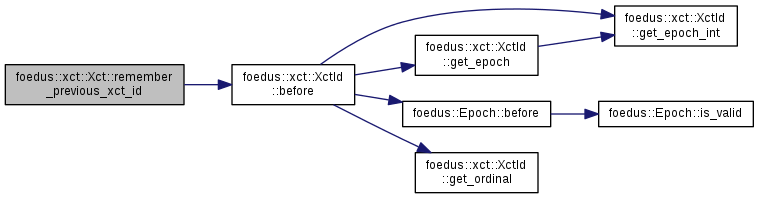

| void | remember_previous_xct_id (XctId new_id) |

| ErrorCode | acquire_local_work_memory (uint32_t size, void **out, uint32_t alignment=8) |

| Get a tentative work memory of the specified size from pre-allocated thread-private memory. More... | |

| xct::CurrentLockList * | get_current_lock_list () |

| const xct::CurrentLockList * | get_current_lock_list () const |

| xct::RetrospectiveLockList * | get_retrospective_lock_list () |

| bool | assert_related_read_write () const __attribute__((always_inline)) |

| This debug method checks whether the related_read_ and related_write_ fileds in read/write sets are consistent. More... | |

Friends

| std::ostream & | operator<< (std::ostream &o, const Xct &v) |

enum foedus::xct::Xct::Constants

| Enumerator | |
|---|---|
| kMaxPointerSets | |
| kMaxPageVersionSets | |

Definition at line 60 of file xct.hpp.

foedus::xct::Xct::Xct (Engine *engine, thread::Thread *context, thread::ThreadId thread_id)

Definition at line 41 of file xct.cpp.

References foedus::storage::StorageOptions::kDefaultHotThreshold, foedus::xct::XctOptions::kDefaultHotThreshold, and foedus::xct::kSerializable.

|

delete |

|

inline |

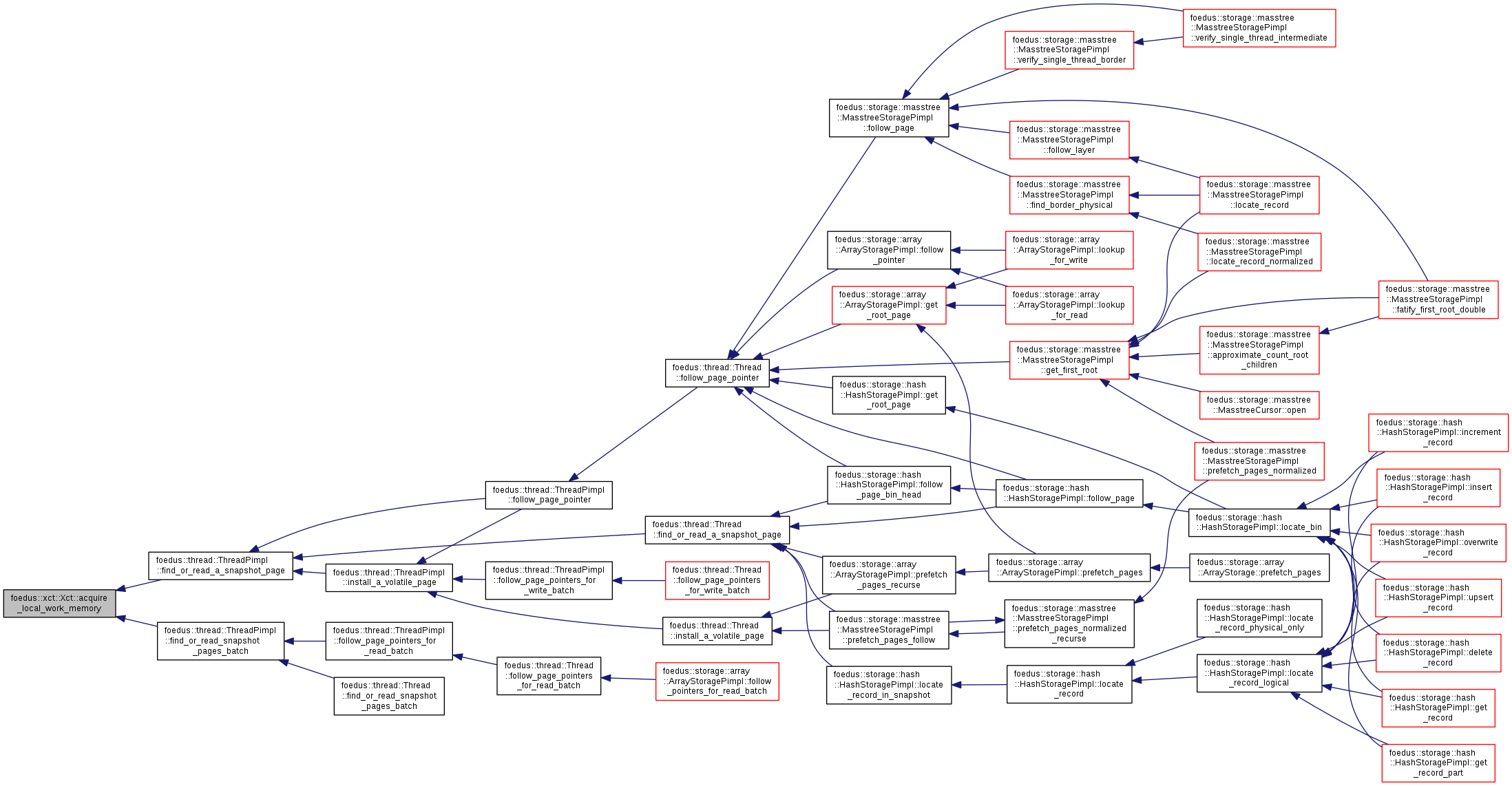

Get a tentative work memory of the specified size from pre-allocated thread-private memory.

The local work memory is recycled after the current transaction.
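The recycling behavior described above resembles a bump allocator over a fixed buffer. The following is a minimal sketch under that assumption, not the FOEDUS implementation: `LocalWorkMemory`, `acquire()`, `recycle()`, and `used()` are hypothetical names, and the buffer base is assumed to be aligned at least as strictly as any requested alignment.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a pre-allocated, thread-private work memory.
class LocalWorkMemory {
 public:
  LocalWorkMemory(char* base, uint32_t size) : base_(base), size_(size), cur_(0) {}

  // Hands out `size` bytes at the requested alignment (must be a power of two).
  // Returns false when the remaining memory is too small.
  bool acquire(uint32_t size, void** out, uint32_t alignment = 8) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    // Round the cursor up to the next multiple of the alignment.
    uint64_t mask = static_cast<uint64_t>(alignment - 1);
    uint64_t begin = (static_cast<uint64_t>(cur_) + mask) & ~mask;
    if (begin + size > size_) {
      return false;
    }
    *out = base_ + begin;
    cur_ = static_cast<uint32_t>(begin + size);
    return true;
  }

  // Called when the transaction ends: everything handed out becomes reusable.
  void recycle() { cur_ = 0; }

  uint32_t used() const { return cur_; }

 private:
  char* base_;
  uint32_t size_;
  uint32_t cur_;
};
```

Because nothing is freed individually, an allocation costs only a few arithmetic operations, which is presumably why the memory is described as tentative: pointers obtained this way must not be kept past the current transaction.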

Definition at line 397 of file xct.hpp.

References foedus::kErrorCodeOk, foedus::kErrorCodeXctNoMoreLocalWorkMemory, and UNLIKELY.

Referenced by foedus::thread::ThreadPimpl::find_or_read_a_snapshot_page(), and foedus::thread::ThreadPimpl::find_or_read_snapshot_pages_batch().

|

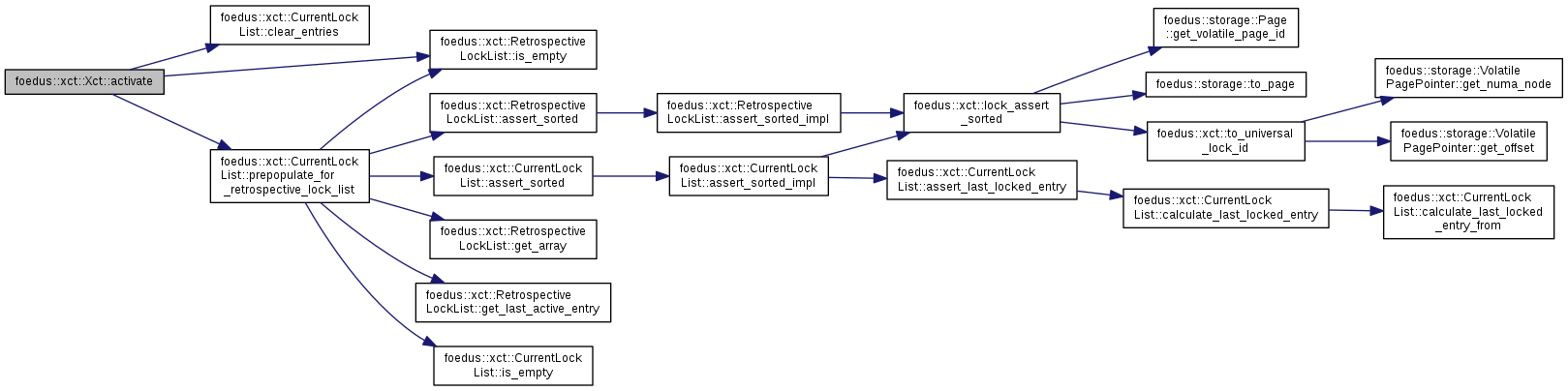

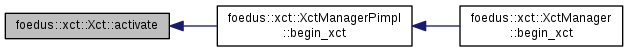

inline |

Begins the transaction.

Definition at line 79 of file xct.hpp.

References ASSERT_ND, foedus::xct::CurrentLockList::clear_entries(), foedus::xct::RetrospectiveLockList::is_empty(), and foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list().

Referenced by foedus::xct::XctManagerPimpl::begin_xct().

| ErrorCode foedus::xct::Xct::add_related_write_set | ( | ReadXctAccess * | related_read_set, |

| RwLockableXctId * | tid_address, | ||

| char * | payload_address, | ||

| log::RecordLogType * | log_entry | ||

| ) |

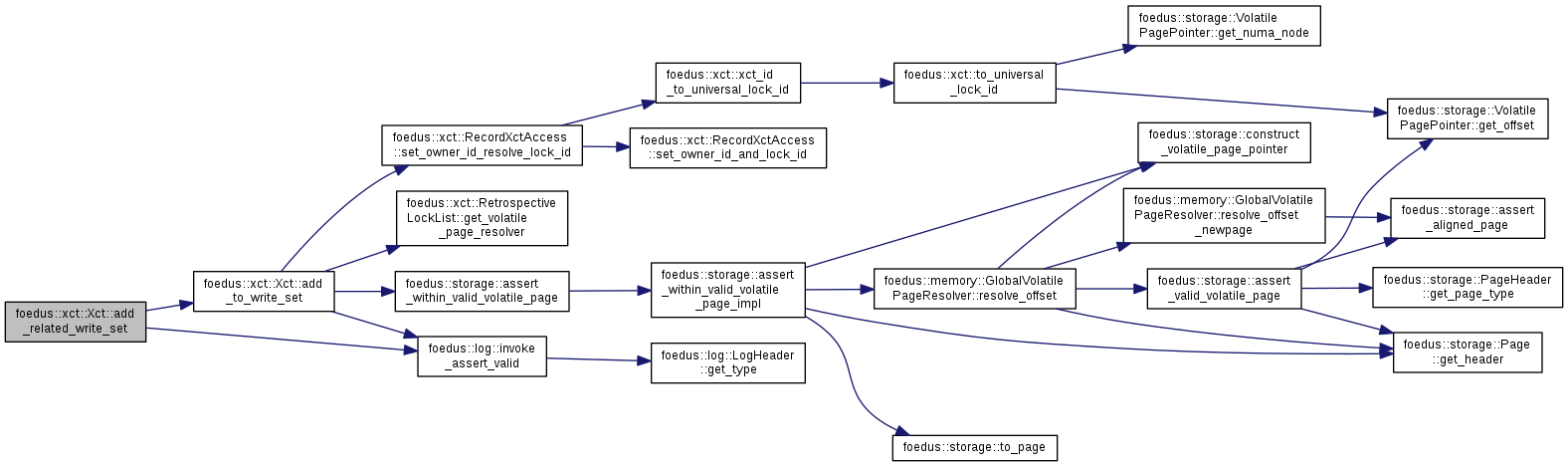

Registers a write-set related to an existing read-set.

This is typically invoked after on_record_read(), which returns the read-set address.
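To illustrate what "related" means here, the sketch below links a read-set entry and a write-set entry to each other and checks that the links agree. It is an assumption-laden simplification: `ReadAccess`, `WriteAccess`, `link_related()`, and `is_consistent()` are hypothetical names, loosely modeled on the related_read_ and related_write_ members referenced on this page.

```cpp
#include <cassert>

struct WriteAccess;

// Hypothetical, stripped-down read-set entry.
struct ReadAccess {
  const void* owner;           // address of the record's TID
  WriteAccess* related_write;  // nullptr if the record is only read
};

// Hypothetical, stripped-down write-set entry.
struct WriteAccess {
  const void* owner;
  ReadAccess* related_read;  // nullptr if the write has no preceding read
};

// Links a new write to the read-set entry taken earlier for the same record.
void link_related(ReadAccess* read, WriteAccess* write) {
  assert(read->owner == write->owner);  // both must refer to the same record
  read->related_write = write;
  write->related_read = read;
}

// The two links must point at each other and at the same record.
bool is_consistent(const ReadAccess& read) {
  if (read.related_write == nullptr) {
    return true;
  }
  return read.related_write->related_read == &read &&
         read.related_write->owner == read.owner;
}
```

Keeping both directions lets commit-time code move from a write to the version it observed (and back) without searching either set.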

Definition at line 506 of file xct.cpp.

References add_to_write_set(), ASSERT_ND, CHECK_ERROR_CODE, foedus::log::invoke_assert_valid(), foedus::kErrorCodeOk, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::WriteXctAccess::related_read_, foedus::xct::ReadXctAccess::related_write_, and foedus::xct::RecordXctAccess::storage_id_.

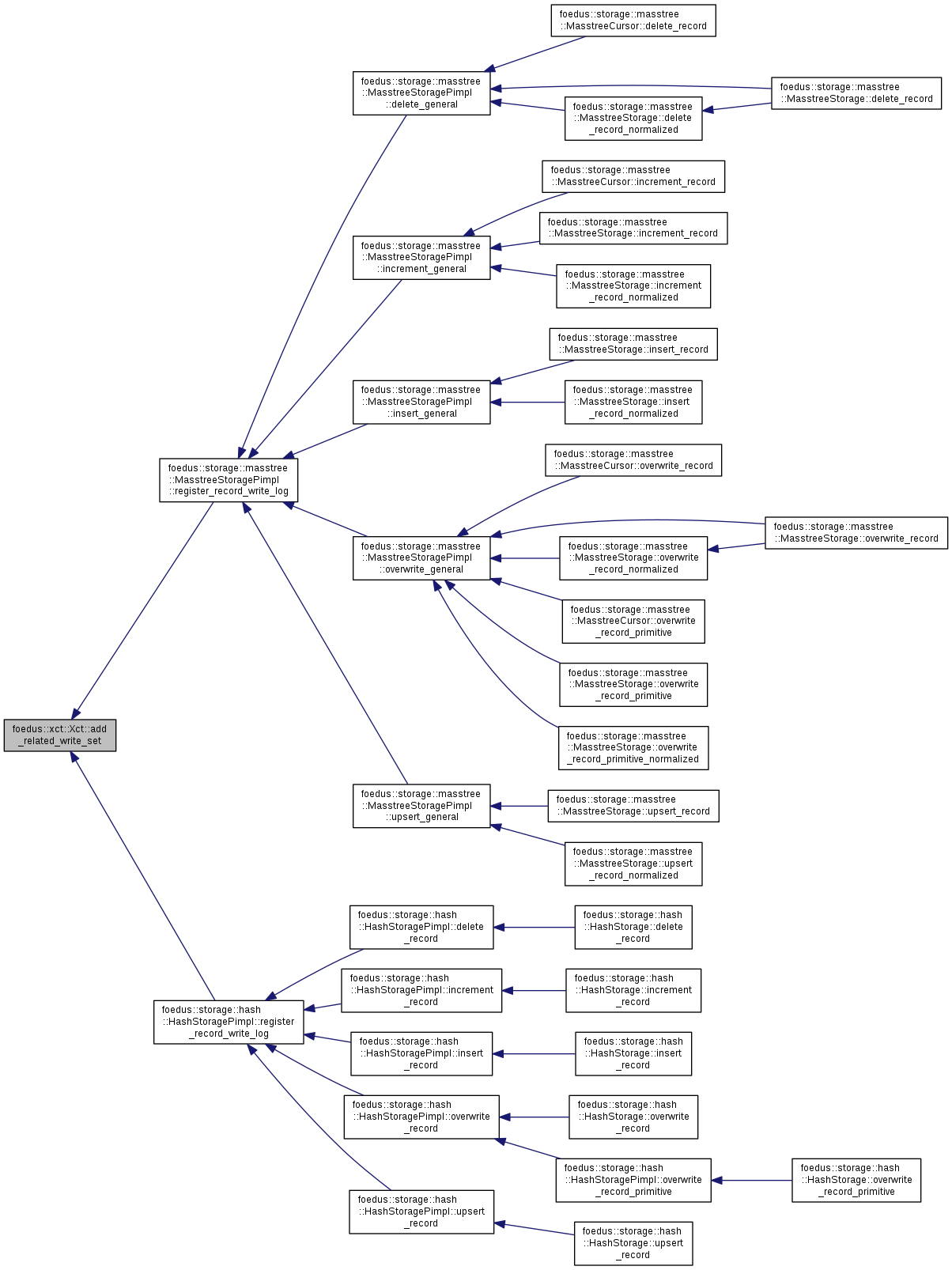

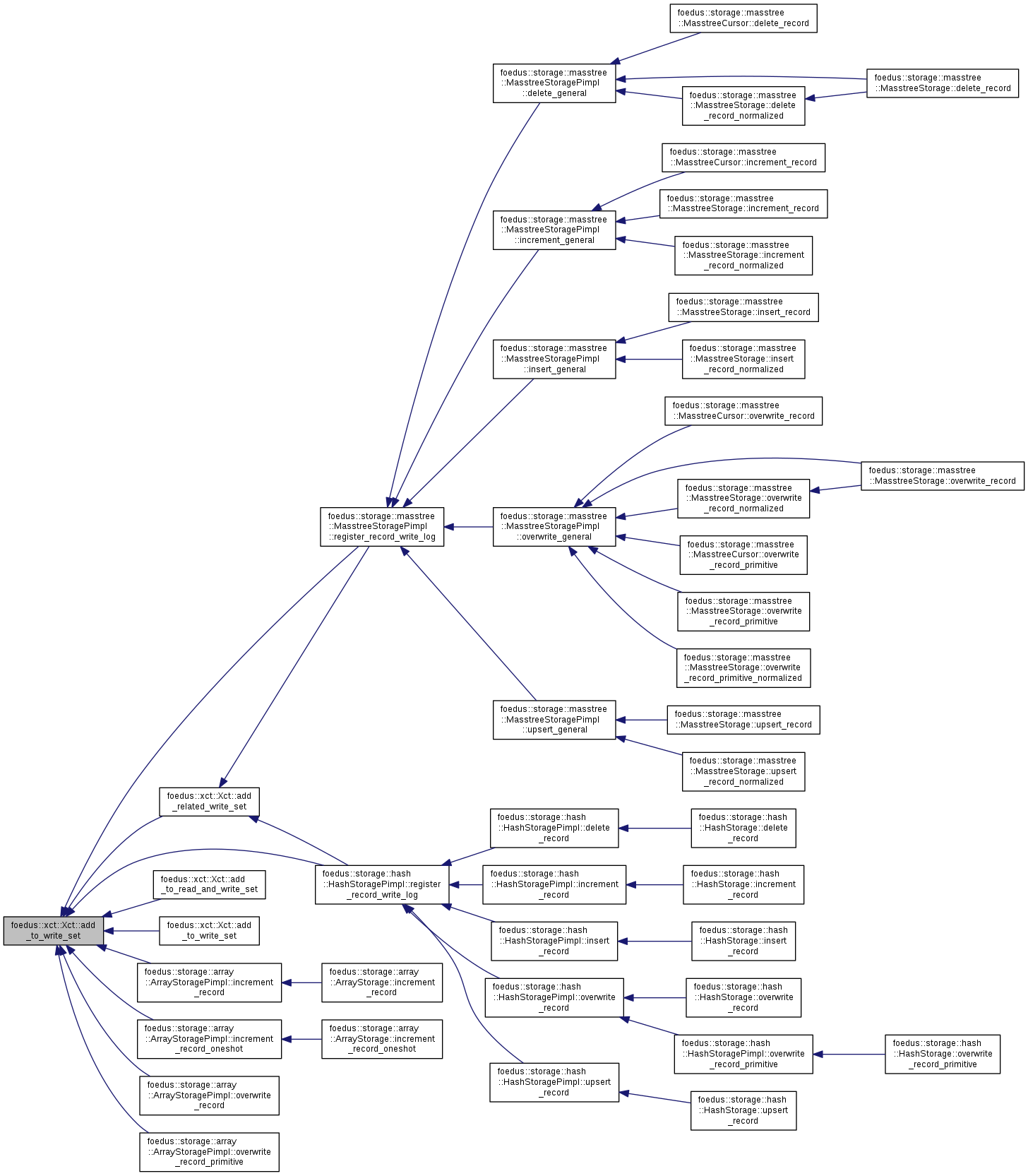

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log(), and foedus::storage::hash::HashStoragePimpl::register_record_write_log().

ErrorCode foedus::xct::Xct::add_to_lock_free_read_set (storage::StorageId storage_id, XctId observed_owner_id, RwLockableXctId *owner_id_address)

Add the given record to the special read-set that is not placed in usual data pages.

Definition at line 532 of file xct.cpp.

References ASSERT_ND, foedus::kErrorCodeOk, foedus::kErrorCodeXctReadSetOverflow, foedus::xct::kSerializable, foedus::xct::LockFreeReadXctAccess::observed_owner_id_, foedus::xct::LockFreeReadXctAccess::owner_id_address_, foedus::xct::LockFreeReadXctAccess::storage_id_, and UNLIKELY.

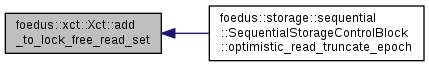

Referenced by foedus::storage::sequential::SequentialStorageControlBlock::optimistic_read_truncate_epoch().

ErrorCode foedus::xct::Xct::add_to_lock_free_write_set (storage::StorageId storage_id, log::RecordLogType *log_entry)

Add the given log to the lock-free write set of this transaction.

Definition at line 551 of file xct.cpp.

References ASSERT_ND, foedus::log::invoke_assert_valid(), foedus::kErrorCodeOk, foedus::kErrorCodeXctWriteSetOverflow, foedus::xct::LockFreeWriteXctAccess::log_entry_, foedus::xct::LockFreeWriteXctAccess::storage_id_, and UNLIKELY.

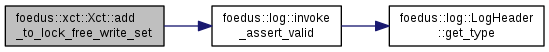

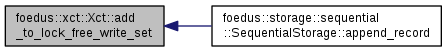

Referenced by foedus::storage::sequential::SequentialStorage::append_record().

ErrorCode foedus::xct::Xct::add_to_page_version_set (const storage::PageVersion *version_address, storage::PageVersionStatus observed)

Add the given page version to the page version set of this transaction.

This is similar to pointer set. The difference is that this remembers the PageVersion value we observed when we accessed the page. This can capture many more concurrency issues in the page because PageVersion contains many flags and counters. However, PageVersionAccess can't be used if the page itself might be swapped.

Both PointerAccess and PageVersionAccess can be considered a "node set" in the sense of [TU2013], but they serve slightly different purposes.
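The idea of remembering an observed version and re-checking it later can be sketched as follows. This is a single-threaded illustration under stated assumptions, not the FOEDUS code: `VersionSet`, `VersionObservation`, `add()`, and `verify()` are hypothetical, a plain `uint64_t` stands in for the page version, and the capacity is deliberately tiny.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for one entry of a page version set.
struct VersionObservation {
  const uint64_t* address;  // where the version word lives
  uint64_t observed;        // value seen when the page was accessed
};

class VersionSet {
 public:
  static const uint32_t kCapacity = 4;  // tiny on purpose for the example

  // Remembers the observed version; fails when the fixed-size set is full.
  bool add(const uint64_t* address, uint64_t observed) {
    if (size_ >= kCapacity) {
      return false;
    }
    entries_[size_].address = address;
    entries_[size_].observed = observed;
    ++size_;
    return true;
  }

  // Commit-time check: every version must still equal what was observed.
  bool verify() const {
    for (uint32_t i = 0; i < size_; ++i) {
      if (*entries_[i].address != entries_[i].observed) {
        return false;
      }
    }
    return true;
  }

 private:
  VersionObservation entries_[kCapacity];
  uint32_t size_ = 0;
};
```

The entry stores the address of the version word, so the check is meaningful only while that page stays in place, which matches the caveat above about pages that might be swapped.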

Definition at line 242 of file xct.cpp.

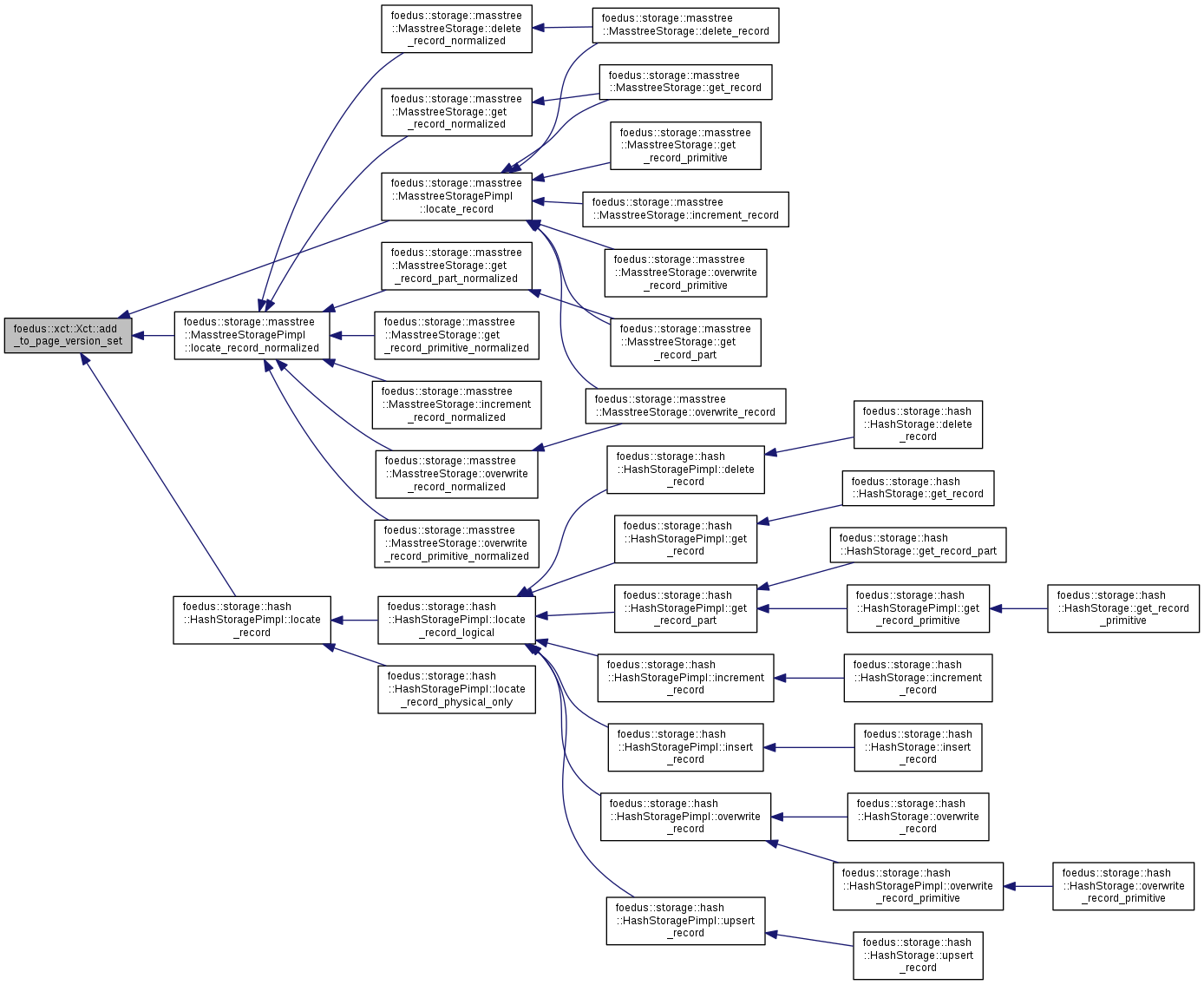

References foedus::xct::PageVersionAccess::address_, ASSERT_ND, foedus::kErrorCodeOk, foedus::kErrorCodeXctPageVersionSetOverflow, kMaxPointerSets, foedus::xct::kSerializable, foedus::xct::PageVersionAccess::observed_, and UNLIKELY.

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record(), and foedus::storage::masstree::MasstreeStoragePimpl::locate_record_normalized().

ErrorCode foedus::xct::Xct::add_to_pointer_set (const storage::VolatilePagePointer *pointer_address, storage::VolatilePagePointer observed)

Add the given page pointer to the pointer set of this transaction.

You must call this method in the following cases;

To clarify, the first case does not apply to storage types that don't swap volatile pointers. So far, only Masstree Storage has such a swapping for root pages. All other storage types thus don't have to take pointer sets for this.

The second case doesn't apply to snapshot pointers once we follow a snapshot pointer in the tree because everything is assured to be stable once we follow a snapshot pointer.

Definition at line 198 of file xct.cpp.

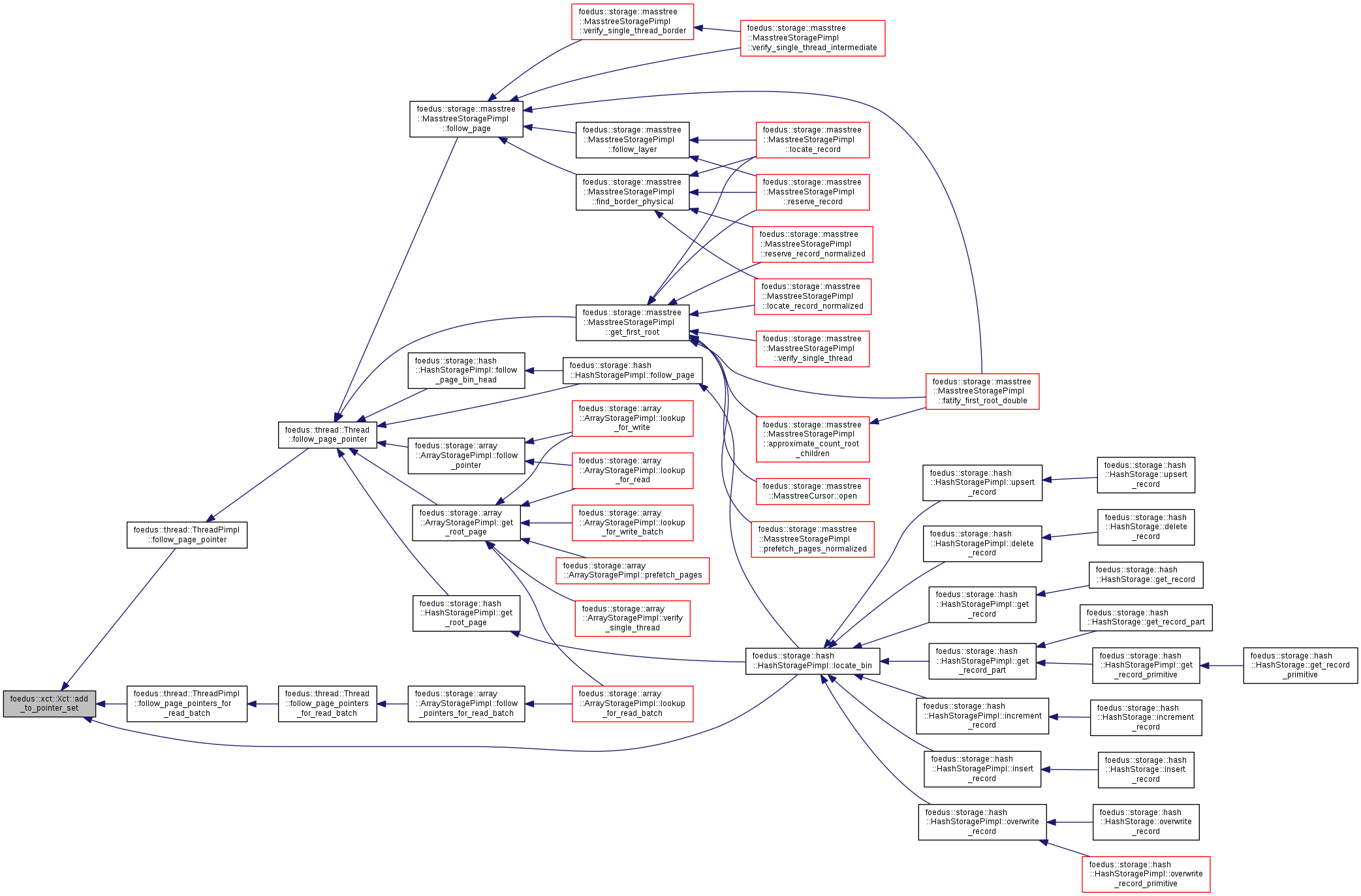

References foedus::xct::PointerAccess::address_, ASSERT_ND, foedus::kErrorCodeOk, foedus::kErrorCodeXctPointerSetOverflow, kMaxPointerSets, foedus::xct::kSerializable, foedus::xct::PointerAccess::observed_, and UNLIKELY.

Referenced by foedus::thread::ThreadPimpl::follow_page_pointer(), foedus::thread::ThreadPimpl::follow_page_pointers_for_read_batch(), and foedus::storage::hash::HashStoragePimpl::locate_bin().

ErrorCode foedus::xct::Xct::add_to_read_and_write_set (storage::StorageId storage_id, XctId observed_owner_id, RwLockableXctId *owner_id_address, char *payload_address, log::RecordLogType *log_entry)

Add a pair of read and write set of this transaction.

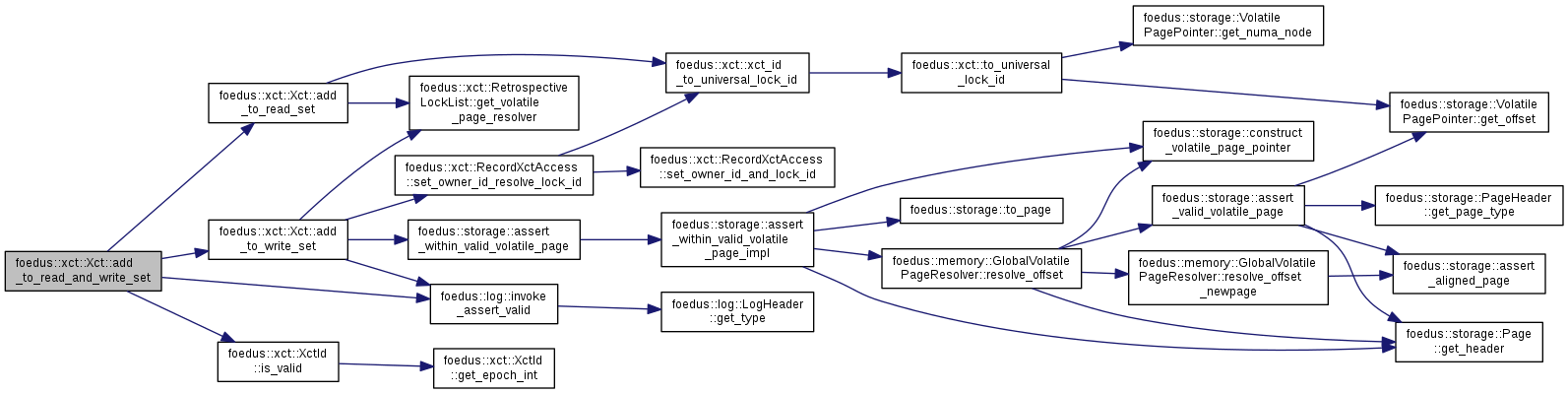

Definition at line 474 of file xct.cpp.

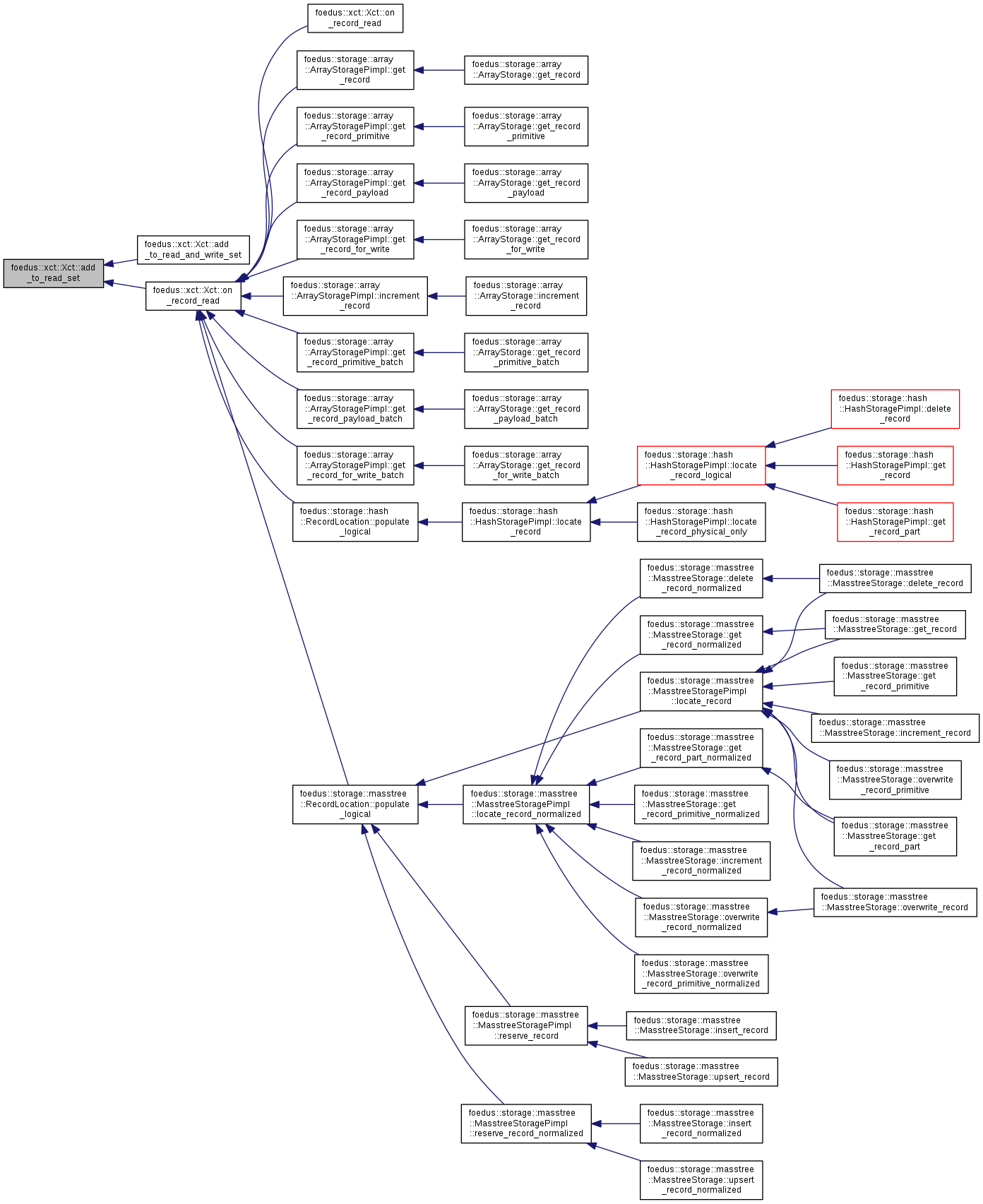

References add_to_read_set(), add_to_write_set(), ASSERT_ND, CHECK_ERROR_CODE, foedus::log::invoke_assert_valid(), foedus::xct::XctId::is_valid(), and foedus::kErrorCodeOk.

| ErrorCode foedus::xct::Xct::add_to_read_set | ( | storage::StorageId | storage_id, |

| XctId | observed_owner_id, | ||

| RwLockableXctId * | owner_id_address, | ||

| ReadXctAccess ** | read_set_address | ||

| ) |

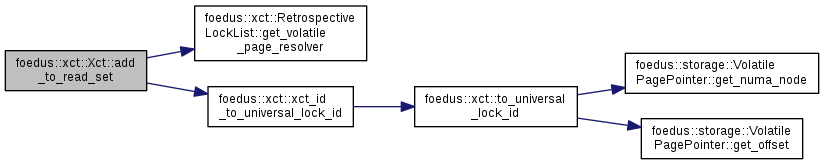

Add the given record to the read set of this transaction.

You must call this method BEFORE reading the data, otherwise it violates the commit protocol.
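The ordering requirement can be illustrated with a small optimistic-read sketch. It is hypothetical and simplified: `Record`, `ReadEntry`, `optimistic_read()`, and `verify()` are not FOEDUS names, a single integer stands in for the TID, and the payload is read without the additional care a truly concurrent implementation needs.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical record: a version word (TID) followed by the data.
struct Record {
  std::atomic<uint64_t> tid{0};
  uint64_t payload = 0;
};

// Hypothetical read-set entry.
struct ReadEntry {
  const Record* record;
  uint64_t observed_tid;
};

// Step 1: remember the TID. Step 2: only then read the data.
uint64_t optimistic_read(const Record& record, ReadEntry* entry) {
  entry->record = &record;
  entry->observed_tid = record.tid.load(std::memory_order_acquire);
  return record.payload;
}

// Commit-time verification: the TID must not have changed since step 1.
bool verify(const ReadEntry& entry) {
  return entry.record->tid.load(std::memory_order_acquire) == entry.observed_tid;
}
```

If the two steps were swapped, a writer could change the payload and the TID between them; the reader would then record the new TID alongside stale data, and verification would pass even though the read was inconsistent.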

Definition at line 395 of file xct.cpp.

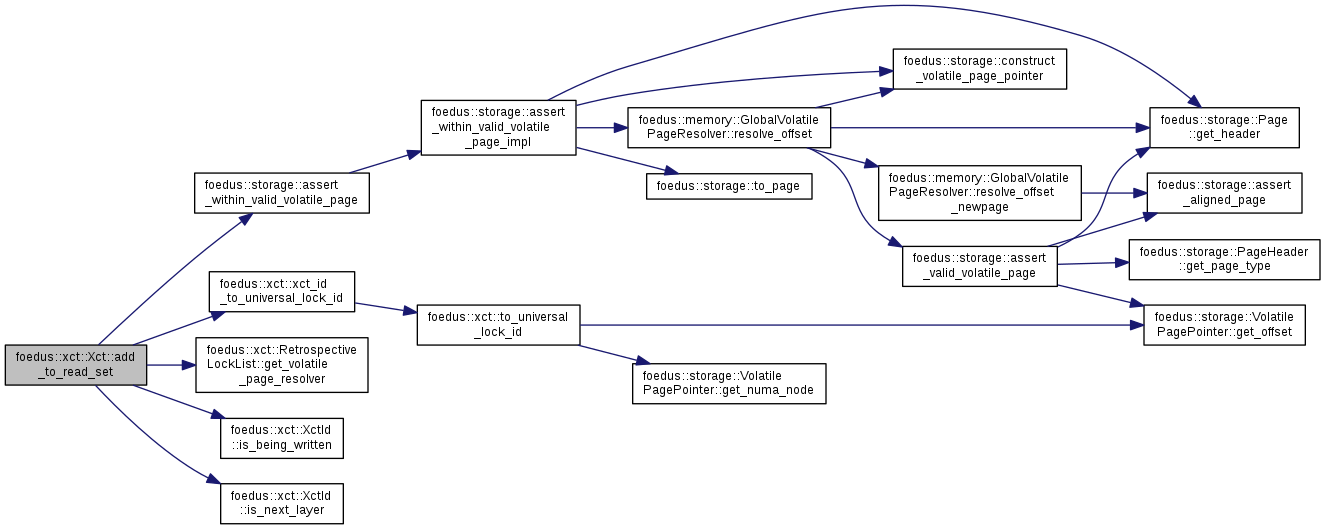

References foedus::xct::RetrospectiveLockList::get_volatile_page_resolver(), and foedus::xct::xct_id_to_universal_lock_id().

Referenced by add_to_read_and_write_set(), and on_record_read().

ErrorCode foedus::xct::Xct::add_to_read_set (storage::StorageId storage_id, XctId observed_owner_id, UniversalLockId owner_lock_id, RwLockableXctId *owner_id_address, ReadXctAccess **read_set_address)

Use this in case you already have owner_lock_id.

Slightly faster.

Definition at line 410 of file xct.cpp.

References ASSERT_ND, foedus::storage::assert_within_valid_volatile_page(), foedus::xct::RetrospectiveLockList::get_volatile_page_resolver(), foedus::xct::XctId::is_being_written(), foedus::xct::XctId::is_next_layer(), foedus::kErrorCodeOk, foedus::kErrorCodeXctReadSetOverflow, foedus::xct::RecordXctAccess::ordinal_, UNLIKELY, and foedus::xct::xct_id_to_universal_lock_id().

| ErrorCode foedus::xct::Xct::add_to_write_set | ( | storage::StorageId | storage_id, |

| RwLockableXctId * | owner_id_address, | ||

| char * | payload_address, | ||

| log::RecordLogType * | log_entry | ||

| ) |

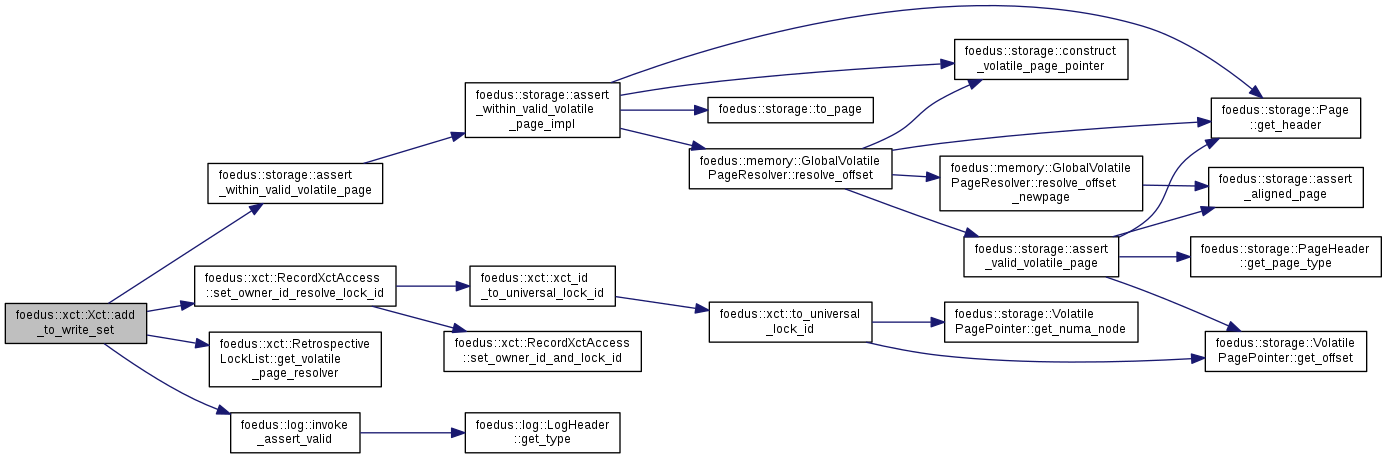

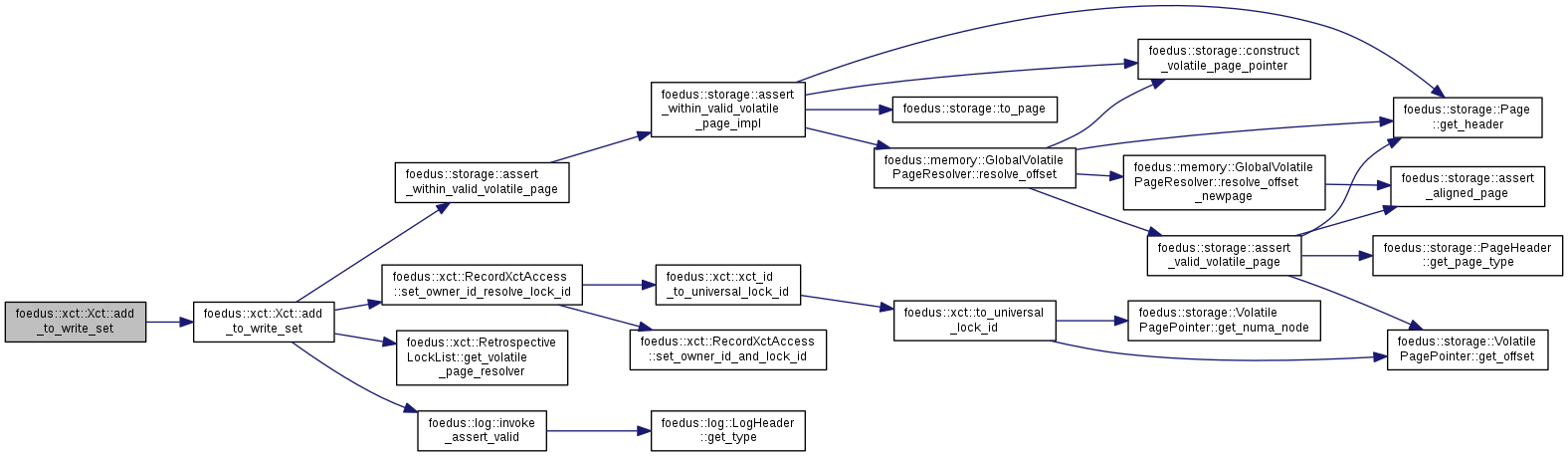

Add the given record to the write set of this transaction.

Definition at line 444 of file xct.cpp.

References ASSERT_ND, foedus::storage::assert_within_valid_volatile_page(), CXX11_NULLPTR, foedus::xct::RetrospectiveLockList::get_volatile_page_resolver(), foedus::log::invoke_assert_valid(), foedus::kErrorCodeOk, foedus::kErrorCodeXctWriteSetOverflow, foedus::xct::WriteXctAccess::log_entry_, foedus::xct::RecordXctAccess::ordinal_, foedus::xct::WriteXctAccess::payload_address_, foedus::xct::WriteXctAccess::related_read_, foedus::xct::RecordXctAccess::set_owner_id_resolve_lock_id(), foedus::xct::RecordXctAccess::storage_id_, and UNLIKELY.

Referenced by add_related_write_set(), add_to_read_and_write_set(), add_to_write_set(), foedus::storage::array::ArrayStoragePimpl::increment_record(), foedus::storage::array::ArrayStoragePimpl::increment_record_oneshot(), foedus::storage::array::ArrayStoragePimpl::overwrite_record(), foedus::storage::array::ArrayStoragePimpl::overwrite_record_primitive(), foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log(), and foedus::storage::hash::HashStoragePimpl::register_record_write_log().

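ErrorCode foedus::xct::Xct::add_to_write_set (storage::StorageId storage_id, storage::Record *record, log::RecordLogType *log_entry) [inline]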

Add the given record to the write set of this transaction.

Definition at line 354 of file xct.hpp.

References add_to_write_set(), foedus::storage::Record::owner_id_, and foedus::storage::Record::payload_.

|

inline |

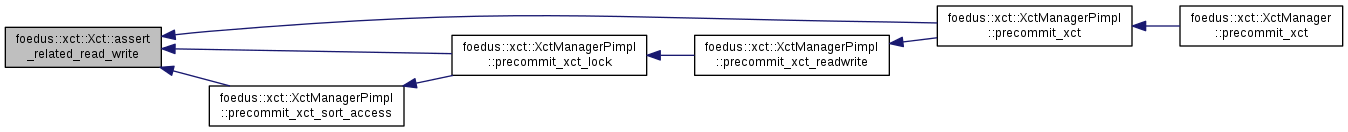

This debug method checks whether the related_read_ and related_write_ fileds in read/write sets are consistent.

This method is completely wiped out in release build.

Definition at line 538 of file xct.hpp.

References ASSERT_ND, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::WriteXctAccess::related_read_, and foedus::xct::ReadXctAccess::related_write_.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), and foedus::xct::XctManagerPimpl::precommit_xct_sort_access().

|

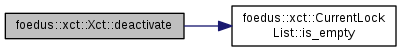

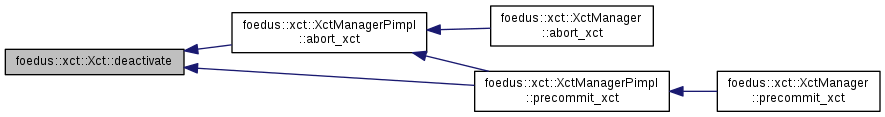

inline |

Closes the transaction.

Definition at line 108 of file xct.hpp.

References ASSERT_ND, and foedus::xct::CurrentLockList::is_empty().

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), and foedus::xct::XctManagerPimpl::precommit_xct().

|

inline |

|

inline |

Definition at line 413 of file xct.hpp.

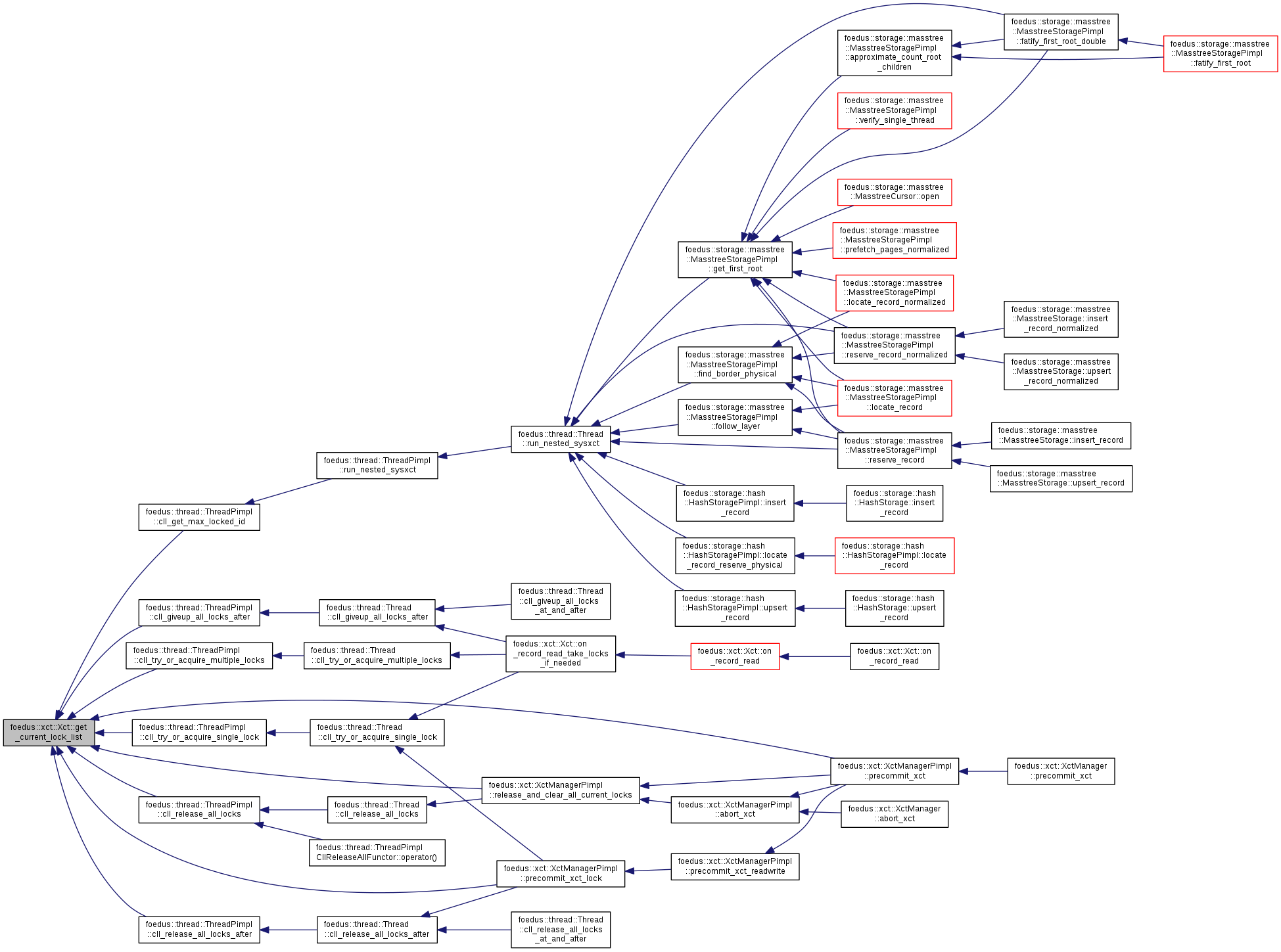

Referenced by foedus::thread::ThreadPimpl::cll_get_max_locked_id(), foedus::thread::ThreadPimpl::cll_giveup_all_locks_after(), foedus::thread::ThreadPimpl::cll_release_all_locks(), foedus::thread::ThreadPimpl::cll_release_all_locks_after(), foedus::thread::ThreadPimpl::cll_try_or_acquire_multiple_locks(), foedus::thread::ThreadPimpl::cll_try_or_acquire_single_lock(), foedus::xct::XctManagerPimpl::precommit_xct(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), and foedus::xct::XctManagerPimpl::release_and_clear_all_current_locks().

|

inline |

|

inline |

Definition at line 130 of file xct.hpp.

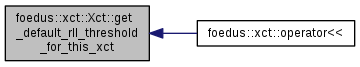

Referenced by foedus::xct::operator<<().

|

inline |

Definition at line 137 of file xct.hpp.

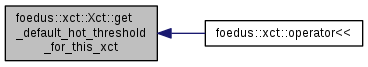

Referenced by foedus::xct::operator<<().

|

inline |

Definition at line 128 of file xct.hpp.

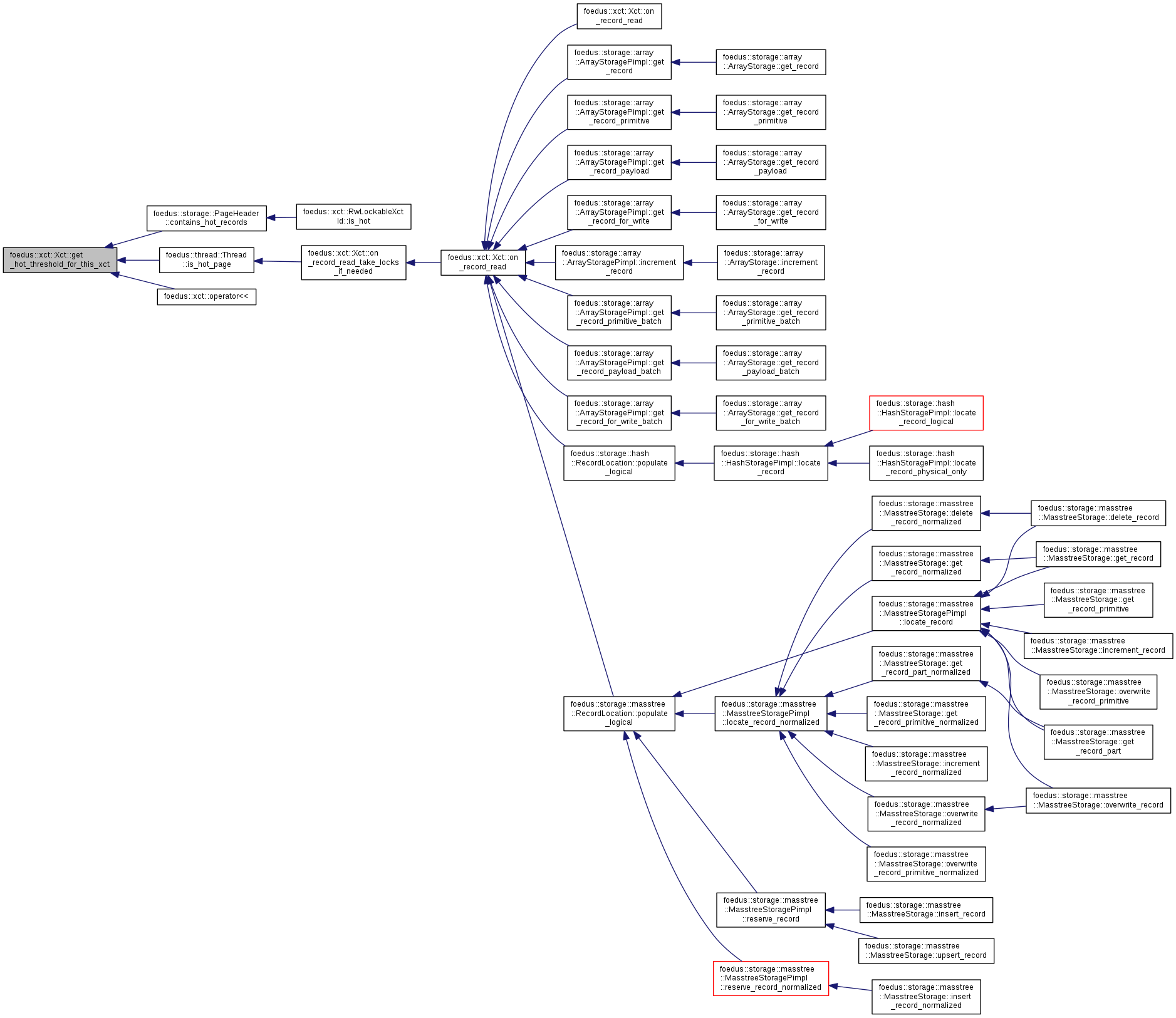

Referenced by foedus::storage::PageHeader::contains_hot_records(), foedus::thread::Thread::is_hot_page(), and foedus::xct::operator<<().

|

inline |

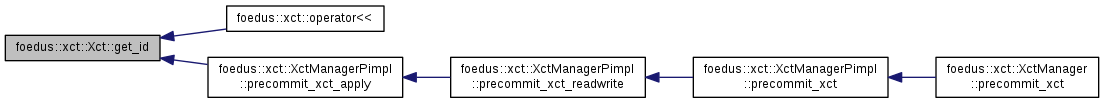

Returns the ID of this transaction, but note that it is not issued until commit time!

Definition at line 151 of file xct.hpp.

Referenced by foedus::xct::operator<<(), and foedus::xct::XctManagerPimpl::precommit_xct_apply().

|

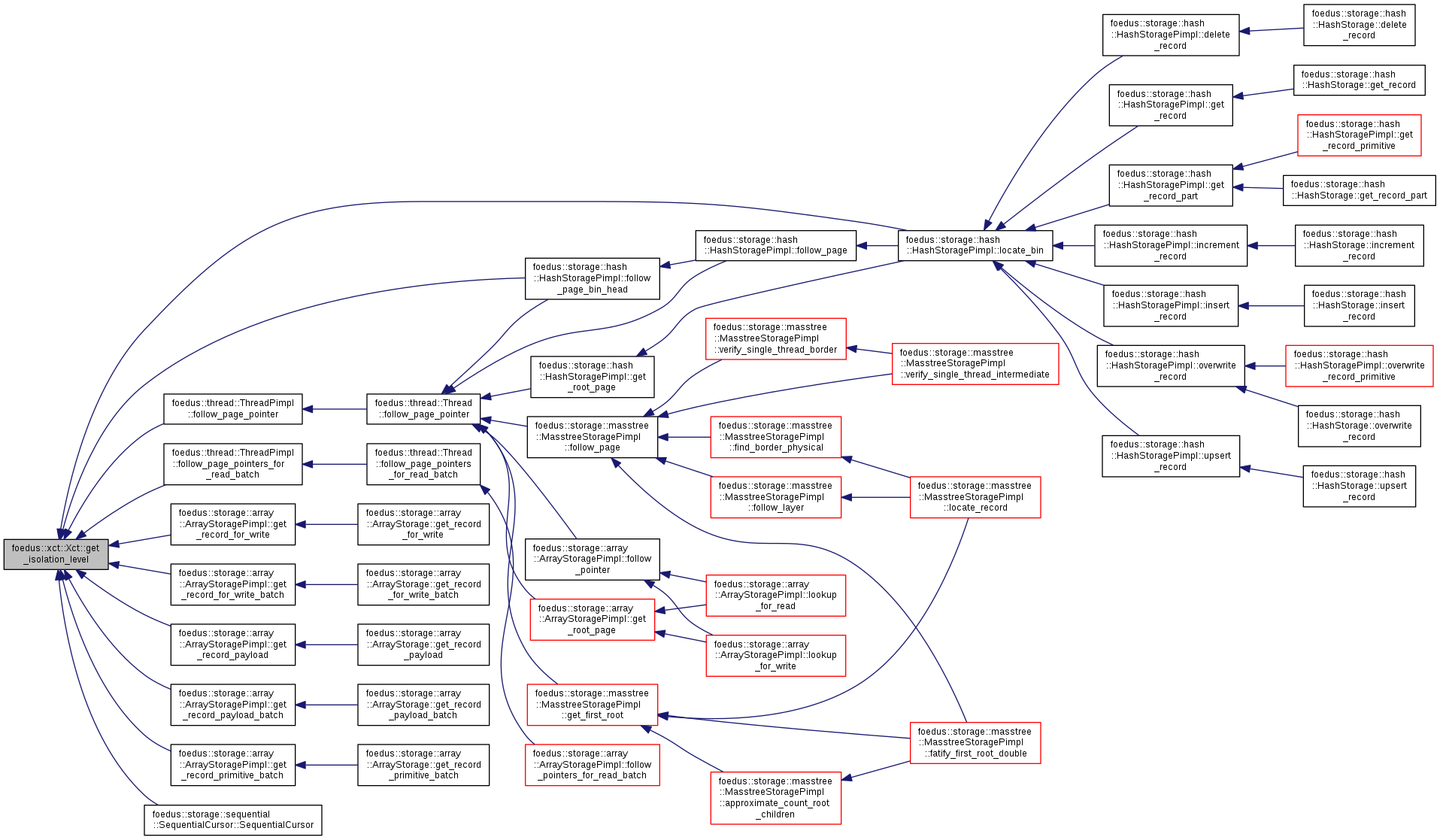

inline |

Returns the level of isolation for this transaction.

Definition at line 149 of file xct.hpp.

Referenced by foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::thread::ThreadPimpl::follow_page_pointer(), foedus::thread::ThreadPimpl::follow_page_pointers_for_read_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_payload(), foedus::storage::array::ArrayStoragePimpl::get_record_payload_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive_batch(), foedus::storage::hash::HashStoragePimpl::locate_bin(), and foedus::storage::sequential::SequentialCursor::SequentialCursor().

|

inline |

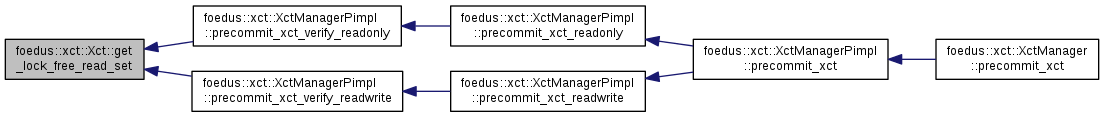

Definition at line 164 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite().

|

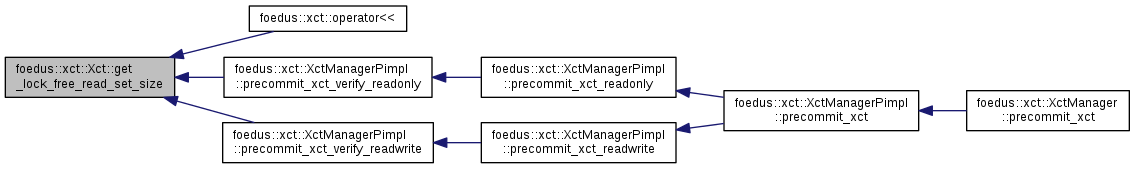

inline |

Definition at line 158 of file xct.hpp.

Referenced by foedus::xct::operator<<(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite().

|

inline |

Definition at line 165 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_apply().

|

inline |

Definition at line 159 of file xct.hpp.

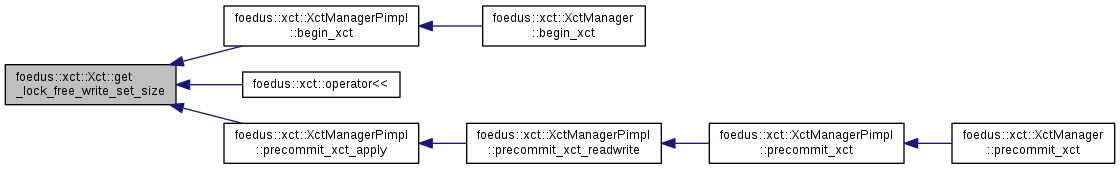

Referenced by foedus::xct::XctManagerPimpl::begin_xct(), foedus::xct::operator<<(), and foedus::xct::XctManagerPimpl::precommit_xct_apply().

|

inline |

Definition at line 116 of file xct.hpp.

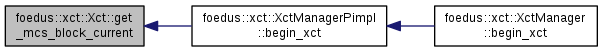

Referenced by foedus::xct::XctManagerPimpl::begin_xct().

|

inline |

Definition at line 161 of file xct.hpp.

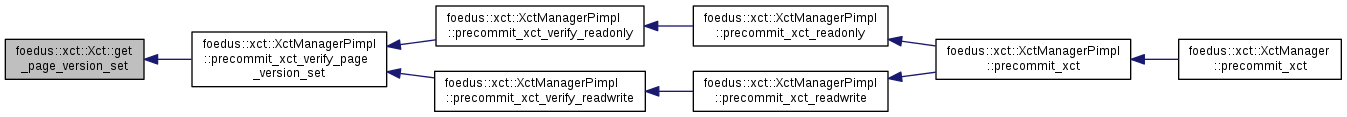

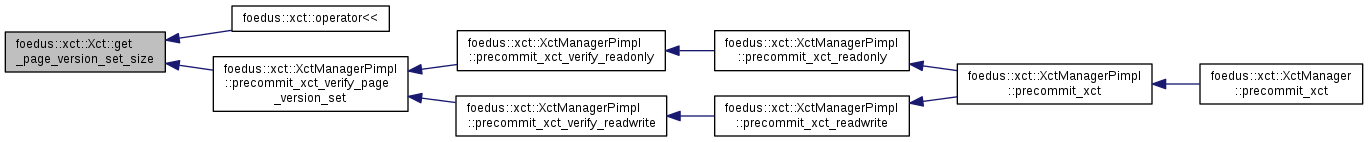

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_verify_page_version_set().

|

inline |

Definition at line 155 of file xct.hpp.

Referenced by foedus::xct::operator<<(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_page_version_set().

|

inline |

Definition at line 160 of file xct.hpp.

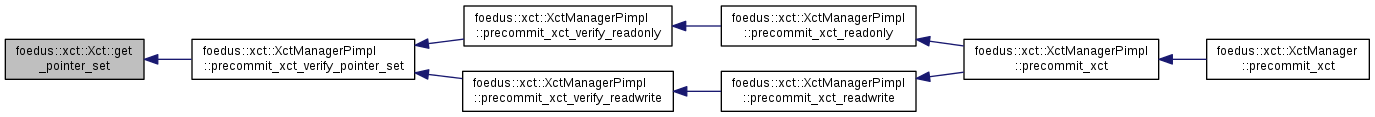

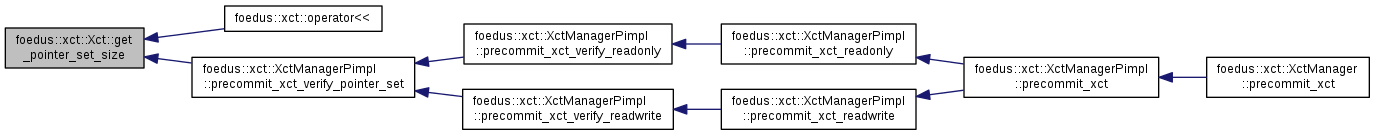

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_verify_pointer_set().

|

inline |

Definition at line 154 of file xct.hpp.

Referenced by foedus::xct::operator<<(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_pointer_set().

|

inline |

Definition at line 162 of file xct.hpp.

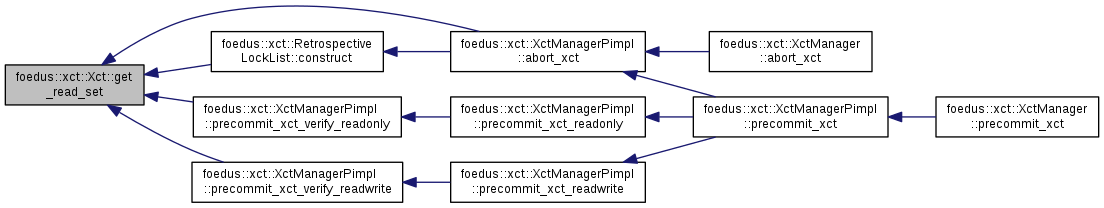

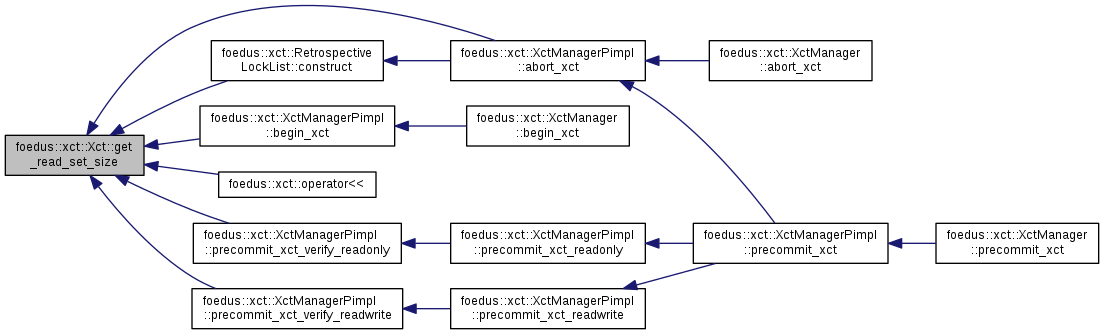

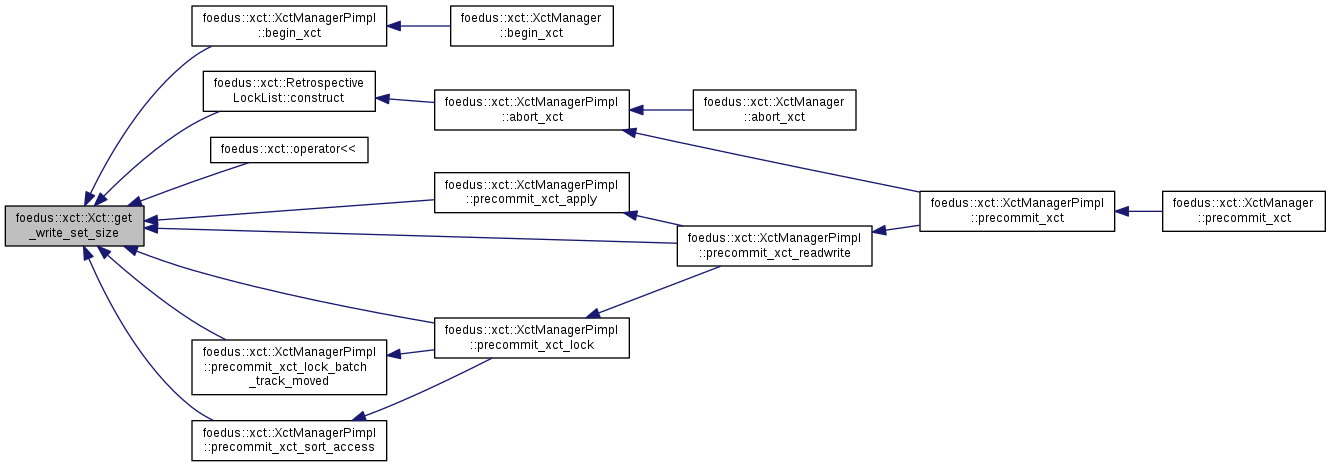

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite().

|

inline |

Definition at line 156 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::xct::XctManagerPimpl::begin_xct(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::operator<<(), foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly(), and foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite().

|

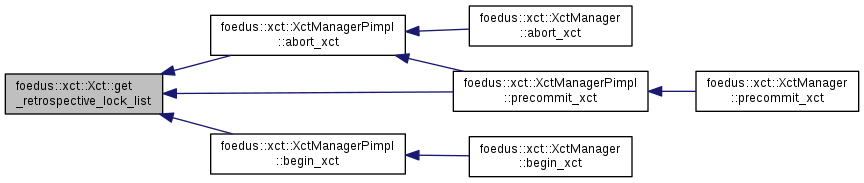

inline |

Definition at line 415 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::xct::XctManagerPimpl::begin_xct(), and foedus::xct::XctManagerPimpl::precommit_xct().

|

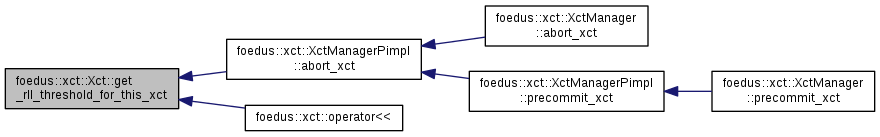

inline |

Definition at line 135 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), and foedus::xct::operator<<().

|

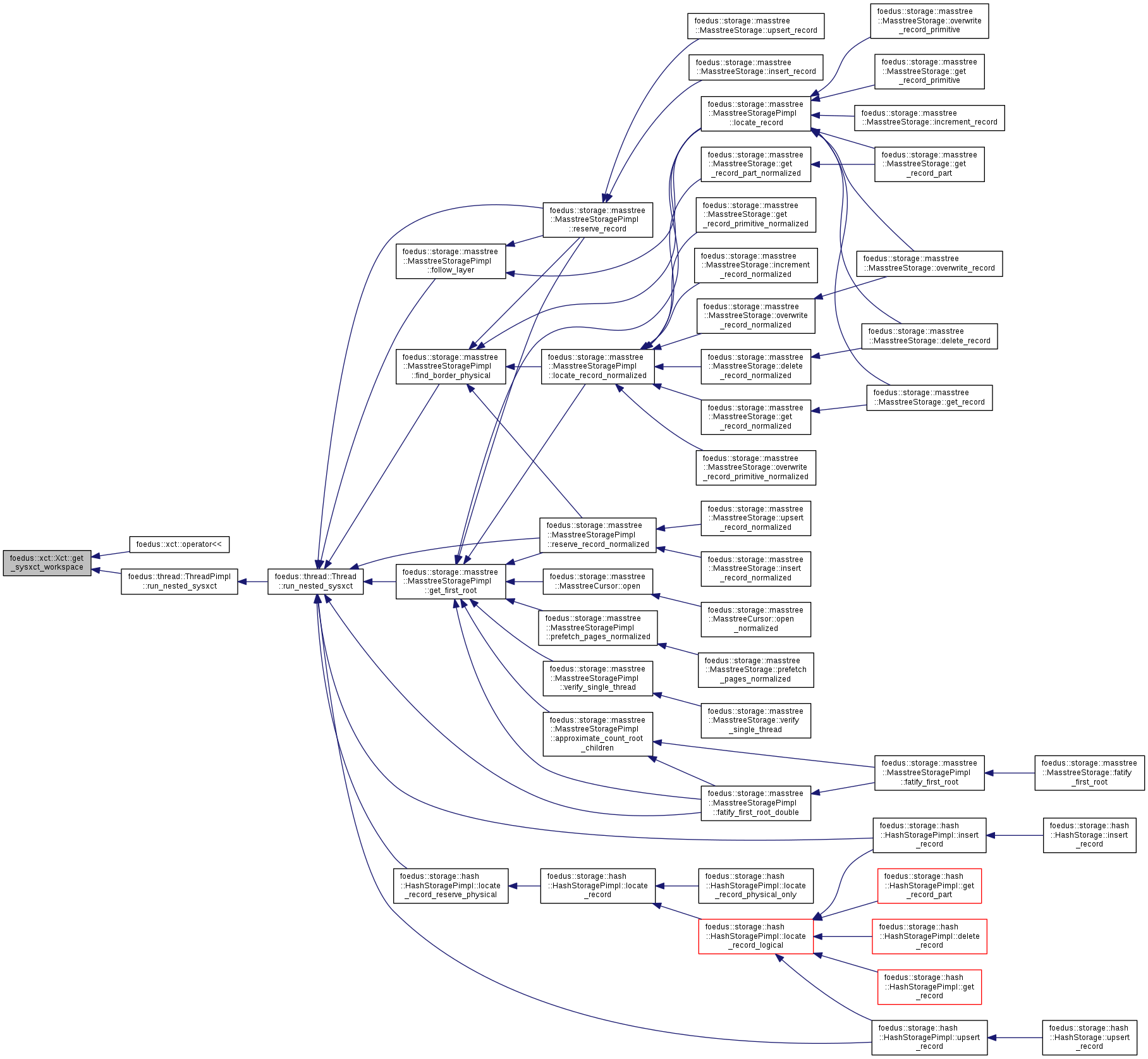

inline |

Definition at line 142 of file xct.hpp.

Referenced by foedus::xct::operator<<(), and foedus::thread::ThreadPimpl::run_nested_sysxct().

|

inline |

|

inline |

|

inline |

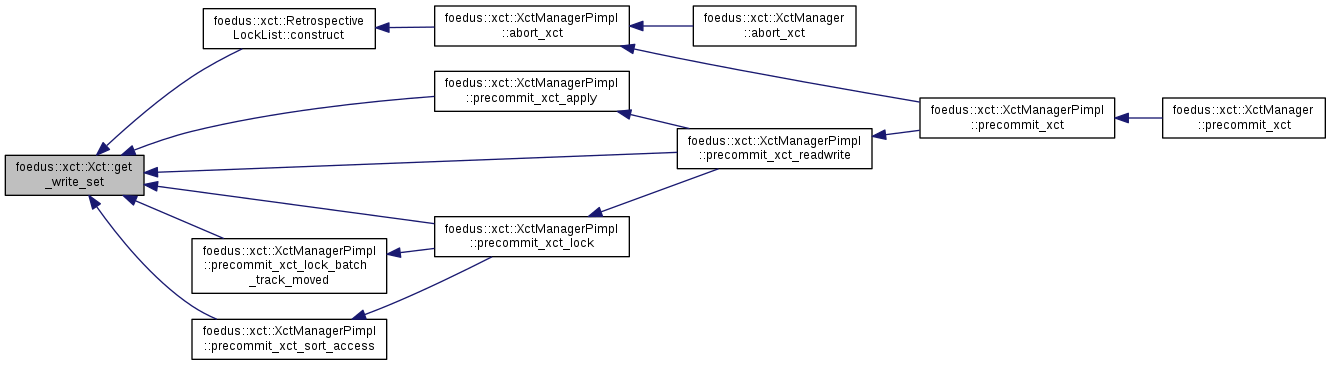

Definition at line 163 of file xct.hpp.

Referenced by foedus::xct::RetrospectiveLockList::construct(), foedus::xct::XctManagerPimpl::precommit_xct_apply(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), foedus::xct::XctManagerPimpl::precommit_xct_lock_batch_track_moved(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), and foedus::xct::XctManagerPimpl::precommit_xct_sort_access().

|

inline |

Definition at line 157 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::begin_xct(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::operator<<(), foedus::xct::XctManagerPimpl::precommit_xct_apply(), foedus::xct::XctManagerPimpl::precommit_xct_lock(), foedus::xct::XctManagerPimpl::precommit_xct_lock_batch_track_moved(), foedus::xct::XctManagerPimpl::precommit_xct_readwrite(), and foedus::xct::XctManagerPimpl::precommit_xct_sort_access().

|

inline |

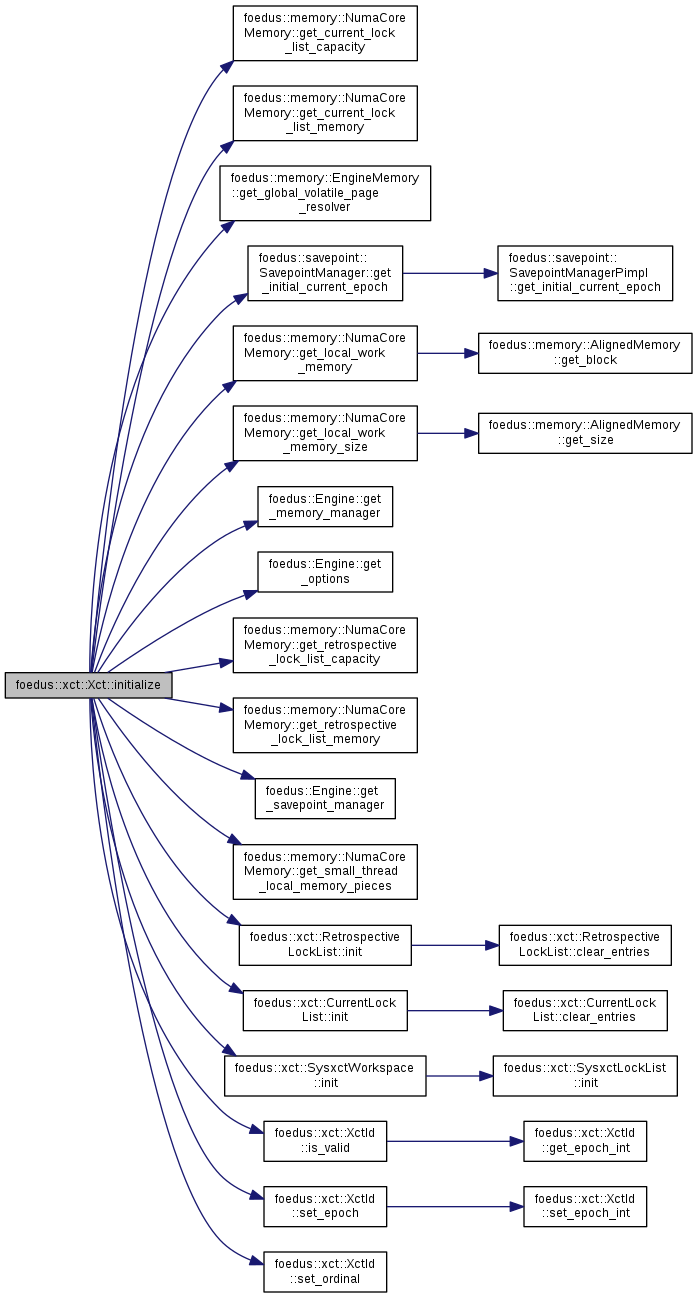

| void foedus::xct::Xct::initialize | ( | memory::NumaCoreMemory * | core_memory, |

| uint32_t * | mcs_block_current, | ||

| uint32_t * | mcs_rw_async_mapping_current | ||

| ) |

Definition at line 77 of file xct.cpp.

References ASSERT_ND, foedus::xct::XctOptions::enable_retrospective_lock_list_, foedus::memory::NumaCoreMemory::get_current_lock_list_capacity(), foedus::memory::NumaCoreMemory::get_current_lock_list_memory(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::savepoint::SavepointManager::get_initial_current_epoch(), foedus::memory::NumaCoreMemory::get_local_work_memory(), foedus::memory::NumaCoreMemory::get_local_work_memory_size(), foedus::Engine::get_memory_manager(), foedus::Engine::get_options(), foedus::memory::NumaCoreMemory::get_retrospective_lock_list_capacity(), foedus::memory::NumaCoreMemory::get_retrospective_lock_list_memory(), foedus::Engine::get_savepoint_manager(), foedus::memory::NumaCoreMemory::get_small_thread_local_memory_pieces(), foedus::storage::StorageOptions::hot_threshold_, foedus::xct::XctOptions::hot_threshold_for_retrospective_lock_list_, foedus::xct::RetrospectiveLockList::init(), foedus::xct::CurrentLockList::init(), foedus::xct::SysxctWorkspace::init(), foedus::xct::XctId::is_valid(), foedus::xct::XctOptions::max_lock_free_read_set_size_, foedus::xct::XctOptions::max_lock_free_write_set_size_, foedus::xct::XctOptions::max_read_set_size_, foedus::xct::XctOptions::max_write_set_size_, foedus::xct::XctId::set_epoch(), foedus::xct::XctId::set_ordinal(), foedus::EngineOptions::storage_, and foedus::EngineOptions::xct_.

Referenced by foedus::thread::ThreadPimpl::initialize_once().

|

inline |

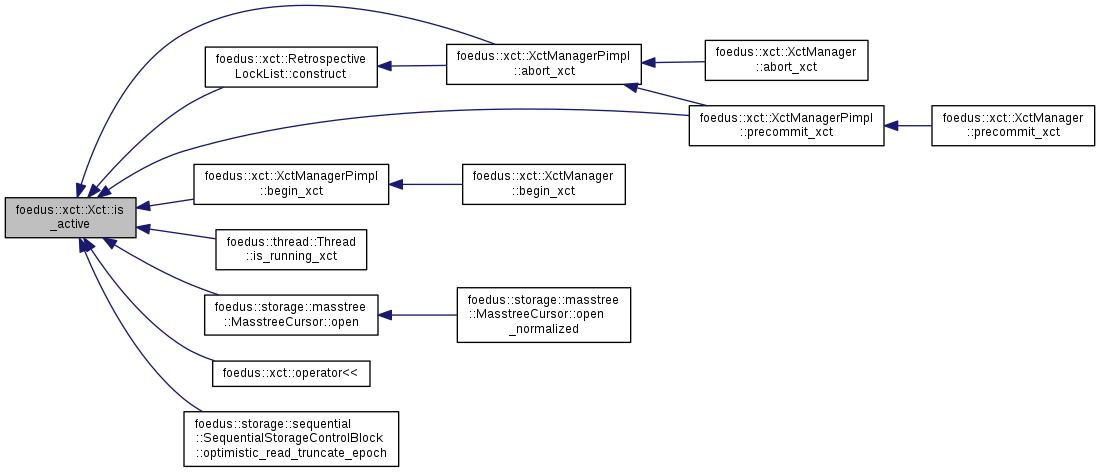

Returns whether the object is an active transaction.

Definition at line 121 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), foedus::xct::XctManagerPimpl::begin_xct(), foedus::xct::RetrospectiveLockList::construct(), foedus::thread::Thread::is_running_xct(), foedus::storage::masstree::MasstreeCursor::open(), foedus::xct::operator<<(), foedus::storage::sequential::SequentialStorageControlBlock::optimistic_read_truncate_epoch(), and foedus::xct::XctManagerPimpl::precommit_xct().

|

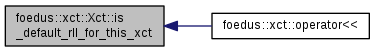

inline |

Definition at line 125 of file xct.hpp.

Referenced by foedus::xct::operator<<().

|

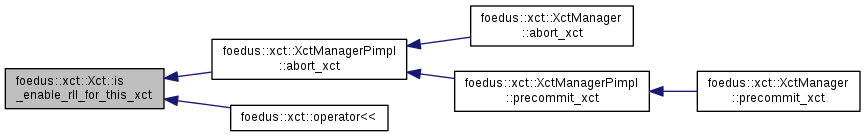

inline |

Definition at line 123 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::abort_xct(), and foedus::xct::operator<<().

bool foedus::xct::Xct::is_read_only () const [inline]

Returns whether this transaction makes no writes.

Definition at line 145 of file xct.hpp.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct().

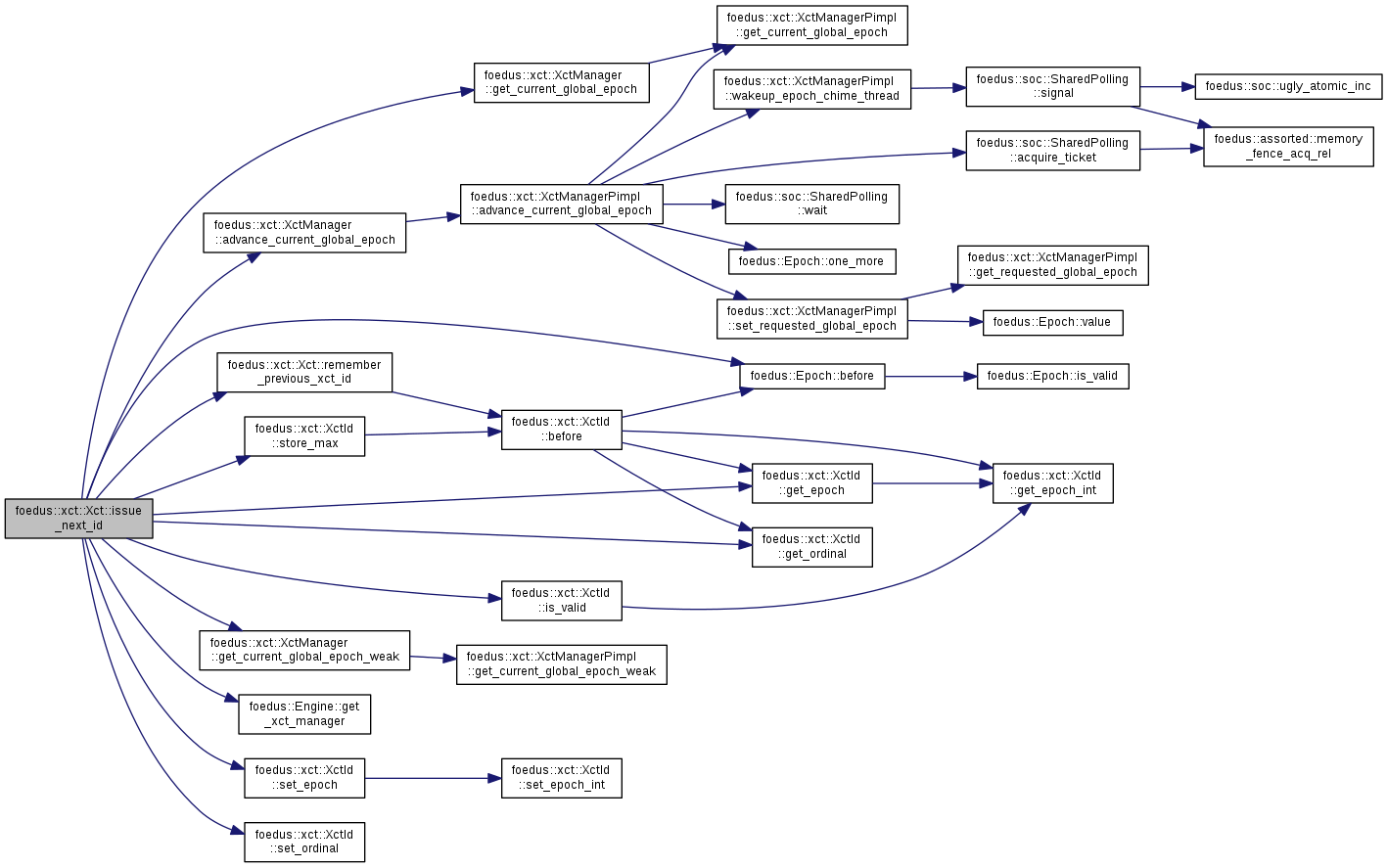

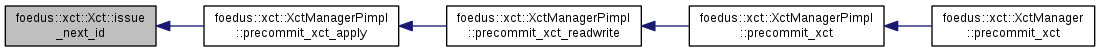

Called while a successful commit of xct to issue a new xct id.

| [in] | max_xct_id | largest xct_id this transaction depends on. |

| [in,out] | epoch | (in) The minimal epoch this transaction has to be in. (out) the epoch this transaction ended up with, which is epoch+1 only when it found ordinal is full for the current epoch. |

This method issues a XctId that satisfies the following properties (see [TU13]). Clarification: "larger" hereby means either a) the epoch is larger or b) the epoch is same and ordinal is larger.

This method also advancec epoch when ordinal is full for the current epoch. This method never fails.
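The "larger" ordering and the epoch advance can be sketched as below. This is a simplified illustration, not the code in xct.cpp: `Id`, `before()`, `kMaxOrdinal`, and this free-standing `issue_next_id()` are hypothetical, the ordinal limit is tiny so the overflow path is easy to exercise, and the sketch assumes that no dependency comes from an epoch later than `*epoch`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, simplified transaction ID: (epoch, ordinal).
struct Id {
  uint32_t epoch;
  uint32_t ordinal;
};

const uint32_t kMaxOrdinal = 3;  // tiny on purpose for the example

// "Larger" means a larger epoch, or the same epoch and a larger ordinal.
bool before(const Id& a, const Id& b) {
  return a.epoch < b.epoch || (a.epoch == b.epoch && a.ordinal < b.ordinal);
}

// Issues an ID larger than both the thread's previous ID and the largest
// ID this transaction depends on, placed in *epoch or in a later epoch.
Id issue_next_id(Id previous, Id max_dependency, uint32_t* epoch) {
  Id next = before(previous, max_dependency) ? max_dependency : previous;
  if (next.epoch < *epoch) {
    // Everything we depend on is older: start fresh in the commit epoch.
    next.epoch = *epoch;
    next.ordinal = 0;
  }
  assert(next.epoch == *epoch);  // sketch assumption, see lead-in
  if (next.ordinal >= kMaxOrdinal) {
    // No ordinal left in this epoch: move to the next one.
    *epoch = next.epoch + 1;
    next.epoch = *epoch;
    next.ordinal = 0;
  }
  ++next.ordinal;
  return next;
}
```

In this sketch the epoch is simply incremented locally; the references listed below indicate that the real method involves the global epoch maintained by XctManager.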

Definition at line 134 of file xct.cpp.

References foedus::xct::XctManager::advance_current_global_epoch(), ASSERT_ND, foedus::Epoch::before(), foedus::xct::XctManager::get_current_global_epoch(), foedus::xct::XctManager::get_current_global_epoch_weak(), foedus::xct::XctId::get_epoch(), foedus::xct::XctId::get_ordinal(), foedus::Engine::get_xct_manager(), foedus::xct::XctId::is_valid(), foedus::xct::kMaxXctOrdinal, remember_previous_xct_id(), foedus::xct::XctId::set_epoch(), foedus::xct::XctId::set_ordinal(), foedus::xct::XctId::store_max(), and UNLIKELY.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_apply().

| ErrorCode foedus::xct::Xct::on_record_read | ( | bool | intended_for_write, |

| RwLockableXctId * | tid_address, | ||

| XctId * | observed_xid, | ||

| ReadXctAccess ** | read_set_address, | ||

| bool | no_readset_if_moved = false, |

||

| bool | no_readset_if_next_layer = false |

||

| ) |

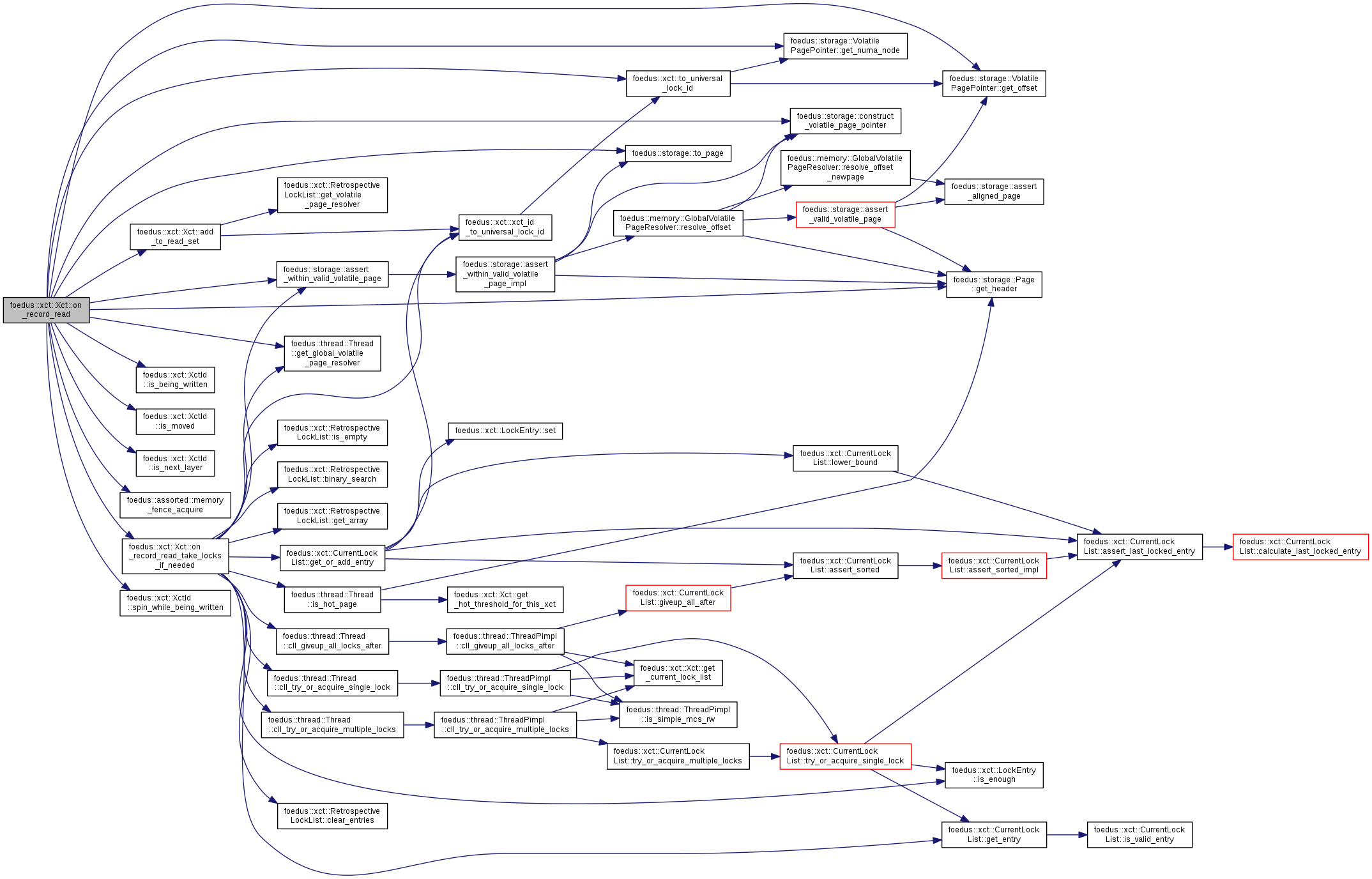

The general logic invoked for every record read.

| [in] | intended_for_write | Hints whether the record will be written after this read |

| [in,out] | tid_address | The record's TID address |

| [out] | observed_xid | Returns the observed XID. See below for more details. |

| [out] | read_set_address | If this method took a read-set, points to the read-set record. nullptr if it didn't. |

| [in] | no_readset_if_moved | When this is true, and if we observe an XID whose is_moved() is on, we do not add it to readset. See the comment below for more details. |

| [in] | no_readset_if_next_layer | When this is true, and if we observe an XID whose is_next_layer() is on, we do not add it to readset. See the comment below for more details. |

You must call this method BEFORE reading the data, otherwise it violates the commit protocol. This method does a few things listed below:

Definition at line 258 of file xct.cpp.

References add_to_read_set(), ASSERT_ND, foedus::storage::assert_within_valid_volatile_page(), CHECK_ERROR_CODE, foedus::storage::construct_volatile_page_pointer(), foedus::thread::Thread::get_global_volatile_page_resolver(), foedus::storage::Page::get_header(), foedus::storage::VolatilePagePointer::get_numa_node(), foedus::storage::VolatilePagePointer::get_offset(), foedus::xct::XctId::is_being_written(), foedus::xct::XctId::is_moved(), foedus::xct::XctId::is_next_layer(), foedus::xct::kDirtyRead, foedus::kErrorCodeOk, foedus::xct::kSerializable, foedus::xct::kSnapshot, foedus::assorted::memory_fence_acquire(), on_record_read_take_locks_if_needed(), foedus::xct::XctId::spin_while_being_written(), foedus::storage::PageHeader::storage_id_, foedus::storage::to_page(), foedus::xct::to_universal_lock_id(), and foedus::xct::RwLockableXctId::xct_id_.

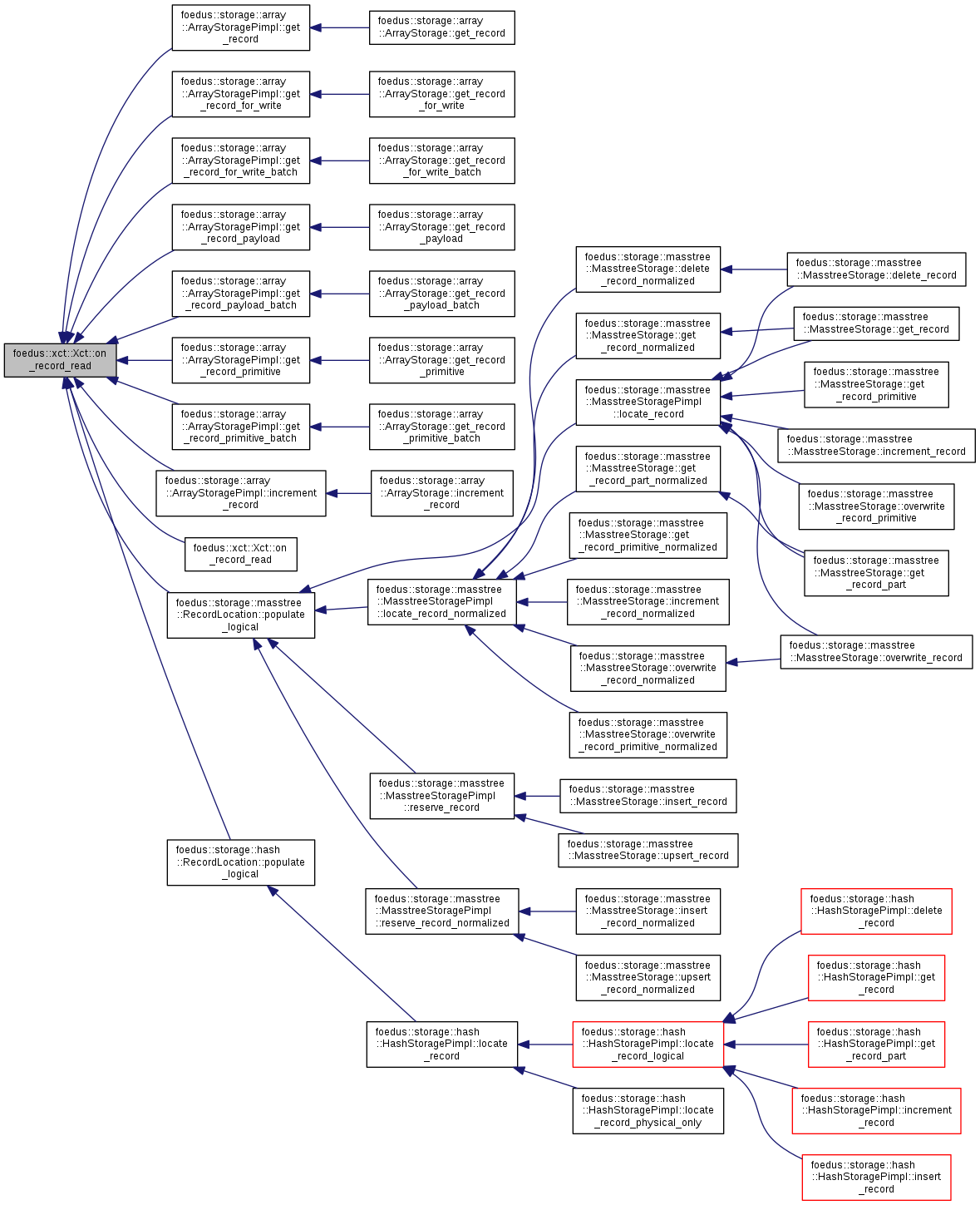

Referenced by foedus::storage::array::ArrayStoragePimpl::get_record(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write(), foedus::storage::array::ArrayStoragePimpl::get_record_for_write_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_payload(), foedus::storage::array::ArrayStoragePimpl::get_record_payload_batch(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive(), foedus::storage::array::ArrayStoragePimpl::get_record_primitive_batch(), foedus::storage::array::ArrayStoragePimpl::increment_record(), on_record_read(), foedus::storage::masstree::RecordLocation::populate_logical(), and foedus::storage::hash::RecordLocation::populate_logical().

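ErrorCode foedus::xct::Xct::on_record_read (bool intended_for_write, RwLockableXctId *tid_address, bool no_readset_if_moved=false, bool no_readset_if_next_layer=false) [inline]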

Shortcut for a case when you don't need observed_xid/read_set_address back.

Definition at line 286 of file xct.hpp.

References on_record_read().

void foedus::xct::Xct::on_record_read_take_locks_if_needed (bool intended_for_write, const storage::Page *page_address, UniversalLockId lock_id, RwLockableXctId *tid_address)

Subroutine of on_record_read() to take lock(s).

Definition at line 334 of file xct.cpp.

References ASSERT_ND, foedus::storage::assert_within_valid_volatile_page(), foedus::xct::RetrospectiveLockList::binary_search(), foedus::xct::RetrospectiveLockList::clear_entries(), foedus::thread::Thread::cll_giveup_all_locks_after(), foedus::thread::Thread::cll_try_or_acquire_multiple_locks(), foedus::thread::Thread::cll_try_or_acquire_single_lock(), foedus::xct::RetrospectiveLockList::get_array(), foedus::xct::CurrentLockList::get_entry(), foedus::thread::Thread::get_global_volatile_page_resolver(), foedus::xct::CurrentLockList::get_or_add_entry(), foedus::xct::RetrospectiveLockList::is_empty(), foedus::xct::LockEntry::is_enough(), foedus::thread::Thread::is_hot_page(), foedus::kErrorCodeOk, foedus::kErrorCodeXctLockAbort, foedus::xct::kLockListPositionInvalid, foedus::xct::kNullUniversalLockId, foedus::xct::kReadLock, foedus::xct::kWriteLock, foedus::xct::LockEntry::universal_lock_id_, and foedus::xct::xct_id_to_universal_lock_id().

Referenced by on_record_read().

void foedus::xct::Xct::overwrite_to_pointer_set (const storage::VolatilePagePointer *pointer_address, storage::VolatilePagePointer observed)

The transaction that has updated the volatile pointer should not abort itself.

So, it calls this method to apply the version it installed.
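A minimal sketch of why the overwrite is needed, assuming a pointer set that is verified at commit by comparing each remembered value with the current one. `PointerSet`, `PointerObservation`, `add()`, `overwrite()`, and `verify()` are hypothetical names, and a plain `uint64_t` stands in for a volatile page pointer.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical entry: where the pointer lives and what we saw there.
struct PointerObservation {
  const uint64_t* address;
  uint64_t observed;
};

class PointerSet {
 public:
  void add(const uint64_t* address, uint64_t observed) {
    entries_.push_back(PointerObservation{address, observed});
  }

  // The transaction changed the pointer itself, so it replaces the
  // remembered value with the one it installed.
  void overwrite(const uint64_t* address, uint64_t installed) {
    for (PointerObservation& entry : entries_) {
      if (entry.address == address) {
        entry.observed = installed;
      }
    }
  }

  // Commit-time check: fails if any pointer differs from what we remember.
  bool verify() const {
    for (const PointerObservation& entry : entries_) {
      if (*entry.address != entry.observed) {
        return false;
      }
    }
    return true;
  }

 private:
  std::vector<PointerObservation> entries_;
};
```

Without the overwrite, the transaction's own update would look like a concurrent modification at verification time and would force an unnecessary abort.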

Definition at line 226 of file xct.cpp.

References ASSERT_ND, foedus::xct::kSerializable, and foedus::xct::PointerAccess::observed_.

void foedus::xct::Xct::remember_previous_xct_id (XctId new_id) [inline]

Definition at line 386 of file xct.hpp.

References ASSERT_ND, and foedus::xct::XctId::before().

Referenced by issue_next_id().

|

inline |

Definition at line 132 of file xct.hpp.

Referenced by foedus::thread::ThreadPimpl::handle_tasks().

|

inline |

Definition at line 126 of file xct.hpp.

Referenced by foedus::thread::ThreadPimpl::handle_tasks().

|

inline |

Definition at line 139 of file xct.hpp.

Referenced by foedus::thread::ThreadPimpl::handle_tasks().

|

inline |

|

inline |

|

inline |

|

friend |