
libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

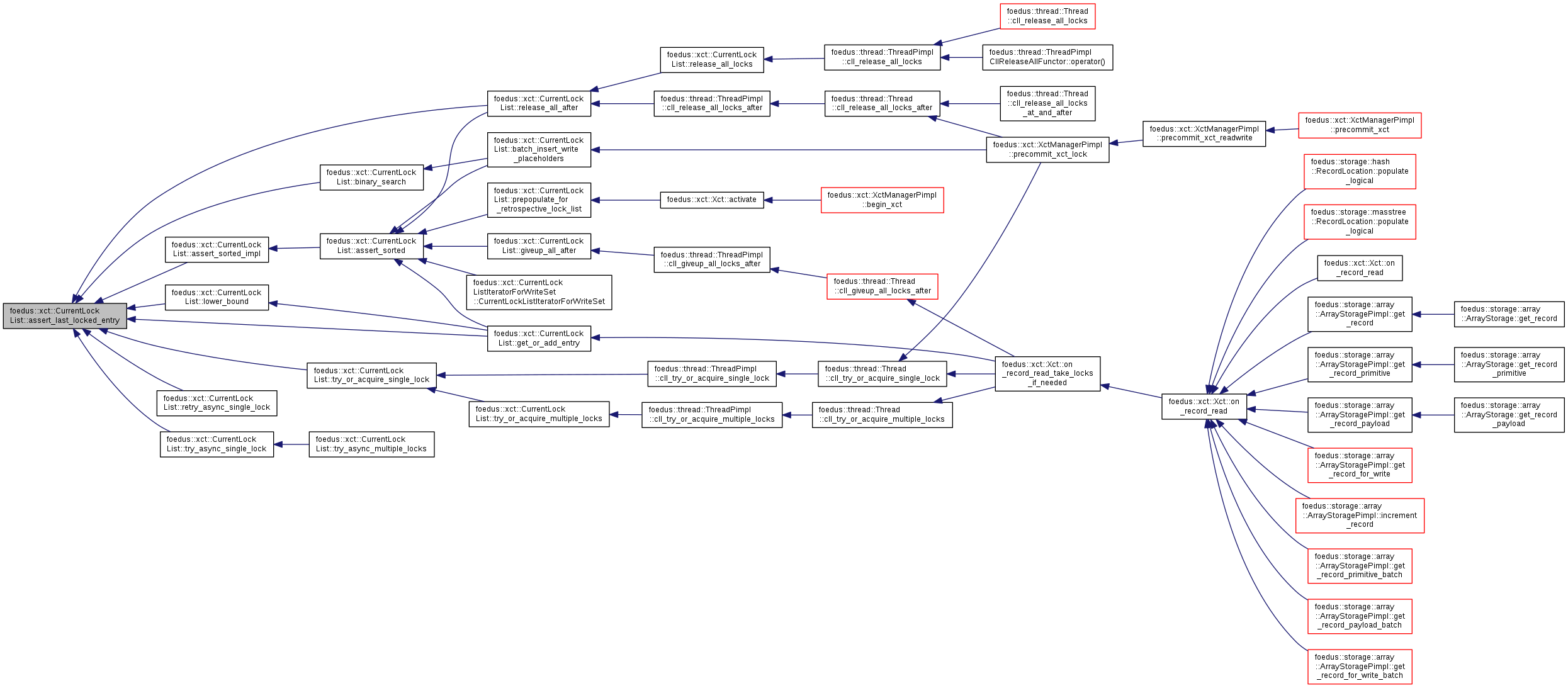

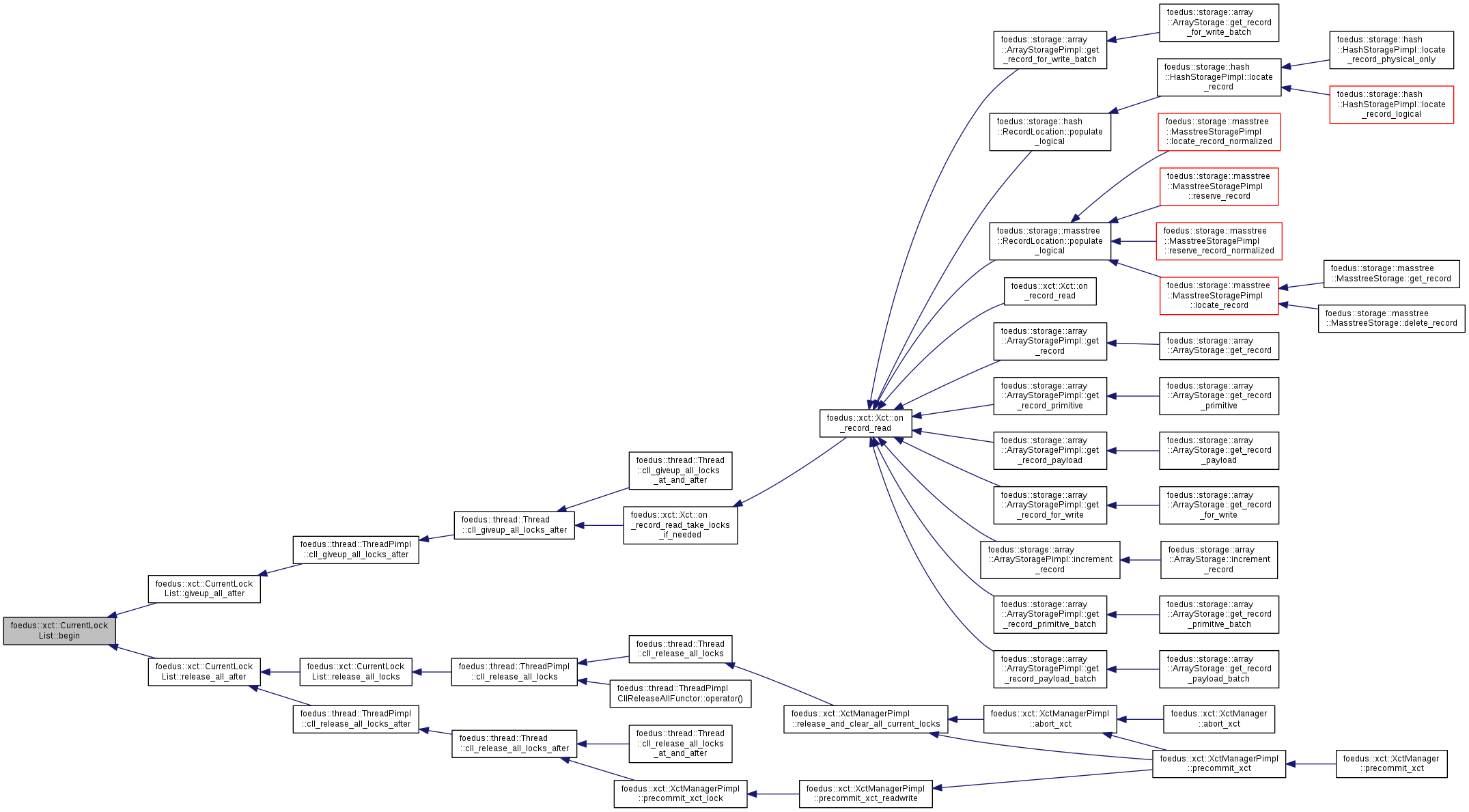

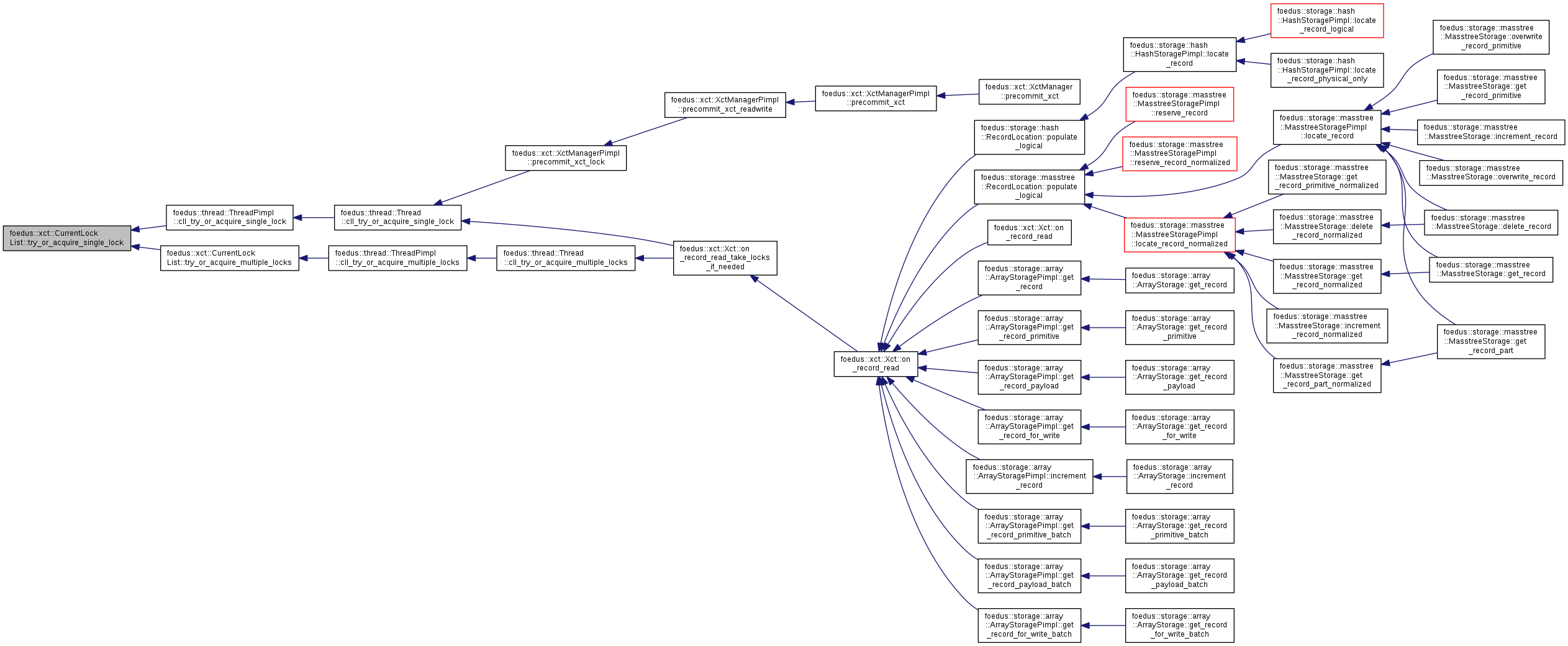

foedus::xct::CurrentLockList Class Reference

Sorted list of all locks, either read-lock or write-lock, taken in the current run.

This is NOT a POD because we need dynamic memory for the list. This holds all locks in address order to help our commit protocol.

So far we maintain this object in addition to the read-set/write-set. We could merge these objects into one, which would let us add some functionality and optimizations. For example, "read my own write" semantics would be practical to implement with such an integrated list. It would also reduce the amount of sorting and tracking. But let's do that later.

Definition at line 309 of file retrospective_lock_list.hpp.

#include <retrospective_lock_list.hpp>
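The ordering invariant described above can be illustrated with a minimal sketch. The types here (`LockEntry` with plain fields, the `LockMode` values, `UniversalLockId` as a 64-bit integer) are simplified stand-ins assumed for illustration, not the FOEDUS definitions, and `is_canonically_sorted()` is a hypothetical helper. It assumes the order is strictly ascending (no duplicate entries), which is consistent with `get_or_add_entry()` returning an existing entry instead of adding a second one.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Simplified stand-ins for illustration; not the FOEDUS definitions.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;  // sort key: canonical (address) order
  LockMode preferred_mode;            // mode the transaction wants
  LockMode taken_mode;                // mode currently held (kNoLock if none)
};

// Assumed invariant: ids strictly ascend, so each lock appears at most once
// and a front-to-back walk visits the locks in canonical order.
bool is_canonically_sorted(const std::vector<LockEntry>& entries) {
  for (std::size_t i = 1; i < entries.size(); ++i) {
    if (entries[i - 1].universal_lock_id >= entries[i].universal_lock_id) {
      return false;
    }
  }
  return true;
}

// A debug-only check in the spirit of assert_sorted(): compiled out
// when NDEBUG is defined.
void assert_sorted(const std::vector<LockEntry>& entries) {
  assert(is_canonically_sorted(entries));
}

}  // namespace sketch
```

Keeping the list in one global order is what lets every thread take its locks in the same sequence, which is the property the commit protocol relies on to avoid deadlock cycles.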

Public Member Functions | |

| CurrentLockList () | |

| ~CurrentLockList () | |

| void | init (LockEntry *array, uint32_t capacity, const memory::GlobalVolatilePageResolver &resolver) |

| void | uninit () |

| void | clear_entries () |

| LockListPosition | binary_search (UniversalLockId lock) const |

| Analogous to std::binary_search() for the given lock. More... | |

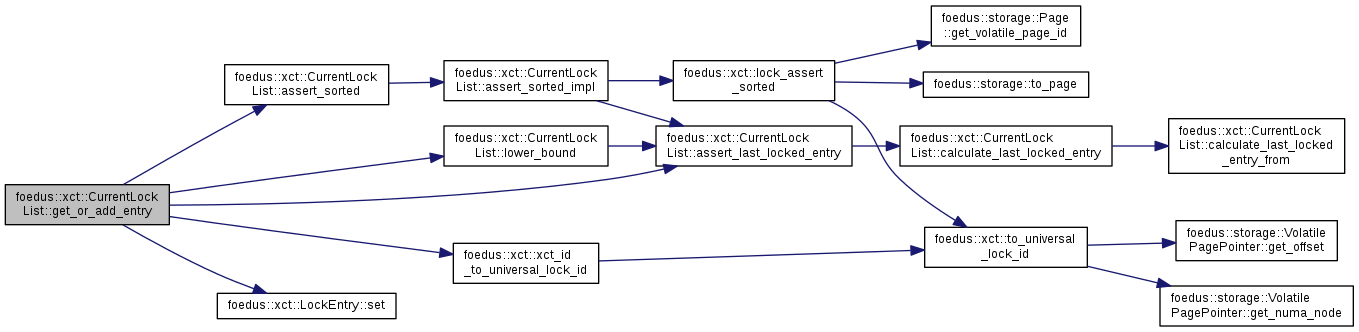

| LockListPosition | get_or_add_entry (UniversalLockId lock_id, RwLockableXctId *lock, LockMode preferred_mode) |

| Adds an entry to this list, re-sorting part of the list if necessary to keep the sortedness. More... | |

| LockListPosition | lower_bound (UniversalLockId lock) const |

| Analogous to std::lower_bound() for the given lock. More... | |

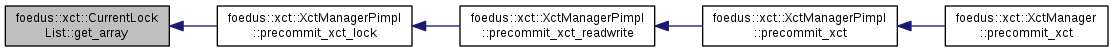

| const LockEntry * | get_array () const |

| LockEntry * | get_array () |

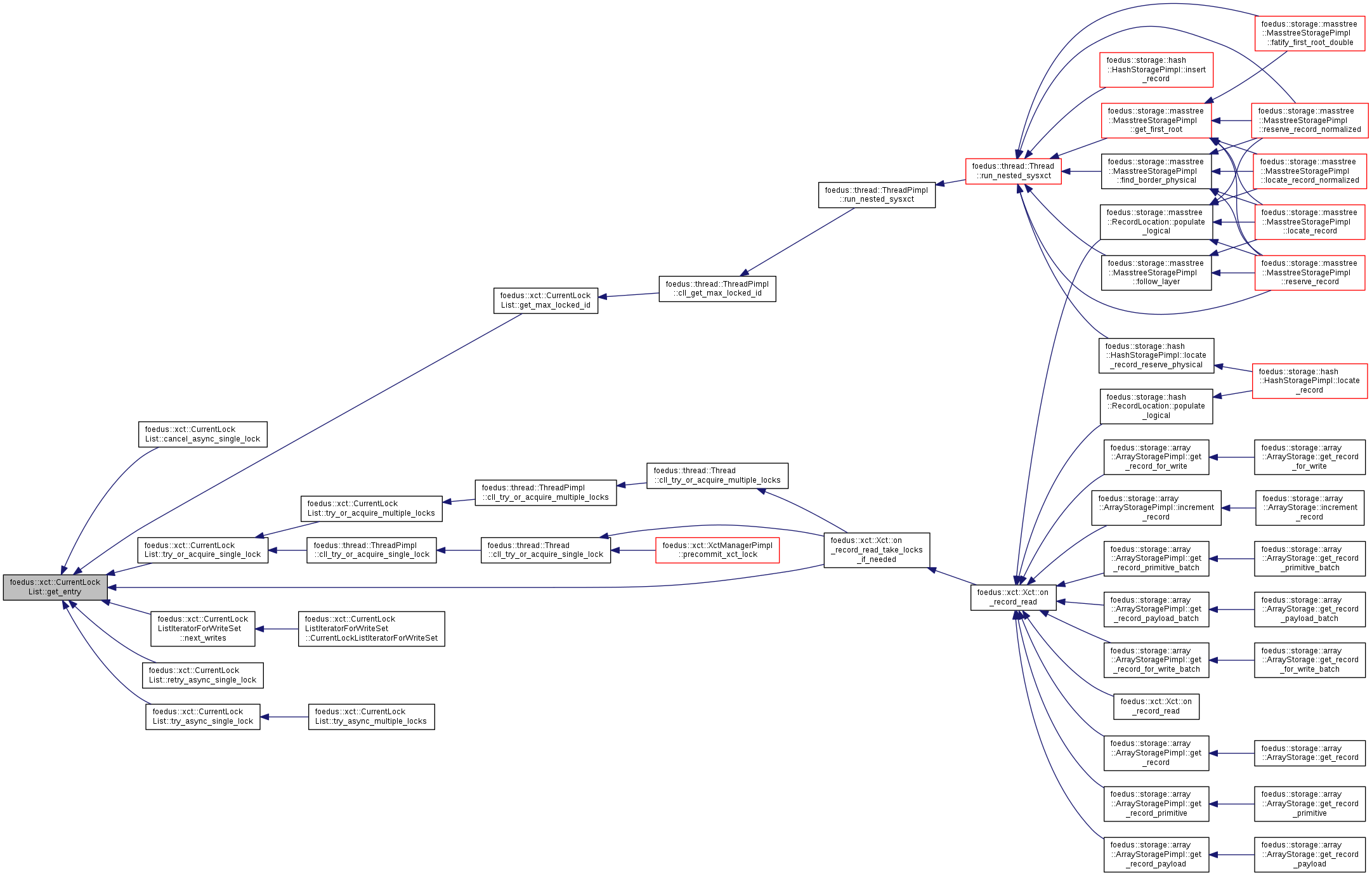

| LockEntry * | get_entry (LockListPosition pos) |

| const LockEntry * | get_entry (LockListPosition pos) const |

| uint32_t | get_capacity () const |

| LockListPosition | get_last_active_entry () const |

| bool | is_valid_entry (LockListPosition pos) const |

| bool | is_empty () const |

| void | assert_sorted () const __attribute__((always_inline)) |

| void | assert_sorted_impl () const |

| LockEntry * | begin () |

| LockEntry * | end () |

| const LockEntry * | cbegin () const |

| const LockEntry * | cend () const |

| const memory::GlobalVolatilePageResolver & | get_volatile_page_resolver () const |

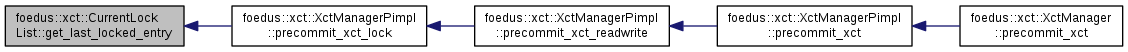

| LockListPosition | get_last_locked_entry () const |

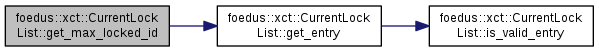

| UniversalLockId | get_max_locked_id () const |

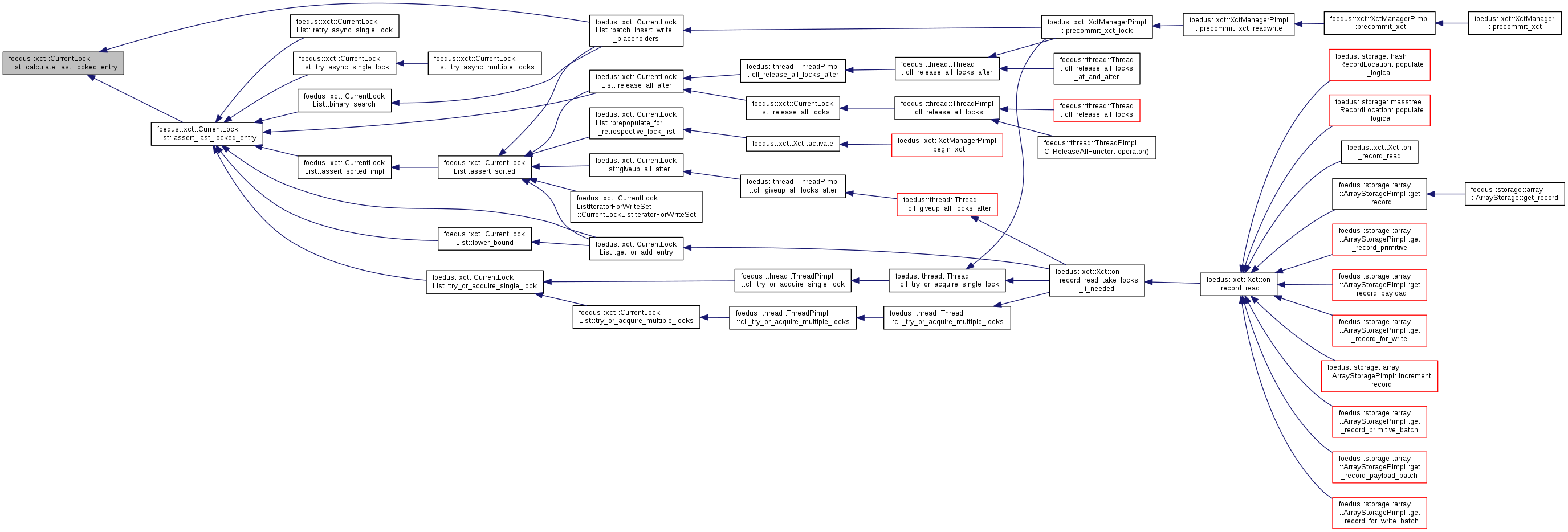

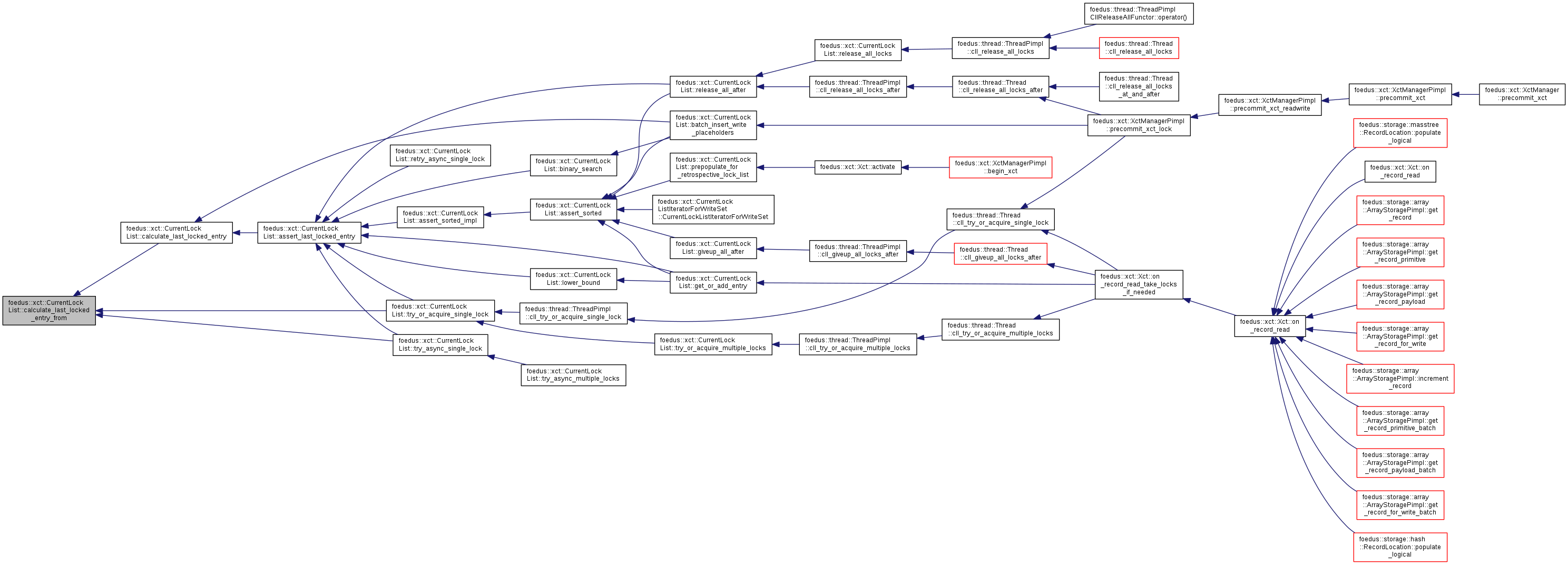

| LockListPosition | calculate_last_locked_entry () const |

| Calculate last_locked_entry_ by really checking the whole list. More... | |

| LockListPosition | calculate_last_locked_entry_from (LockListPosition from) const |

| Only searches among entries at or before "from". More... | |

| void | assert_last_locked_entry () const |

| void | batch_insert_write_placeholders (const WriteXctAccess *write_set, uint32_t write_set_size) |

| Create entries for all write-sets in one-shot. More... | |

| void | prepopulate_for_retrospective_lock_list (const RetrospectiveLockList &rll) |

| Another batch-insert method used at the beginning of a transaction. More... | |

| template<typename MCS_RW_IMPL > | |

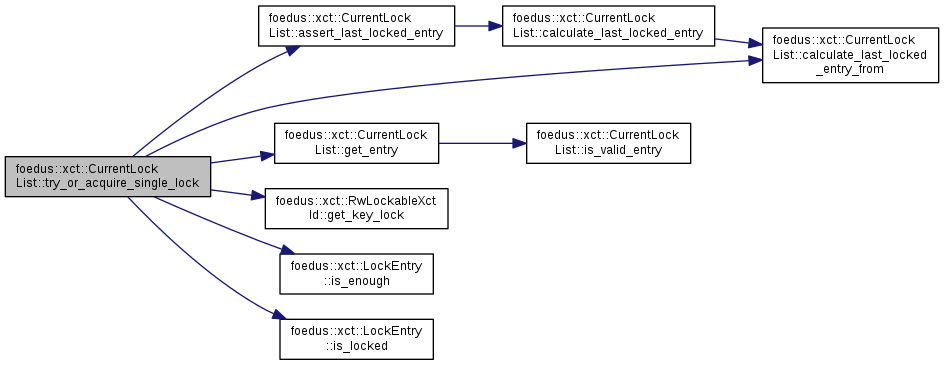

| ErrorCode | try_or_acquire_single_lock (LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl) |

| Methods below take or release locks, so they receive MCS_RW_IMPL, a template param. More... | |

| template<typename MCS_RW_IMPL > | |

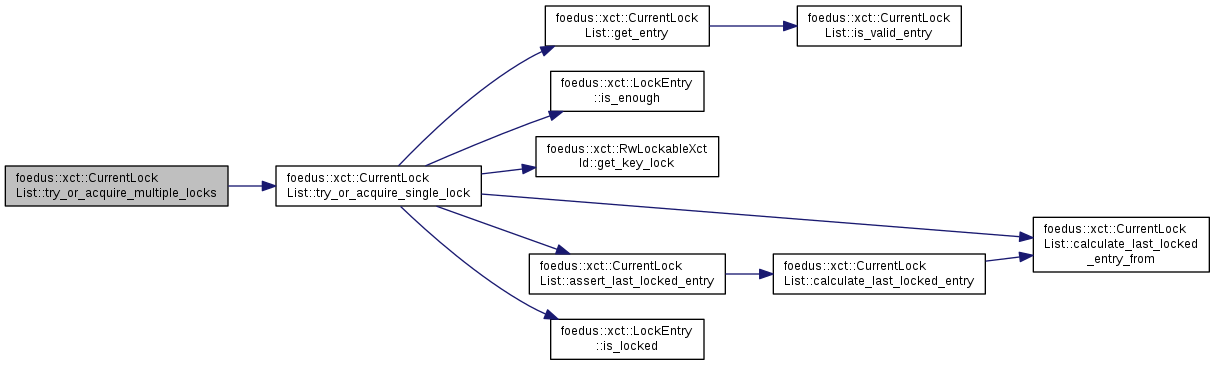

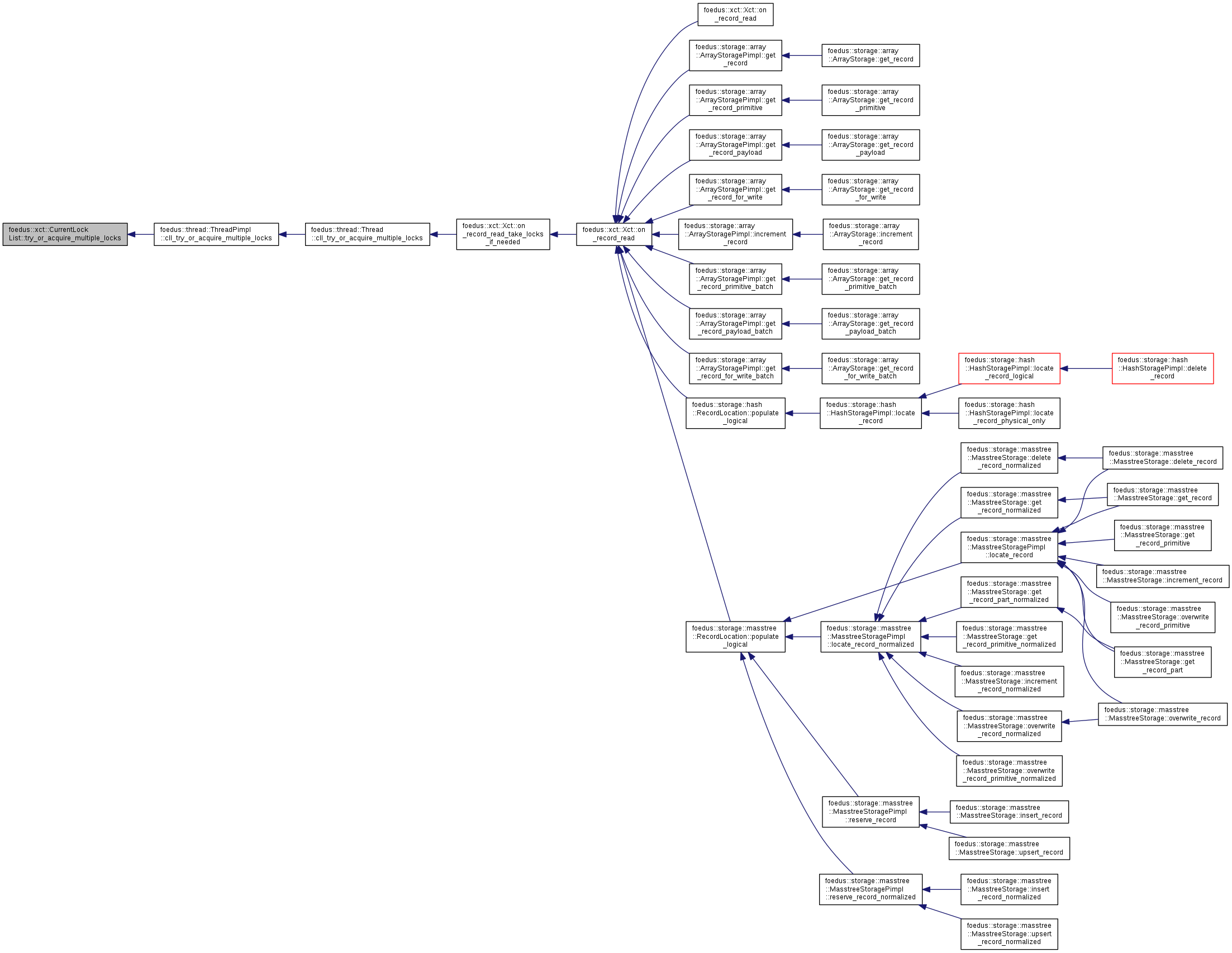

| ErrorCode | try_or_acquire_multiple_locks (LockListPosition upto_pos, MCS_RW_IMPL *mcs_rw_impl) |

| Acquire multiple locks up to the given position in canonical order. More... | |

| template<typename MCS_RW_IMPL > | |

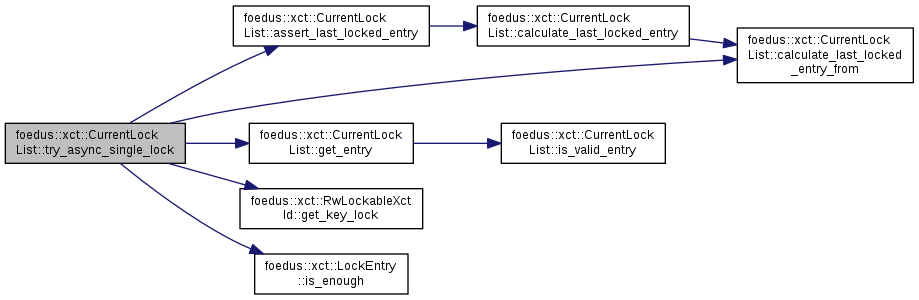

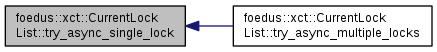

| void | try_async_single_lock (LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl) |

| template<typename MCS_RW_IMPL > | |

| bool | retry_async_single_lock (LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl) |

| template<typename MCS_RW_IMPL > | |

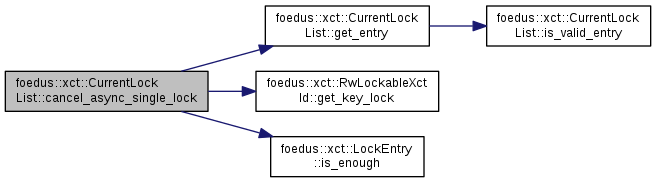

| void | cancel_async_single_lock (LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl) |

| template<typename MCS_RW_IMPL > | |

| void | try_async_multiple_locks (LockListPosition upto_pos, MCS_RW_IMPL *mcs_rw_impl) |

| template<typename MCS_RW_IMPL > | |

| void | release_all_locks (MCS_RW_IMPL *mcs_rw_impl) |

| template<typename MCS_RW_IMPL > | |

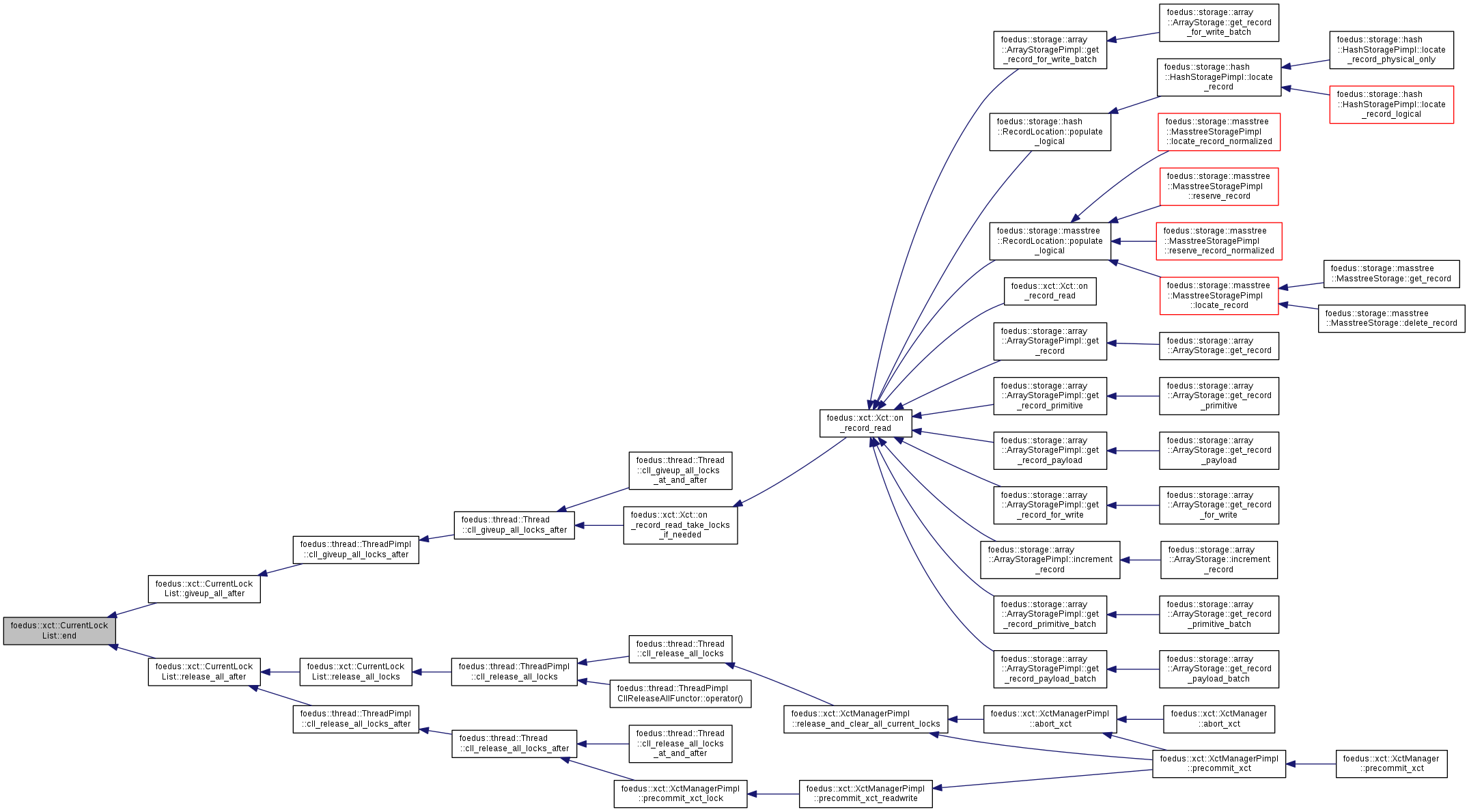

| void | release_all_after (UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl) |

| Release all locks in CLL whose addresses are canonically ordered before the parameter. More... | |

| template<typename MCS_RW_IMPL > | |

| void | release_all_at_and_after (UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl) |

| same as release_all_after(address - 1) More... | |

| template<typename MCS_RW_IMPL > | |

| void | giveup_all_after (UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl) |

| This gives-up locks in CLL that are not yet taken. More... | |

| template<typename MCS_RW_IMPL > | |

| void | giveup_all_at_and_after (UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl) |

Friends | |

| std::ostream & | operator<< (std::ostream &o, const CurrentLockList &v) |

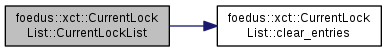

`foedus::xct::CurrentLockList::CurrentLockList()`

Definition at line 76 of file retrospective_lock_list.cpp.

References clear_entries().

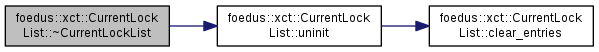

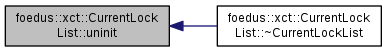

| foedus::xct::CurrentLockList::~CurrentLockList | ( | ) |

Definition at line 82 of file retrospective_lock_list.cpp.

References uninit().

|

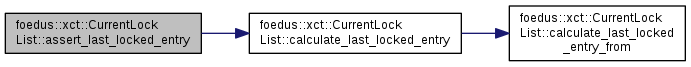

inline |

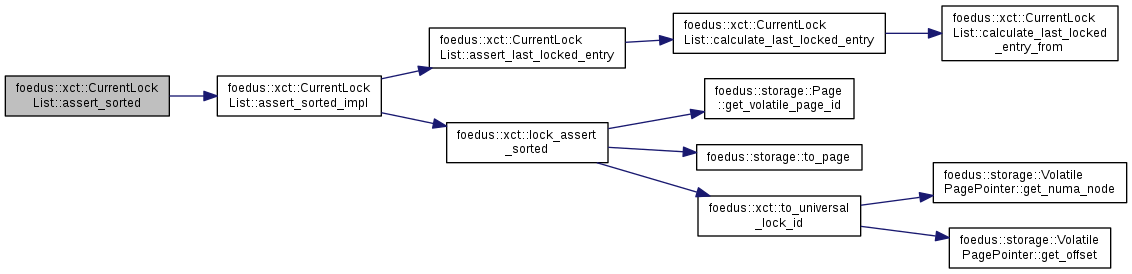

Definition at line 405 of file retrospective_lock_list.hpp.

References ASSERT_ND, and calculate_last_locked_entry().

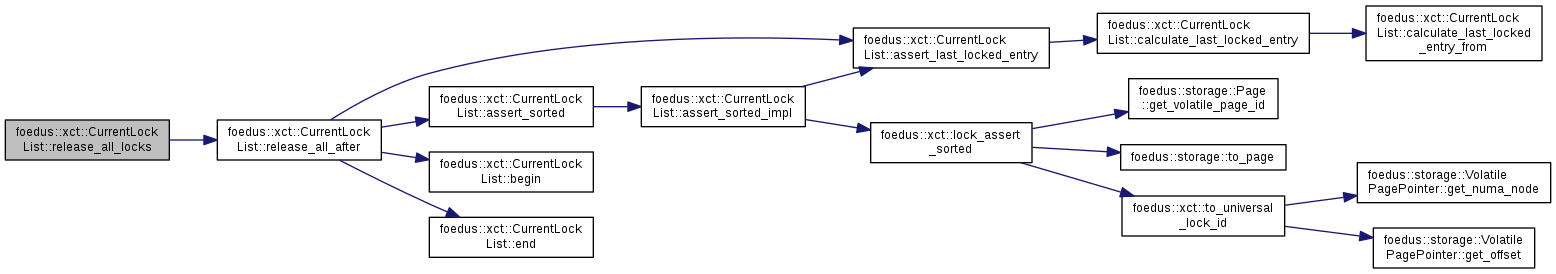

Referenced by assert_sorted_impl(), binary_search(), get_or_add_entry(), lower_bound(), release_all_after(), retry_async_single_lock(), try_async_single_lock(), and try_or_acquire_single_lock().

|

inline |

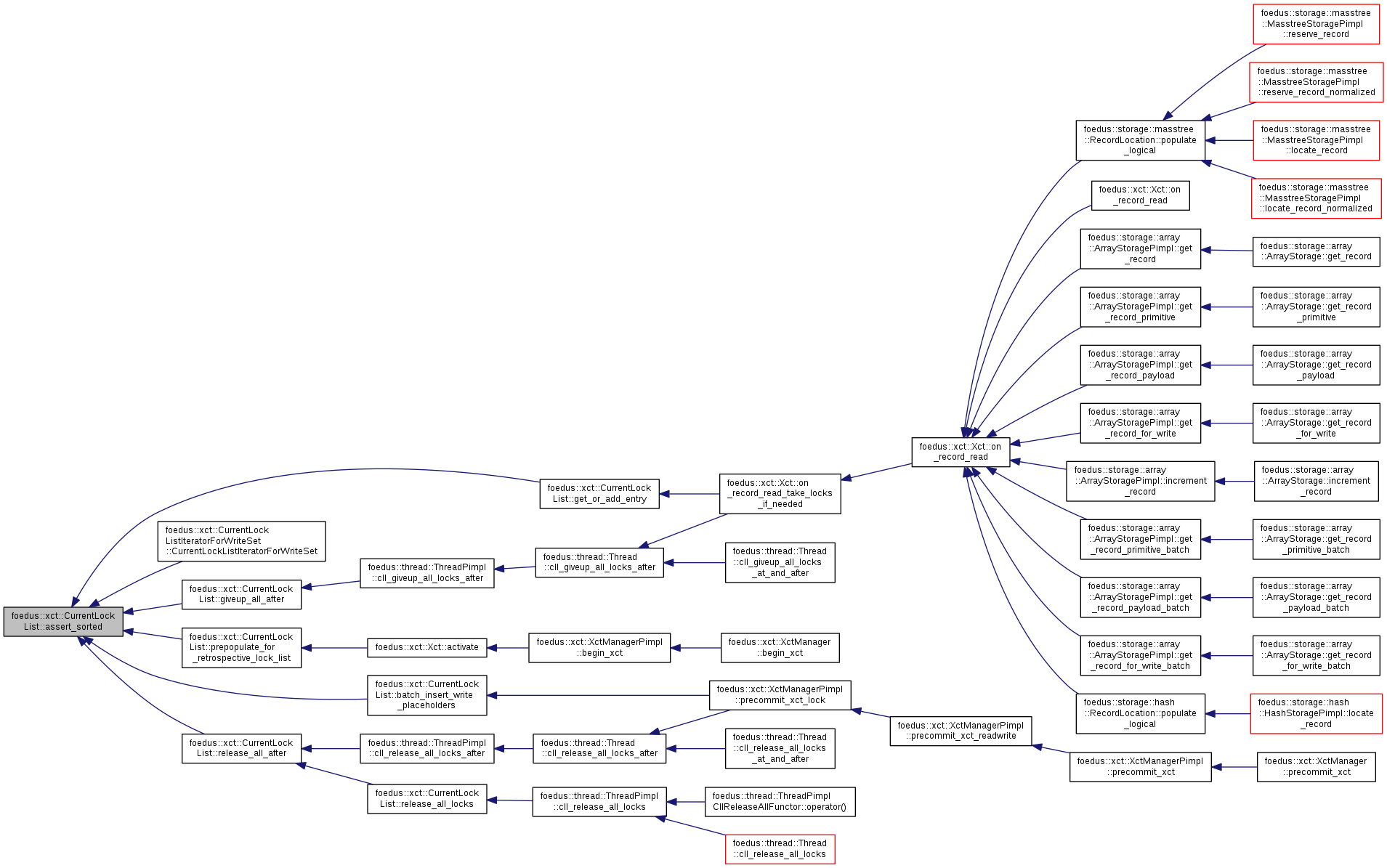

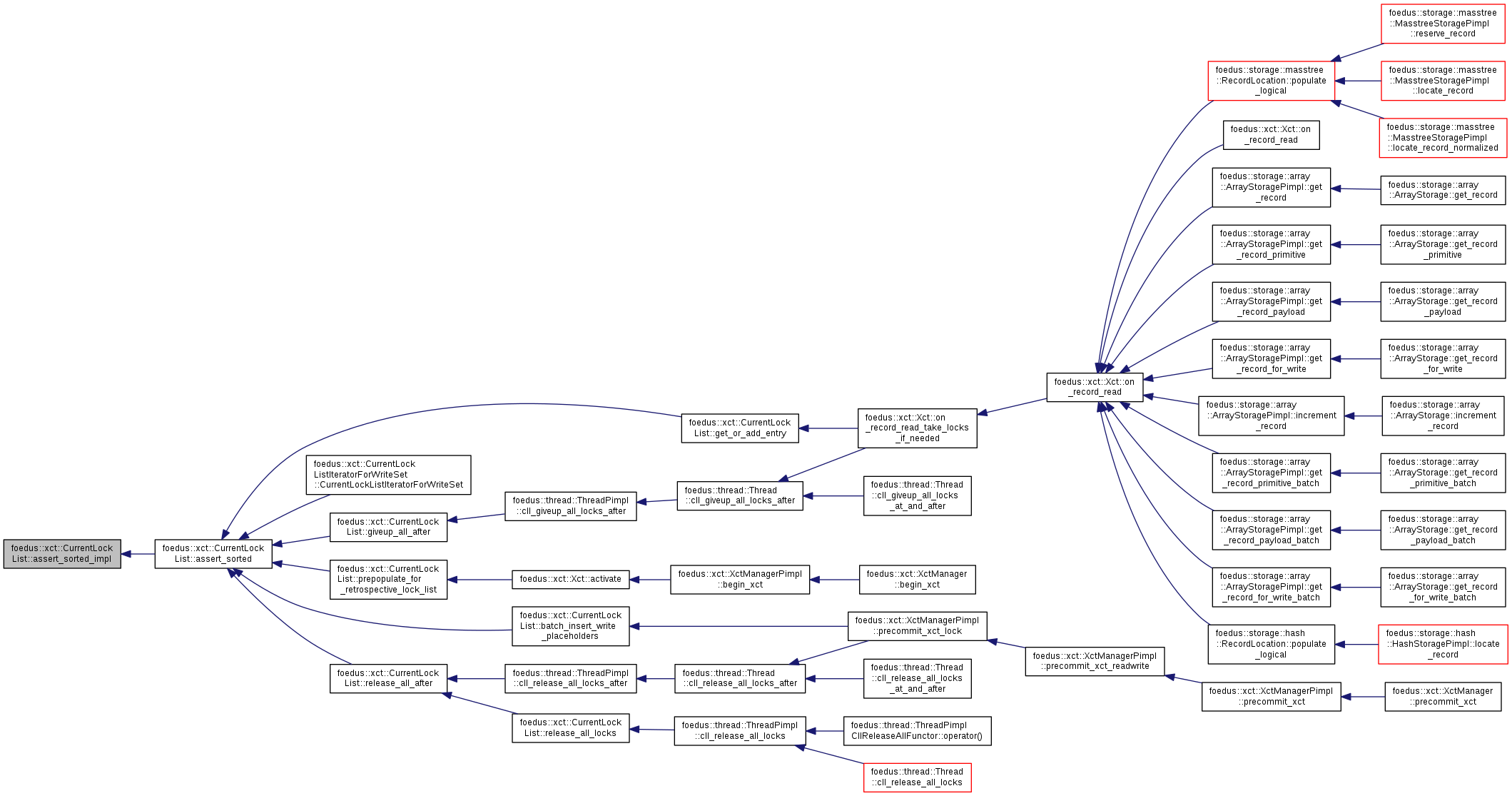

Definition at line 555 of file retrospective_lock_list.hpp.

References assert_sorted_impl().

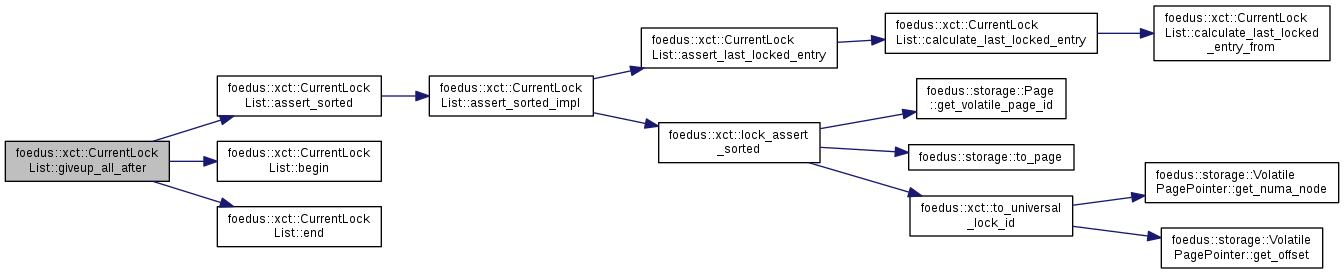

Referenced by batch_insert_write_placeholders(), foedus::xct::CurrentLockListIteratorForWriteSet::CurrentLockListIteratorForWriteSet(), get_or_add_entry(), giveup_all_after(), prepopulate_for_retrospective_lock_list(), and release_all_after().

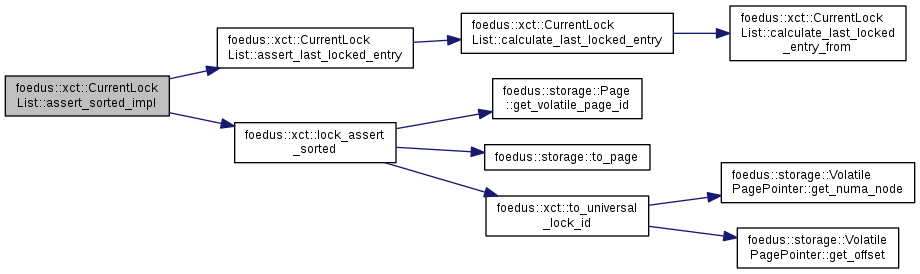

| void foedus::xct::CurrentLockList::assert_sorted_impl | ( | ) | const |

Definition at line 178 of file retrospective_lock_list.cpp.

References assert_last_locked_entry(), and foedus::xct::lock_assert_sorted().

Referenced by assert_sorted().

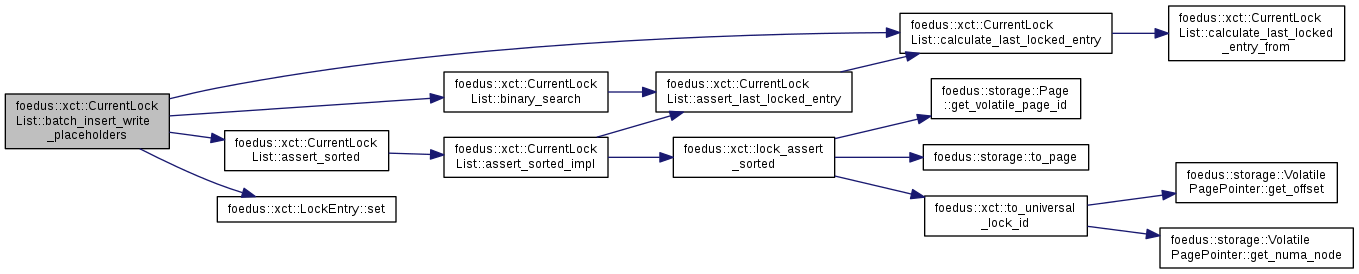

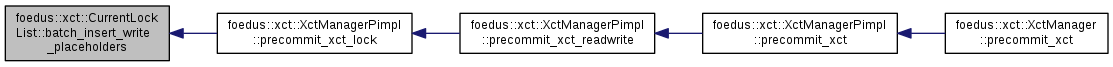

| void foedus::xct::CurrentLockList::batch_insert_write_placeholders | ( | const WriteXctAccess * | write_set, |

| uint32_t | write_set_size | ||

| ) |

Creates entries for all write-sets in one shot.

| Direction | Parameter | Description |
|---|---|---|
| [in] | `write_set` | Write-sets to create placeholders for. Must be canonically sorted. |
| [in] | `write_set_size` | Number of entries in `write_set`. |

During precommit, we must create an entry for every write-set. Rather than doing it one by one, this method creates placeholder entries for all of them. The placeholders are not locked yet (taken_mode_ == kNoLock).

Definition at line 329 of file retrospective_lock_list.cpp.

References ASSERT_ND, assert_sorted(), binary_search(), calculate_last_locked_entry(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::kWriteLock, foedus::xct::RecordXctAccess::ordinal_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::RecordXctAccess::owner_lock_id_, foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::set(), and foedus::xct::LockEntry::universal_lock_id_.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_lock().
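The one-shot placeholder creation can be sketched as a single merge pass over two sorted sequences. This is a hypothetical illustration, not the FOEDUS implementation: it assumes the write-set has already been reduced to a canonically sorted list of lock ids (the real method takes `WriteXctAccess` records), that an entry already present for the same lock keeps its `taken_mode` and only has its preferred mode raised to `kWriteLock`, and that the write-set may name the same record more than once. It merges out of place into a `std::vector` for clarity, whereas the real list lives in the fixed array passed to `init()`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// Merges sorted write-set lock ids into a sorted lock list in one pass.
// New entries are placeholders: they prefer a write lock but hold nothing
// yet (taken_mode == kNoLock). Existing entries keep their taken_mode.
void batch_insert_write_placeholders(std::vector<LockEntry>& cll,
                                     const std::vector<UniversalLockId>& write_ids) {
  std::vector<LockEntry> merged;
  merged.reserve(cll.size() + write_ids.size());
  std::size_t i = 0;
  std::size_t j = 0;
  while (i < cll.size() || j < write_ids.size()) {
    if (j == write_ids.size() ||
        (i < cll.size() && cll[i].universal_lock_id < write_ids[j])) {
      merged.push_back(cll[i++]);  // existing entry, not written
    } else if (i < cll.size() && cll[i].universal_lock_id == write_ids[j]) {
      LockEntry e = cll[i++];
      e.preferred_mode = kWriteLock;  // already tracked: now prefer write
      merged.push_back(e);
      ++j;
    } else {
      merged.push_back(LockEntry{write_ids[j], kWriteLock, kNoLock});  // placeholder
      ++j;
    }
    // The write-set may name the same record twice; skip the repeats.
    while (j < write_ids.size() && write_ids[j] == merged.back().universal_lock_id) {
      ++j;
    }
  }
  assert(merged.size() <= cll.size() + write_ids.size());
  cll.swap(merged);
}

}  // namespace sketch
```

Because both inputs are sorted, the merge costs O(n + m), compared with m separate sorted insertions that may each shift part of the list.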

`LockEntry* foedus::xct::CurrentLockList::begin()` [inline]

Definition at line 371 of file retrospective_lock_list.hpp.

Referenced by giveup_all_after(), and release_all_after().

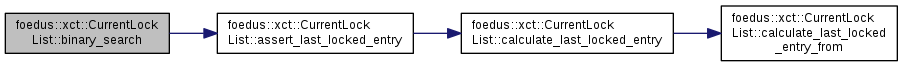

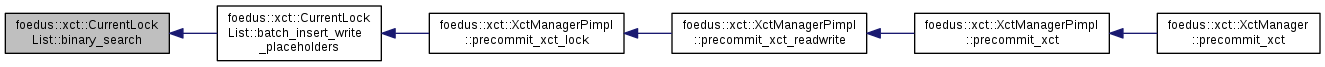

| LockListPosition foedus::xct::CurrentLockList::binary_search | ( | UniversalLockId | lock | ) | const |

Analogous to std::binary_search() for the given lock.

Data manipulation (search/add/etc)

Definition at line 189 of file retrospective_lock_list.cpp.

References assert_last_locked_entry().

Referenced by batch_insert_write_placeholders().

|

inline |

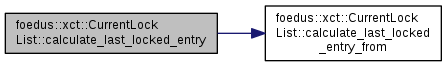

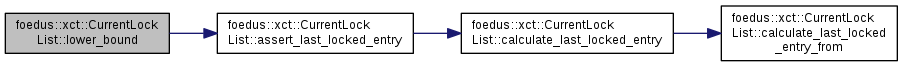

Calculates last_locked_entry_ by actually scanning the whole list.

Usually used for sanity checks.

Definition at line 393 of file retrospective_lock_list.hpp.

References calculate_last_locked_entry_from().

Referenced by assert_last_locked_entry(), and batch_insert_write_placeholders().

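`LockListPosition foedus::xct::CurrentLockList::calculate_last_locked_entry_from(LockListPosition from) const` [inline]

Only searches among entries at or before "from".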

Definition at line 397 of file retrospective_lock_list.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by calculate_last_locked_entry(), try_async_single_lock(), and try_or_acquire_single_lock().
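A backward scan is enough to recompute the last locked entry, since the list is sorted and we only need the highest position that currently holds a lock. This sketch adopts a hypothetical convention for illustration: positions are 1-based and position 0 is an unused slot meaning "no position". It also assumes an entry counts as locked when its `taken_mode` is not `kNoLock`. Neither convention is taken from the FOEDUS sources.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical convention for this sketch: positions are 1-based and
// 0 means "no position", so array[0] is an unused slot.
using LockListPosition = std::uint32_t;
constexpr LockListPosition kInvalidPosition = 0;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  LockMode taken_mode;
  bool is_locked() const { return taken_mode != kNoLock; }  // assumed meaning
};

// Walks backwards from `from` and returns the last position at or before it
// that currently holds a lock, or kInvalidPosition if none does.
LockListPosition calculate_last_locked_entry_from(
    const std::vector<LockEntry>& array, LockListPosition from) {
  assert(from < array.size());
  for (LockListPosition pos = from; pos > kInvalidPosition; --pos) {
    if (array[pos].is_locked()) {
      return pos;
    }
  }
  return kInvalidPosition;
}

// The whole-list variant starts from the last active position.
// Requires array[0] (the unused slot) to exist.
LockListPosition calculate_last_locked_entry(const std::vector<LockEntry>& array) {
  return calculate_last_locked_entry_from(
      array, static_cast<LockListPosition>(array.size() - 1));
}

}  // namespace sketch
```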

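`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::cancel_async_single_lock(LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl)` [inline]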

Definition at line 724 of file retrospective_lock_list.hpp.

References ASSERT_ND, get_entry(), foedus::xct::RwLockableXctId::get_key_lock(), foedus::xct::LockEntry::is_enough(), foedus::xct::kNoLock, foedus::xct::kReadLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::mcs_block_, foedus::xct::LockEntry::preferred_mode_, and foedus::xct::LockEntry::taken_mode_.

`const LockEntry* foedus::xct::CurrentLockList::cbegin() const` [inline]

Definition at line 373 of file retrospective_lock_list.hpp.

`const LockEntry* foedus::xct::CurrentLockList::cend() const` [inline]

Definition at line 374 of file retrospective_lock_list.hpp.

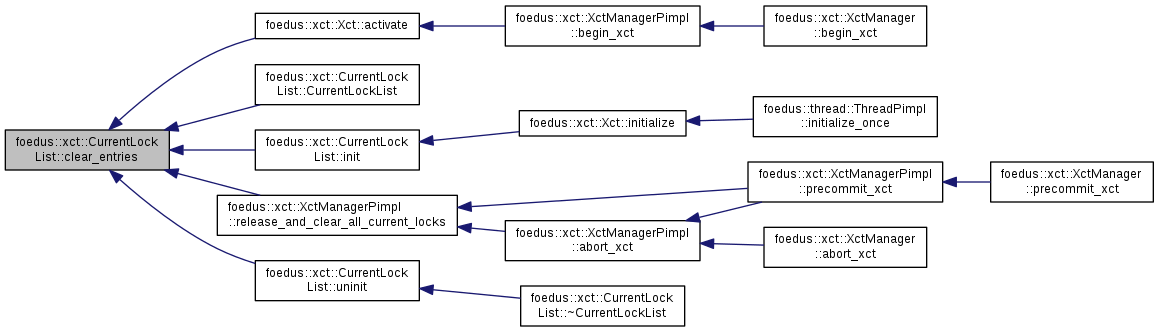

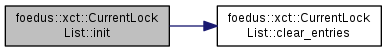

| void foedus::xct::CurrentLockList::clear_entries | ( | ) |

Definition at line 96 of file retrospective_lock_list.cpp.

References foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::taken_mode_, and foedus::xct::LockEntry::universal_lock_id_.

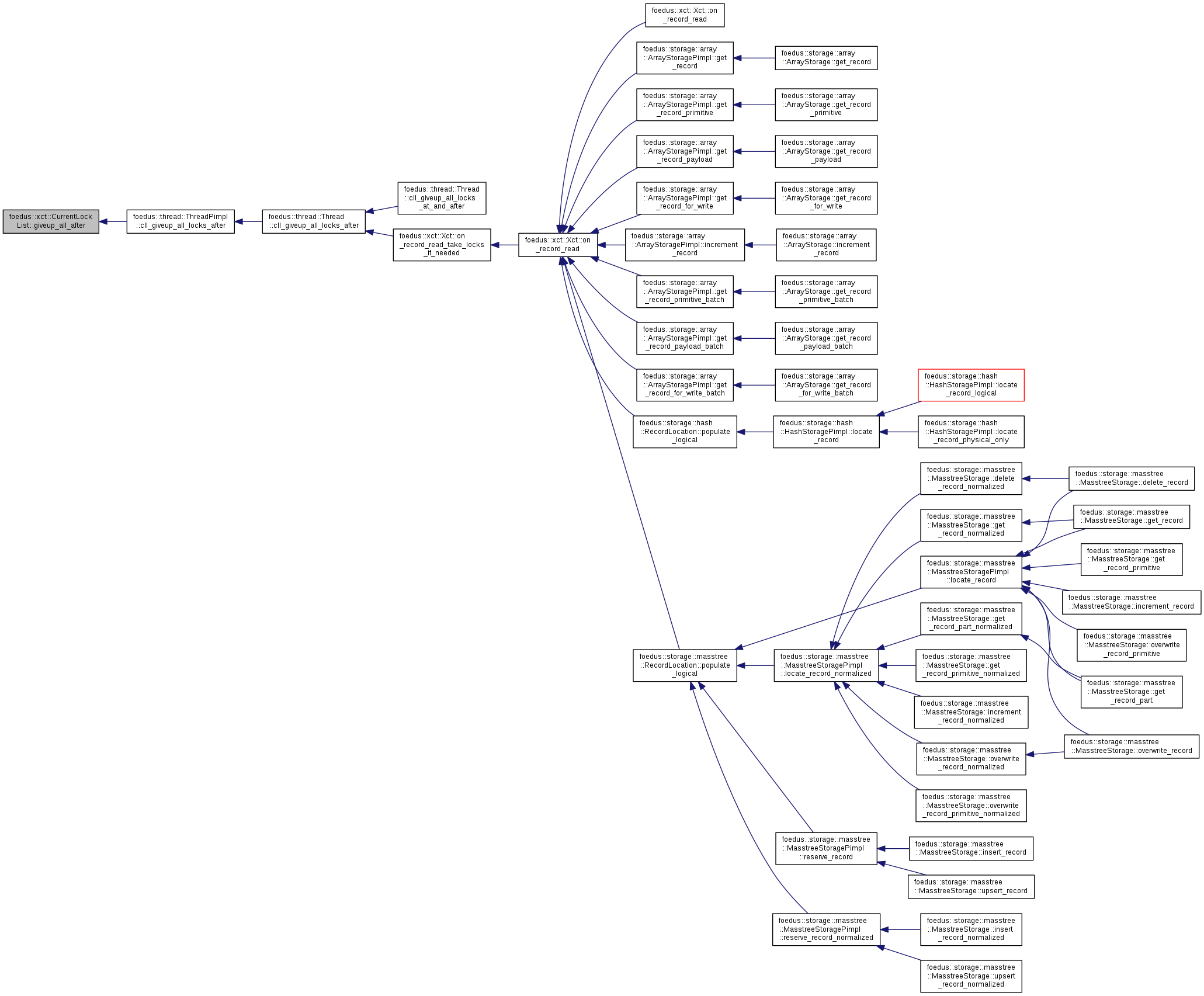

Referenced by foedus::xct::Xct::activate(), CurrentLockList(), init(), foedus::xct::XctManagerPimpl::release_and_clear_all_current_locks(), and uninit().

`LockEntry* foedus::xct::CurrentLockList::end()` [inline]

Definition at line 372 of file retrospective_lock_list.hpp.

Referenced by giveup_all_after(), and release_all_after().

`const LockEntry* foedus::xct::CurrentLockList::get_array() const` [inline]

Definition at line 350 of file retrospective_lock_list.hpp.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_lock().

`LockEntry* foedus::xct::CurrentLockList::get_array()` [inline]

Definition at line 351 of file retrospective_lock_list.hpp.

`uint32_t foedus::xct::CurrentLockList::get_capacity() const` [inline]

Definition at line 360 of file retrospective_lock_list.hpp.

`LockEntry* foedus::xct::CurrentLockList::get_entry(LockListPosition pos)` [inline]

Definition at line 352 of file retrospective_lock_list.hpp.

References ASSERT_ND, and is_valid_entry().

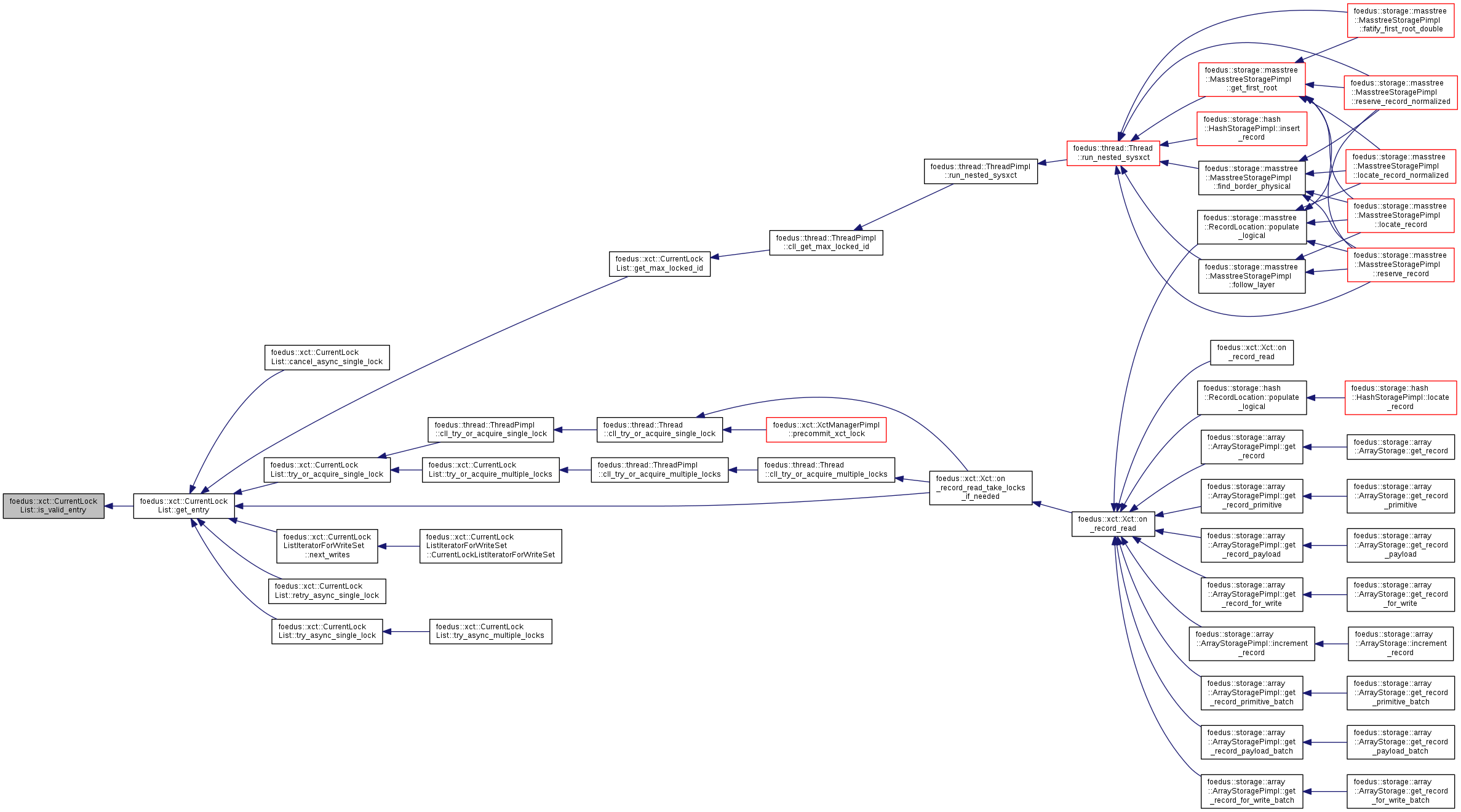

Referenced by cancel_async_single_lock(), get_max_locked_id(), foedus::xct::CurrentLockListIteratorForWriteSet::next_writes(), foedus::xct::Xct::on_record_read_take_locks_if_needed(), retry_async_single_lock(), try_async_single_lock(), and try_or_acquire_single_lock().

`const LockEntry* foedus::xct::CurrentLockList::get_entry(LockListPosition pos) const` [inline]

Definition at line 356 of file retrospective_lock_list.hpp.

References ASSERT_ND, and is_valid_entry().

`LockListPosition foedus::xct::CurrentLockList::get_last_active_entry() const` [inline]

Definition at line 361 of file retrospective_lock_list.hpp.

`LockListPosition foedus::xct::CurrentLockList::get_last_locked_entry() const` [inline]

Definition at line 383 of file retrospective_lock_list.hpp.

Referenced by foedus::xct::XctManagerPimpl::precommit_xct_lock().

`UniversalLockId foedus::xct::CurrentLockList::get_max_locked_id() const` [inline]

Definition at line 385 of file retrospective_lock_list.hpp.

References get_entry(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNullUniversalLockId, and foedus::xct::LockEntry::universal_lock_id_.

Referenced by foedus::thread::ThreadPimpl::cll_get_max_locked_id().

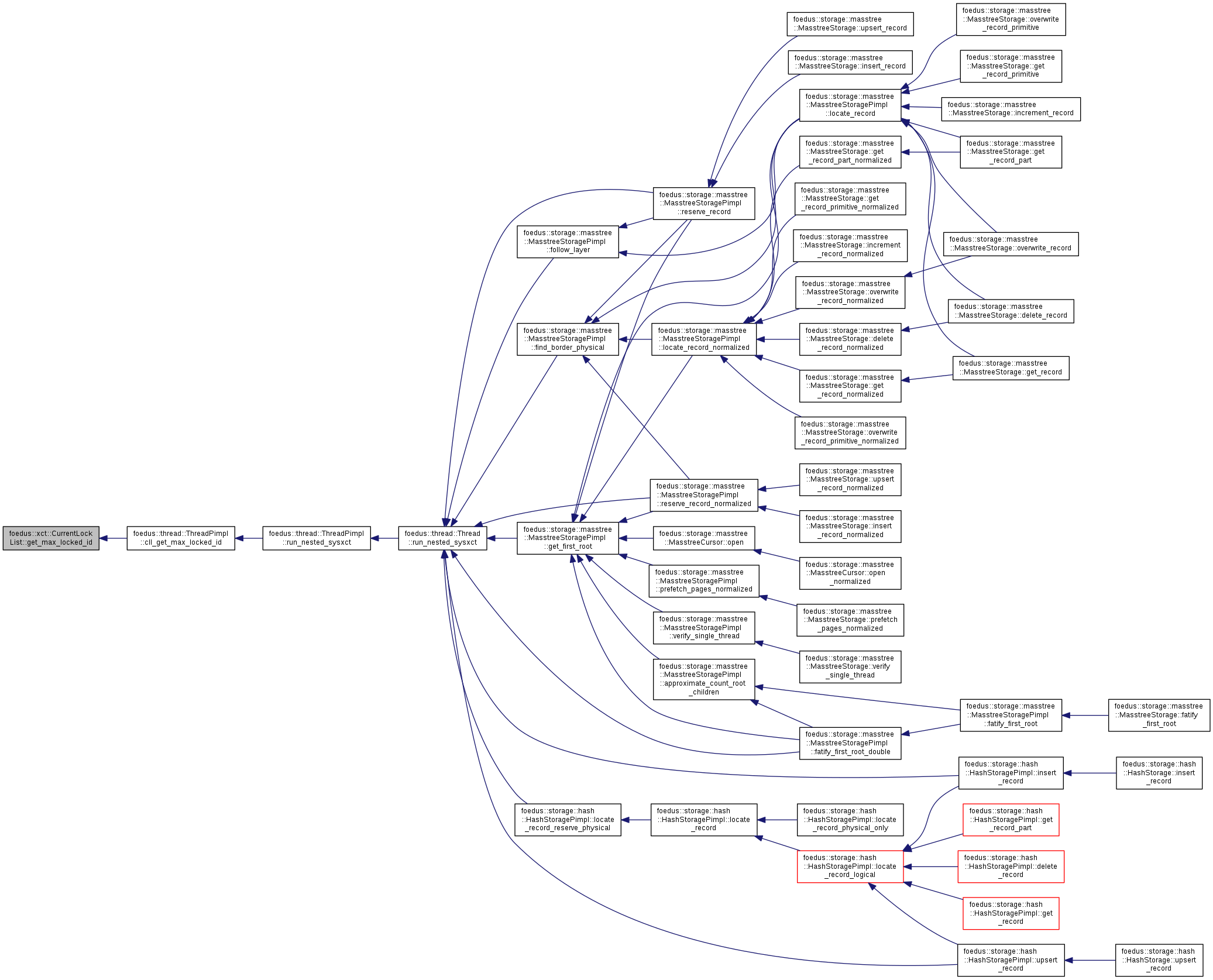

| LockListPosition foedus::xct::CurrentLockList::get_or_add_entry | ( | UniversalLockId | lock_id, |

| RwLockableXctId * | lock, | ||

| LockMode | preferred_mode | ||

| ) |

Adds an entry to this list, re-sorting part of the list if necessary to keep it sorted.

If there is an existing entry for the lock, it just returns its position. If not, this method creates a new entry with taken_mode=kNoLock.

Definition at line 204 of file retrospective_lock_list.cpp.

References assert_last_locked_entry(), ASSERT_ND, assert_sorted(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, lower_bound(), foedus::xct::LockEntry::preferred_mode_, foedus::xct::LockEntry::set(), and foedus::xct::xct_id_to_universal_lock_id().

Referenced by foedus::xct::Xct::on_record_read_take_locks_if_needed().
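The get-or-add behavior can be sketched with `std::lower_bound` followed by an insertion that shifts only the tail of the list, which is one way to "re-sort part of the list". This is an illustration under assumptions: it uses a growable `std::vector` and 0-based positions instead of the fixed-capacity `LockEntry` array and `LockListPosition` of the real class, and the stand-in types are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

bool id_less(const LockEntry& e, UniversalLockId id) {
  return e.universal_lock_id < id;
}

// Returns the 0-based position of the entry for lock_id. If the lock is
// already tracked, the existing entry is returned unchanged; otherwise a new
// entry with taken_mode == kNoLock is inserted at its sorted position, which
// shifts the entries after it right by one.
std::size_t get_or_add_entry(std::vector<LockEntry>& cll,
                             UniversalLockId lock_id, LockMode preferred_mode) {
  auto it = std::lower_bound(cll.begin(), cll.end(), lock_id, id_less);
  if (it != cll.end() && it->universal_lock_id == lock_id) {
    return static_cast<std::size_t>(it - cll.begin());
  }
  it = cll.insert(it, LockEntry{lock_id, preferred_mode, kNoLock});
  assert(it->taken_mode == kNoLock);
  return static_cast<std::size_t>(it - cll.begin());
}

}  // namespace sketch
```

Inserting in place keeps the list sorted at every step, so callers never need a separate full sort.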

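`const memory::GlobalVolatilePageResolver& foedus::xct::CurrentLockList::get_volatile_page_resolver() const` [inline]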

Definition at line 375 of file retrospective_lock_list.hpp.

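`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::giveup_all_after(UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl)` [inline]

Gives up the locks in the CLL that are not yet taken.

The preferred mode is set to either kNoLock or the same mode as taken_mode, and all incomplete async locks are cancelled. Unlike clear_entries(), this leaves the entries in place.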

Definition at line 826 of file retrospective_lock_list.hpp.

References ASSERT_ND, assert_sorted(), begin(), end(), foedus::xct::kNoLock, foedus::xct::kReadLock, and foedus::xct::kWriteLock.

Referenced by foedus::thread::ThreadPimpl::cll_giveup_all_locks_after().
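The preferred-mode rule above collapses to a single assignment: setting `preferred_mode` to `taken_mode` yields kNoLock for entries that hold nothing and keeps the held mode otherwise. In this sketch, the choice of which entries are affected (ids canonically after `address`) is an assumption made by analogy with `release_all_after()`; cancelling in-flight async requests is omitted, and the stand-in types are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// For entries after `address` (assumed scope), stop wanting anything beyond
// what is already held. Held locks are NOT released and entries stay in the
// list. Async cancellation is left out of this sketch.
void giveup_all_after(std::vector<LockEntry>& cll, UniversalLockId address) {
  for (LockEntry& entry : cll) {
    if (entry.universal_lock_id <= address) {
      continue;  // at or before address: untouched
    }
    entry.preferred_mode = entry.taken_mode;  // kNoLock if nothing is held
    assert(entry.preferred_mode == entry.taken_mode);
  }
}

}  // namespace sketch
```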

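`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::giveup_all_at_and_after(UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl)` [inline]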

Definition at line 886 of file retrospective_lock_list.hpp.

References foedus::xct::kNullUniversalLockId.

| void foedus::xct::CurrentLockList::init | ( | LockEntry * | array, |

| uint32_t | capacity, | ||

| const memory::GlobalVolatilePageResolver & | resolver | ||

| ) |

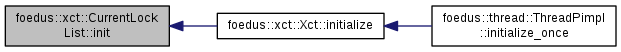

Definition at line 86 of file retrospective_lock_list.cpp.

References clear_entries().

Referenced by foedus::xct::Xct::initialize().

`bool foedus::xct::CurrentLockList::is_empty() const` [inline]

Definition at line 365 of file retrospective_lock_list.hpp.

References foedus::xct::kLockListPositionInvalid.

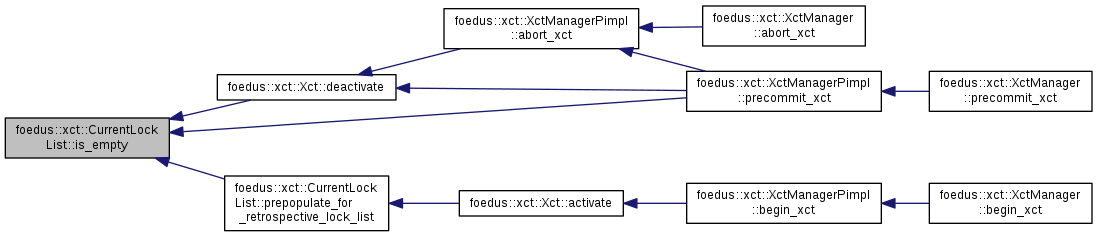

Referenced by foedus::xct::Xct::deactivate(), foedus::xct::XctManagerPimpl::precommit_xct(), and prepopulate_for_retrospective_lock_list().

`bool foedus::xct::CurrentLockList::is_valid_entry(LockListPosition pos) const` [inline]

Definition at line 362 of file retrospective_lock_list.hpp.

References foedus::xct::kLockListPositionInvalid.

Referenced by get_entry().

| LockListPosition foedus::xct::CurrentLockList::lower_bound | ( | UniversalLockId | lock | ) | const |

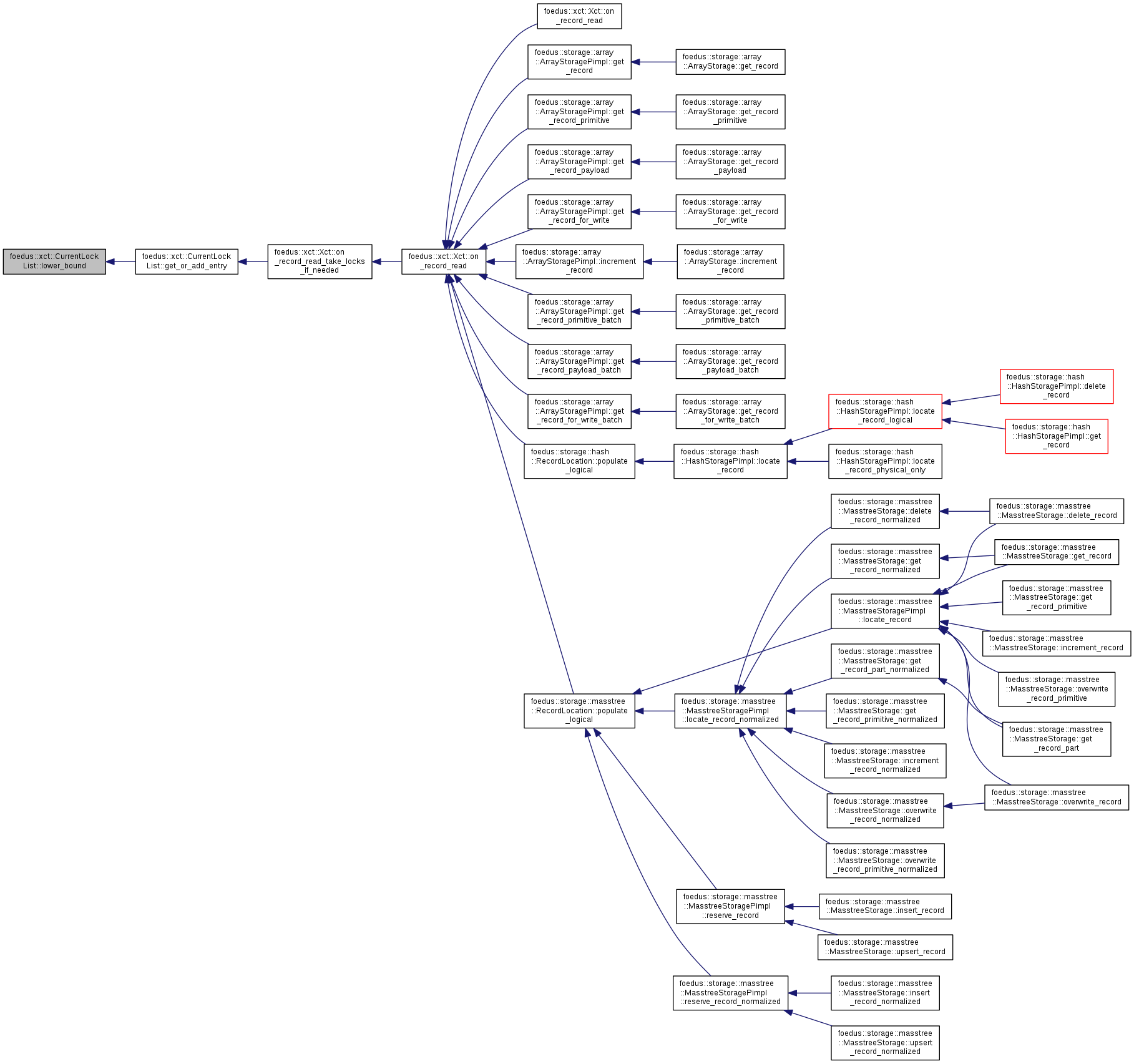

Analogous to std::lower_bound() for the given lock.

Definition at line 196 of file retrospective_lock_list.cpp.

References assert_last_locked_entry().

Referenced by get_or_add_entry().
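How `lower_bound()` and `binary_search()` relate on a sorted list can be sketched with `std::lower_bound` and a comparator. Assumptions: 0-based `std::size_t` positions and a hypothetical `kNotFound` sentinel stand in for `LockListPosition` and `kLockListPositionInvalid`, whose exact numeric conventions this sketch does not reproduce; the helper names are hypothetical too.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

namespace sketch {

// Hypothetical stand-ins: 0-based positions and a kNotFound sentinel
// replace LockListPosition and kLockListPositionInvalid.
using UniversalLockId = std::uint64_t;
using Position = std::size_t;
constexpr Position kNotFound = std::numeric_limits<Position>::max();

struct LockEntry {
  UniversalLockId universal_lock_id;
};

// Orders an entry against a bare id, as std::lower_bound expects.
bool id_less(const LockEntry& e, UniversalLockId id) {
  return e.universal_lock_id < id;
}

// Like std::lower_bound: the first position whose id is >= lock,
// or entries.size() if every id is smaller. O(log n).
Position lower_bound_pos(const std::vector<LockEntry>& entries, UniversalLockId lock) {
  auto it = std::lower_bound(entries.begin(), entries.end(), lock, id_less);
  return static_cast<Position>(it - entries.begin());
}

// Like std::binary_search, but returns where the match is (or kNotFound)
// instead of a bool.
Position binary_search_pos(const std::vector<LockEntry>& entries, UniversalLockId lock) {
  const Position pos = lower_bound_pos(entries, lock);
  if (pos < entries.size() && entries[pos].universal_lock_id == lock) {
    return pos;
  }
  return kNotFound;
}

}  // namespace sketch
```

Using lower bound as the primitive means one search serves both lookups and insertions: the same position is either the match or the slot where a new entry belongs.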

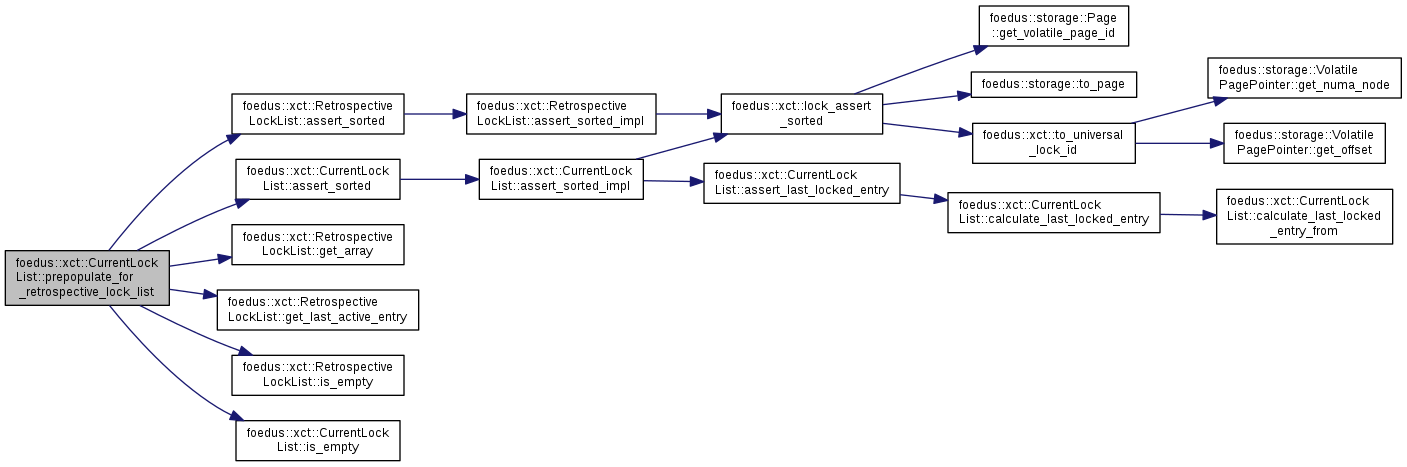

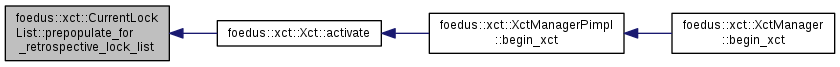

| void foedus::xct::CurrentLockList::prepopulate_for_retrospective_lock_list | ( | const RetrospectiveLockList & | rll | ) |

Another batch-insert method used at the beginning of a transaction.

When an Xct has an RLL, it is highly likely to lock all of the locks in it, so it pre-populates CLL entries for all of them at the beginning. This is both for simplicity and for performance.

Definition at line 445 of file retrospective_lock_list.cpp.

References ASSERT_ND, foedus::xct::RetrospectiveLockList::assert_sorted(), assert_sorted(), foedus::xct::RetrospectiveLockList::get_array(), foedus::xct::RetrospectiveLockList::get_last_active_entry(), foedus::xct::RetrospectiveLockList::is_empty(), is_empty(), and foedus::xct::kLockListPositionInvalid.

Referenced by foedus::xct::Xct::activate().
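Prepopulating from an already-sorted RLL reduces to a linear copy, which is where the performance benefit comes from: no per-entry search or shifting is needed. The sketch assumes, as the references to `is_empty()` suggest, that the CLL starts empty, and that each RLL entry carries a lock id and a preferred mode; `RllEntry` and `prepopulate()` are hypothetical names, not FOEDUS types.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

// What a retrospective list remembers per lock (assumed shape).
struct RllEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
};

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// Copies a sorted RLL into an empty CLL in one linear pass. Since the source
// is sorted, so is the result. Nothing is locked yet (taken_mode == kNoLock).
void prepopulate(std::vector<LockEntry>& cll, const std::vector<RllEntry>& rll) {
  assert(cll.empty());
  cll.reserve(rll.size());
  for (const RllEntry& r : rll) {
    assert(cll.empty() || cll.back().universal_lock_id < r.universal_lock_id);
    cll.push_back(LockEntry{r.universal_lock_id, r.preferred_mode, kNoLock});
  }
}

}  // namespace sketch
```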

|

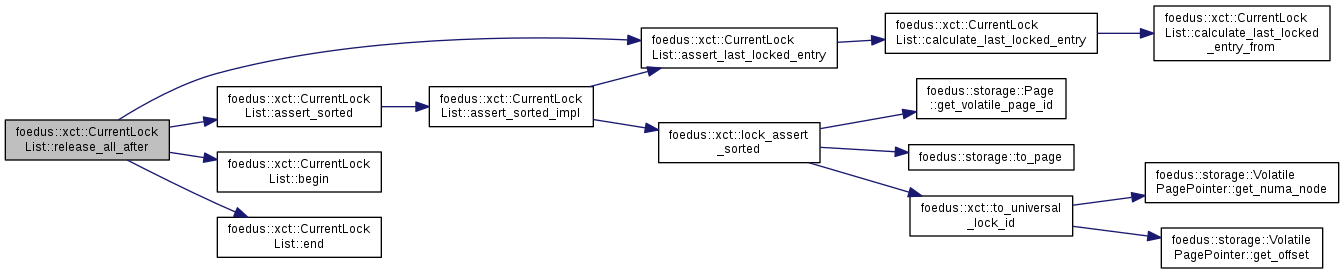

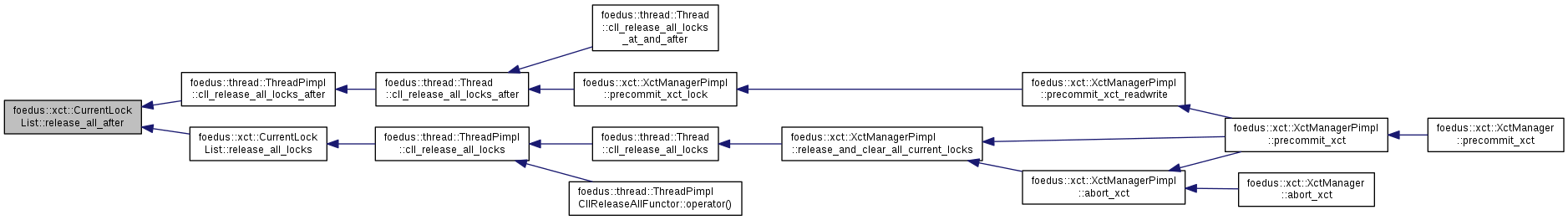

inline |

Release all locks in CLL whose addresses are canonically ordered before the parameter.

This is used where we need to rule out the risk of deadlock. Unlike clear_entries(), this leaves the entries.

Definition at line 753 of file retrospective_lock_list.hpp.

References assert_last_locked_entry(), ASSERT_ND, assert_sorted(), begin(), end(), foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::kReadLock, and foedus::xct::kWriteLock.

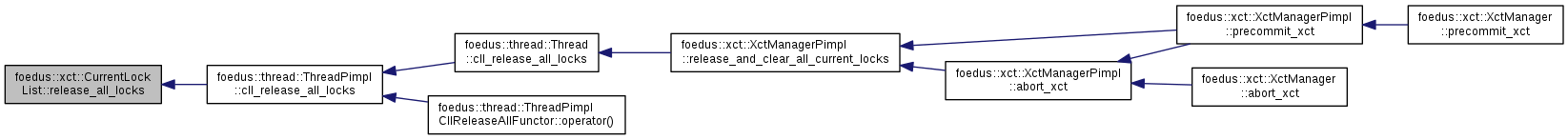

Referenced by foedus::thread::ThreadPimpl::cll_release_all_locks_after(), and release_all_locks().
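The release rule can be sketched as a scan that releases every held lock whose id sorts after `address` while leaving all entries in the list. The lock implementation is passed as a template parameter, mirroring `MCS_RW_IMPL`; its `release(UniversalLockId, LockMode)` member is a hypothetical interface invented for this sketch, as are the stand-in types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// LOCK_IMPL stands in for MCS_RW_IMPL: any type with a member
// release(UniversalLockId, LockMode) works (hypothetical interface).
// Releases every held lock whose id sorts after `address`, keeps all entries
// (preferred_mode untouched), and returns how many locks were released.
template <typename LOCK_IMPL>
std::size_t release_all_after(std::vector<LockEntry>& cll,
                              UniversalLockId address, LOCK_IMPL* impl) {
  assert(impl != nullptr);
  std::size_t released = 0;
  for (LockEntry& entry : cll) {
    if (entry.universal_lock_id <= address || entry.taken_mode == kNoLock) {
      continue;  // at or before address, or nothing held: leave as is
    }
    impl->release(entry.universal_lock_id, entry.taken_mode);
    entry.taken_mode = kNoLock;  // the entry stays; only the lock goes
    ++released;
  }
  return released;
}

}  // namespace sketch
```

If real lock ids are never 0, calling this with `address == 0` releases everything, which matches how `release_all_locks()` is described as passing `kNullUniversalLockId` (assuming that constant is 0). Likewise, `release_all_at_and_after(address)` corresponds to `address - 1` here.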

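`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::release_all_at_and_after(UniversalLockId address, MCS_RW_IMPL *mcs_rw_impl)` [inline]

Same as release_all_after(address - 1).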

Definition at line 815 of file retrospective_lock_list.hpp.

References foedus::xct::kNullUniversalLockId.

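`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::release_all_locks(MCS_RW_IMPL *mcs_rw_impl)` [inline]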

Definition at line 473 of file retrospective_lock_list.hpp.

References foedus::xct::kNullUniversalLockId, and release_all_after().

Referenced by foedus::thread::ThreadPimpl::cll_release_all_locks().

|

inline |

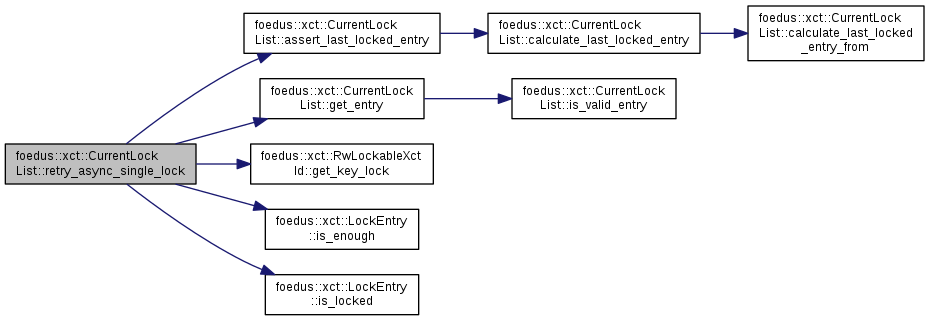

Definition at line 696 of file retrospective_lock_list.hpp.

References assert_last_locked_entry(), ASSERT_ND, get_entry(), foedus::xct::RwLockableXctId::get_key_lock(), foedus::xct::LockEntry::is_enough(), foedus::xct::LockEntry::is_locked(), foedus::xct::kNoLock, foedus::xct::kReadLock, foedus::xct::kWriteLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::mcs_block_, foedus::xct::LockEntry::preferred_mode_, and foedus::xct::LockEntry::taken_mode_.

|

inline |

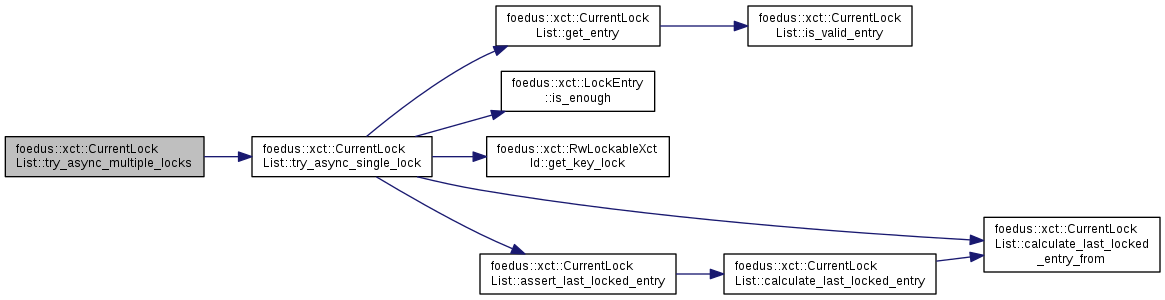

Definition at line 742 of file retrospective_lock_list.hpp.

References ASSERT_ND, foedus::xct::kLockListPositionInvalid, and try_async_single_lock().

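`template<typename MCS_RW_IMPL> void foedus::xct::CurrentLockList::try_async_single_lock(LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl)` [inline]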

Definition at line 649 of file retrospective_lock_list.hpp.

References foedus::xct::AcquireAsyncRet::acquired_, assert_last_locked_entry(), ASSERT_ND, foedus::xct::AcquireAsyncRet::block_index_, calculate_last_locked_entry_from(), get_entry(), foedus::xct::RwLockableXctId::get_key_lock(), foedus::xct::LockEntry::is_enough(), foedus::xct::kNoLock, foedus::xct::kReadLock, foedus::xct::kWriteLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::mcs_block_, foedus::xct::LockEntry::preferred_mode_, and foedus::xct::LockEntry::taken_mode_.

Referenced by try_async_multiple_locks().

`template<typename MCS_RW_IMPL> ErrorCode foedus::xct::CurrentLockList::try_or_acquire_multiple_locks(LockListPosition upto_pos, MCS_RW_IMPL *mcs_rw_impl)` [inline]

Acquires multiple locks up to the given position in canonical order.

The thread invokes this to keep itself in canonical mode. The method is unconditional: it waits forever until the locks are acquired. Hence it must be invoked while the thread is still in canonical mode; otherwise it risks deadlock.

Definition at line 636 of file retrospective_lock_list.hpp.

References ASSERT_ND, CHECK_ERROR_CODE, foedus::kErrorCodeOk, foedus::xct::kLockListPositionInvalid, and try_or_acquire_single_lock().

Referenced by foedus::thread::ThreadPimpl::cll_try_or_acquire_multiple_locks().
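Taking the locks front to back is what keeps the thread in canonical mode: every wait is for a lock that sorts after all locks already held. The sketch below checks that precondition and then acquires unconditionally. Assumptions: `LOCK_IMPL` stands in for `MCS_RW_IMPL` with a hypothetical blocking `acquire(UniversalLockId, LockMode)` member; a held mode satisfies the preference when its enum value is at least the preferred one; read-to-write upgrades are not covered; and the function returns `void` rather than `ErrorCode`. `acquire_multiple_locks()` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// Locks every entry in [0, upto_pos] that does not yet hold its preferred
// mode, front to back (canonical order). LOCK_IMPL needs a blocking
// acquire(UniversalLockId, LockMode) member (assumed interface).
template <typename LOCK_IMPL>
void acquire_multiple_locks(std::vector<LockEntry>& cll, std::size_t upto_pos,
                            LOCK_IMPL* impl) {
  assert(upto_pos < cll.size());
  // Precondition (canonical mode): no lock is held after the first entry we
  // still need, so each blocking wait below is in ascending id order.
  std::size_t first_needed = upto_pos + 1;
  for (std::size_t i = 0; i <= upto_pos; ++i) {
    if (cll[i].taken_mode < cll[i].preferred_mode) {
      first_needed = i;
      break;
    }
  }
  for (std::size_t i = first_needed + 1; i < cll.size(); ++i) {
    assert(cll[i].taken_mode == kNoLock);
  }
  for (std::size_t i = 0; i <= upto_pos; ++i) {
    LockEntry& entry = cll[i];
    if (entry.taken_mode >= entry.preferred_mode) continue;  // already enough
    assert(entry.taken_mode == kNoLock);  // upgrades are out of scope here
    impl->acquire(entry.universal_lock_id, entry.preferred_mode);  // may wait
    entry.taken_mode = entry.preferred_mode;
  }
}

}  // namespace sketch
```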

`template<typename MCS_RW_IMPL> ErrorCode foedus::xct::CurrentLockList::try_or_acquire_single_lock(LockListPosition pos, MCS_RW_IMPL *mcs_rw_impl)` [inline]

Methods that take or release locks receive MCS_RW_IMPL, a template param.

To avoid a vtable and to allow inlining, the CurrentLockList methods of this kind are defined inline at the bottom of retrospective_lock_list.hpp.

Acquires one lock in this CLL.

This method automatically checks whether we are following canonical mode. In canonical mode it acquires the lock unconditionally (it never returns until the lock is acquired); when not in canonical mode it tries the lock instantaneously (returning RaceAbort immediately if that fails).

These methods are inlined primarily because they receive a template param, not because we want to inline them for performance. We could use explicit instantiations instead, but the methods are not that lengthy, so simply inlining them is easier in this case.

Definition at line 569 of file retrospective_lock_list.hpp.

References assert_last_locked_entry(), ASSERT_ND, calculate_last_locked_entry_from(), get_entry(), foedus::xct::RwLockableXctId::get_key_lock(), foedus::xct::LockEntry::is_enough(), foedus::xct::LockEntry::is_locked(), foedus::kErrorCodeOk, foedus::kErrorCodeXctLockAbort, foedus::xct::kLockListPositionInvalid, foedus::xct::kNoLock, foedus::xct::kReadLock, foedus::xct::kWriteLock, foedus::xct::LockEntry::lock_, foedus::xct::LockEntry::mcs_block_, foedus::xct::LockEntry::preferred_mode_, and foedus::xct::LockEntry::taken_mode_.

Referenced by foedus::thread::ThreadPimpl::cll_try_or_acquire_single_lock(), and try_or_acquire_multiple_locks().
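The canonical-mode decision can be sketched as follows: if no lock after `pos` is held, waiting on entry `pos` cannot close a cycle with another thread that also locks in ascending order, so the sketch blocks; otherwise it only tries and gives up at once. This is an illustration, not the FOEDUS logic. The meaning of canonical mode used here, the `acquire`/`try_acquire` interface of `LOCK_IMPL`, and the `kOk`/`kLockAbort` codes are all assumptions, and read-to-write upgrades are not covered.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Hypothetical stand-ins, not the FOEDUS types or error codes.
using UniversalLockId = std::uint64_t;
enum LockMode { kNoLock = 0, kReadLock = 1, kWriteLock = 2 };
enum ErrorCode { kOk = 0, kLockAbort = 1 };

struct LockEntry {
  UniversalLockId universal_lock_id;
  LockMode preferred_mode;
  LockMode taken_mode;
};

// Assumed meaning of canonical mode: no lock after `pos` is held, so every
// held lock sorts before the one we are about to wait for.
bool in_canonical_mode(const std::vector<LockEntry>& cll, std::size_t pos) {
  for (std::size_t i = pos + 1; i < cll.size(); ++i) {
    if (cll[i].taken_mode != kNoLock) return false;
  }
  return true;
}

// LOCK_IMPL plays the role of MCS_RW_IMPL. Assumed interface:
//   void acquire(UniversalLockId, LockMode);      // blocks until granted
//   bool try_acquire(UniversalLockId, LockMode);  // never blocks
template <typename LOCK_IMPL>
ErrorCode try_or_acquire_single_lock(std::vector<LockEntry>& cll,
                                     std::size_t pos, LOCK_IMPL* impl) {
  assert(pos < cll.size());
  LockEntry& entry = cll[pos];
  if (entry.taken_mode >= entry.preferred_mode) {
    return kOk;  // already holding a strong-enough lock
  }
  assert(entry.taken_mode == kNoLock);  // read-to-write upgrades not covered
  if (in_canonical_mode(cll, pos)) {
    impl->acquire(entry.universal_lock_id, entry.preferred_mode);  // may wait
  } else if (!impl->try_acquire(entry.universal_lock_id, entry.preferred_mode)) {
    return kLockAbort;  // waiting out of order could deadlock: give up now
  }
  entry.taken_mode = entry.preferred_mode;
  return kOk;
}

}  // namespace sketch
```

Passing the lock implementation as a template parameter, as the text above describes for `MCS_RW_IMPL`, lets any type with the right members plug in without a vtable; the test doubles used to exercise this sketch are ordinary local structs.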

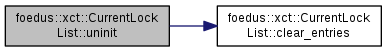

| void foedus::xct::CurrentLockList::uninit | ( | ) |

Definition at line 109 of file retrospective_lock_list.cpp.

References clear_entries().

Referenced by ~CurrentLockList().

|

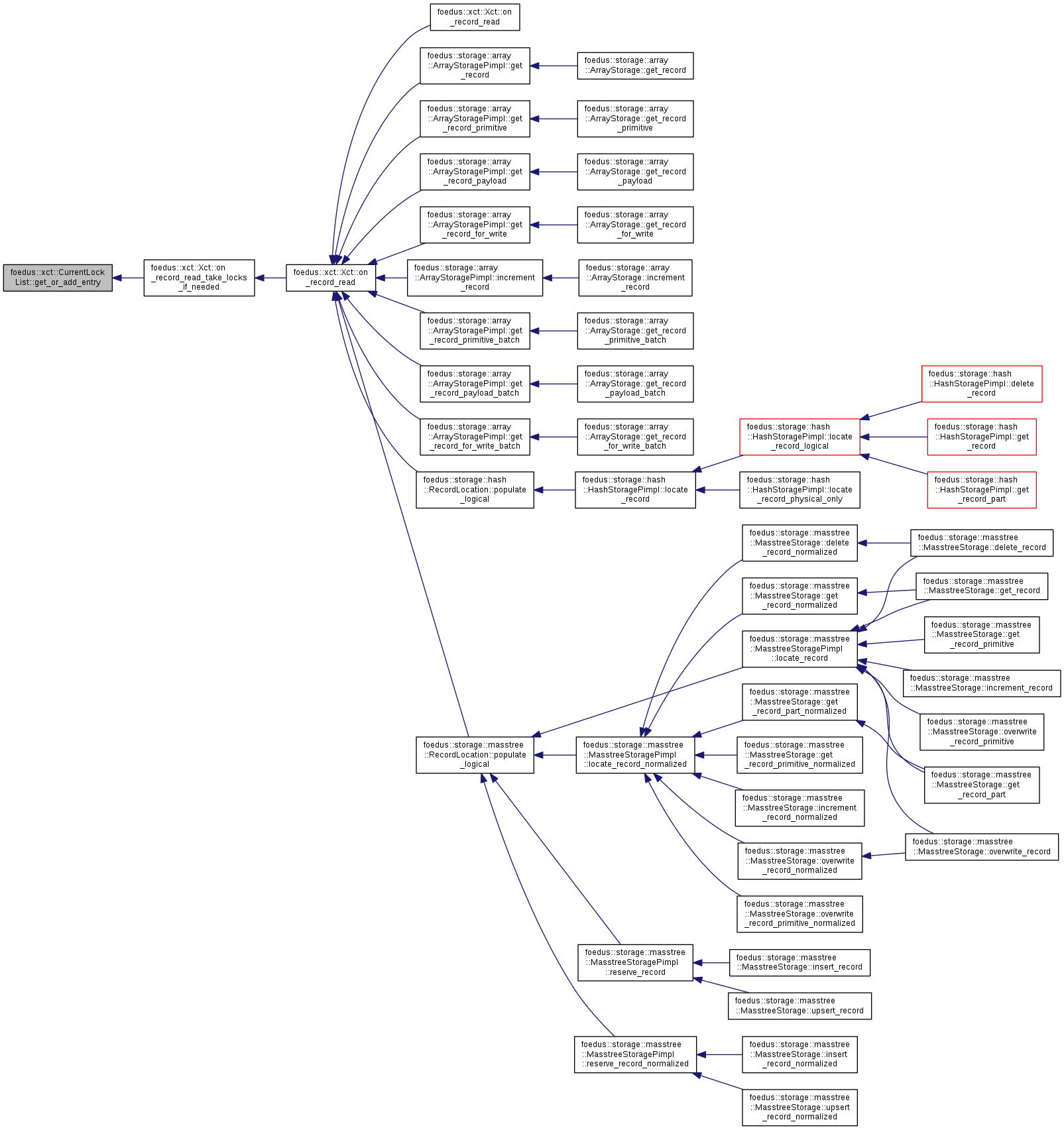

friend |

Definition at line 132 of file retrospective_lock_list.cpp.