|

libfoedus-core

FOEDUS Core Library

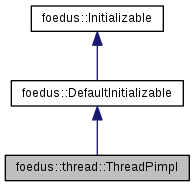

Pimpl object of Thread.

A private pimpl object for Thread. Do not include this header from a client program unless you know what you are doing.

In particular, this class relies heavily on C++11 classes, which is why it is separated from Thread. Unless your client program allows C++11, heed the notices in "C++11 Keywords in Public Headers".
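As a rough illustration of the separation described above, the following is a minimal pimpl sketch. `WorkerSketch` and `WorkerSketchPimpl` are hypothetical names invented for this example and are not the FOEDUS declarations; the point is only that the public class holds an opaque pointer, so C++11-only members such as `std::atomic` stay out of the public header.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// ---- What a "public header" would contain: no C++11-only types. ----
class WorkerSketchPimpl;  // forward declaration only (hypothetical name)

class WorkerSketch {  // hypothetical stand-in for a public class such as Thread
 public:
  explicit WorkerSketch(uint16_t id);
  ~WorkerSketch();
  uint16_t get_id() const;
  bool is_started() const;
  void start();

 private:
  // Declared but never defined: copying would double-delete pimpl_.
  WorkerSketch(const WorkerSketch&);
  WorkerSketch& operator=(const WorkerSketch&);

  WorkerSketchPimpl* pimpl_;  // opaque pointer to the private implementation
};

// ---- What the "private header" would contain: free to use C++11. ----
class WorkerSketchPimpl {
 public:
  WorkerSketchPimpl(WorkerSketch* holder, uint16_t id)
      : holder_(holder), id_(id), started_(false) {}

  WorkerSketch* const holder_;  // the public object that holds this pimpl
  const uint16_t id_;
  std::atomic<bool> started_;   // C++11 class hidden from client code
};

// ---- Public methods simply delegate to the pimpl object. ----
WorkerSketch::WorkerSketch(uint16_t id) : pimpl_(new WorkerSketchPimpl(this, id)) {}
WorkerSketch::~WorkerSketch() { delete pimpl_; }
uint16_t WorkerSketch::get_id() const { return pimpl_->id_; }
bool WorkerSketch::is_started() const { return pimpl_->started_.load(); }
void WorkerSketch::start() { pimpl_->started_.store(true); }
```

A client that only includes the public part never sees `<atomic>`, which is the property the note above is concerned with.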

Definition at line 159 of file thread_pimpl.hpp.

#include <thread_pimpl.hpp>

Public Member Functions | |

| ThreadPimpl ()=delete | |

| ThreadPimpl (Engine *engine, Thread *holder, ThreadId id, ThreadGlobalOrdinal global_ordinal) | |

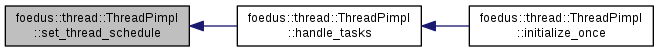

| ErrorStack | initialize_once () override final |

| ErrorStack | uninitialize_once () override final |

| void | handle_tasks () |

| Main routine of the worker thread. More... | |

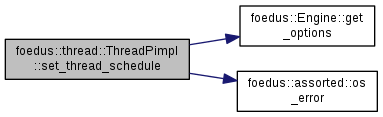

| void | set_thread_schedule () |

| initializes the thread's policy/priority More... | |

| bool | is_stop_requested () const |

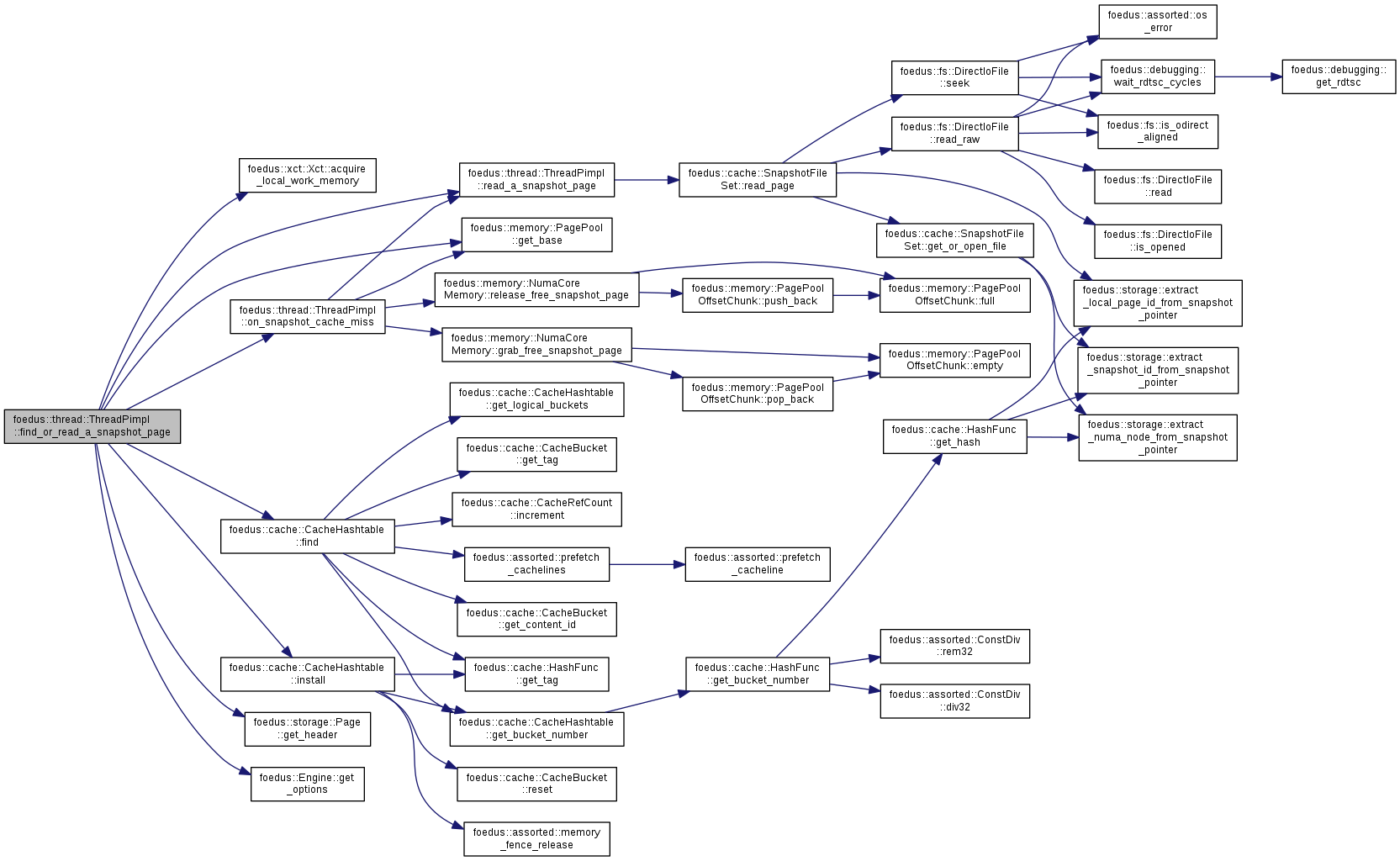

| ErrorCode | find_or_read_a_snapshot_page (storage::SnapshotPagePointer page_id, storage::Page **out) |

| Find the given page in snapshot cache, reading it if not found. More... | |

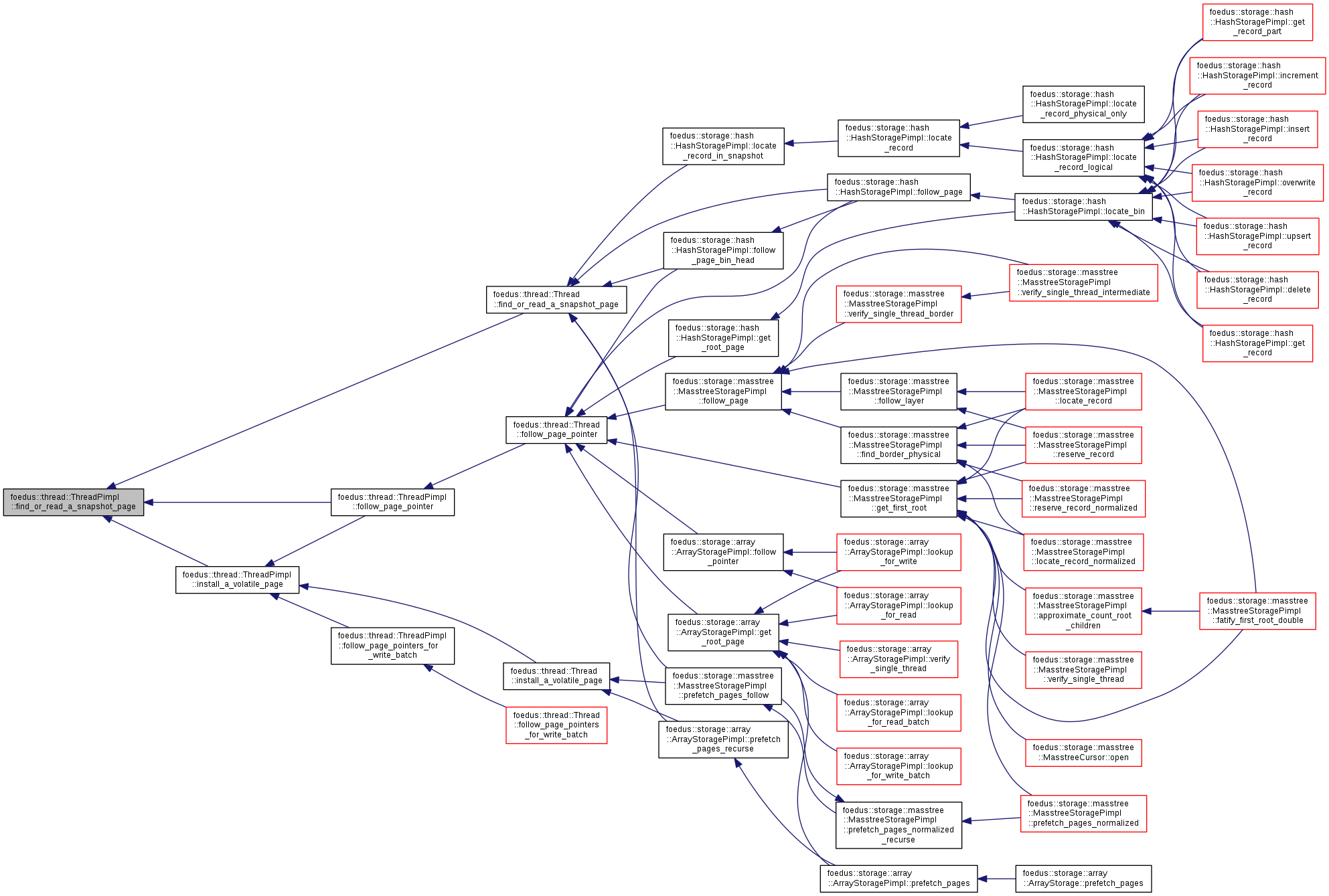

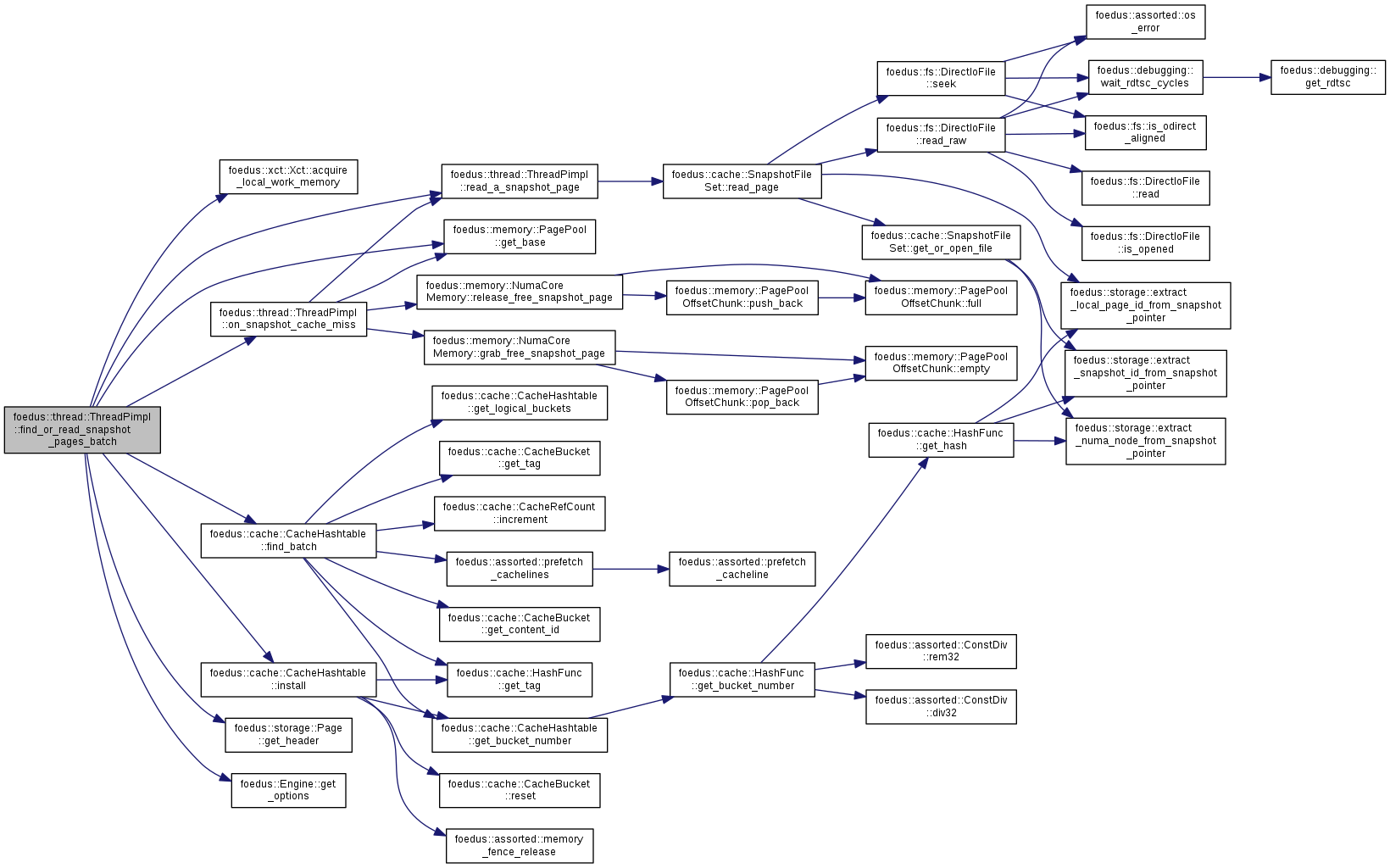

| ErrorCode | find_or_read_snapshot_pages_batch (uint16_t batch_size, const storage::SnapshotPagePointer *page_ids, storage::Page **out) |

| Batched version of find_or_read_a_snapshot_page(). More... | |

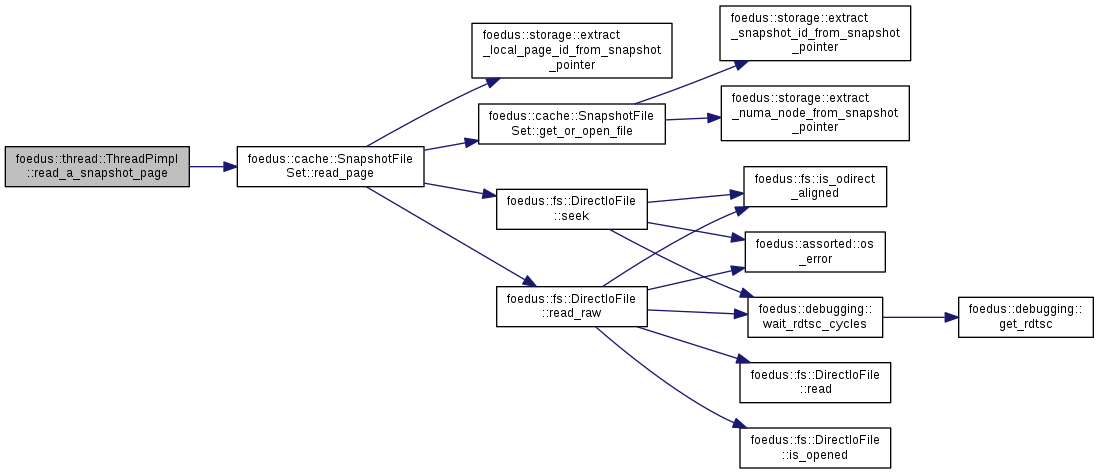

| ErrorCode | read_a_snapshot_page (storage::SnapshotPagePointer page_id, storage::Page *buffer) __attribute__((always_inline)) |

| Read a snapshot page using the thread-local file descriptor set. More... | |

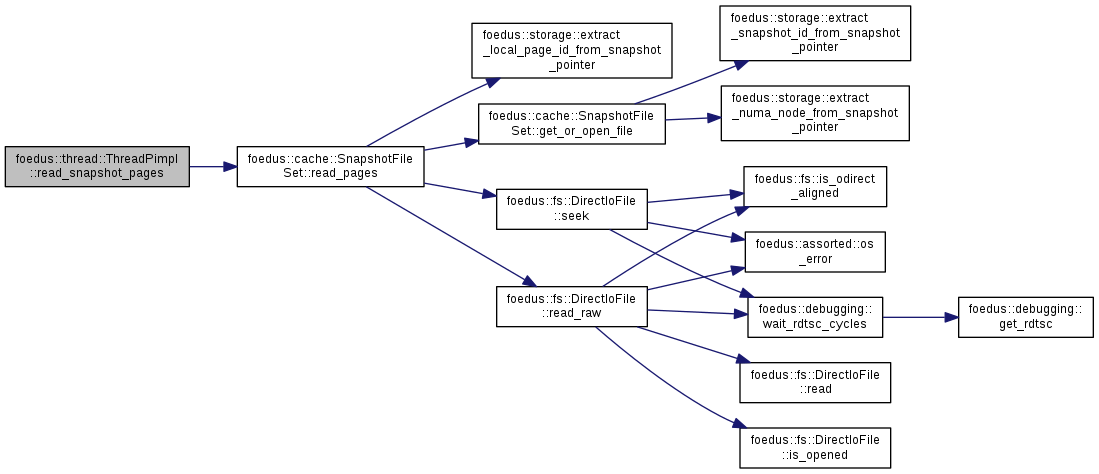

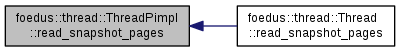

| ErrorCode | read_snapshot_pages (storage::SnapshotPagePointer page_id_begin, uint32_t page_count, storage::Page *buffer) __attribute__((always_inline)) |

| Read contiguous pages in one shot. More... | |

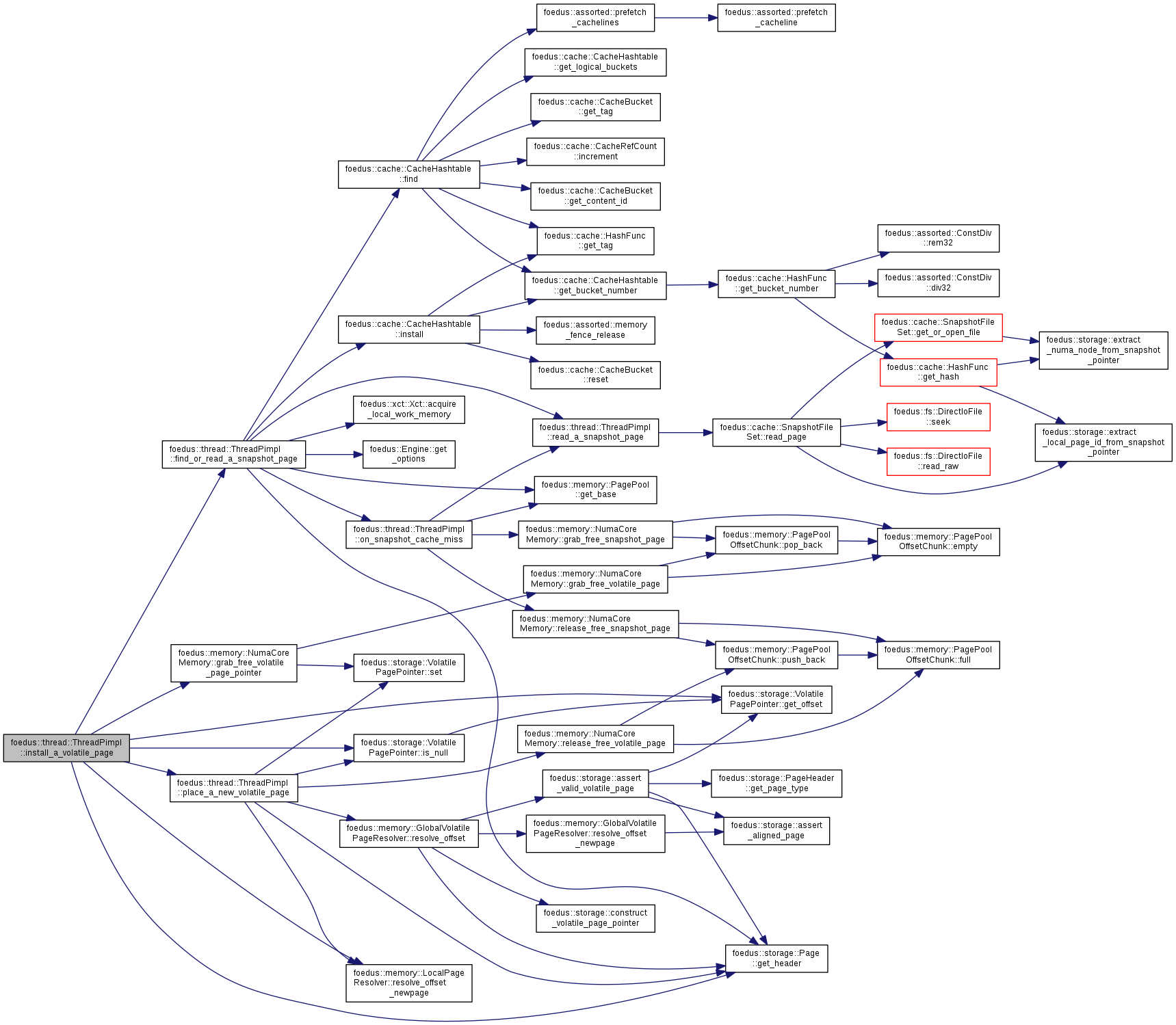

| ErrorCode | install_a_volatile_page (storage::DualPagePointer *pointer, storage::Page **installed_page) |

| Installs a volatile page to the given dual pointer as a copy of the snapshot page. More... | |

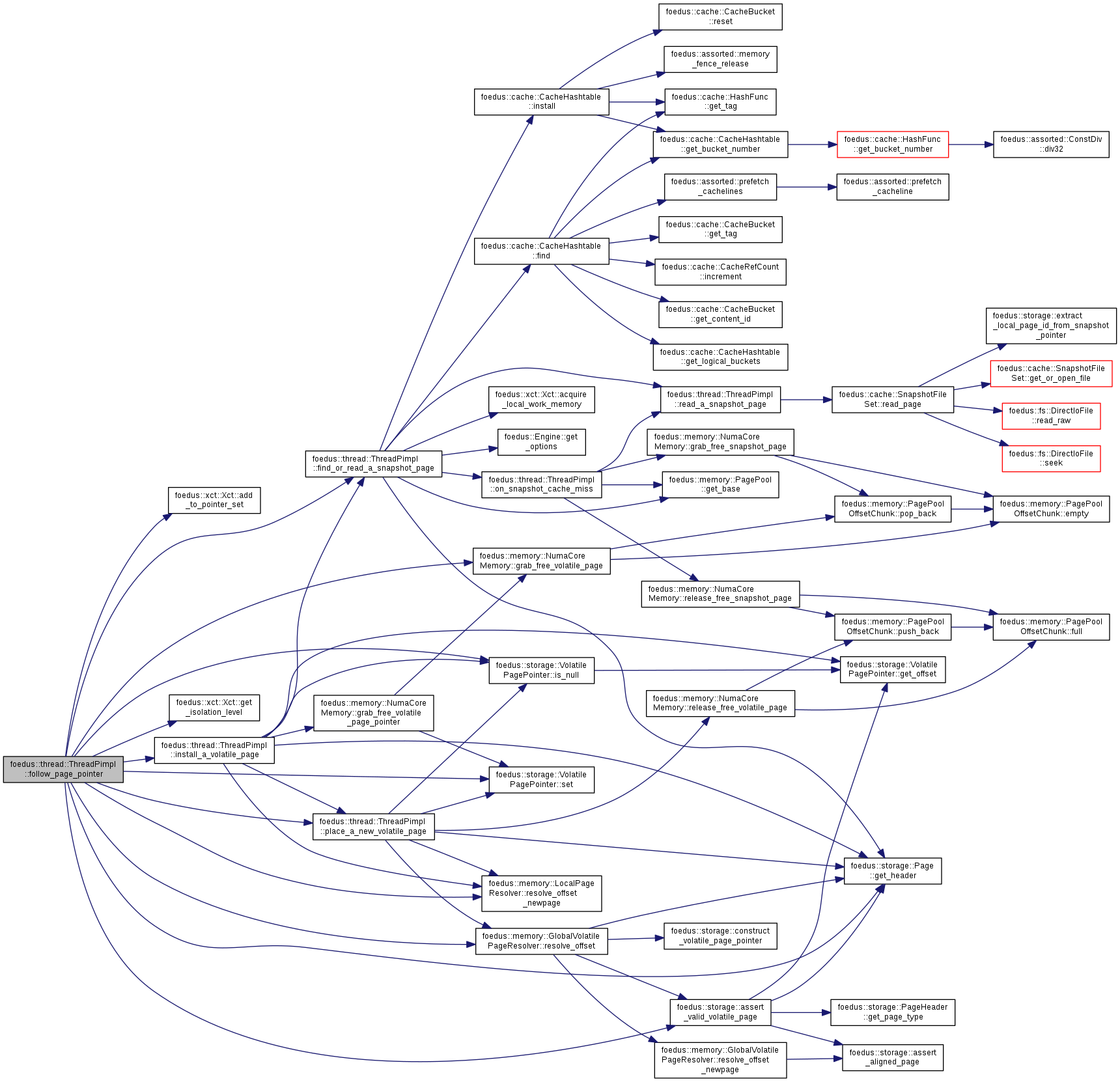

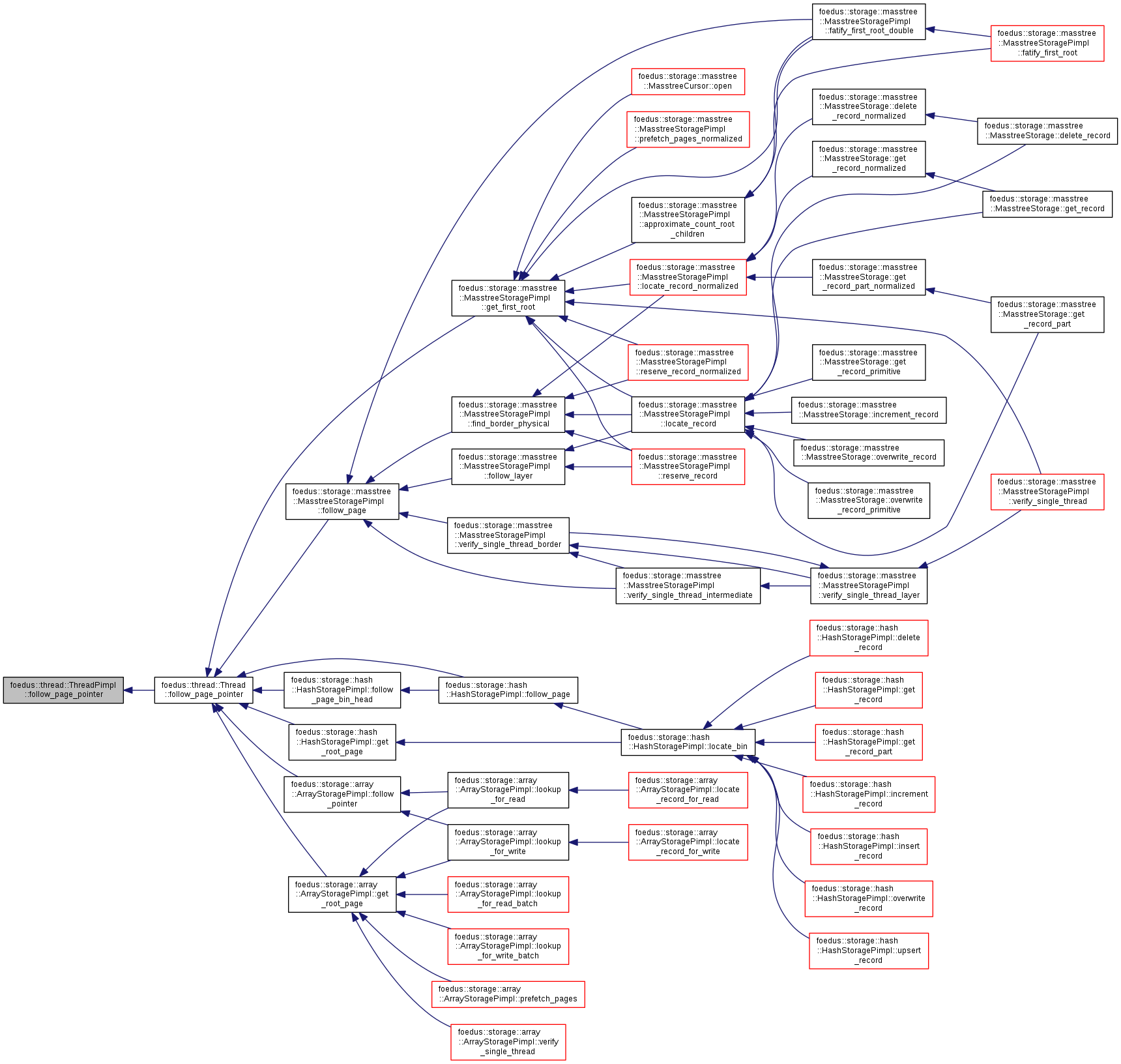

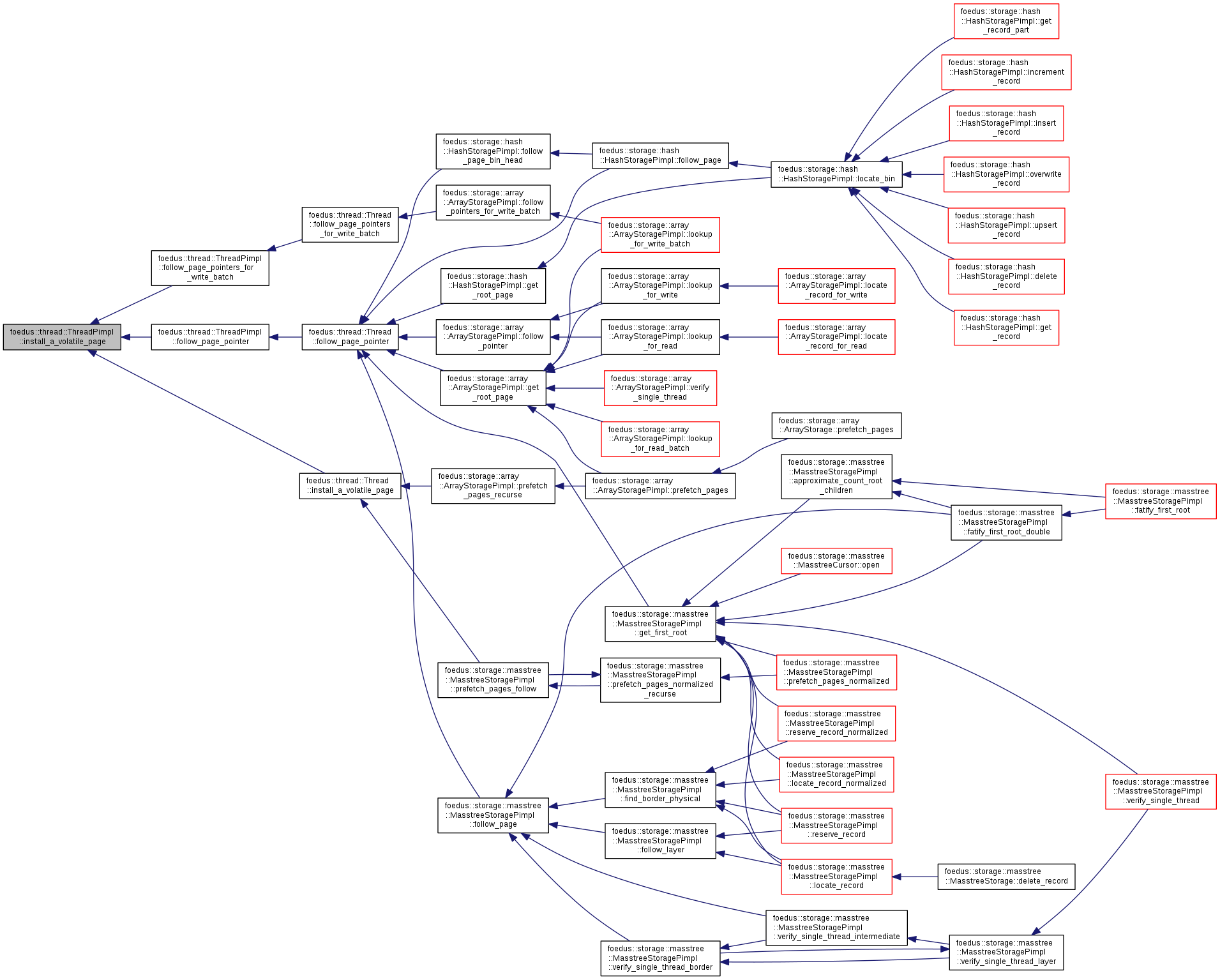

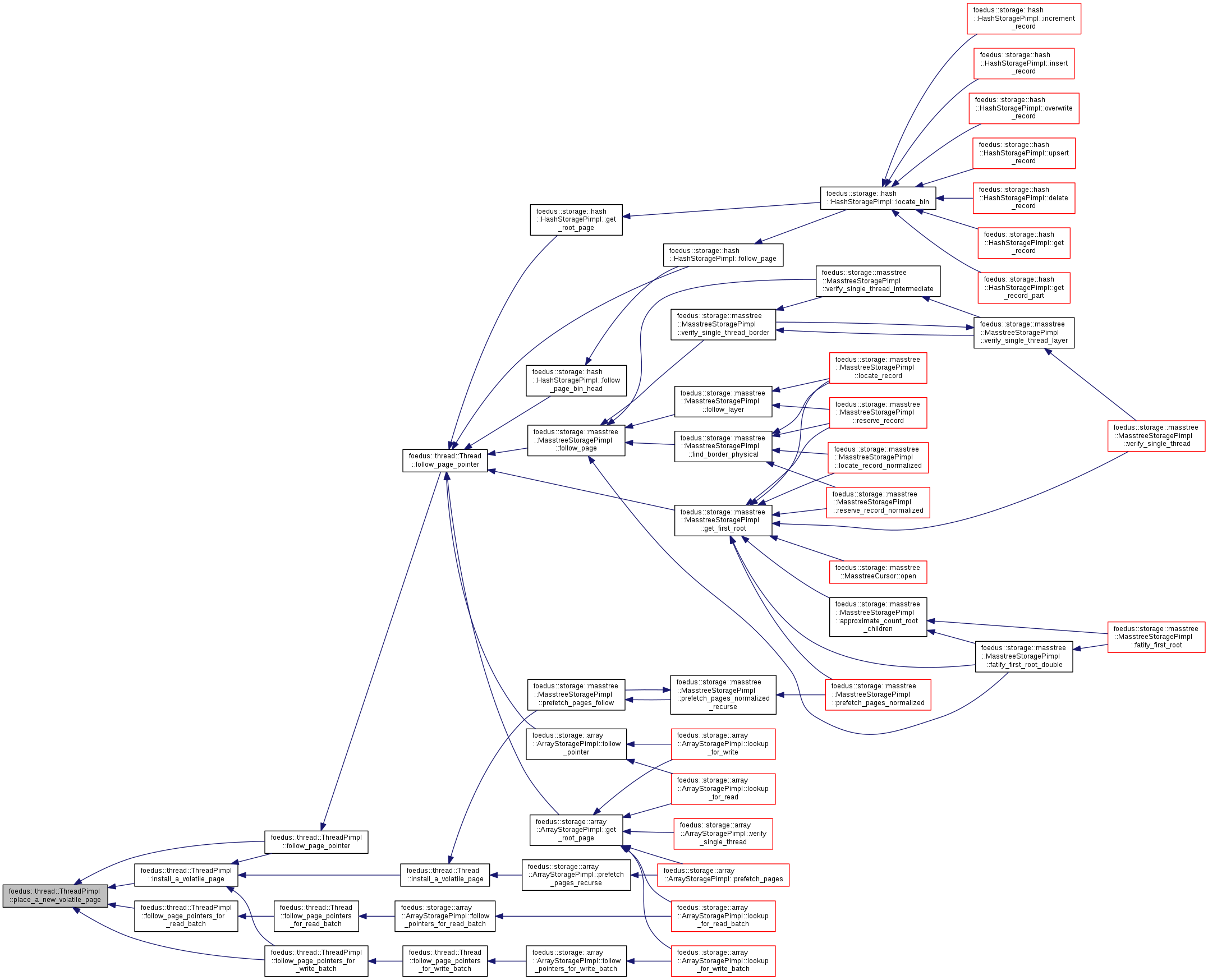

| ErrorCode | follow_page_pointer (storage::VolatilePageInit page_initializer, bool tolerate_null_pointer, bool will_modify, bool take_ptr_set_snapshot, storage::DualPagePointer *pointer, storage::Page **page, const storage::Page *parent, uint16_t index_in_parent) |

| A general method to follow (read) a page pointer. More... | |

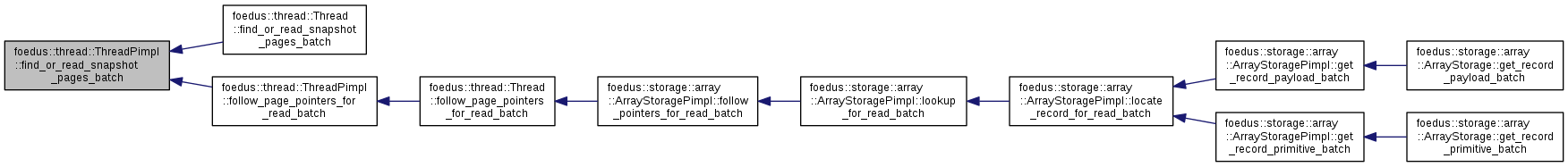

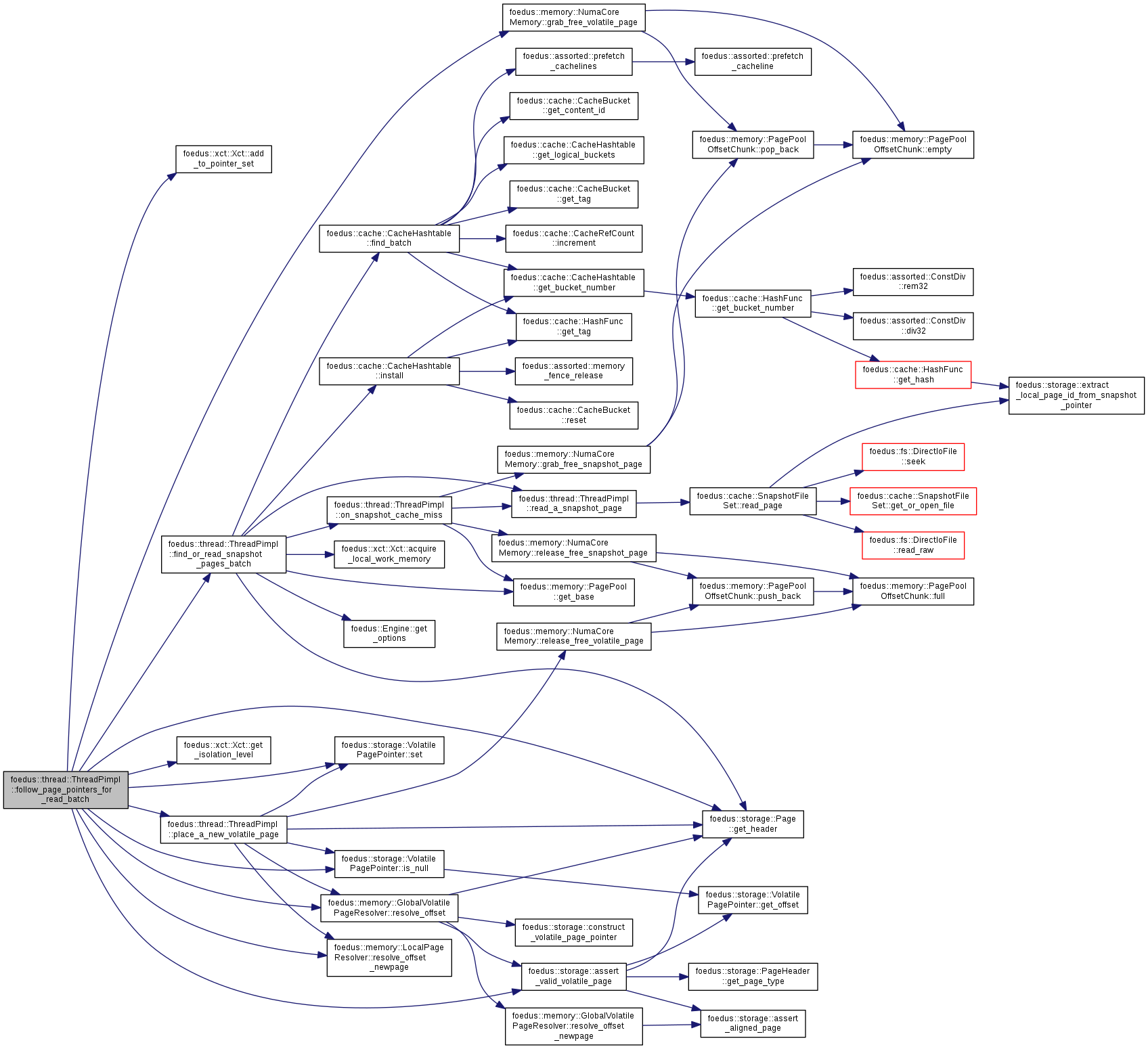

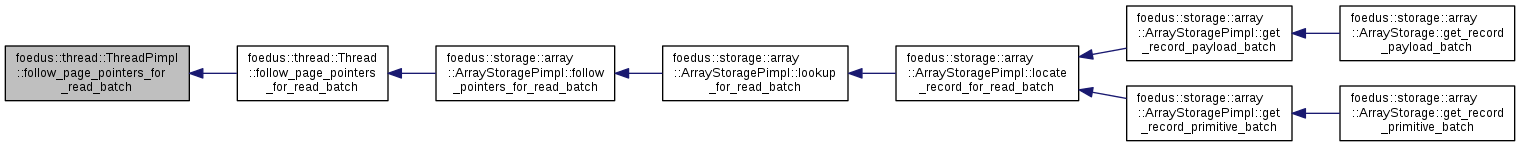

| ErrorCode | follow_page_pointers_for_read_batch (uint16_t batch_size, storage::VolatilePageInit page_initializer, bool tolerate_null_pointer, bool take_ptr_set_snapshot, storage::DualPagePointer **pointers, storage::Page **parents, const uint16_t *index_in_parents, bool *followed_snapshots, storage::Page **out) |

| Batched version of follow_page_pointer with will_modify==false. More... | |

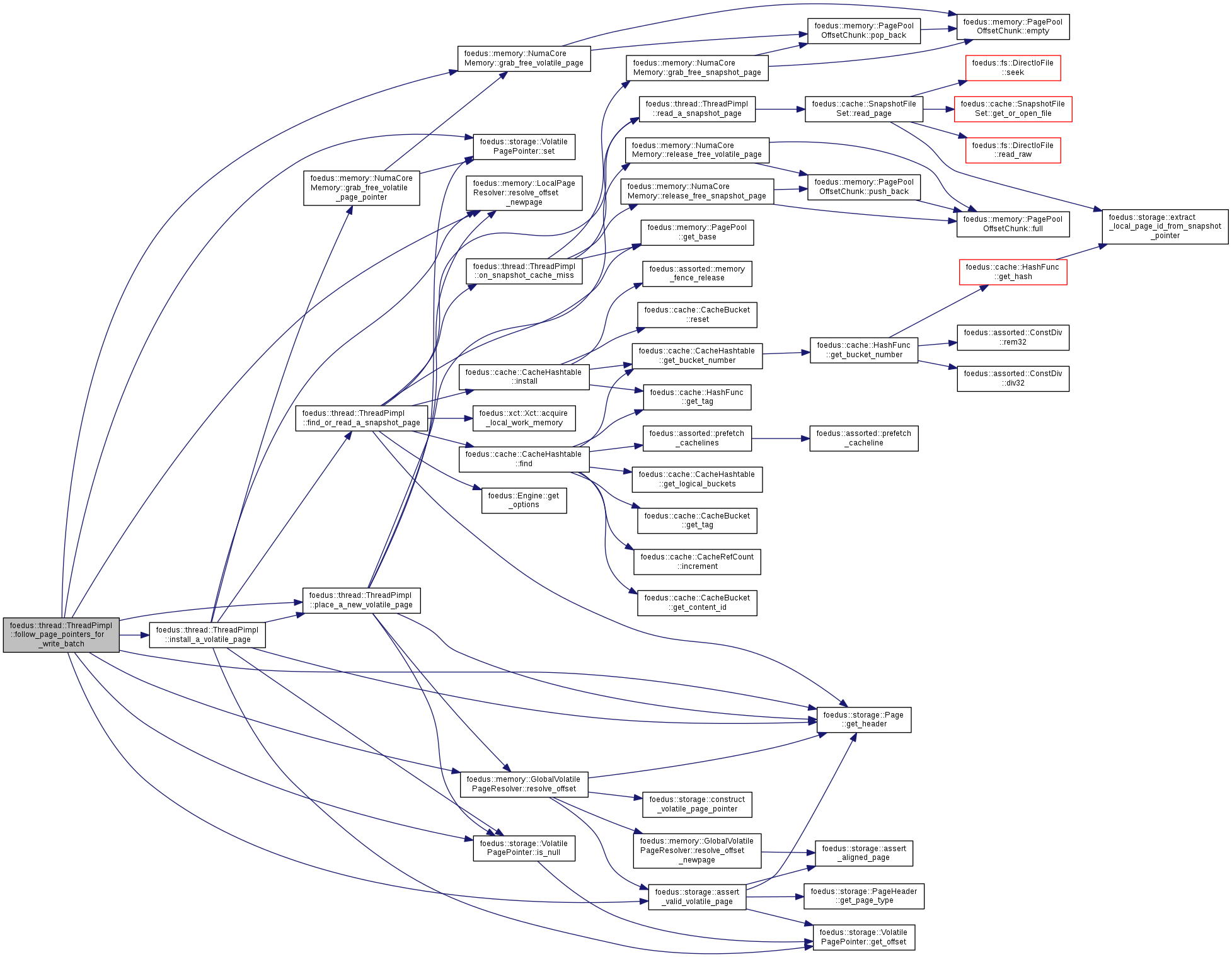

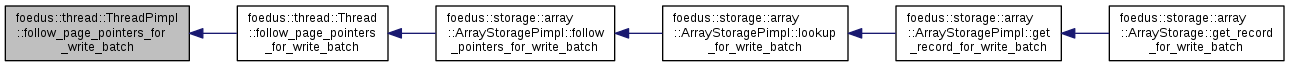

| ErrorCode | follow_page_pointers_for_write_batch (uint16_t batch_size, storage::VolatilePageInit page_initializer, storage::DualPagePointer **pointers, storage::Page **parents, const uint16_t *index_in_parents, storage::Page **out) |

| Batched version of follow_page_pointer with will_modify==true and tolerate_null_pointer==true. More... | |

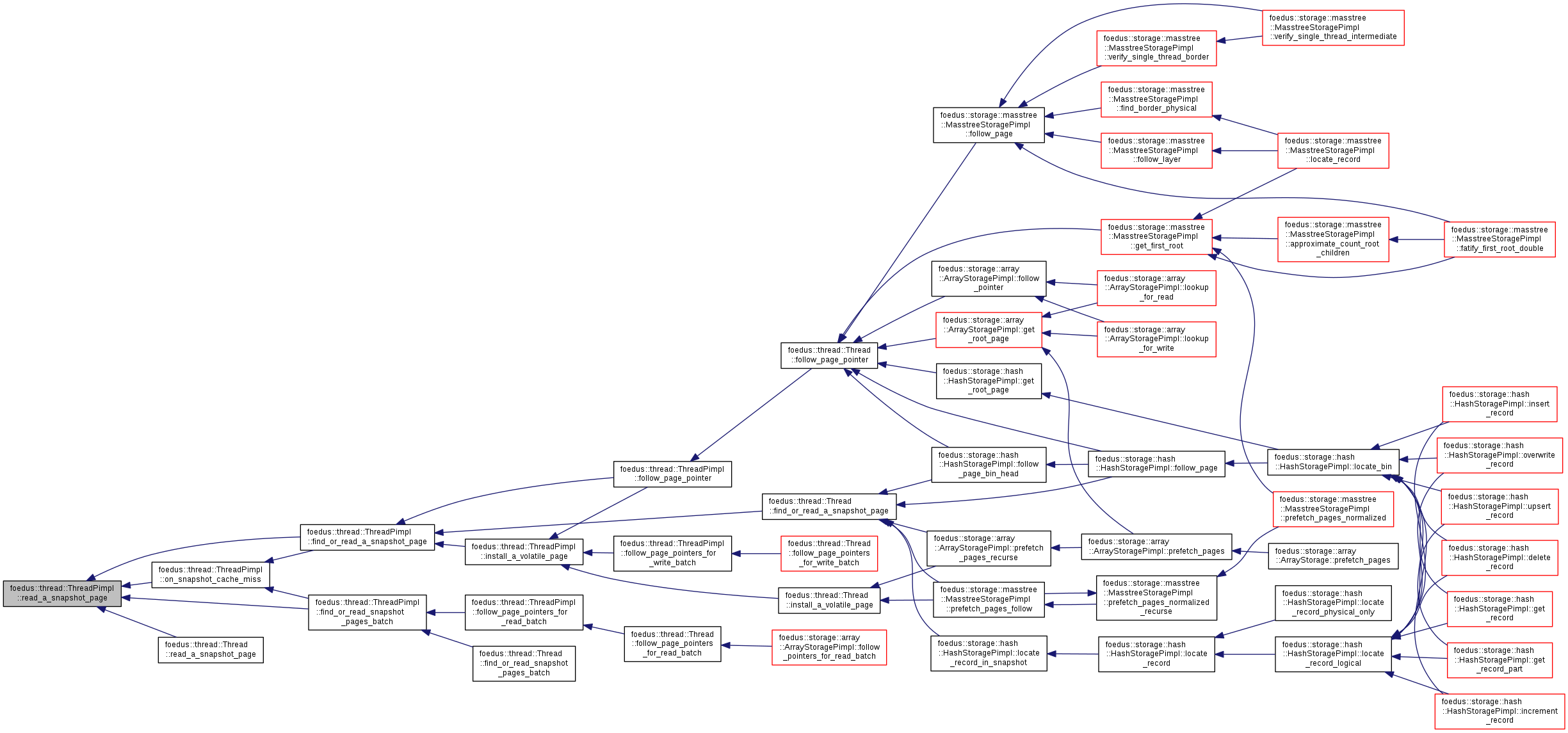

| ErrorCode | on_snapshot_cache_miss (storage::SnapshotPagePointer page_id, memory::PagePoolOffset *pool_offset) |

| storage::Page * | place_a_new_volatile_page (memory::PagePoolOffset new_offset, storage::DualPagePointer *pointer) |

| Subroutine of install_a_volatile_page() and follow_page_pointer() to atomically place the given new volatile page created by this thread. More... | |

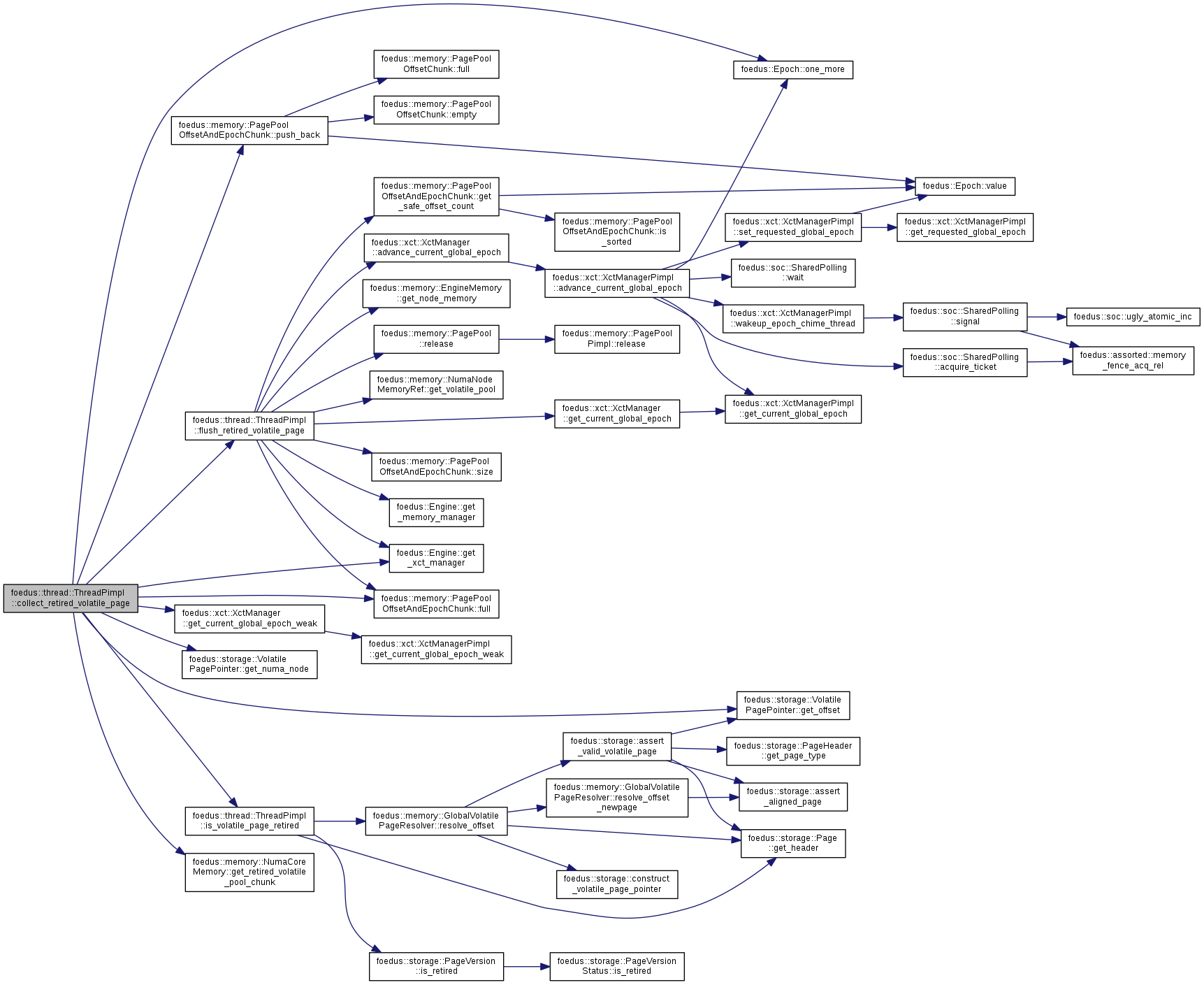

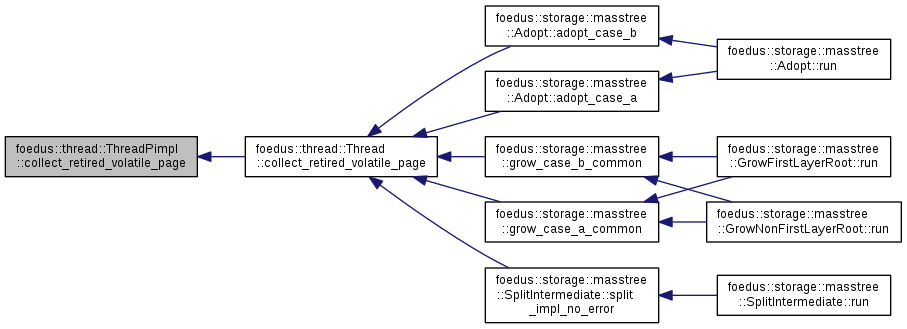

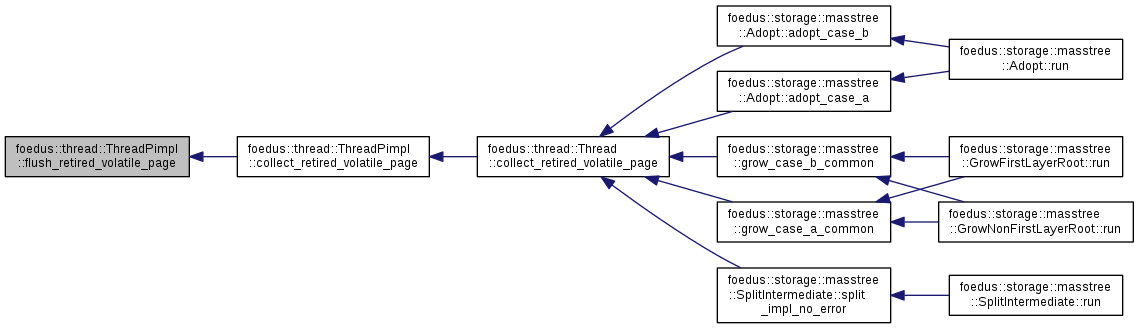

| void | collect_retired_volatile_page (storage::VolatilePagePointer ptr) |

| Keeps the specified volatile page as retired as of the current epoch. More... | |

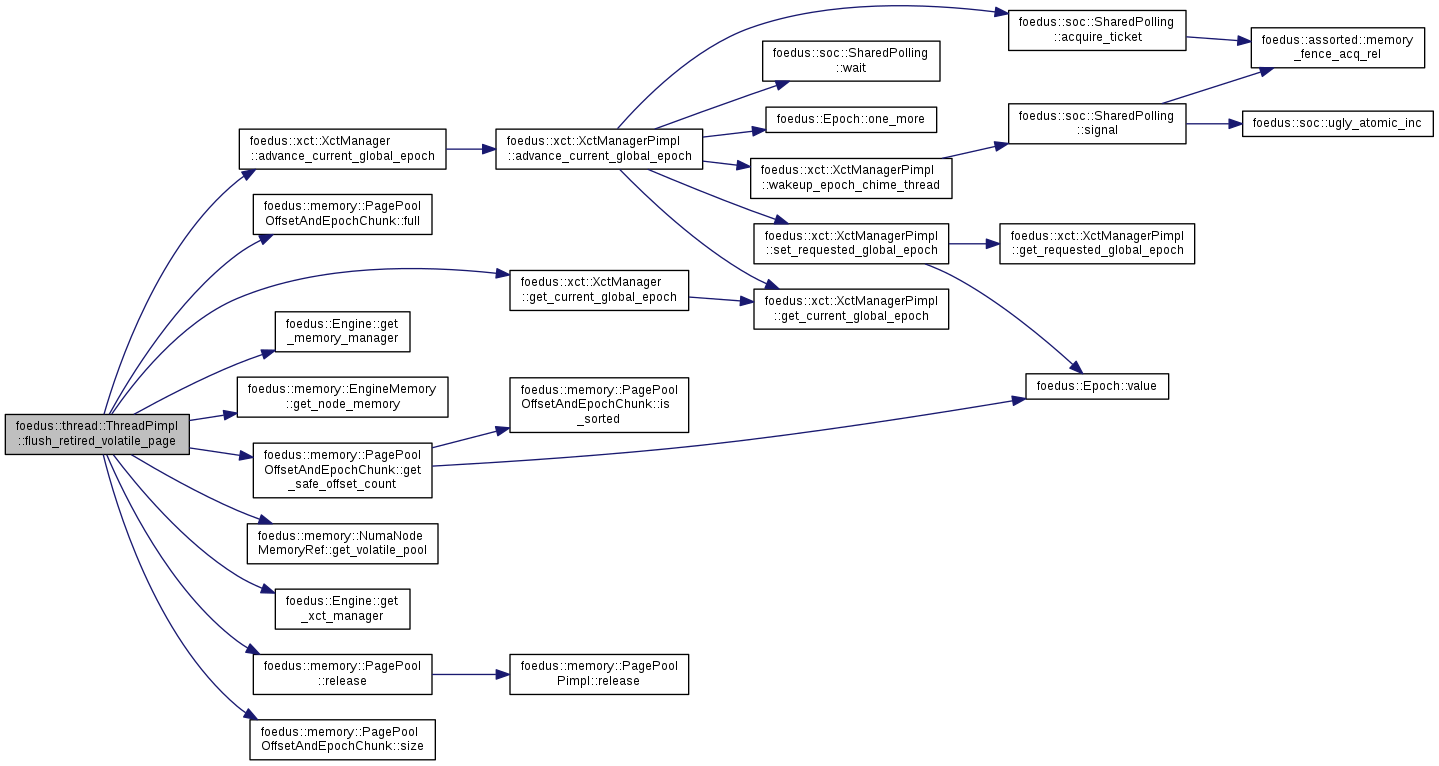

| void | flush_retired_volatile_page (uint16_t node, Epoch current_epoch, memory::PagePoolOffsetAndEpochChunk *chunk) |

| Subroutine of collect_retired_volatile_page() in case the chunk becomes full. More... | |

| bool | is_volatile_page_retired (storage::VolatilePagePointer ptr) |

| Subroutine of collect_retired_volatile_page() just for assertion. More... | |

| ThreadRef | get_thread_ref (ThreadId id) |

| template<typename FUNC > | |

| void | switch_mcs_impl (FUNC func) |

| MCS locks methods. More... | |

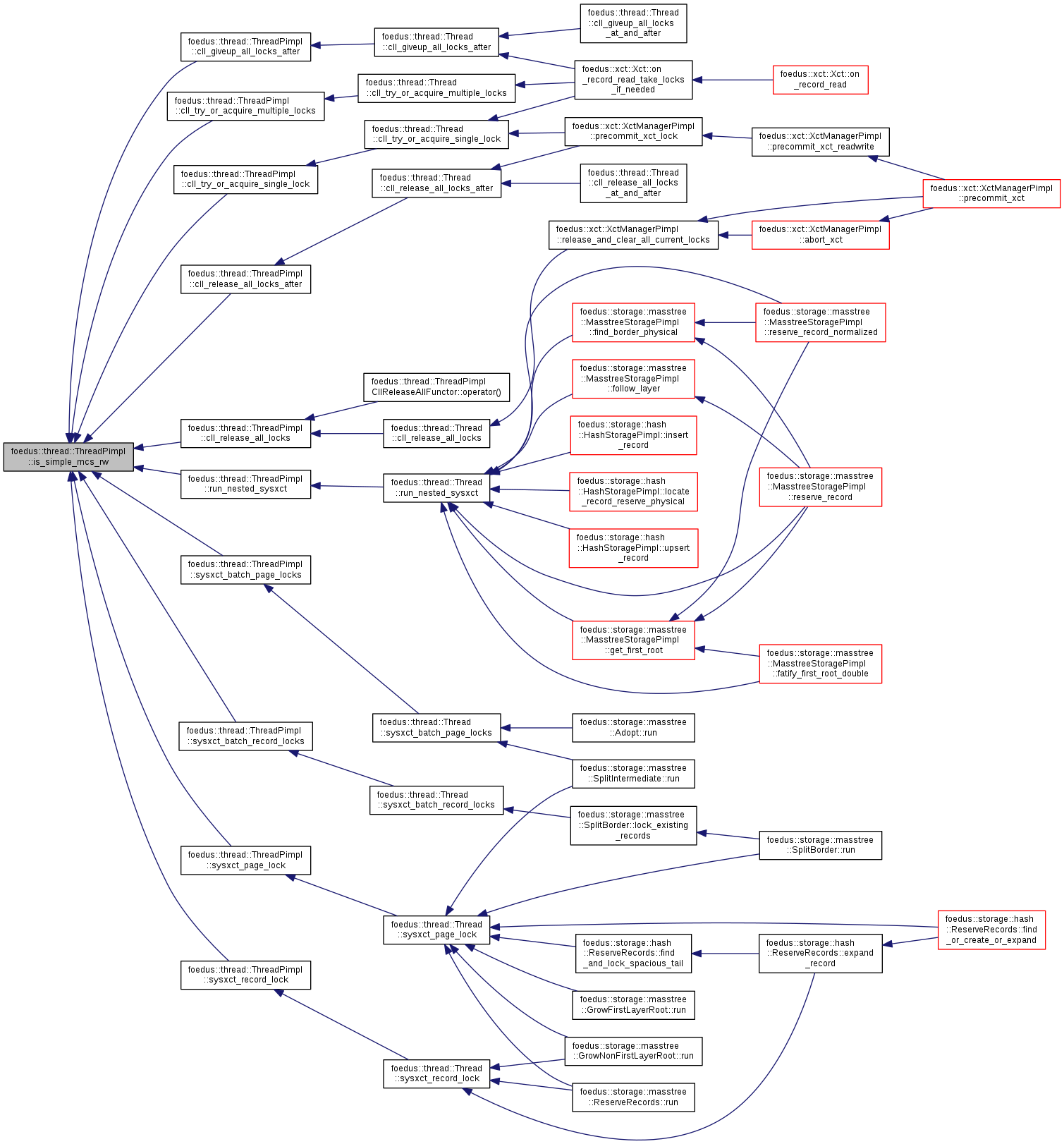

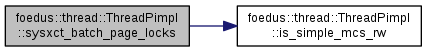

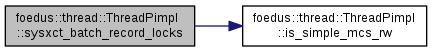

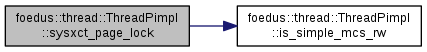

| bool | is_simple_mcs_rw () const |

| void | cll_release_all_locks_after (xct::UniversalLockId address) |

| RW-locks. More... | |

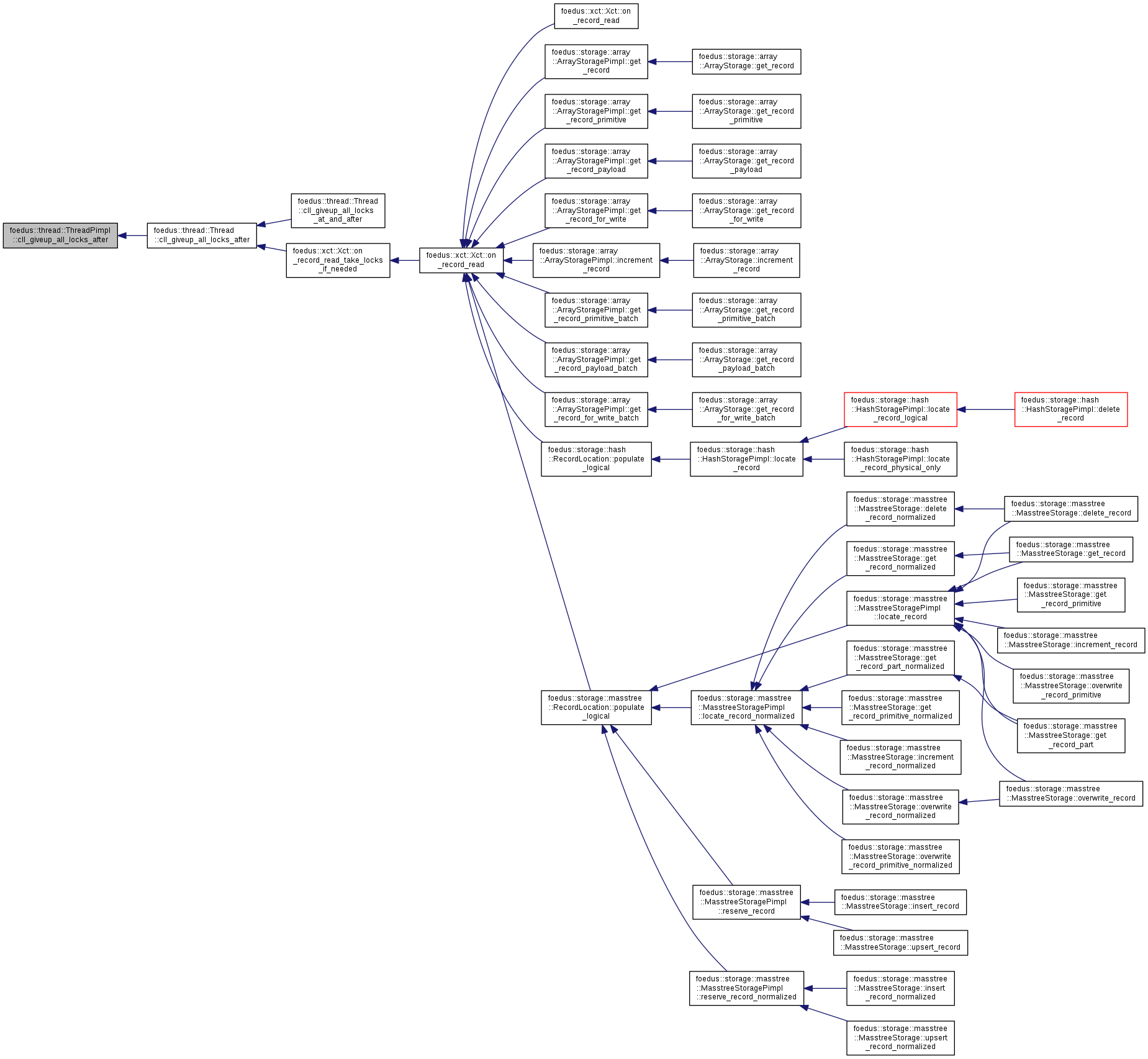

| void | cll_giveup_all_locks_after (xct::UniversalLockId address) |

| ErrorCode | cll_try_or_acquire_single_lock (xct::LockListPosition pos) |

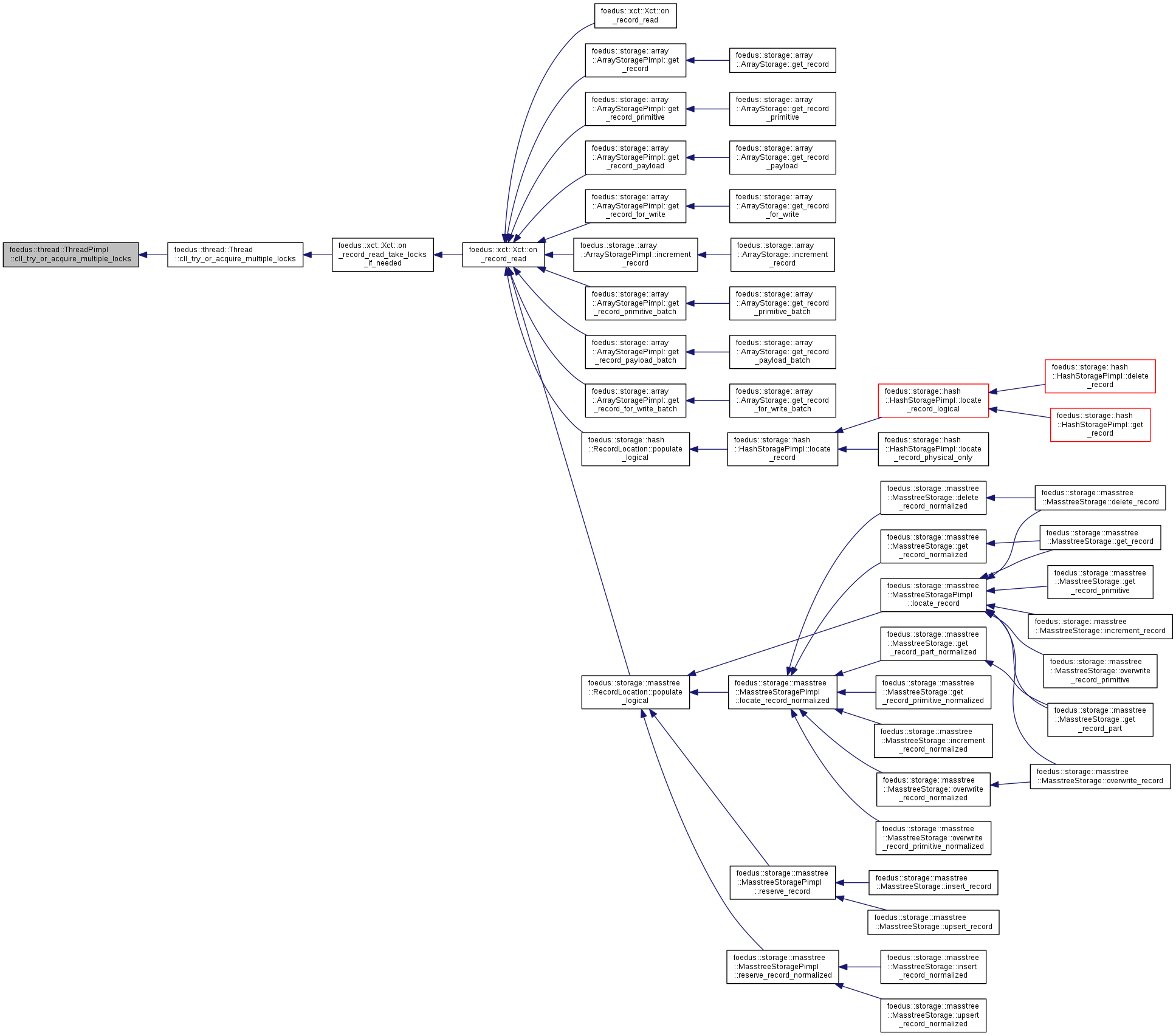

| ErrorCode | cll_try_or_acquire_multiple_locks (xct::LockListPosition upto_pos) |

| void | cll_release_all_locks () |

| xct::UniversalLockId | cll_get_max_locked_id () const |

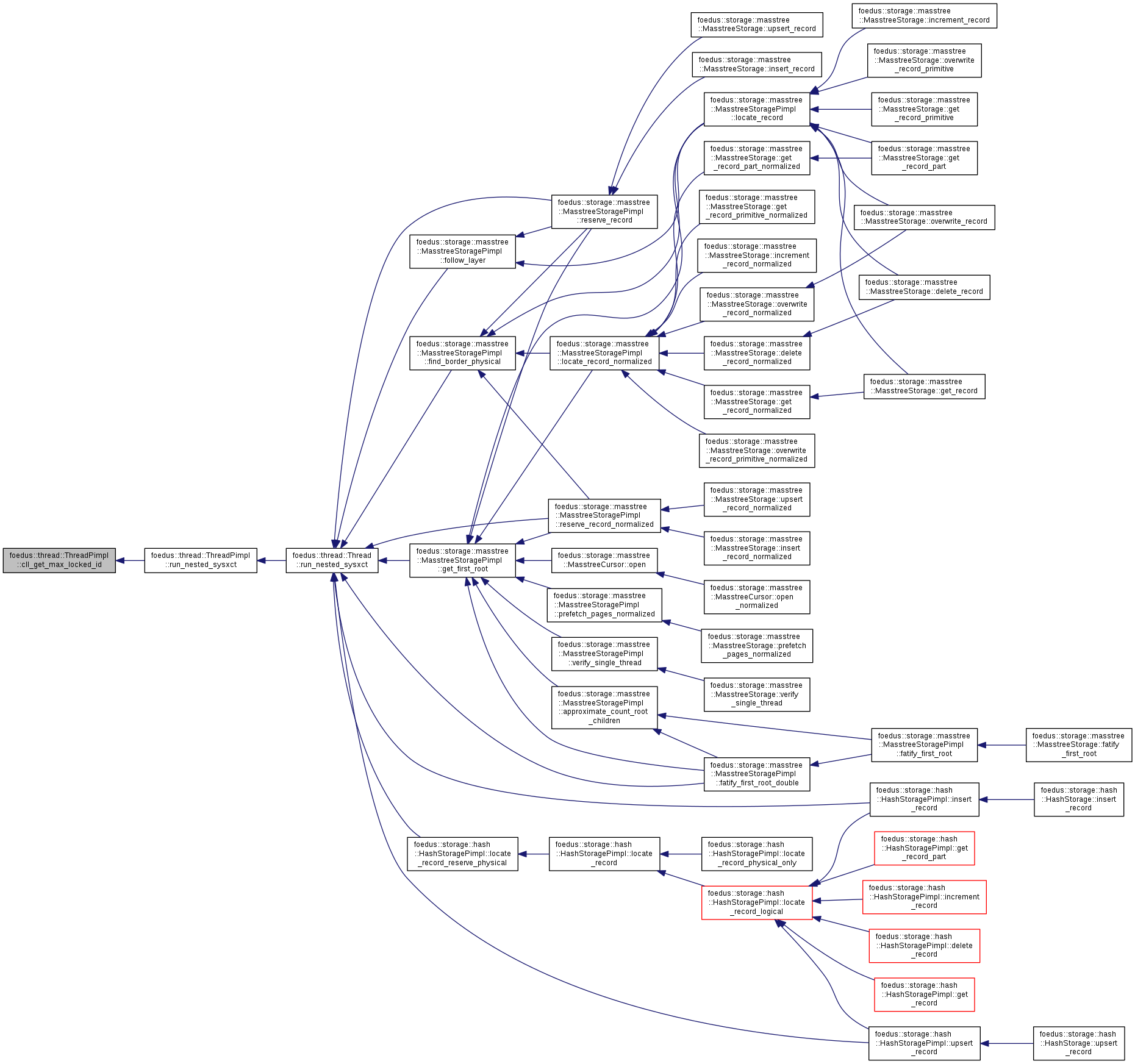

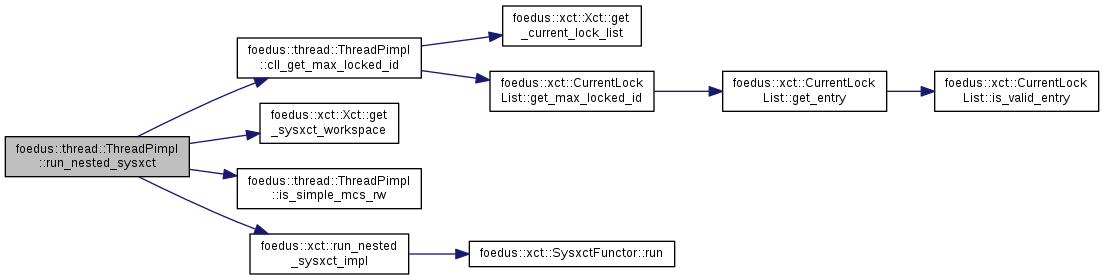

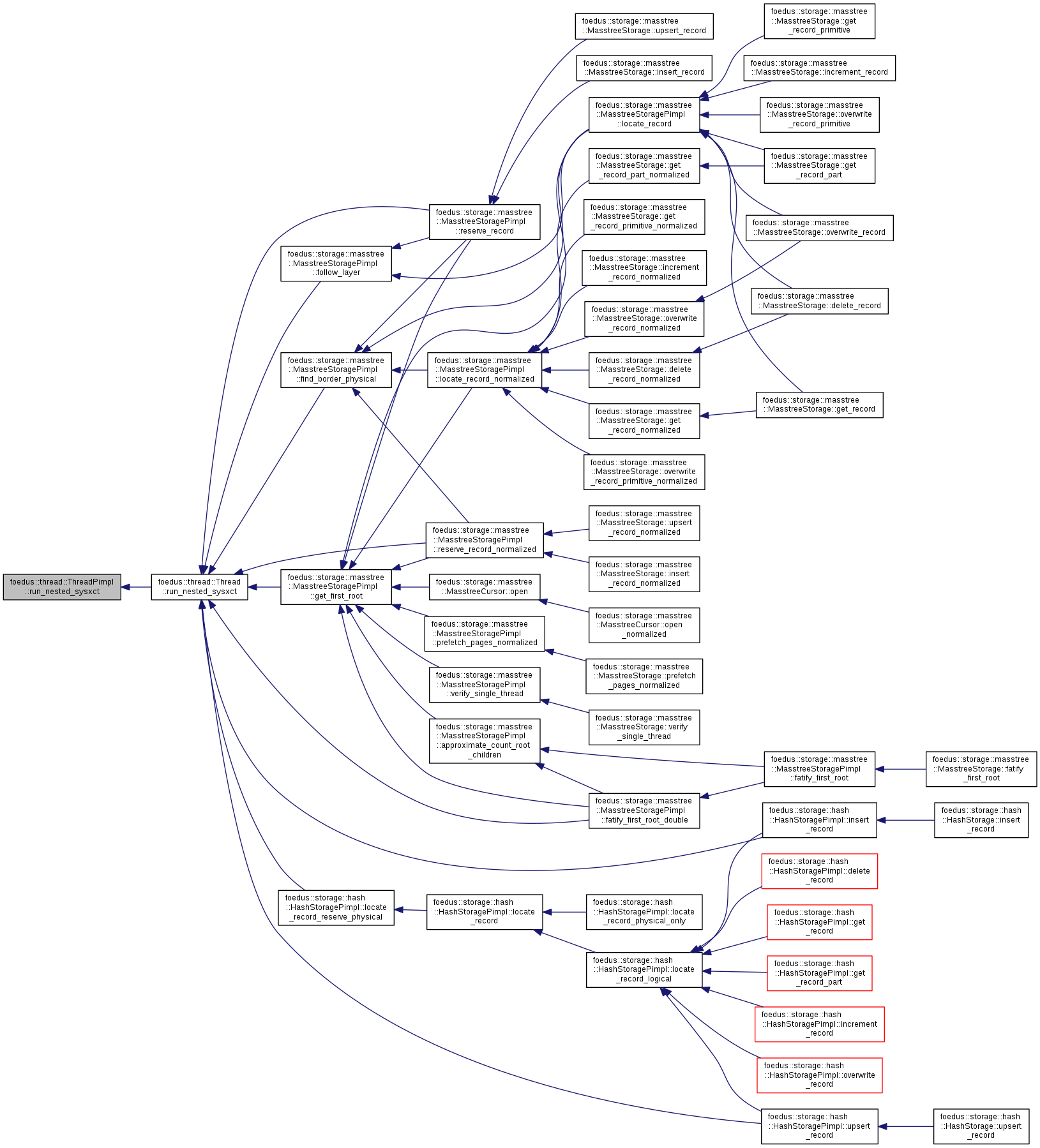

| ErrorCode | run_nested_sysxct (xct::SysxctFunctor *functor, uint32_t max_retries) |

| Sysxct-related. More... | |

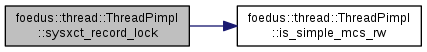

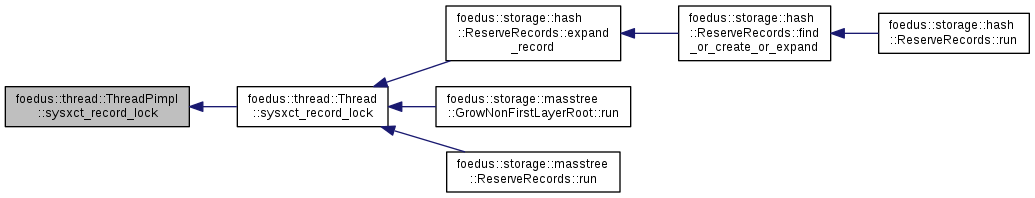

| ErrorCode | sysxct_record_lock (xct::SysxctWorkspace *sysxct_workspace, storage::VolatilePagePointer page_id, xct::RwLockableXctId *lock) |

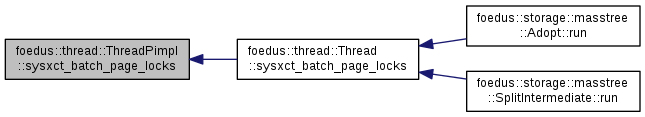

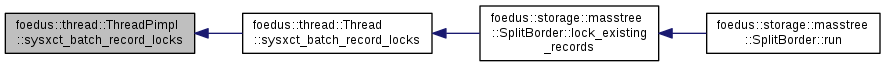

| ErrorCode | sysxct_batch_record_locks (xct::SysxctWorkspace *sysxct_workspace, storage::VolatilePagePointer page_id, uint32_t lock_count, xct::RwLockableXctId **locks) |

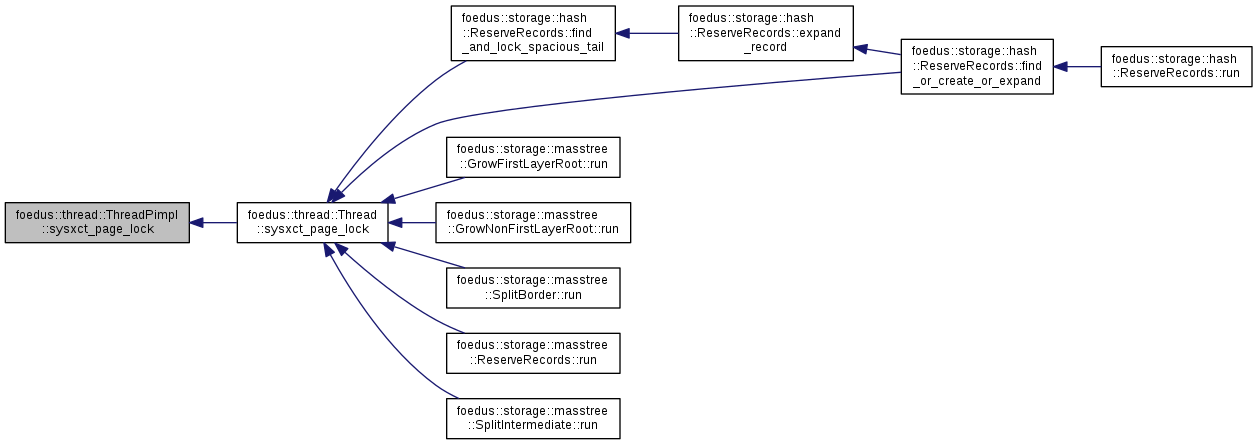

| ErrorCode | sysxct_page_lock (xct::SysxctWorkspace *sysxct_workspace, storage::Page *page) |

| ErrorCode | sysxct_batch_page_locks (xct::SysxctWorkspace *sysxct_workspace, uint32_t lock_count, storage::Page **pages) |

| void | get_mcs_rw_my_blocks (xct::McsRwSimpleBlock **out) |

| void | get_mcs_rw_my_blocks (xct::McsRwExtendedBlock **out) |

Public Member Functions inherited from foedus::DefaultInitializable | |

| DefaultInitializable () | |

| virtual | ~DefaultInitializable () |

| DefaultInitializable (const DefaultInitializable &)=delete | |

| DefaultInitializable & | operator= (const DefaultInitializable &)=delete |

| ErrorStack | initialize () override final |

| Typical implementation of Initializable::initialize() that provides initialize-once semantics. More... | |

| ErrorStack | uninitialize () override final |

| Typical implementation of Initializable::uninitialize() that provides uninitialize-once semantics. More... | |

| bool | is_initialized () const override final |

| Returns whether the object has been already initialized or not. More... | |

Public Member Functions inherited from foedus::Initializable | |

| virtual | ~Initializable () |

Public Attributes | |

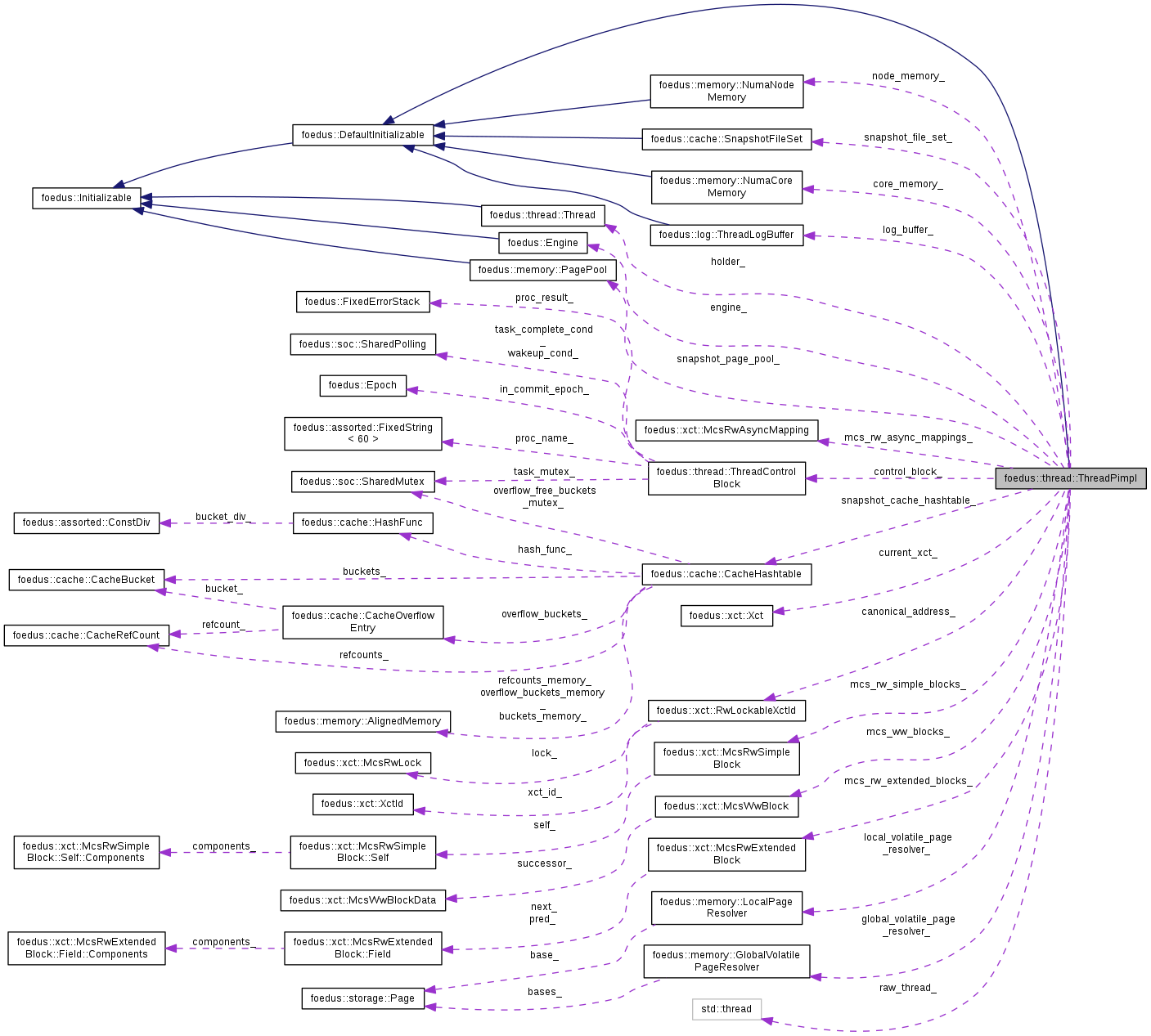

| Engine *const | engine_ |

| MCS locks methods. More... | |

| Thread *const | holder_ |

| The public object that holds this pimpl object. More... | |

| const ThreadId | id_ |

| Unique ID of this thread. More... | |

| const ThreadGroupId | numa_node_ |

| Node this thread belongs to. More... | |

| const ThreadGlobalOrdinal | global_ordinal_ |

| globally and contiguously numbered ID of thread More... | |

| bool | simple_mcs_rw_ |

| shortcut for engine_->get_options().xct_.mcs_implementation_type_ == simple More... | |

| memory::NumaCoreMemory * | core_memory_ |

| Private memory repository of this thread. More... | |

| memory::NumaNodeMemory * | node_memory_ |

| same above More... | |

| cache::CacheHashtable * | snapshot_cache_hashtable_ |

| same above More... | |

| memory::PagePool * | snapshot_page_pool_ |

| shorthand for node_memory_->get_snapshot_pool() More... | |

| memory::GlobalVolatilePageResolver | global_volatile_page_resolver_ |

| Page resolver to convert all page ID to page pointer. More... | |

| memory::LocalPageResolver | local_volatile_page_resolver_ |

| Page resolver to convert only local page ID to page pointer. More... | |

| log::ThreadLogBuffer | log_buffer_ |

| Thread-private log buffer. More... | |

| std::thread | raw_thread_ |

| Encapsulates raw thread object. More... | |

| std::atomic< bool > | raw_thread_set_ |

| Just to make sure raw_thread_ is set. More... | |

| xct::Xct | current_xct_ |

| Current transaction this thread is conveying. More... | |

| cache::SnapshotFileSet | snapshot_file_set_ |

| Each thread maintains a private set of snapshot file descriptors. More... | |

| ThreadControlBlock * | control_block_ |

| void * | task_input_memory_ |

| void * | task_output_memory_ |

| xct::McsWwBlock * | mcs_ww_blocks_ |

| Pre-allocated MCS blocks. More... | |

| xct::McsRwSimpleBlock * | mcs_rw_simple_blocks_ |

| xct::McsRwExtendedBlock * | mcs_rw_extended_blocks_ |

| xct::McsRwAsyncMapping * | mcs_rw_async_mappings_ |

| xct::RwLockableXctId * | canonical_address_ |

Friends | |

| template<typename RW_BLOCK > | |

| class | ThreadPimplMcsAdaptor |

| foedus::thread::ThreadPimpl::ThreadPimpl | ( | ) | delete |

| foedus::thread::ThreadPimpl::ThreadPimpl | ( | Engine * | engine, |

| Thread * | holder, | ||

| ThreadId | id, | ||

| ThreadGlobalOrdinal | global_ordinal | ||

| ) |

Definition at line 55 of file thread_pimpl.cpp.

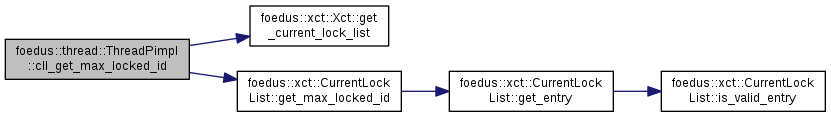

| xct::UniversalLockId foedus::thread::ThreadPimpl::cll_get_max_locked_id | ( | ) | const |

Definition at line 930 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), and foedus::xct::CurrentLockList::get_max_locked_id().

Referenced by run_nested_sysxct().

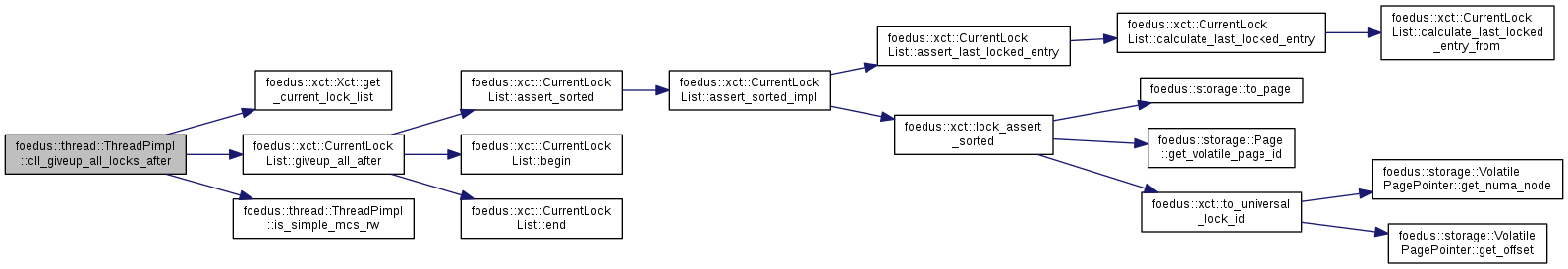

| void foedus::thread::ThreadPimpl::cll_giveup_all_locks_after | ( | xct::UniversalLockId | address | ) |

Definition at line 887 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), foedus::xct::CurrentLockList::giveup_all_after(), and is_simple_mcs_rw().

Referenced by foedus::thread::Thread::cll_giveup_all_locks_after().

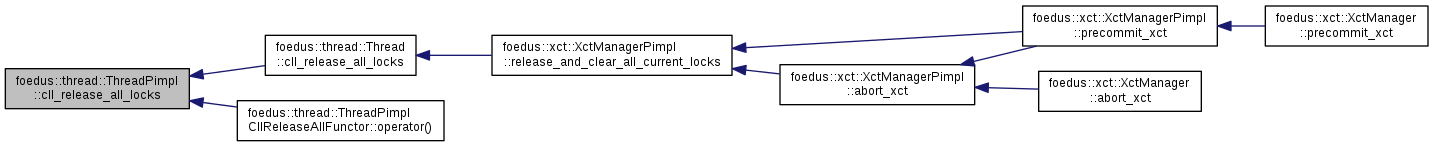

| void foedus::thread::ThreadPimpl::cll_release_all_locks | ( | ) |

Definition at line 919 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), is_simple_mcs_rw(), and foedus::xct::CurrentLockList::release_all_locks().

Referenced by foedus::thread::Thread::cll_release_all_locks(), and foedus::thread::ThreadPimplCllReleaseAllFunctor::operator()().

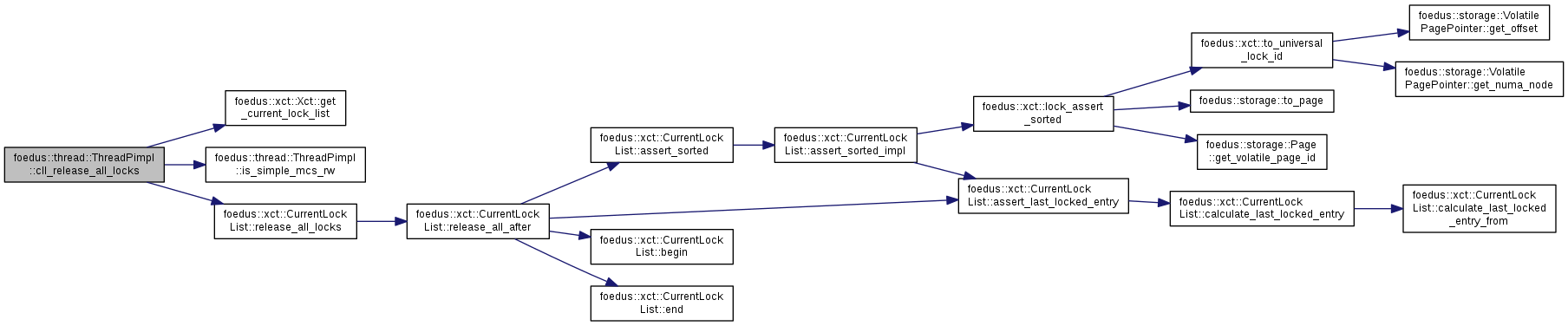

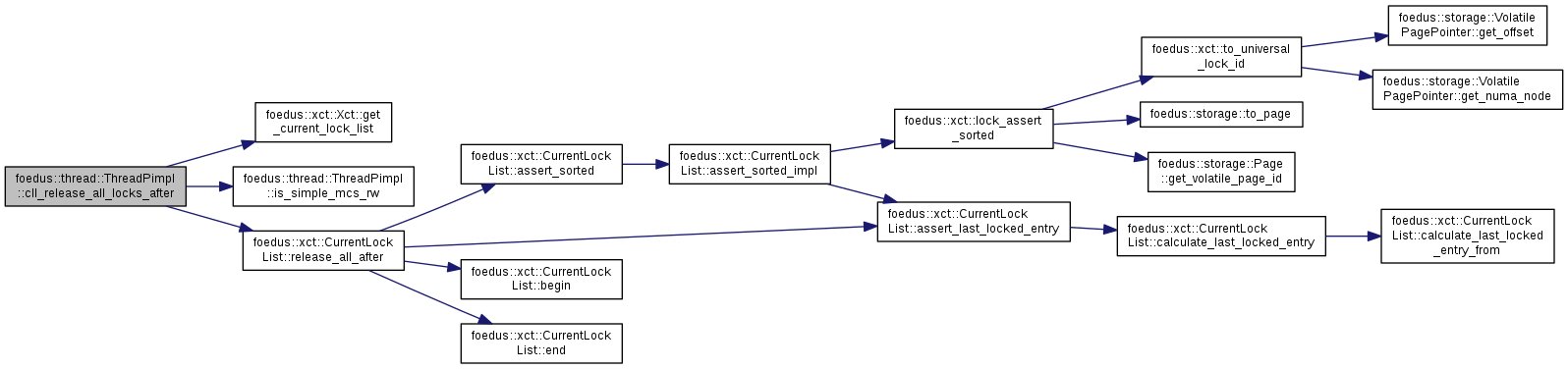

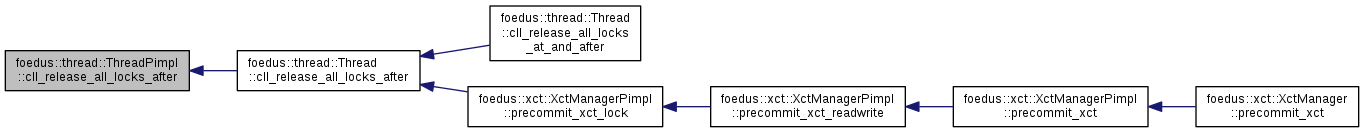

| void foedus::thread::ThreadPimpl::cll_release_all_locks_after | ( | xct::UniversalLockId | address | ) |

RW-locks.

These methods switch between implementations. We could shorten them if we assumed C++14 (lambda with auto), but not by much, so it doesn't matter.

Definition at line 876 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), is_simple_mcs_rw(), and foedus::xct::CurrentLockList::release_all_after().

Referenced by foedus::thread::Thread::cll_release_all_locks_after().

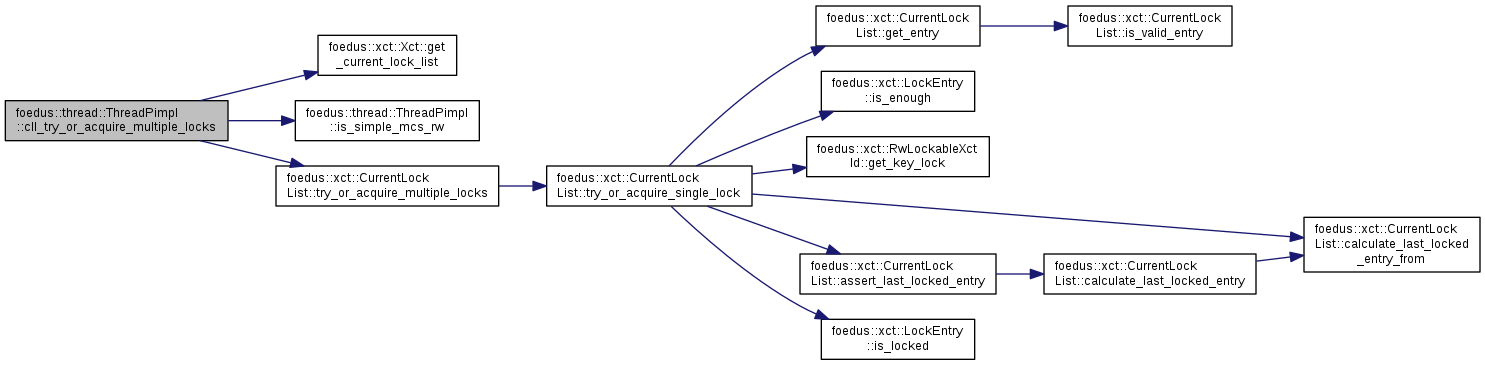

| ErrorCode foedus::thread::ThreadPimpl::cll_try_or_acquire_multiple_locks | ( | xct::LockListPosition | upto_pos | ) |

Definition at line 909 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), is_simple_mcs_rw(), and foedus::xct::CurrentLockList::try_or_acquire_multiple_locks().

Referenced by foedus::thread::Thread::cll_try_or_acquire_multiple_locks().

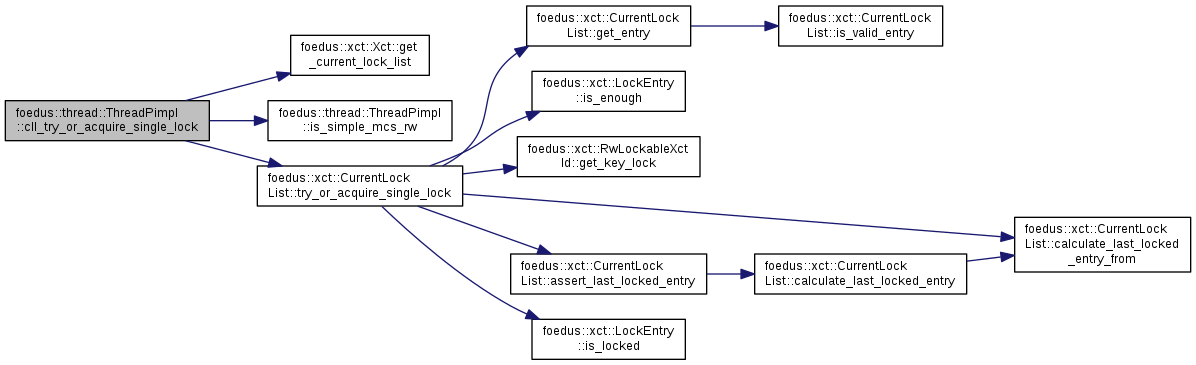

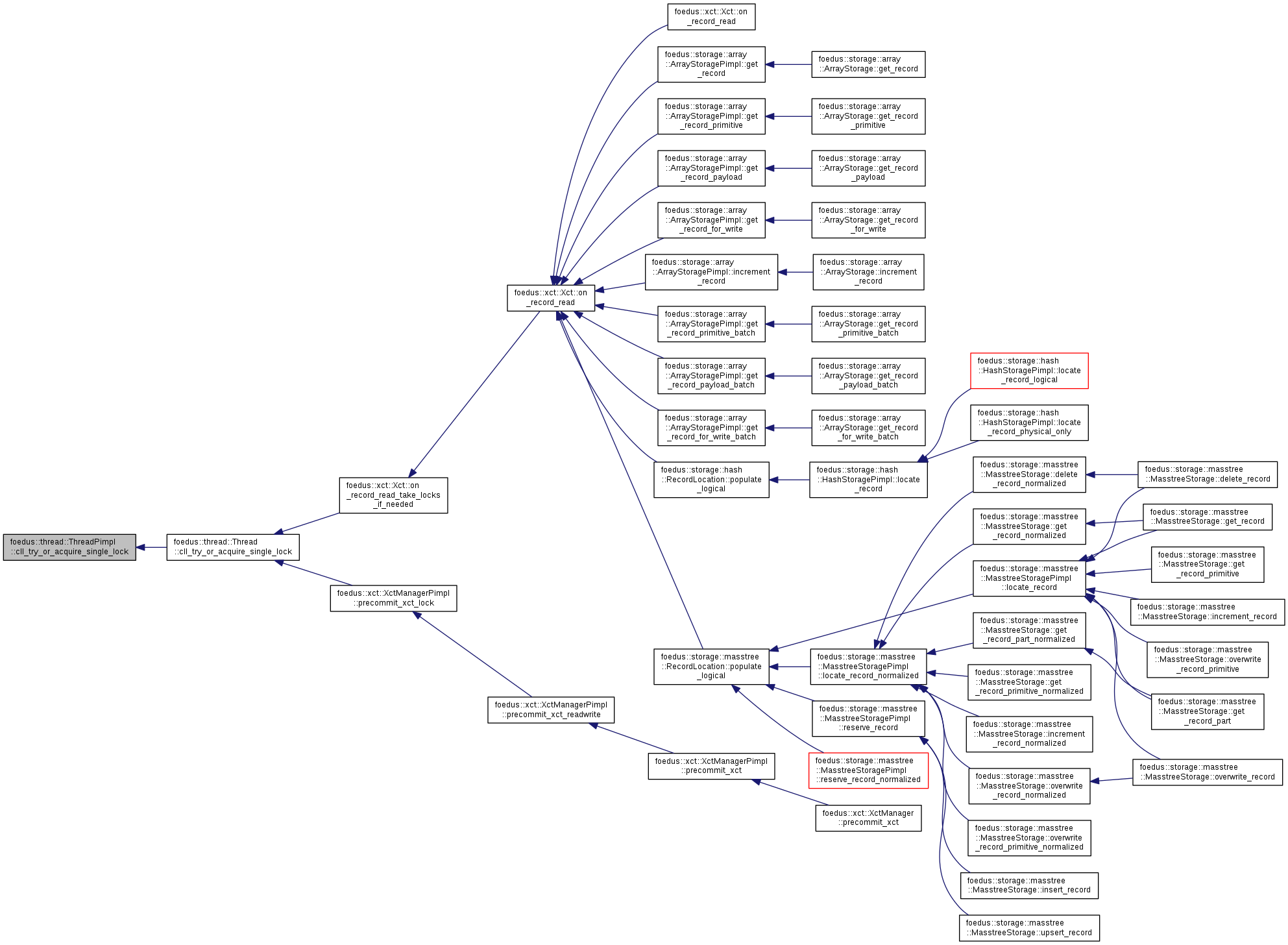

| ErrorCode foedus::thread::ThreadPimpl::cll_try_or_acquire_single_lock | ( | xct::LockListPosition | pos | ) |

Definition at line 898 of file thread_pimpl.cpp.

References current_xct_, foedus::xct::Xct::get_current_lock_list(), is_simple_mcs_rw(), and foedus::xct::CurrentLockList::try_or_acquire_single_lock().

Referenced by foedus::thread::Thread::cll_try_or_acquire_single_lock().

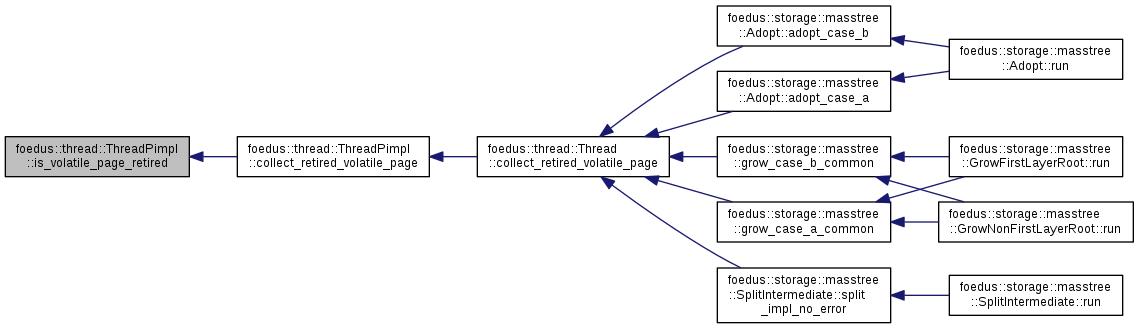

| void foedus::thread::ThreadPimpl::collect_retired_volatile_page | ( | storage::VolatilePagePointer | ptr | ) |

Keeps the specified volatile page as retired as of the current epoch.

Retired page handling methods.

| [in] | ptr | the volatile page that has been retired |

This thread buffers such pages and returns to volatile page pool when it is safe to do so.

Definition at line 777 of file thread_pimpl.cpp.

References ASSERT_ND, core_memory_, engine_, flush_retired_volatile_page(), foedus::memory::PagePoolOffsetAndEpochChunk::full(), foedus::xct::XctManager::get_current_global_epoch_weak(), foedus::storage::VolatilePagePointer::get_numa_node(), foedus::storage::VolatilePagePointer::get_offset(), foedus::memory::NumaCoreMemory::get_retired_volatile_pool_chunk(), foedus::Engine::get_xct_manager(), is_volatile_page_retired(), foedus::Epoch::one_more(), and foedus::memory::PagePoolOffsetAndEpochChunk::push_back().

Referenced by foedus::thread::Thread::collect_retired_volatile_page().

| ErrorCode foedus::thread::ThreadPimpl::find_or_read_a_snapshot_page | ( | storage::SnapshotPagePointer | page_id, |

| storage::Page ** | out | ||

| ) |

Find the given page in snapshot cache, reading it if not found.
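The find-or-read pattern named above can be sketched as follows. This is a simplified illustration under stated assumptions, not the FOEDUS implementation: `SnapshotCacheSketch`, `PageSketch`, and `read_from_snapshot_file()` are hypothetical, the hashtable is a plain `std::unordered_map`, and the "file read" is faked in memory. The hit and miss counters loosely mirror the `stat_snapshot_cache_hits_` and `stat_snapshot_cache_misses_` fields referenced below.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <unordered_map>

typedef uint64_t SnapshotPageId;  // hypothetical stand-in for a snapshot page pointer

struct PageSketch {
  SnapshotPageId page_id;  // a real page would also carry its contents
};

class SnapshotCacheSketch {
 public:
  SnapshotCacheSketch() : hits_(0), misses_(0) {}

  // Returns the cached page, reading and installing it first on a miss.
  const PageSketch* find_or_read(SnapshotPageId page_id) {
    auto it = table_.find(page_id);
    if (it != table_.end()) {
      ++hits_;
      return it->second;
    }
    ++misses_;
    // std::deque keeps existing element addresses stable on push_back,
    // so pointers handed out earlier remain valid.
    pool_.push_back(read_from_snapshot_file(page_id));
    const PageSketch* page = &pool_.back();
    table_[page_id] = page;
    return page;
  }

  uint64_t hits() const { return hits_; }
  uint64_t misses() const { return misses_; }

 private:
  // Assumption: stands in for an actual read through a file descriptor set.
  static PageSketch read_from_snapshot_file(SnapshotPageId page_id) {
    PageSketch page = {page_id};
    return page;
  }

  std::deque<PageSketch> pool_;                                  // page storage
  std::unordered_map<SnapshotPageId, const PageSketch*> table_;  // id -> page
  uint64_t hits_;
  uint64_t misses_;
};
```

Looking up the same ID twice should count one miss followed by one hit and return the same page address both times.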

Definition at line 647 of file thread_pimpl.cpp.

References foedus::xct::Xct::acquire_local_work_memory(), ASSERT_ND, foedus::EngineOptions::cache_, CHECK_ERROR_CODE, control_block_, current_xct_, engine_, foedus::cache::CacheHashtable::find(), foedus::memory::PagePool::get_base(), foedus::storage::Page::get_header(), foedus::Engine::get_options(), foedus::cache::CacheHashtable::install(), foedus::kErrorCodeOk, foedus::storage::kPageSize, on_snapshot_cache_miss(), foedus::storage::PageHeader::page_id_, read_a_snapshot_page(), foedus::cache::CacheOptions::snapshot_cache_enabled_, snapshot_cache_hashtable_, snapshot_page_pool_, foedus::thread::ThreadControlBlock::stat_snapshot_cache_hits_, and foedus::thread::ThreadControlBlock::stat_snapshot_cache_misses_.

Referenced by foedus::thread::Thread::find_or_read_a_snapshot_page(), follow_page_pointer(), and install_a_volatile_page().

| ErrorCode foedus::thread::ThreadPimpl::find_or_read_snapshot_pages_batch | ( | uint16_t | batch_size, |

| const storage::SnapshotPagePointer * | page_ids, | ||

| storage::Page ** | out | ||

| ) |

Batched version of find_or_read_a_snapshot_page().

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_ids | Array of Page IDs to look for, size=batch_size |

| [out] | out | Output |

This might perform much faster because of parallel prefetching, SIMD-ized hash calculation (planned, not implemented yet), etc.

Definition at line 687 of file thread_pimpl.cpp.

References foedus::xct::Xct::acquire_local_work_memory(), ASSERT_ND, foedus::EngineOptions::cache_, CHECK_ERROR_CODE, control_block_, current_xct_, engine_, foedus::cache::CacheHashtable::find_batch(), foedus::memory::PagePool::get_base(), foedus::storage::Page::get_header(), foedus::Engine::get_options(), foedus::cache::CacheHashtable::install(), foedus::kErrorCodeInvalidParameter, foedus::kErrorCodeOk, foedus::thread::Thread::kMaxFindPagesBatch, foedus::storage::kPageSize, on_snapshot_cache_miss(), foedus::storage::PageHeader::page_id_, read_a_snapshot_page(), foedus::cache::CacheOptions::snapshot_cache_enabled_, snapshot_cache_hashtable_, snapshot_page_pool_, foedus::thread::ThreadControlBlock::stat_snapshot_cache_hits_, foedus::thread::ThreadControlBlock::stat_snapshot_cache_misses_, and UNLIKELY.

Referenced by foedus::thread::Thread::find_or_read_snapshot_pages_batch(), and follow_page_pointers_for_read_batch().

| void foedus::thread::ThreadPimpl::flush_retired_volatile_page | ( | uint16_t | node, |

| Epoch | current_epoch, | ||

| memory::PagePoolOffsetAndEpochChunk * | chunk | ||

| ) |

Subroutine of collect_retired_volatile_page() in case the chunk becomes full.

Returns the chunk to the volatile pool up to the safe epoch. If there aren't enough pages to safely return, advances the epoch (which should be very rare, though).
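The buffering and flushing of retired pages can be pictured with the sketch below. Everything in it is an assumption made for illustration: `RetiredChunkSketch` is a hypothetical class, epochs are plain integers without wrap-around, and the rule "a page retired in epoch e is recorded with safe epoch e + 1 and may be returned once the current epoch is strictly later than that" is a simplification, not a statement of the exact FOEDUS rule.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RetiredEntrySketch {
  uint32_t offset;      // page pool offset of the retired page
  uint32_t safe_epoch;  // assumed: epoch that must have passed before reuse
};

class RetiredChunkSketch {  // hypothetical, single-threaded illustration
 public:
  explicit RetiredChunkSketch(std::size_t capacity) : capacity_(capacity) {}

  bool full() const { return entries_.size() >= capacity_; }
  std::size_t size() const { return entries_.size(); }

  // Buffers a page retired in retired_epoch; entries arrive in epoch order.
  void push_back(uint32_t offset, uint32_t retired_epoch) {
    assert(!full());
    RetiredEntrySketch entry = {offset, retired_epoch + 1};
    entries_.push_back(entry);
  }

  // Counts the leading entries whose safe epoch has already passed.
  std::size_t get_safe_offset_count(uint32_t current_epoch) const {
    std::size_t count = 0;
    while (count < entries_.size() && entries_[count].safe_epoch < current_epoch) {
      ++count;
    }
    return count;
  }

  // Moves all safe entries to the free pool and returns how many were moved.
  std::size_t release_safe(uint32_t current_epoch, std::vector<uint32_t>* pool) {
    const std::size_t safe = get_safe_offset_count(current_epoch);
    for (std::size_t i = 0; i < safe; ++i) {
      pool->push_back(entries_[i].offset);
    }
    entries_.erase(entries_.begin(),
                   entries_.begin() + static_cast<std::ptrdiff_t>(safe));
    return safe;
  }

 private:
  std::size_t capacity_;
  std::vector<RetiredEntrySketch> entries_;
};
```

If `release_safe()` returns zero for a full chunk, the caller has nothing it can return yet, which corresponds to the rare case above where the epoch has to advance first.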

Definition at line 789 of file thread_pimpl.cpp.

References foedus::xct::XctManager::advance_current_global_epoch(), ASSERT_ND, engine_, foedus::memory::PagePoolOffsetAndEpochChunk::full(), foedus::xct::XctManager::get_current_global_epoch(), foedus::Engine::get_memory_manager(), foedus::memory::EngineMemory::get_node_memory(), foedus::memory::PagePoolOffsetAndEpochChunk::get_safe_offset_count(), foedus::memory::NumaNodeMemoryRef::get_volatile_pool(), foedus::Engine::get_xct_manager(), id_, foedus::memory::PagePool::release(), and foedus::memory::PagePoolOffsetAndEpochChunk::size().

Referenced by collect_retired_volatile_page().

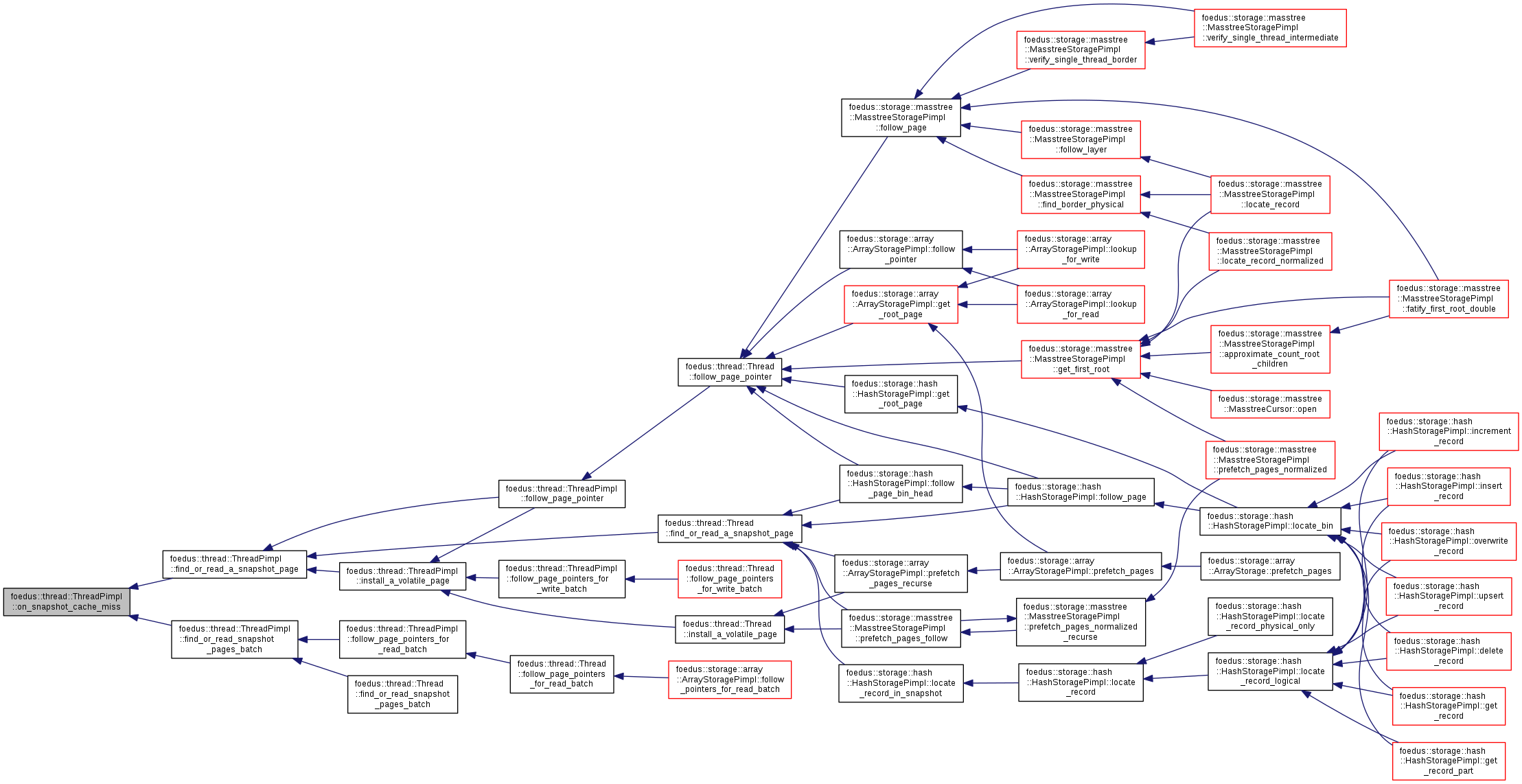

| ErrorCode foedus::thread::ThreadPimpl::follow_page_pointer | ( | storage::VolatilePageInit | page_initializer, |

| bool | tolerate_null_pointer, | ||

| bool | will_modify, | ||

| bool | take_ptr_set_snapshot, | ||

| storage::DualPagePointer * | pointer, | ||

| storage::Page ** | page, | ||

| const storage::Page * | parent, | ||

| uint16_t | index_in_parent | ||

| ) |

A general method to follow (read) a page pointer.

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in] | tolerate_null_pointer | when true and when both the volatile and snapshot pointers seem null, we return null page rather than creating a new volatile page. |

| [in] | will_modify | if true, we always return a non-null volatile page. This is true when we are to modify the page, such as insert/delete. |

| [in] | take_ptr_set_snapshot | if true, we add the address of volatile page pointer to ptr set when we do not follow a volatile pointer (null or volatile). This is usually true so that we become aware of new pages installed by concurrent threads. If the isolation level is not serializable, we don't take the ptr set anyway. |

| [in,out] | pointer | the page pointer. |

| [out] | page | the read page. |

| [in] | parent | the parent page that contains a pointer to the page. |

| [in] | index_in_parent | Some index (meaning depends on page type) of pointer in parent page to the page. |

This is the primary way to retrieve the page that a pointer refers to, in various places. Depending on the current transaction's isolation level and storage type (represented by the various arguments), this does a whole lot of things to comply with our commit protocol.

Remember that DualPagePointer maintains volatile and snapshot pointers. We sometimes have to install a new volatile page or add the pointer to the ptr set for serializability. That logic is too lengthy to duplicate in each page type, so it is generalized here.
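The decision logic implied by the parameters above can be sketched roughly as follows. This is a single-threaded simplification under stated assumptions: `DualPointerSketch`, `XctSketch`, and `follow_page_pointer_sketch()` are hypothetical names, pages are plain structs, the page initializer callback is replaced by a zero payload, and the atomic installation, error codes, and snapshot cache of the real method are omitted.

```cpp
#include <cassert>
#include <deque>
#include <vector>

struct PageSketch {
  bool snapshot;  // true for an immutable snapshot page
  int payload;    // stand-in for page contents
};

struct DualPointerSketch {
  PageSketch* volatile_page;  // null if no volatile version exists
  PageSketch* snapshot_page;  // null if no snapshot version exists
};

struct XctSketch {  // hypothetical transaction context
  XctSketch() : serializable(true) {}
  bool serializable;
  std::vector<const DualPointerSketch*> pointer_set;
  std::deque<PageSketch> volatile_pool;  // deque keeps addresses stable

  PageSketch* new_volatile_page(int payload) {
    volatile_pool.push_back(PageSketch{false, payload});
    return &volatile_pool.back();
  }
};

PageSketch* follow_page_pointer_sketch(XctSketch* xct,
                                       bool tolerate_null_pointer,
                                       bool will_modify,
                                       bool take_ptr_set_snapshot,
                                       DualPointerSketch* pointer) {
  PageSketch* page = nullptr;
  if (pointer->volatile_page != nullptr) {
    page = pointer->volatile_page;  // a volatile version exists: follow it
  } else if (pointer->snapshot_page == nullptr) {
    // Both pointers are null: return null if tolerated, else create a page.
    if (!tolerate_null_pointer) {
      pointer->volatile_page = xct->new_volatile_page(0);
      page = pointer->volatile_page;
    }
  } else if (will_modify) {
    // Only a snapshot exists but we will write: install a volatile copy.
    pointer->volatile_page = xct->new_volatile_page(pointer->snapshot_page->payload);
    page = pointer->volatile_page;
  } else {
    page = pointer->snapshot_page;  // read-only access to the snapshot
  }
  // Remember the pointer when no volatile page was followed, so a later
  // installation by another thread could be detected at commit time.
  const bool followed_volatile = (page != nullptr && !page->snapshot);
  if (xct->serializable && take_ptr_set_snapshot && !followed_volatile) {
    xct->pointer_set.push_back(pointer);
  }
  return page;
}
```

In this sketch a read of a snapshot-only pointer adds one entry to the pointer set, while a subsequent write installs a volatile copy and adds nothing further.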

Definition at line 411 of file thread_pimpl.cpp.

References foedus::xct::Xct::add_to_pointer_set(), ASSERT_ND, foedus::storage::assert_valid_volatile_page(), CHECK_ERROR_CODE, core_memory_, current_xct_, foedus::memory::LocalPageResolver::end_, find_or_read_a_snapshot_page(), foedus::storage::Page::get_header(), foedus::xct::Xct::get_isolation_level(), global_volatile_page_resolver_, foedus::memory::NumaCoreMemory::grab_free_volatile_page(), holder_, install_a_volatile_page(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, foedus::xct::kSerializable, local_volatile_page_resolver_, numa_node_, place_a_new_volatile_page(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::snapshot_pointer_, UNLIKELY, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::thread::Thread::follow_page_pointer().

| ErrorCode foedus::thread::ThreadPimpl::follow_page_pointers_for_read_batch | ( | uint16_t | batch_size, |

| storage::VolatilePageInit | page_initializer, | ||

| bool | tolerate_null_pointer, | ||

| bool | take_ptr_set_snapshot, | ||

| storage::DualPagePointer ** | pointers, | ||

| storage::Page ** | parents, | ||

| const uint16_t * | index_in_parents, | ||

| bool * | followed_snapshots, | ||

| storage::Page ** | out | ||

| ) |

Batched version of follow_page_pointer with will_modify==false.

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in] | tolerate_null_pointer | when true and when both the volatile and snapshot pointers seem null, we return null page rather than creating a new volatile page. |

| [in] | take_ptr_set_snapshot | if true, we add the address of volatile page pointer to ptr set when we do not follow a volatile pointer (null or volatile). This is usually true so that we become aware of new pages installed by concurrent threads. If the isolation level is not serializable, we don't take the ptr set anyway. |

| [in,out] | pointers | the page pointers. |

| [in] | parents | the parent page that contains a pointer to the page. |

| [in] | index_in_parents | Some index (meaning depends on page type) of pointer in parent page to the page. |

| [in,out] | followed_snapshots | As input, must be same as parents[i]==followed_snapshots[i]. As output, same as out[i]->header().snapshot_. We receive/emit this to avoid accessing page header. |

| [out] | out | the read page. |

Definition at line 484 of file thread_pimpl.cpp.

References foedus::xct::Xct::add_to_pointer_set(), ASSERT_ND, foedus::storage::assert_valid_volatile_page(), CHECK_ERROR_CODE, core_memory_, current_xct_, find_or_read_snapshot_pages_batch(), foedus::storage::Page::get_header(), foedus::xct::Xct::get_isolation_level(), global_volatile_page_resolver_, foedus::memory::NumaCoreMemory::grab_free_volatile_page(), holder_, foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeInvalidParameter, foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, foedus::thread::Thread::kMaxFindPagesBatch, foedus::xct::kSerializable, local_volatile_page_resolver_, numa_node_, place_a_new_volatile_page(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::snapshot_pointer_, UNLIKELY, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::thread::Thread::follow_page_pointers_for_read_batch().

| ErrorCode foedus::thread::ThreadPimpl::follow_page_pointers_for_write_batch | ( | uint16_t | batch_size, |

| storage::VolatilePageInit | page_initializer, | ||

| storage::DualPagePointer ** | pointers, | ||

| storage::Page ** | parents, | ||

| const uint16_t * | index_in_parents, | ||

| storage::Page ** | out | ||

| ) |

Batched version of follow_page_pointer with will_modify==true and tolerate_null_pointer==true.

| [in] | batch_size | Batch size. Must be kMaxFindPagesBatch or less. |

| [in] | page_initializer | callback function in case we need to initialize a new volatile page. null if it never happens (eg tolerate_null_pointer is false). |

| [in,out] | pointers | the page pointers. |

| [in] | parents | the parent page that contains a pointer to the page. |

| [in] | index_in_parents | Some index (meaning depends on page type) of pointer in parent page to the page. |

| [out] | out | the read page. |

Definition at line 595 of file thread_pimpl.cpp.

References ASSERT_ND, foedus::storage::assert_valid_volatile_page(), CHECK_ERROR_CODE, core_memory_, foedus::storage::Page::get_header(), global_volatile_page_resolver_, foedus::memory::NumaCoreMemory::grab_free_volatile_page(), holder_, install_a_volatile_page(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, local_volatile_page_resolver_, numa_node_, place_a_new_volatile_page(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::snapshot_pointer_, UNLIKELY, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::thread::Thread::follow_page_pointers_for_write_batch().

| void foedus::thread::ThreadPimpl::get_mcs_rw_my_blocks | ( | xct::McsRwSimpleBlock ** | out | ) | inline |

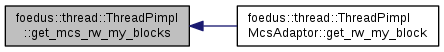

Definition at line 314 of file thread_pimpl.hpp.

References mcs_rw_simple_blocks_.

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_my_block().

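| void foedus::thread::ThreadPimpl::get_mcs_rw_my_blocks | ( | xct::McsRwExtendedBlock ** | out | ) | inline |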

Definition at line 315 of file thread_pimpl.hpp.

References mcs_rw_extended_blocks_.

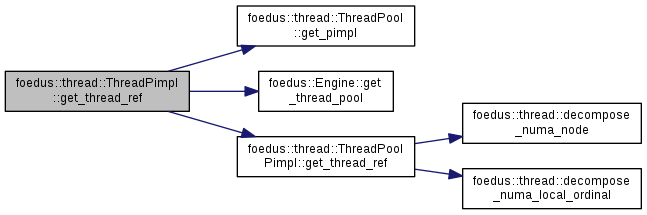

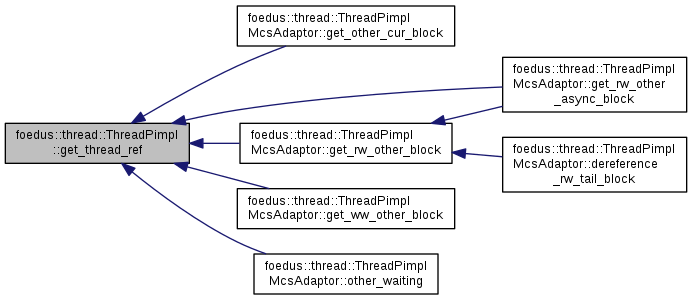
| ThreadRef foedus::thread::ThreadPimpl::get_thread_ref | ( | ThreadId | id | ) |

Definition at line 767 of file thread_pimpl.cpp.

References engine_, foedus::thread::ThreadPool::get_pimpl(), foedus::Engine::get_thread_pool(), and foedus::thread::ThreadPoolPimpl::get_thread_ref().

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_other_cur_block(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_other_async_block(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_other_block(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_ww_other_block(), and foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::other_waiting().

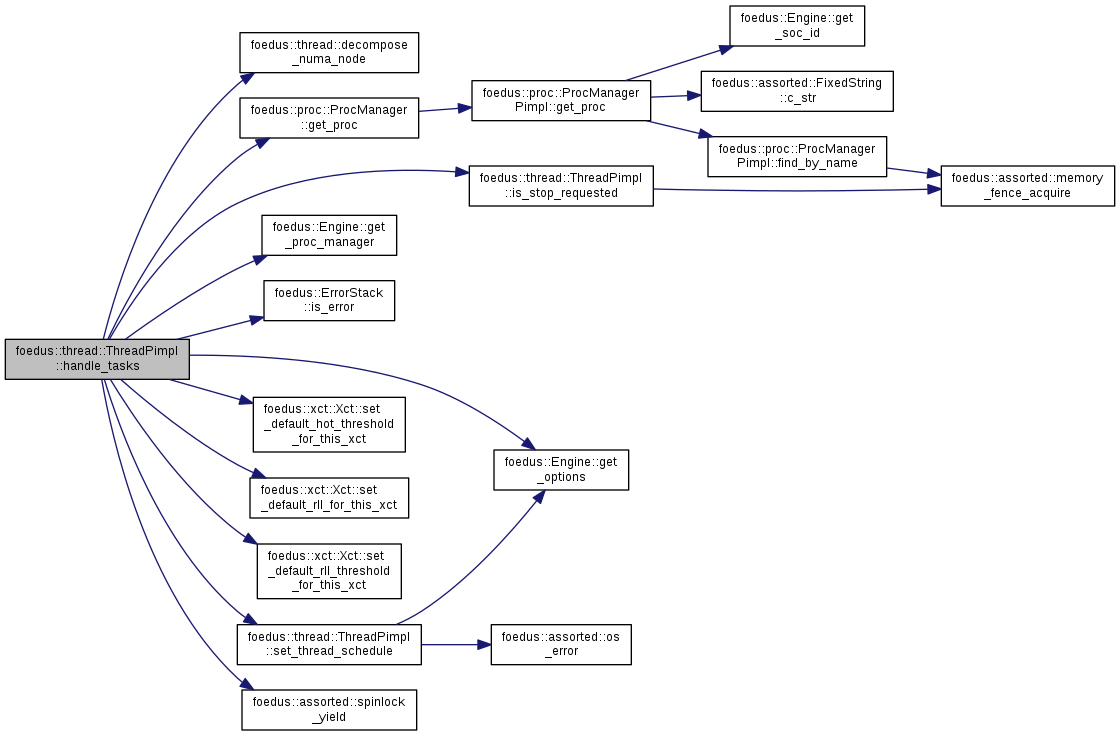

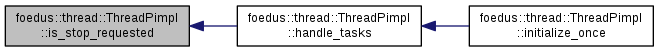

| void foedus::thread::ThreadPimpl::handle_tasks | ( | ) |

Main routine of the worker thread.

This method keeps checking current_task_. Whenever it retrieves a task, it runs it and resets current_task_ when it's done. It exits when exit_requested_ is set.
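The polling loop described above might look roughly like the sketch below. It borrows the names `current_task` and `exit_requested` from the description, but the rest is assumed: `WorkerStateSketch`, `TaskSketch`, and `handle_tasks_sketch()` are hypothetical, and the real method works through a control block with several more states.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

struct WorkerStateSketch;
typedef void (*TaskSketch)(WorkerStateSketch*);  // hypothetical task signature

struct WorkerStateSketch {
  WorkerStateSketch()
      : current_task(nullptr), exit_requested(false), completed(0), result(0) {}
  std::atomic<TaskSketch> current_task;  // null while no task is assigned
  std::atomic<bool> exit_requested;      // set to ask the worker to stop
  int completed;                         // number of tasks this worker ran
  int result;                            // stand-in for task output memory
};

// Keeps polling for a task, runs it, then clears the slot for the next one.
void handle_tasks_sketch(WorkerStateSketch* state) {
  while (!state->exit_requested.load(std::memory_order_acquire)) {
    TaskSketch task = state->current_task.load(std::memory_order_acquire);
    if (task == nullptr) {
      std::this_thread::yield();  // nothing to do yet; let others run
      continue;
    }
    task(state);
    ++state->completed;
    state->current_task.store(nullptr, std::memory_order_release);
  }
}

// Example task: writes a result and asks the worker to exit afterwards,
// which lets the loop be exercised without a second thread.
void answer_then_stop(WorkerStateSketch* state) {
  state->result = 42;
  state->exit_requested.store(true, std::memory_order_release);
}
```

In normal use a different thread would store into `current_task` and later set `exit_requested`; the self-stopping task is only there to keep the example deterministic.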

Definition at line 163 of file thread_pimpl.cpp.

References ASSERT_ND, control_block_, current_xct_, foedus::thread::decompose_numa_node(), foedus::xct::XctOptions::enable_retrospective_lock_list_, engine_, foedus::Engine::get_options(), foedus::proc::ProcManager::get_proc(), foedus::Engine::get_proc_manager(), holder_, foedus::storage::StorageOptions::hot_threshold_, foedus::xct::XctOptions::hot_threshold_for_retrospective_lock_list_, id_, foedus::ErrorStack::is_error(), is_stop_requested(), foedus::thread::kNotInitialized, foedus::thread::kRunningTask, foedus::soc::ThreadMemoryAnchors::kTaskOutputMemorySize, foedus::thread::kTerminated, foedus::thread::kWaitingForClientRelease, foedus::thread::kWaitingForExecution, foedus::thread::kWaitingForTask, foedus::xct::Xct::set_default_hot_threshold_for_this_xct(), foedus::xct::Xct::set_default_rll_for_this_xct(), foedus::xct::Xct::set_default_rll_threshold_for_this_xct(), set_thread_schedule(), foedus::assorted::spinlock_yield(), foedus::EngineOptions::storage_, task_input_memory_, task_output_memory_, and foedus::EngineOptions::xct_.

Referenced by initialize_once().

|

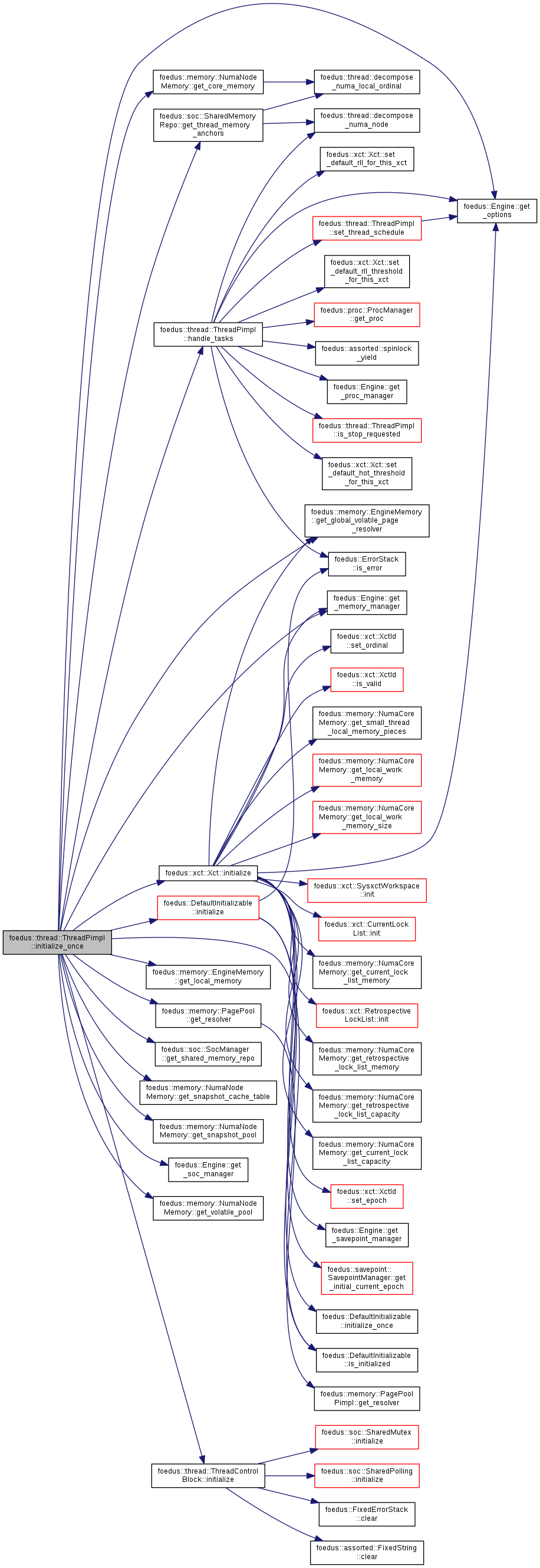

| ErrorStack foedus::thread::ThreadPimpl::initialize_once | ( | ) | final override virtual |

Implements foedus::DefaultInitializable.

Definition at line 81 of file thread_pimpl.cpp.

References ASSERT_ND, foedus::EngineOptions::cache_, CHECK_ERROR, control_block_, core_memory_, current_xct_, engine_, foedus::memory::NumaNodeMemory::get_core_memory(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::memory::EngineMemory::get_local_memory(), foedus::Engine::get_memory_manager(), foedus::Engine::get_options(), foedus::memory::PagePool::get_resolver(), foedus::soc::SocManager::get_shared_memory_repo(), foedus::memory::NumaNodeMemory::get_snapshot_cache_table(), foedus::memory::NumaNodeMemory::get_snapshot_pool(), foedus::Engine::get_soc_manager(), foedus::soc::SharedMemoryRepo::get_thread_memory_anchors(), foedus::memory::NumaNodeMemory::get_volatile_pool(), global_volatile_page_resolver_, handle_tasks(), id_, foedus::thread::ThreadControlBlock::initialize(), foedus::xct::Xct::initialize(), foedus::DefaultInitializable::initialize(), foedus::DefaultInitializable::is_initialized(), foedus::xct::XctOptions::kMcsImplementationTypeExtended, foedus::xct::XctOptions::kMcsImplementationTypeSimple, foedus::kRetOk, local_volatile_page_resolver_, log_buffer_, foedus::thread::ThreadControlBlock::mcs_block_current_, foedus::xct::XctOptions::mcs_implementation_type_, foedus::thread::ThreadControlBlock::mcs_rw_async_mapping_current_, mcs_rw_async_mappings_, mcs_rw_extended_blocks_, mcs_rw_simple_blocks_, mcs_ww_blocks_, node_memory_, raw_thread_, raw_thread_set_, simple_mcs_rw_, foedus::cache::CacheOptions::snapshot_cache_enabled_, snapshot_cache_hashtable_, snapshot_file_set_, snapshot_page_pool_, task_input_memory_, task_output_memory_, and foedus::EngineOptions::xct_.

| ErrorCode foedus::thread::ThreadPimpl::install_a_volatile_page | ( | storage::DualPagePointer * | pointer, |

| storage::Page ** | installed_page | ||

| ) |

Installs a volatile page to the given dual pointer as a copy of the snapshot page.

| [in,out] | pointer | dual pointer. volatile pointer will be modified. |

| [out] | installed_page | physical pointer to the installed volatile page. This might point to a page installed by a concurrent thread. |

This is called when a dual pointer has only a snapshot pointer, in other words it is "clean", to create a volatile version for modification.

Definition at line 292 of file thread_pimpl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, core_memory_, foedus::memory::LocalPageResolver::end_, find_or_read_a_snapshot_page(), foedus::storage::Page::get_header(), foedus::storage::VolatilePagePointer::get_offset(), foedus::memory::NumaCoreMemory::grab_free_volatile_page_pointer(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, foedus::storage::kPageSize, local_volatile_page_resolver_, foedus::storage::PageHeader::page_id_, place_a_new_volatile_page(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::snapshot_pointer_, UNLIKELY, and foedus::storage::VolatilePagePointer::word.

Referenced by follow_page_pointer(), follow_page_pointers_for_write_batch(), and foedus::thread::Thread::install_a_volatile_page().

| bool foedus::thread::ThreadPimpl::is_simple_mcs_rw | ( | ) | const | inline |

Definition at line 282 of file thread_pimpl.hpp.

References simple_mcs_rw_.

Referenced by cll_giveup_all_locks_after(), cll_release_all_locks(), cll_release_all_locks_after(), cll_try_or_acquire_multiple_locks(), cll_try_or_acquire_single_lock(), run_nested_sysxct(), sysxct_batch_page_locks(), sysxct_batch_record_locks(), sysxct_page_lock(), and sysxct_record_lock().

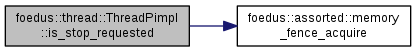

| bool foedus::thread::ThreadPimpl::is_stop_requested | ( | ) | const |

Definition at line 158 of file thread_pimpl.cpp.

References control_block_, foedus::thread::kWaitingForTerminate, foedus::assorted::memory_fence_acquire(), and foedus::thread::ThreadControlBlock::status_.

Referenced by handle_tasks().

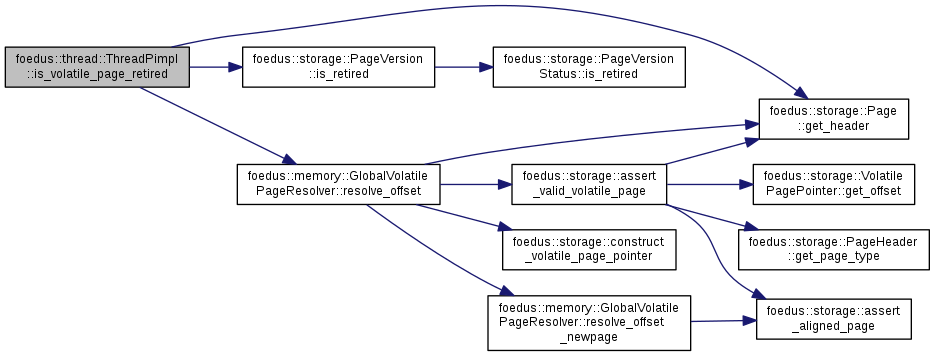

| bool foedus::thread::ThreadPimpl::is_volatile_page_retired | ( | storage::VolatilePagePointer | ptr | ) |

Subroutine of collect_retired_volatile_page() just for assertion.

Definition at line 818 of file thread_pimpl.cpp.

References foedus::storage::Page::get_header(), global_volatile_page_resolver_, foedus::storage::PageVersion::is_retired(), foedus::storage::PageHeader::page_version_, and foedus::memory::GlobalVolatilePageResolver::resolve_offset().

Referenced by collect_retired_volatile_page().

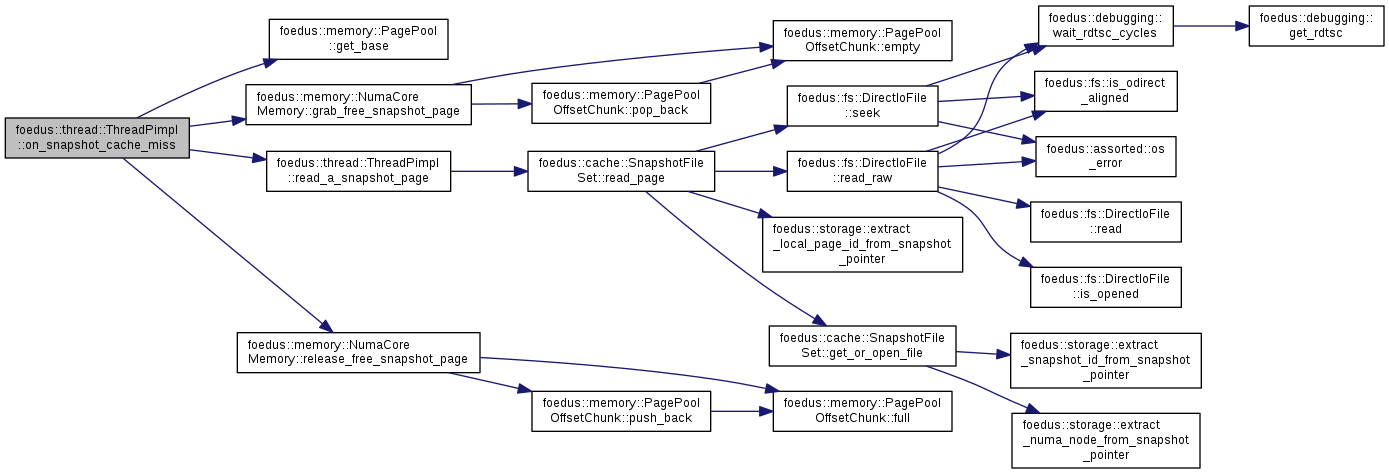

| ErrorCode foedus::thread::ThreadPimpl::on_snapshot_cache_miss | ( | storage::SnapshotPagePointer | page_id, |

| memory::PagePoolOffset * | pool_offset | ||

| ) |

Definition at line 741 of file thread_pimpl.cpp.

References core_memory_, foedus::memory::PagePool::get_base(), foedus::memory::NumaCoreMemory::grab_free_snapshot_page(), holder_, foedus::kErrorCodeCacheNoFreePages, foedus::kErrorCodeOk, read_a_snapshot_page(), foedus::memory::NumaCoreMemory::release_free_snapshot_page(), and snapshot_page_pool_.

Referenced by find_or_read_a_snapshot_page(), and find_or_read_snapshot_pages_batch().

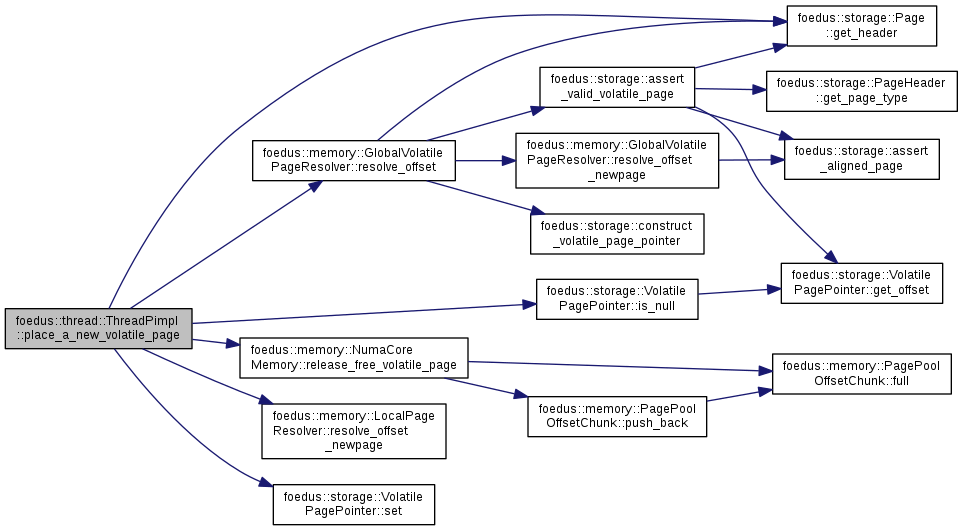

| storage::Page * foedus::thread::ThreadPimpl::place_a_new_volatile_page | ( | memory::PagePoolOffset | new_offset, |

| storage::DualPagePointer * | pointer | ||

| ) |

Subroutine of install_a_volatile_page() and follow_page_pointer() to atomically place the given new volatile page created by this thread.

| [in] | new_offset | offset of the new volatile page created by this thread |

| [in,out] | pointer | the address to place a new pointer. |

Due to concurrent threads, this method might discard the given volatile page and pick a page placed by another thread. In that case, new_offset will be released to the free pool.
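The race described above can be illustrated with a single compare-and-swap. This is a minimal sketch under stated assumptions, not the actual implementation: `DemoPointer`, `DemoFreePool`, and `demo_place_new_page` are hypothetical names, the pointer is modeled as a bare 64-bit word where 0 means null, and the free pool is a plain vector.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the thread's free volatile page pool.
struct DemoFreePool {
  std::vector<uint32_t> free_offsets;
  void release(uint32_t offset) { free_offsets.push_back(offset); }
};

// Hypothetical stand-in for the volatile half of a DualPagePointer.
// A word of 0 means that no volatile page is installed yet.
struct DemoPointer {
  std::atomic<uint64_t> word{0};
};

// Tries to install new_offset with one CAS.
// Returns the offset that ends up installed: ours if we won the race,
// otherwise the one another thread placed first. In the losing case
// our page is returned to the free pool.
inline uint32_t demo_place_new_page(DemoPointer& pointer, uint32_t new_offset,
                                    DemoFreePool& pool) {
  assert(new_offset != 0);  // 0 is reserved for null in this sketch
  uint64_t expected = 0;
  if (pointer.word.compare_exchange_strong(expected, new_offset)) {
    return new_offset;
  }
  // Lost the race: 'expected' now holds the winner's value.
  pool.release(new_offset);
  return static_cast<uint32_t>(expected);
}
```

The caller always continues with the returned offset, so it never matters which thread's page was actually installed.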

Definition at line 317 of file thread_pimpl.cpp.

References ASSERT_ND, core_memory_, foedus::storage::Page::get_header(), global_volatile_page_resolver_, id_, foedus::storage::VolatilePagePointer::is_null(), local_volatile_page_resolver_, numa_node_, foedus::memory::NumaCoreMemory::release_free_volatile_page(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::volatile_pointer_, and foedus::storage::VolatilePagePointer::word.

Referenced by follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), and install_a_volatile_page().

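| ErrorCode foedus::thread::ThreadPimpl::read_a_snapshot_page | ( | storage::SnapshotPagePointer | page_id, |

| storage::Page * | buffer | ||

| ) |

inline |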

Read a snapshot page using the thread-local file descriptor set.

Definition at line 512 of file thread_pimpl.hpp.

References foedus::cache::SnapshotFileSet::read_page(), and snapshot_file_set_.

Referenced by find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), on_snapshot_cache_miss(), and foedus::thread::Thread::read_a_snapshot_page().

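| ErrorCode foedus::thread::ThreadPimpl::read_snapshot_pages | ( | storage::SnapshotPagePointer | page_id_begin, |

| uint32_t | page_count, | ||

| storage::Page * | buffer | ||

| ) |

inline |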

Read contiguous pages in one shot.

Otherwise the same as read_a_snapshot_page().

Definition at line 517 of file thread_pimpl.hpp.

References foedus::cache::SnapshotFileSet::read_pages(), and snapshot_file_set_.

Referenced by foedus::thread::Thread::read_snapshot_pages().

| ErrorCode foedus::thread::ThreadPimpl::run_nested_sysxct | ( | xct::SysxctFunctor * | functor, |

| uint32_t | max_retries | ||

| ) |

Sysxct-related.

The implementation mostly just forwards to SysxctLockList.

Definition at line 980 of file thread_pimpl.cpp.

References cll_get_max_locked_id(), current_xct_, foedus::xct::Xct::get_sysxct_workspace(), is_simple_mcs_rw(), and foedus::xct::run_nested_sysxct_impl().

Referenced by foedus::thread::Thread::run_nested_sysxct().

| void foedus::thread::ThreadPimpl::set_thread_schedule | ( | ) |

Initializes the thread's scheduling policy and priority.

Definition at line 239 of file thread_pimpl.cpp.

References engine_, foedus::Engine::get_options(), id_, foedus::assorted::os_error(), foedus::thread::ThreadOptions::overwrite_thread_schedule_, raw_thread_, raw_thread_set_, SPINLOCK_WHILE, foedus::EngineOptions::thread_, foedus::thread::ThreadOptions::thread_policy_, and foedus::thread::ThreadOptions::thread_priority_.

Referenced by handle_tasks().

| void foedus::thread::ThreadPimpl::switch_mcs_impl | ( | FUNC | func | ) |

MCS locks methods.

These just delegate to xct_mcs_impl. Comments are omitted because they are the same as xct_mcs_impl's.

| ErrorCode foedus::thread::ThreadPimpl::sysxct_batch_page_locks | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| uint32_t | lock_count, | ||

| storage::Page ** | pages | ||

| ) |

Definition at line 1049 of file thread_pimpl.cpp.

References ASSERT_ND, is_simple_mcs_rw(), foedus::xct::SysxctWorkspace::lock_list_, and foedus::xct::SysxctWorkspace::running_sysxct_.

Referenced by foedus::thread::Thread::sysxct_batch_page_locks().

| ErrorCode foedus::thread::ThreadPimpl::sysxct_batch_record_locks | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::VolatilePagePointer | page_id, | ||

| uint32_t | lock_count, | ||

| xct::RwLockableXctId ** | locks | ||

| ) |

Definition at line 1021 of file thread_pimpl.cpp.

References ASSERT_ND, is_simple_mcs_rw(), foedus::xct::SysxctWorkspace::lock_list_, and foedus::xct::SysxctWorkspace::running_sysxct_.

Referenced by foedus::thread::Thread::sysxct_batch_record_locks().

| ErrorCode foedus::thread::ThreadPimpl::sysxct_page_lock | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::Page * | page | ||

| ) |

Definition at line 1036 of file thread_pimpl.cpp.

References ASSERT_ND, is_simple_mcs_rw(), foedus::xct::SysxctWorkspace::lock_list_, and foedus::xct::SysxctWorkspace::running_sysxct_.

Referenced by foedus::thread::Thread::sysxct_page_lock().

| ErrorCode foedus::thread::ThreadPimpl::sysxct_record_lock | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| storage::VolatilePagePointer | page_id, | ||

| xct::RwLockableXctId * | lock | ||

| ) |

Definition at line 1007 of file thread_pimpl.cpp.

References ASSERT_ND, is_simple_mcs_rw(), foedus::xct::SysxctWorkspace::lock_list_, and foedus::xct::SysxctWorkspace::running_sysxct_.

Referenced by foedus::thread::Thread::sysxct_record_lock().

| ErrorStack foedus::thread::ThreadPimpl::uninitialize_once | ( | ) |

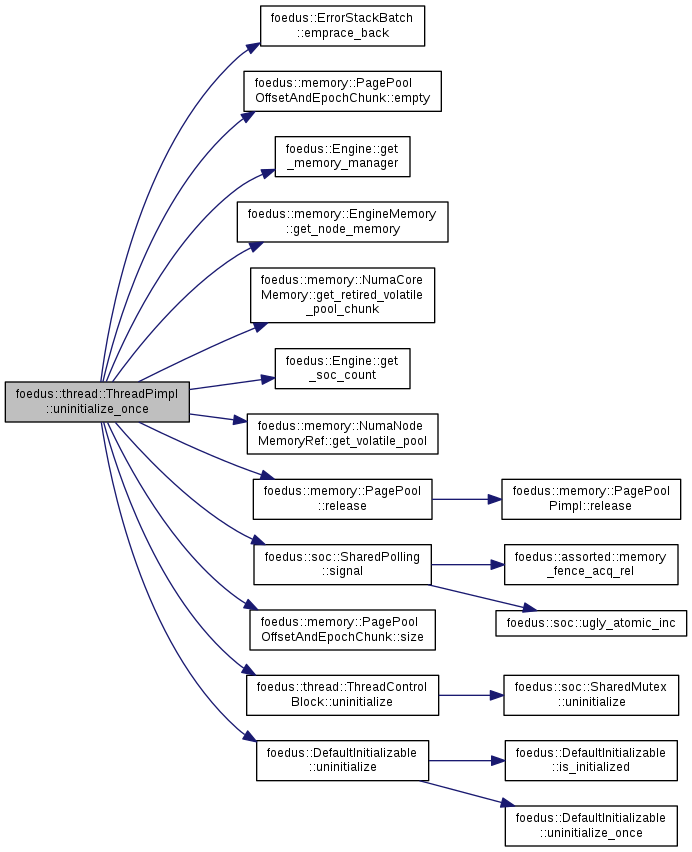

final override virtual |

Implements foedus::DefaultInitializable.

Definition at line 122 of file thread_pimpl.cpp.

References ASSERT_ND, control_block_, core_memory_, foedus::ErrorStackBatch::emprace_back(), foedus::memory::PagePoolOffsetAndEpochChunk::empty(), engine_, foedus::Engine::get_memory_manager(), foedus::memory::EngineMemory::get_node_memory(), foedus::memory::NumaCoreMemory::get_retired_volatile_pool_chunk(), foedus::Engine::get_soc_count(), foedus::memory::NumaNodeMemoryRef::get_volatile_pool(), id_, foedus::thread::kTerminated, foedus::thread::kWaitingForTerminate, log_buffer_, node_memory_, raw_thread_, foedus::memory::PagePool::release(), foedus::soc::SharedPolling::signal(), foedus::memory::PagePoolOffsetAndEpochChunk::size(), snapshot_cache_hashtable_, snapshot_file_set_, foedus::thread::ThreadControlBlock::status_, SUMMARIZE_ERROR_BATCH, foedus::thread::ThreadControlBlock::uninitialize(), foedus::DefaultInitializable::uninitialize(), and foedus::thread::ThreadControlBlock::wakeup_cond_.

|

friend |

Definition at line 161 of file thread_pimpl.hpp.

| xct::RwLockableXctId* foedus::thread::ThreadPimpl::canonical_address_ |

Definition at line 392 of file thread_pimpl.hpp.

| ThreadControlBlock* foedus::thread::ThreadPimpl::control_block_ |

Definition at line 382 of file thread_pimpl.hpp.

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::add_rw_async_mapping(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::cancel_new_block(), find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_cur_block(), foedus::thread::Thread::get_in_commit_epoch_address(), foedus::thread::Thread::get_snapshot_cache_hits(), foedus::thread::Thread::get_snapshot_cache_misses(), handle_tasks(), initialize_once(), is_stop_requested(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::issue_new_block(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::me_waiting(), foedus::thread::operator<<(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::remove_rw_async_mapping(), foedus::thread::Thread::reset_snapshot_cache_counts(), and uninitialize_once().

| memory::NumaCoreMemory* foedus::thread::ThreadPimpl::core_memory_ |

Private memory repository of this thread.

ThreadPimpl does NOT own it, meaning it doesn't call its initialize()/uninitialize(). In that sense, EngineMemory is the owner.

Definition at line 345 of file thread_pimpl.hpp.

Referenced by collect_retired_volatile_page(), follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), foedus::thread::Thread::get_node_memory(), foedus::thread::Thread::get_thread_memory(), initialize_once(), install_a_volatile_page(), on_snapshot_cache_miss(), place_a_new_volatile_page(), and uninitialize_once().

| xct::Xct foedus::thread::ThreadPimpl::current_xct_ |

Current transaction this thread is conveying.

Each thread can run at most one transaction at once. If this thread is not conveying any transaction, current_xct_.is_active() == false.

Definition at line 375 of file thread_pimpl.hpp.

Referenced by cll_get_max_locked_id(), cll_giveup_all_locks_after(), cll_release_all_locks(), cll_release_all_locks_after(), cll_try_or_acquire_multiple_locks(), cll_try_or_acquire_single_lock(), find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), follow_page_pointer(), follow_page_pointers_for_read_batch(), foedus::thread::Thread::get_current_xct(), handle_tasks(), initialize_once(), foedus::thread::Thread::is_hot_page(), foedus::thread::Thread::is_running_xct(), and run_nested_sysxct().

| Engine* const foedus::thread::ThreadPimpl::engine_ |

MCS locks methods.

Definition at line 320 of file thread_pimpl.hpp.

Referenced by collect_retired_volatile_page(), find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), flush_retired_volatile_page(), foedus::thread::Thread::get_engine(), get_thread_ref(), handle_tasks(), initialize_once(), set_thread_schedule(), and uninitialize_once().

| const ThreadGlobalOrdinal foedus::thread::ThreadPimpl::global_ordinal_ |

globally and contiguously numbered ID of thread

Definition at line 336 of file thread_pimpl.hpp.

Referenced by foedus::thread::Thread::get_thread_global_ordinal().

| memory::GlobalVolatilePageResolver foedus::thread::ThreadPimpl::global_volatile_page_resolver_ |

Page resolver to convert any page ID to a page pointer.

Definition at line 354 of file thread_pimpl.hpp.

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::add_rw_async_mapping(), follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), foedus::thread::Thread::get_global_volatile_page_resolver(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_rw_other_async_block(), initialize_once(), is_volatile_page_retired(), place_a_new_volatile_page(), and foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::remove_rw_async_mapping().

| Thread* const foedus::thread::ThreadPimpl::holder_ |

The public object that holds this pimpl object.

Definition at line 325 of file thread_pimpl.hpp.

Referenced by follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), handle_tasks(), and on_snapshot_cache_miss().

| const ThreadId foedus::thread::ThreadPimpl::id_ |

Unique ID of this thread.

Definition at line 330 of file thread_pimpl.hpp.

Referenced by flush_retired_volatile_page(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_my_id(), foedus::thread::Thread::get_thread_id(), handle_tasks(), initialize_once(), place_a_new_volatile_page(), set_thread_schedule(), and uninitialize_once().

| memory::LocalPageResolver foedus::thread::ThreadPimpl::local_volatile_page_resolver_ |

Page resolver to convert only local page IDs to page pointers.
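The difference between this resolver and global_volatile_page_resolver_ can be pictured as follows. This is a hedged sketch with hypothetical types (`DemoPage`, `DemoLocalResolver`, `DemoGlobalResolver`), an assumed 4096-byte page size, and a simplified (node, offset) addressing scheme; the real resolvers may differ in layout and checks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kDemoPageSize = 4096;  // assumed page size for this sketch

struct DemoPage {
  char data[kDemoPageSize];
};

// Resolves offsets within a single node's page pool only.
struct DemoLocalResolver {
  DemoPage* base;   // start of this node's pool
  uint32_t begin;   // first valid offset
  uint32_t end;     // one past the last valid offset
  DemoPage* resolve_offset(uint32_t offset) const {
    assert(offset >= begin && offset < end);
    return base + offset;
  }
};

// Resolves (node, offset) pairs across every node's pool.
struct DemoGlobalResolver {
  std::vector<DemoLocalResolver> nodes;
  DemoPage* resolve_offset(uint8_t node, uint32_t offset) const {
    assert(node < nodes.size());
    return nodes[node].resolve_offset(offset);
  }
};
```

The local form needs no node lookup, which is presumably why a thread keeps both: the local one for pages it allocates itself and the global one for pages that may live on any node.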

Definition at line 356 of file thread_pimpl.hpp.

Referenced by follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), foedus::thread::Thread::get_local_volatile_page_resolver(), initialize_once(), install_a_volatile_page(), and place_a_new_volatile_page().

| log::ThreadLogBuffer foedus::thread::ThreadPimpl::log_buffer_ |

Thread-private log buffer.

Definition at line 361 of file thread_pimpl.hpp.

Referenced by foedus::thread::Thread::get_thread_log_buffer(), initialize_once(), and uninitialize_once().

| xct::McsRwAsyncMapping* foedus::thread::ThreadPimpl::mcs_rw_async_mappings_ |

Definition at line 390 of file thread_pimpl.hpp.

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::add_rw_async_mapping(), initialize_once(), and foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::remove_rw_async_mapping().

| xct::McsRwExtendedBlock* foedus::thread::ThreadPimpl::mcs_rw_extended_blocks_ |

Definition at line 389 of file thread_pimpl.hpp.

Referenced by foedus::thread::Thread::get_mcs_rw_extended_blocks(), get_mcs_rw_my_blocks(), and initialize_once().

| xct::McsRwSimpleBlock* foedus::thread::ThreadPimpl::mcs_rw_simple_blocks_ |

Definition at line 388 of file thread_pimpl.hpp.

Referenced by get_mcs_rw_my_blocks(), foedus::thread::Thread::get_mcs_rw_simple_blocks(), and initialize_once().

| xct::McsWwBlock* foedus::thread::ThreadPimpl::mcs_ww_blocks_ |

Pre-allocated MCS blocks.

index 0 is not used so that successor_block=0 means null.
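The convention of leaving index 0 unused can be sketched as below. `DemoMcsBlock` and `DemoBlockArray` are hypothetical, heavily simplified types that model only the successor link; they are not the library's block layout.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified MCS block: only the successor link is modeled.
struct DemoMcsBlock {
  uint32_t successor_block = 0;  // 0 means "no successor"
};

// Pre-allocates capacity + 1 blocks so that usable indexes are 1..capacity.
class DemoBlockArray {
 public:
  explicit DemoBlockArray(uint32_t capacity) : blocks_(capacity + 1U), next_index_(1) {}

  // Hands out the next unused block index; never returns 0.
  uint32_t issue_new_block() {
    assert(next_index_ < blocks_.size());
    return next_index_++;
  }

  DemoMcsBlock& at(uint32_t index) {
    assert(index != 0 && index < blocks_.size());
    return blocks_[index];
  }

  bool has_successor(uint32_t index) { return at(index).successor_block != 0; }

 private:
  std::vector<DemoMcsBlock> blocks_;
  uint32_t next_index_;
};
```

Wasting one slot lets a zero-initialized block mean "no successor" without a separate validity flag.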

Definition at line 387 of file thread_pimpl.hpp.

Referenced by foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_ww_my_block(), and initialize_once().

| memory::NumaNodeMemory* foedus::thread::ThreadPimpl::node_memory_ |

Same as core_memory_, which is declared just above it in thread_pimpl.hpp: ThreadPimpl does not own it.

Definition at line 347 of file thread_pimpl.hpp.

Referenced by initialize_once(), and uninitialize_once().

| const ThreadGroupId foedus::thread::ThreadPimpl::numa_node_ |

Node this thread belongs to.

Definition at line 333 of file thread_pimpl.hpp.

Referenced by follow_page_pointer(), follow_page_pointers_for_read_batch(), follow_page_pointers_for_write_batch(), foedus::thread::ThreadPimplMcsAdaptor< RW_BLOCK >::get_my_numa_node(), and place_a_new_volatile_page().

| std::thread foedus::thread::ThreadPimpl::raw_thread_ |

Encapsulates raw thread object.

Definition at line 366 of file thread_pimpl.hpp.

Referenced by initialize_once(), set_thread_schedule(), and uninitialize_once().

| std::atomic<bool> foedus::thread::ThreadPimpl::raw_thread_set_ |

Just to make sure raw_thread_ is set.

Otherwise pthread_getschedparam will complain.
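The hazard is that a spawned thread can start running before the parent has finished assigning the `std::thread` object. A minimal sketch of the guard, assuming a spin-wait on the flag (the references of set_thread_schedule() mention SPINLOCK_WHILE); `DemoWorker` and its members are hypothetical, and the sketch checks the thread ID instead of calling pthread_getschedparam.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical worker that must not touch raw_thread until it is assigned.
struct DemoWorker {
  std::thread raw_thread;
  std::atomic<bool> raw_thread_set{false};
  std::atomic<bool> handle_was_valid{false};

  void start() {
    raw_thread = std::thread(&DemoWorker::run, this);
    // Publish the fact that raw_thread now refers to the running thread.
    raw_thread_set.store(true, std::memory_order_release);
  }

  void run() {
    // Wait until the parent has finished assigning raw_thread.
    while (!raw_thread_set.load(std::memory_order_acquire)) {
      std::this_thread::yield();
    }
    // Only now is it safe to inspect raw_thread, e.g. its native handle.
    handle_was_valid.store(raw_thread.get_id() == std::this_thread::get_id());
  }

  void join() { raw_thread.join(); }
};
```

Without the flag, the worker could read a default-constructed `std::thread` whose handle does not refer to any thread.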

Definition at line 368 of file thread_pimpl.hpp.

Referenced by initialize_once(), and set_thread_schedule().

| bool foedus::thread::ThreadPimpl::simple_mcs_rw_ |

shortcut for engine_->get_options().xct_.mcs_implementation_type_ == simple

Definition at line 338 of file thread_pimpl.hpp.

Referenced by initialize_once(), and is_simple_mcs_rw().

| cache::CacheHashtable* foedus::thread::ThreadPimpl::snapshot_cache_hashtable_ |

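Same as core_memory_ and node_memory_, which are declared just above it in thread_pimpl.hpp: ThreadPimpl does not own it.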

Definition at line 349 of file thread_pimpl.hpp.

Referenced by find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), initialize_once(), and uninitialize_once().

| cache::SnapshotFileSet foedus::thread::ThreadPimpl::snapshot_file_set_ |

Each thread maintains a private set of snapshot file descriptors.

Definition at line 380 of file thread_pimpl.hpp.

Referenced by initialize_once(), read_a_snapshot_page(), read_snapshot_pages(), and uninitialize_once().

| memory::PagePool* foedus::thread::ThreadPimpl::snapshot_page_pool_ |

shorthand for node_memory_->get_snapshot_pool()

Definition at line 351 of file thread_pimpl.hpp.

Referenced by find_or_read_a_snapshot_page(), find_or_read_snapshot_pages_batch(), initialize_once(), and on_snapshot_cache_miss().

| void* foedus::thread::ThreadPimpl::task_input_memory_ |

Definition at line 383 of file thread_pimpl.hpp.

Referenced by handle_tasks(), and initialize_once().

| void* foedus::thread::ThreadPimpl::task_output_memory_ |

Definition at line 384 of file thread_pimpl.hpp.

Referenced by handle_tasks(), and initialize_once().