|

libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

A NUMA-local hashtable of cached snapshot pages. More...

A NUMA-local hashtable of cached snapshot pages.

Definition at line 233 of file cache_hashtable.hpp.

#include <cache_hashtable.hpp>

Classes | |

| struct | EvictArgs |

| Parameters for evict() More... | |

| struct | Stat |

Public Types | |

| enum | Constants { kMaxFindBatchSize = 32 } |

Public Member Functions | |

| CacheHashtable (BucketId physical_buckets, uint16_t numa_node) | |

| ContentId | find (storage::SnapshotPagePointer page_id) const __attribute__((always_inline)) |

| Returns an offset for the given page ID opportunistically. More... | |

| ErrorCode | find_batch (uint16_t batch_size, const storage::SnapshotPagePointer *page_ids, ContentId *out) const |

| Batched version of find(). More... | |

| ErrorCode | install (storage::SnapshotPagePointer page_id, ContentId content) |

| Called when a cached page is not found. More... | |

| void | evict (EvictArgs *args) |

| Evict some entries from the hashtable. More... | |

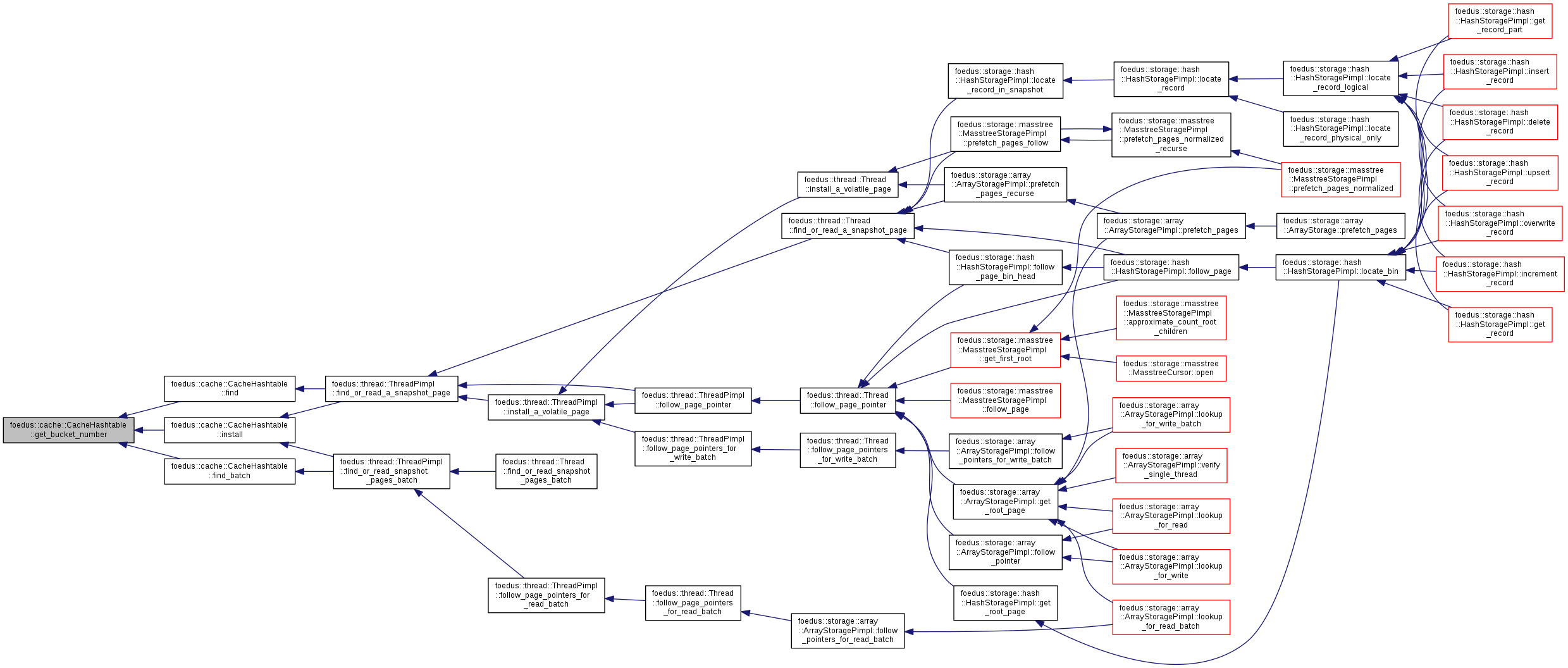

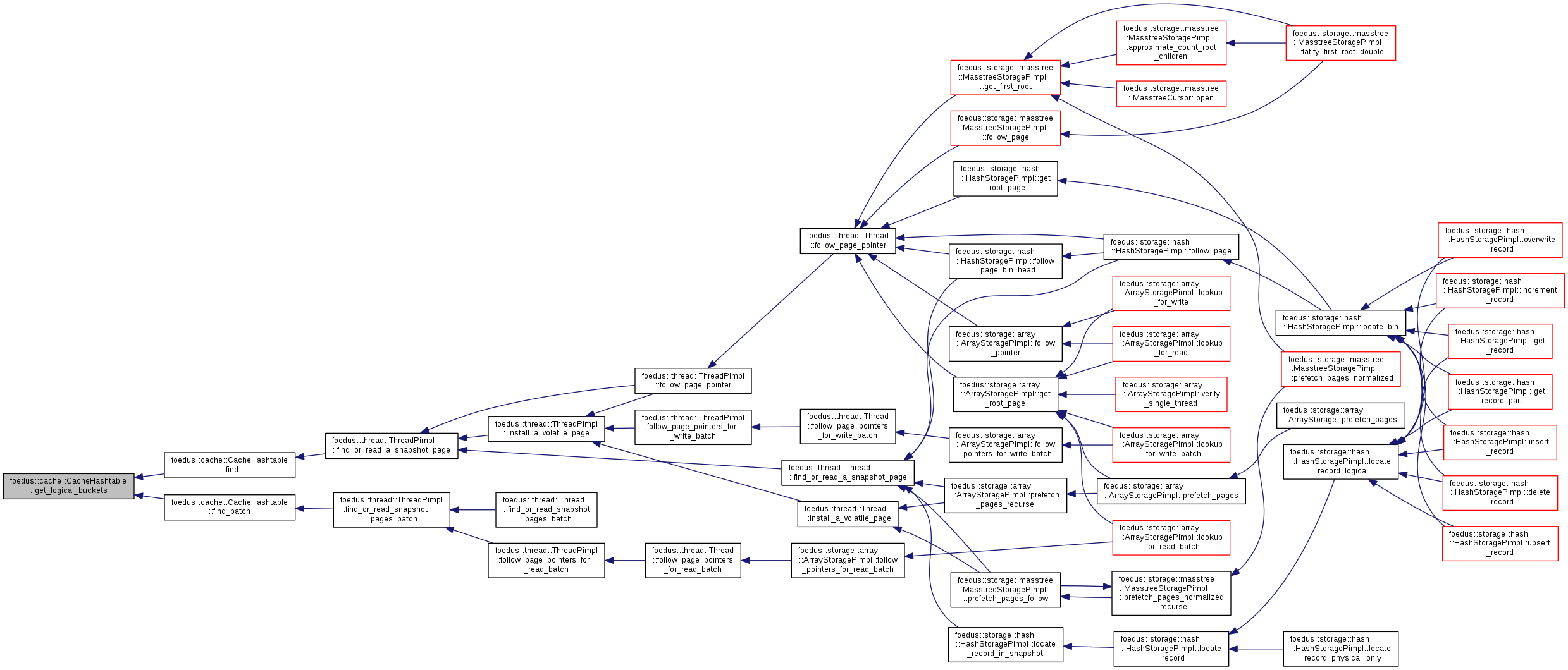

| BucketId | get_logical_buckets () const __attribute__((always_inline)) |

| BucketId | get_physical_buckets () const __attribute__((always_inline)) |

| BucketId | get_bucket_number (storage::SnapshotPagePointer page_id) const __attribute__((always_inline)) |

| Returns a bucket number the given page ID should belong to. More... | |

| ErrorStack | verify_single_thread () const |

| only for debugging. More... | |

| const CacheBucket & | get_bucket (BucketId bucket_id) const |

| Stat | get_stat_single_thread () const |

| only for debugging. More... | |

Protected Member Functions | |

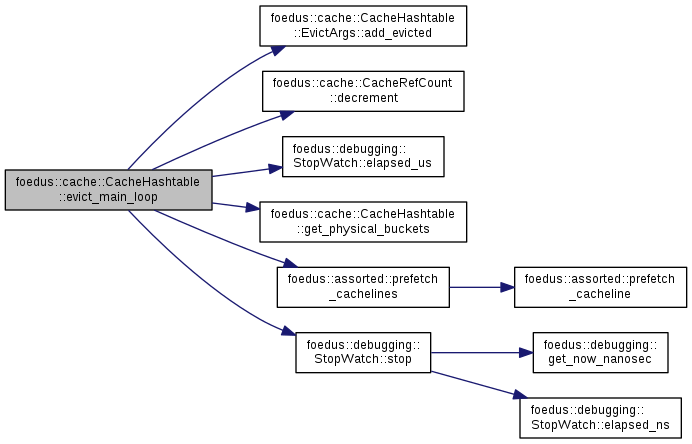

| BucketId | evict_main_loop (EvictArgs *args, BucketId cur, uint16_t loop) |

| void | evict_overflow_loop (EvictArgs *args, uint16_t loop) |

Protected Attributes | |

| const uint16_t | numa_node_ |

| const uint32_t | overflow_buckets_count_ |

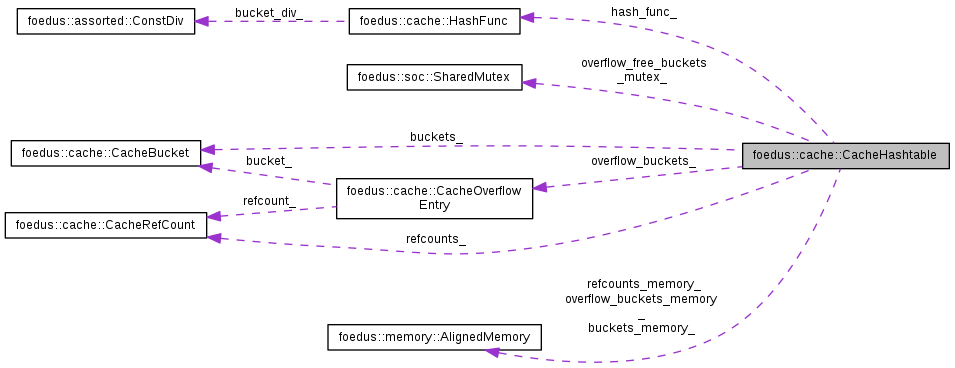

| const HashFunc | hash_func_ |

| memory::AlignedMemory | buckets_memory_ |

| memory::AlignedMemory | refcounts_memory_ |

| memory::AlignedMemory | overflow_buckets_memory_ |

| CacheBucket * | buckets_ |

| CacheRefCount * | refcounts_ |

| CacheOverflowEntry * | overflow_buckets_ |

| OverflowPointer | overflow_buckets_head_ |

| This forms a singly-linked list of active overflow entries. More... | |

| OverflowPointer | overflow_free_buckets_head_ |

| This forms another singly-linked list of free overflow entries. More... | |

| soc::SharedMutex | overflow_free_buckets_mutex_ |

| The mutex to protect free overflow entries. More... | |

| BucketId | clockhand_ |

| We previously stopped eviction here for usual buckets. More... | |

Friends | |

| std::ostream & | operator<< (std::ostream &o, const CacheHashtable &v) |

| Enumerator | |

|---|---|

| kMaxFindBatchSize |

Max size for find_batch() |

Definition at line 235 of file cache_hashtable.hpp.

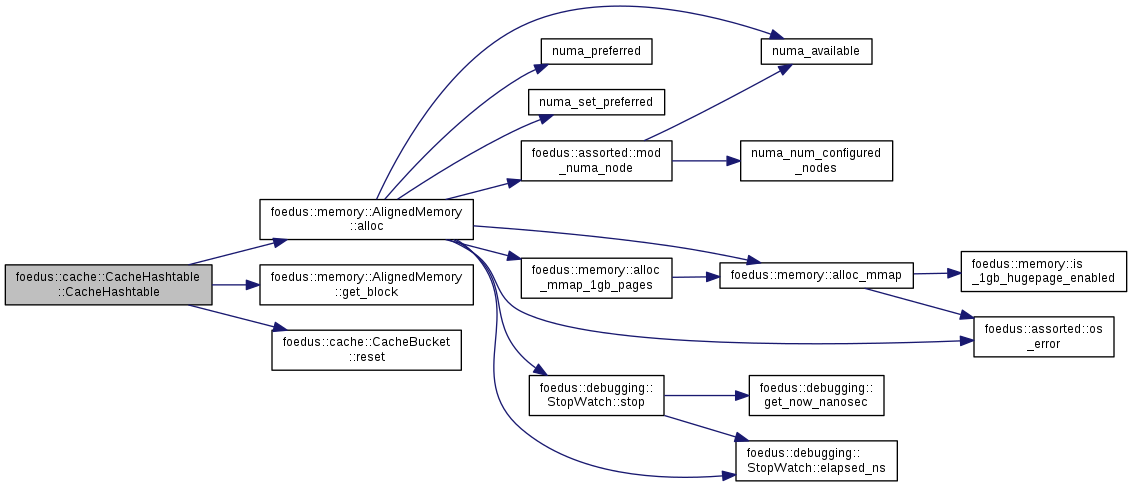

| foedus::cache::CacheHashtable::CacheHashtable | ( | BucketId | physical_buckets, |

| uint16_t | numa_node | ||

| ) |

Definition at line 66 of file cache_hashtable.cpp.

References foedus::memory::AlignedMemory::alloc(), foedus::cache::CacheOverflowEntry::bucket_, buckets_, buckets_memory_, foedus::cache::CacheRefCount::count_, foedus::memory::AlignedMemory::get_block(), foedus::memory::AlignedMemory::kNumaAllocOnnode, foedus::cache::CacheOverflowEntry::next_, overflow_buckets_, overflow_buckets_count_, overflow_buckets_head_, overflow_buckets_memory_, overflow_free_buckets_head_, foedus::cache::CacheOverflowEntry::padding_, foedus::cache::CacheOverflowEntry::refcount_, refcounts_, refcounts_memory_, and foedus::cache::CacheBucket::reset().

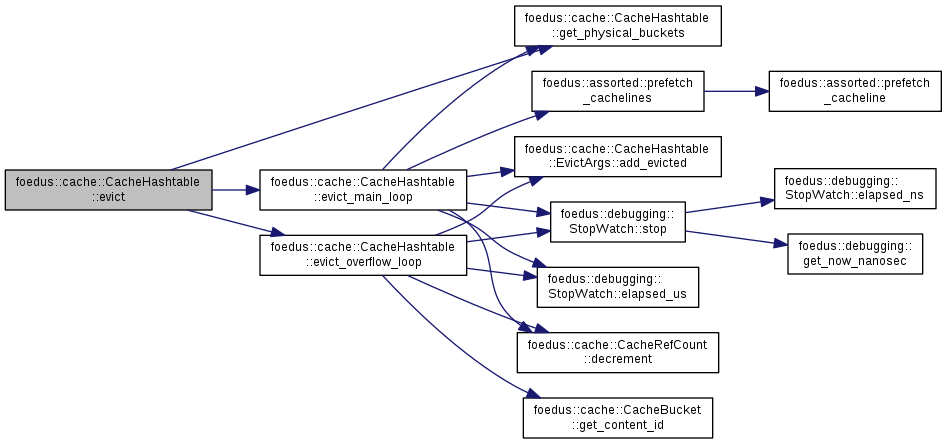

| void foedus::cache::CacheHashtable::evict | ( | CacheHashtable::EvictArgs * | args | ) |

Evict some entries from the hashtable.

Compared to traditional bufferpools, this is much simpler and more scalable thanks to the loose requirements and epoch-based reclamation of the evicted pages. This method only evicts the hashtable entries, so reclaiming the pages pointed from the entries is done by the caller.

Definition at line 171 of file cache_hashtable.cpp.

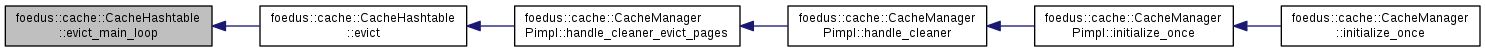

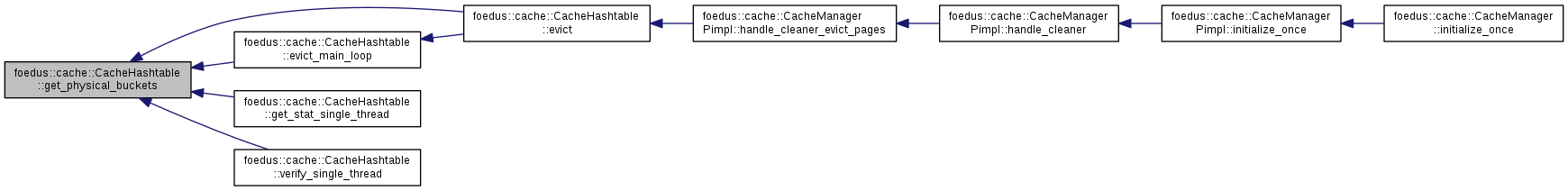

References ASSERT_ND, clockhand_, evict_main_loop(), evict_overflow_loop(), foedus::cache::CacheHashtable::EvictArgs::evicted_count_, get_physical_buckets(), numa_node_, overflow_buckets_head_, and foedus::cache::CacheHashtable::EvictArgs::target_count_.

Referenced by foedus::cache::CacheManagerPimpl::handle_cleaner_evict_pages().

|

protected |

Definition at line 212 of file cache_hashtable.cpp.

References foedus::cache::CacheHashtable::EvictArgs::add_evicted(), ASSERT_ND, ASSUME_ALIGNED, buckets_, clockhand_, foedus::cache::CacheBucket::data_, foedus::cache::CacheRefCount::decrement(), foedus::debugging::StopWatch::elapsed_us(), foedus::cache::CacheHashtable::EvictArgs::evicted_count_, get_physical_buckets(), foedus::assorted::kCachelineSize, LIKELY, numa_node_, foedus::assorted::prefetch_cachelines(), refcounts_, foedus::debugging::StopWatch::stop(), and foedus::cache::CacheHashtable::EvictArgs::target_count_.

Referenced by evict().

|

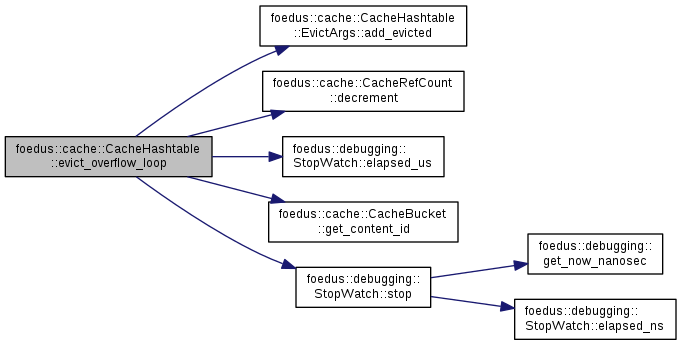

protected |

Definition at line 277 of file cache_hashtable.cpp.

References foedus::cache::CacheHashtable::EvictArgs::add_evicted(), foedus::cache::CacheOverflowEntry::bucket_, foedus::cache::CacheBucket::data_, foedus::cache::CacheRefCount::decrement(), foedus::debugging::StopWatch::elapsed_us(), foedus::cache::CacheBucket::get_content_id(), foedus::cache::CacheOverflowEntry::next_, numa_node_, overflow_buckets_, overflow_buckets_head_, overflow_free_buckets_mutex_, foedus::cache::CacheOverflowEntry::refcount_, and foedus::debugging::StopWatch::stop().

Referenced by evict().

|

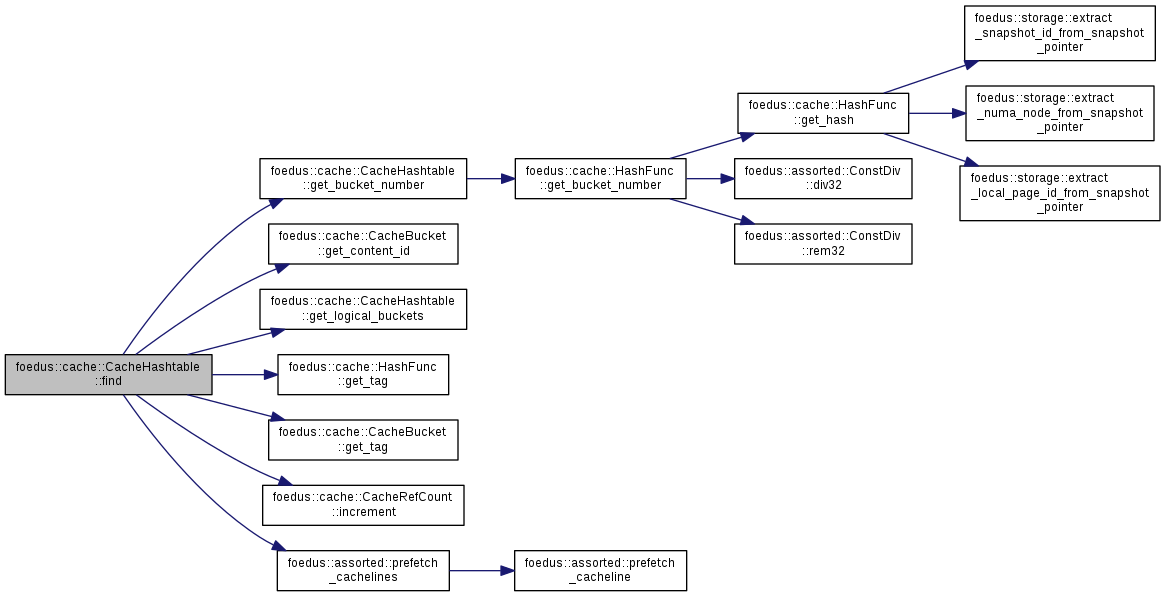

inline |

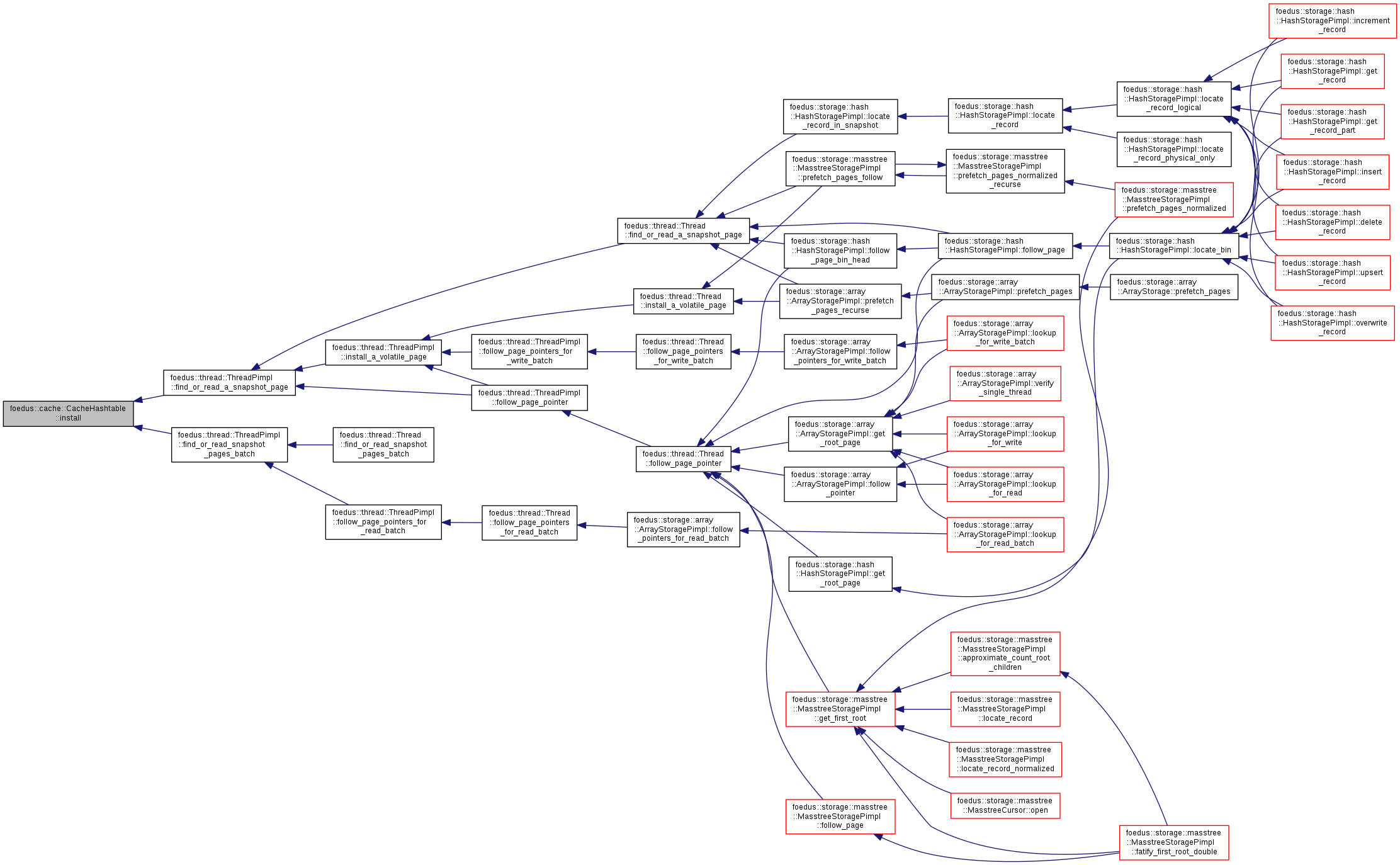

Returns an offset for the given page ID opportunistically.

| [in] | page_id | Page ID to look for |

This doesn't take a lock, so a concurrent thread might have inserted the wanted page concurrrently. It's fine. Then we read the page from snapshot, just wasting a bit. Instead, an entry is removed from the cache gracefully, thus an offset we observed will not become invalid soon (pages are garbage collected with grace period). No precise concurrency control needed.

However, it might (very occasionally) cause a false negative due to the way we verify (each CacheBucket contains only a compressed version of the page Id). So, the caller must check whether the returned ContentId really points to a correct page, and invoke install() in that case. Again, no precise concurrency control required. Even for false positives/negatives, we just get a bit slower. No correctness issue.

Definition at line 415 of file cache_hashtable.hpp.

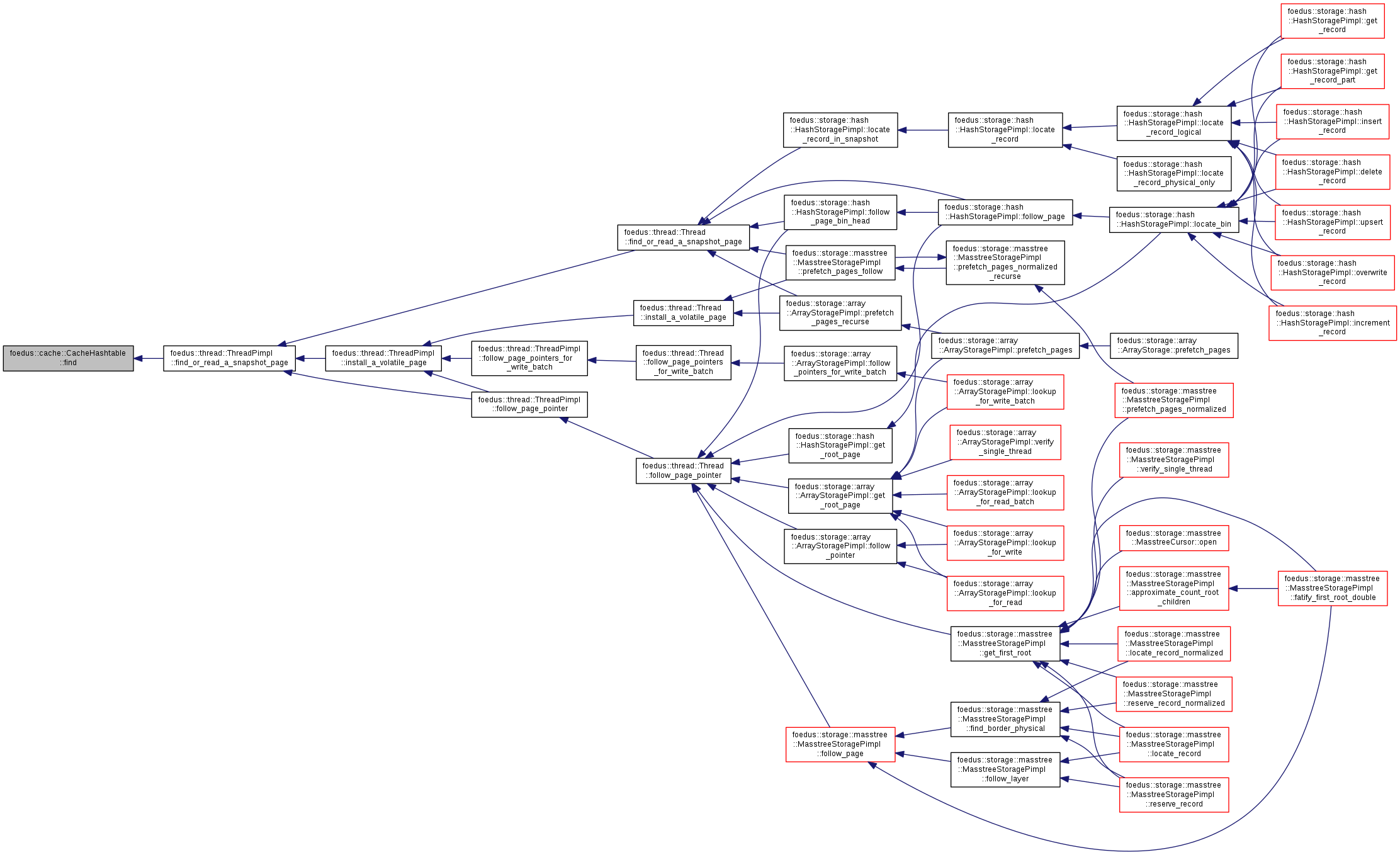

References ASSERT_ND, foedus::cache::CacheOverflowEntry::bucket_, buckets_, get_bucket_number(), foedus::cache::CacheBucket::get_content_id(), get_logical_buckets(), foedus::cache::HashFunc::get_tag(), foedus::cache::CacheBucket::get_tag(), foedus::cache::CacheRefCount::increment(), foedus::cache::kHopNeighbors, foedus::cache::CacheOverflowEntry::next_, overflow_buckets_, overflow_buckets_head_, foedus::assorted::prefetch_cachelines(), foedus::cache::CacheOverflowEntry::refcount_, and refcounts_.

Referenced by foedus::thread::ThreadPimpl::find_or_read_a_snapshot_page().

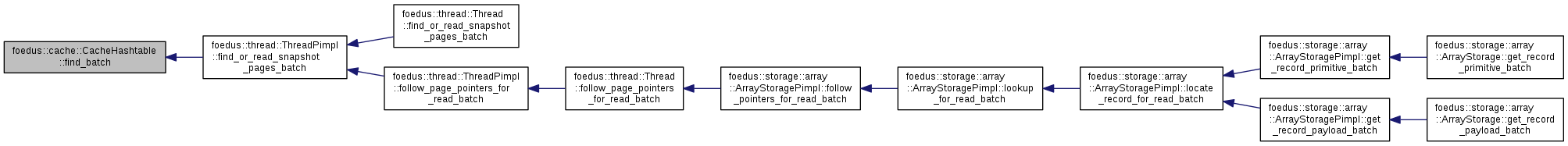

| ErrorCode foedus::cache::CacheHashtable::find_batch | ( | uint16_t | batch_size, |

| const storage::SnapshotPagePointer * | page_ids, | ||

| ContentId * | out | ||

| ) | const |

Batched version of find().

| [in] | batch_size | Batch size. Must be kMaxFindBatchSize or less. |

| [in] | page_ids | Array of Page IDs to look for, size=batch_size |

| [out] | out | Output |

This might perform much faster because of parallel prefetching, SIMD-ized hash calculattion (planned, not implemented yet) etc.

Definition at line 376 of file cache_hashtable.cpp.

References ASSERT_ND, foedus::cache::CacheOverflowEntry::bucket_, buckets_, get_bucket_number(), foedus::cache::CacheBucket::get_content_id(), get_logical_buckets(), foedus::cache::HashFunc::get_tag(), foedus::cache::CacheBucket::get_tag(), foedus::cache::CacheRefCount::increment(), foedus::kErrorCodeInvalidParameter, foedus::kErrorCodeOk, foedus::cache::kHopNeighbors, kMaxFindBatchSize, foedus::cache::CacheOverflowEntry::next_, overflow_buckets_, overflow_buckets_head_, foedus::assorted::prefetch_cachelines(), foedus::cache::CacheOverflowEntry::refcount_, refcounts_, and UNLIKELY.

Referenced by foedus::thread::ThreadPimpl::find_or_read_snapshot_pages_batch().

|

inline |

|

inline |

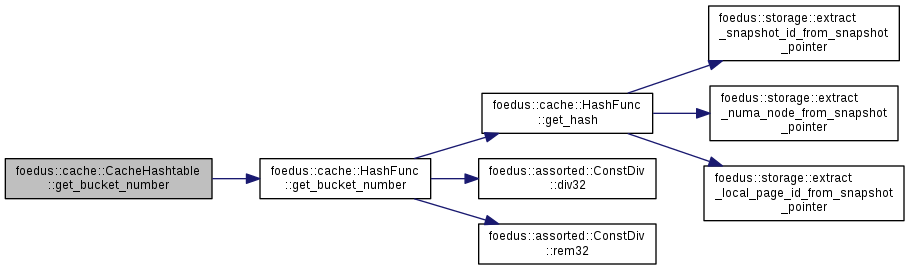

Returns a bucket number the given page ID should belong to.

Definition at line 319 of file cache_hashtable.hpp.

References foedus::cache::HashFunc::get_bucket_number(), and hash_func_.

Referenced by find(), find_batch(), and install().

|

inline |

Definition at line 313 of file cache_hashtable.hpp.

References hash_func_, and foedus::cache::HashFunc::logical_buckets_.

Referenced by find(), and find_batch().

|

inline |

Definition at line 314 of file cache_hashtable.hpp.

References hash_func_, and foedus::cache::HashFunc::physical_buckets_.

Referenced by evict(), evict_main_loop(), get_stat_single_thread(), and verify_single_thread().

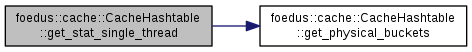

| CacheHashtable::Stat foedus::cache::CacheHashtable::get_stat_single_thread | ( | ) | const |

only for debugging.

you can call this in a race, but the results are a bit inaccurate.

Definition at line 351 of file cache_hashtable.cpp.

References buckets_, get_physical_buckets(), foedus::cache::CacheOverflowEntry::next_, foedus::cache::CacheHashtable::Stat::normal_entries_, overflow_buckets_, overflow_buckets_head_, and foedus::cache::CacheHashtable::Stat::overflow_entries_.

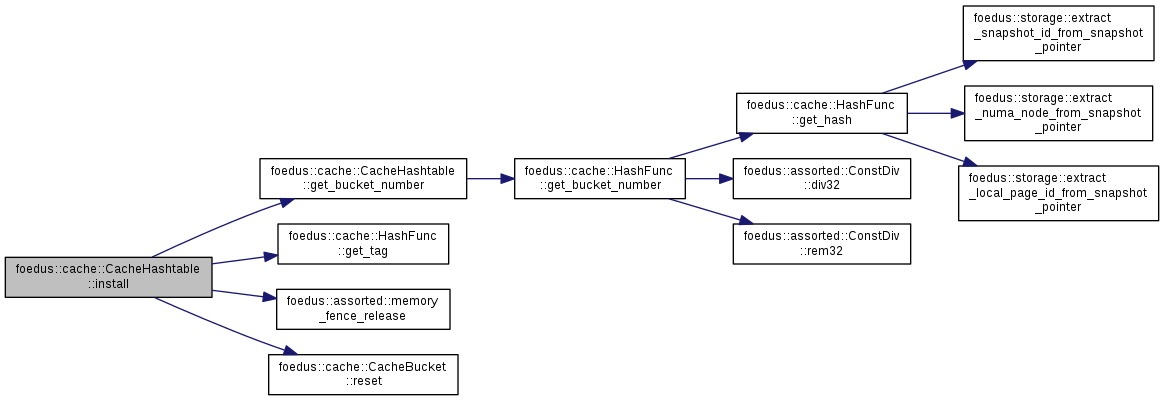

| ErrorCode foedus::cache::CacheHashtable::install | ( | storage::SnapshotPagePointer | page_id, |

| ContentId | content | ||

| ) |

Called when a cached page is not found.

This method installs the new content to this hashtable. We are anyway doing at least 4kb memory copy in this case, so no need for serious optimization.

Definition at line 114 of file cache_hashtable.cpp.

References ASSERT_ND, foedus::cache::CacheOverflowEntry::bucket_, buckets_, foedus::cache::CacheRefCount::count_, get_bucket_number(), foedus::cache::HashFunc::get_tag(), foedus::kErrorCodeCacheTooManyOverflow, foedus::kErrorCodeOk, foedus::cache::kHopNeighbors, foedus::assorted::memory_fence_release(), foedus::cache::CacheOverflowEntry::next_, overflow_buckets_, overflow_buckets_count_, overflow_buckets_head_, overflow_free_buckets_head_, overflow_free_buckets_mutex_, foedus::cache::CacheOverflowEntry::refcount_, refcounts_, and foedus::cache::CacheBucket::reset().

Referenced by foedus::thread::ThreadPimpl::find_or_read_a_snapshot_page(), and foedus::thread::ThreadPimpl::find_or_read_snapshot_pages_batch().

| ErrorStack foedus::cache::CacheHashtable::verify_single_thread | ( | ) | const |

only for debugging.

don't call this in a race

Definition at line 340 of file cache_hashtable.cpp.

References ASSERT_ND, buckets_, get_physical_buckets(), and foedus::kRetOk.

|

friend |

|

protected |

Definition at line 346 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), evict_main_loop(), find(), find_batch(), get_bucket(), get_stat_single_thread(), install(), and verify_single_thread().

|

protected |

Definition at line 341 of file cache_hashtable.hpp.

Referenced by CacheHashtable().

|

protected |

We previously stopped eviction here for usual buckets.

We will resume from this number +1 next time. We will then sequetnially check. The overflow list is fully checked after each wrap-around of this clockhand, so we don't have a dedicated clock hand for overflow list.

Definition at line 378 of file cache_hashtable.hpp.

Referenced by evict(), and evict_main_loop().

|

protected |

Definition at line 340 of file cache_hashtable.hpp.

Referenced by get_bucket_number(), get_logical_buckets(), and get_physical_buckets().

|

protected |

Definition at line 338 of file cache_hashtable.hpp.

Referenced by evict(), evict_main_loop(), and evict_overflow_loop().

|

protected |

Definition at line 350 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), evict_overflow_loop(), find(), find_batch(), get_stat_single_thread(), and install().

|

protected |

Definition at line 339 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), and install().

|

protected |

This forms a singly-linked list of active overflow entries.

This is initially 0 (null), and usually remains 0.

Definition at line 355 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), evict(), evict_overflow_loop(), find(), find_batch(), get_stat_single_thread(), and install().

|

protected |

Definition at line 343 of file cache_hashtable.hpp.

Referenced by CacheHashtable().

|

protected |

This forms another singly-linked list of free overflow entries.

A new entry is consumed from the head (by transactions). A returned entry is added back to the head (by cleaner). This overflow free-list is the only data structure we access with atomic operation/mutex. As a new entry is added to overflow list very occasionally, this should be fine.

Definition at line 364 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), and install().

|

protected |

The mutex to protect free overflow entries.

Actually this is not a shared mutex, but we reuse the class to reduce code.

Definition at line 370 of file cache_hashtable.hpp.

Referenced by evict_overflow_loop(), and install().

|

protected |

Definition at line 347 of file cache_hashtable.hpp.

Referenced by CacheHashtable(), evict_main_loop(), find(), find_batch(), and install().

|

protected |

Definition at line 342 of file cache_hashtable.hpp.

Referenced by CacheHashtable().