
libfoedus-core

FOEDUS Core Library



A specialized, simplified implementation of the MCS locking algorithm for exclusive-only (WW) locks.

This is exactly the same as MCSg. Most places in our codebase now use RW locks, but a few WW-only places remain, such as the page lock (so far).

Definition at line 182 of file xct_mcs_impl.hpp.

#include <xct_mcs_impl.hpp>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | McsWwImpl (ADAPTOR adaptor) | |
| McsBlockIndex | acquire_unconditional (McsWwLock *lock) | [WW] Unconditionally takes an exclusive-only MCS lock on the given lock. |
| McsBlockIndex | acquire_try (McsWwLock *lock) | [WW] Try to take an exclusive lock. |
| McsBlockIndex | initial (McsWwLock *lock) | [WW] This doesn't use any atomic operation. |
| void | release (McsWwLock *lock, McsBlockIndex block_index) | [WW] Unlock an MCS lock acquired by this thread. |
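To make the acquire/release contract concrete, here is a minimal sketch of a textbook pointer-based MCS exclusive lock, shaped like the member list above: `acquire_unconditional()` returns the index of the queue node it used, and `release()` takes that index back. Everything here is an assumption for illustration: `BlockIndex`, `QNode`, `WwLock`, `ThreadContext` and `WwMcsSketch` are hypothetical names, `ThreadContext` merely stands in for whatever the ADAPTOR provides, and FOEDUS's actual `McsWwBlockData` word (which, judging from accessors such as `get_thread_id_relaxed()` and `get_block_relaxed()`, identifies a waiter by thread ID and block index rather than by pointer) is not reproduced.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical sketch of a textbook MCS queue lock; not FOEDUS's encoding.
using BlockIndex = std::uint32_t;  // stand-in for McsBlockIndex

struct QNode {
  std::atomic<QNode*> next{nullptr};  // successor in the queue, if any
  std::atomic<bool> waiting{false};   // true while the owner must spin
};

struct WwLock {
  std::atomic<QNode*> tail{nullptr};  // last queued node; nullptr = unlocked
};

// Hypothetical per-thread context playing the role of the ADAPTOR.
struct ThreadContext {
  static constexpr BlockIndex kMaxBlocks = 16;
  std::array<QNode, kMaxBlocks> blocks;
  BlockIndex used = 0;
  BlockIndex issue() { assert(used < kMaxBlocks); return used++; }
  void reset() { used = 0; }  // only after every lock has been released
};

class WwMcsSketch {
 public:
  explicit WwMcsSketch(ThreadContext* ctx) : ctx_(ctx) {}

  BlockIndex acquire_unconditional(WwLock* lock) {
    BlockIndex idx = ctx_->issue();
    QNode* me = &ctx_->blocks[idx];
    me->next.store(nullptr, std::memory_order_relaxed);
    me->waiting.store(true, std::memory_order_relaxed);
    // Join the queue with a single atomic swap of the tail.
    QNode* pred = lock->tail.exchange(me, std::memory_order_acq_rel);
    if (pred != nullptr) {
      pred->next.store(me, std::memory_order_release);  // link behind predecessor
      while (me->waiting.load(std::memory_order_acquire)) {
        std::this_thread::yield();  // spin only on our own node
      }
    }
    return idx;
  }

  void release(WwLock* lock, BlockIndex idx) {
    QNode* me = &ctx_->blocks[idx];
    QNode* succ = me->next.load(std::memory_order_acquire);
    if (succ == nullptr) {
      QNode* expected = me;
      // No visible successor: try to mark the lock free.
      if (lock->tail.compare_exchange_strong(expected, nullptr,
                                             std::memory_order_acq_rel,
                                             std::memory_order_acquire)) {
        return;
      }
      // A successor swapped into tail but has not linked yet; wait for it.
      while ((succ = me->next.load(std::memory_order_acquire)) == nullptr) {
        std::this_thread::yield();
      }
    }
    succ->waiting.store(false, std::memory_order_release);  // hand over the lock
  }

 private:
  ThreadContext* ctx_;
};

// Usage: several threads increment a shared counter under the lock.
long run_demo(int threads, int iters) {
  WwLock lock;
  long counter = 0;
  std::vector<std::thread> workers;
  for (int t = 0; t < threads; ++t) {
    workers.emplace_back([&] {
      ThreadContext ctx;
      WwMcsSketch mcs(&ctx);
      for (int i = 0; i < iters; ++i) {
        BlockIndex b = mcs.acquire_unconditional(&lock);
        ++counter;  // protected by the lock
        mcs.release(&lock, b);
        ctx.reset();  // node is free again once release() returns
      }
    });
  }
  for (auto& w : workers) w.join();
  return counter;
}
```

Each waiter spins only on its own node, and the releasing thread hands the lock directly to its successor. The only extra wait in `release()` covers the window in which a successor has already swapped itself into the tail but has not yet linked itself behind the holder.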

foedus::xct::McsWwImpl< ADAPTOR >::McsWwImpl (ADAPTOR adaptor) [inline, explicit]

Definition at line 184 of file xct_mcs_impl.hpp.

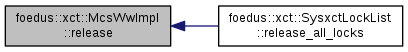

McsBlockIndex foedus::xct::McsWwImpl< ADAPTOR >::acquire_try (McsWwLock *lock)

[WW] Try to take an exclusive lock.

Definition at line 137 of file xct_mcs_impl.cpp.

References foedus::xct::assert_mcs_aligned(), ASSERT_ND, foedus::xct::McsWwBlock::clear_successor_release(), foedus::xct::McsWwBlockData::get_thread_id_relaxed(), foedus::xct::McsWwBlockData::is_guest_relaxed(), foedus::xct::McsWwLock::is_locked(), foedus::xct::McsWwBlockData::is_valid_relaxed(), foedus::xct::McsWwLock::tail_, and foedus::xct::McsWwBlockData::word_.

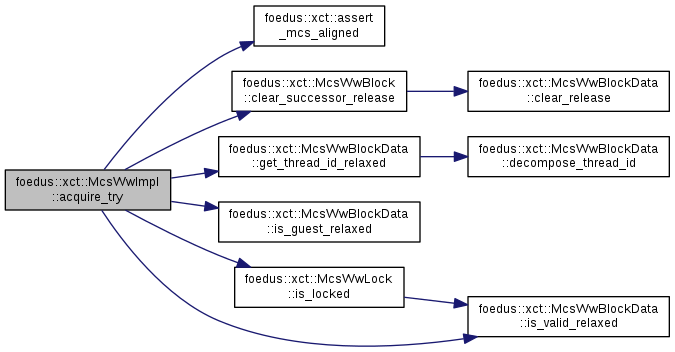
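A try-lock on an MCS queue can be sketched as a single compare-and-swap of the tail from empty to the caller's node: if anyone holds or waits for the lock, the call fails without joining the queue. This is a hypothetical sketch; `QNode`, `WwLock` and this `acquire_try` are illustrative names, and failure is reported as a `bool`. How FOEDUS's `acquire_try()` signals failure through its `McsBlockIndex` return value is not stated in this section.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical textbook MCS types, not FOEDUS's McsWwLock/McsWwBlock.
struct QNode {
  std::atomic<QNode*> next{nullptr};  // a later waiter may link here
  std::atomic<bool> waiting{false};
};

struct WwLock {
  std::atomic<QNode*> tail{nullptr};  // nullptr = unlocked, no waiters
};

// Succeeds only if the queue is empty; on failure the caller never enqueued.
bool acquire_try(WwLock* lock, QNode* me) {
  me->next.store(nullptr, std::memory_order_relaxed);
  QNode* expected = nullptr;
  // acq_rel on success: acquire the previous holder's writes and publish
  // our node's initialization to threads that later swap into the tail.
  return lock->tail.compare_exchange_strong(expected, me,
                                            std::memory_order_acq_rel,
                                            std::memory_order_relaxed);
}
```

A CAS is used here instead of the swap an unconditional acquire would use because a swap always enqueues the caller, and leaving an MCS queue after joining it is not a simple operation.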

McsBlockIndex foedus::xct::McsWwImpl< ADAPTOR >::acquire_unconditional (McsWwLock *mcs_lock)

[WW] Unconditionally takes an exclusive-only MCS lock on the given lock.

WW-lock implementations (all simple versions). These do not depend on RW_BLOCK, so they are primary templates without partial specialization, and we don't need any trick.

Definition at line 53 of file xct_mcs_impl.cpp.

References foedus::xct::assert_mcs_aligned(), ASSERT_ND, foedus::xct::McsWwBlock::clear_successor_release(), foedus::xct::McsWwBlockData::get_block_relaxed(), foedus::xct::McsWwBlockData::get_thread_id_relaxed(), foedus::xct::McsWwBlockData::is_guest_relaxed(), foedus::xct::McsWwLock::is_locked(), foedus::xct::McsWwBlockData::is_valid_relaxed(), foedus::xct::kMcsGuestId, foedus::xct::spin_until(), foedus::xct::McsWwLock::tail_, UNLIKELY, and foedus::xct::McsWwBlockData::word_.

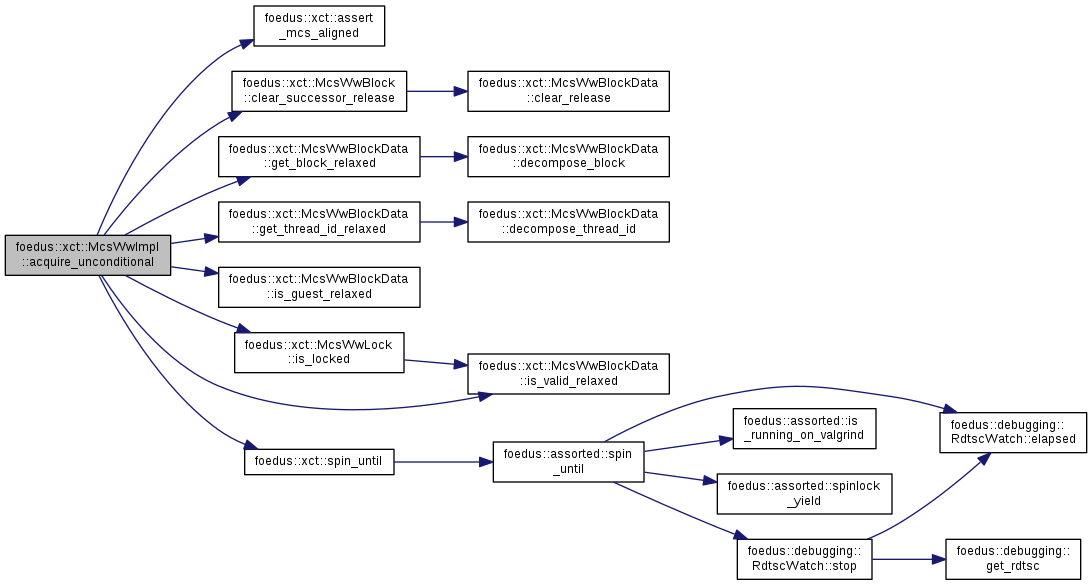

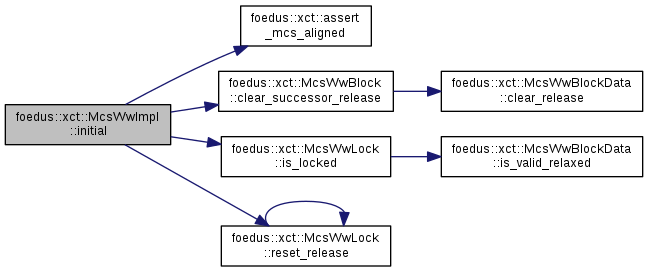
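The remark about primary templates concerns C++ template mechanics. The sketch below shows one plausible reading, using hypothetical names (`DemoAdaptor`, `SimpleBlock`, `ExtendedBlock`, `RwImpl`, `WwImpl`): a class whose behavior depends on the block type is partially specialized per block type (member functions cannot be partially specialized on their own), whereas a class that depends only on ADAPTOR is a single primary template whose members can be defined out of line and explicitly instantiated, for example in a .cpp file. Which trick the RW versions actually need is not described in this section.

```cpp
#include <cassert>
#include <string>

// Hypothetical adaptor and block types, for illustration only.
struct DemoAdaptor { int id = 7; };
struct SimpleBlock {};
struct ExtendedBlock {};

// RW-style: behavior depends on RW_BLOCK, so each block type gets its own
// partial specialization of the whole class template.
template <typename ADAPTOR, typename RW_BLOCK> class RwImpl;

template <typename ADAPTOR>
class RwImpl<ADAPTOR, SimpleBlock> {
 public:
  std::string kind() const { return "simple"; }
};

template <typename ADAPTOR>
class RwImpl<ADAPTOR, ExtendedBlock> {
 public:
  std::string kind() const { return "extended"; }
};

// WW-style: depends only on ADAPTOR, so one primary template suffices.
template <typename ADAPTOR>
class WwImpl {
 public:
  explicit WwImpl(ADAPTOR adaptor) : adaptor_(adaptor) {}
  int owner() const;  // defined out of line below
 private:
  ADAPTOR adaptor_;
};

// Out-of-line member definition of the primary template.
template <typename ADAPTOR>
int WwImpl<ADAPTOR>::owner() const { return adaptor_.id; }

// Explicit instantiation definition: other translation units that only see
// the class declaration can still link against these members.
template class WwImpl<DemoAdaptor>;
```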

McsBlockIndex foedus::xct::McsWwImpl< ADAPTOR >::initial (McsWwLock *lock)

[WW] This doesn't use any atomic operation.

Only allowed when there is no race. TASK(Hideaki): This will be renamed to mcs_non_racy_lock(); "initial_lock" is ambiguous.

Definition at line 191 of file xct_mcs_impl.cpp.

References foedus::xct::assert_mcs_aligned(), ASSERT_ND, foedus::xct::McsWwBlock::clear_successor_release(), foedus::xct::McsWwLock::is_locked(), and foedus::xct::McsWwLock::reset_release().

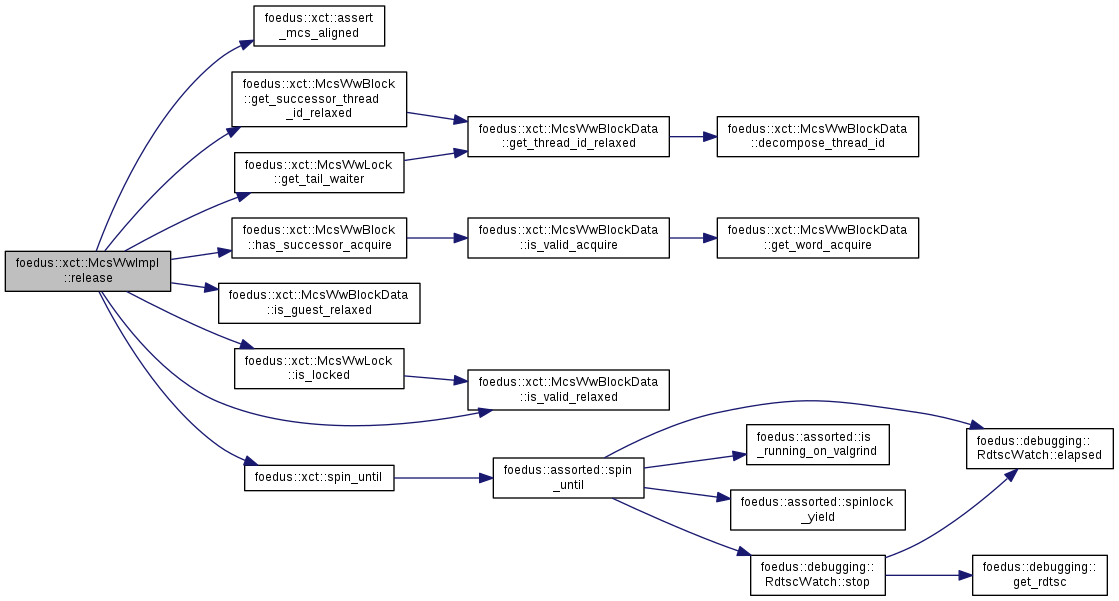
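The no-race precondition can be illustrated with a sketch in which a relaxed store to the tail replaces the atomic exchange; such a store typically compiles to an ordinary store rather than a locked read-modify-write instruction. The names are hypothetical (this `is_locked()` only mimics the role of `McsWwLock::is_locked()`), and the sketch assumes the lock lives in an object that no other thread can reach yet.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical textbook MCS types, not FOEDUS's McsWwLock/McsWwBlock.
struct QNode {
  std::atomic<QNode*> next{nullptr};
  std::atomic<bool> waiting{false};
};

struct WwLock {
  std::atomic<QNode*> tail{nullptr};
  bool is_locked() const {
    return tail.load(std::memory_order_relaxed) != nullptr;
  }
};

// Non-racy lock: the caller guarantees no other thread can see `lock`,
// so plain (relaxed) stores replace the exchange/CAS of the racy paths.
void initial_lock(WwLock* lock, QNode* me) {
  assert(!lock->is_locked());  // precondition: nobody holds or waits on it
  me->next.store(nullptr, std::memory_order_relaxed);
  me->waiting.store(false, std::memory_order_relaxed);
  lock->tail.store(me, std::memory_order_relaxed);  // no read-modify-write
}
```

If the object is later shared, it must be published with release semantics (or another synchronizing operation), as with any unsynchronized initialization.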

void foedus::xct::McsWwImpl< ADAPTOR >::release (McsWwLock *lock, McsBlockIndex block_index)

[WW] Unlock an MCS lock acquired by this thread.

Definition at line 212 of file xct_mcs_impl.cpp.

References foedus::xct::assert_mcs_aligned(), ASSERT_ND, foedus::xct::McsWwBlock::get_successor_thread_id_relaxed(), foedus::xct::McsWwLock::get_tail_waiter(), foedus::xct::McsWwBlock::has_successor_acquire(), foedus::xct::McsWwBlockData::is_guest_relaxed(), foedus::xct::McsWwLock::is_locked(), foedus::xct::McsWwBlockData::is_valid_relaxed(), foedus::xct::spin_until(), foedus::xct::McsWwLock::tail_, UNLIKELY, and foedus::xct::McsWwBlockData::word_.

Referenced by foedus::xct::SysxctLockList::release_all_locks().