libfoedus-core

FOEDUS Core Library

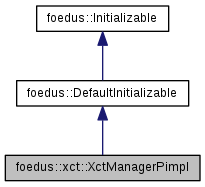

Pimpl object of XctManager.

A private pimpl object for XctManager. Do not include this header from a client program unless you know what you are doing.
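As a rough illustration of why client programs should not need this header, here is a minimal sketch of the pimpl idiom. The class names `Manager` and `ManagerPimpl`, the `begin()` method, and the use of `std::unique_ptr` are hypothetical choices for this example and are not taken from the FOEDUS sources.

```cpp
#include <cassert>
#include <memory>

// ---- What a public header would expose (hypothetical names) ----
class ManagerPimpl;  // forward declaration only; clients never see the body

class Manager {
 public:
  Manager();
  ~Manager();   // defined where ManagerPimpl is a complete type
  int begin();  // forwards to the pimpl object
 private:
  std::unique_ptr<ManagerPimpl> pimpl_;
};

// ---- What the private pimpl header would contain ----
class ManagerPimpl {
 public:
  int begin() { return ++begun_; }
 private:
  int begun_ = 0;
};

// ---- What the implementation file would contain ----
Manager::Manager() : pimpl_(new ManagerPimpl()) {}
Manager::~Manager() = default;
int Manager::begin() {
  assert(pimpl_ != nullptr);
  return pimpl_->begin();
}
```

Only the implementation file needs the private header, so changes to the pimpl class do not force client code to recompile.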

Definition at line 100 of file xct_manager_pimpl.hpp.

#include <xct_manager_pimpl.hpp>

Public Attributes

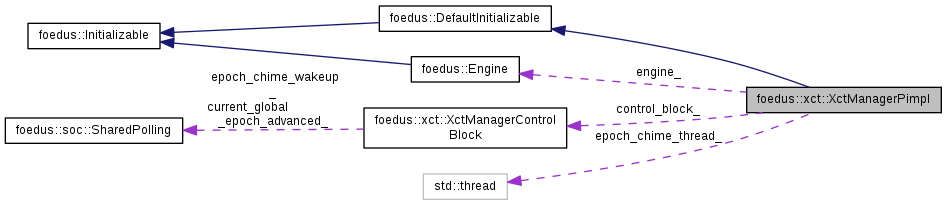

| Type | Name | Description |
| --- | --- | --- |
| Engine *const | engine_ | |
| XctManagerControlBlock * | control_block_ | |
| std::thread | epoch_chime_thread_ | This thread keeps advancing the current_global_epoch_. |

| delete |

| inline explicit |

Definition at line 103 of file xct_manager_pimpl.hpp.

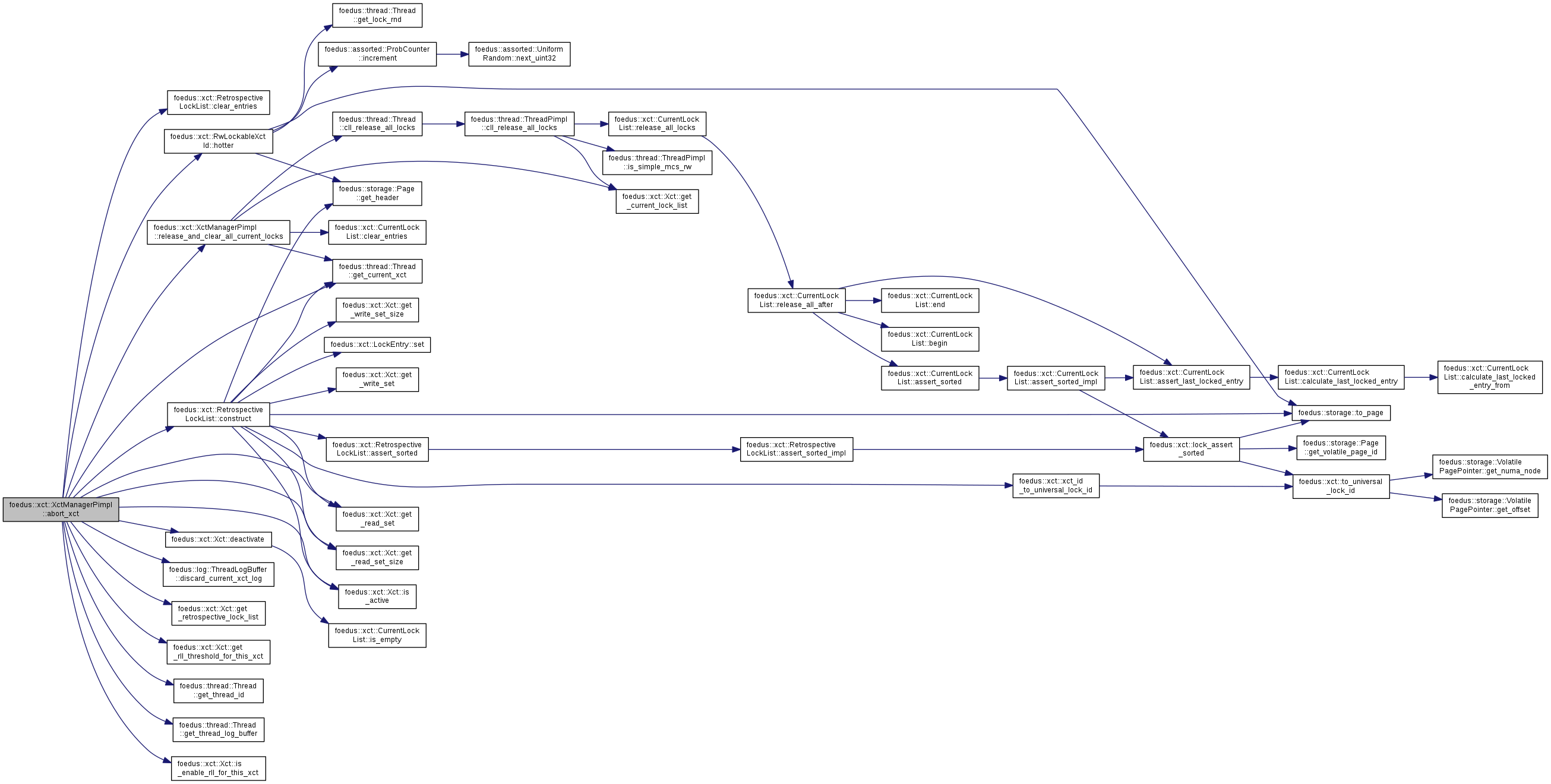

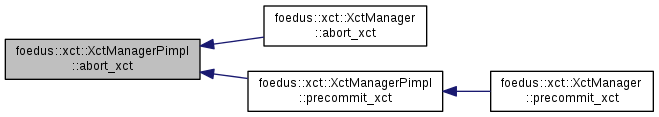

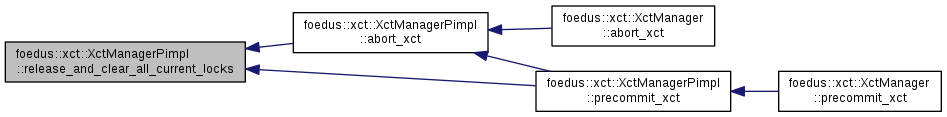

| ErrorCode foedus::xct::XctManagerPimpl::abort_xct | ( | thread::Thread * | context | ) |

Definition at line 954 of file xct_manager_pimpl.cpp.

References foedus::xct::RetrospectiveLockList::clear_entries(), foedus::xct::RetrospectiveLockList::construct(), foedus::xct::Xct::deactivate(), foedus::log::ThreadLogBuffer::discard_current_xct_log(), foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_read_set(), foedus::xct::Xct::get_read_set_size(), foedus::xct::Xct::get_retrospective_lock_list(), foedus::xct::Xct::get_rll_threshold_for_this_xct(), foedus::thread::Thread::get_thread_id(), foedus::thread::Thread::get_thread_log_buffer(), foedus::xct::RwLockableXctId::hotter(), foedus::xct::Xct::is_active(), foedus::xct::Xct::is_enable_rll_for_this_xct(), foedus::kErrorCodeOk, foedus::kErrorCodeXctNoXct, foedus::xct::ReadXctAccess::observed_owner_id_, foedus::xct::RecordXctAccess::owner_id_address_, release_and_clear_all_current_locks(), and foedus::xct::RwLockableXctId::xct_id_.

Referenced by foedus::xct::XctManager::abort_xct(), and precommit_xct().

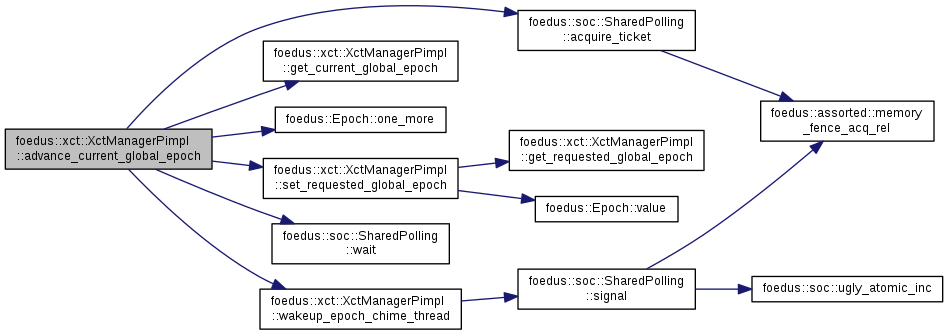

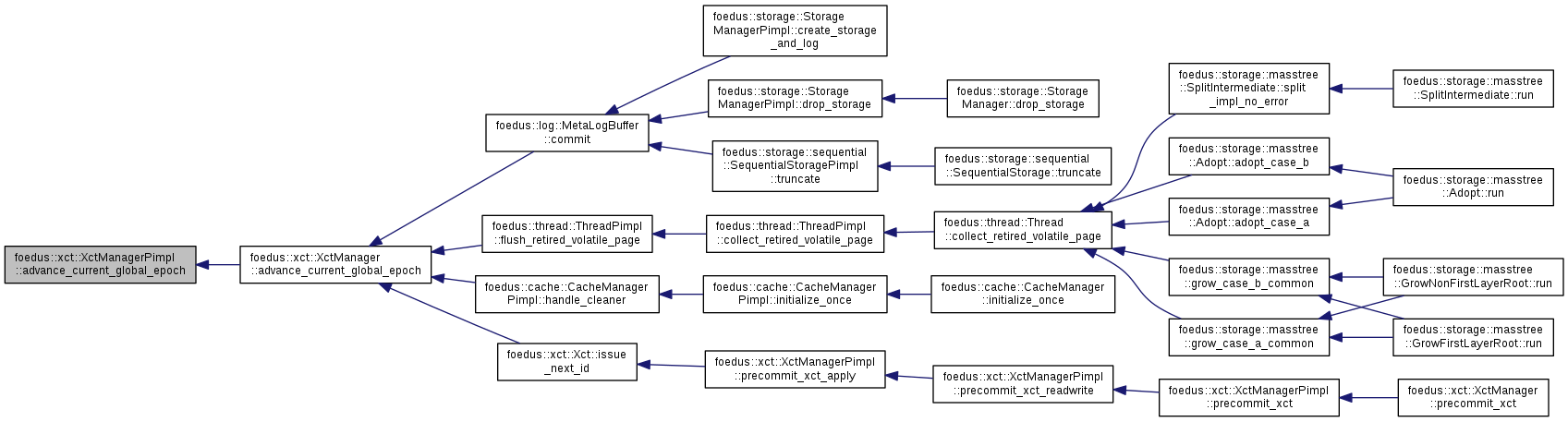

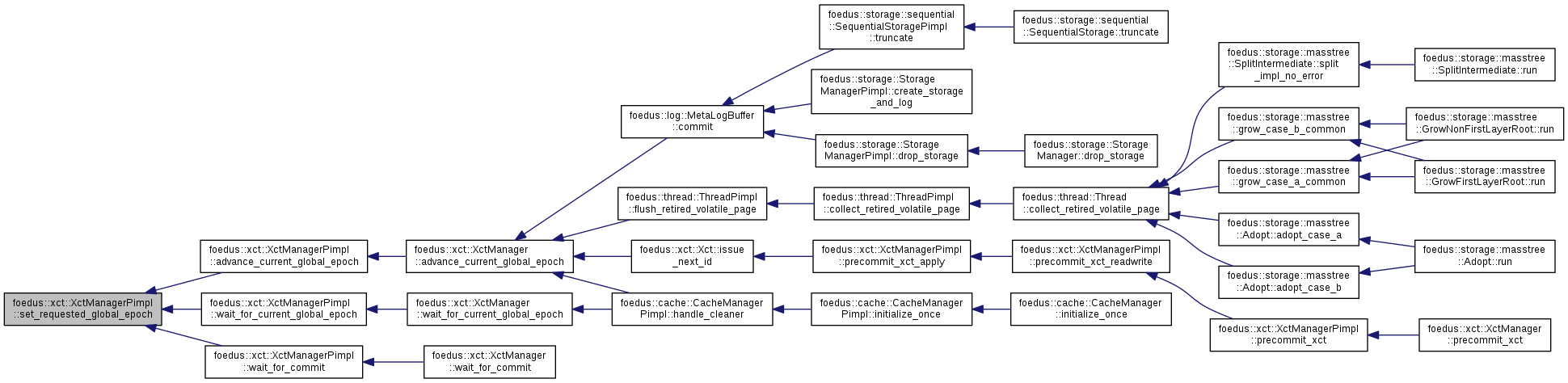

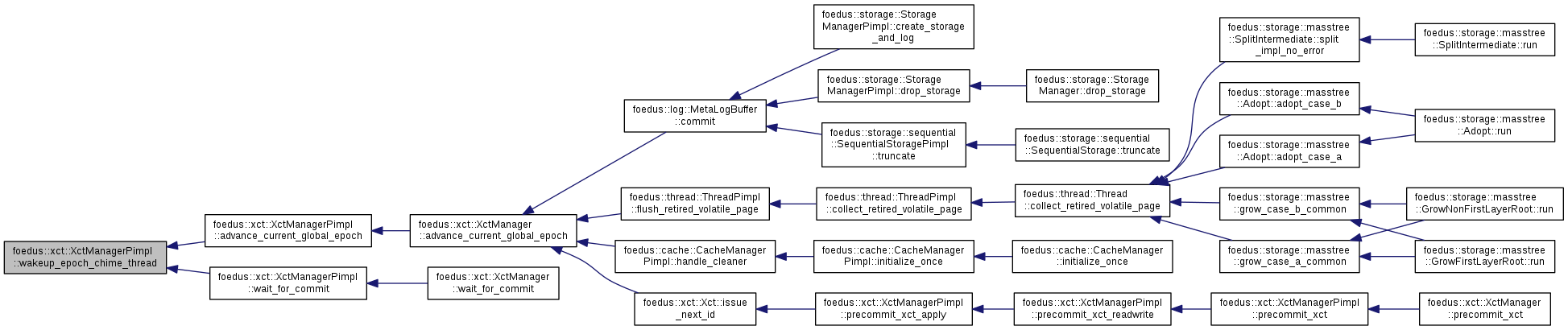

| void foedus::xct::XctManagerPimpl::advance_current_global_epoch | ( | ) |

Definition at line 255 of file xct_manager_pimpl.cpp.

References foedus::soc::SharedPolling::acquire_ticket(), control_block_, foedus::xct::XctManagerControlBlock::current_global_epoch_advanced_, get_current_global_epoch(), foedus::Epoch::one_more(), set_requested_global_epoch(), foedus::soc::SharedPolling::wait(), and wakeup_epoch_chime_thread().

Referenced by foedus::xct::XctManager::advance_current_global_epoch().

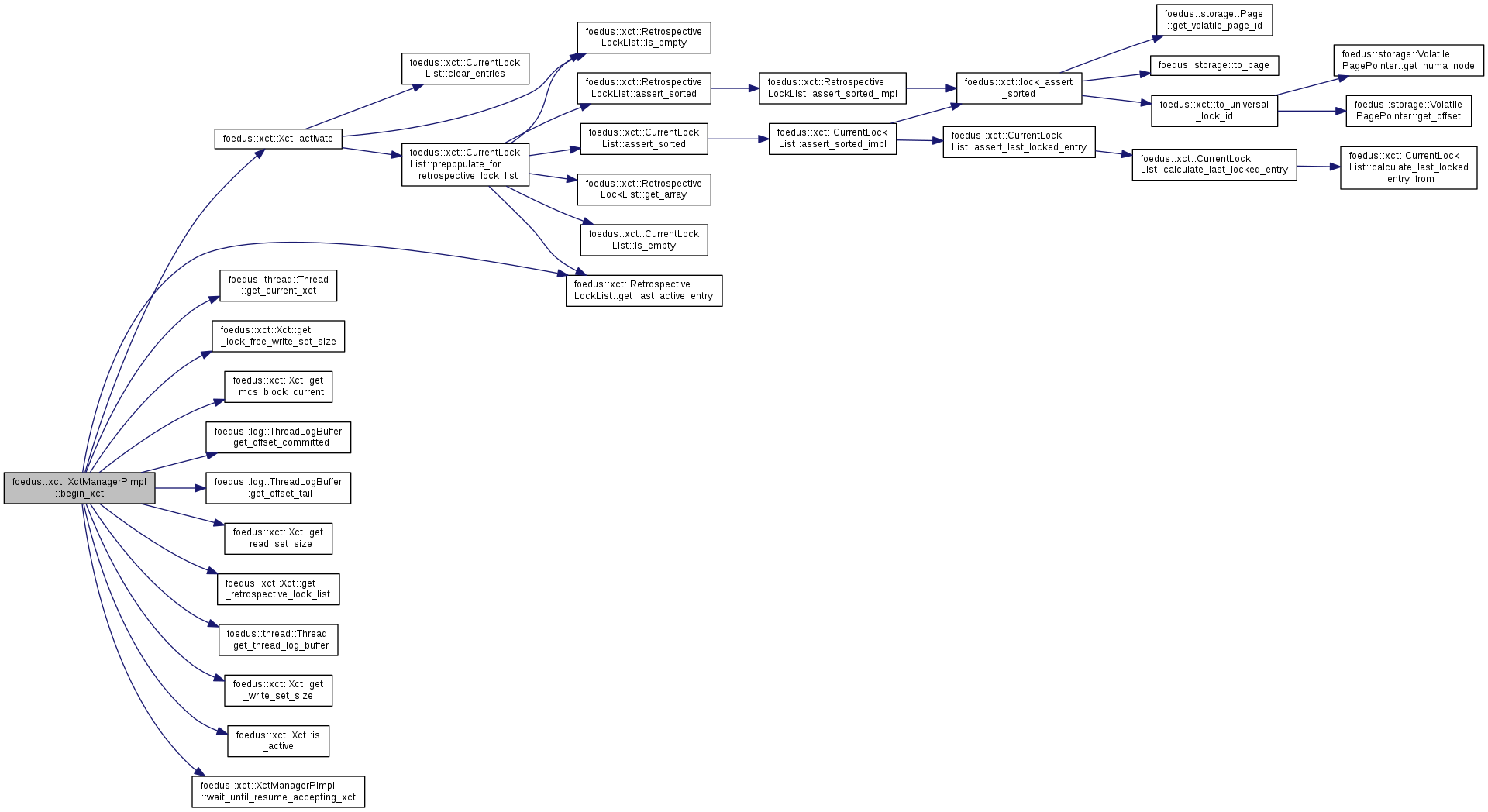

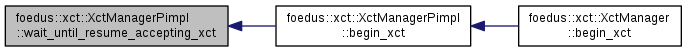

| ErrorCode foedus::xct::XctManagerPimpl::begin_xct | ( | thread::Thread * | context, |

| IsolationLevel | isolation_level | ||

| ) |

Methods related to user transactions.

Definition at line 307 of file xct_manager_pimpl.cpp.

References foedus::xct::Xct::activate(), ASSERT_ND, control_block_, foedus::thread::Thread::get_current_xct(), foedus::xct::RetrospectiveLockList::get_last_active_entry(), foedus::xct::Xct::get_lock_free_write_set_size(), foedus::xct::Xct::get_mcs_block_current(), foedus::log::ThreadLogBuffer::get_offset_committed(), foedus::log::ThreadLogBuffer::get_offset_tail(), foedus::xct::Xct::get_read_set_size(), foedus::xct::Xct::get_retrospective_lock_list(), foedus::thread::Thread::get_thread_log_buffer(), foedus::xct::Xct::get_write_set_size(), foedus::xct::Xct::is_active(), foedus::kErrorCodeOk, foedus::kErrorCodeXctAlreadyRunning, foedus::xct::XctManagerControlBlock::new_transaction_paused_, UNLIKELY, and wait_until_resume_accepting_xct().

Referenced by foedus::xct::XctManager::begin_xct().

| foedus::xct::XctManagerPimpl::get_current_global_epoch() | inline |

Definition at line 107 of file xct_manager_pimpl.hpp.

References control_block_, and foedus::xct::XctManagerControlBlock::current_global_epoch_.

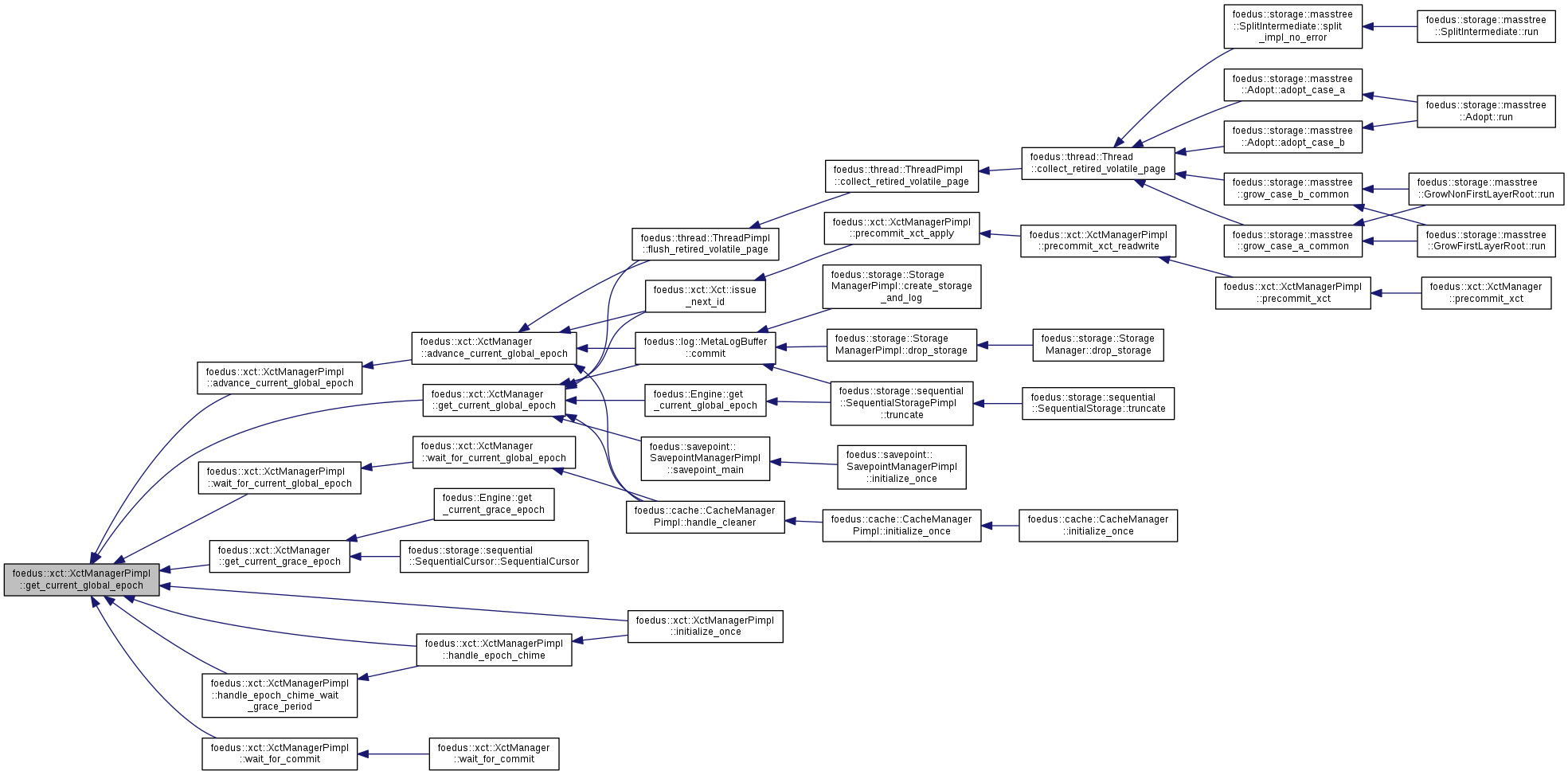

Referenced by advance_current_global_epoch(), foedus::xct::XctManager::get_current_global_epoch(), foedus::xct::XctManager::get_current_grace_epoch(), handle_epoch_chime(), handle_epoch_chime_wait_grace_period(), initialize_once(), wait_for_commit(), and wait_for_current_global_epoch().

| foedus::xct::XctManagerPimpl::get_current_global_epoch_weak() | inline |

Definition at line 113 of file xct_manager_pimpl.hpp.

References control_block_, and foedus::xct::XctManagerControlBlock::current_global_epoch_.

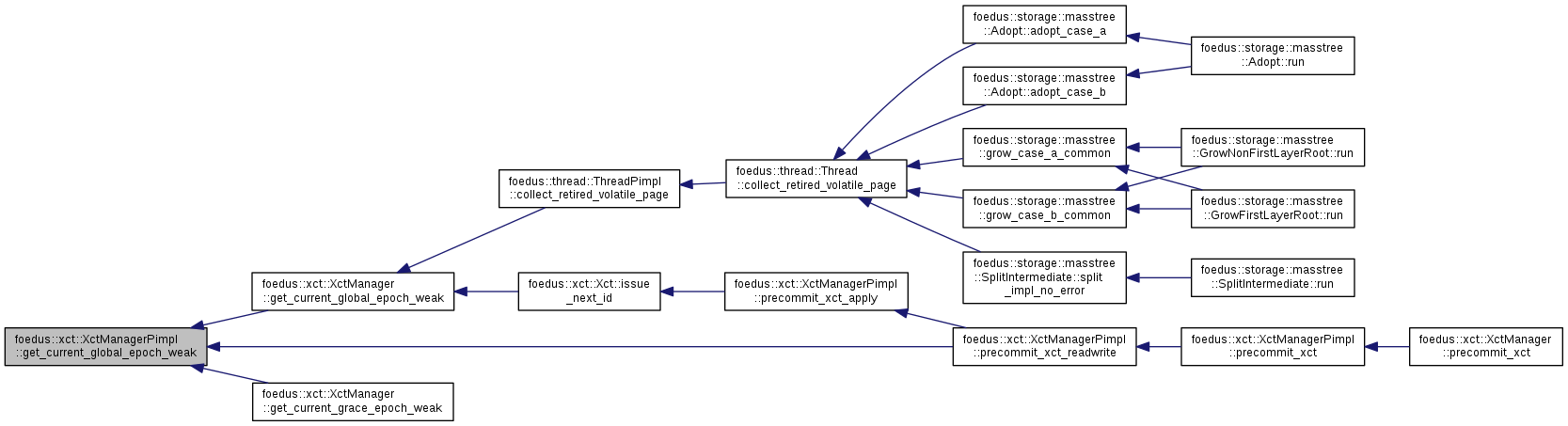

Referenced by foedus::xct::XctManager::get_current_global_epoch_weak(), foedus::xct::XctManager::get_current_grace_epoch_weak(), and precommit_xct_readwrite().

| foedus::xct::XctManagerPimpl::get_requested_global_epoch() | inline |

Definition at line 110 of file xct_manager_pimpl.hpp.

References control_block_, and foedus::xct::XctManagerControlBlock::requested_global_epoch_.

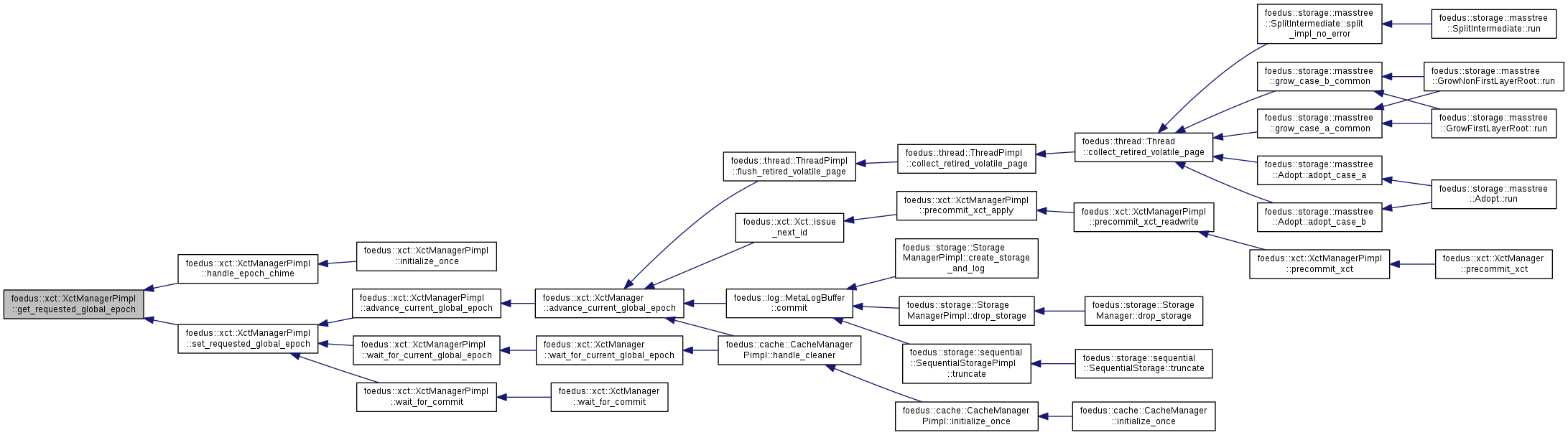

Referenced by handle_epoch_chime(), and set_requested_global_epoch().

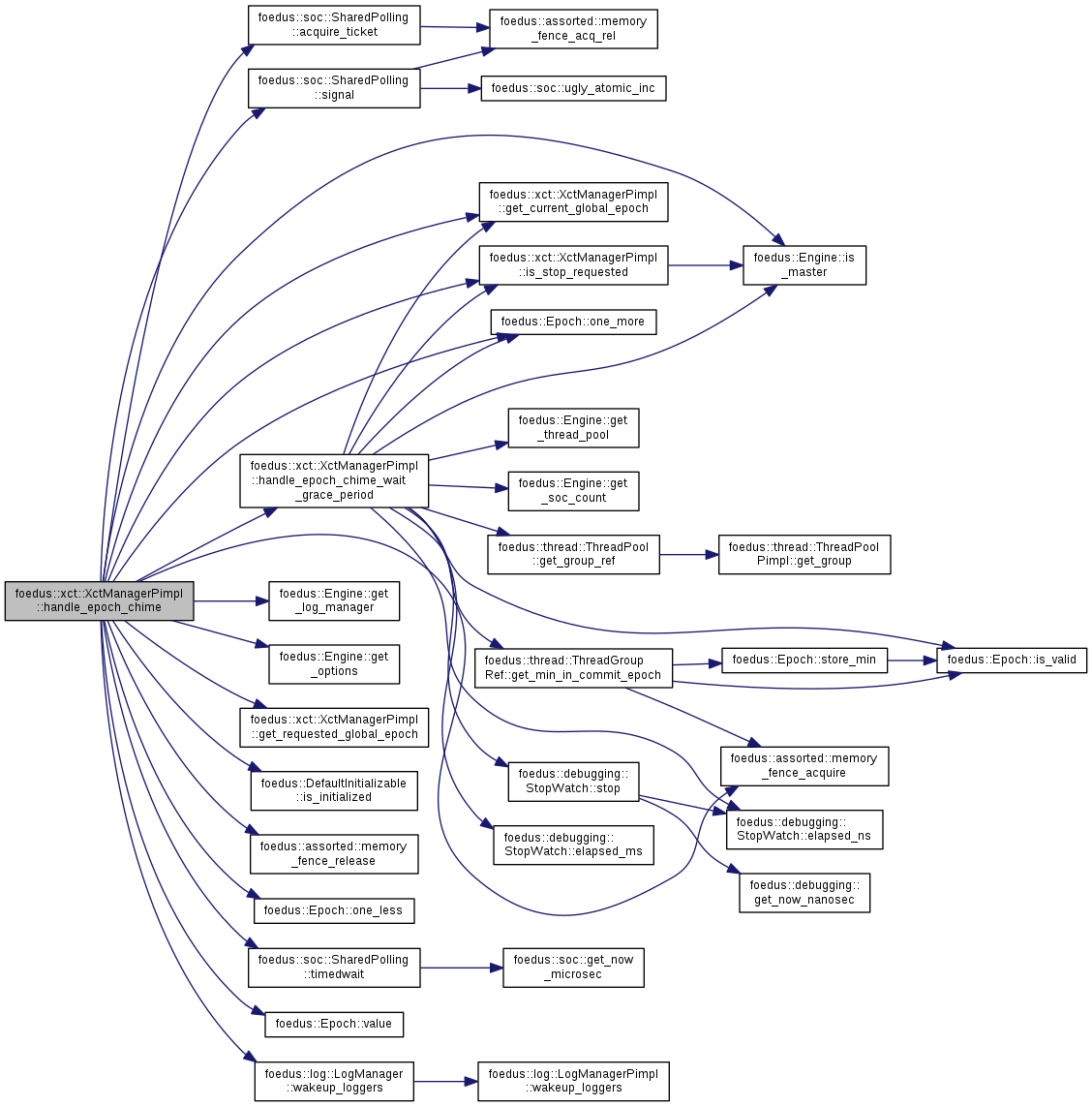

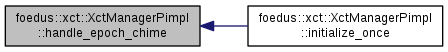

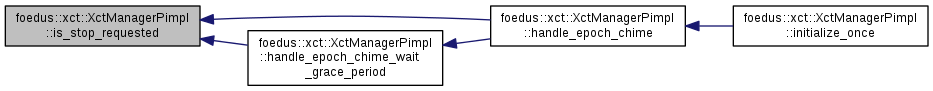

| void foedus::xct::XctManagerPimpl::handle_epoch_chime | ( | ) |

Main routine for epoch_chime_thread_.

Epoch-chime related methods.

This method keeps advancing the global epoch at the interval configured in XctOptions (epoch_advance_interval_ms_). It exits when this object's uninitialize() is called.

Definition at line 135 of file xct_manager_pimpl.cpp.

References foedus::soc::SharedPolling::acquire_ticket(), ASSERT_ND, control_block_, foedus::xct::XctManagerControlBlock::current_global_epoch_, foedus::xct::XctManagerControlBlock::current_global_epoch_advanced_, engine_, foedus::xct::XctOptions::epoch_advance_interval_ms_, foedus::xct::XctManagerControlBlock::epoch_chime_wakeup_, get_current_global_epoch(), foedus::Engine::get_log_manager(), foedus::Engine::get_options(), get_requested_global_epoch(), handle_epoch_chime_wait_grace_period(), foedus::DefaultInitializable::is_initialized(), foedus::Engine::is_master(), is_stop_requested(), foedus::soc::kDefaultPollingSpins, foedus::assorted::memory_fence_acquire(), foedus::assorted::memory_fence_release(), foedus::Epoch::one_less(), foedus::Epoch::one_more(), foedus::soc::SharedPolling::signal(), SPINLOCK_WHILE, foedus::soc::SharedPolling::timedwait(), foedus::Epoch::value(), foedus::log::LogManager::wakeup_loggers(), and foedus::EngineOptions::xct_.

Referenced by initialize_once().
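The chime loop described above can be pictured with the following simplified sketch. It is not the FOEDUS implementation: the class `EpochChime`, its members, and the use of `std::condition_variable` in place of `soc::SharedPolling` are assumptions made for illustration, and the grace-period wait and logger wakeup are omitted.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <thread>

// Hypothetical stand-in for the epoch chime thread and its control state.
class EpochChime {
 public:
  explicit EpochChime(std::chrono::milliseconds interval)
      : interval_(interval), thread_([this] { run(); }) {
    assert(thread_.joinable());  // the chime thread is running from here on
  }
  ~EpochChime() { stop(); }

  uint32_t current_epoch() const { return epoch_.load(std::memory_order_acquire); }

  // Wakes the chime thread before the interval elapses.
  void request_advance() {
    { std::lock_guard<std::mutex> guard(mutex_); advance_requested_ = true; }
    wakeup_.notify_one();
  }

  // Asks the loop to exit and joins the thread; safe to call more than once.
  void stop() {
    { std::lock_guard<std::mutex> guard(mutex_); stop_requested_ = true; }
    wakeup_.notify_one();
    if (thread_.joinable()) thread_.join();
  }

 private:
  void run() {
    std::unique_lock<std::mutex> lock(mutex_);
    while (!stop_requested_) {
      // Sleep for one interval unless someone requests an advance or a stop.
      wakeup_.wait_for(lock, interval_,
                       [this] { return stop_requested_ || advance_requested_; });
      if (stop_requested_) break;
      advance_requested_ = false;
      epoch_.fetch_add(1, std::memory_order_release);
    }
  }

  const std::chrono::milliseconds interval_;
  std::atomic<uint32_t> epoch_{1};
  std::mutex mutex_;
  std::condition_variable wakeup_;
  bool advance_requested_ = false;
  bool stop_requested_ = false;
  std::thread thread_;  // declared last so every other member exists before it starts
};
```

In this sketch a caller that needs a newer epoch calls `request_advance()` and polls `current_epoch()`, loosely mirroring how wakeup_epoch_chime_thread() is used together with get_current_global_epoch().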

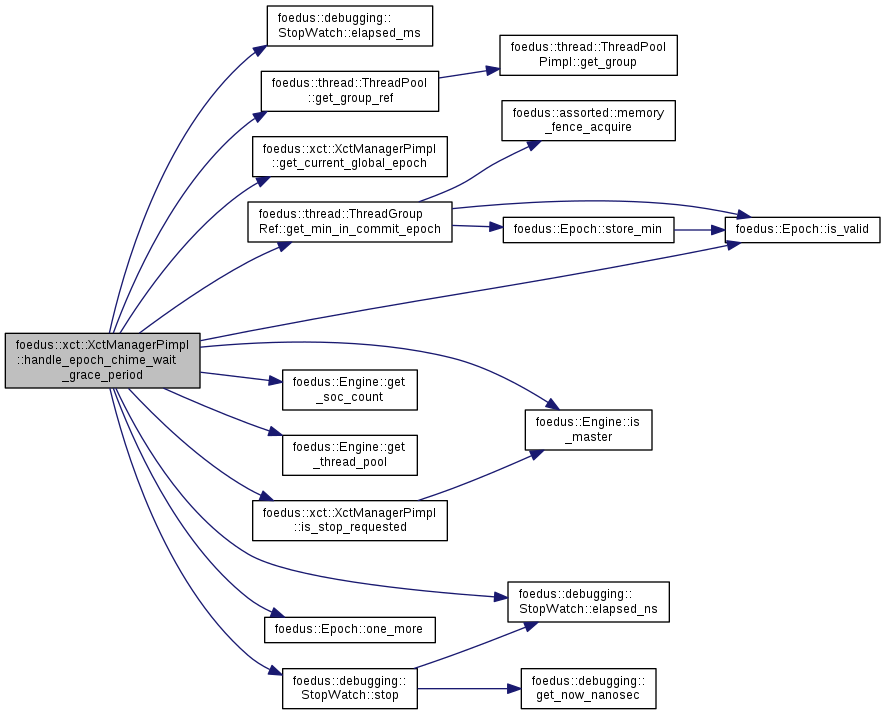

| void foedus::xct::XctManagerPimpl::handle_epoch_chime_wait_grace_period | ( | Epoch | grace_epoch | ) |

Makes sure all worker threads will commit with an epoch larger than grace_epoch.

Definition at line 186 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::debugging::StopWatch::elapsed_ms(), foedus::debugging::StopWatch::elapsed_ns(), engine_, get_current_global_epoch(), foedus::thread::ThreadPool::get_group_ref(), foedus::thread::ThreadGroupRef::get_min_in_commit_epoch(), foedus::Engine::get_soc_count(), foedus::Engine::get_thread_pool(), foedus::Engine::is_master(), is_stop_requested(), foedus::Epoch::is_valid(), foedus::Epoch::one_more(), SPINLOCK_WHILE, and foedus::debugging::StopWatch::stop().

Referenced by handle_epoch_chime().

| foedus::xct::XctManagerPimpl::initialize_once() | override virtual |

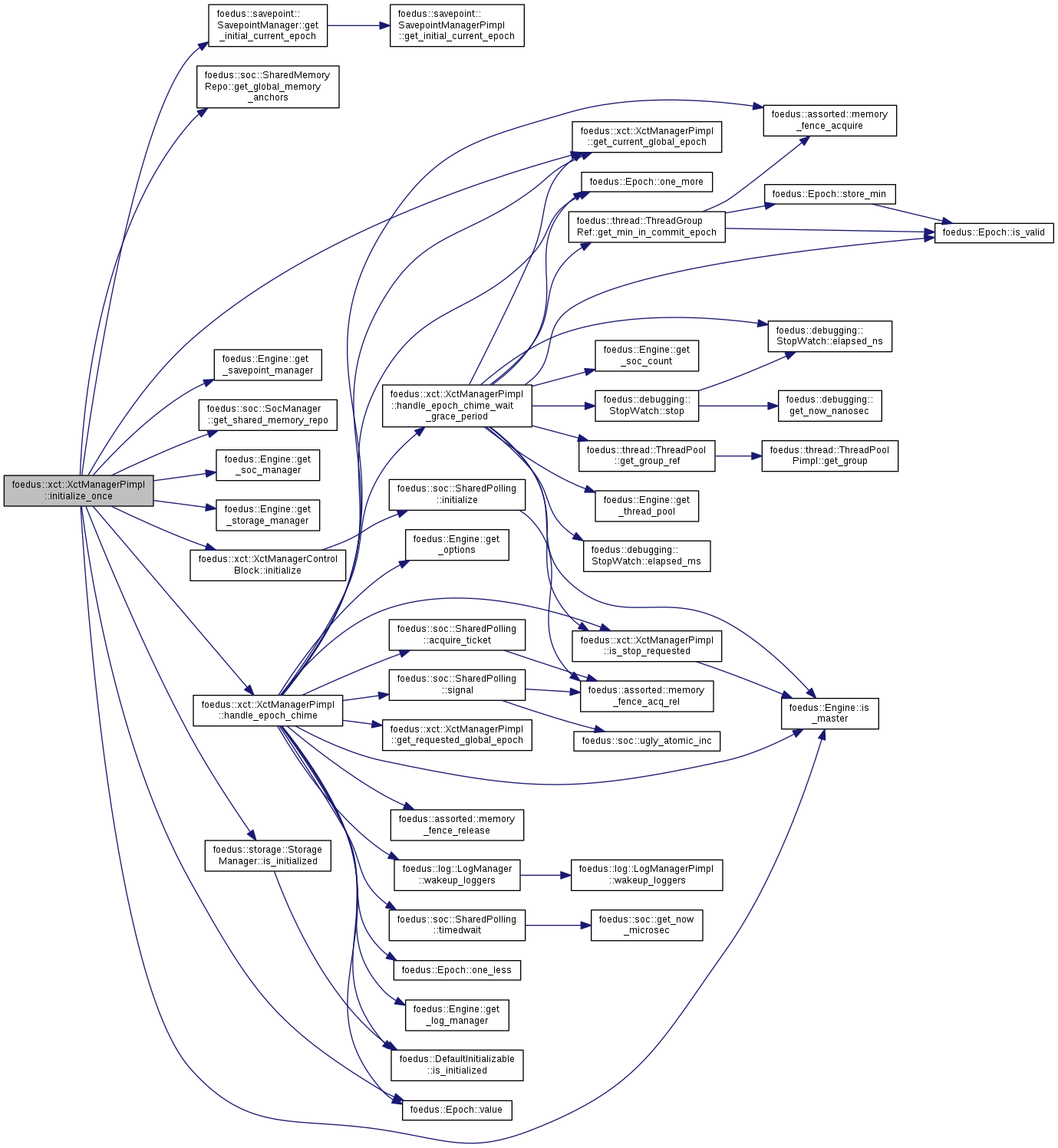

Implements foedus::DefaultInitializable.

Definition at line 84 of file xct_manager_pimpl.cpp.

References ASSERT_ND, control_block_, foedus::xct::XctManagerControlBlock::current_global_epoch_, engine_, foedus::xct::XctManagerControlBlock::epoch_chime_terminate_requested_, epoch_chime_thread_, ERROR_STACK, get_current_global_epoch(), foedus::soc::SharedMemoryRepo::get_global_memory_anchors(), foedus::savepoint::SavepointManager::get_initial_current_epoch(), foedus::Engine::get_savepoint_manager(), foedus::soc::SocManager::get_shared_memory_repo(), foedus::Engine::get_soc_manager(), foedus::Engine::get_storage_manager(), handle_epoch_chime(), foedus::xct::XctManagerControlBlock::initialize(), foedus::storage::StorageManager::is_initialized(), foedus::Engine::is_master(), foedus::kErrorCodeDepedentModuleUnavailableInit, foedus::kRetOk, foedus::xct::XctManagerControlBlock::requested_global_epoch_, foedus::Epoch::value(), and foedus::soc::GlobalMemoryAnchors::xct_manager_memory_.

| bool foedus::xct::XctManagerPimpl::is_stop_requested | ( | ) | const |

Definition at line 125 of file xct_manager_pimpl.cpp.

References ASSERT_ND, control_block_, engine_, foedus::xct::XctManagerControlBlock::epoch_chime_terminate_requested_, and foedus::Engine::is_master().

Referenced by handle_epoch_chime(), and handle_epoch_chime_wait_grace_period().

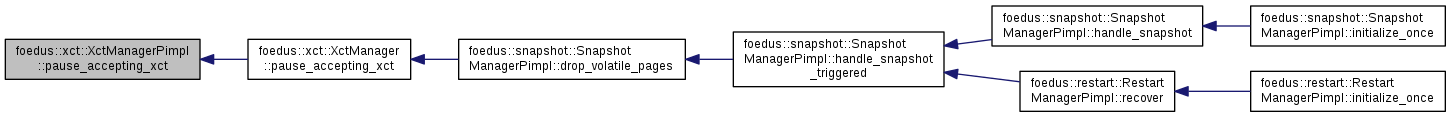

| void foedus::xct::XctManagerPimpl::pause_accepting_xct | ( | ) |

Pauses all begin_xct() calls until resume_accepting_xct() is called.

Definition at line 327 of file xct_manager_pimpl.cpp.

References control_block_, and foedus::xct::XctManagerControlBlock::new_transaction_paused_.

Referenced by foedus::xct::XctManager::pause_accepting_xct().

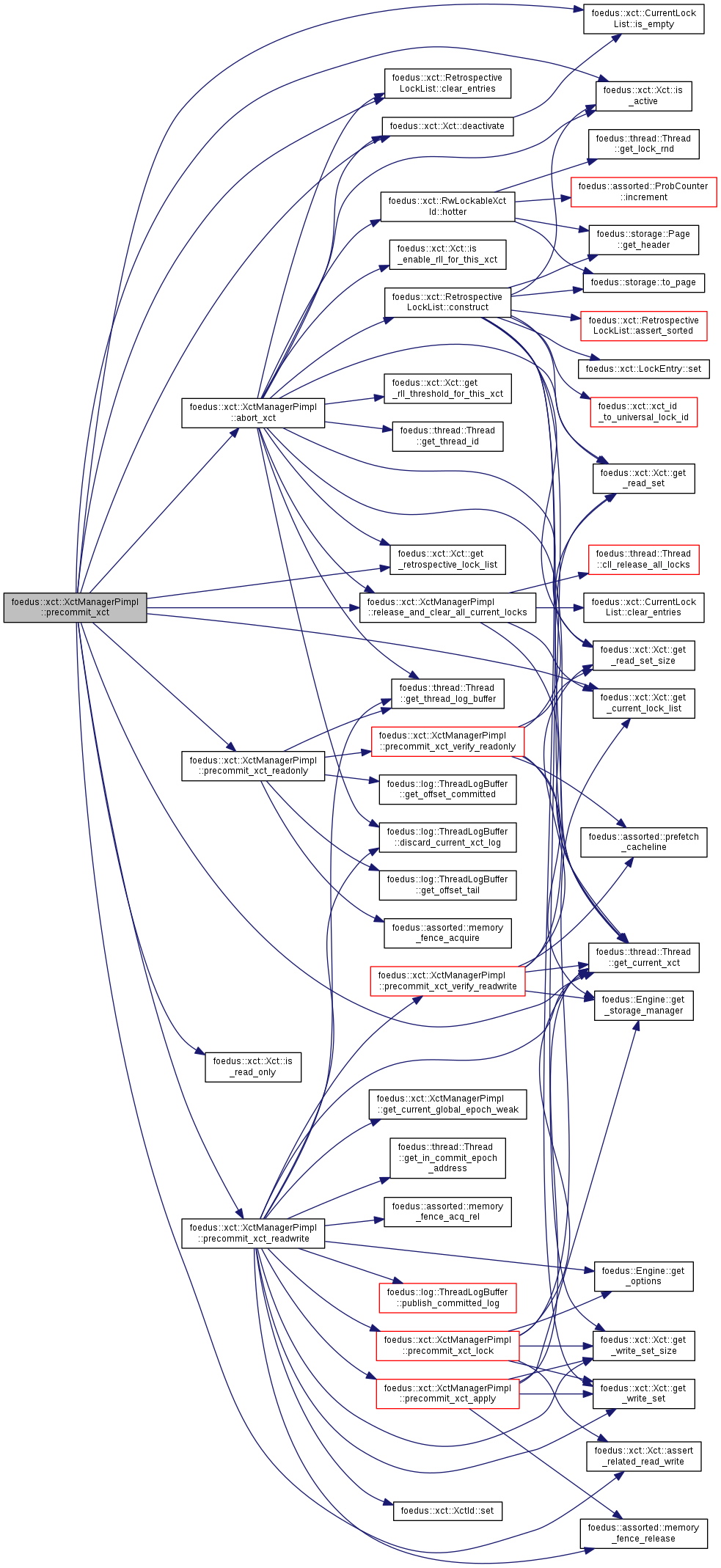

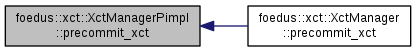

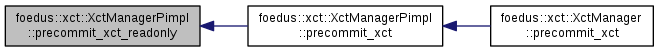

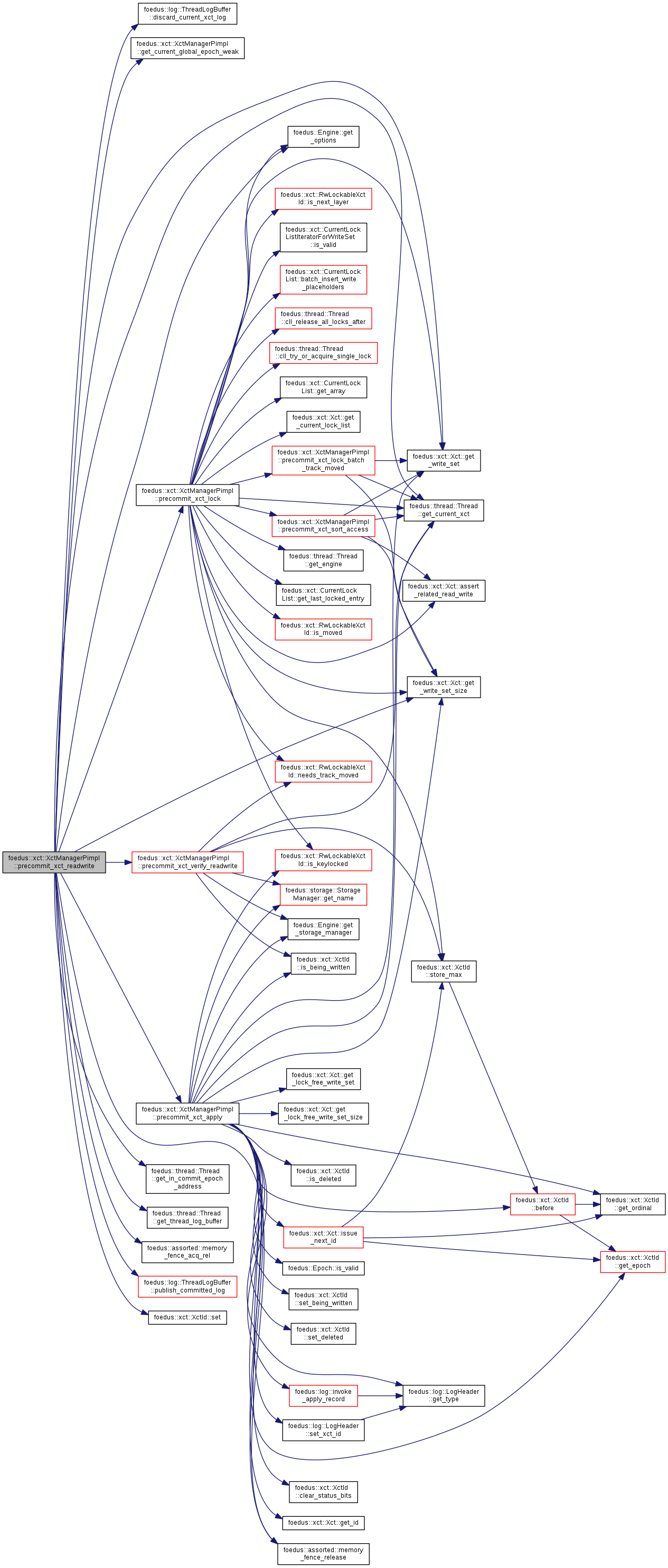

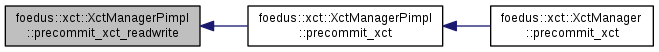

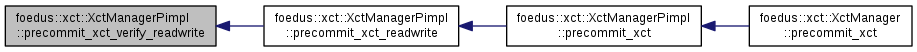

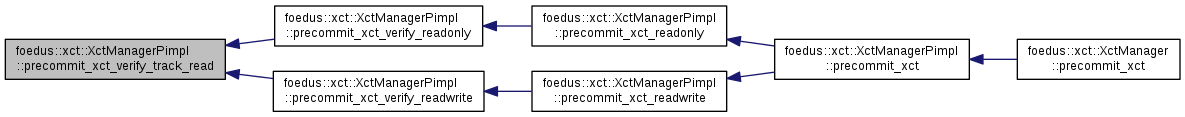

| ErrorCode foedus::xct::XctManagerPimpl::precommit_xct | ( | thread::Thread * | context, |

| Epoch * | commit_epoch | ||

| ) |

This is the core of the commit protocol.

It is mostly the same as [TU2013].

Definition at line 343 of file xct_manager_pimpl.cpp.

References abort_xct(), ASSERT_ND, foedus::xct::Xct::assert_related_read_write(), foedus::xct::RetrospectiveLockList::clear_entries(), foedus::xct::Xct::deactivate(), foedus::xct::Xct::get_current_lock_list(), foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_retrospective_lock_list(), foedus::xct::Xct::is_active(), foedus::xct::CurrentLockList::is_empty(), foedus::xct::Xct::is_read_only(), foedus::kErrorCodeOk, foedus::kErrorCodeXctNoXct, precommit_xct_readonly(), precommit_xct_readwrite(), and release_and_clear_all_current_locks().

Referenced by foedus::xct::XctManager::precommit_xct().

| bool foedus::xct::XctManagerPimpl::precommit_xct_acquire_writer_lock | ( | thread::Thread * | context, |

| WriteXctAccess * | write | ||

| ) |

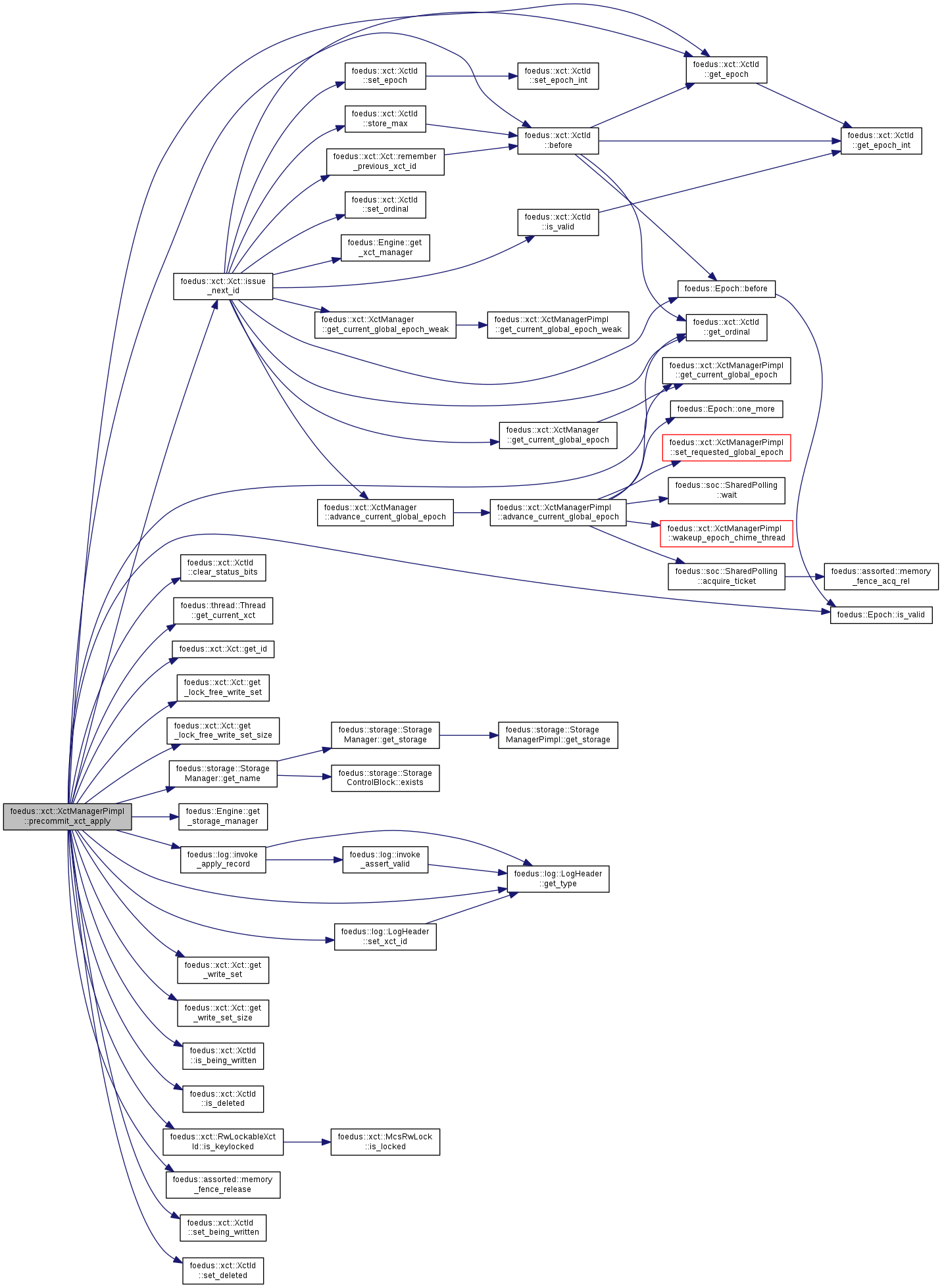

| void foedus::xct::XctManagerPimpl::precommit_xct_apply | ( | thread::Thread * | context, |

| XctId | max_xct_id, | ||

| Epoch * | commit_epoch | ||

| ) |

Phase 3 of precommit_xct()

| Direction | Parameter | Description |
| --- | --- | --- |
| [in] | context | Thread context. |
| [in] | max_xct_id | Largest xct_id this transaction depends on, or max(all xct_id). |
| [in,out] | commit_epoch | Commit epoch of this transaction. It is finalized in this function. |

Assuming phase 1 and 2 are successfully completed, apply all changes. This method does NOT release locks yet. This is one difference from SILO.

Definition at line 868 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::xct::XctId::before(), foedus::xct::XctId::clear_status_bits(), engine_, foedus::thread::Thread::get_current_xct(), foedus::xct::XctId::get_epoch(), foedus::xct::Xct::get_id(), foedus::xct::Xct::get_lock_free_write_set(), foedus::xct::Xct::get_lock_free_write_set_size(), foedus::storage::StorageManager::get_name(), foedus::xct::XctId::get_ordinal(), foedus::Engine::get_storage_manager(), foedus::log::LogHeader::get_type(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::log::BaseLogType::header_, foedus::log::invoke_apply_record(), foedus::xct::XctId::is_being_written(), foedus::xct::XctId::is_deleted(), foedus::xct::RwLockableXctId::is_keylocked(), foedus::Epoch::is_valid(), foedus::xct::Xct::issue_next_id(), foedus::log::kLogCodeHashDelete, foedus::log::kLogCodeMasstreeDelete, foedus::xct::WriteXctAccess::log_entry_, foedus::xct::LockFreeWriteXctAccess::log_entry_, foedus::assorted::memory_fence_release(), foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::WriteXctAccess::payload_address_, foedus::xct::XctId::set_being_written(), foedus::xct::XctId::set_deleted(), foedus::log::LogHeader::set_xct_id(), foedus::xct::RecordXctAccess::storage_id_, foedus::xct::LockFreeWriteXctAccess::storage_id_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by precommit_xct_readwrite().

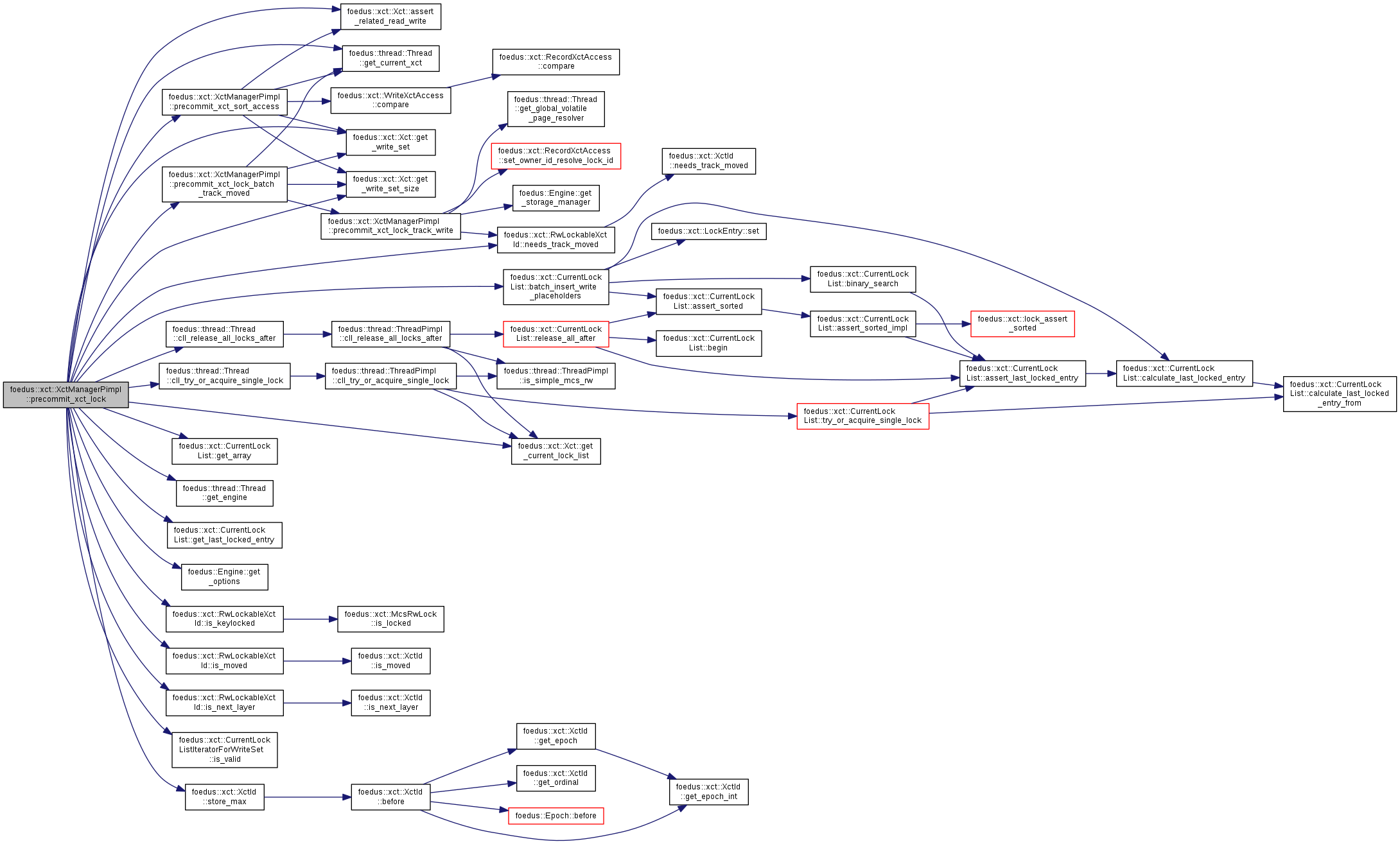

| ErrorCode foedus::xct::XctManagerPimpl::precommit_xct_lock | ( | thread::Thread * | context, |

| XctId * | max_xct_id | ||

| ) |

Phase 1 of precommit_xct()

| Direction | Parameter | Description |
| --- | --- | --- |
| [in] | context | Thread context. |
| [out] | max_xct_id | Largest xct_id this transaction depends on, or max(locked xct_id). |

Try to lock all records we are going to write. After this phase, we take a memory fence.

Definition at line 539 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::xct::Xct::assert_related_read_write(), foedus::xct::CurrentLockList::batch_insert_write_placeholders(), CHECK_ERROR_CODE, foedus::thread::Thread::cll_release_all_locks_after(), foedus::thread::Thread::cll_try_or_acquire_single_lock(), foedus::xct::XctOptions::force_canonical_xlocks_in_precommit_, foedus::xct::CurrentLockList::get_array(), foedus::xct::Xct::get_current_lock_list(), foedus::thread::Thread::get_current_xct(), foedus::thread::Thread::get_engine(), foedus::xct::CurrentLockList::get_last_locked_entry(), foedus::Engine::get_options(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::xct::RwLockableXctId::is_keylocked(), foedus::xct::RwLockableXctId::is_moved(), foedus::xct::RwLockableXctId::is_next_layer(), foedus::xct::CurrentLockListIteratorForWriteSet::is_valid(), foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, foedus::xct::kLockListPositionInvalid, foedus::xct::kWriteLock, foedus::xct::LockEntry::lock_, foedus::xct::RwLockableXctId::needs_track_moved(), foedus::xct::ReadXctAccess::observed_owner_id_, foedus::xct::RecordXctAccess::owner_id_address_, precommit_xct_lock_batch_track_moved(), precommit_xct_sort_access(), foedus::xct::LockEntry::preferred_mode_, foedus::xct::WriteXctAccess::related_read_, foedus::xct::XctId::store_max(), foedus::xct::LockEntry::taken_mode_, foedus::xct::LockEntry::universal_lock_id_, UNLIKELY, foedus::EngineOptions::xct_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by precommit_xct_readwrite().

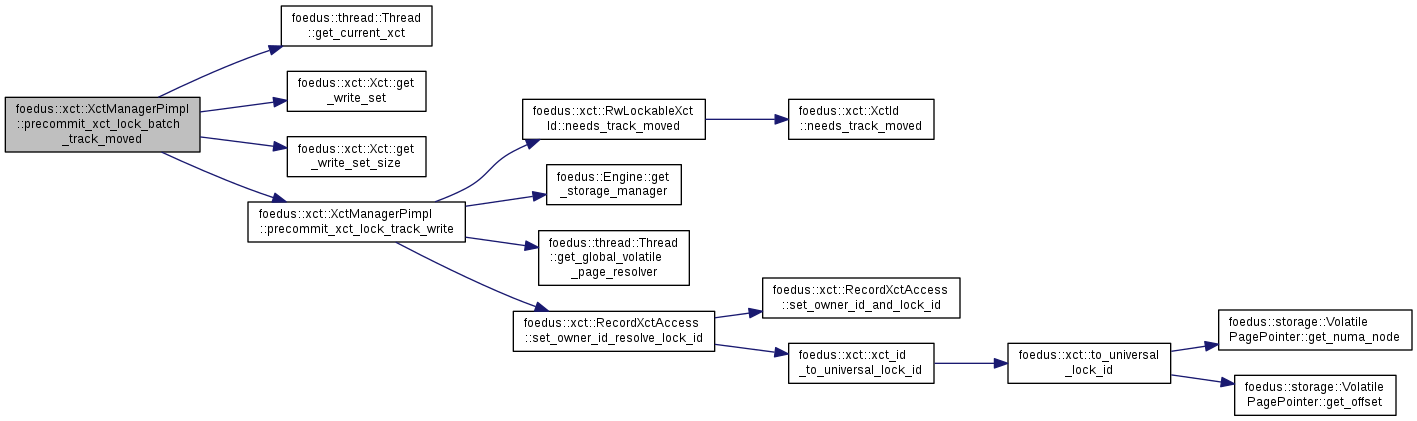

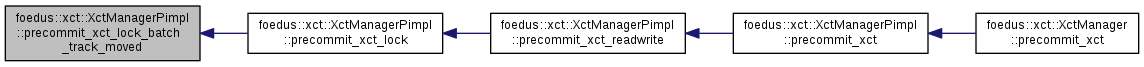

| ErrorCode foedus::xct::XctManagerPimpl::precommit_xct_lock_batch_track_moved | ( | thread::Thread * | context | ) |

Subroutine of precommit_xct_lock() to track most of the moved records in the write set.

We initially did this per record while taking each lock, but that required a lot of redoing when a transaction batch-loads many records that cause many splits. Thus, before we take X-locks and do the final check, we invoke this method to do best-effort tracking in one shot. Note that there is still a chance that a record is moved after this method returns and before we take the lock. In that case we redo the process. It happens.

Definition at line 514 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, foedus::xct::RecordXctAccess::owner_id_address_, precommit_xct_lock_track_write(), and UNLIKELY.

Referenced by precommit_xct_lock().

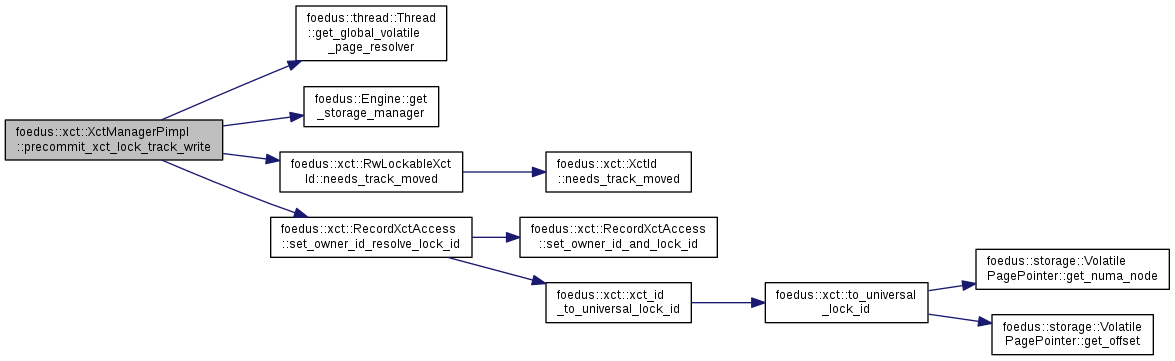

| bool foedus::xct::XctManagerPimpl::precommit_xct_lock_track_write | ( | thread::Thread * | context, |

| WriteXctAccess * | entry | ||

| ) |

Used from precommit_xct_lock() to track a moved record.

Definition at line 432 of file xct_manager_pimpl.cpp.

References ASSERT_ND, engine_, foedus::thread::Thread::get_global_volatile_page_resolver(), foedus::Engine::get_storage_manager(), foedus::xct::RwLockableXctId::needs_track_moved(), foedus::xct::TrackMovedRecordResult::new_owner_address_, foedus::xct::TrackMovedRecordResult::new_payload_address_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::WriteXctAccess::payload_address_, foedus::xct::WriteXctAccess::related_read_, foedus::xct::ReadXctAccess::related_write_, foedus::xct::RecordXctAccess::set_owner_id_resolve_lock_id(), and foedus::xct::RecordXctAccess::storage_id_.

Referenced by precommit_xct_lock_batch_track_moved().

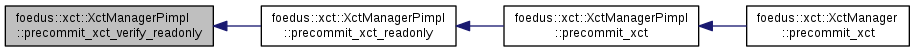

| ErrorCode foedus::xct::XctManagerPimpl::precommit_xct_readonly | ( | thread::Thread * | context, |

| Epoch * | commit_epoch | ||

| ) |

precommit_xct() if the transaction is read-only

If the transaction is read-only, the commit epoch (serialization point) is the largest epoch number in the read set. We don't have to take two memory fences in this case.

Definition at line 371 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::log::ThreadLogBuffer::get_offset_committed(), foedus::log::ThreadLogBuffer::get_offset_tail(), foedus::thread::Thread::get_thread_log_buffer(), foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, foedus::assorted::memory_fence_acquire(), and precommit_xct_verify_readonly().

Referenced by precommit_xct().
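The read-only rule above can be sketched as follows. This is an illustration under stated assumptions, not the FOEDUS code: the `ObservedRead` struct, the `verify_readonly()` function, and the layout that keeps the epoch in the upper 32 bits of a 64-bit owner ID are all hypothetical, and epochs are compared with a plain `std::max` that ignores any wrap-around handling.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <vector>

// Assumed layout for this sketch: epoch in the upper 32 bits of the owner ID.
inline uint32_t epoch_of(uint64_t owner_id) {
  return static_cast<uint32_t>(owner_id >> 32);
}

// One read-set entry: where the owner ID lives and what the reader observed.
struct ObservedRead {
  const std::atomic<uint64_t>* owner_id_address;
  uint64_t observed_owner_id;
};

// Returns false on any conflict. On success, raises *commit_epoch to the
// largest epoch seen in the read set. No locks are taken.
bool verify_readonly(const std::vector<ObservedRead>& read_set, uint32_t* commit_epoch) {
  assert(commit_epoch != nullptr);
  uint32_t max_epoch = *commit_epoch;
  for (const ObservedRead& read : read_set) {
    uint64_t current = read.owner_id_address->load(std::memory_order_acquire);
    if (current != read.observed_owner_id) {
      return false;  // the record changed after we read it
    }
    max_epoch = std::max(max_epoch, epoch_of(current));
  }
  *commit_epoch = max_epoch;
  return true;
}
```

On a conflict the sketch leaves `*commit_epoch` untouched, so the caller can simply report a race abort.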

| ErrorCode foedus::xct::XctManagerPimpl::precommit_xct_readwrite | ( | thread::Thread * | context, |

| Epoch * | commit_epoch | ||

| ) |

precommit_xct() if the transaction is read-write

See [TU2013] for the full protocol in this case.

Definition at line 384 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::log::ThreadLogBuffer::discard_current_xct_log(), foedus::log::LogOptions::emulation_, engine_, get_current_global_epoch_weak(), foedus::thread::Thread::get_current_xct(), foedus::thread::Thread::get_in_commit_epoch_address(), foedus::Engine::get_options(), foedus::thread::Thread::get_thread_log_buffer(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::Epoch::kEpochInitialDurable, foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, foedus::EngineOptions::log_, foedus::assorted::memory_fence_acq_rel(), foedus::assorted::memory_fence_release(), foedus::fs::DeviceEmulationOptions::null_device_, precommit_xct_apply(), precommit_xct_lock(), precommit_xct_verify_readwrite(), foedus::log::ThreadLogBuffer::publish_committed_log(), and foedus::xct::XctId::set().

Referenced by precommit_xct().
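The read-write path runs three phases: precommit_xct_lock(), precommit_xct_verify_readwrite(), and precommit_xct_apply(). The sketch below shows the general shape of such a lock, verify, apply commit on a toy record type. Everything in it is hypothetical (the `Record`, `Txn`, and `commit()` names, the lock bit in the lowest bit of the version word, the "+2" version bump), and it omits logging, epochs, moved-record tracking, and page-version and pointer-set checks. For brevity it also publishes the new version and unlocks in a single store, whereas precommit_xct_apply() does not release locks itself.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

constexpr uint64_t kLockBit = 1;  // assumed: lowest bit of the version word is the lock

struct Record {
  std::atomic<uint64_t> version{0};  // even values mean "unlocked"
  int payload = 0;
};
struct ReadEntry { Record* record; uint64_t observed; };
struct WriteEntry { Record* record; int new_payload; };
struct Txn { std::vector<ReadEntry> reads; std::vector<WriteEntry> writes; };

inline void lock(Record* r) {
  for (;;) {  // spin until we flip the lock bit from 0 to 1
    uint64_t v = r->version.load(std::memory_order_relaxed);
    if ((v & kLockBit) == 0 &&
        r->version.compare_exchange_weak(v, v | kLockBit, std::memory_order_acquire)) return;
  }
}

bool commit(Txn& txn) {
  // Phase 1: lock the write set in address order so concurrent committers cannot deadlock.
  std::less<Record*> by_address;
  std::stable_sort(txn.writes.begin(), txn.writes.end(),
      [&](const WriteEntry& a, const WriteEntry& b) { return by_address(a.record, b.record); });
  std::vector<Record*> locked;  // sorted and free of duplicates
  for (const WriteEntry& w : txn.writes) {
    if (locked.empty() || locked.back() != w.record) { lock(w.record); locked.push_back(w.record); }
  }
  std::atomic_thread_fence(std::memory_order_seq_cst);  // fence between phase 1 and phase 2

  // Phase 2: every observed version must be unchanged and not locked by someone else.
  uint64_t max_version = 0;
  bool ok = true;
  for (const ReadEntry& r : txn.reads) {
    assert((r.observed & kLockBit) == 0);
    uint64_t now = r.record->version.load(std::memory_order_acquire);
    bool mine = std::binary_search(locked.begin(), locked.end(), r.record, by_address);
    if ((now & ~kLockBit) != r.observed || ((now & kLockBit) != 0 && !mine)) { ok = false; break; }
    max_version = std::max(max_version, r.observed);
  }
  if (!ok) {  // abort: drop the lock bits and leave payloads untouched
    for (Record* rec : locked) rec->version.fetch_and(~kLockBit, std::memory_order_release);
    return false;
  }
  for (Record* rec : locked) {
    max_version = std::max(max_version, rec->version.load(std::memory_order_relaxed) & ~kLockBit);
  }

  // Phase 3: apply the writes, then publish a version larger than anything we depend on.
  for (const WriteEntry& w : txn.writes) w.record->payload = w.new_payload;
  for (Record* rec : locked) rec->version.store(max_version + 2, std::memory_order_release);
  return true;
}
```

A transaction whose read set saw a version that has since been overwritten fails phase 2 and leaves every record as it found it.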

| bool foedus::xct::XctManagerPimpl::precommit_xct_request_writer_lock | ( | thread::Thread * | context, |

| WriteXctAccess * | write | ||

| ) |

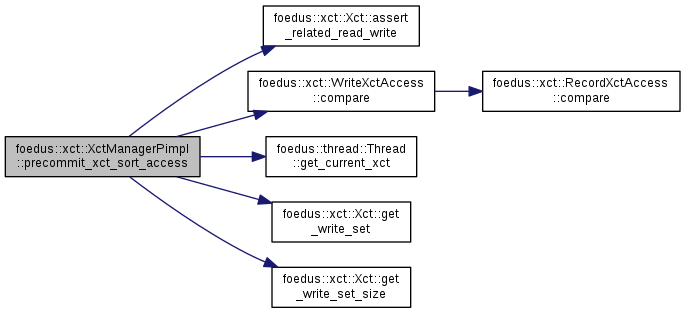

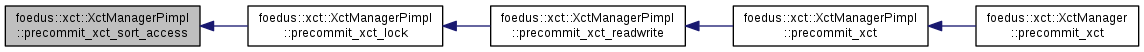

| void foedus::xct::XctManagerPimpl::precommit_xct_sort_access | ( | thread::Thread * | context | ) |

Definition at line 478 of file xct_manager_pimpl.cpp.

References ASSERT_ND, foedus::xct::Xct::assert_related_read_write(), foedus::xct::WriteXctAccess::compare(), foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_write_set(), foedus::xct::Xct::get_write_set_size(), foedus::xct::RecordXctAccess::ordinal_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::WriteXctAccess::related_read_, and foedus::xct::ReadXctAccess::related_write_.

Referenced by precommit_xct_lock().

| bool foedus::xct::XctManagerPimpl::precommit_xct_try_acquire_writer_locks | ( | thread::Thread * | context | ) |

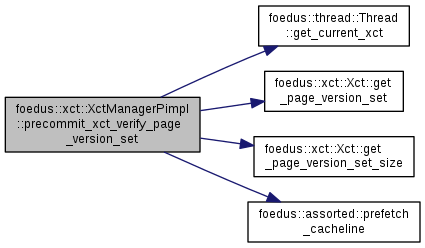

| bool foedus::xct::XctManagerPimpl::precommit_xct_verify_page_version_set | ( | thread::Thread * | context | ) |

Returns false if there is any page version conflict.

Definition at line 847 of file xct_manager_pimpl.cpp.

References foedus::xct::PageVersionAccess::address_, foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_page_version_set(), foedus::xct::Xct::get_page_version_set_size(), foedus::xct::PageVersionAccess::observed_, foedus::assorted::prefetch_cacheline(), and foedus::storage::PageVersion::status_.

Referenced by precommit_xct_verify_readonly(), and precommit_xct_verify_readwrite().

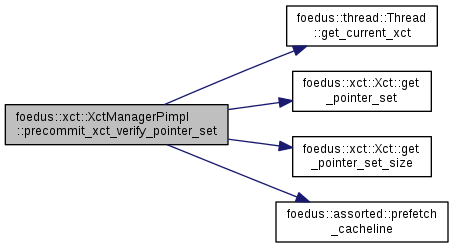

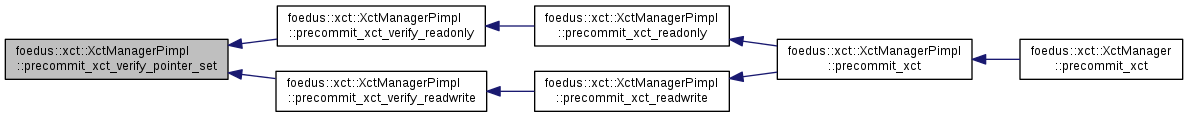

| bool foedus::xct::XctManagerPimpl::precommit_xct_verify_pointer_set | ( | thread::Thread * | context | ) |

Returns false if there is any pointer set conflict.

Definition at line 828 of file xct_manager_pimpl.cpp.

References foedus::xct::PointerAccess::address_, foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_pointer_set(), foedus::xct::Xct::get_pointer_set_size(), foedus::xct::PointerAccess::observed_, foedus::assorted::prefetch_cacheline(), and foedus::storage::VolatilePagePointer::word.

Referenced by precommit_xct_verify_readonly(), and precommit_xct_verify_readwrite().

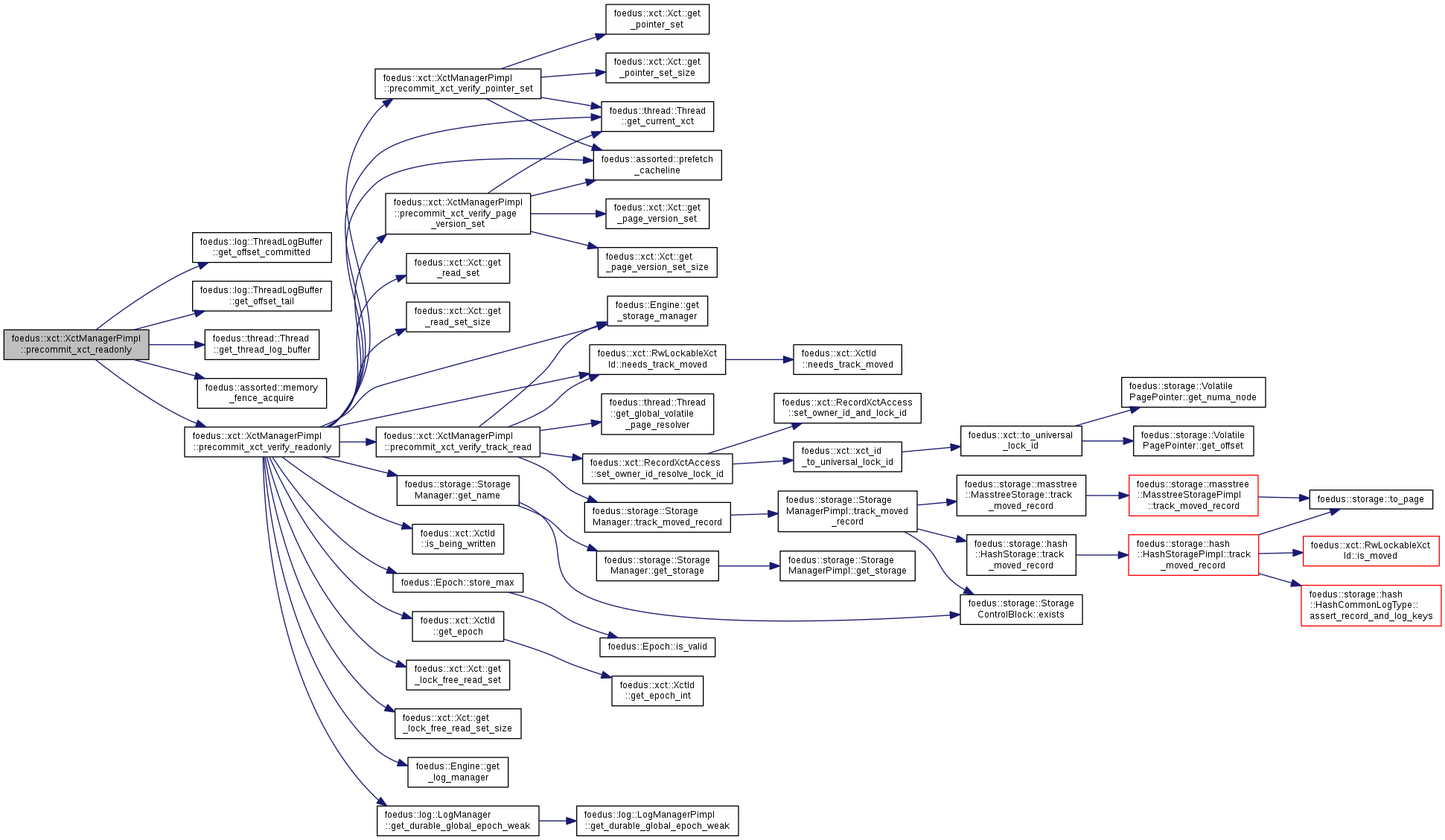

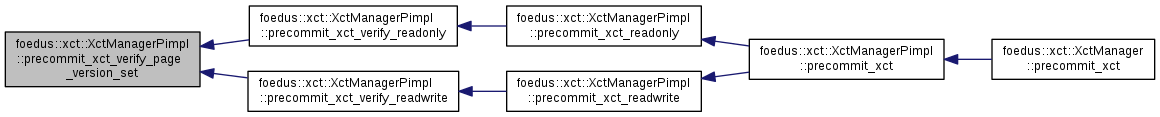

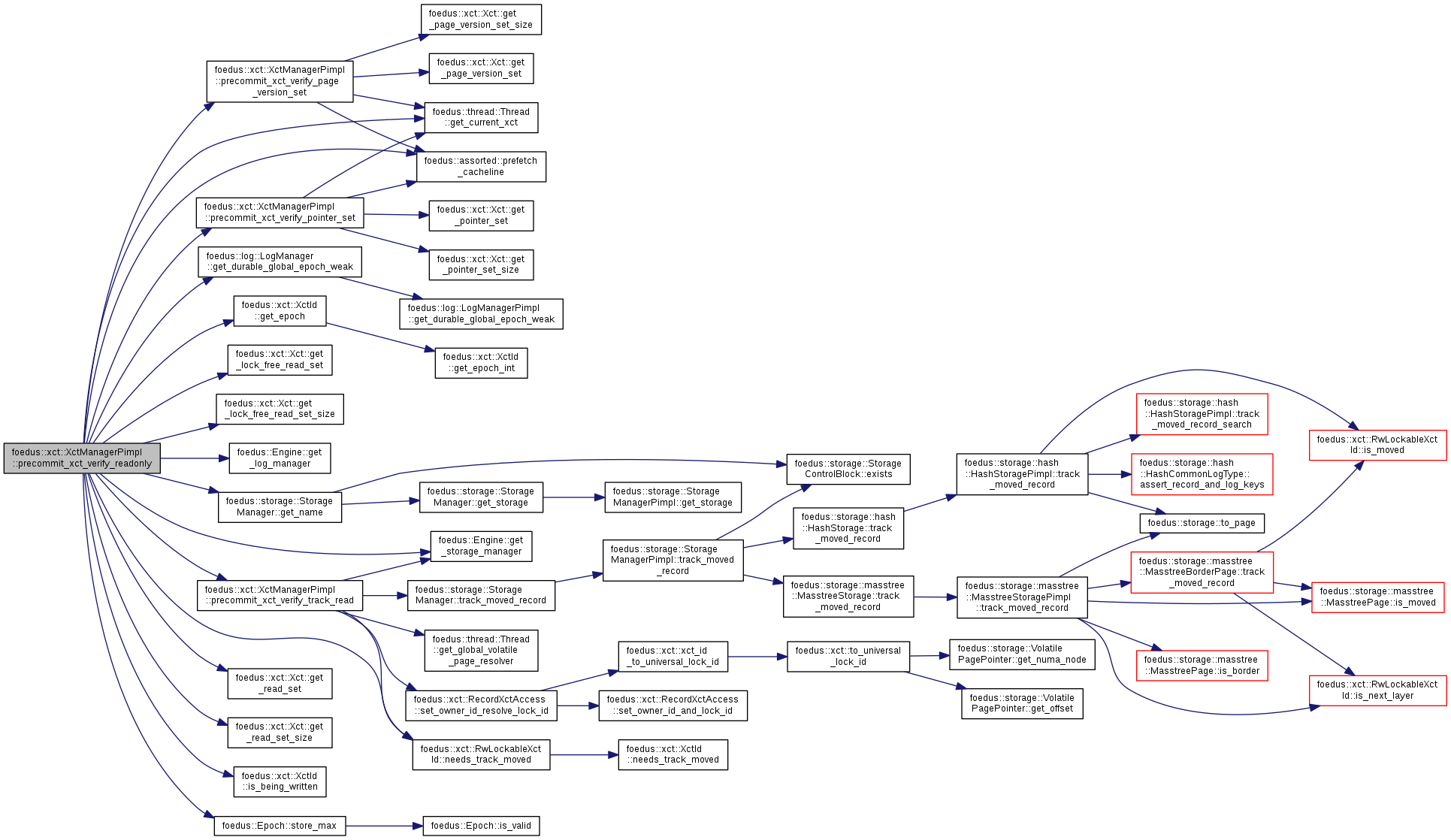

| bool foedus::xct::XctManagerPimpl::precommit_xct_verify_readonly | ( | thread::Thread * | context, |

| Epoch * | commit_epoch | ||

| ) |

Phase 2 of precommit_xct() for read-only case.

Verify the observed read set and set the commit epoch to the highest epoch it observed.

Definition at line 648 of file xct_manager_pimpl.cpp.

References ASSERT_ND, engine_, foedus::thread::Thread::get_current_xct(), foedus::log::LogManager::get_durable_global_epoch_weak(), foedus::xct::XctId::get_epoch(), foedus::xct::Xct::get_lock_free_read_set(), foedus::xct::Xct::get_lock_free_read_set_size(), foedus::Engine::get_log_manager(), foedus::storage::StorageManager::get_name(), foedus::xct::Xct::get_read_set(), foedus::xct::Xct::get_read_set_size(), foedus::Engine::get_storage_manager(), foedus::xct::XctId::is_being_written(), foedus::xct::RwLockableXctId::needs_track_moved(), foedus::xct::ReadXctAccess::observed_owner_id_, foedus::xct::LockFreeReadXctAccess::observed_owner_id_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::LockFreeReadXctAccess::owner_id_address_, precommit_xct_verify_page_version_set(), precommit_xct_verify_pointer_set(), precommit_xct_verify_track_read(), foedus::assorted::prefetch_cacheline(), foedus::xct::RecordXctAccess::storage_id_, foedus::Epoch::store_max(), UNLIKELY, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by precommit_xct_readonly().

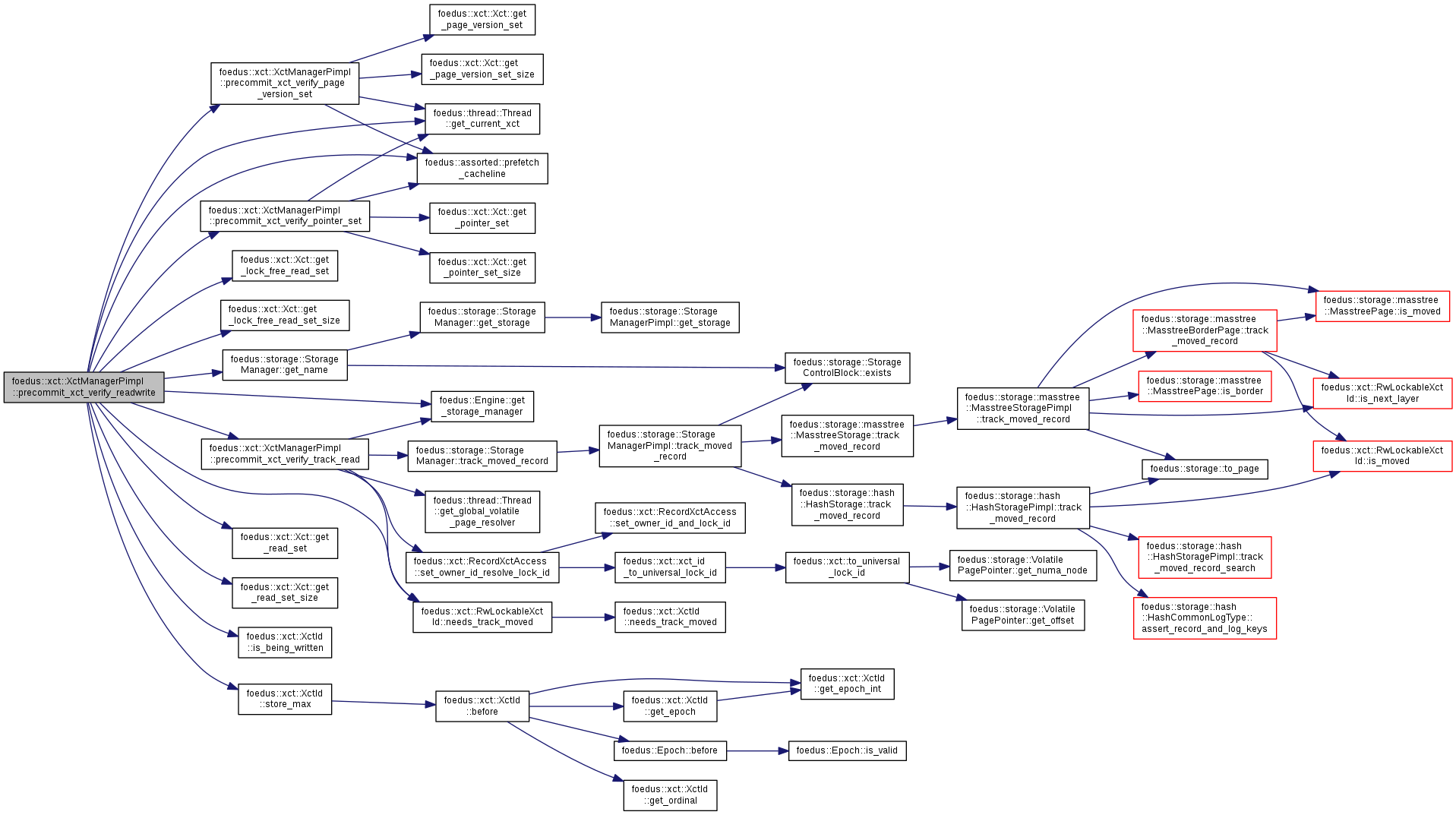

| bool foedus::xct::XctManagerPimpl::precommit_xct_verify_readwrite | ( | thread::Thread * | context, |

| XctId * | max_xct_id | ||

| ) |

Phase 2 of precommit_xct() for read-write case.

| Direction | Parameter | Description |
| --- | --- | --- |
| [in] | context | thread context |
| [in,out] | max_xct_id | largest xct_id this transaction depends on, or max(all xct_id). |

Verify the observed read set and write set against the same record. Because phase 2 is after the memory fence, no thread would take new locks while checking.

Definition at line 731 of file xct_manager_pimpl.cpp.

References ASSERT_ND, engine_, foedus::thread::Thread::get_current_xct(), foedus::xct::Xct::get_lock_free_read_set(), foedus::xct::Xct::get_lock_free_read_set_size(), foedus::storage::StorageManager::get_name(), foedus::xct::Xct::get_read_set(), foedus::xct::Xct::get_read_set_size(), foedus::Engine::get_storage_manager(), foedus::xct::XctId::is_being_written(), foedus::xct::RwLockableXctId::needs_track_moved(), foedus::xct::ReadXctAccess::observed_owner_id_, foedus::xct::LockFreeReadXctAccess::observed_owner_id_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::LockFreeReadXctAccess::owner_id_address_, precommit_xct_verify_page_version_set(), precommit_xct_verify_pointer_set(), precommit_xct_verify_track_read(), foedus::assorted::prefetch_cacheline(), foedus::xct::ReadXctAccess::related_write_, foedus::xct::RecordXctAccess::storage_id_, foedus::xct::XctId::store_max(), UNLIKELY, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by precommit_xct_readwrite().

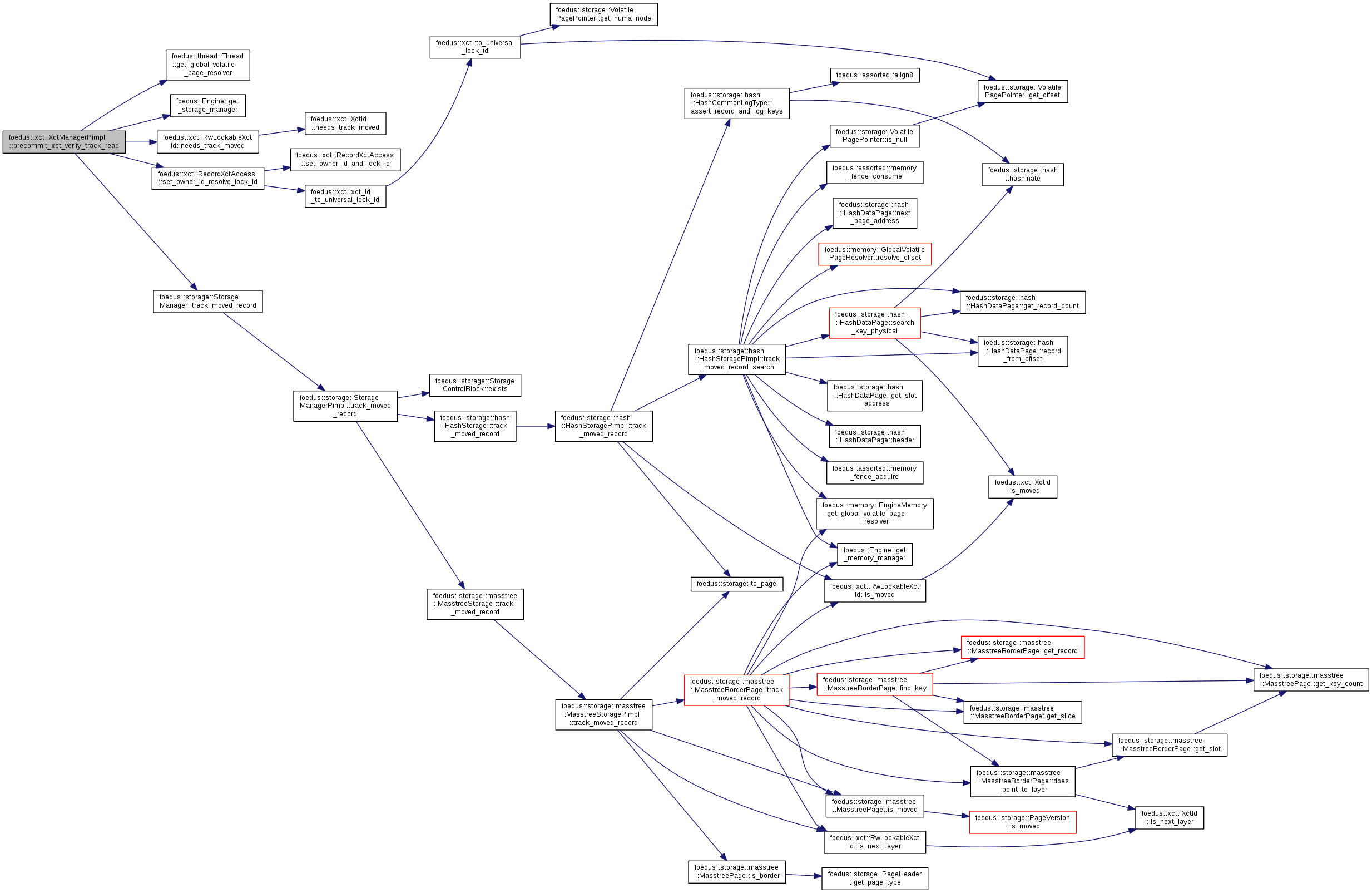

| bool foedus::xct::XctManagerPimpl::precommit_xct_verify_track_read | ( | thread::Thread * | context, |

| ReadXctAccess * | entry | ||

| ) |

Used from the verification methods to track a moved record.

Definition at line 458 of file xct_manager_pimpl.cpp.

References ASSERT_ND, engine_, foedus::thread::Thread::get_global_volatile_page_resolver(), foedus::Engine::get_storage_manager(), foedus::xct::RwLockableXctId::needs_track_moved(), foedus::xct::TrackMovedRecordResult::new_owner_address_, foedus::xct::RecordXctAccess::owner_id_address_, foedus::xct::ReadXctAccess::related_write_, foedus::xct::RecordXctAccess::set_owner_id_resolve_lock_id(), foedus::xct::RecordXctAccess::storage_id_, and foedus::storage::StorageManager::track_moved_record().

Referenced by precommit_xct_verify_readonly(), and precommit_xct_verify_readwrite().

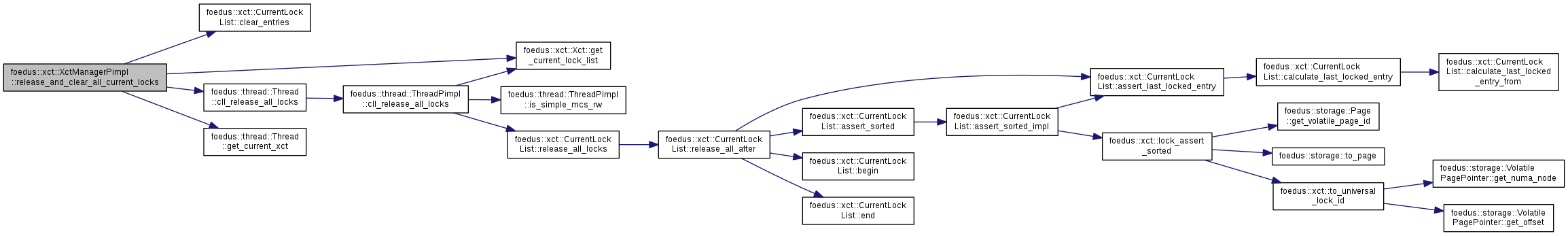

| void foedus::xct::XctManagerPimpl::release_and_clear_all_current_locks | ( | thread::Thread * | context | ) |

Unlocks all acquired locks. Used on commit and abort.

Definition at line 997 of file xct_manager_pimpl.cpp.

References foedus::xct::CurrentLockList::clear_entries(), foedus::thread::Thread::cll_release_all_locks(), foedus::xct::Xct::get_current_lock_list(), and foedus::thread::Thread::get_current_xct().

Referenced by abort_xct(), and precommit_xct().

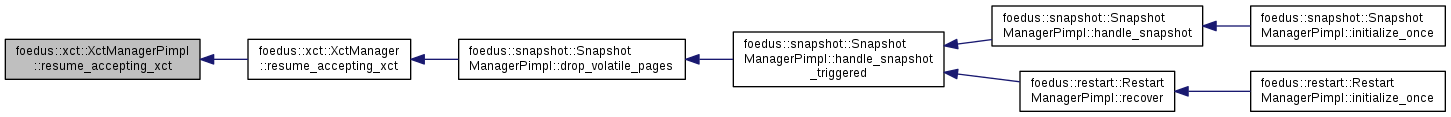

| void foedus::xct::XctManagerPimpl::resume_accepting_xct | ( | ) |

Make sure you call this after pause_accepting_xct().

Definition at line 330 of file xct_manager_pimpl.cpp.

References control_block_, and foedus::xct::XctManagerControlBlock::new_transaction_paused_.

Referenced by foedus::xct::XctManager::resume_accepting_xct().
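The pause/resume pair can be thought of as a gate in front of begin_xct(). The following sketch shows one way such a gate could behave. The `XctGate` class and its methods are hypothetical, and it uses a mutex and condition variable for clarity, whereas the real code keeps a `new_transaction_paused_` flag in the control block.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Hypothetical gate that blocks new transactions while paused.
class XctGate {
 public:
  void pause() {
    std::lock_guard<std::mutex> guard(mutex_);
    paused_ = true;
  }

  // Must follow pause(); otherwise waiters stay blocked forever.
  void resume() {
    { std::lock_guard<std::mutex> guard(mutex_); paused_ = false; }
    resumed_.notify_all();
  }

  bool is_paused() const {
    std::lock_guard<std::mutex> guard(mutex_);
    return paused_;
  }

  // What a begin call would invoke before activating a transaction.
  void wait_until_resumed() {
    std::unique_lock<std::mutex> lock(mutex_);
    resumed_.wait(lock, [this] { return !paused_; });
    assert(!paused_);  // holds because we still own the lock here
  }

 private:
  mutable std::mutex mutex_;
  std::condition_variable resumed_;
  bool paused_ = false;
};
```

A forgotten `resume()` leaves every later `wait_until_resumed()` blocked, which is why the two calls must be paired.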

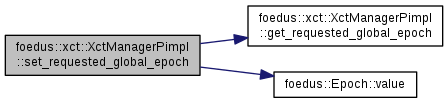

| void foedus::xct::XctManagerPimpl::set_requested_global_epoch | ( | Epoch | request | ) |

Definition at line 241 of file xct_manager_pimpl.cpp.

References control_block_, get_requested_global_epoch(), foedus::xct::XctManagerControlBlock::requested_global_epoch_, and foedus::Epoch::value().

Referenced by advance_current_global_epoch(), wait_for_commit(), and wait_for_current_global_epoch().

|

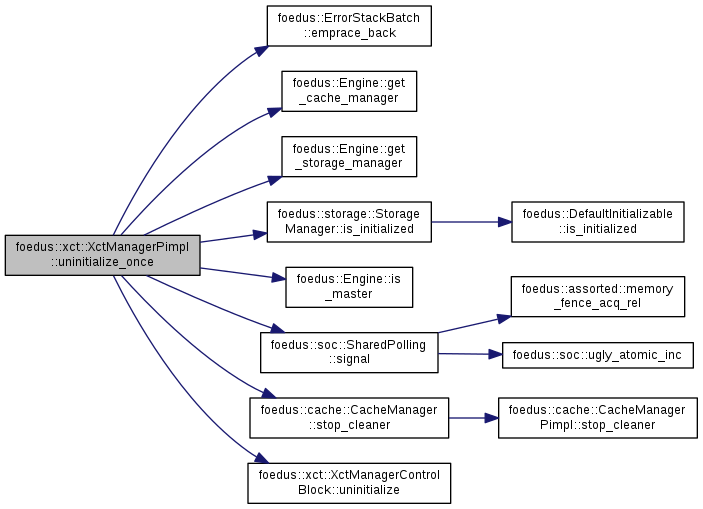

overridevirtual |

Implements foedus::DefaultInitializable.

Definition at line 104 of file xct_manager_pimpl.cpp.

References CHECK_ERROR, control_block_, foedus::ErrorStackBatch::emprace_back(), engine_, foedus::xct::XctManagerControlBlock::epoch_chime_terminate_requested_, epoch_chime_thread_, foedus::xct::XctManagerControlBlock::epoch_chime_wakeup_, ERROR_STACK, foedus::Engine::get_cache_manager(), foedus::Engine::get_storage_manager(), foedus::storage::StorageManager::is_initialized(), foedus::Engine::is_master(), foedus::kErrorCodeDepedentModuleUnavailableUninit, foedus::soc::SharedPolling::signal(), foedus::cache::CacheManager::stop_cleaner(), SUMMARIZE_ERROR_BATCH, and foedus::xct::XctManagerControlBlock::uninitialize().

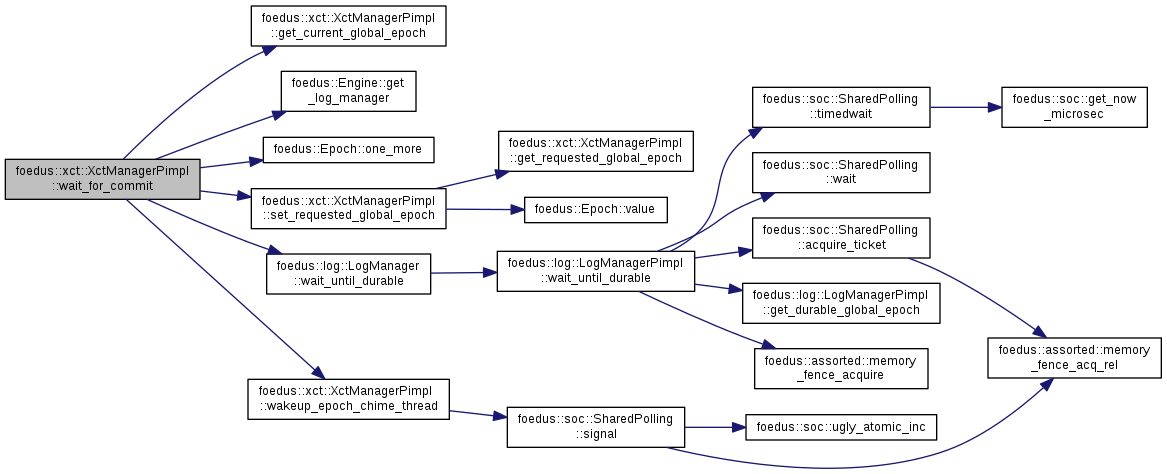

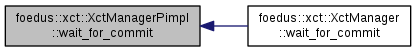

| ErrorCode foedus::xct::XctManagerPimpl::wait_for_commit | ( | Epoch | commit_epoch, |

| int64_t | wait_microseconds | ||

| ) |

Definition at line 290 of file xct_manager_pimpl.cpp.

References engine_, get_current_global_epoch(), foedus::Engine::get_log_manager(), foedus::Epoch::one_more(), set_requested_global_epoch(), foedus::log::LogManager::wait_until_durable(), and wakeup_epoch_chime_thread().

Referenced by foedus::xct::XctManager::wait_for_commit().

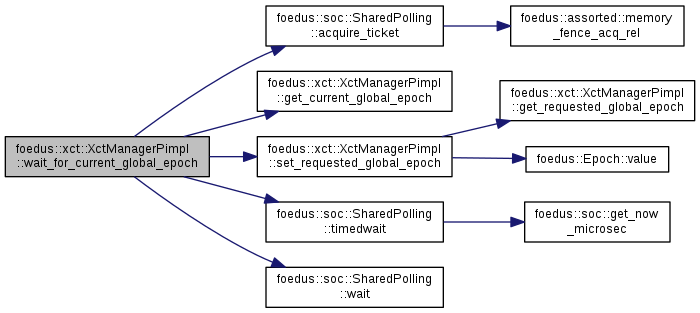

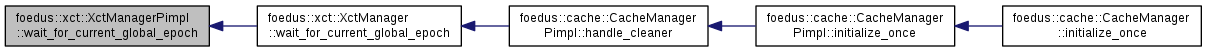

| void foedus::xct::XctManagerPimpl::wait_for_current_global_epoch | ( | Epoch | target_epoch, |

| int64_t | wait_microseconds | ||

| ) |

Definition at line 273 of file xct_manager_pimpl.cpp.

References foedus::soc::SharedPolling::acquire_ticket(), control_block_, foedus::xct::XctManagerControlBlock::current_global_epoch_advanced_, get_current_global_epoch(), set_requested_global_epoch(), foedus::soc::SharedPolling::timedwait(), and foedus::soc::SharedPolling::wait().

Referenced by foedus::xct::XctManager::wait_for_current_global_epoch().

| void foedus::xct::XctManagerPimpl::wait_until_resume_accepting_xct | ( | thread::Thread * | context | ) |

Definition at line 334 of file xct_manager_pimpl.cpp.

References control_block_, and foedus::xct::XctManagerControlBlock::new_transaction_paused_.

Referenced by begin_xct().

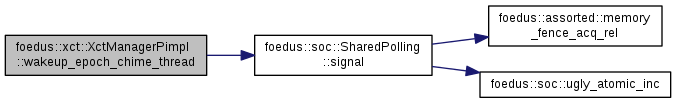

| void foedus::xct::XctManagerPimpl::wakeup_epoch_chime_thread | ( | ) |

Definition at line 237 of file xct_manager_pimpl.cpp.

References control_block_, foedus::xct::XctManagerControlBlock::epoch_chime_wakeup_, and foedus::soc::SharedPolling::signal().

Referenced by advance_current_global_epoch(), and wait_for_commit().

| XctManagerControlBlock* foedus::xct::XctManagerPimpl::control_block_ |

Definition at line 225 of file xct_manager_pimpl.hpp.

Referenced by advance_current_global_epoch(), begin_xct(), get_current_global_epoch(), get_current_global_epoch_weak(), get_requested_global_epoch(), handle_epoch_chime(), initialize_once(), is_stop_requested(), pause_accepting_xct(), resume_accepting_xct(), set_requested_global_epoch(), uninitialize_once(), wait_for_current_global_epoch(), wait_until_resume_accepting_xct(), and wakeup_epoch_chime_thread().

| Engine* const foedus::xct::XctManagerPimpl::engine_ |

Definition at line 224 of file xct_manager_pimpl.hpp.

Referenced by handle_epoch_chime(), handle_epoch_chime_wait_grace_period(), initialize_once(), is_stop_requested(), precommit_xct_apply(), precommit_xct_lock_track_write(), precommit_xct_readwrite(), precommit_xct_verify_readonly(), precommit_xct_verify_readwrite(), precommit_xct_verify_track_read(), uninitialize_once(), and wait_for_commit().

| std::thread foedus::xct::XctManagerPimpl::epoch_chime_thread_ |

This thread keeps advancing the current_global_epoch_.

Launched only in the master engine.

Definition at line 231 of file xct_manager_pimpl.hpp.

Referenced by initialize_once(), and uninitialize_once().