|

libfoedus-core

FOEDUS Core Library

|

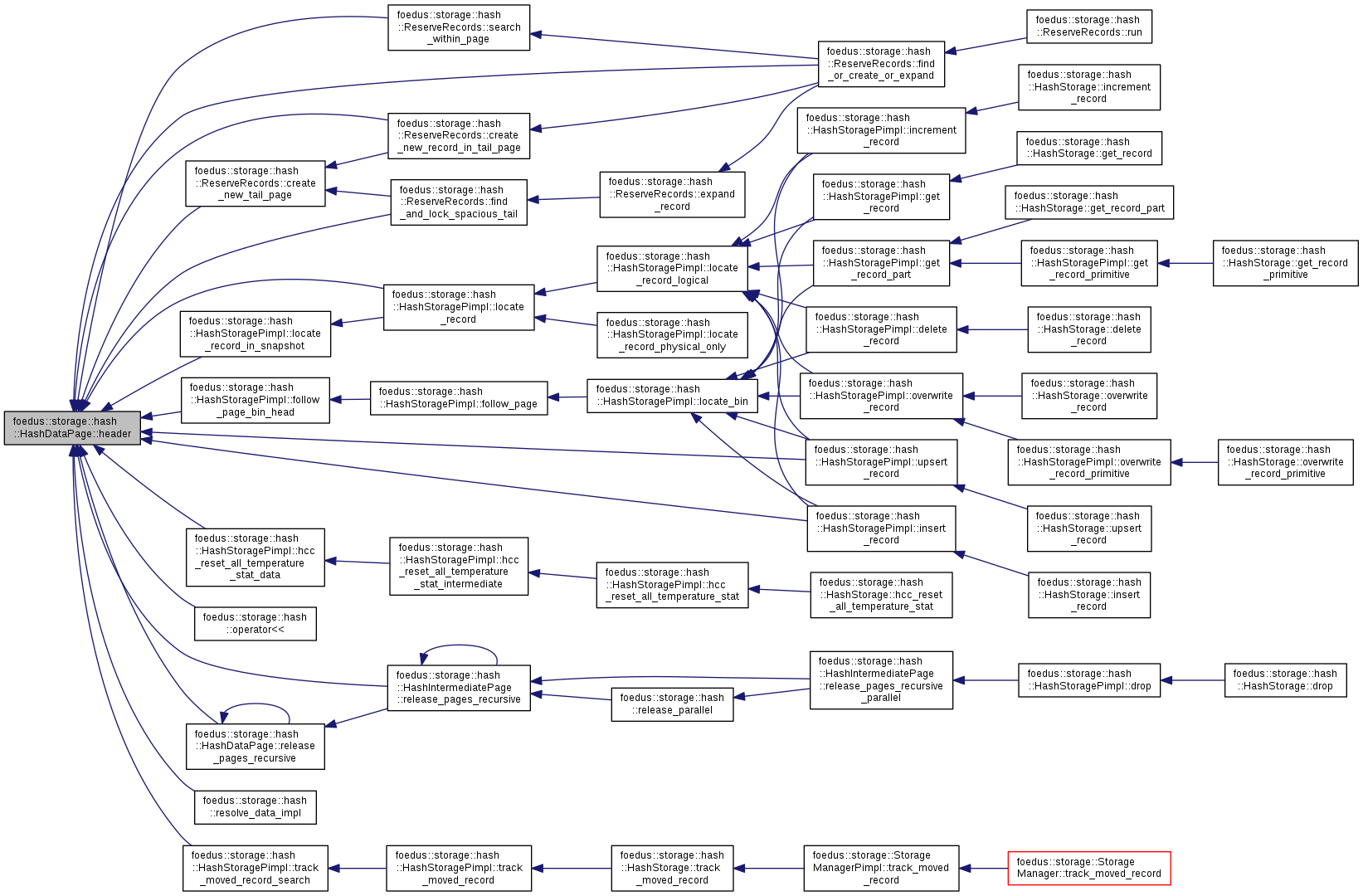

Represents an individual data page in Hashtable Storage.

This is one of the page types in hash storage. A data page contains full keys and values.

| Page region (from the start to the end of the page) |
|---|
| Headers, including a bloom filter for faster checks |
| Record data part, which grows forward |
| Unused part |
| Slot part (32 bytes per record), which grows backward |
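The backward-growing slot part can be illustrated with a little address arithmetic. The following is a minimal sketch, not the FOEDUS implementation: the 4096-byte page size and 256-byte header size are assumptions chosen for illustration (only the 32-byte slot size comes from the layout above), and every `Layout*`/`layout_*` name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed sizes for illustration; only the 32-byte slot comes from the layout above.
constexpr std::size_t kLayoutPageSize = 4096;
constexpr std::size_t kLayoutHeaderSize = 256;
constexpr std::size_t kLayoutSlotSize = 32;

struct LayoutSlot {
  unsigned char bytes[kLayoutSlotSize];
};
static_assert(sizeof(LayoutSlot) == kLayoutSlotSize, "slot must be 32 bytes");

struct LayoutPage {
  unsigned char header[kLayoutHeaderSize];
  // Records grow forward from the start of data; slots grow backward from its end.
  unsigned char data[kLayoutPageSize - kLayoutHeaderSize];
};
static_assert(sizeof(LayoutPage) == kLayoutPageSize, "page must fill exactly one page");

// Slot 0 occupies the last 32 bytes of the page; slot i sits i slots before it.
inline LayoutSlot* layout_slot_address(LayoutPage* page, std::uint16_t index) {
  unsigned char* page_end = reinterpret_cast<unsigned char*>(page) + kLayoutPageSize;
  return reinterpret_cast<LayoutSlot*>(page_end - kLayoutSlotSize * (index + 1u));
}

// Inverse mapping: recover the slot index from a slot address.
inline std::uint16_t layout_to_slot_index(const LayoutPage* page, const LayoutSlot* slot) {
  const unsigned char* page_end =
      reinterpret_cast<const unsigned char*>(page) + kLayoutPageSize;
  const std::ptrdiff_t distance = page_end - reinterpret_cast<const unsigned char*>(slot);
  return static_cast<std::uint16_t>(static_cast<std::size_t>(distance) / kLayoutSlotSize - 1);
}
```

With this arrangement, adding a record never moves existing slots or records; the two regions simply approach each other until the unused part is exhausted.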

Definition at line 155 of file hash_page_impl.hpp.

#include <hash_page_impl.hpp>

Classes | |

| struct | Slot |

| Fixed-size slot for each record, placed at the end of the data region. | |

Public Member Functions | |

| HashDataPage ()=delete | |

| HashDataPage (const HashDataPage &other)=delete | |

| HashDataPage & | operator= (const HashDataPage &other)=delete |

| void | initialize_volatile_page (StorageId storage_id, VolatilePagePointer page_id, const Page *parent, HashBin bin, uint8_t bin_shifts) |

| void | initialize_snapshot_page (StorageId storage_id, SnapshotPagePointer page_id, HashBin bin, uint8_t bin_bits, uint8_t bin_shifts) |

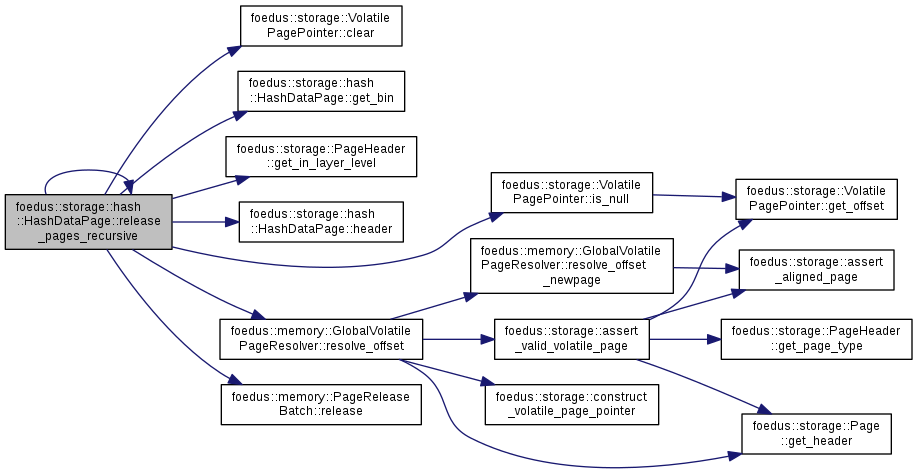

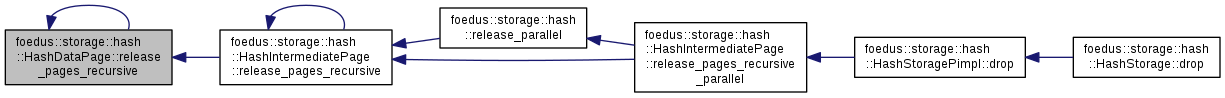

| void | release_pages_recursive (const memory::GlobalVolatilePageResolver &page_resolver, memory::PageReleaseBatch *batch) |

| PageHeader & | header () |

| const PageHeader & | header () const |

| bool | is_locked () const |

| VolatilePagePointer | get_volatile_page_id () const |

| SnapshotPagePointer | get_snapshot_page_id () const |

| const DualPagePointer & | next_page () const __attribute__((always_inline)) |

| DualPagePointer & | next_page () __attribute__((always_inline)) |

| const DualPagePointer * | next_page_address () const __attribute__((always_inline)) |

| DualPagePointer * | next_page_address () __attribute__((always_inline)) |

| const DataPageBloomFilter & | bloom_filter () const __attribute__((always_inline)) |

| DataPageBloomFilter & | bloom_filter () __attribute__((always_inline)) |

| uint16_t | get_record_count () const __attribute__((always_inline)) |

| const Slot & | get_slot (DataPageSlotIndex record) const __attribute__((always_inline)) |

| Slot & | get_slot (DataPageSlotIndex record) __attribute__((always_inline)) |

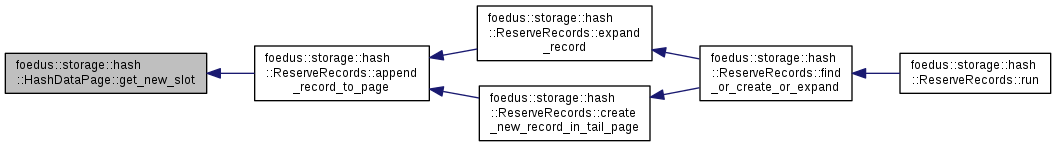

| Slot & | get_new_slot (DataPageSlotIndex record) __attribute__((always_inline)) |

| const Slot * | get_slot_address (DataPageSlotIndex record) const __attribute__((always_inline)) |

| Same as &get_slot(), but more explicit and easier to understand and maintain. | |

| Slot * | get_slot_address (DataPageSlotIndex record) __attribute__((always_inline)) |

| DataPageSlotIndex | to_slot_index (const Slot *slot) const __attribute__((always_inline)) |

| char * | record_from_offset (uint16_t offset) |

| const char * | record_from_offset (uint16_t offset) const |

| uint16_t | available_space () const |

| Returns usable space in bytes. | |

| uint16_t | next_offset () const |

| Returns the offset of the next record. | |

| DataPageSlotIndex | reserve_record (HashValue hash, const BloomFilterFingerprint &fingerprint, const void *key, uint16_t key_length, uint16_t payload_length) |

| A system transaction that creates a logically deleted record in this page for the given key. | |

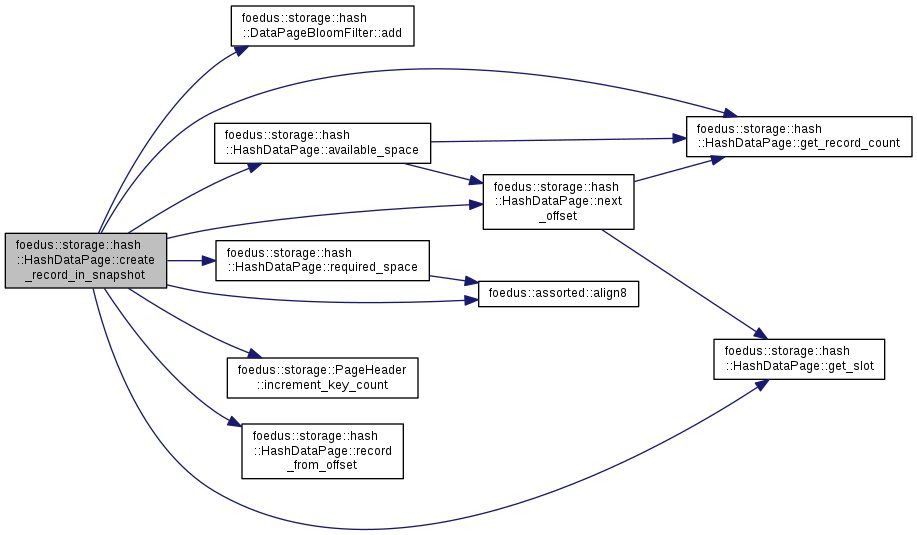

| void | create_record_in_snapshot (xct::XctId xct_id, HashValue hash, const BloomFilterFingerprint &fingerprint, const void *key, uint16_t key_length, const void *payload, uint16_t payload_length) __attribute__((always_inline)) |

| A simplified, efficient version for inserting an active record; it must be used only in snapshot pages. | |

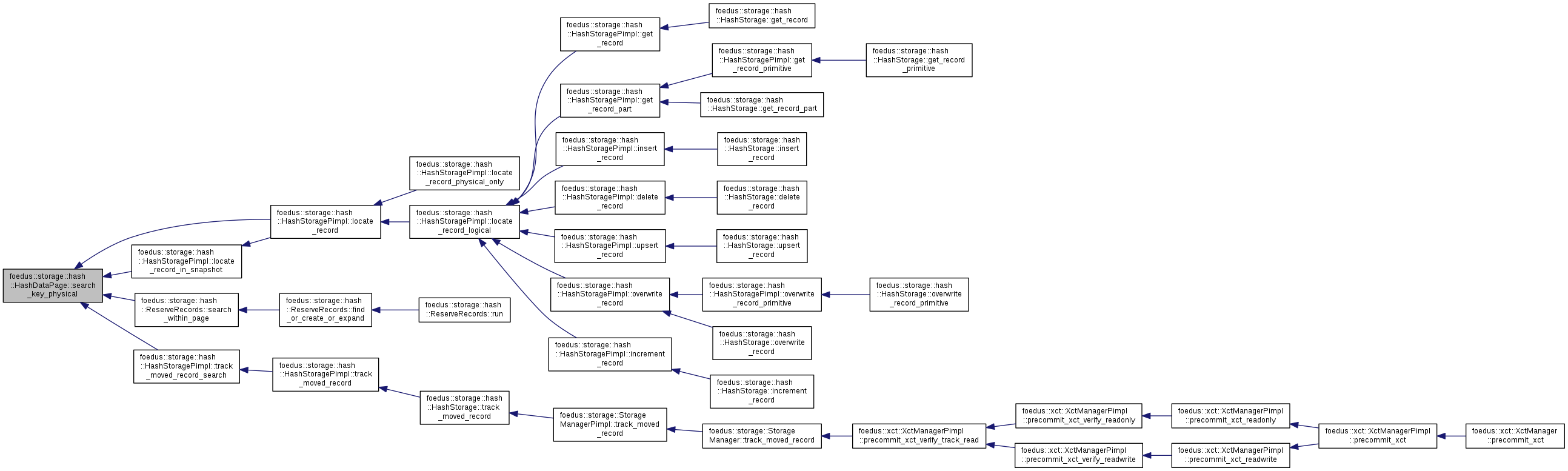

| DataPageSlotIndex | search_key_physical (HashValue hash, const BloomFilterFingerprint &fingerprint, const void *key, KeyLength key_length, DataPageSlotIndex record_count, DataPageSlotIndex check_from=0) const |

| Searches for a physical slot that contains exactly the given key. | |

| bool | compare_slot_key (DataPageSlotIndex index, HashValue hash, const void *key, uint16_t key_length) const |

| Returns whether the slot contains exactly the specified key. | |

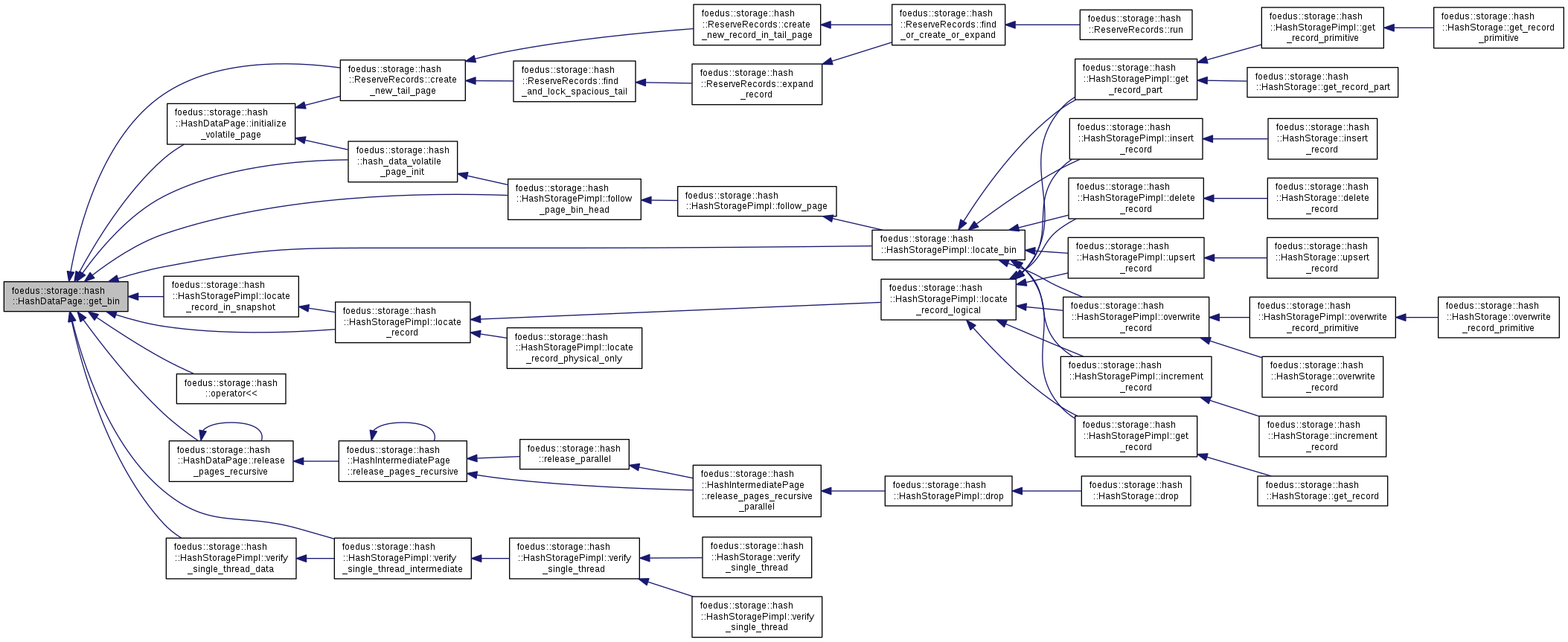

| HashBin | get_bin () const |

| void | assert_bin (HashBin bin) const __attribute__((always_inline)) |

| void | protected_set_bin_shifts (uint8_t bin_shifts) |

| This method should be called only during page creation. | |

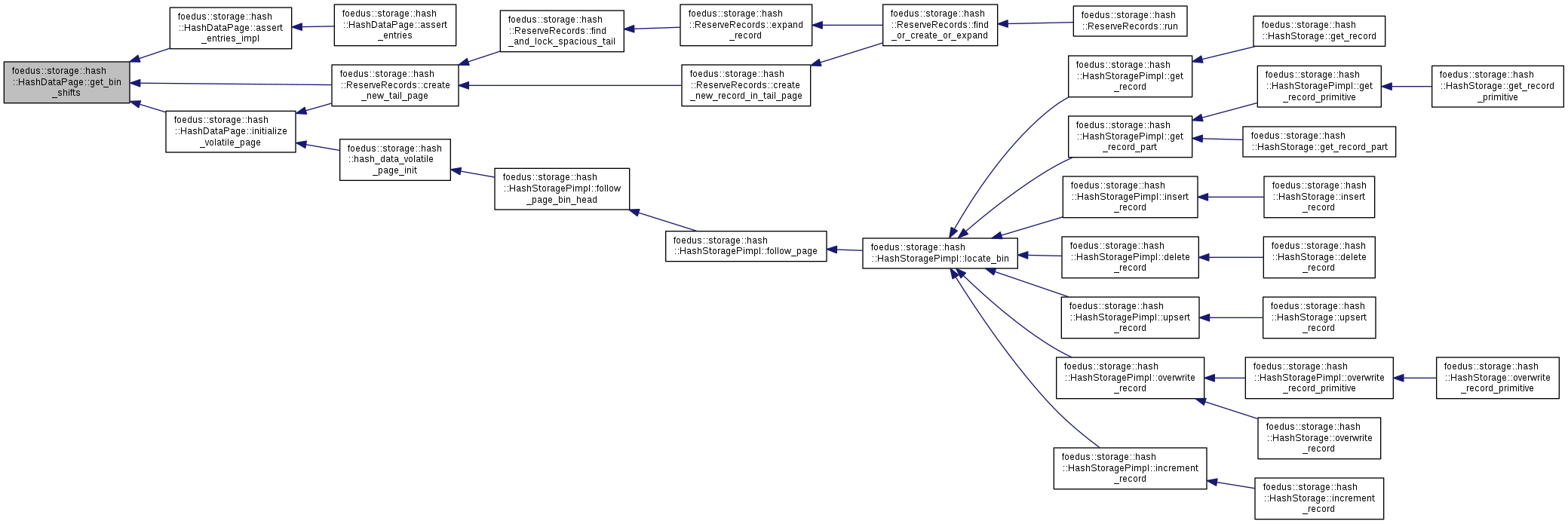

| uint8_t | get_bin_shifts () const |

| void | assert_entries () const __attribute__((always_inline)) |

| void | assert_entries_impl () const |

| Defined in hash_page_debug.cpp. | |

Static Public Member Functions | |

| static uint16_t | required_space (uint16_t key_length, uint16_t payload_length) |

| Returns physical_record_length_ for a new record. | |

Friends | |

| struct | ReserveRecords |

| std::ostream & | operator<< (std::ostream &o, const HashDataPage &v) |

| Defined in hash_page_debug.cpp. | |

| foedus::storage::hash::HashDataPage::HashDataPage | ( | ) | |

delete |

| foedus::storage::hash::HashDataPage::HashDataPage | ( | const HashDataPage & | other | ) | |

delete |

| void foedus::storage::hash::HashDataPage::assert_bin | ( | HashBin | bin | ) | const |

inline |

Definition at line 413 of file hash_page_impl.hpp.

References ASSERT_ND.

| void foedus::storage::hash::HashDataPage::assert_entries | ( | ) | const |

inline |

Definition at line 424 of file hash_page_impl.hpp.

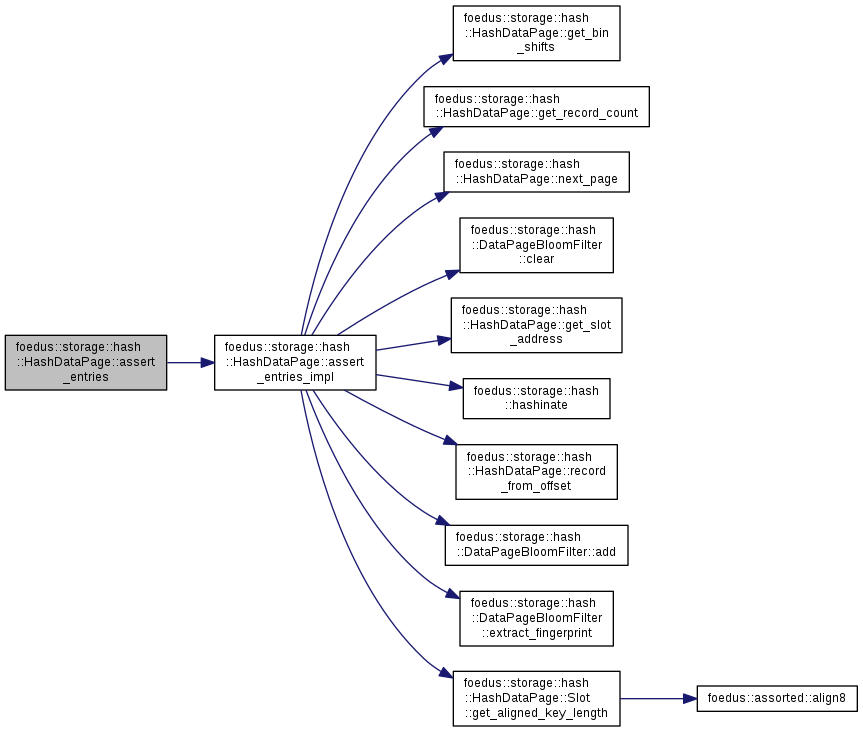

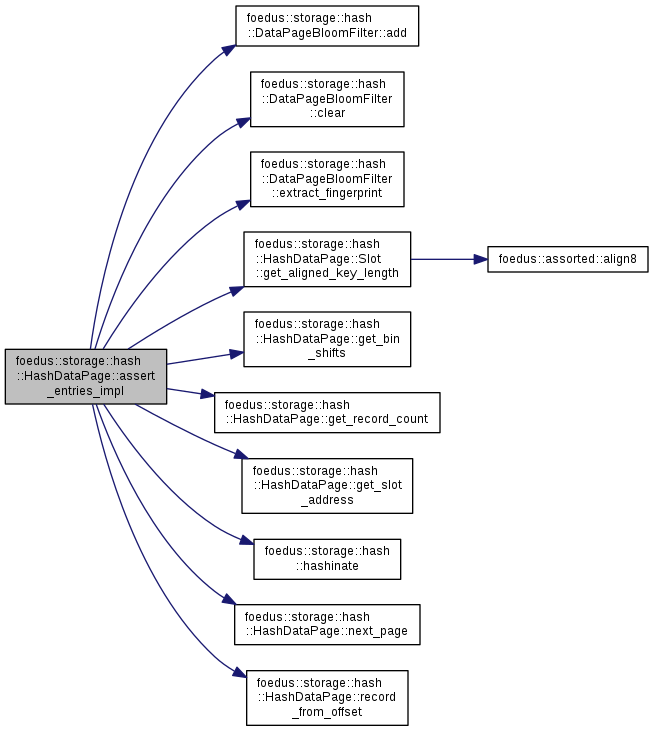

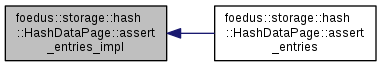

References assert_entries_impl().

| void foedus::storage::hash::HashDataPage::assert_entries_impl | ( | ) | const |

Defined in hash_page_debug.cpp.

Definition at line 89 of file hash_page_debug.cpp.

References foedus::storage::hash::DataPageBloomFilter::add(), ASSERT_ND, foedus::storage::hash::DataPageBloomFilter::clear(), foedus::storage::hash::DataPageBloomFilter::extract_fingerprint(), foedus::storage::hash::HashDataPage::Slot::get_aligned_key_length(), get_bin_shifts(), get_record_count(), get_slot_address(), foedus::storage::hash::HashDataPage::Slot::hash_, foedus::storage::hash::hashinate(), foedus::storage::hash::HashDataPage::Slot::key_length_, foedus::storage::hash::kHashDataPageHeaderSize, foedus::storage::kPageSize, next_page(), foedus::storage::hash::HashDataPage::Slot::offset_, foedus::storage::hash::HashDataPage::Slot::payload_length_, foedus::storage::hash::HashDataPage::Slot::physical_record_length_, record_from_offset(), foedus::storage::PageHeader::snapshot_, and foedus::storage::hash::DataPageBloomFilter::values_.

Referenced by assert_entries().

| uint16_t foedus::storage::hash::HashDataPage::available_space | ( | ) | const |

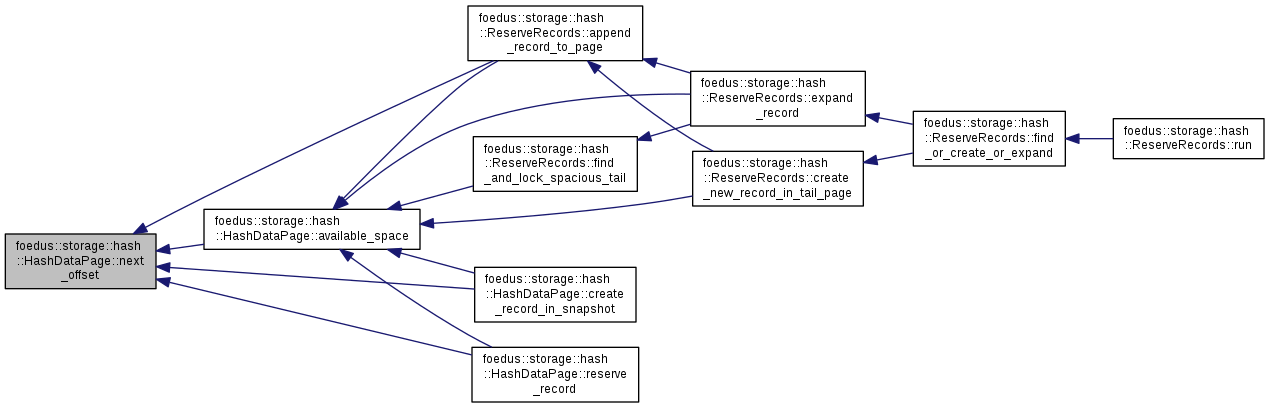

inline |

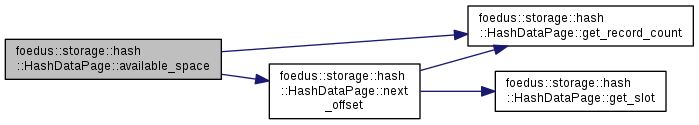

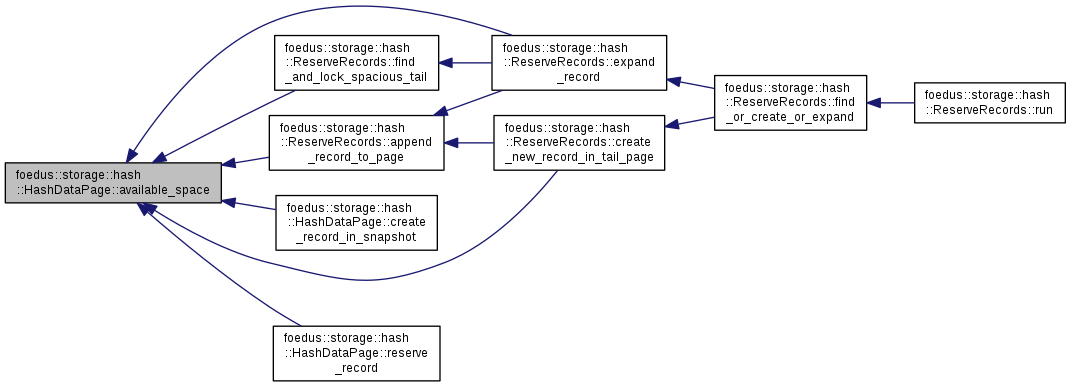

Returns usable space in bytes.

Definition at line 281 of file hash_page_impl.hpp.

References ASSERT_ND, get_record_count(), and next_offset().

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page(), foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), create_record_in_snapshot(), foedus::storage::hash::ReserveRecords::expand_record(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), and reserve_record().
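As a rough illustration of how the usable space could follow from the page layout, the sketch below subtracts the record bytes written so far (the next offset) and the slots allocated so far from the data region. This is a sketch under stated assumptions, not the library's code: the data region size (4096 minus a 256-byte header) is assumed, and the `space_*` names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint16_t kSpaceDataSize = 4096 - 256;  // assumed page size minus assumed header size
constexpr std::uint16_t kSpaceSlotSize = 32;          // slot size from the class description

// next_offset: where the next record's bytes would begin (end of the last record).
// record_count: number of slots already allocated at the tail of the page.
inline std::uint16_t space_available(std::uint16_t next_offset, std::uint16_t record_count) {
  const std::uint32_t consumed = static_cast<std::uint32_t>(next_offset) +
                                 static_cast<std::uint32_t>(record_count) * kSpaceSlotSize;
  assert(consumed <= kSpaceDataSize);
  return static_cast<std::uint16_t>(kSpaceDataSize - consumed);
}

// A new record needs room for its own bytes plus one additional slot.
inline bool space_fits(std::uint16_t next_offset, std::uint16_t record_count,
                       std::uint16_t record_bytes) {
  return space_available(next_offset, record_count) >=
         static_cast<std::uint32_t>(record_bytes) + kSpaceSlotSize;
}
```

Under these assumed sizes, an empty page reports 3840 usable bytes, and each inserted record reduces that figure by its physical length plus 32 bytes for its slot.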

| const DataPageBloomFilter& foedus::storage::hash::HashDataPage::bloom_filter | ( | ) | const |

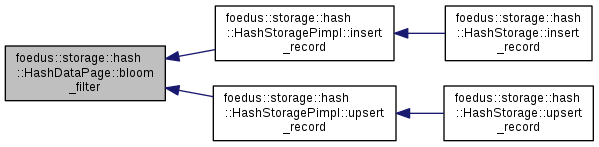

inline |

Definition at line 243 of file hash_page_impl.hpp.

Referenced by foedus::storage::hash::HashStoragePimpl::insert_record(), and foedus::storage::hash::HashStoragePimpl::upsert_record().

| DataPageBloomFilter& foedus::storage::hash::HashDataPage::bloom_filter | ( | ) | |

inline |

Definition at line 244 of file hash_page_impl.hpp.

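| bool foedus::storage::hash::HashDataPage::compare_slot_key | ( | DataPageSlotIndex | index, |

| HashValue | hash, | ||

| const void * | key, | ||

| uint16_t | key_length | ||

| ) | const |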

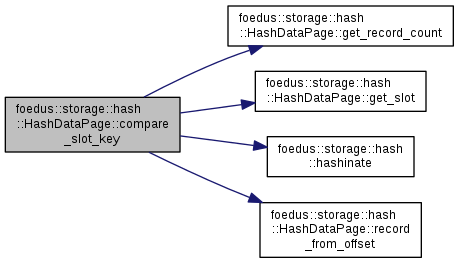

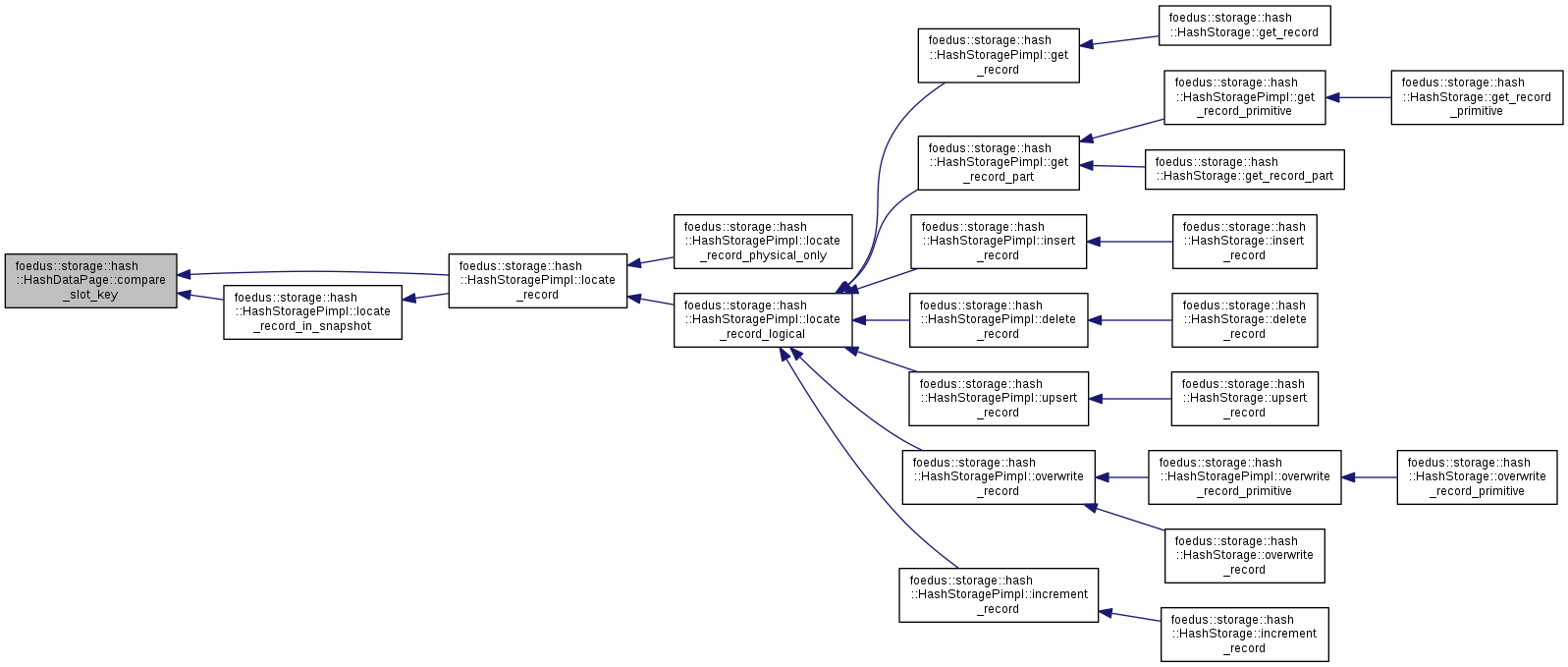

inline |

Returns whether the slot contains exactly the specified key.

Definition at line 396 of file hash_page_impl.hpp.

References ASSERT_ND, get_record_count(), get_slot(), foedus::storage::hash::HashDataPage::Slot::hash_, foedus::storage::hash::hashinate(), foedus::storage::hash::HashDataPage::Slot::key_length_, foedus::storage::hash::HashDataPage::Slot::offset_, and record_from_offset().

Referenced by foedus::storage::hash::HashStoragePimpl::locate_record(), and foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot().

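| void foedus::storage::hash::HashDataPage::create_record_in_snapshot | ( | xct::XctId | xct_id, |

| HashValue | hash, | ||

| const BloomFilterFingerprint & | fingerprint, | ||

| const void * | key, | ||

| uint16_t | key_length, | ||

| const void * | payload, | ||

| uint16_t | payload_length | ||

| ) |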

inline |

A simplified, efficient version for inserting an active record; it must be used only in snapshot pages.

| [in] | xct_id | XctId of the record. |

| [in] | hash | hash value of the key. |

| [in] | fingerprint | Bloom Filter fingerprint of the key. |

| [in] | key | full key. |

| [in] | key_length | full key length. |

| [in] | payload | the payload of the new record |

| [in] | payload_length | length of payload |

When constructing snapshot pages, no concurrency control is needed, so all restrictions can be loosened. This method is therefore an optimized variant for snapshot pages; it is inlined for efficiency.

Definition at line 480 of file hash_page_impl.hpp.

References foedus::storage::hash::DataPageBloomFilter::add(), foedus::assorted::align8(), ASSERT_ND, ASSUME_ALIGNED, available_space(), get_record_count(), get_slot(), foedus::storage::hash::HashDataPage::Slot::hash_, foedus::storage::PageHeader::increment_key_count(), foedus::storage::hash::HashDataPage::Slot::key_length_, foedus::storage::kPageSize, next_offset(), foedus::storage::hash::HashDataPage::Slot::offset_, foedus::storage::hash::HashDataPage::Slot::payload_length_, foedus::storage::hash::HashDataPage::Slot::physical_record_length_, record_from_offset(), required_space(), foedus::storage::PageHeader::snapshot_, foedus::storage::hash::HashDataPage::Slot::tid_, and foedus::xct::RwLockableXctId::xct_id_.

| HashBin foedus::storage::hash::HashDataPage::get_bin | ( | ) | const |

inline |

Definition at line 412 of file hash_page_impl.hpp.

Referenced by foedus::storage::hash::ReserveRecords::create_new_tail_page(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::hash_data_volatile_page_init(), initialize_volatile_page(), foedus::storage::hash::HashStoragePimpl::locate_bin(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), foedus::storage::hash::operator<<(), release_pages_recursive(), foedus::storage::hash::HashStoragePimpl::verify_single_thread_data(), and foedus::storage::hash::HashStoragePimpl::verify_single_thread_intermediate().

| uint8_t foedus::storage::hash::HashDataPage::get_bin_shifts | ( | ) | const |

inline |

Definition at line 422 of file hash_page_impl.hpp.

References foedus::storage::PageHeader::masstree_layer_.

Referenced by assert_entries_impl(), foedus::storage::hash::ReserveRecords::create_new_tail_page(), and initialize_volatile_page().

| Slot& foedus::storage::hash::HashDataPage::get_new_slot | ( | DataPageSlotIndex | record | ) | |

inline |

Definition at line 254 of file hash_page_impl.hpp.

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page().

| uint16_t foedus::storage::hash::HashDataPage::get_record_count | ( | ) | const |

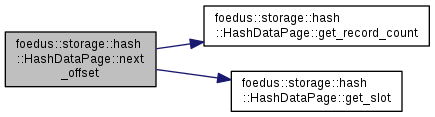

inline |

Definition at line 246 of file hash_page_impl.hpp.

References foedus::storage::PageHeader::key_count_.

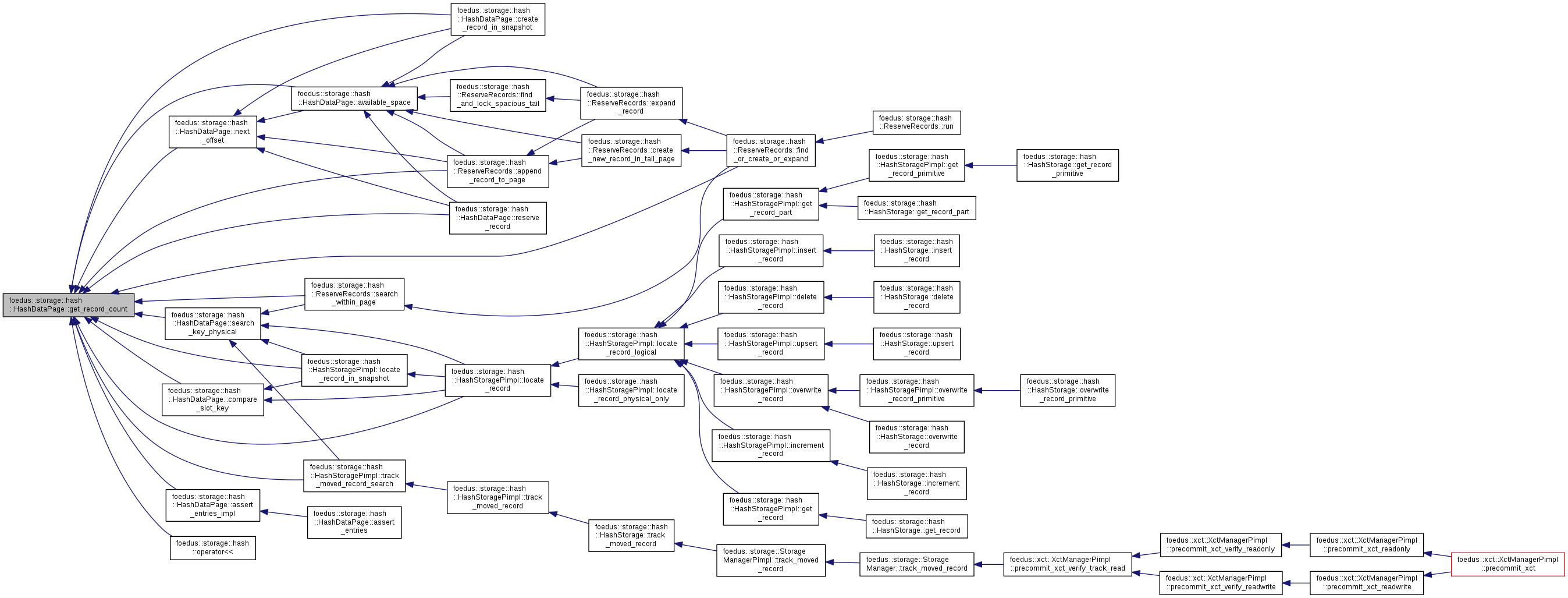

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page(), assert_entries_impl(), available_space(), compare_slot_key(), create_record_in_snapshot(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), next_offset(), foedus::storage::hash::operator<<(), reserve_record(), search_key_physical(), foedus::storage::hash::ReserveRecords::search_within_page(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().

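| const Slot& foedus::storage::hash::HashDataPage::get_slot | ( | DataPageSlotIndex | record | ) | const |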

inline |

Definition at line 248 of file hash_page_impl.hpp.

Referenced by compare_slot_key(), create_record_in_snapshot(), foedus::storage::hash::ReserveRecords::expand_record(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), next_offset(), reserve_record(), and search_key_physical().

| Slot& foedus::storage::hash::HashDataPage::get_slot | ( | DataPageSlotIndex | record | ) | |

inline |

Definition at line 251 of file hash_page_impl.hpp.

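| const Slot* foedus::storage::hash::HashDataPage::get_slot_address | ( | DataPageSlotIndex | record | ) | const |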

inline |

Same as &get_slot(), but more explicit and easier to understand and maintain.

Definition at line 259 of file hash_page_impl.hpp.

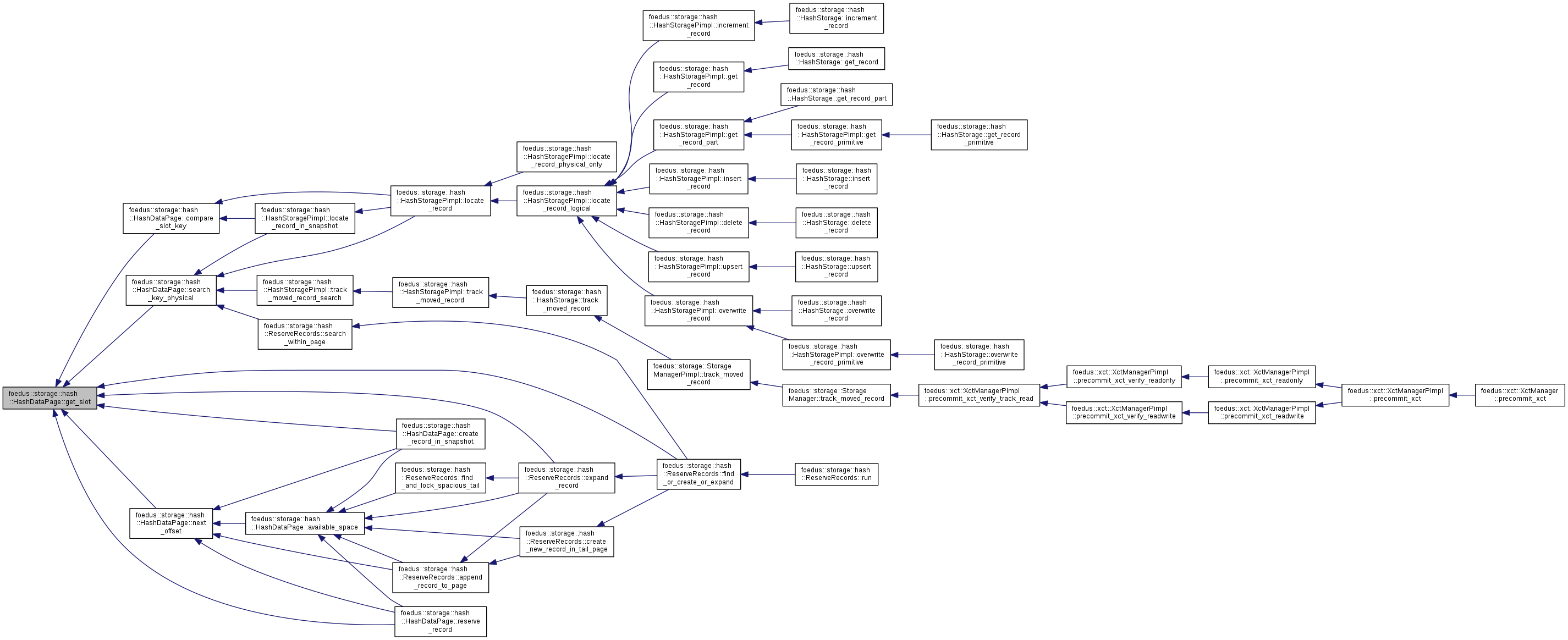

Referenced by assert_entries_impl(), foedus::storage::hash::operator<<(), foedus::storage::hash::RecordLocation::populate_logical(), foedus::storage::hash::RecordLocation::populate_physical(), foedus::storage::hash::HashStoragePimpl::register_record_write_log(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().

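| Slot* foedus::storage::hash::HashDataPage::get_slot_address | ( | DataPageSlotIndex | record | ) | |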

inline |

Definition at line 262 of file hash_page_impl.hpp.

| SnapshotPagePointer foedus::storage::hash::HashDataPage::get_snapshot_page_id | ( | ) | const |

inline |

Definition at line 233 of file hash_page_impl.hpp.

References ASSERT_ND, foedus::storage::PageHeader::page_id_, and foedus::storage::PageHeader::snapshot_.

| VolatilePagePointer foedus::storage::hash::HashDataPage::get_volatile_page_id | ( | ) | const |

inline |

Definition at line 229 of file hash_page_impl.hpp.

References ASSERT_ND, foedus::storage::PageHeader::page_id_, and foedus::storage::PageHeader::snapshot_.

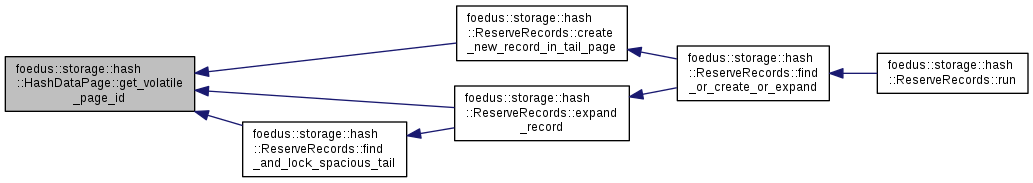

Referenced by foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), foedus::storage::hash::ReserveRecords::expand_record(), and foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail().

| PageHeader& foedus::storage::hash::HashDataPage::header | ( | ) | |

inline |

Definition at line 225 of file hash_page_impl.hpp.

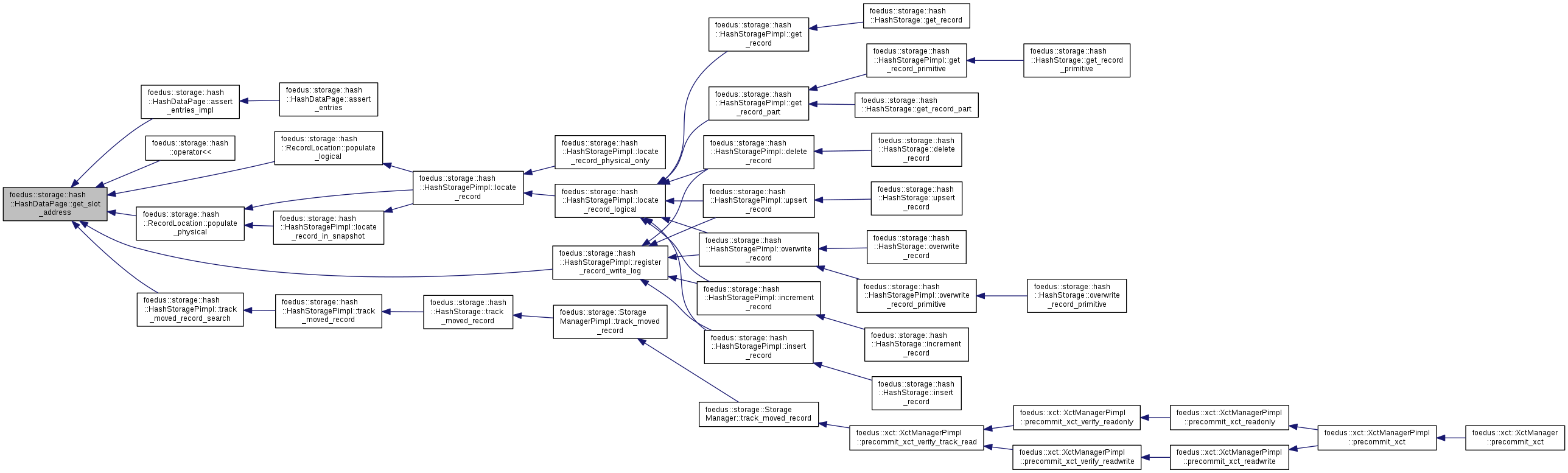

Referenced by foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), foedus::storage::hash::ReserveRecords::create_new_tail_page(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::HashStoragePimpl::hcc_reset_all_temperature_stat_data(), foedus::storage::hash::HashStoragePimpl::insert_record(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), foedus::storage::hash::operator<<(), foedus::storage::hash::HashIntermediatePage::release_pages_recursive(), release_pages_recursive(), foedus::storage::hash::resolve_data_impl(), foedus::storage::hash::ReserveRecords::search_within_page(), foedus::storage::hash::HashStoragePimpl::track_moved_record_search(), and foedus::storage::hash::HashStoragePimpl::upsert_record().

| const PageHeader& foedus::storage::hash::HashDataPage::header | ( | ) | const |

inline |

Definition at line 226 of file hash_page_impl.hpp.

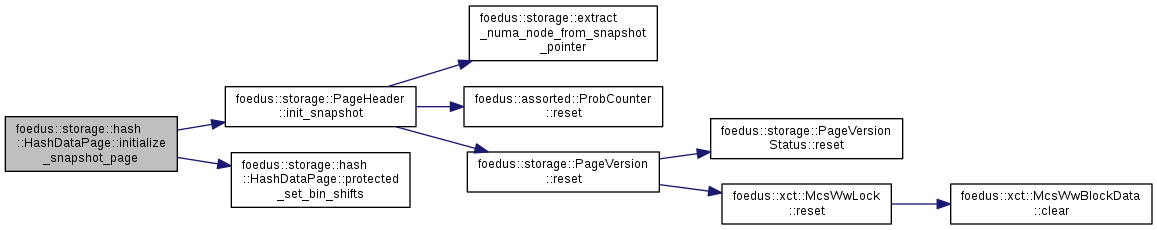

| void foedus::storage::hash::HashDataPage::initialize_snapshot_page | ( | StorageId | storage_id, |

| SnapshotPagePointer | page_id, | ||

| HashBin | bin, | ||

| uint8_t | bin_bits, | ||

| uint8_t | bin_shifts | ||

| ) |

Definition at line 93 of file hash_page_impl.cpp.

References ASSERT_ND, foedus::storage::PageHeader::init_snapshot(), foedus::storage::kHashDataPageType, foedus::storage::kPageSize, and protected_set_bin_shifts().

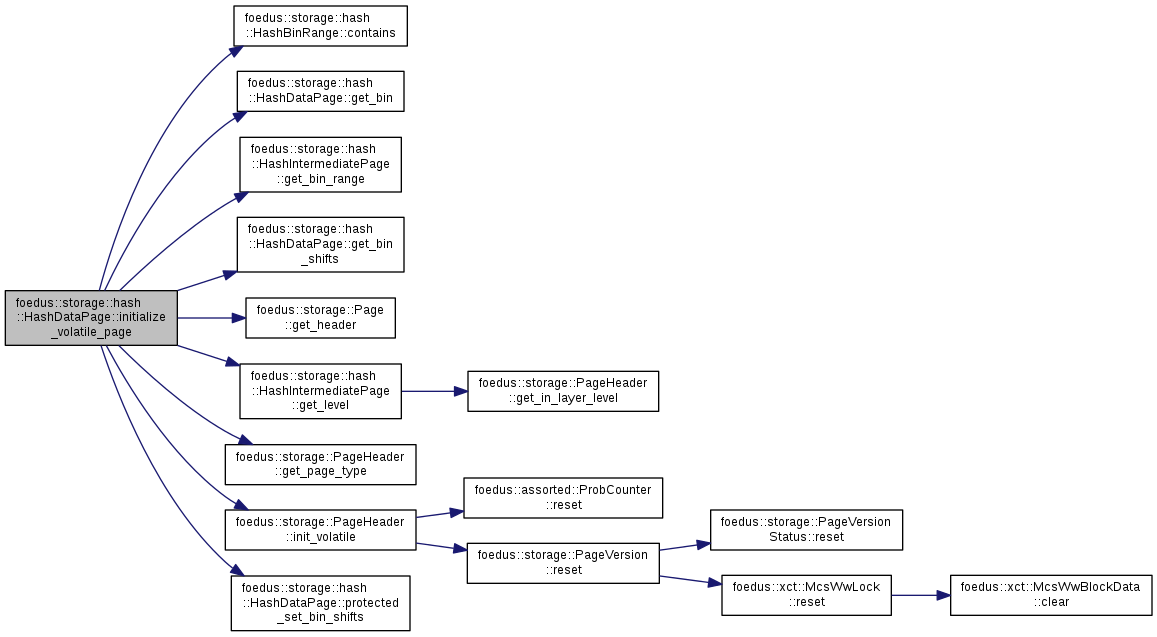

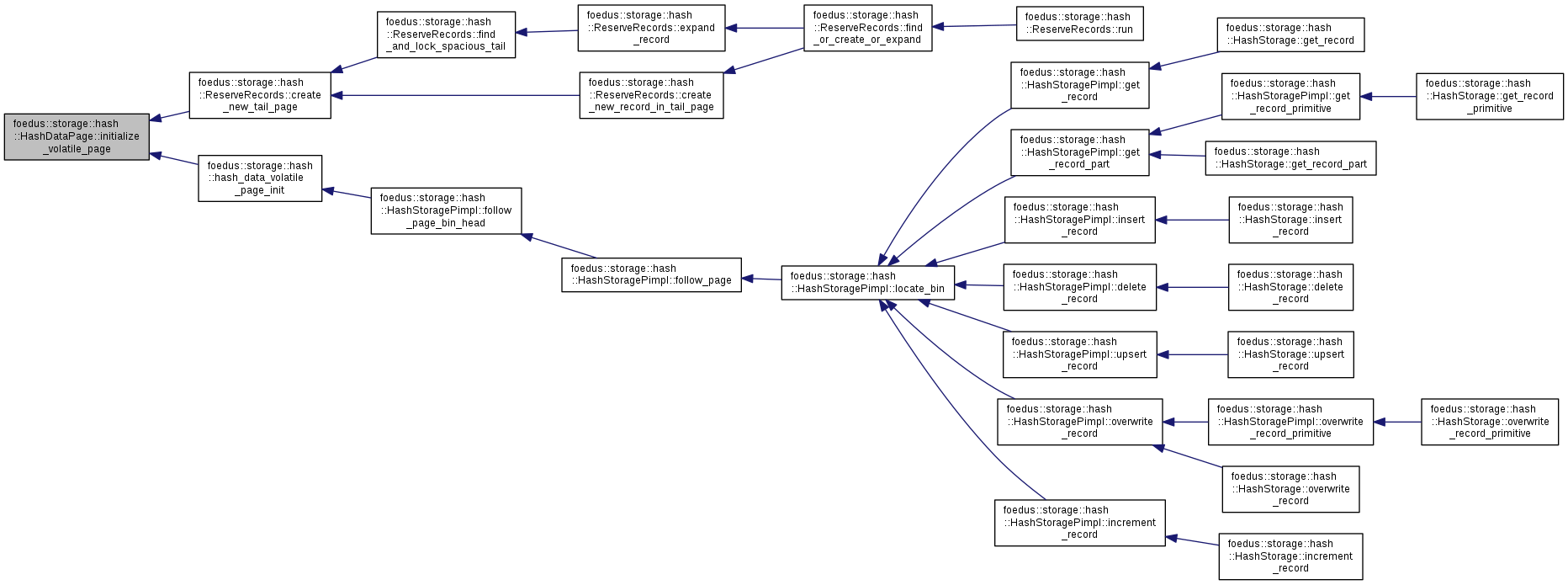

| void foedus::storage::hash::HashDataPage::initialize_volatile_page | ( | StorageId | storage_id, |

| VolatilePagePointer | page_id, | ||

| const Page * | parent, | ||

| HashBin | bin, | ||

| uint8_t | bin_shifts | ||

| ) |

Definition at line 70 of file hash_page_impl.cpp.

References ASSERT_ND, foedus::storage::hash::HashBinRange::contains(), get_bin(), foedus::storage::hash::HashIntermediatePage::get_bin_range(), get_bin_shifts(), foedus::storage::Page::get_header(), foedus::storage::hash::HashIntermediatePage::get_level(), foedus::storage::PageHeader::get_page_type(), foedus::storage::PageHeader::init_volatile(), foedus::storage::kHashDataPageType, foedus::storage::kHashIntermediatePageType, foedus::storage::kPageSize, and protected_set_bin_shifts().

Referenced by foedus::storage::hash::ReserveRecords::create_new_tail_page(), and foedus::storage::hash::hash_data_volatile_page_init().

| bool foedus::storage::hash::HashDataPage::is_locked | ( | ) | const |

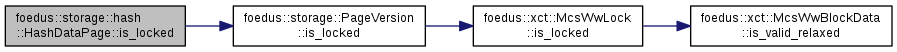

inline |

Definition at line 227 of file hash_page_impl.hpp.

References foedus::storage::PageVersion::is_locked(), and foedus::storage::PageHeader::page_version_.

Referenced by foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), foedus::storage::hash::ReserveRecords::create_new_tail_page(), foedus::storage::hash::ReserveRecords::expand_record(), and foedus::storage::hash::ReserveRecords::find_or_create_or_expand().

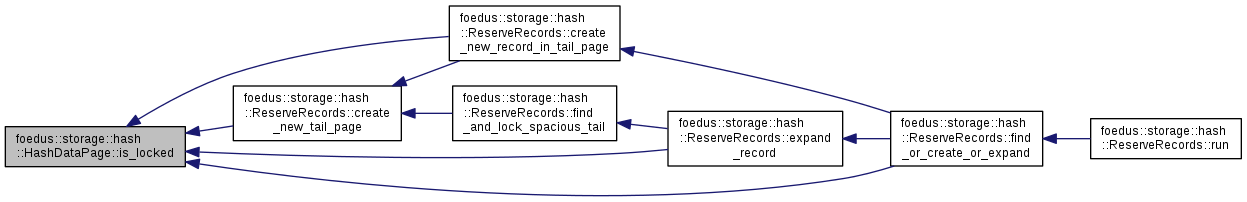

| uint16_t foedus::storage::hash::HashDataPage::next_offset | ( | ) | const |

inline |

Returns the offset of the next record.

Definition at line 291 of file hash_page_impl.hpp.

References ASSERT_ND, get_record_count(), get_slot(), foedus::storage::hash::HashDataPage::Slot::offset_, and foedus::storage::hash::HashDataPage::Slot::physical_record_length_.

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page(), available_space(), create_record_in_snapshot(), and reserve_record().

| const DualPagePointer& foedus::storage::hash::HashDataPage::next_page | ( | ) | const |

inline |

Definition at line 238 of file hash_page_impl.hpp.

Referenced by assert_entries_impl(), foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), foedus::storage::hash::ReserveRecords::find_or_create_or_expand(), foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::operator<<(), and foedus::storage::hash::ReserveRecords::search_within_page().

| DualPagePointer& foedus::storage::hash::HashDataPage::next_page | ( | ) | |

inline |

Definition at line 239 of file hash_page_impl.hpp.

| const DualPagePointer* foedus::storage::hash::HashDataPage::next_page_address | ( | ) | const |

inline |

Definition at line 240 of file hash_page_impl.hpp.

Referenced by foedus::storage::hash::HashStoragePimpl::follow_page_bin_head(), foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().

| DualPagePointer* foedus::storage::hash::HashDataPage::next_page_address | ( | ) | |

inline |

Definition at line 241 of file hash_page_impl.hpp.

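| HashDataPage& foedus::storage::hash::HashDataPage::operator= | ( | const HashDataPage & | other | ) | |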

delete |

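| void foedus::storage::hash::HashDataPage::protected_set_bin_shifts | ( | uint8_t | bin_shifts | ) | |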

inline |

This method should be called only during page creation.

bin_shifts is immutable after that. We currently reuse header_.masstree_layer_ (which is not applicable to hash storage) to store bin_shifts and save space.

Definition at line 421 of file hash_page_impl.hpp.

References foedus::storage::PageHeader::masstree_layer_.

Referenced by initialize_snapshot_page(), and initialize_volatile_page().

| char* foedus::storage::hash::HashDataPage::record_from_offset | ( | uint16_t | offset | ) | |

inline |

Definition at line 275 of file hash_page_impl.hpp.

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page(), assert_entries_impl(), compare_slot_key(), create_record_in_snapshot(), foedus::storage::hash::operator<<(), foedus::storage::hash::RecordLocation::populate_logical(), foedus::storage::hash::RecordLocation::populate_physical(), reserve_record(), search_key_physical(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().

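| const char* foedus::storage::hash::HashDataPage::record_from_offset | ( | uint16_t | offset | ) | const |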

inline |

Definition at line 276 of file hash_page_impl.hpp.

| void foedus::storage::hash::HashDataPage::release_pages_recursive | ( | const memory::GlobalVolatilePageResolver & | page_resolver, |

| memory::PageReleaseBatch * | batch | ||

| ) |

Definition at line 329 of file hash_page_impl.cpp.

References ASSERT_ND, foedus::storage::VolatilePagePointer::clear(), get_bin(), foedus::storage::PageHeader::get_in_layer_level(), header(), foedus::storage::VolatilePagePointer::is_null(), foedus::storage::PageHeader::page_id_, foedus::memory::PageReleaseBatch::release(), release_pages_recursive(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::DualPagePointer::volatile_pointer_, and foedus::storage::VolatilePagePointer::word.

Referenced by foedus::storage::hash::HashIntermediatePage::release_pages_recursive(), and release_pages_recursive().

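| static uint16_t foedus::storage::hash::HashDataPage::required_space | ( | uint16_t | key_length, |

| uint16_t | payload_length | ||

| ) |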

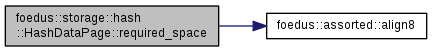

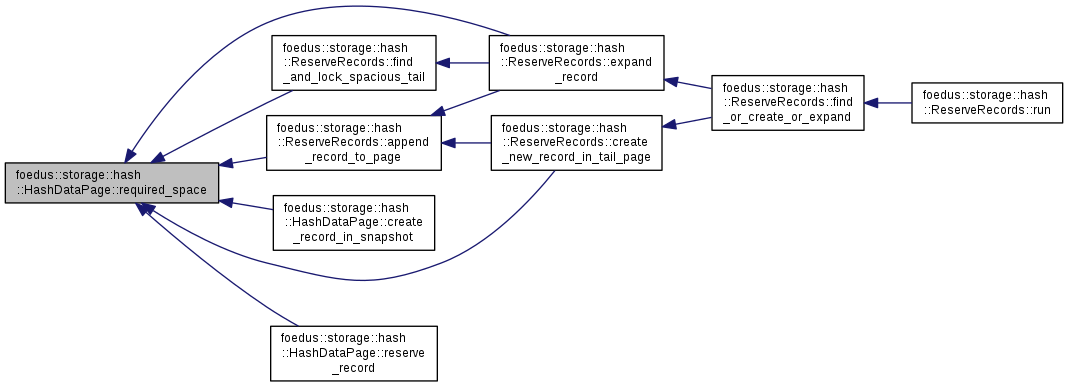

inline static |

Returns physical_record_length_ for a new record.

Definition at line 302 of file hash_page_impl.hpp.

References foedus::assorted::align8().

Referenced by foedus::storage::hash::ReserveRecords::append_record_to_page(), foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page(), create_record_in_snapshot(), foedus::storage::hash::ReserveRecords::expand_record(), foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail(), and reserve_record().
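The reference to align8() suggests that the physical record length is built from 8-byte-aligned key and payload lengths. The following is a minimal sketch of that idea, assuming the result is simply the sum of the two aligned lengths; the exact formula is an assumption, and both function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Round a length up to the next multiple of 8.
constexpr std::uint16_t sketch_align8(std::uint16_t value) {
  return static_cast<std::uint16_t>((value + 7u) & ~7u);
}

// Assumed formula: aligned key bytes followed by aligned payload bytes.
constexpr std::uint16_t sketch_required_space(std::uint16_t key_length,
                                              std::uint16_t payload_length) {
  return static_cast<std::uint16_t>(sketch_align8(key_length) + sketch_align8(payload_length));
}
```

Aligning both parts keeps every record, and the payload within it, on an 8-byte boundary, at the cost of up to 7 bytes of padding per part.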

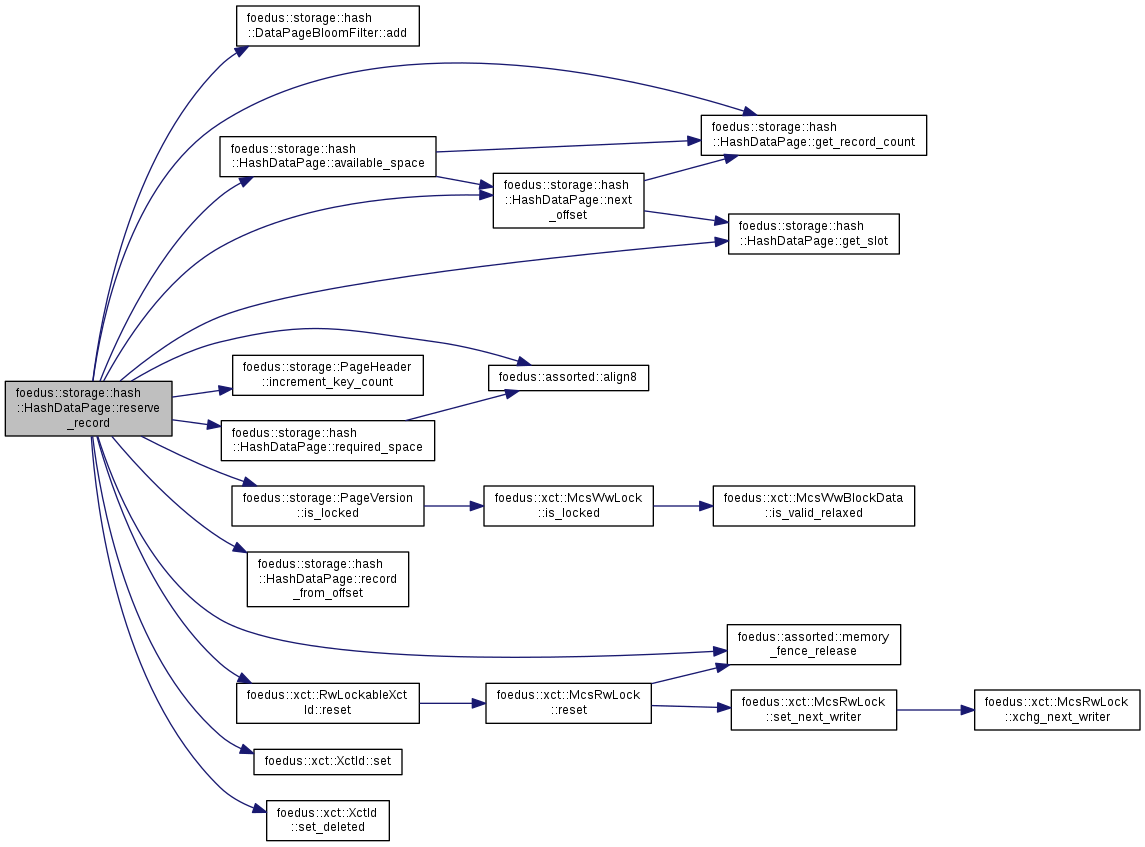

| DataPageSlotIndex foedus::storage::hash::HashDataPage::reserve_record | ( | HashValue | hash, |

| const BloomFilterFingerprint & | fingerprint, | ||

| const void * | key, | ||

| uint16_t | key_length, | ||

| uint16_t | payload_length | ||

| ) |

A system transaction that creates a logically deleted record in this page for the given key.

| [in] | hash | hash value of the key. |

| [in] | fingerprint | Bloom Filter fingerprint of the key. |

| [in] | key | full key. |

| [in] | key_length | full key length. |

| [in] | payload_length | the new record can contain at least this length of payload |

This method also adds the fingerprint to the bloom filter.

Definition at line 170 of file hash_page_impl.cpp.

References foedus::storage::hash::DataPageBloomFilter::add(), foedus::assorted::align8(), ASSERT_ND, available_space(), get_record_count(), get_slot(), foedus::storage::hash::HashDataPage::Slot::hash_, foedus::storage::PageHeader::increment_key_count(), foedus::storage::PageVersion::is_locked(), foedus::Epoch::kEpochInitialCurrent, foedus::storage::hash::HashDataPage::Slot::key_length_, foedus::assorted::memory_fence_release(), next_offset(), foedus::storage::hash::HashDataPage::Slot::offset_, foedus::storage::PageHeader::page_version_, foedus::storage::hash::HashDataPage::Slot::payload_length_, foedus::storage::hash::HashDataPage::Slot::physical_record_length_, record_from_offset(), required_space(), foedus::xct::RwLockableXctId::reset(), foedus::xct::XctId::set(), foedus::xct::XctId::set_deleted(), foedus::storage::hash::HashDataPage::Slot::tid_, and foedus::xct::RwLockableXctId::xct_id_.
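The reservation steps described above (check space, copy the key, fill a slot marked as deleted, update the bloom filter, then publish the new record count with release semantics) can be sketched roughly as follows. This is a simplified model under stated assumptions, not the FOEDUS implementation: slots live in a separate array instead of the page tail, the bloom filter is a single 64-bit word, the sizes are assumed, the caller is assumed to hold the page lock, and all names are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <cstring>

constexpr std::uint16_t kReserveDataSize = 3840;  // assumed: page size minus header size
constexpr std::uint16_t kReserveSlotSize = 32;    // slot size from the class description
constexpr std::uint16_t kReserveMaxSlots = kReserveDataSize / kReserveSlotSize;
constexpr std::uint16_t kReserveFailed = 0xFFFF;  // hypothetical "no room" marker

constexpr std::uint32_t reserve_align8(std::uint32_t v) { return (v + 7u) & ~7u; }

struct ReservedSlot {
  std::uint64_t hash;
  std::uint16_t offset;
  std::uint16_t key_length;
  std::uint16_t payload_length;
  std::uint16_t physical_record_length;
  bool deleted;  // stands in for the "deleted" flag of the record's transaction ID
};

struct ReservePageSketch {
  std::atomic<std::uint16_t> record_count{0};
  std::uint16_t next_offset = 0;
  std::uint64_t bloom_bits = 0;          // toy one-word bloom filter
  char data[kReserveDataSize];           // record bytes, growing forward
  ReservedSlot slots[kReserveMaxSlots];  // kept separate here for simplicity

  // Assumes the caller holds the page lock, so there is only one writer.
  std::uint16_t reserve(std::uint64_t hash, const void* key,
                        std::uint16_t key_length, std::uint16_t payload_length) {
    const std::uint16_t count = record_count.load(std::memory_order_relaxed);
    const std::uint32_t needed = reserve_align8(key_length) + reserve_align8(payload_length);
    const std::uint32_t consumed =
        next_offset + static_cast<std::uint32_t>(count) * kReserveSlotSize;
    if (consumed + needed + kReserveSlotSize > kReserveDataSize) {
      return kReserveFailed;  // not enough room for the record plus its slot
    }
    std::memcpy(data + next_offset, key, key_length);
    ReservedSlot& slot = slots[count];
    slot.hash = hash;
    slot.offset = next_offset;
    slot.key_length = key_length;
    slot.payload_length = payload_length;
    slot.physical_record_length = static_cast<std::uint16_t>(needed);
    slot.deleted = true;  // the record exists physically but is logically deleted
    bloom_bits |= 1ULL << (hash & 63);
    next_offset = static_cast<std::uint16_t>(next_offset + needed);
    // Publish last: a reader that observes the new count also observes the slot contents.
    record_count.store(static_cast<std::uint16_t>(count + 1), std::memory_order_release);
    return count;
  }
};
```

Creating the record as logically deleted lets a later user transaction turn it into a live record through ordinary concurrency control, while the physical allocation itself stays a small, separate step.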

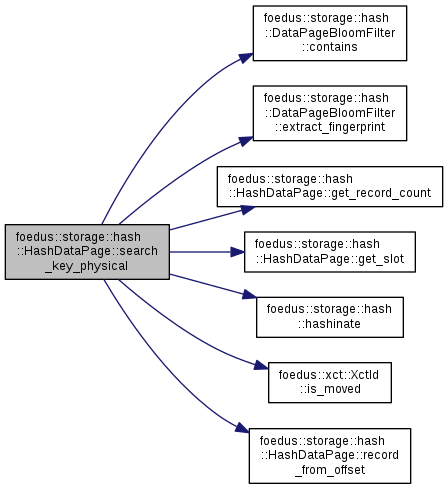

| DataPageSlotIndex foedus::storage::hash::HashDataPage::search_key_physical | ( | HashValue | hash, |

| const BloomFilterFingerprint & | fingerprint, | ||

| const void * | key, | ||

| KeyLength | key_length, | ||

| DataPageSlotIndex | record_count, | ||

| DataPageSlotIndex | check_from = 0 |

||

| ) | const |

Searches for a physical slot that contains exactly the given key.

| [in] | hash | hash value of the key. |

| [in] | fingerprint | Bloom Filter fingerprint of the key. |

| [in] | key | full key. |

| [in] | key_length | full key length. |

| [in] | record_count | how many records this page supposedly contains. See below. |

| [in] | check_from | The record index from which to start searching. If you know that the records before this index definitely do not contain the key, passing it speeds up the search. The caller must guarantee that none of the records before this index has the key, or that those records have been moved. |

If you acquired record_count in a protected way (after taking a page lock that you still hold), this method is an exact search. Otherwise, a concurrent thread might be inserting right now, so this method can return a false negative (which is why you should take the PageVersion for a search miss). False positives, however, are not possible.

This method searches for a physical slot no matter whether the record is logically deleted. However, "moved" records are completely ignored.

This method is physical-only. The returned record might be concurrently modified or moved. The only contract is that the returned slot (if not kSlotNotFound) points to a physical record whose key is exactly the same as the given one. This is trivially guaranteed because the key and hash of physical records in our hash pages are immutable.

Definition at line 106 of file hash_page_impl.cpp.

References ASSERT_ND, foedus::storage::hash::DataPageBloomFilter::contains(), foedus::storage::hash::DataPageBloomFilter::extract_fingerprint(), get_record_count(), get_slot(), foedus::storage::hash::HashDataPage::Slot::hash_, foedus::storage::hash::hashinate(), foedus::xct::XctId::is_moved(), foedus::storage::hash::HashDataPage::Slot::key_length_, foedus::storage::hash::kSlotNotFound, LIKELY, foedus::storage::hash::HashDataPage::Slot::offset_, record_from_offset(), foedus::storage::hash::HashDataPage::Slot::tid_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by foedus::storage::hash::HashStoragePimpl::locate_record(), foedus::storage::hash::HashStoragePimpl::locate_record_in_snapshot(), foedus::storage::hash::ReserveRecords::search_within_page(), and foedus::storage::hash::HashStoragePimpl::track_moved_record_search().
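The search contract above can be sketched with a simplified in-memory model: reject early if the bloom filter rules the key out, then scan slots from check_from up to the caller-supplied record_count, skipping moved records and comparing hash, length, and key bytes. This is only a sketch under stated assumptions; the one-word bloom filter, the vector-based storage, and every `Search*` name are hypothetical and do not reflect the real page format.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

constexpr std::uint16_t kSearchSlotNotFound = 0xFFFF;  // stand-in for kSlotNotFound

struct SearchRecord {
  std::uint64_t hash;
  std::string key;
  bool moved;
};

struct SearchPageSketch {
  std::uint64_t bloom_bits = 0;  // toy one-word bloom filter
  std::vector<SearchRecord> records;

  // Hypothetical fingerprint: two bit positions derived from the hash.
  static std::uint64_t fingerprint(std::uint64_t hash) {
    return (1ULL << (hash & 63)) | (1ULL << ((hash >> 6) & 63));
  }

  void add(std::uint64_t hash, const std::string& key) {
    bloom_bits |= fingerprint(hash);
    records.push_back(SearchRecord{hash, key, false});
  }

  std::uint16_t search(std::uint64_t hash, const void* key, std::uint16_t key_length,
                       std::uint16_t record_count, std::uint16_t check_from = 0) const {
    assert(record_count <= records.size());
    const std::uint64_t fp = fingerprint(hash);
    if ((bloom_bits & fp) != fp) {
      return kSearchSlotNotFound;  // the bloom filter proves the key is absent
    }
    for (std::uint16_t i = check_from; i < record_count; ++i) {
      const SearchRecord& r = records[i];
      if (r.moved) continue;  // moved records are ignored entirely
      if (r.hash != hash || r.key.size() != key_length) continue;
      if (std::memcmp(r.key.data(), key, key_length) == 0) return i;
    }
    return kSearchSlotNotFound;
  }
};
```

Passing a stale record_count models the false-negative case: a record appended after the count was read is simply never visited, whereas a returned index always refers to a record whose immutable key matches.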

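| DataPageSlotIndex foedus::storage::hash::HashDataPage::to_slot_index | ( | const Slot * | slot | ) | const |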

inline |

Definition at line 266 of file hash_page_impl.hpp.

References ASSERT_ND.

| std::ostream& operator<< | ( | std::ostream & | o, |

| const HashDataPage & | v | ||

| ) |

friend |

Defined in hash_page_debug.cpp.

Definition at line 52 of file hash_page_debug.cpp.

| friend struct ReserveRecords |

friend |

Definition at line 157 of file hash_page_impl.hpp.