
libfoedus-core

FOEDUS Core Library


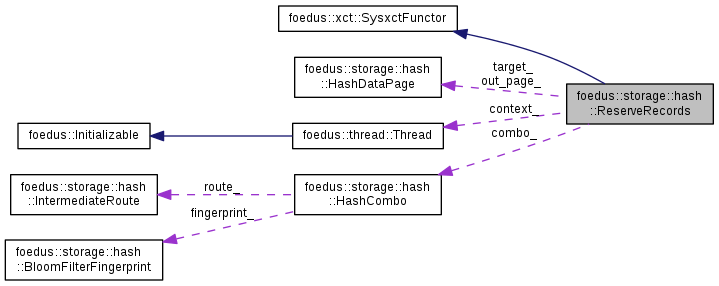

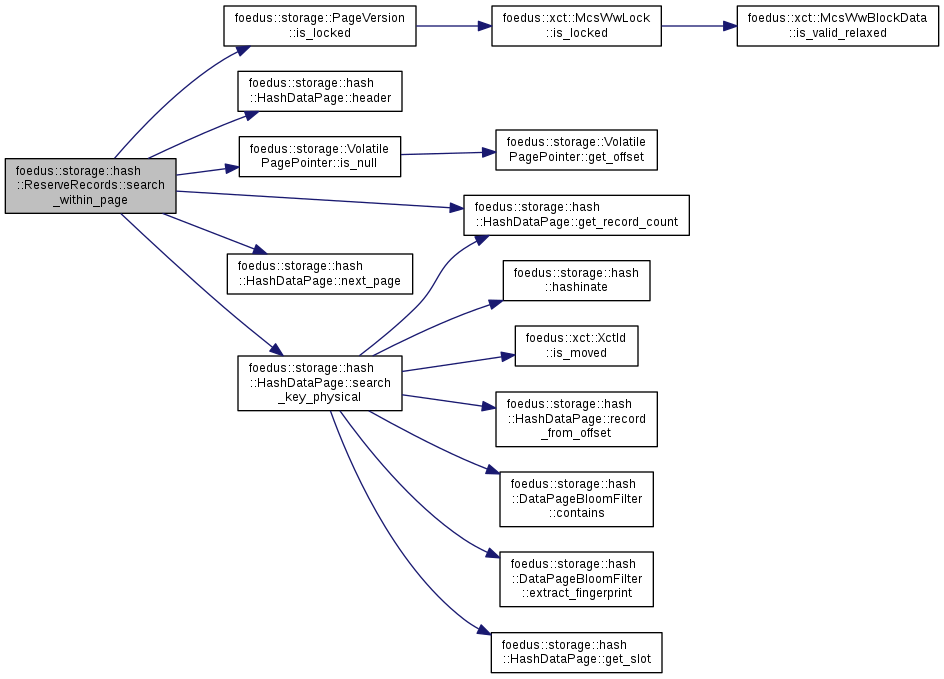


A system transaction to reserve a physical record(s) in a hash data page.

A record insertion happens in two steps:

This system transaction does the former.

This does nothing and returns kErrorCodeOk in the following cases:

In all other cases, this sysxct creates or finds the record. In other words, this sysxct guarantees success as long as it returns kErrorCodeOk.

Locks taken in this sysxct (in order of taking):

Note, however, that this order might not match address order (the address order might be tail to head), so we might hit a false deadlock-abort. However, as long as the first lock, on the target_ page, is unconditional, all locks after it should be race-free, so every try-lock should succeed. We might want to specify a slightly larger max_retries (5?) for this reason.
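The locking pattern described above (one unconditional lock on the target page, then try-locks on later pages, with a bounded number of whole-attempt retries) can be illustrated with a small, self-contained sketch. It is not FOEDUS code: `SketchPage`, `lock_chain_with_retries`, and `unlock_chain` are hypothetical names, and an atomic flag stands in for FOEDUS's page locks. In FOEDUS itself, retrying is handled by the sysxct machinery rather than by a helper like this.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical stand-in for a page lock (not the FOEDUS lock type).
struct SketchPage {
  std::atomic<bool> locked{false};
  bool try_lock() {
    bool expected = false;
    return locked.compare_exchange_strong(expected, true, std::memory_order_acquire);
  }
  void lock() {
    while (!try_lock()) std::this_thread::yield();  // unconditional: wait as long as needed
  }
  void unlock() { locked.store(false, std::memory_order_release); }
};

// Hypothetical helper. chain[0] is the target page; later entries are pages
// further down the hash bin. Only the first lock blocks; the rest are
// try-locks because chain order may differ from address order. If any
// try-lock fails, every lock is released and the attempt is repeated; the
// function gives up after 1 + max_retries attempts.
bool lock_chain_with_retries(const std::vector<SketchPage*>& chain, int max_retries) {
  assert(!chain.empty());
  for (int attempt = 0; attempt <= max_retries; ++attempt) {
    chain[0]->lock();
    std::size_t taken = 1;
    while (taken < chain.size() && chain[taken]->try_lock()) ++taken;
    if (taken == chain.size()) return true;      // caller now holds every lock
    while (taken > 0) chain[--taken]->unlock();  // abort: release in reverse order
  }
  return false;
}

// Releases all locks taken by a successful lock_chain_with_retries().
void unlock_chain(const std::vector<SketchPage*>& chain) {
  for (std::size_t i = chain.size(); i > 0; --i) chain[i - 1]->unlock();
}
```

A failed try-lock here is exactly the "false deadlock-abort" case: nothing is wrong, but the attempt starts over, which is why a slightly larger retry budget is cheap insurance.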

Currently, this sysxct installs only one physical record at a time. TASK(Hideaki): batching several records would probably help.

Definition at line 73 of file hash_reserve_impl.hpp.

#include <hash_reserve_impl.hpp>

Public Member Functions | |

| ReserveRecords (thread::Thread *context, HashDataPage *target, const void *key, KeyLength key_length, const HashCombo &combo, PayloadLength payload_count, PayloadLength aggressive_payload_count_hint, DataPageSlotIndex hint_check_from) | |

| virtual ErrorCode | run (xct::SysxctWorkspace *sysxct_workspace) override |

| Execute the system transaction. More... | |

| ErrorCode | find_or_create_or_expand (xct::SysxctWorkspace *sysxct_workspace, HashDataPage *page, DataPageSlotIndex examined_records) |

| The main loop (well, recursion actually). More... | |

| ErrorCode | expand_record (xct::SysxctWorkspace *sysxct_workspace, HashDataPage *page, DataPageSlotIndex index) |

| ErrorCode | find_and_lock_spacious_tail (xct::SysxctWorkspace *sysxct_workspace, HashDataPage *from_page, HashDataPage **tail) |

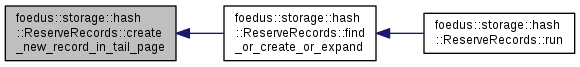

| ErrorCode | create_new_record_in_tail_page (HashDataPage *tail) |

| Installs it as a fresh-new physical record, assuming the given page is the tail and already locked. More... | |

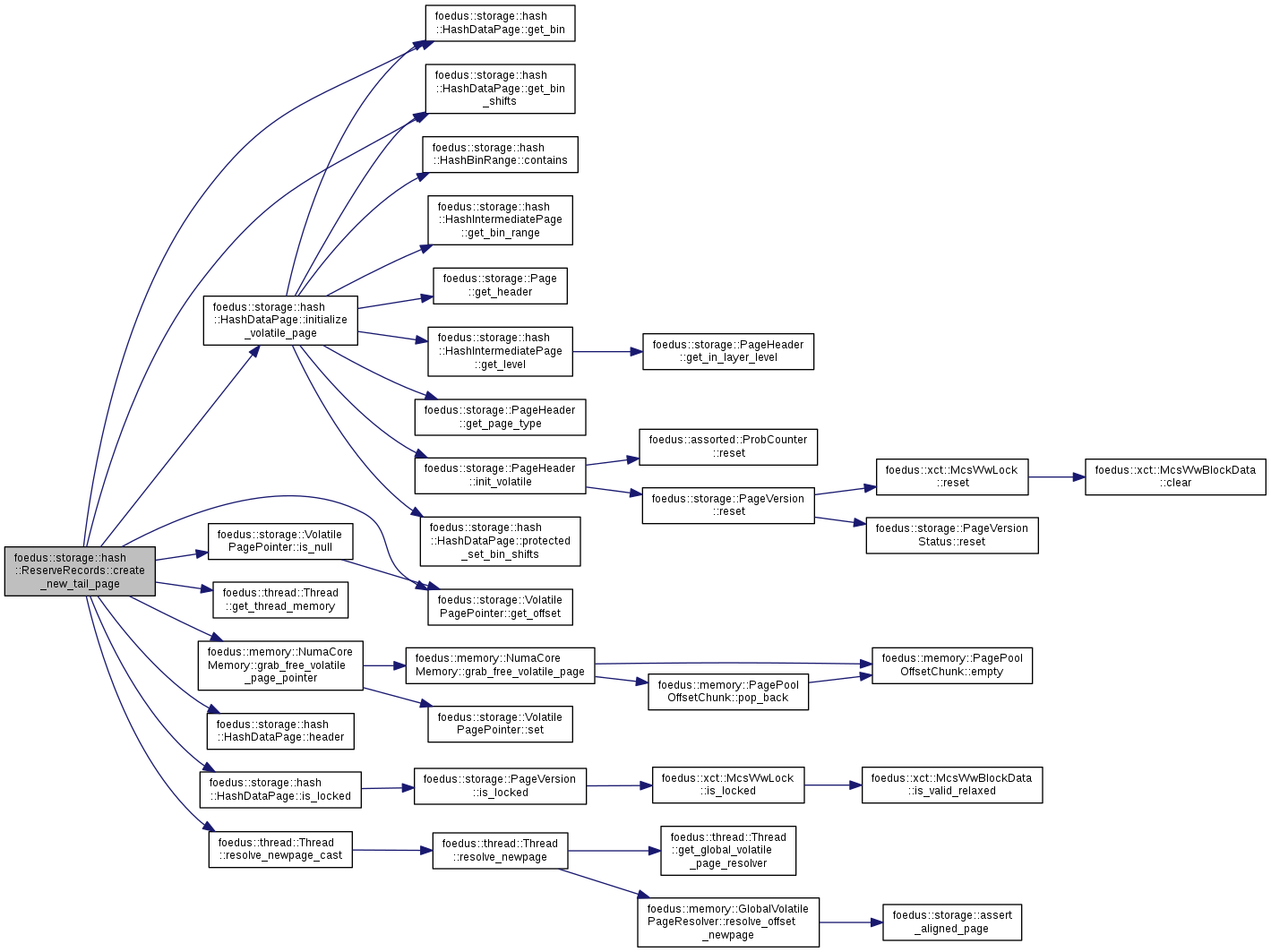

| ErrorCode | create_new_tail_page (HashDataPage *cur_tail, HashDataPage **new_tail) |

| DataPageSlotIndex | search_within_page (const HashDataPage *page, DataPageSlotIndex key_count, DataPageSlotIndex examined_records) const |

| DataPageSlotIndex | append_record_to_page (HashDataPage *page, xct::XctId initial_xid) const |

| Appends a new physical record to the page. More... | |

Public Attributes

| Type | Attribute | Description |
|---|---|---|
| `thread::Thread *const` | `context_` | Thread context. |
| `HashDataPage *const` | `target_` | The data page to install a new physical record. |
| `const void *const` | `key_` | The key of the new record. |
| `const HashCombo &` | `combo_` | Hash info of the key. |
| `const KeyLength` | `key_length_` | Byte length of the key. |
| `const PayloadLength` | `payload_count_` | Minimal required length of the payload. |
| `const PayloadLength` | `aggressive_payload_count_hint_` | When we expand the record or allocate a new record, we might allocate a larger-than-necessary space guided by this hint. |
| `const DataPageSlotIndex` | `hint_check_from_` | The in-page location from which this sysxct will look for matching records. |
| `DataPageSlotIndex` | `out_slot_` | [Out] The slot of the record that is found or created. |
| `HashDataPage *` | `out_page_` | [Out] The page that contains the found/created record. |

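`foedus::storage::hash::ReserveRecords::ReserveRecords(thread::Thread *context, HashDataPage *target, const void *key, KeyLength key_length, const HashCombo &combo, PayloadLength payload_count, PayloadLength aggressive_payload_count_hint, DataPageSlotIndex hint_check_from)` (inline)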

Definition at line 142 of file hash_reserve_impl.hpp.

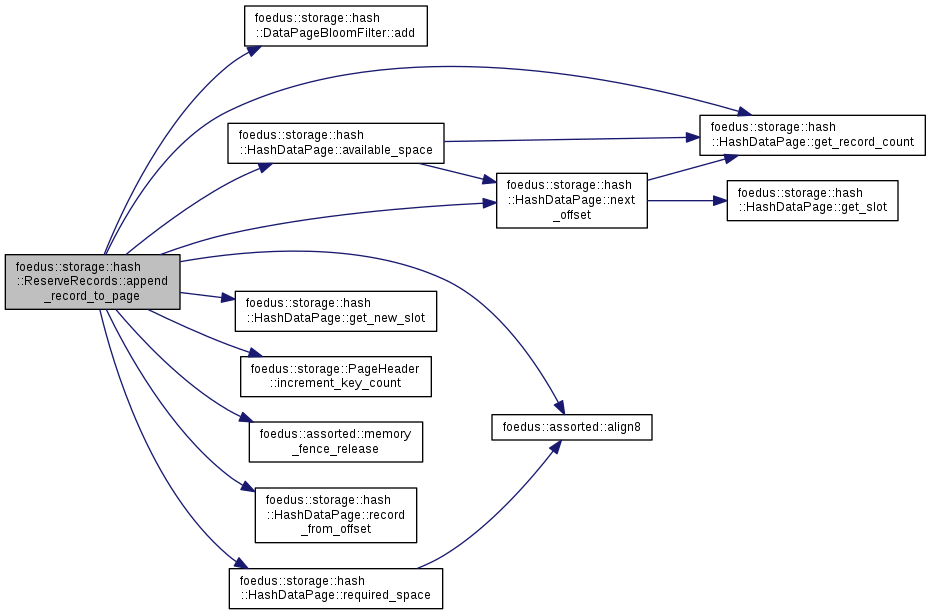

| DataPageSlotIndex foedus::storage::hash::ReserveRecords::append_record_to_page | ( | HashDataPage * | page, |

| xct::XctId | initial_xid | ||

| ) | const |

Appends a new physical record to the page.

The caller must make sure there is no race in the page. In other words, the page must be either locked or a not-yet-published page.

Definition at line 261 of file hash_reserve_impl.cpp.

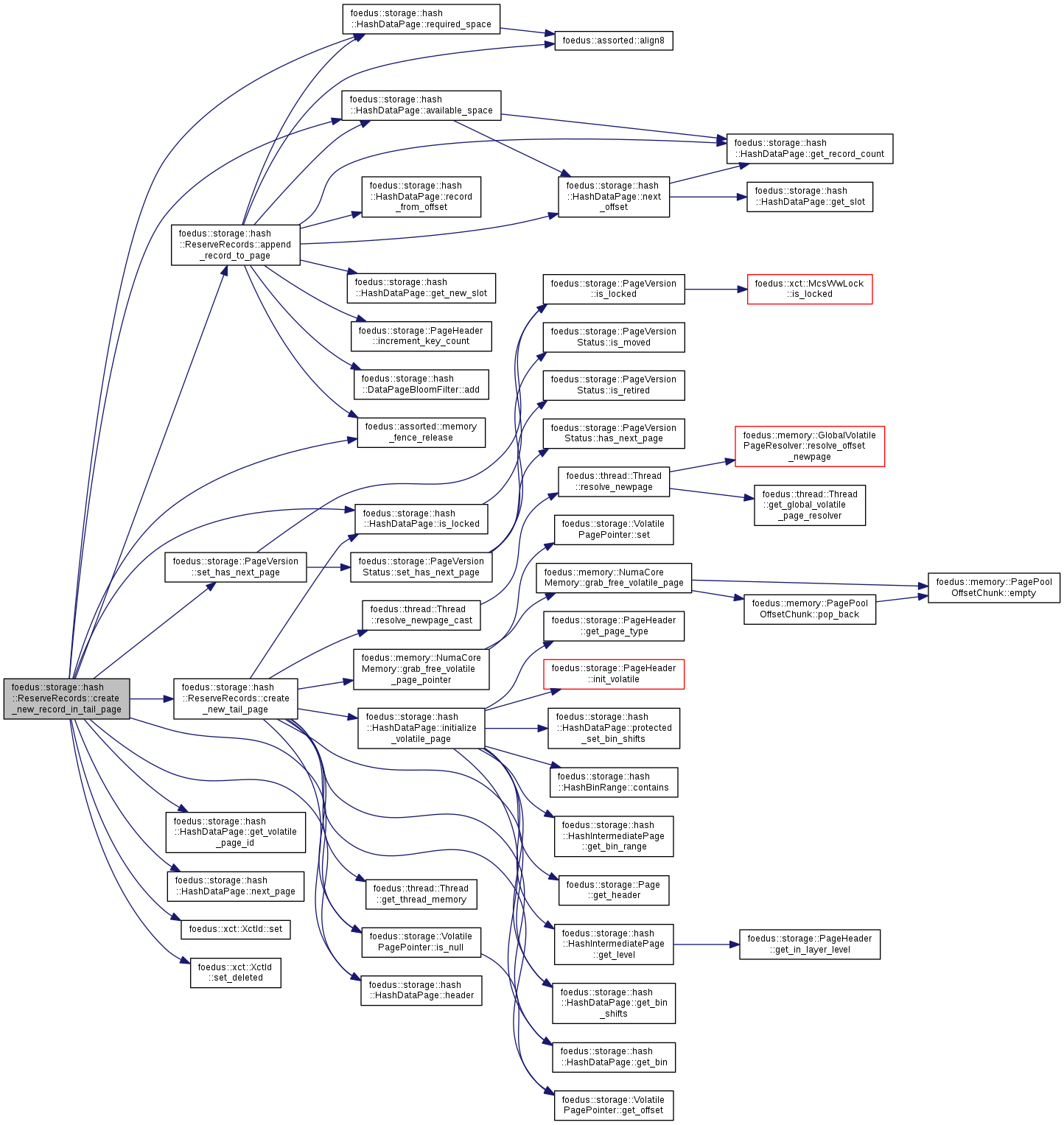

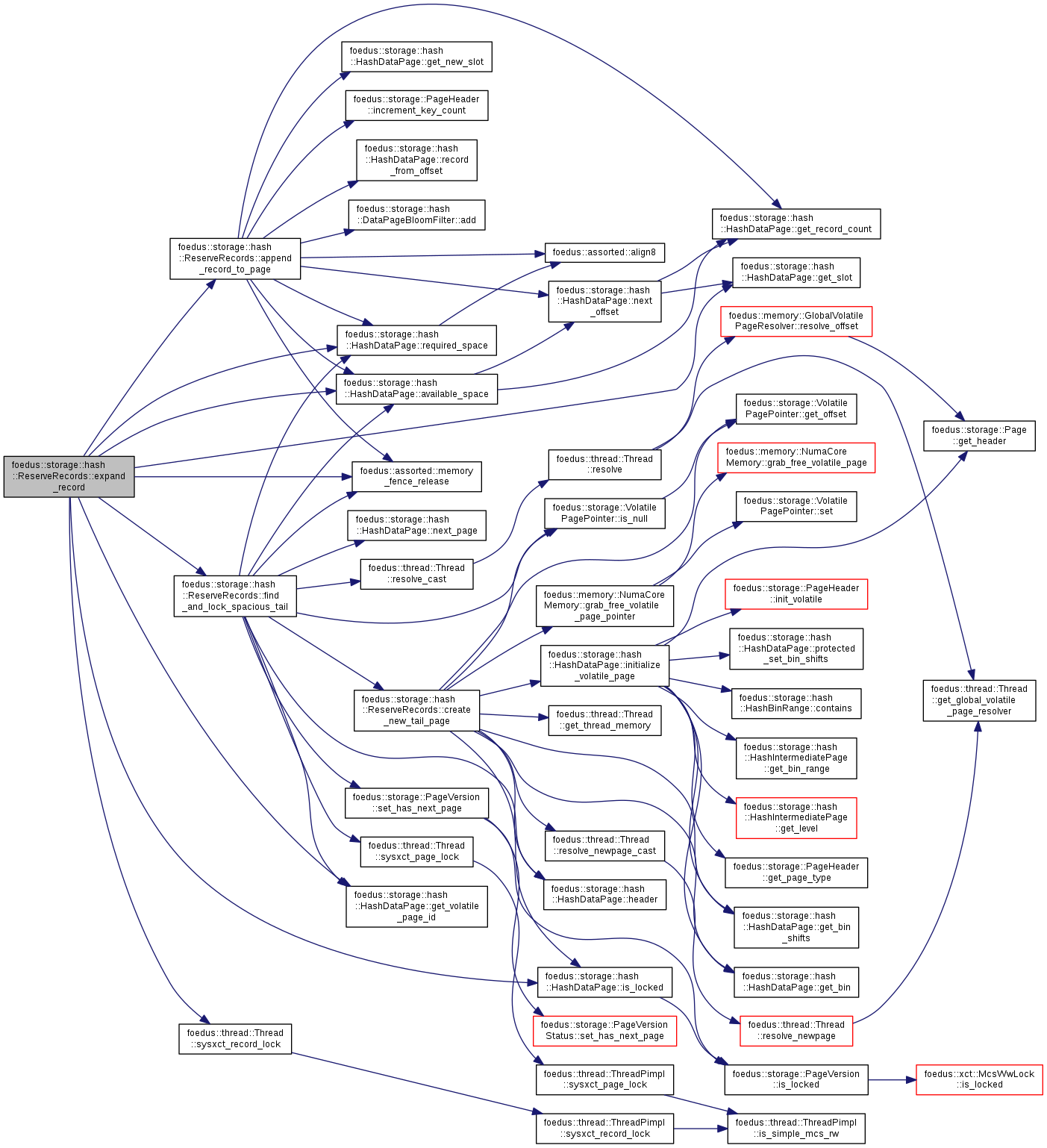

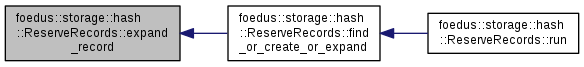

References foedus::storage::hash::DataPageBloomFilter::add(), aggressive_payload_count_hint_, foedus::assorted::align8(), ASSERT_ND, foedus::storage::hash::HashDataPage::available_space(), combo_, foedus::storage::hash::HashCombo::fingerprint_, foedus::storage::hash::HashDataPage::get_new_slot(), foedus::storage::hash::HashDataPage::get_record_count(), foedus::storage::hash::HashCombo::hash_, foedus::storage::PageHeader::increment_key_count(), key_, key_length_, foedus::assorted::memory_fence_release(), foedus::storage::hash::HashDataPage::next_offset(), foedus::storage::hash::HashDataPage::Slot::offset_, payload_count_, foedus::storage::hash::HashDataPage::record_from_offset(), and foedus::storage::hash::HashDataPage::required_space().

Referenced by create_new_record_in_tail_page(), and expand_record().
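The no-race contract of append_record_to_page() is what makes an append-only page cheap to publish: a single writer fills in the record and its slot first, then bumps the record count with release ordering, so a concurrent reader that loads the count with acquire ordering never sees a half-written record. The sketch below illustrates only that pattern. `SketchDataPage`, `append_record`, and the layout (key and payload each rounded up to 8 bytes) are hypothetical simplifications; per the References list, the real function also maintains a Bloom filter and record fingerprints, which are omitted here.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical, much-simplified data page: record bytes grow from the front
// of `data`, slot metadata lives in `slots`, and `record_count` publishes them.
struct SketchDataPage {
  static constexpr std::size_t kDataSize = 256;
  static constexpr std::size_t kMaxSlots = 16;
  struct Slot {
    std::uint16_t offset;
    std::uint16_t key_length;
    std::uint16_t physical_payload;  // capacity reserved for the payload
  };
  std::array<char, kDataSize> data{};
  std::array<Slot, kMaxSlots> slots{};
  std::atomic<std::uint16_t> record_count{0};
  std::uint16_t next_offset = 0;
};

// Rounds up to a multiple of 8 bytes (hypothetical local helper).
constexpr std::uint16_t align8(std::uint16_t v) {
  return static_cast<std::uint16_t>((v + 7U) & ~7U);
}

// Appends a record and returns its slot index. Precondition: no concurrent
// writer (the page is locked or not yet published). Readers may run
// concurrently; they only trust slots below the count they acquire-load.
std::uint16_t append_record(SketchDataPage& page, const void* key,
                            std::uint16_t key_length, std::uint16_t payload_count) {
  const std::uint16_t index = page.record_count.load(std::memory_order_relaxed);
  const std::uint16_t space =
      static_cast<std::uint16_t>(align8(key_length) + align8(payload_count));
  assert(index < SketchDataPage::kMaxSlots);
  assert(static_cast<std::size_t>(page.next_offset) + space <= SketchDataPage::kDataSize);
  const std::uint16_t offset = page.next_offset;
  std::memcpy(page.data.data() + offset, key, key_length);
  page.slots[index] = {offset, key_length, align8(payload_count)};
  page.next_offset = static_cast<std::uint16_t>(offset + space);
  // Publish last: the record and slot written above become visible first.
  page.record_count.store(static_cast<std::uint16_t>(index + 1), std::memory_order_release);
  return index;
}
```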

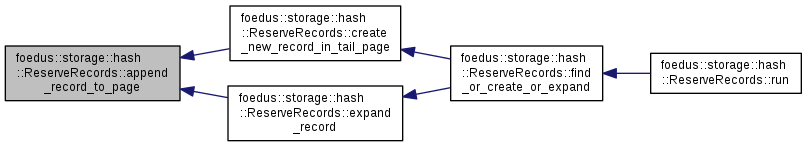

| ErrorCode foedus::storage::hash::ReserveRecords::create_new_record_in_tail_page | ( | HashDataPage * | tail | ) |

Installs the key as a fresh new physical record, assuming the given page is the tail page and is already locked.

Definition at line 208 of file hash_reserve_impl.cpp.

References aggressive_payload_count_hint_, append_record_to_page(), ASSERT_ND, foedus::storage::hash::HashDataPage::available_space(), CHECK_ERROR_CODE, create_new_tail_page(), foedus::storage::hash::HashDataPage::get_volatile_page_id(), foedus::storage::hash::HashDataPage::header(), foedus::storage::hash::HashDataPage::is_locked(), foedus::storage::VolatilePagePointer::is_null(), foedus::Epoch::kEpochInitialCurrent, foedus::kErrorCodeOk, key_length_, foedus::assorted::memory_fence_release(), foedus::storage::hash::HashDataPage::next_page(), out_page_, out_slot_, foedus::storage::PageHeader::page_version_, foedus::storage::hash::HashDataPage::required_space(), foedus::xct::XctId::set(), foedus::xct::XctId::set_deleted(), foedus::storage::PageVersion::set_has_next_page(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by find_or_create_or_expand().
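A sketch of the tail-installation idea follows, under simplifying assumptions: records are reduced to byte sizes, `ChainPage`, `Placement`, `append_to`, and `install_in_tail` are hypothetical names, and a vector of `std::unique_ptr` stands in for the volatile page pool. The References list (create_new_tail_page(), memory_fence_release(), set_has_next_page()) suggests a similar "fill the new page first, link it last" ordering, but this is not the FOEDUS implementation.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical page in a hash-bin chain; records are reduced to their sizes.
struct ChainPage {
  std::size_t capacity;
  std::vector<std::size_t> records;  // size of each record, in slot order
  std::size_t used = 0;
  std::atomic<ChainPage*> next{nullptr};
  explicit ChainPage(std::size_t cap) : capacity(cap) {}
};

struct Placement {
  ChainPage* page;
  std::size_t slot;
};

// Appends a record; the caller guarantees no concurrent writer on `page`.
std::size_t append_to(ChainPage& page, std::size_t size) {
  assert(page.used + size <= page.capacity);
  page.records.push_back(size);
  page.used += size;
  return page.records.size() - 1;
}

// `tail` is the (locked) last page of the chain. If it lacks room, build a new
// page, place the record there while the page is still private, and only then
// link it with a release store, so readers never observe a half-built page.
Placement install_in_tail(ChainPage* tail, std::size_t required,
                          std::vector<std::unique_ptr<ChainPage>>& pool) {
  assert(tail->next.load(std::memory_order_acquire) == nullptr);
  if (tail->used + required <= tail->capacity) {
    return {tail, append_to(*tail, required)};
  }
  pool.push_back(std::make_unique<ChainPage>(tail->capacity));
  ChainPage* fresh = pool.back().get();
  const std::size_t slot = append_to(*fresh, required);  // not yet published: no race
  tail->next.store(fresh, std::memory_order_release);    // publish the new tail
  return {fresh, slot};
}
```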

`ErrorCode foedus::storage::hash::ReserveRecords::create_new_tail_page(HashDataPage *cur_tail, HashDataPage **new_tail)`

Definition at line 187 of file hash_reserve_impl.cpp.

References ASSERT_ND, context_, foedus::storage::hash::HashDataPage::get_bin(), foedus::storage::hash::HashDataPage::get_bin_shifts(), foedus::storage::VolatilePagePointer::get_offset(), foedus::thread::Thread::get_thread_memory(), foedus::memory::NumaCoreMemory::grab_free_volatile_page_pointer(), foedus::storage::hash::HashDataPage::header(), foedus::storage::hash::HashDataPage::initialize_volatile_page(), foedus::storage::hash::HashDataPage::is_locked(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, foedus::thread::Thread::resolve_newpage_cast(), foedus::storage::PageHeader::storage_id_, and UNLIKELY.

Referenced by create_new_record_in_tail_page(), and find_and_lock_spacious_tail().

`ErrorCode foedus::storage::hash::ReserveRecords::expand_record(xct::SysxctWorkspace *sysxct_workspace, HashDataPage *page, DataPageSlotIndex index)`

Definition at line 101 of file hash_reserve_impl.cpp.

References aggressive_payload_count_hint_, append_record_to_page(), ASSERT_ND, foedus::storage::hash::HashDataPage::available_space(), CHECK_ERROR_CODE, context_, find_and_lock_spacious_tail(), foedus::storage::hash::HashDataPage::get_slot(), foedus::storage::hash::HashDataPage::get_volatile_page_id(), foedus::storage::hash::HashDataPage::is_locked(), foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, key_length_, foedus::assorted::memory_fence_release(), out_page_, out_slot_, foedus::storage::hash::HashDataPage::required_space(), foedus::thread::Thread::sysxct_record_lock(), and foedus::storage::hash::HashDataPage::Slot::tid_.

Referenced by find_or_create_or_expand().

| ErrorCode foedus::storage::hash::ReserveRecords::find_and_lock_spacious_tail | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| HashDataPage * | from_page, | ||

| HashDataPage ** | tail | ||

| ) |

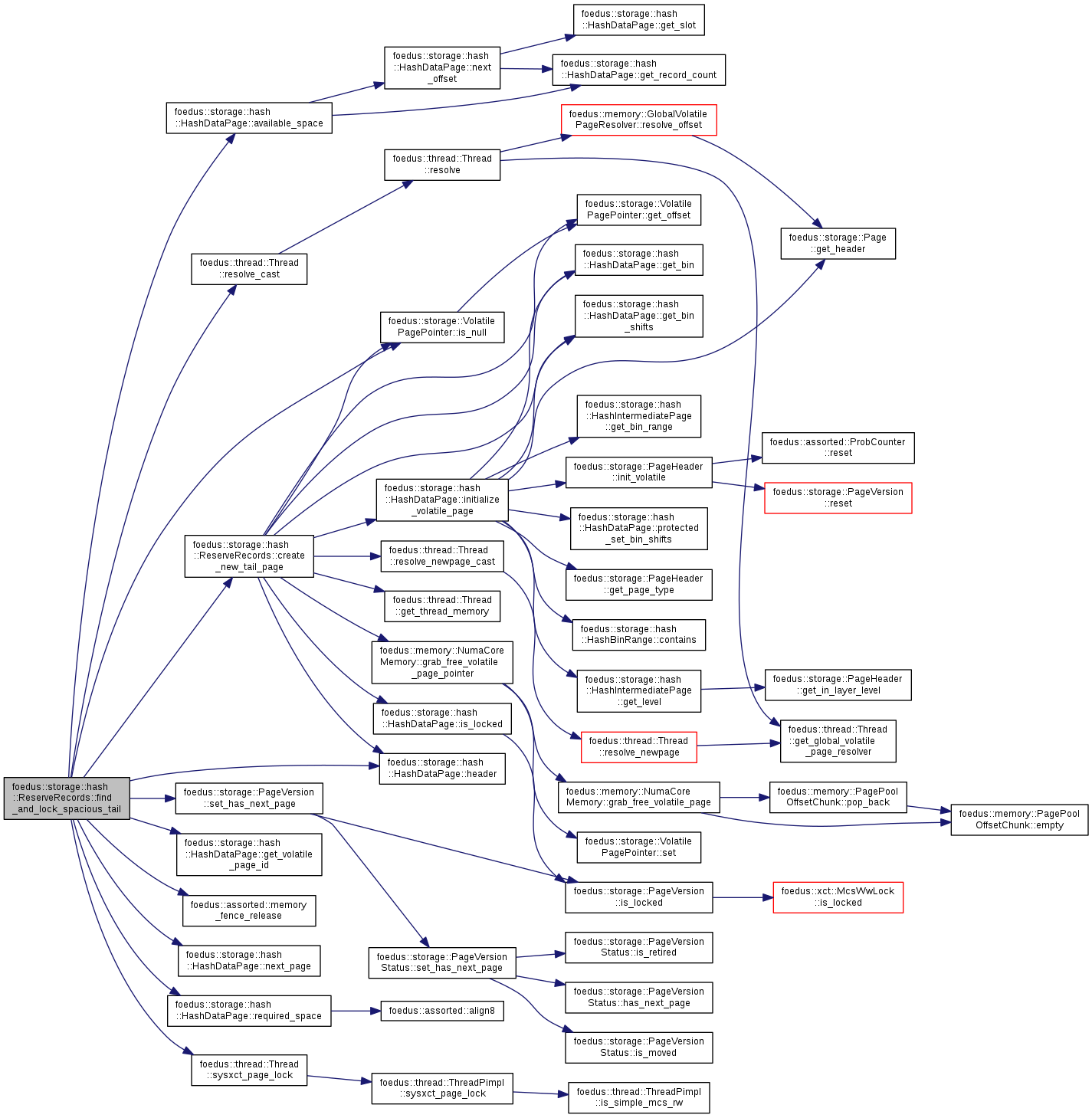

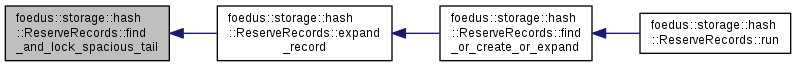

Definition at line 139 of file hash_reserve_impl.cpp.

References aggressive_payload_count_hint_, foedus::storage::hash::HashDataPage::available_space(), CHECK_ERROR_CODE, context_, create_new_tail_page(), foedus::storage::hash::HashDataPage::get_volatile_page_id(), foedus::storage::hash::HashDataPage::header(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeOk, key_length_, foedus::assorted::memory_fence_release(), foedus::storage::hash::HashDataPage::next_page(), foedus::storage::PageHeader::page_version_, foedus::storage::hash::HashDataPage::required_space(), foedus::thread::Thread::resolve_cast(), foedus::storage::PageVersion::set_has_next_page(), foedus::thread::Thread::sysxct_page_lock(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by expand_record().

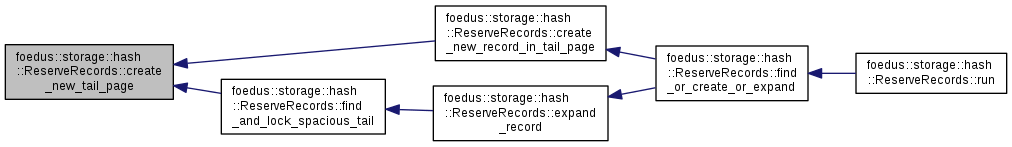

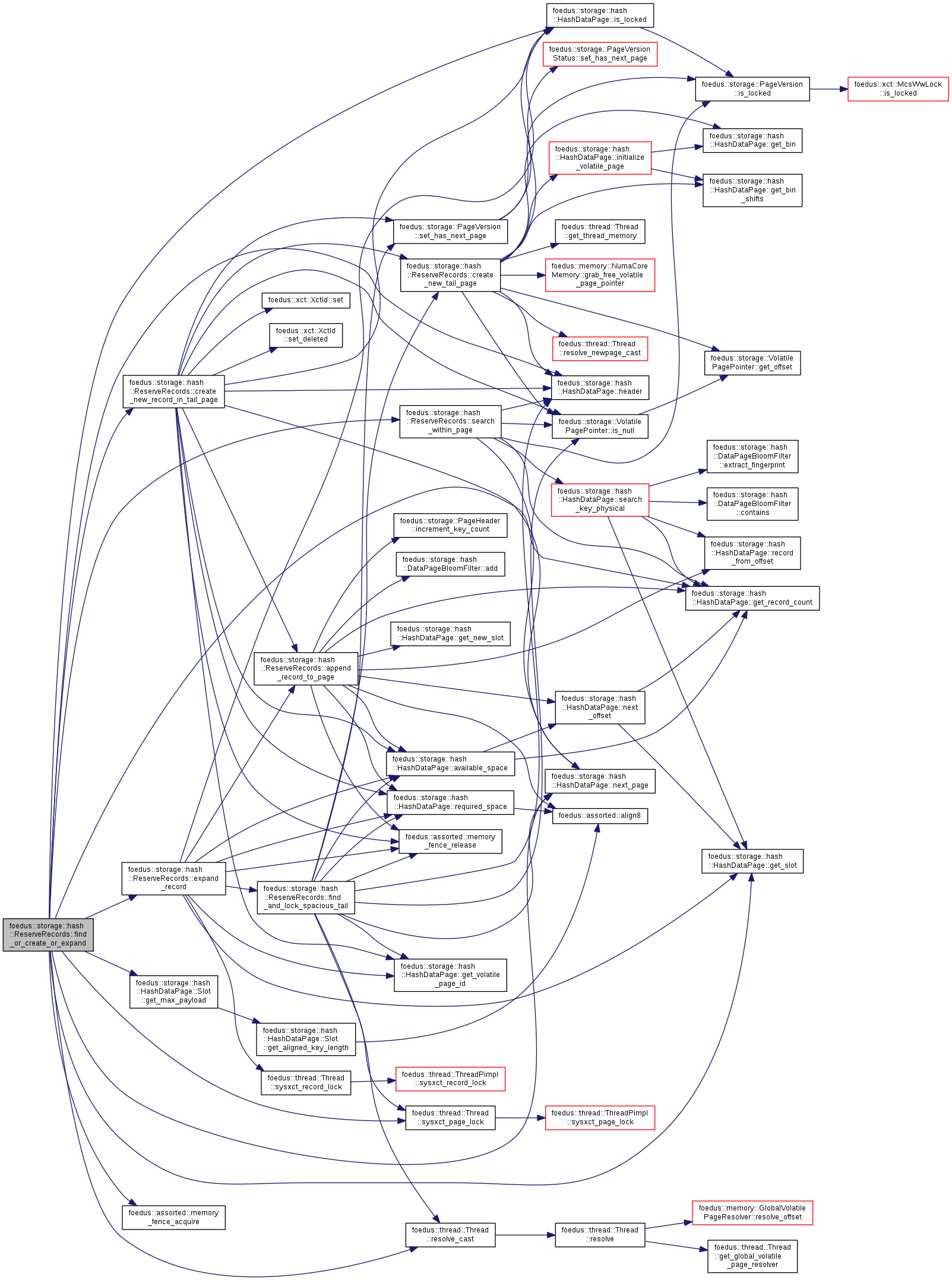

| ErrorCode foedus::storage::hash::ReserveRecords::find_or_create_or_expand | ( | xct::SysxctWorkspace * | sysxct_workspace, |

| HashDataPage * | page, | ||

| DataPageSlotIndex | examined_records | ||

| ) |

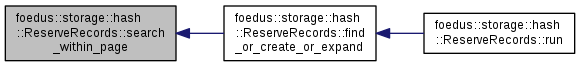

The main loop (well, recursion actually).

Definition at line 36 of file hash_reserve_impl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, context_, create_new_record_in_tail_page(), expand_record(), foedus::storage::hash::HashDataPage::Slot::get_max_payload(), foedus::storage::hash::HashDataPage::get_record_count(), foedus::storage::hash::HashDataPage::get_slot(), foedus::storage::hash::HashDataPage::header(), foedus::storage::hash::HashDataPage::is_locked(), foedus::kErrorCodeOk, foedus::storage::hash::kSlotNotFound, foedus::assorted::memory_fence_acquire(), foedus::storage::hash::HashDataPage::next_page(), out_page_, out_slot_, payload_count_, foedus::thread::Thread::resolve_cast(), search_within_page(), foedus::storage::PageHeader::snapshot_, foedus::thread::Thread::sysxct_page_lock(), target_, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by run().
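The control flow of this "main loop" can be modeled in a few lines. This is a hypothetical, single-threaded sketch rather than the FOEDUS code: `Rec`, `BinPage`, `Found`, and `append_at_tail` are invented, locks and memory fences are omitted, and the expansion policy (mark the old record as moved, re-create a larger copy at the tail) is an assumption based on this page's mentions of moved records and on expand_record() referencing find_and_lock_spacious_tail() and append_record_to_page().

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical single-threaded model of a hash-bin chain.
struct Rec {
  std::string key;
  std::size_t max_payload;
  bool moved = false;
};
struct BinPage {
  std::vector<Rec> recs;
  std::unique_ptr<BinPage> next;
  std::size_t capacity = 4;  // records per page, kept tiny for illustration
};
struct Found {
  BinPage* page;
  std::size_t slot;
};

// Appends to the tail of the chain starting at `page`, growing it if full.
Found append_at_tail(BinPage* page, Rec rec) {
  while (page->next) page = page->next.get();
  if (page->recs.size() == page->capacity) {
    page->next = std::make_unique<BinPage>();
    page = page->next.get();
  }
  page->recs.push_back(std::move(rec));
  return {page, page->recs.size() - 1};
}

// Searches `page` from `examined`; on a miss, recurses into the next page.
Found find_or_create_or_expand(BinPage* page, std::size_t examined,
                               const std::string& key, std::size_t payload_count) {
  for (std::size_t i = examined; i < page->recs.size(); ++i) {
    Rec& r = page->recs[i];
    if (r.moved || r.key != key) continue;
    if (r.max_payload >= payload_count) return {page, i};  // found and big enough
    r.moved = true;  // expand: leave a moved marker, re-create a larger copy
    return append_at_tail(page, Rec{key, payload_count});
  }
  if (page->next) {
    return find_or_create_or_expand(page->next.get(), 0, key, payload_count);
  }
  return append_at_tail(page, Rec{key, payload_count});  // not found: create
}
```

Note that only the first call starts at a nonzero `examined`; every later page in the chain is searched from slot 0, which mirrors the target_ and hint_check_from_ contract described below.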

|

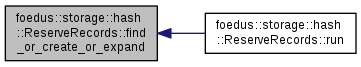

overridevirtual |

Execute the system transaction.

You should override this method.

Implements foedus::xct::SysxctFunctor.

Definition at line 31 of file hash_reserve_impl.cpp.

References aggressive_payload_count_hint_, ASSERT_ND, find_or_create_or_expand(), hint_check_from_, payload_count_, and target_.

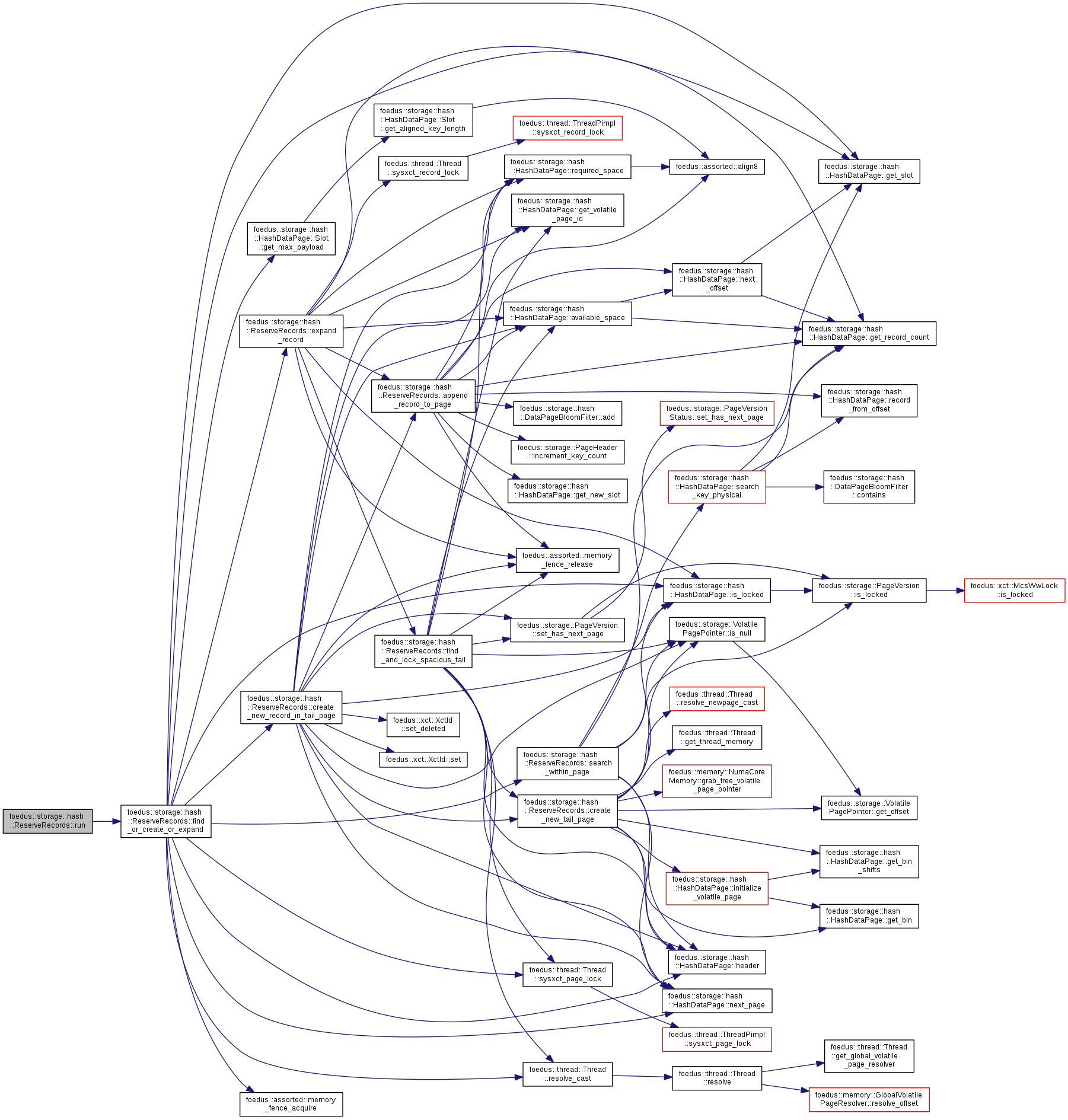

| DataPageSlotIndex foedus::storage::hash::ReserveRecords::search_within_page | ( | const HashDataPage * | page, |

| DataPageSlotIndex | key_count, | ||

| DataPageSlotIndex | examined_records | ||

| ) | const |

Definition at line 244 of file hash_reserve_impl.cpp.

References ASSERT_ND, combo_, foedus::storage::hash::HashCombo::fingerprint_, foedus::storage::hash::HashDataPage::get_record_count(), foedus::storage::hash::HashCombo::hash_, foedus::storage::hash::HashDataPage::header(), foedus::storage::PageVersion::is_locked(), foedus::storage::VolatilePagePointer::is_null(), key_, key_length_, foedus::storage::hash::HashDataPage::next_page(), foedus::storage::PageHeader::page_version_, foedus::storage::hash::HashDataPage::search_key_physical(), foedus::storage::PageHeader::snapshot_, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by find_or_create_or_expand().

`const PayloadLength foedus::storage::hash::ReserveRecords::aggressive_payload_count_hint_`

When we expand the record or allocate a new record, we might allocate a larger-than-necessary space guided by this hint.

It's useful to avoid future record expansion.

Definition at line 113 of file hash_reserve_impl.hpp.

Referenced by append_record_to_page(), create_new_record_in_tail_page(), expand_record(), find_and_lock_spacious_tail(), and run().
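As a worked illustration of how such a hint might steer allocation, here is a hypothetical sizing policy: reserve the hinted payload size when the page can afford it, otherwise fall back to the minimum. `choose_payload_capacity` and the local `align8` are invented for this sketch; it assumes record space is simply key plus payload, each rounded up to 8 bytes, and ignores any per-record header, so it is not the FOEDUS logic.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Rounds up to a multiple of 8 bytes (hypothetical local helper).
constexpr std::uint32_t align8(std::uint32_t v) { return (v + 7U) & ~7U; }

// Hypothetical policy: prefer the larger of the minimum and the hint if the
// key plus that payload fits in the available space; otherwise reserve only
// the minimum required payload.
std::uint32_t choose_payload_capacity(std::uint32_t payload_count,
                                      std::uint32_t aggressive_hint,
                                      std::uint32_t key_length,
                                      std::uint32_t available_space) {
  const std::uint32_t key_part = align8(key_length);
  const std::uint32_t generous = align8(std::max(payload_count, aggressive_hint));
  if (key_part + generous <= available_space) return generous;
  return align8(payload_count);
}
```

For example, with a 5-byte key, a 10-byte minimum payload, and a 100-byte hint, this sketch reserves 104 payload bytes when 200 bytes are free, so a later payload of up to 104 bytes would need no expansion; with only 50 bytes free it falls back to 16.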

`const HashCombo& foedus::storage::hash::ReserveRecords::combo_`

Hash info of the key.

Because this is a reference (&), the caller's HashCombo object must outlive this sysxct.

Definition at line 102 of file hash_reserve_impl.hpp.

Referenced by append_record_to_page(), and search_within_page().

`thread::Thread* const foedus::storage::hash::ReserveRecords::context_`

Thread context.

Definition at line 75 of file hash_reserve_impl.hpp.

Referenced by create_new_tail_page(), expand_record(), find_and_lock_spacious_tail(), and find_or_create_or_expand().

`const DataPageSlotIndex foedus::storage::hash::ReserveRecords::hint_check_from_`

The in-page location from which this sysxct will look for matching records.

The caller is sure that no record before this position can match the key. Thanks to append-only writes in data pages, the caller can guarantee that the records it observed are final.

In most cases, this is the same as key_count after locking, which completely avoids the re-check. In some cases, the caller has already found a matching key at this index, but even then this sysxct must re-search after locking because the record might have been moved in the meantime.

Definition at line 124 of file hash_reserve_impl.hpp.

Referenced by run().
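The effect of hint_check_from_ on the in-page search can be sketched as a scan over the half-open slot range from the hint up to key_count. This is a simplified, hypothetical model: `SlotView`, `search_from_hint`, and `kNotFound` are invented names, and it compares a full hash plus raw key bytes, whereas the References list shows the real search_within_page() relying on fingerprints and HashDataPage::search_key_physical().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

using SlotIndex = std::uint16_t;
constexpr SlotIndex kNotFound = 0xFFFF;  // hypothetical "not found" sentinel

// Hypothetical view of one slot's metadata.
struct SlotView {
  std::uint64_t hash;
  const char* key;
  std::uint16_t key_length;
  bool moved;
};

// Searches slots [examined_records, key_count). Slots before the hint are
// skipped because the caller already checked them, and append-only writes
// mean they cannot gain a new, unmoved matching key afterwards.
SlotIndex search_from_hint(const std::vector<SlotView>& slots, SlotIndex key_count,
                           SlotIndex examined_records, std::uint64_t hash,
                           const char* key, std::uint16_t key_length) {
  assert(static_cast<std::size_t>(key_count) <= slots.size());
  for (SlotIndex i = examined_records; i < key_count; ++i) {
    const SlotView& s = slots[i];
    // Cheap filters first: moved records, hash, and length mismatches.
    if (s.moved || s.hash != hash || s.key_length != key_length) continue;
    if (std::memcmp(s.key, key, key_length) == 0) return i;
  }
  return kNotFound;
}
```

Starting at the hint rather than at slot 0 is what lets the common case (hint equal to key_count) finish without examining any slot at all.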

`const void* const foedus::storage::hash::ReserveRecords::key_`

The key of the new record.

Definition at line 97 of file hash_reserve_impl.hpp.

Referenced by append_record_to_page(), and search_within_page().

`const KeyLength foedus::storage::hash::ReserveRecords::key_length_`

Byte length of the key.

Definition at line 104 of file hash_reserve_impl.hpp.

Referenced by append_record_to_page(), create_new_record_in_tail_page(), expand_record(), find_and_lock_spacious_tail(), and search_within_page().

`HashDataPage* foedus::storage::hash::ReserveRecords::out_page_`

[Out] The page that contains the found/created record.

As long as this sysxct returns kErrorCodeOk, out_page_ is either target_ itself or some page after target_ in the chain.

Definition at line 140 of file hash_reserve_impl.hpp.

Referenced by create_new_record_in_tail_page(), expand_record(), find_or_create_or_expand(), and foedus::storage::hash::HashStoragePimpl::locate_record_reserve_physical().

`DataPageSlotIndex foedus::storage::hash::ReserveRecords::out_slot_`

[Out] The slot of the record that is found or created.

As long as this sysxct returns kErrorCodeOk, the following is guaranteed:

Definition at line 133 of file hash_reserve_impl.hpp.

Referenced by create_new_record_in_tail_page(), expand_record(), find_or_create_or_expand(), and foedus::storage::hash::HashStoragePimpl::locate_record_reserve_physical().

`const PayloadLength foedus::storage::hash::ReserveRecords::payload_count_`

Minimal required length of the payload.

Definition at line 106 of file hash_reserve_impl.hpp.

Referenced by append_record_to_page(), find_or_create_or_expand(), and run().

`HashDataPage* const foedus::storage::hash::ReserveRecords::target_`

The data page to install a new physical record.

This might NOT be the head page of the hash bin. The contract is that the caller must be sure that no page before this one in the chain contains a record of the given key that is not marked as moved. In other words, the caller must have checked that no such record exists before slot hint_check_from_ of target_.

Of course, by the time this sysxct takes a lock, other threads might have inserted more records, some of which might have the key. This sysxct thus needs to resume the search from slot hint_check_from_ of target_ (but not from anywhere before it!).

Definition at line 95 of file hash_reserve_impl.hpp.

Referenced by find_or_create_or_expand(), and run().