
libfoedus-core

FOEDUS Core Library


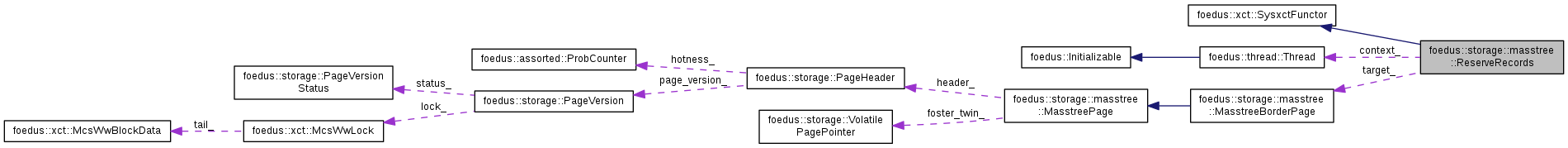

A system transaction to reserve one or more physical records in a border page.

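A record insertion happens in two steps:

1. During execution, a logically deleted record for the key is physically inserted into the border page.
2. During commit, the delete flag is logically cleared and the payload is installed.

This system transaction performs the former (physical) step.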
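The two-step split can be illustrated with a toy page. This is a minimal sketch, not FOEDUS code: it assumes the first step leaves a logically deleted record in the page and the second step clears the delete flag at commit, and `ToyRecord`, `ToyBorderPage`, `reserve_physical()` and `commit_logical()` are hypothetical names. Records live in an append-only vector, mirroring the append-only border pages described under `hint_check_from_`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical toy record; the real border-page layout is far more compact.
struct ToyRecord {
  std::string key;
  bool deleted;         // logically absent until the commit step clears it
  std::string payload;
};

// Hypothetical toy border page; slots are only ever appended.
struct ToyBorderPage {
  std::vector<ToyRecord> records;

  // Step 1 (the sysxct's job): make sure a physical slot exists for `key`.
  // A new slot starts logically deleted, so readers still see "key absent".
  std::size_t reserve_physical(const std::string& key) {
    for (std::size_t i = 0; i < records.size(); ++i) {
      if (records[i].key == key) {
        return i;  // already reserved: nothing to do
      }
    }
    records.push_back(ToyRecord{key, true, ""});
    return records.size() - 1;
  }

  // Step 2 (the user transaction's commit): install the payload and make
  // the record logically visible by clearing the delete flag.
  void commit_logical(std::size_t slot, const std::string& payload) {
    records[slot].payload = payload;
    records[slot].deleted = false;
  }
};
```

Reserving the same key twice returns the existing slot, which is why the physical step can safely be retried.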

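This does nothing and returns kErrorCodeOk in the following cases:

1. The page already contains a physical record for the key.
2. The page has already been moved.
3. The page would have to be split to accommodate the record.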

When the 2nd or 3rd case happens (or the 1st case when the existing record has too little payload space), the caller does something else, such as splitting the page or following its foster twins, and then retries.
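A caller-side retry loop along these lines is one way to handle those no-op outcomes. This is a hypothetical sketch: `Outcome`, `reserve_with_retry()` and the callbacks are invented for illustration and are not the interface of `MasstreeStoragePimpl::reserve_record()` or of FOEDUS's sysxct machinery.

```cpp
#include <cassert>
#include <functional>

// Hypothetical outcome of one reserve attempt as seen by the caller.
enum class Outcome { kReserved, kPageMoved, kSplitNeeded };

// Repeats `attempt` until it reserves the record, running the matching
// fix-up between attempts. Returns the number of attempts used, or -1 if
// `max_attempts` ran out. All callbacks are hypothetical placeholders.
int reserve_with_retry(const std::function<Outcome()>& attempt,
                       const std::function<void()>& follow_foster,
                       const std::function<void()>& split,
                       int max_attempts) {
  for (int i = 1; i <= max_attempts; ++i) {
    switch (attempt()) {
      case Outcome::kReserved:
        return i;
      case Outcome::kPageMoved:
        follow_foster();  // the page was moved: continue at its foster twin
        break;
      case Outcome::kSplitNeeded:
        split();  // make room in the page, then try again
        break;
    }
  }
  return -1;
}
```

Keeping the fix-ups outside the sysxct keeps each attempt short: the page lock is held only while deciding, never while splitting.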

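Locks taken in this sysxct (in order of taking):

1. The page lock of the target page.
2. The record lock of an existing matching record, taken only when that record has to be expanded.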
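Taking locks in one fixed order, page before record, is the standard way to keep threads from deadlocking on each other. The sketch below assumes the order is the target page's lock followed, only when an existing matching record must be expanded, by that record's lock (the references of `run()` include `sysxct_page_lock()` and `sysxct_record_lock()`). It uses `std::mutex` stand-ins; `ToyPage` and `lock_for_reserve()` are hypothetical, and FOEDUS's sysxct locks are not `std::mutex`.

```cpp
#include <cassert>
#include <mutex>
#include <string>
#include <vector>

// Hypothetical page with one page-level lock and per-slot record locks.
struct ToyPage {
  std::mutex page_lock;
  std::mutex record_locks[4];  // matching_slot must be < 4
};

// Takes the page lock first; takes a record lock only when an existing,
// matching record must be expanded. Returns the order in which locks were
// taken. Both guards release their locks when the function returns.
std::vector<std::string> lock_for_reserve(ToyPage& page, int matching_slot,
                                          bool must_expand) {
  std::vector<std::string> order;
  std::lock_guard<std::mutex> page_guard(page.page_lock);
  order.push_back("page");
  if (matching_slot >= 0 && must_expand) {
    std::lock_guard<std::mutex> record_guard(page.record_locks[matching_slot]);
    order.push_back("record");
    // ... expand the record while both locks are held ...
  }
  return order;
}
```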

So far, this sysxct installs only one physical record at a time. TASK(Hideaki): Batching several records would probably help.

Definition at line 57 of file masstree_reserve_impl.hpp.

#include <masstree_reserve_impl.hpp>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `ReserveRecords(thread::Thread *context, MasstreeBorderPage *target, KeySlice slice, KeyLength remainder_length, const void *suffix, PayloadLength payload_count, bool should_aggresively_create_next_layer, SlotIndex hint_check_from)` | |
| `virtual ErrorCode` | `run(xct::SysxctWorkspace *sysxct_workspace) override` | Execute the system transaction. |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `thread::Thread *const` | `context_` | Thread context. |
| `MasstreeBorderPage *const` | `target_` | The page in which to install a new physical record. |
| `const KeySlice` | `slice_` | The slice of the key. |
| `const void *const` | `suffix_` | Suffix of the key. |
| `const KeyLength` | `remainder_length_` | Length of the remainder. |
| `const PayloadLength` | `payload_count_` | Minimal required length of the payload. |
| `const SlotIndex` | `hint_check_from_` | The in-page location from which this sysxct will look for matching records. |
| `const bool` | `should_aggresively_create_next_layer_` | When the new record CAN be placed in a next layer, whether to create it as a next layer from the beginning or as a usual record first. |
| `bool` | `out_split_needed_` | [Out] Whether the target page must be split to accommodate the record. |

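| foedus::storage::masstree::ReserveRecords::ReserveRecords (thread::Thread *context, MasstreeBorderPage *target, KeySlice slice, KeyLength remainder_length, const void *suffix, PayloadLength payload_count, bool should_aggresively_create_next_layer, SlotIndex hint_check_from) | inline |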

Definition at line 91 of file masstree_reserve_impl.hpp.

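| virtual ErrorCode foedus::storage::masstree::ReserveRecords::run (xct::SysxctWorkspace *sysxct_workspace) | override, virtual |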

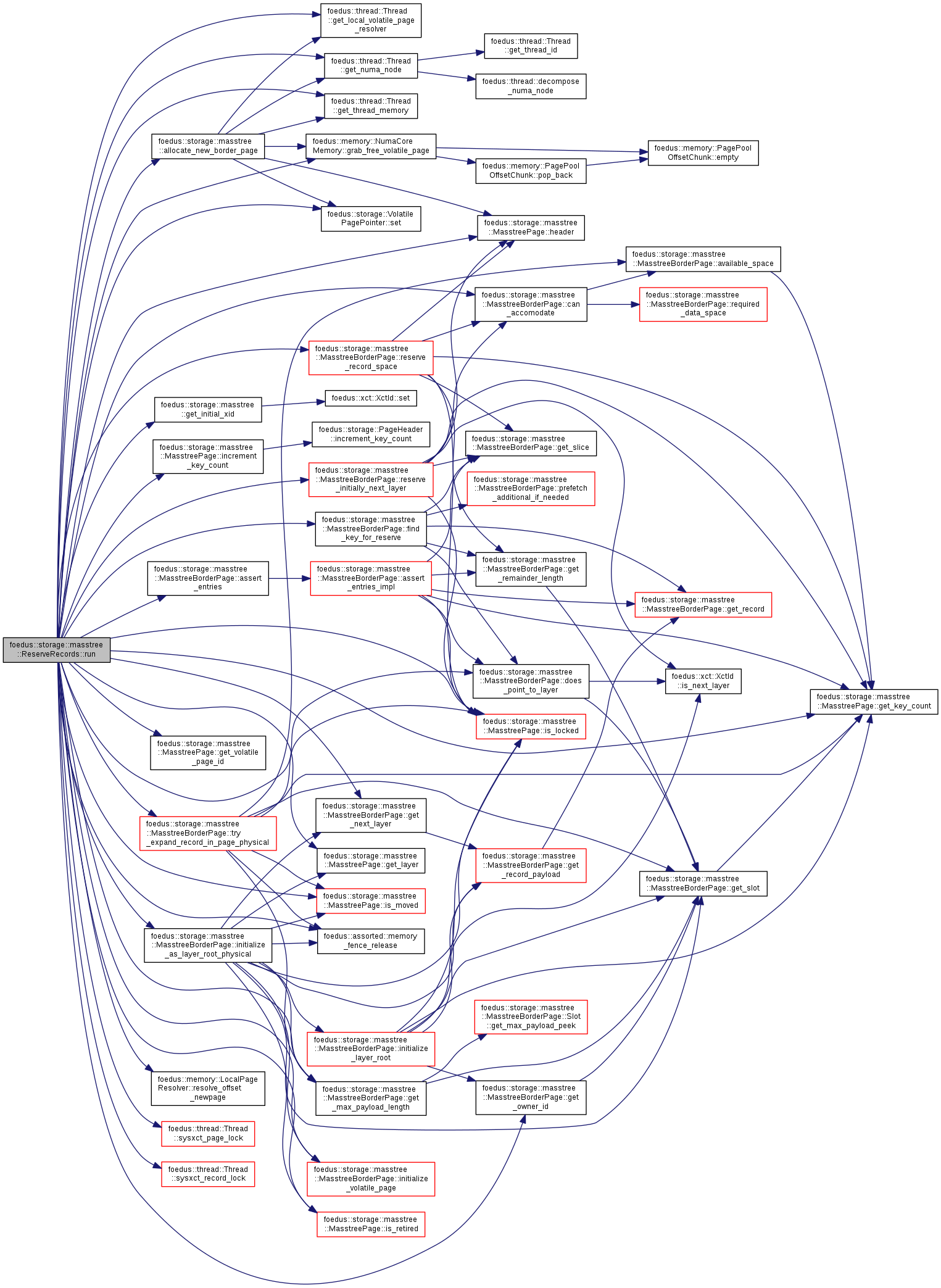

Execute the system transaction.

Every subclass of foedus::xct::SysxctFunctor must override this method.

Implements foedus::xct::SysxctFunctor.

Definition at line 55 of file masstree_reserve_impl.cpp.

References foedus::storage::masstree::allocate_new_border_page(), foedus::storage::masstree::MasstreeBorderPage::assert_entries(), ASSERT_ND, foedus::storage::masstree::MasstreeBorderPage::can_accomodate(), CHECK_ERROR_CODE, context_, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), foedus::storage::masstree::MasstreeBorderPage::find_key_for_reserve(), foedus::storage::masstree::get_initial_xid(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::thread::Thread::get_local_volatile_page_resolver(), foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::thread::Thread::get_numa_node(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::thread::Thread::get_thread_memory(), foedus::storage::masstree::MasstreePage::get_volatile_page_id(), foedus::memory::NumaCoreMemory::grab_free_volatile_page(), foedus::storage::masstree::MasstreePage::header(), hint_check_from_, foedus::storage::masstree::MasstreePage::increment_key_count(), foedus::storage::masstree::MasstreeBorderPage::initialize_as_layer_root_physical(), foedus::storage::masstree::MasstreeBorderPage::initialize_volatile_page(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::storage::masstree::MasstreePage::is_retired(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::MasstreeBorderPage::kConflictingLocalRecord, foedus::kErrorCodeMemoryNoFreePages, foedus::kErrorCodeOk, foedus::storage::masstree::MasstreeBorderPage::kExactMatchLayerPointer, foedus::storage::masstree::MasstreeBorderPage::kExactMatchLocalRecord, foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::MasstreeBorderPage::kNotFound, foedus::storage::masstree::kSupremumSlice, foedus::assorted::memory_fence_release(), out_split_needed_, foedus::storage::PageHeader::page_id_, payload_count_, remainder_length_, foedus::storage::masstree::MasstreeBorderPage::reserve_initially_next_layer(), foedus::storage::masstree::MasstreeBorderPage::reserve_record_space(), foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), should_aggresively_create_next_layer_, slice_, foedus::storage::PageHeader::snapshot_, foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::PageHeader::storage_id_, suffix_, foedus::thread::Thread::sysxct_page_lock(), foedus::thread::Thread::sysxct_record_lock(), target_, foedus::storage::masstree::MasstreeBorderPage::try_expand_record_in_page_physical(), and foedus::storage::DualPagePointer::volatile_pointer_.
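The names referenced by `run()` (`is_moved()`, `find_key_for_reserve()` with its `kExactMatchLayerPointer`, `kExactMatchLocalRecord`, `kConflictingLocalRecord` and `kNotFound` results, `get_max_payload_length()`, `try_expand_record_in_page_physical()`, `can_accomodate()`, `allocate_new_border_page()`, `out_split_needed_`) suggest roughly the decision structure below. This is a hypothetical reconstruction from those names, not the actual body of `run()`: it omits locking, page allocation, and the expansion itself, reading `kConflictingLocalRecord` as "same slice, different key, so push into a new layer" is an inference, and `PageView`, `MatchType`, `Action` and `decide()` are invented for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mirror of the match kinds named in run()'s references.
enum class MatchType {
  kNotFound,
  kExactMatchLocalRecord,
  kExactMatchLayerPointer,
  kConflictingLocalRecord,
};

// Hypothetical summary of what the sysxct decides to do.
enum class Action {
  kNothingPageMoved,    // caller follows the moved page and retries
  kNothingLayerExists,  // the key continues in an existing next layer
  kNothingRecordFits,   // existing record already has enough payload space
  kExpandRecord,        // existing record needs more payload space
  kCreateNextLayer,     // same slice, different key: push into a new layer
  kReserveNewRecord,    // append a new physical record
  kSplitNeeded,         // no room: out_split_needed_ would be set
};

// Hypothetical view of the target page, taken after the page lock is held.
struct PageView {
  bool moved;
  MatchType match;
  std::uint16_t matched_max_payload;  // only used for kExactMatchLocalRecord
  bool can_accommodate;               // room for one more record?
};

Action decide(const PageView& page, std::uint16_t payload_count) {
  if (page.moved) {
    return Action::kNothingPageMoved;
  }
  switch (page.match) {
    case MatchType::kExactMatchLayerPointer:
      return Action::kNothingLayerExists;
    case MatchType::kExactMatchLocalRecord:
      return page.matched_max_payload >= payload_count ? Action::kNothingRecordFits
                                                       : Action::kExpandRecord;
    case MatchType::kConflictingLocalRecord:
      return Action::kCreateNextLayer;
    case MatchType::kNotFound:
      break;
  }
  return page.can_accommodate ? Action::kReserveNewRecord : Action::kSplitNeeded;
}
```

An expansion that does not fit in the page would presumably also end in a split and a retry; the sketch does not model that path.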

| thread::Thread* const foedus::storage::masstree::ReserveRecords::context_ |

Thread context.

| const SlotIndex foedus::storage::masstree::ReserveRecords::hint_check_from_ |

The in-page location from which this sysxct will look for matching records.

The caller is sure that no record before this position can match the slice. Thanks to append-only writes in border pages, the caller can guarantee that the records it observed are final.

In most cases, this is the same as key_count after locking, which avoids the re-check entirely.

Definition at line 78 of file masstree_reserve_impl.hpp.

Referenced by run().
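The effect of `hint_check_from_` can be sketched as a scan that starts at the hint instead of slot 0. This is a simplified, hypothetical illustration: `find_from_hint()` and `ToySlice` are invented, and it compares slices only, whereas a real lookup would also have to compare the rest of the key.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using ToySlice = std::uint64_t;  // hypothetical stand-in for KeySlice

// Looks for `slice` only in slots [hint_check_from, key_count). Earlier
// slots were already checked by the caller, and because border pages are
// append-only, the records it observed there are final.
int find_from_hint(const std::vector<ToySlice>& slices, std::size_t key_count,
                   std::size_t hint_check_from, ToySlice slice) {
  for (std::size_t i = hint_check_from; i < key_count; ++i) {
    if (slices[i] == slice) {
      return static_cast<int>(i);
    }
  }
  return -1;  // no match after the hint: a new record is needed
}
```

When the hint equals `key_count`, the loop body never runs, which is the "completely avoiding the re-check" case.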

| bool foedus::storage::masstree::ReserveRecords::out_split_needed_ |

[Out] Whether the target page must be split to accommodate the record.

Definition at line 89 of file masstree_reserve_impl.hpp.

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized(), and run().

| const PayloadLength foedus::storage::masstree::ReserveRecords::payload_count_ |

Minimal required length of the payload.

Definition at line 69 of file masstree_reserve_impl.hpp.

Referenced by run().

| const KeyLength foedus::storage::masstree::ReserveRecords::remainder_length_ |

Length of the remainder.

Definition at line 67 of file masstree_reserve_impl.hpp.

Referenced by run().

| const bool foedus::storage::masstree::ReserveRecords::should_aggresively_create_next_layer_ |

When the new record CAN be placed in a next layer, whether to create it as a next layer from the beginning or as a usual record first.

Definition at line 85 of file masstree_reserve_impl.hpp.

Referenced by run().
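A minimal sketch of how this flag might combine with the "can create" condition. It assumes, without confirmation from this page, that a next layer is possible exactly when the key still has bytes beyond this layer's 8-byte slice, i.e. `remainder_length_ > sizeof(KeySlice)`; `reserve_as_next_layer()` and the `Toy*` aliases are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

using ToyKeySlice = std::uint64_t;   // hypothetical stand-in for KeySlice (8 bytes)
using ToyKeyLength = std::uint16_t;  // hypothetical stand-in for KeyLength

// Assumption: a next layer is possible only if the key has bytes beyond this
// layer's 8-byte slice. The flag then chooses between a next layer right
// away and a usual record first.
bool reserve_as_next_layer(bool should_aggressively_create_next_layer,
                           ToyKeyLength remainder_length) {
  const bool can_create_next_layer =
      static_cast<std::size_t>(remainder_length) > sizeof(ToyKeySlice);
  return can_create_next_layer && should_aggressively_create_next_layer;
}
```

Creating the layer up front avoids a later conversion when a second key with the same slice arrives, at the cost of a page allocation that may turn out to be unnecessary.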

| const KeySlice foedus::storage::masstree::ReserveRecords::slice_ |

The slice of the key.

| const void* const foedus::storage::masstree::ReserveRecords::suffix_ |

Suffix of the key.

| MasstreeBorderPage* const foedus::storage::masstree::ReserveRecords::target_ |

The page in which to install a new physical record.

Definition at line 61 of file masstree_reserve_impl.hpp.

Referenced by run().