
libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

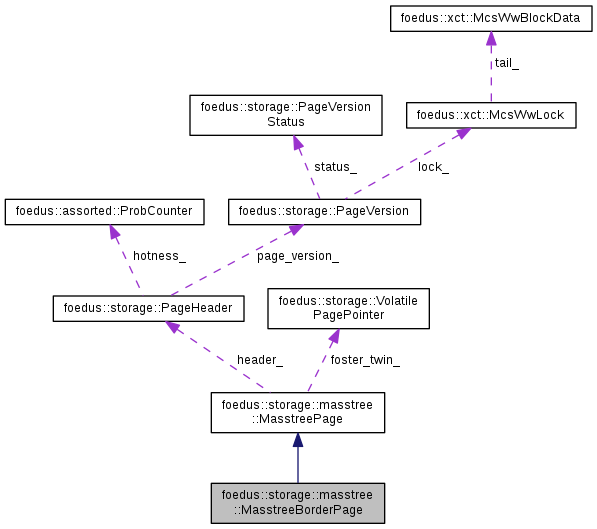

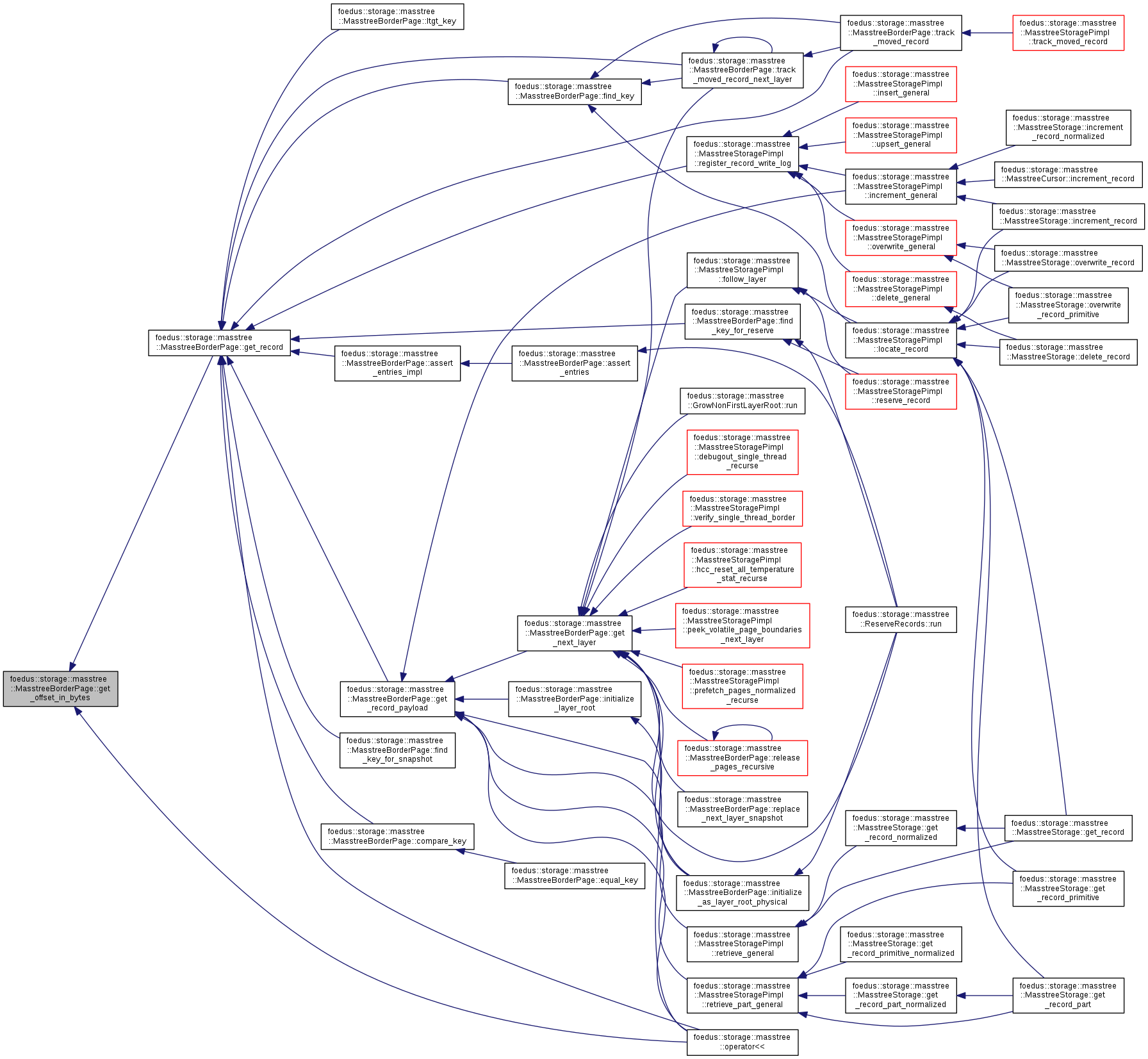

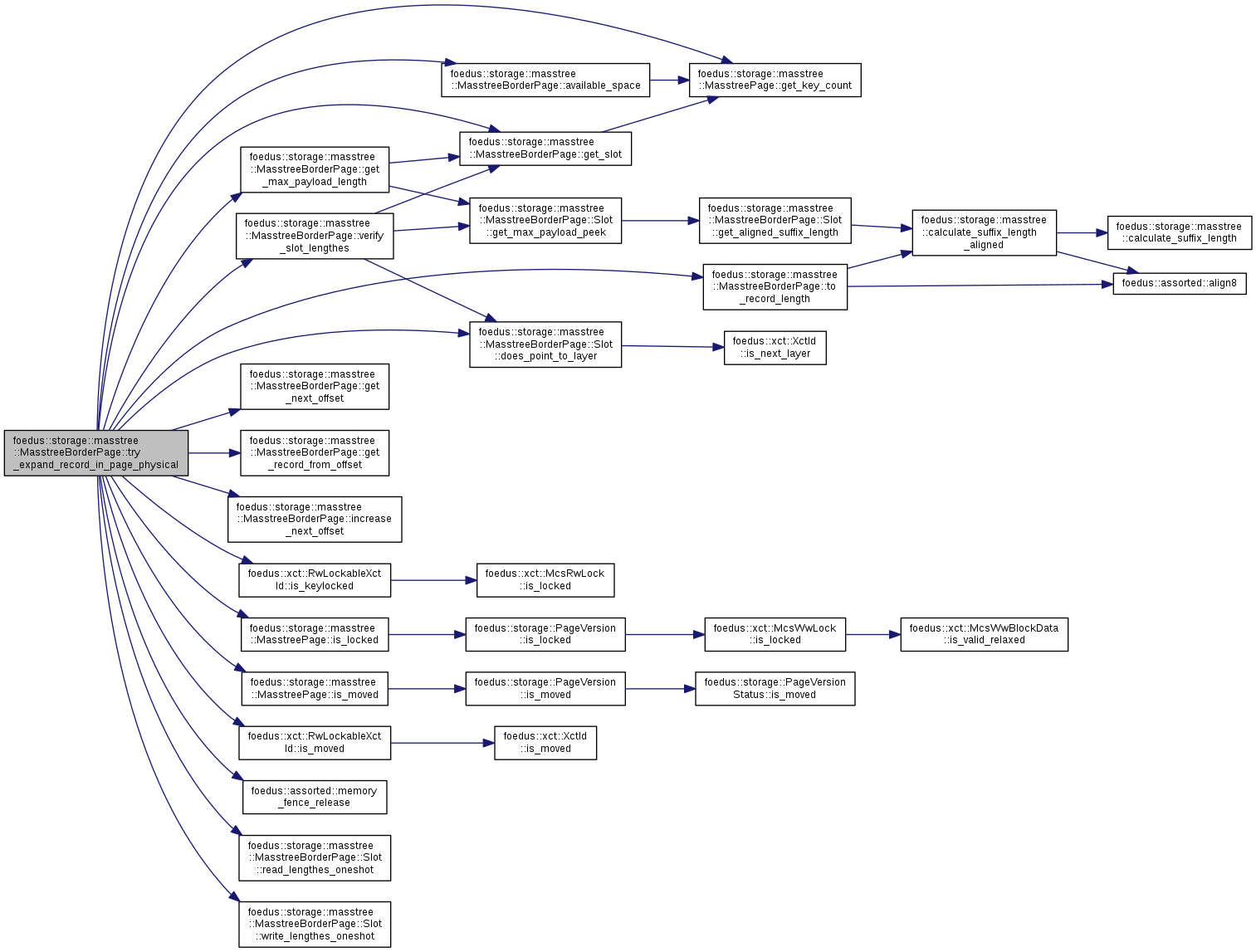


Represents one border page in Masstree Storage.

| Border page layout |
|---|
| Headers, including common part and border-specific part |
| Record Data part, which grows forward |
| Unused part |
| Slot part (32 bytes per record), which grows backward |

Definition at line 444 of file masstree_page_impl.hpp.

#include <masstree_page_impl.hpp>
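To make the layout above concrete, here is a minimal sketch of how the two growing regions share one page. Record data grows forward from the start of the data region, 32-byte slots grow backward from the page end, and the unused part is whatever lies between them. The page size (4096) and header size (128) are hypothetical placeholders, and everything in `namespace sketch` is illustrative, not the FOEDUS API.

```cpp
#include <cassert>
#include <cstdint>

namespace sketch {
// Hypothetical sizes chosen only for illustration; FOEDUS defines its own.
constexpr uint32_t kPageSize = 4096;
constexpr uint32_t kHeaderSize = 128;
constexpr uint32_t kSlotSize = 32;  // "32 bytes per record" from the layout table
constexpr uint32_t kDataSize = kPageSize - kHeaderSize;  // records + unused + slots

// Slots grow backward: slot 0 occupies the last kSlotSize bytes of the page.
inline uint32_t slot_position(uint32_t index) {
  return kPageSize - (index + 1) * kSlotSize;
}

// Records grow forward: the next record starts next_offset bytes past the header.
inline uint32_t record_position(uint32_t next_offset) {
  return kHeaderSize + next_offset;
}

// Bytes left between the end of record data and the lowest slot in use.
inline uint32_t unused_bytes(uint32_t next_offset, uint32_t key_count) {
  uint32_t consumed = next_offset + key_count * kSlotSize;
  assert(consumed <= kDataSize);  // the two regions must never overlap
  return kDataSize - consumed;
}
}  // namespace sketch
```

In this model a new record fits only while the unused part can hold both its data and one more slot, which is the kind of question available_space() and can_accomodate() appear to answer.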

Classes

| Kind | Name | Description |
|---|---|---|
| struct | FindKeyForReserveResult | Return value for find_key_for_reserve(). |
| struct | Slot | Fixed-size slot for each record, placed at the end of the data region. |
| struct | SlotLengthPart | A piece of the Slot object that must be read/written in one shot, so that no reader ever sees a half-written value: it reads either the old value or the new value. |
| union | SlotLengthUnion | |

Public Types

| Kind | Name | Description |
|---|---|---|
| enum | MatchType { kNotFound = 0, kExactMatchLocalRecord = 1, kExactMatchLayerPointer = 2, kConflictingLocalRecord = 3 } | Used in FindKeyForReserveResult. |

Public Member Functions | |

| MasstreeBorderPage ()=delete | |

| MasstreeBorderPage (const MasstreeBorderPage &other)=delete | |

| MasstreeBorderPage & | operator= (const MasstreeBorderPage &other)=delete |

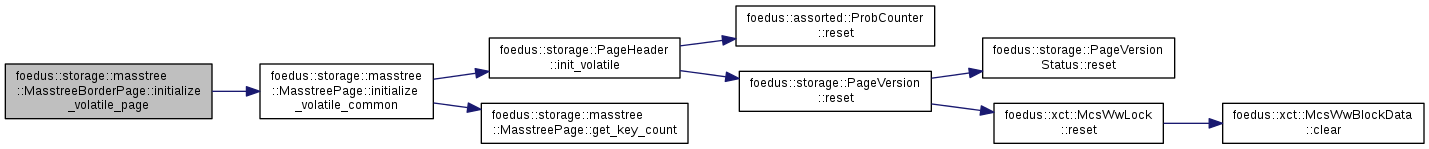

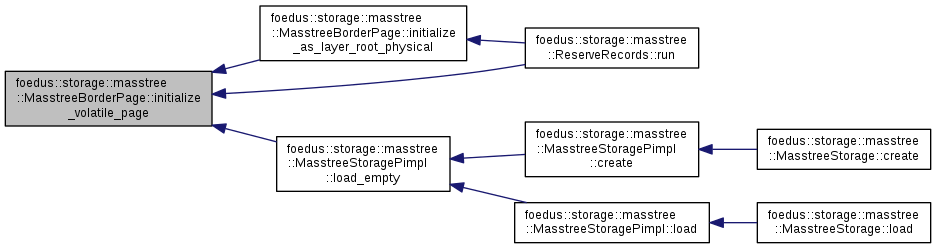

| void | initialize_volatile_page (StorageId storage_id, VolatilePagePointer page_id, uint8_t layer, KeySlice low_fence, KeySlice high_fence) |

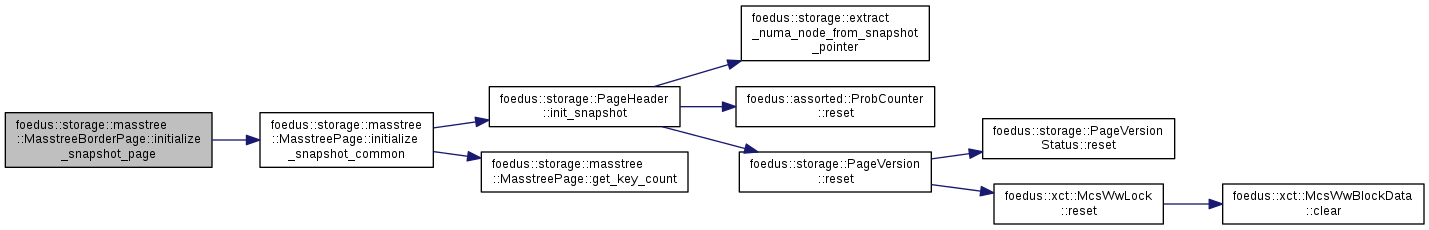

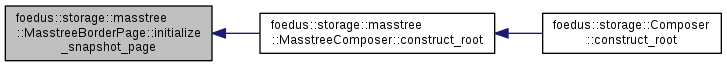

| void | initialize_snapshot_page (StorageId storage_id, SnapshotPagePointer page_id, uint8_t layer, KeySlice low_fence, KeySlice high_fence) |

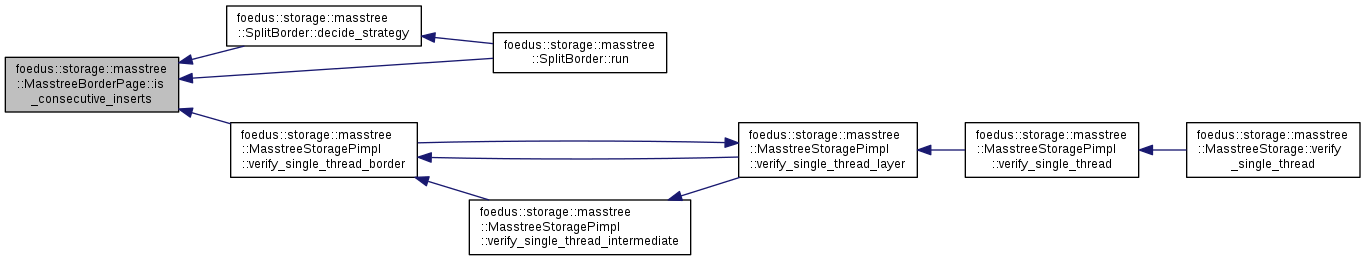

| bool | is_consecutive_inserts () const |

| Whether this page is receiving only sequential inserts. More... | |

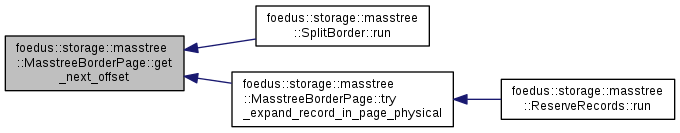

| DataOffset | get_next_offset () const |

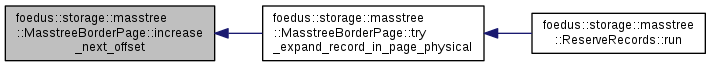

| void | increase_next_offset (DataOffset length) |

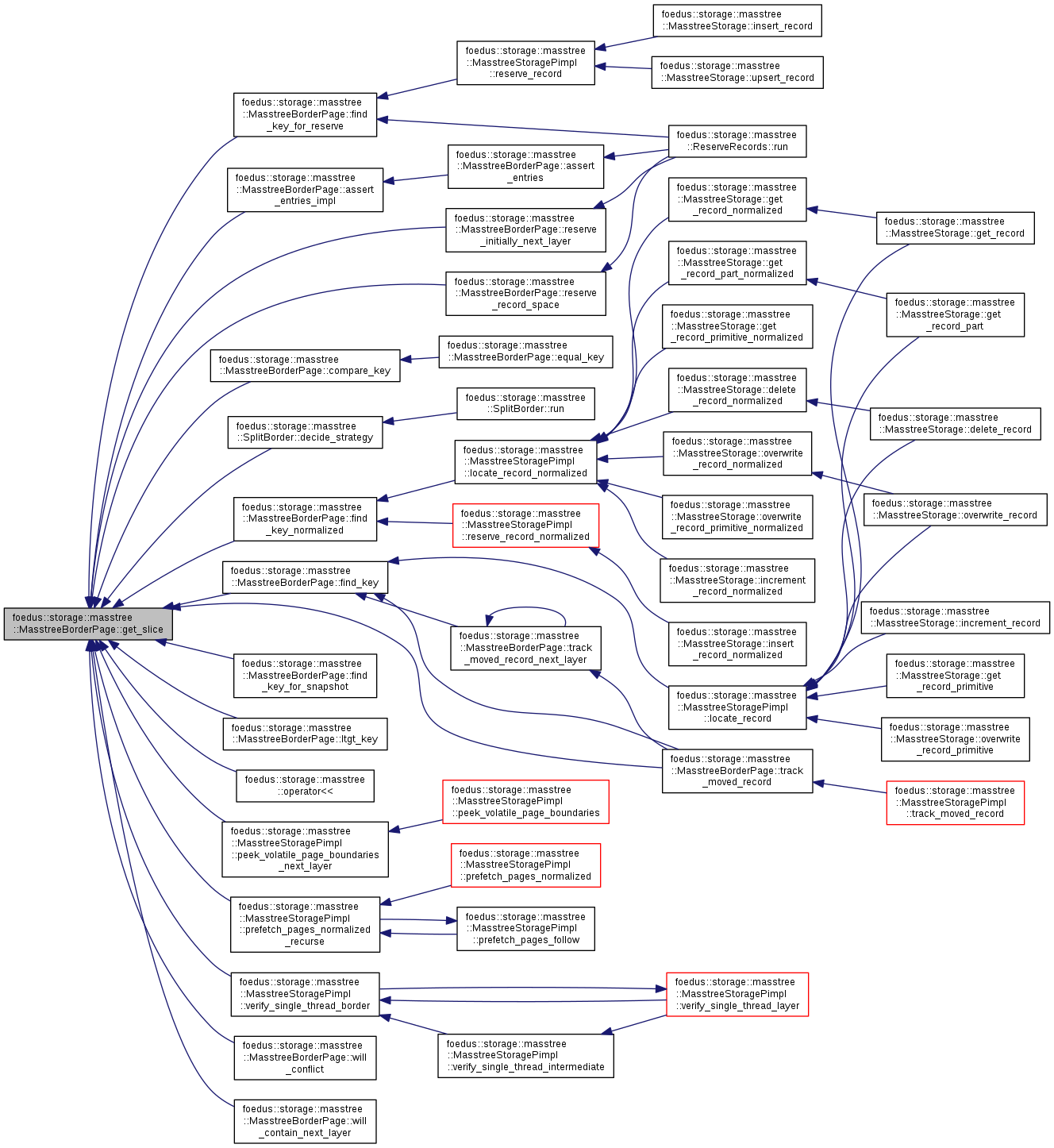

| const Slot * | get_slot (SlotIndex index) const __attribute__((always_inline)) |

| Slot * | get_slot (SlotIndex index) __attribute__((always_inline)) |

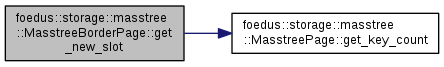

| Slot * | get_new_slot (SlotIndex index) __attribute__((always_inline)) |

| SlotIndex | to_slot_index (const Slot *slot) const __attribute__((always_inline)) |

| DataOffset | available_space () const |

| Returns usable data space in bytes. More... | |

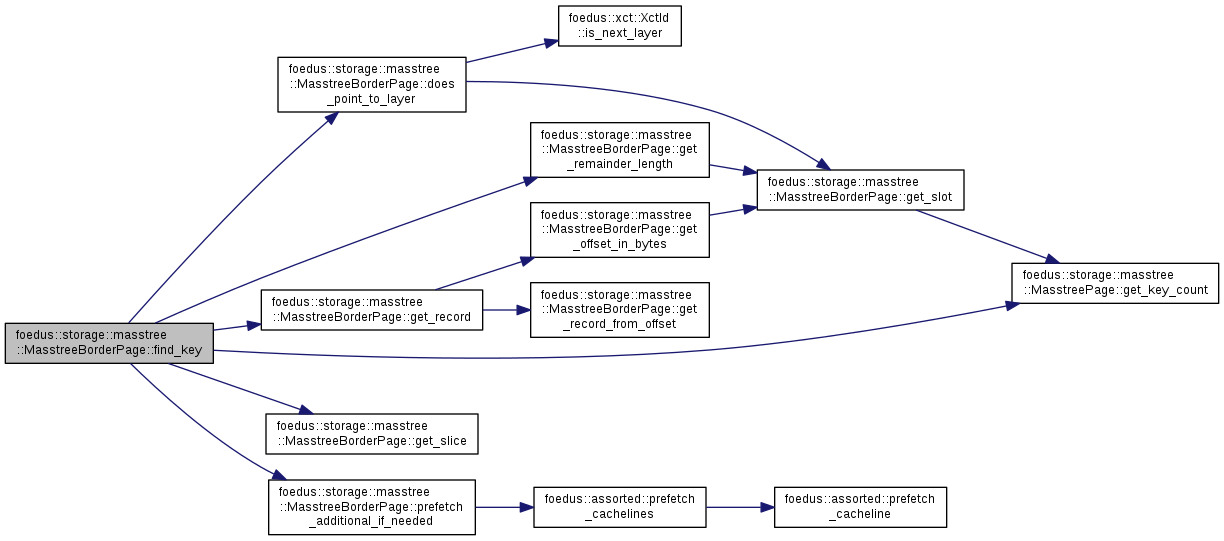

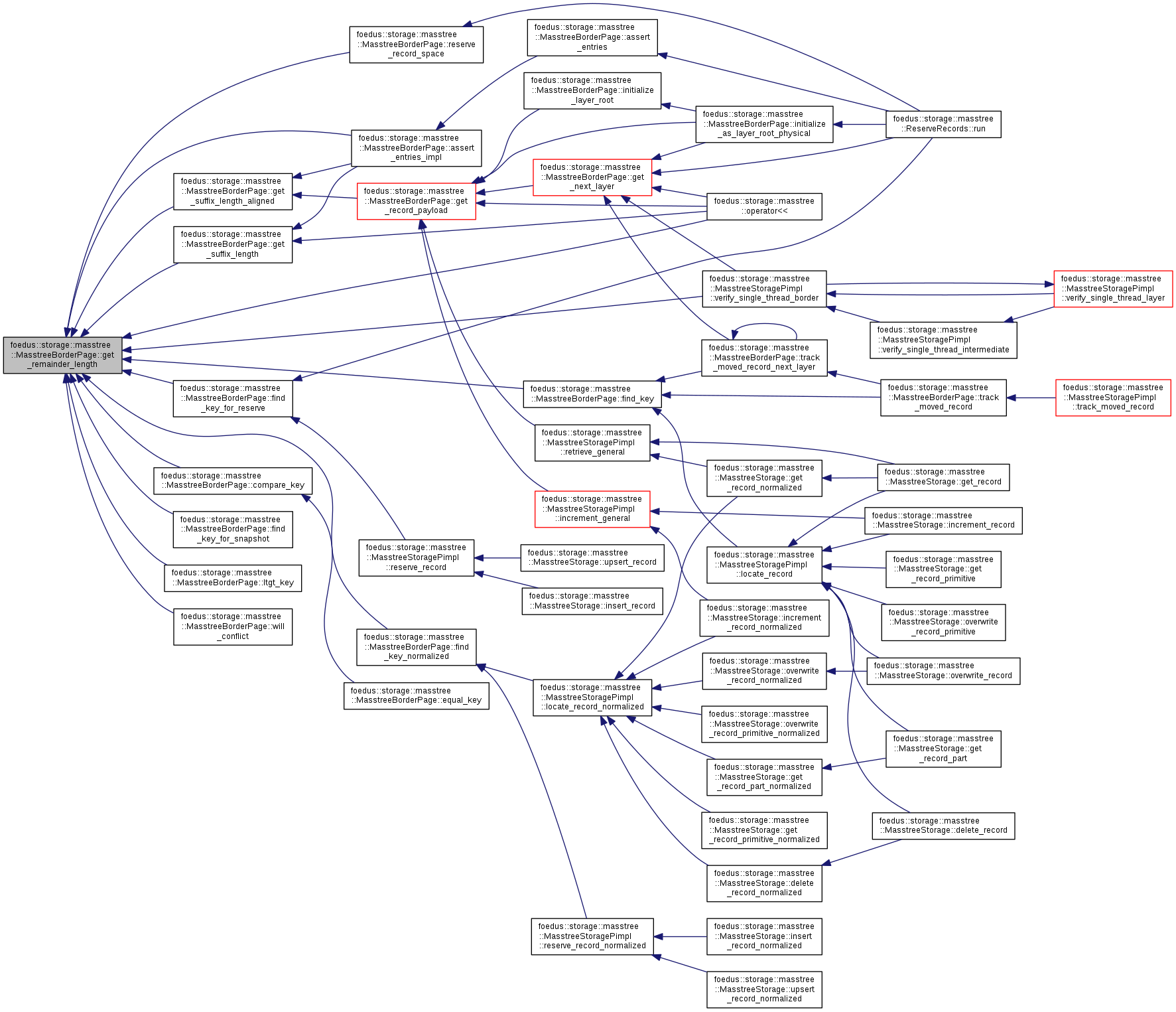

| SlotIndex | find_key (KeySlice slice, const void *suffix, KeyLength remainder) const __attribute__((always_inline)) |

| Navigates a searching key-slice to one of the record in this page. More... | |

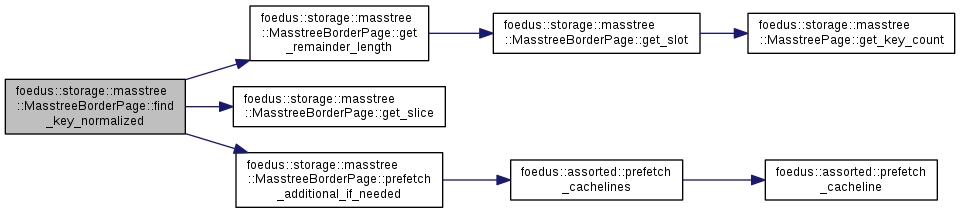

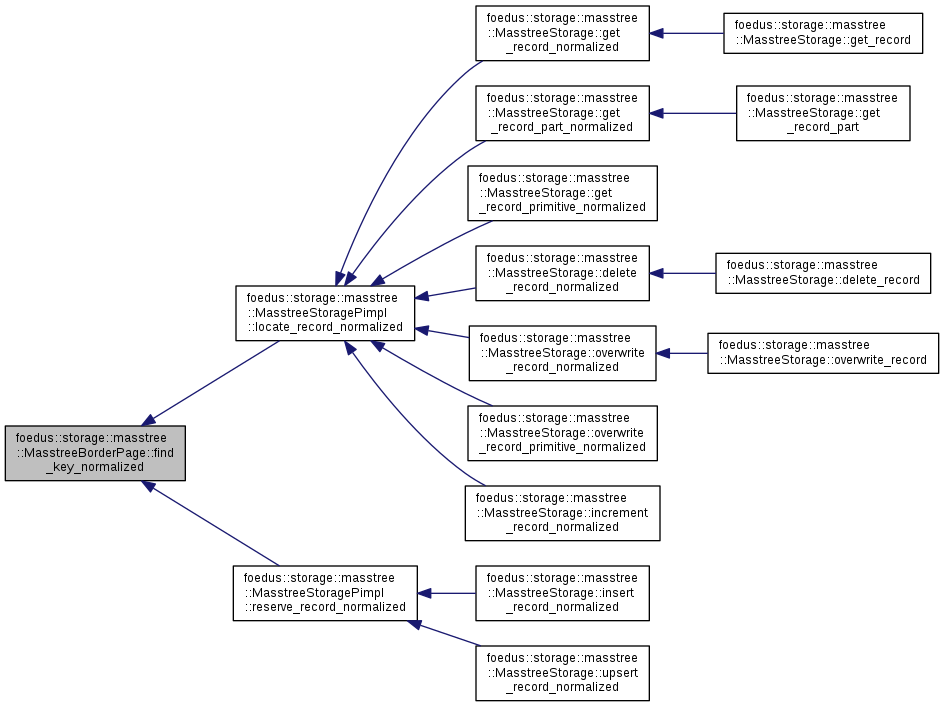

| SlotIndex | find_key_normalized (SlotIndex from_index, SlotIndex to_index, KeySlice slice) const __attribute__((always_inline)) |

| Specialized version for 8 byte native integer search. More... | |

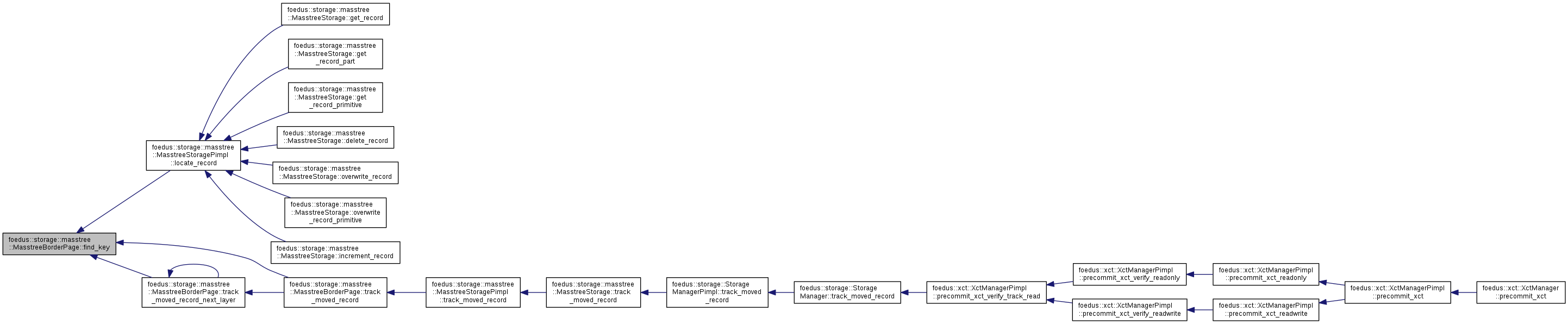

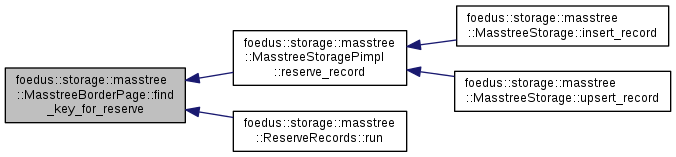

| FindKeyForReserveResult | find_key_for_reserve (SlotIndex from_index, SlotIndex to_index, KeySlice slice, const void *suffix, KeyLength remainder) const __attribute__((always_inline)) |

| This is for the case we are looking for either the matching slot or the slot we will modify. More... | |

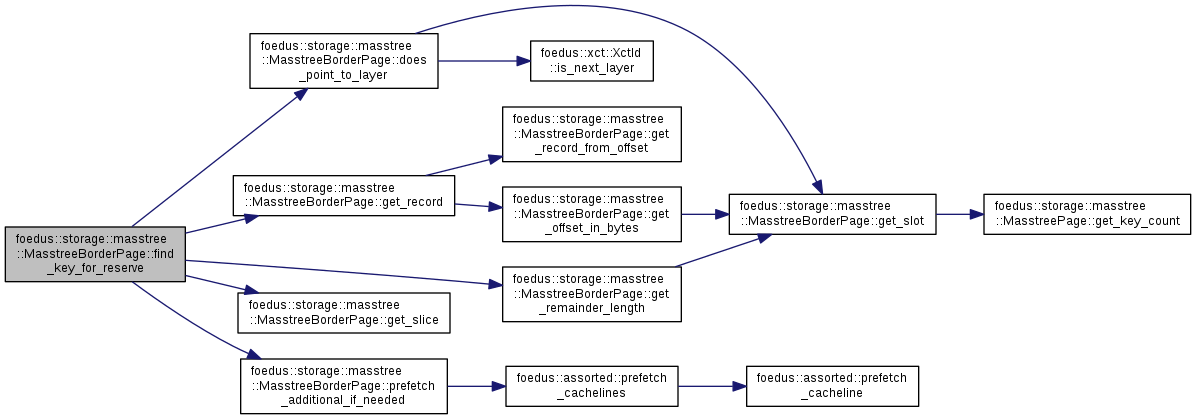

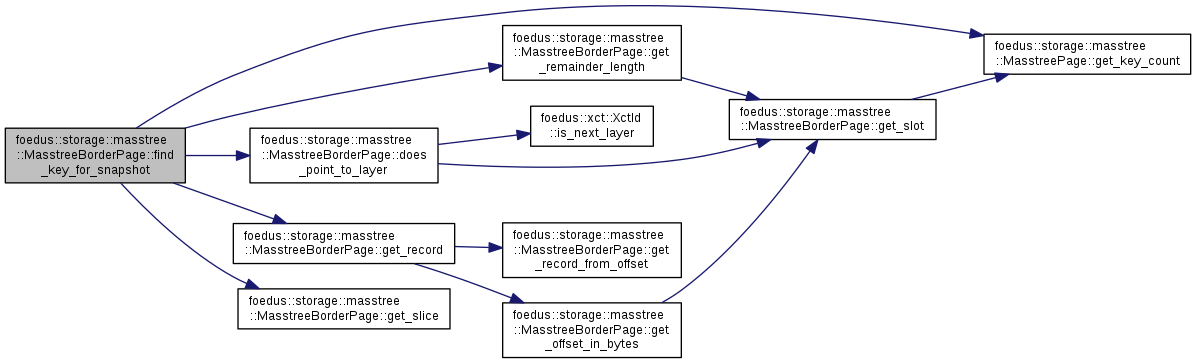

| FindKeyForReserveResult | find_key_for_snapshot (KeySlice slice, const void *suffix, KeyLength remainder) const __attribute__((always_inline)) |

| This one is used for snapshot pages. More... | |

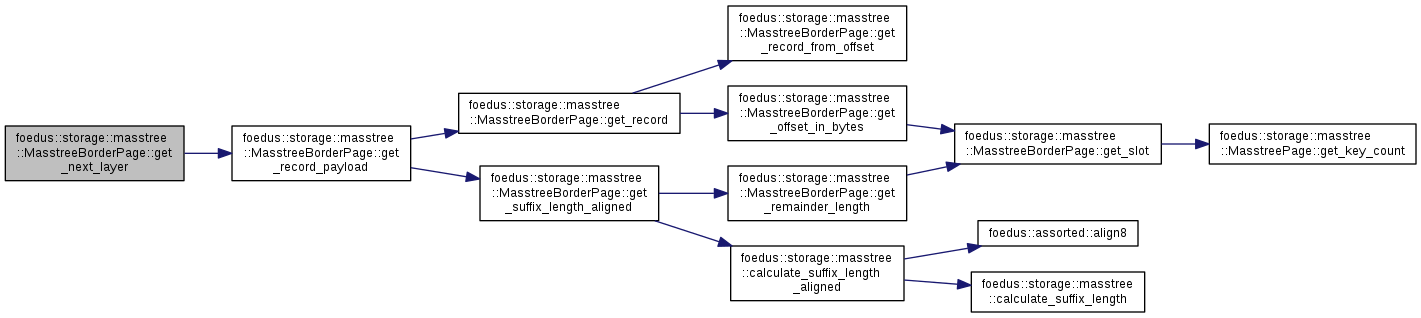

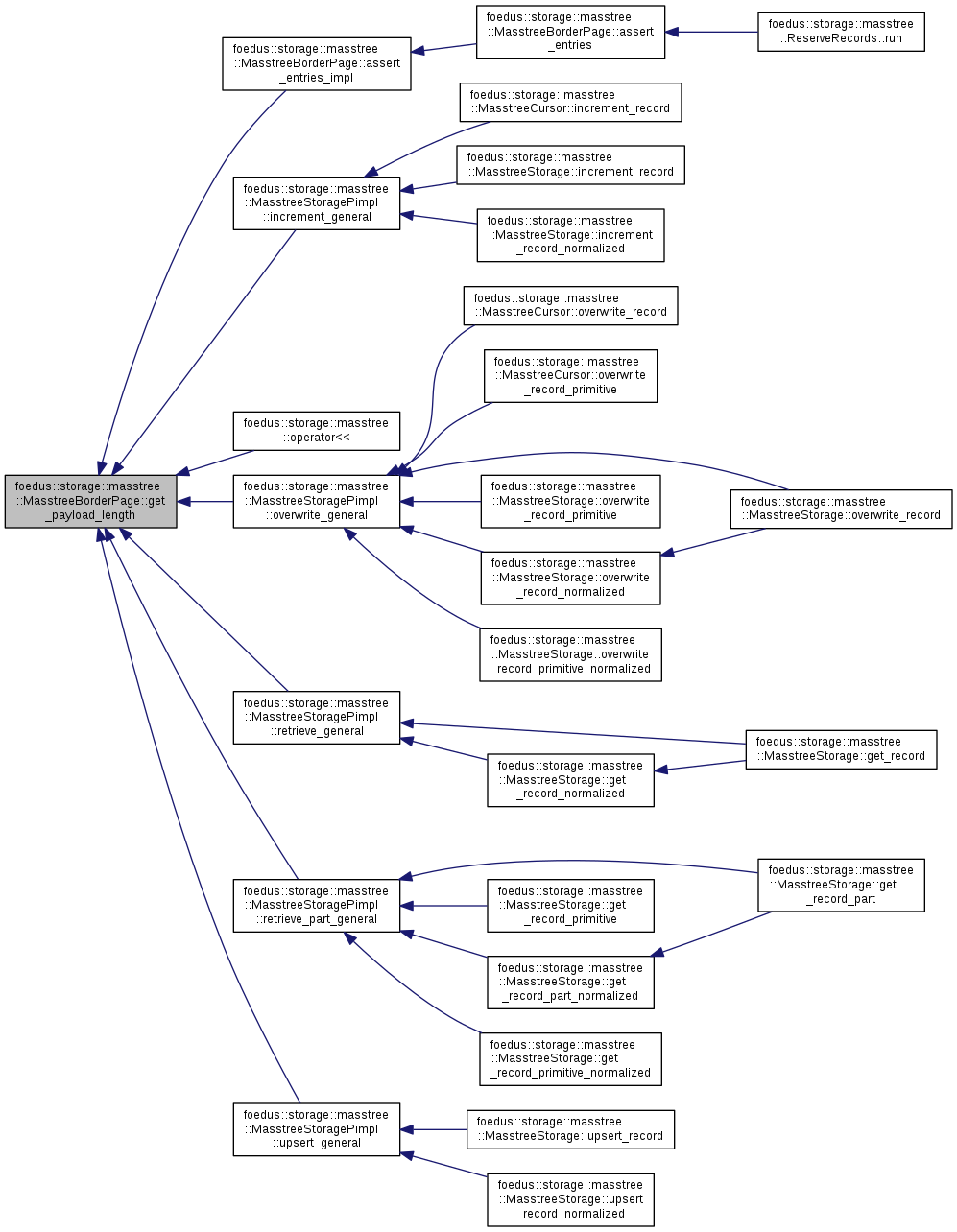

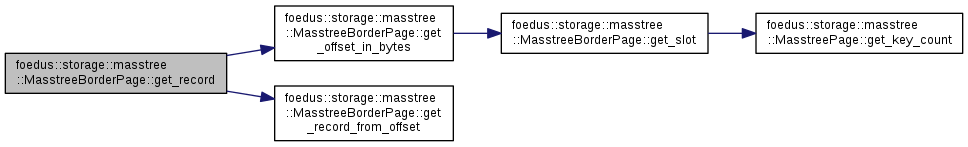

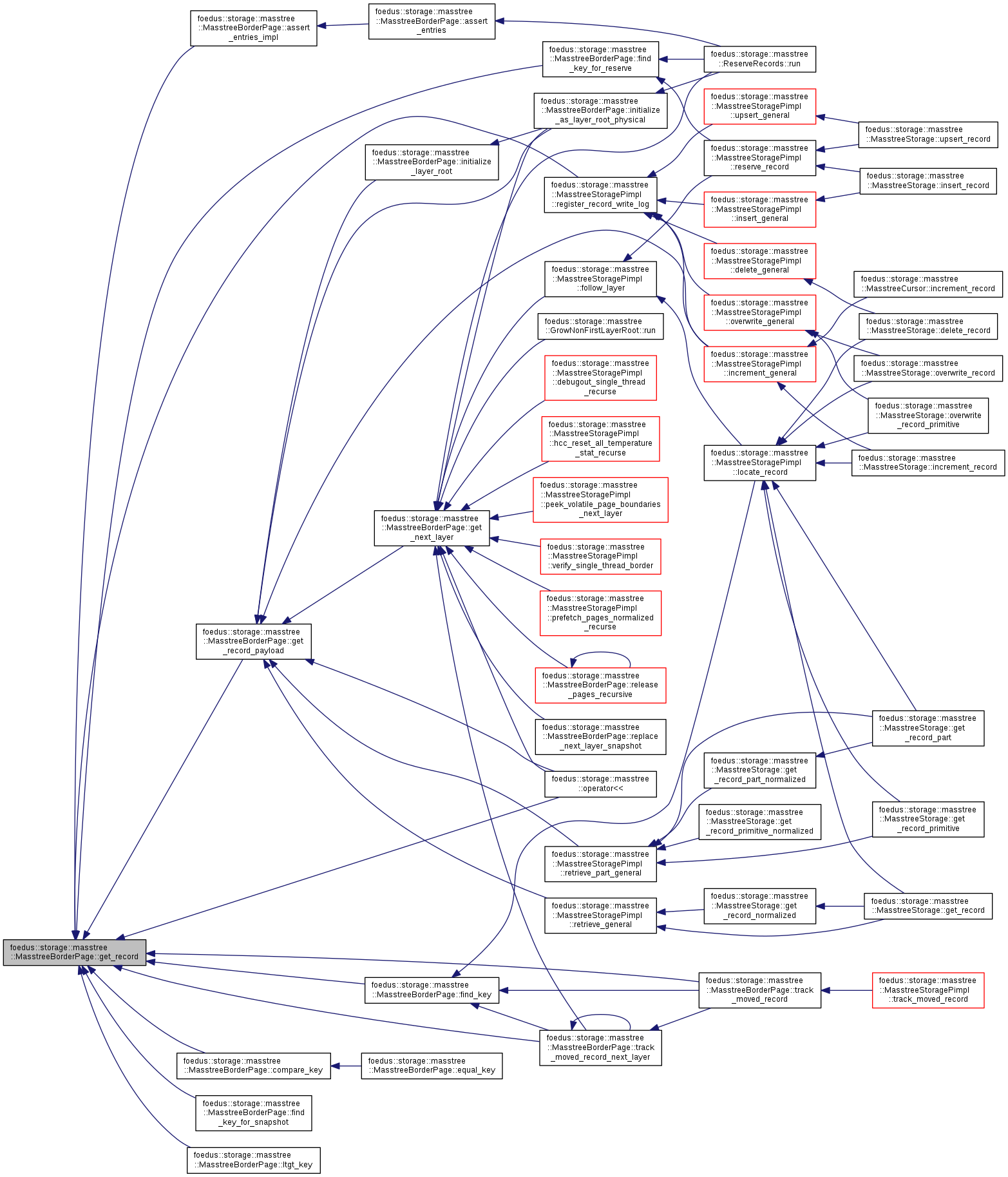

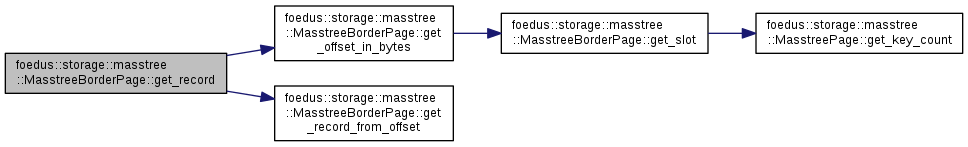

| char * | get_record (SlotIndex index) __attribute__((always_inline)) |

| const char * | get_record (SlotIndex index) const __attribute__((always_inline)) |

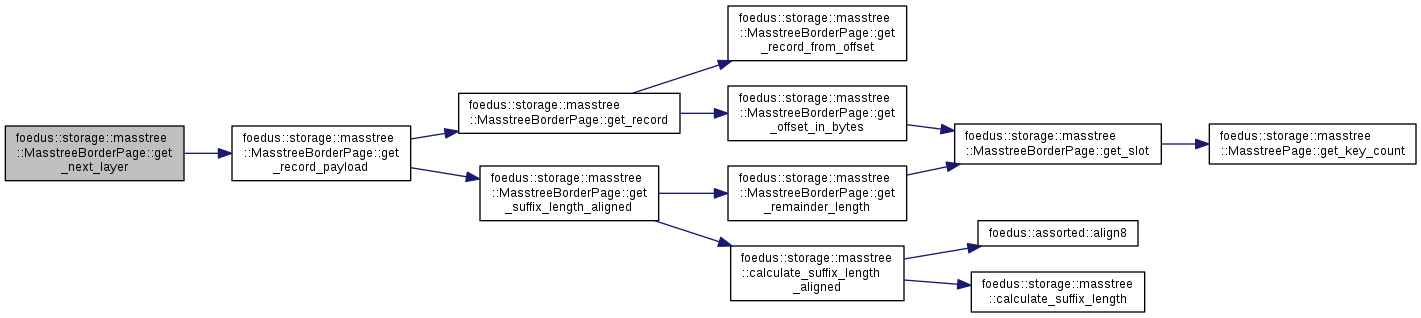

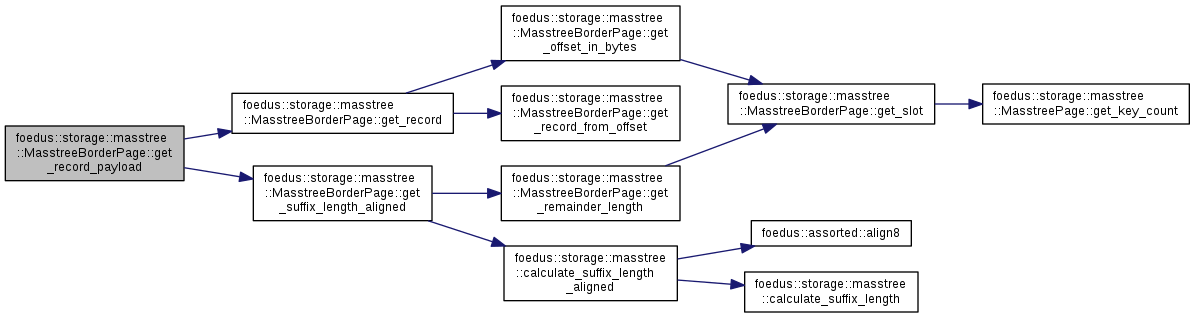

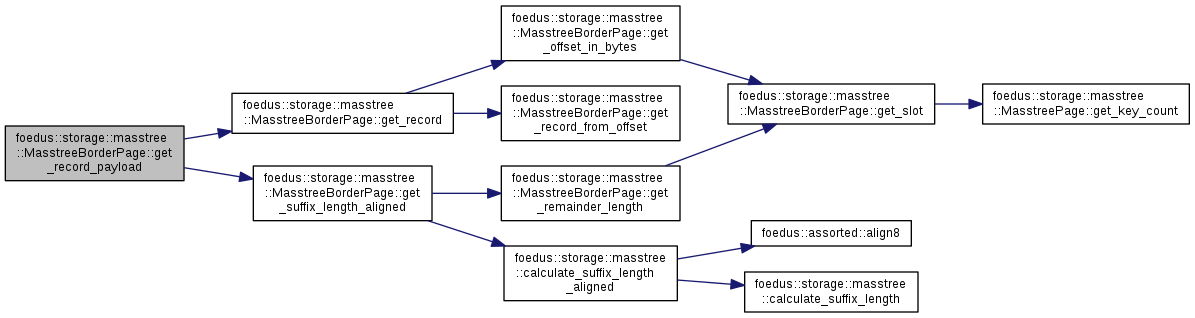

| char * | get_record_payload (SlotIndex index) __attribute__((always_inline)) |

| const char * | get_record_payload (SlotIndex index) const __attribute__((always_inline)) |

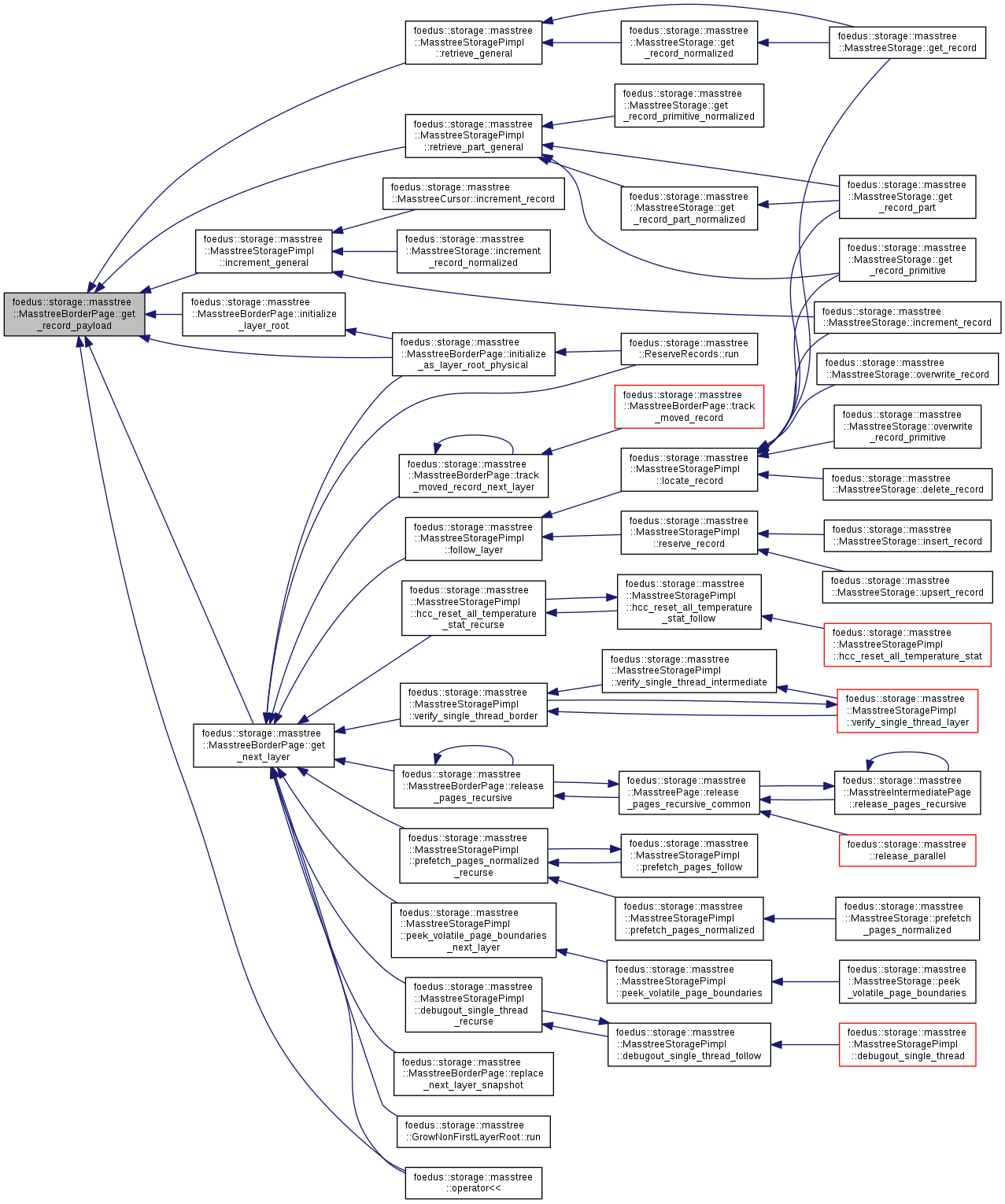

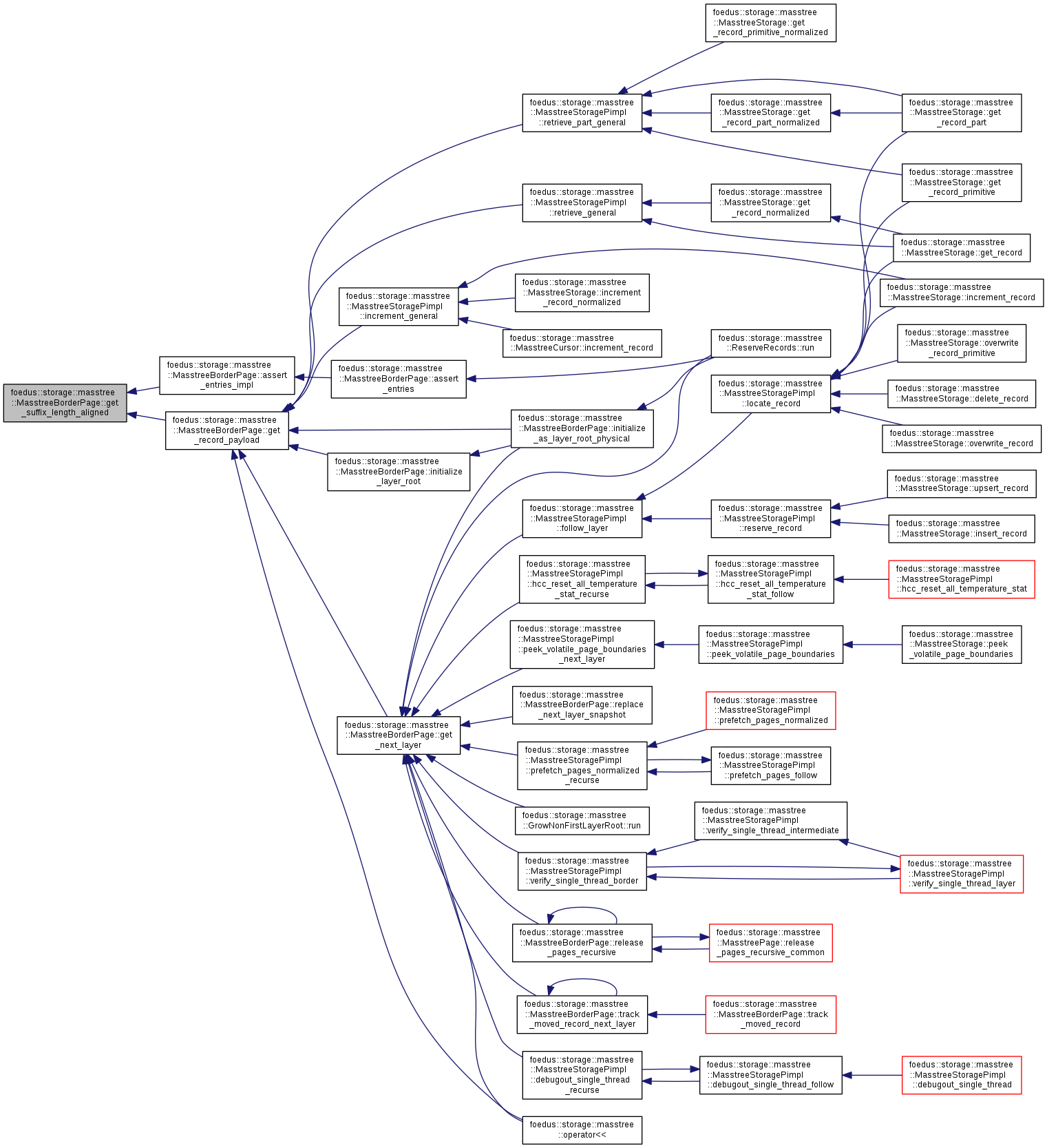

| DualPagePointer * | get_next_layer (SlotIndex index) __attribute__((always_inline)) |

| const DualPagePointer * | get_next_layer (SlotIndex index) const __attribute__((always_inline)) |

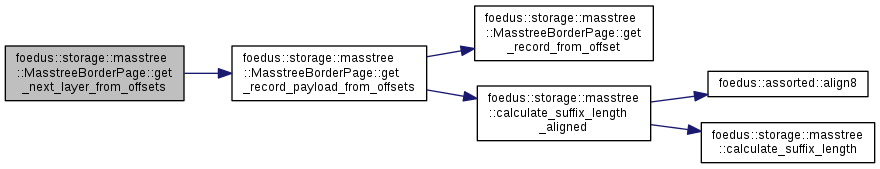

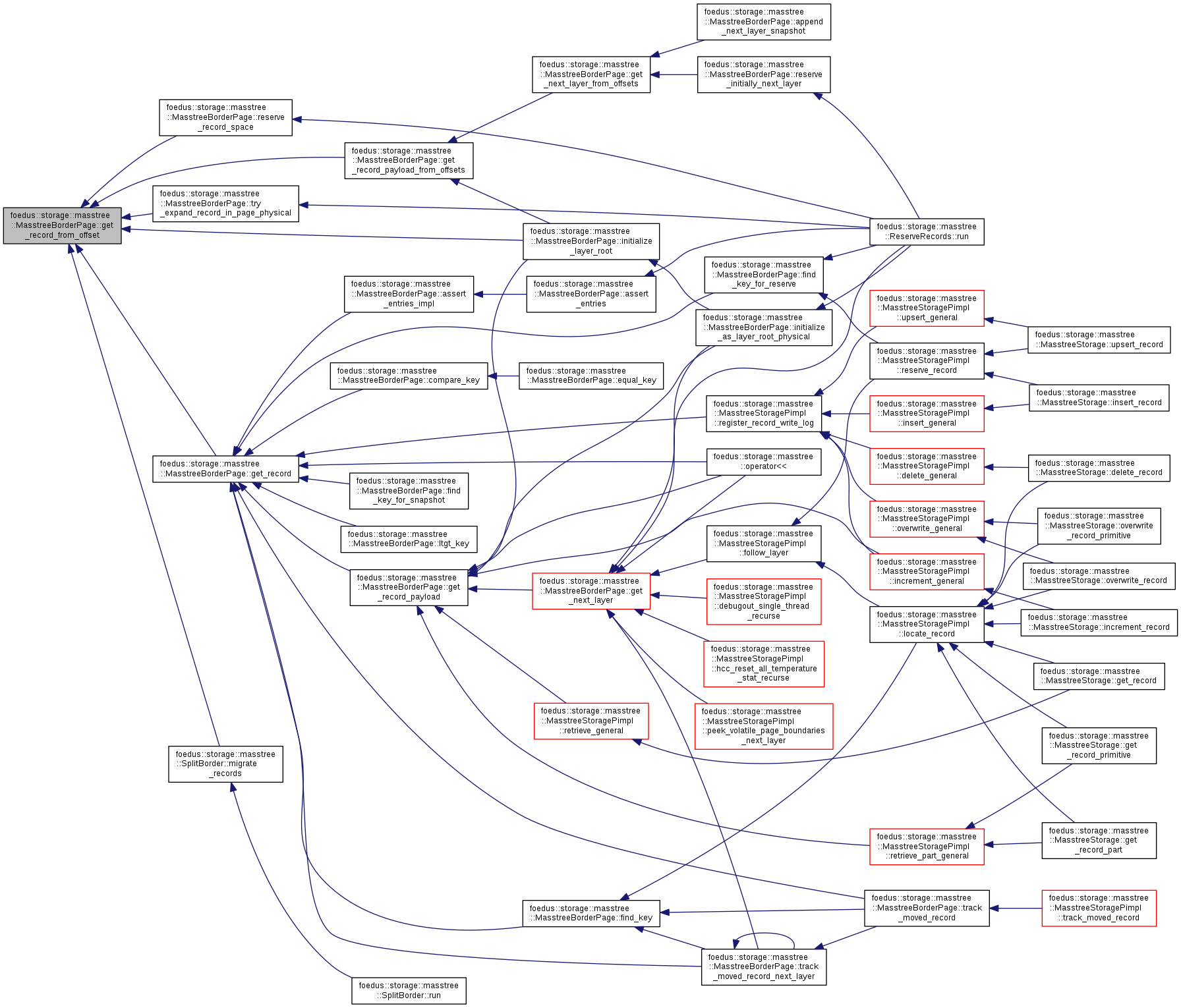

| char * | get_record_from_offset (DataOffset record_offset) __attribute__((always_inline)) |

| Offset versions. More... | |

| const char * | get_record_from_offset (DataOffset record_offset) const __attribute__((always_inline)) |

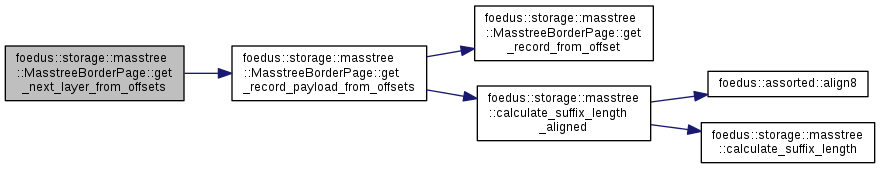

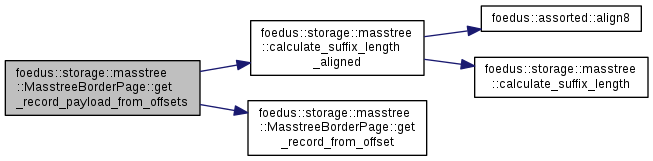

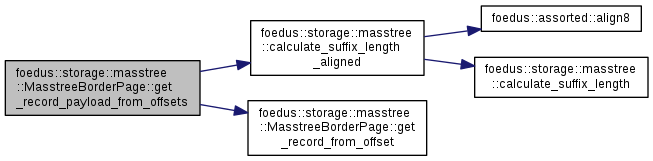

| const char * | get_record_payload_from_offsets (DataOffset record_offset, KeyLength remainder_length) const __attribute__((always_inline)) |

| char * | get_record_payload_from_offsets (DataOffset record_offset, KeyLength remainder_length) __attribute__((always_inline)) |

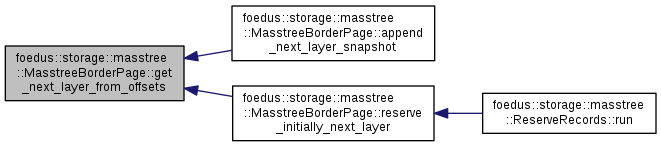

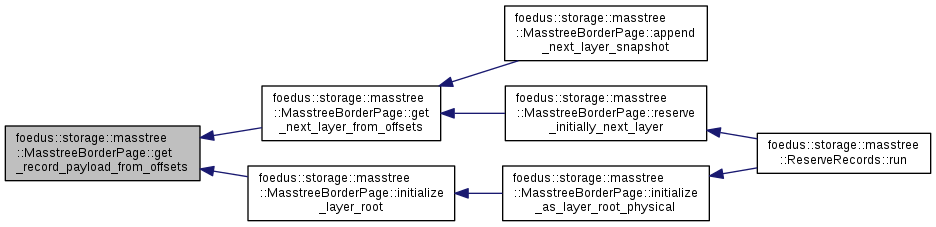

| DualPagePointer * | get_next_layer_from_offsets (DataOffset record_offset, KeyLength remainder_length) __attribute__((always_inline)) |

| const DualPagePointer * | get_next_layer_from_offsets (DataOffset record_offset, KeyLength remainder_length) const __attribute__((always_inline)) |

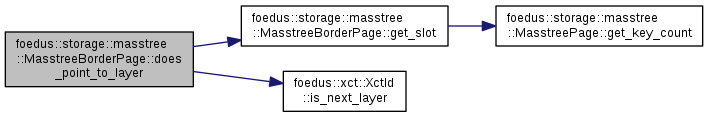

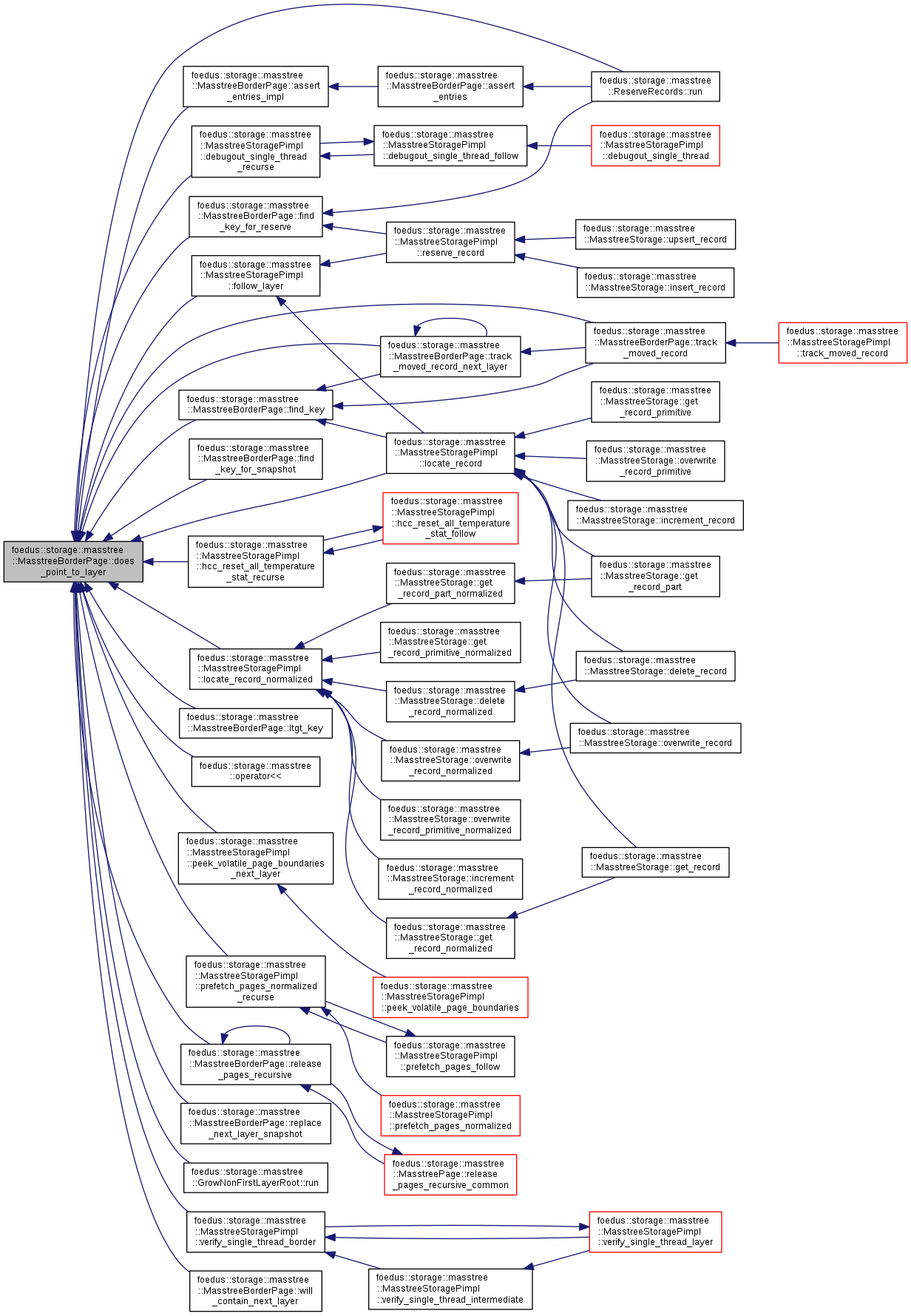

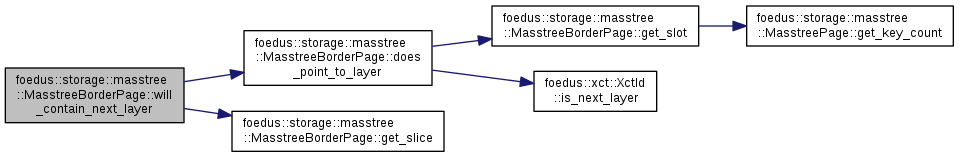

| bool | does_point_to_layer (SlotIndex index) const __attribute__((always_inline)) |

| KeySlice | get_slice (SlotIndex index) const __attribute__((always_inline)) |

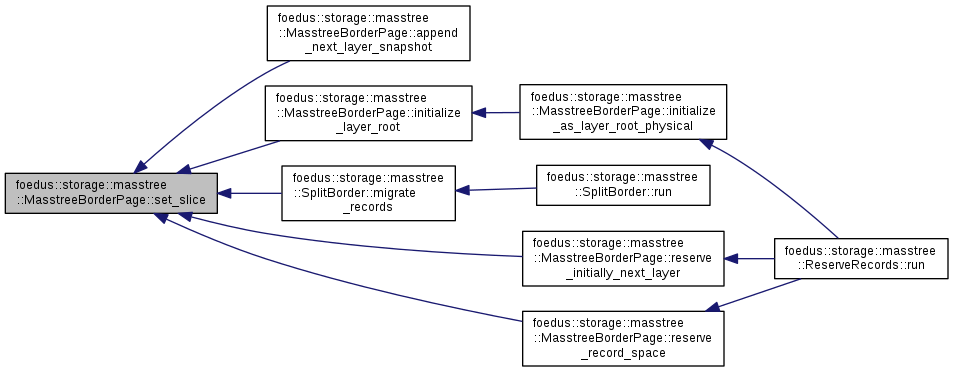

| void | set_slice (SlotIndex index, KeySlice slice) __attribute__((always_inline)) |

| DataOffset | get_offset_in_bytes (SlotIndex index) const __attribute__((always_inline)) |

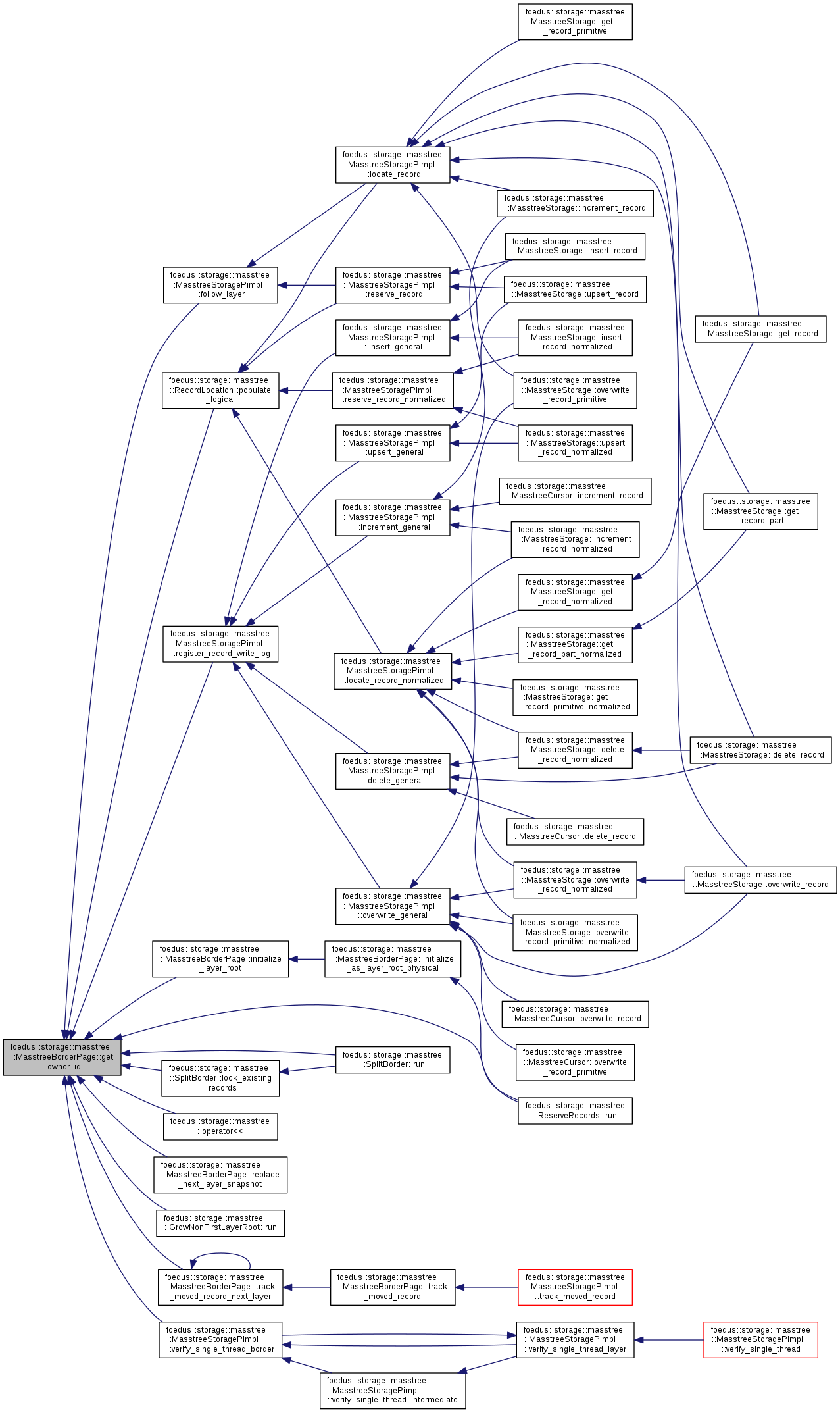

| xct::RwLockableXctId * | get_owner_id (SlotIndex index) __attribute__((always_inline)) |

| const xct::RwLockableXctId * | get_owner_id (SlotIndex index) const __attribute__((always_inline)) |

| KeyLength | get_remainder_length (SlotIndex index) const __attribute__((always_inline)) |

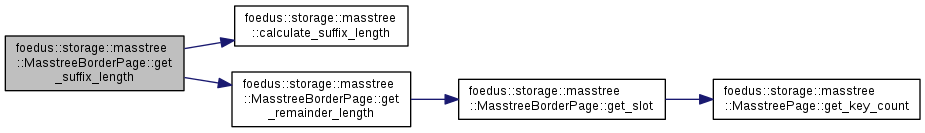

| KeyLength | get_suffix_length (SlotIndex index) const __attribute__((always_inline)) |

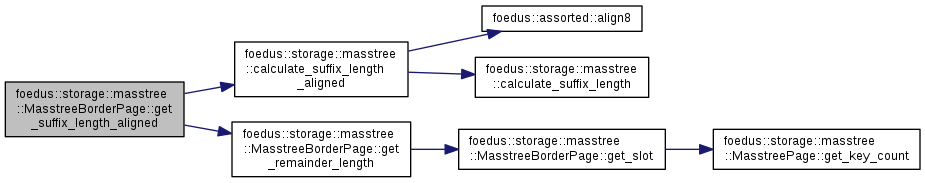

| KeyLength | get_suffix_length_aligned (SlotIndex index) const __attribute__((always_inline)) |

| PayloadLength | get_payload_length (SlotIndex index) const __attribute__((always_inline)) |

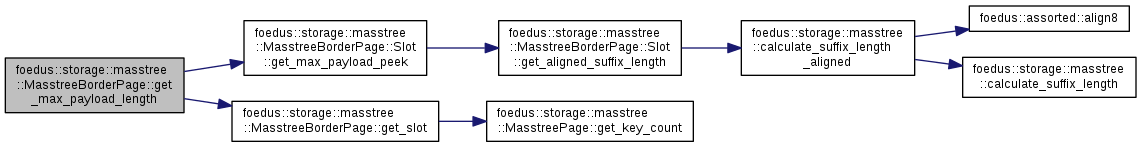

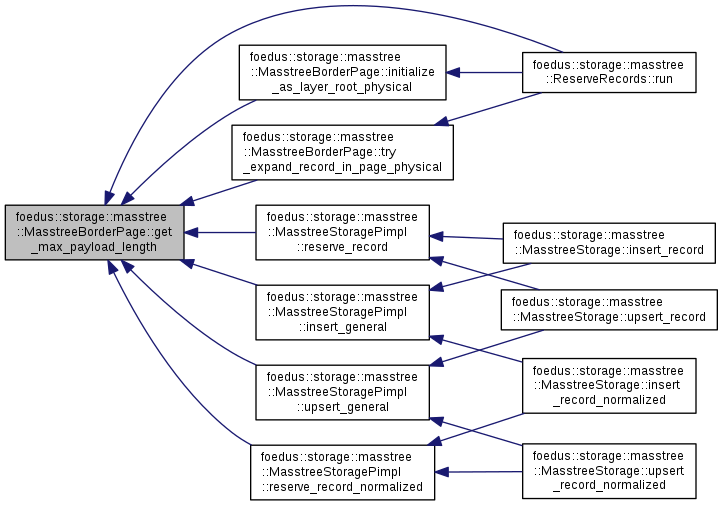

| PayloadLength | get_max_payload_length (SlotIndex index) const __attribute__((always_inline)) |

| bool | can_accomodate (SlotIndex new_index, KeyLength remainder_length, PayloadLength payload_count) const __attribute__((always_inline)) |

| bool | can_accomodate_snapshot (KeyLength remainder_length, PayloadLength payload_count) const __attribute__((always_inline)) |

| Slightly different from can_accomodate() as follows: More... | |

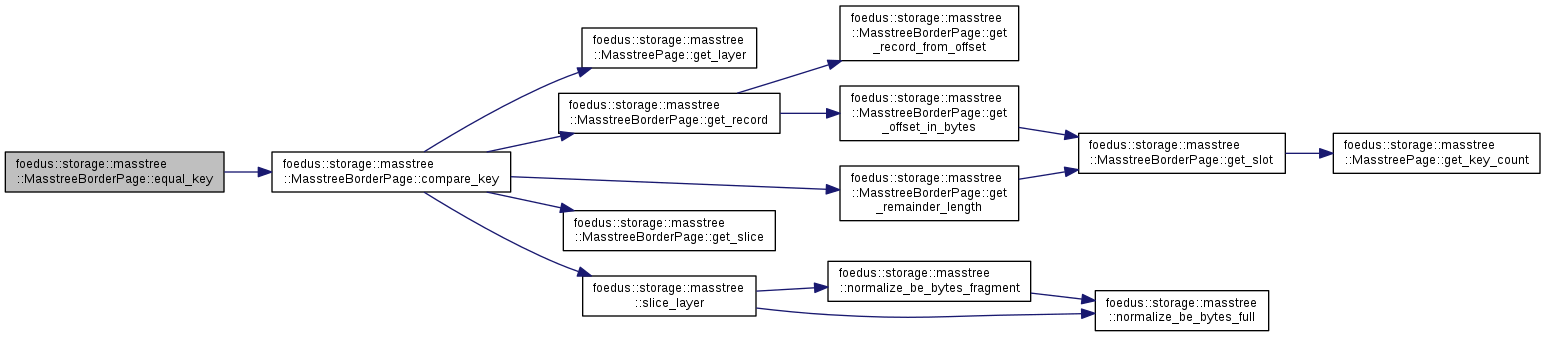

| bool | compare_key (SlotIndex index, const void *be_key, KeyLength key_length) const __attribute__((always_inline)) |

| actually this method should be renamed to equal_key... More... | |

| bool | equal_key (SlotIndex index, const void *be_key, KeyLength key_length) const __attribute__((always_inline)) |

| let's gradually migrate from compare_key() to this. More... | |

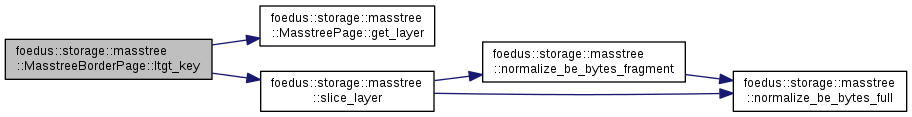

| int | ltgt_key (SlotIndex index, const char *be_key, KeyLength key_length) const __attribute__((always_inline)) |

| compare the key. More... | |

| int | ltgt_key (SlotIndex index, KeySlice slice, const char *suffix, KeyLength remainder) const __attribute__((always_inline)) |

| Overload to receive slice+suffix. More... | |

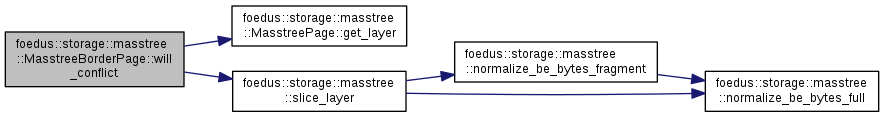

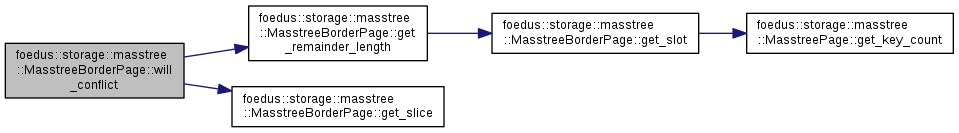

| bool | will_conflict (SlotIndex index, const char *be_key, KeyLength key_length) const __attribute__((always_inline)) |

| Returns whether inserting the key will cause creation of a new next layer. More... | |

| bool | will_conflict (SlotIndex index, KeySlice slice, KeyLength remainder) const __attribute__((always_inline)) |

| Overload to receive slice. More... | |

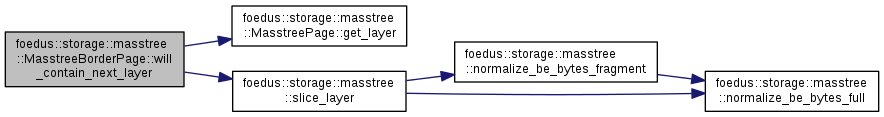

| bool | will_contain_next_layer (SlotIndex index, const char *be_key, KeyLength key_length) const __attribute__((always_inline)) |

| Returns whether the record is a next-layer pointer that would contain the key. More... | |

| bool | will_contain_next_layer (SlotIndex index, KeySlice slice, KeyLength remainder) const __attribute__((always_inline)) |

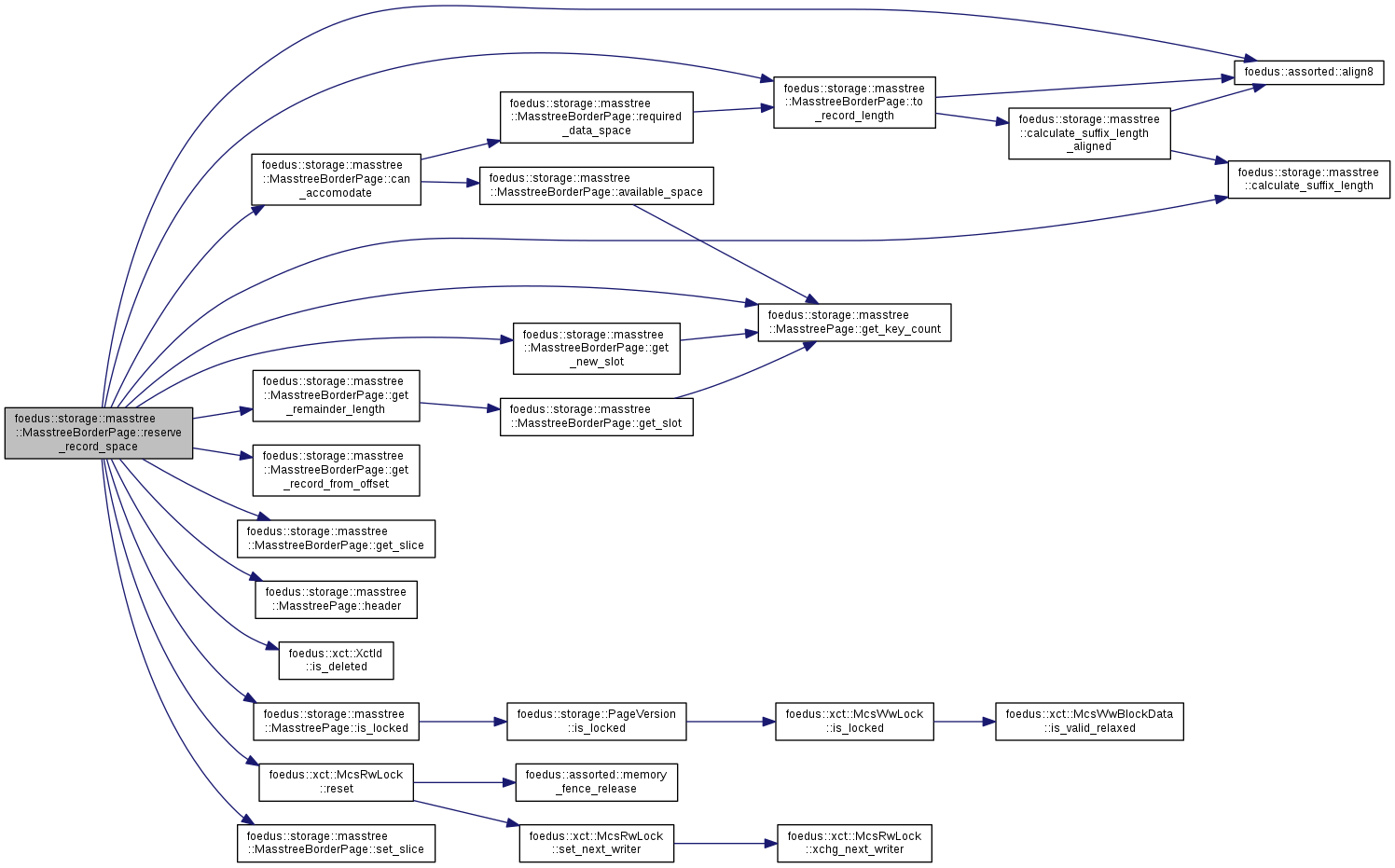

| void | reserve_record_space (SlotIndex index, xct::XctId initial_owner_id, KeySlice slice, const void *suffix, KeyLength remainder_length, PayloadLength payload_count) |

| Installs a new physical record that doesn't exist logically (delete bit on). More... | |

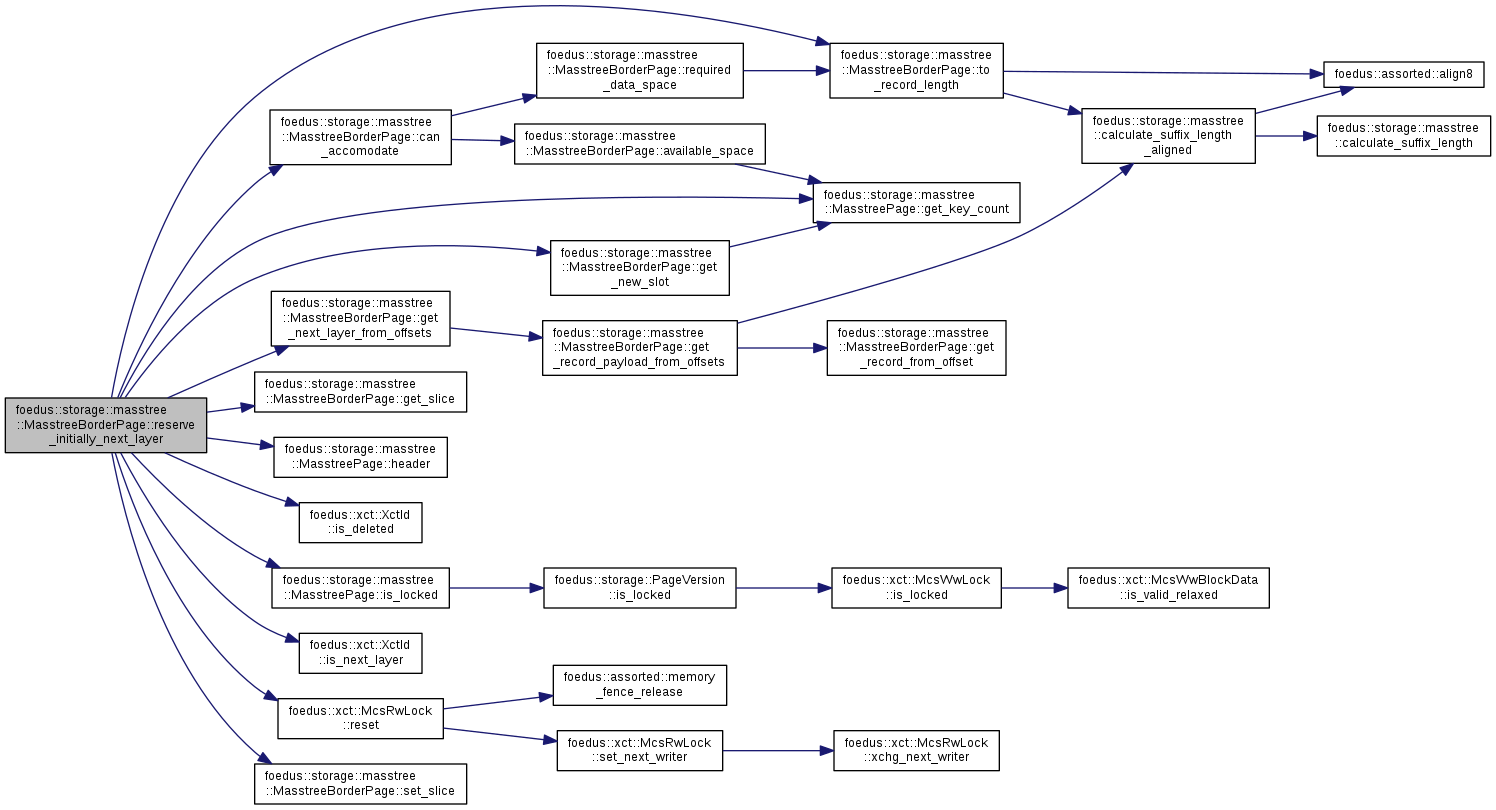

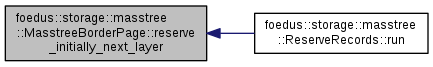

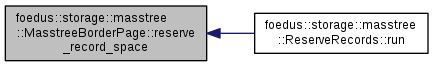

| void | reserve_initially_next_layer (SlotIndex index, xct::XctId initial_owner_id, KeySlice slice, const DualPagePointer &pointer) |

| For creating a record that is initially a next-layer. More... | |

| void | append_next_layer_snapshot (xct::XctId initial_owner_id, KeySlice slice, SnapshotPagePointer pointer) |

| Installs a next layer pointer. More... | |

| void | replace_next_layer_snapshot (SnapshotPagePointer pointer) |

| Same as above, except this is used to transform an existing record at end to a next layer pointer. More... | |

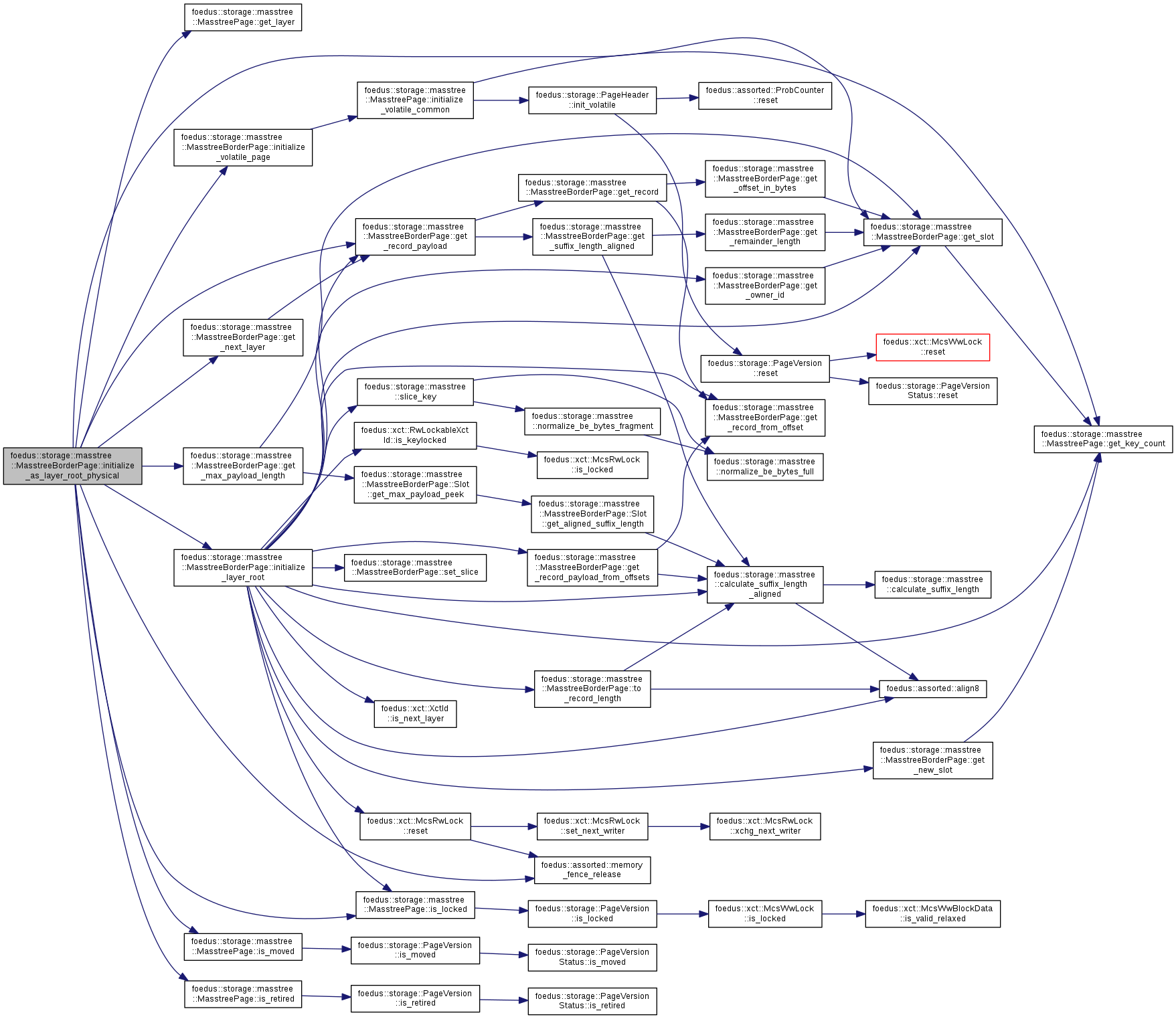

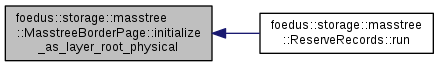

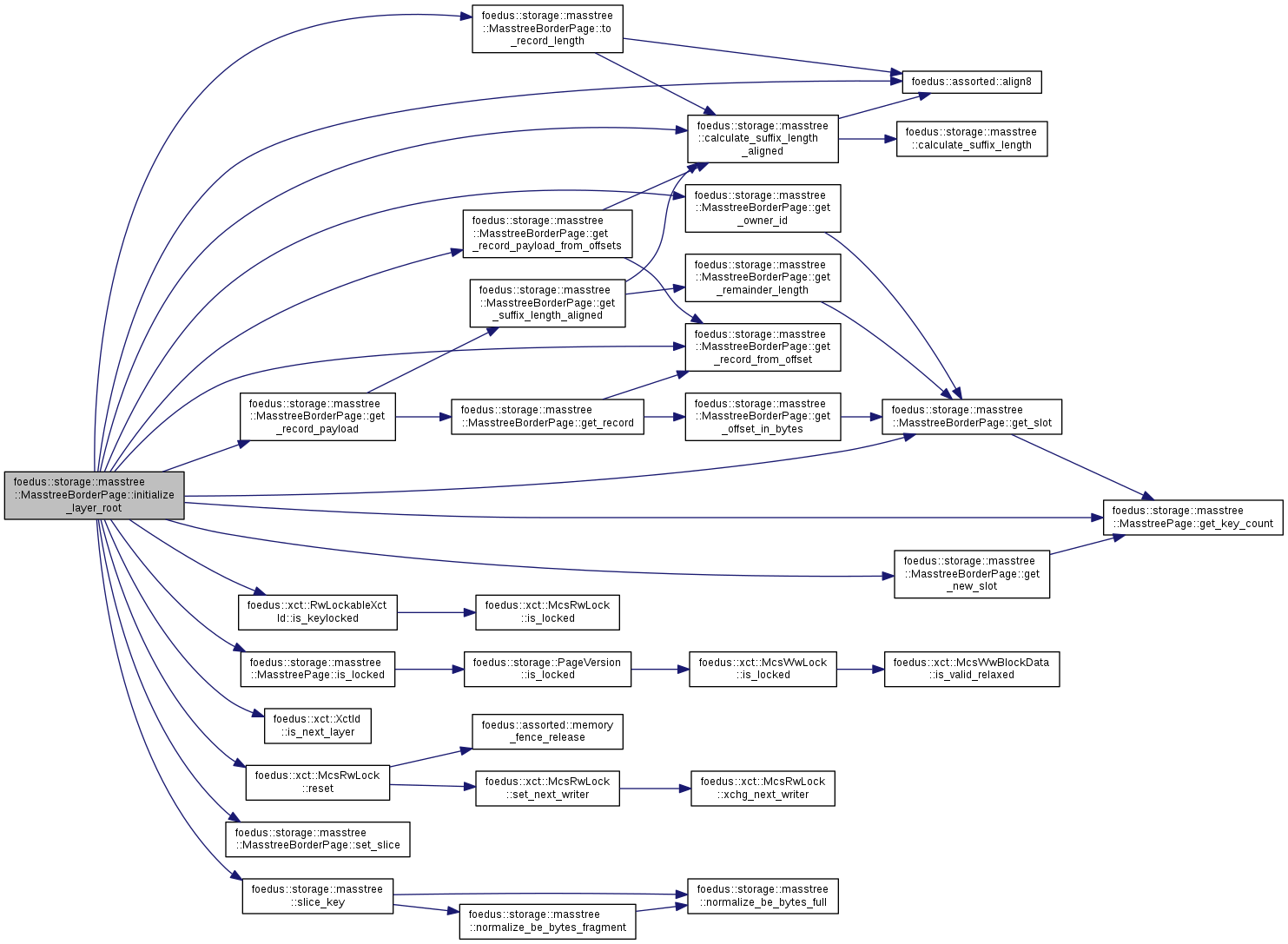

| void | initialize_layer_root (const MasstreeBorderPage *copy_from, SlotIndex copy_index) |

| Copy the initial record that will be the only record for a new root page. More... | |

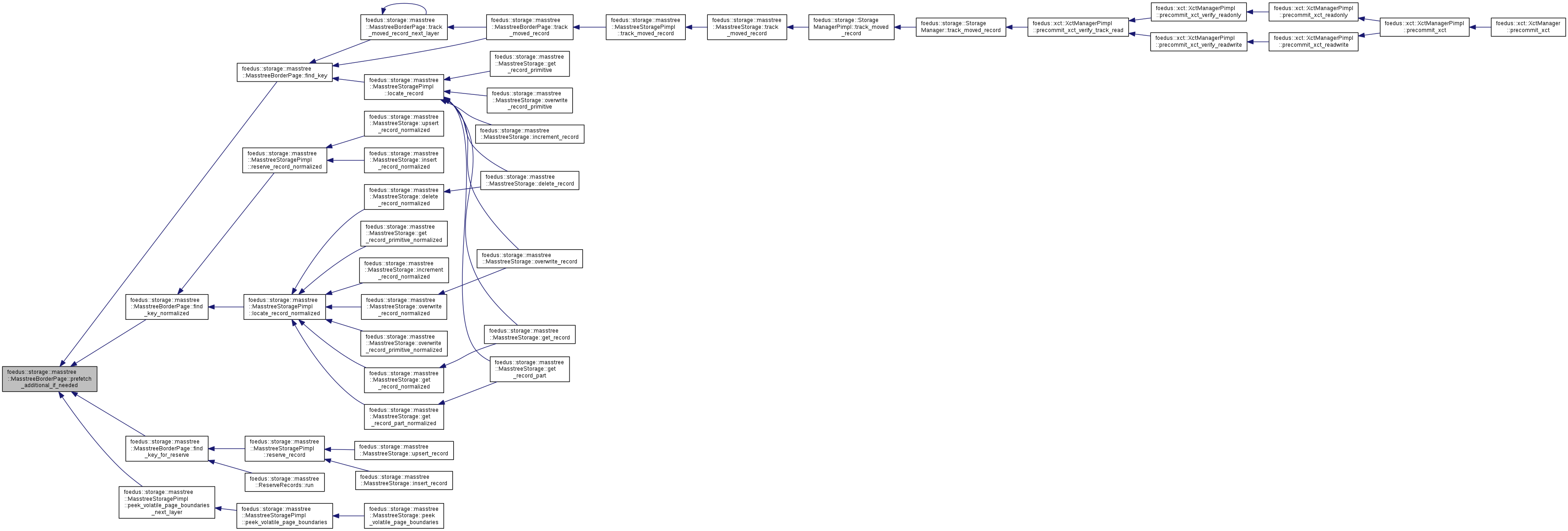

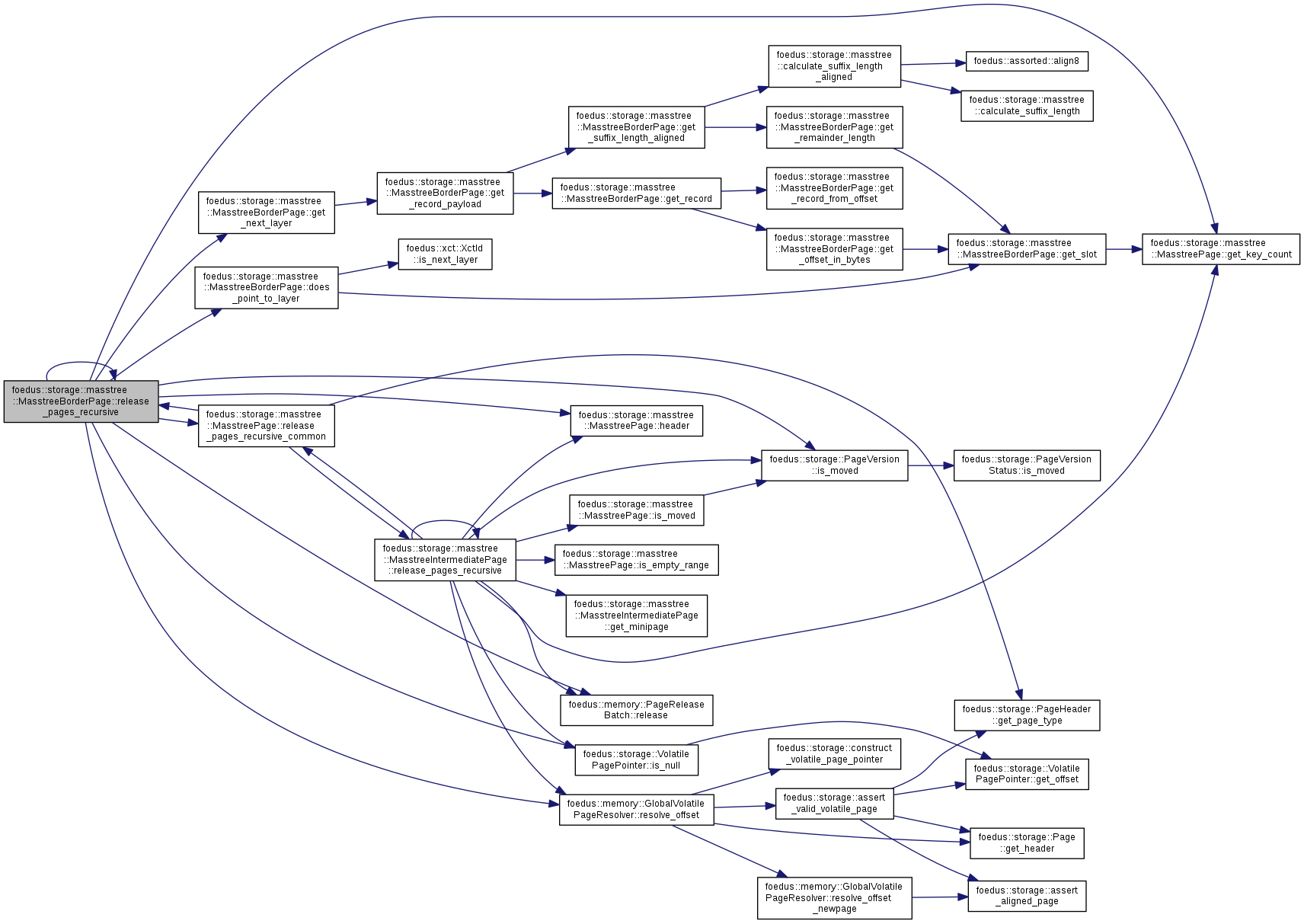

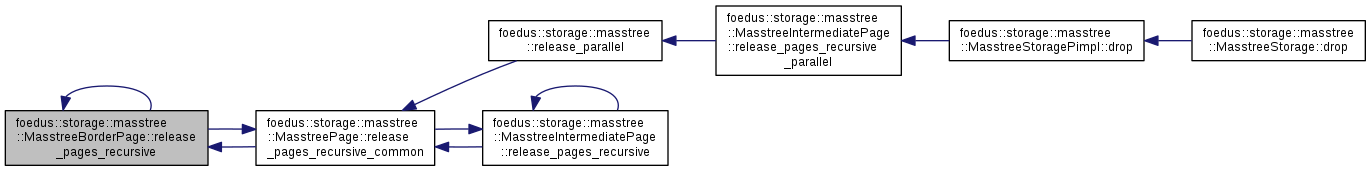

| void | release_pages_recursive (const memory::GlobalVolatilePageResolver &page_resolver, memory::PageReleaseBatch *batch) |

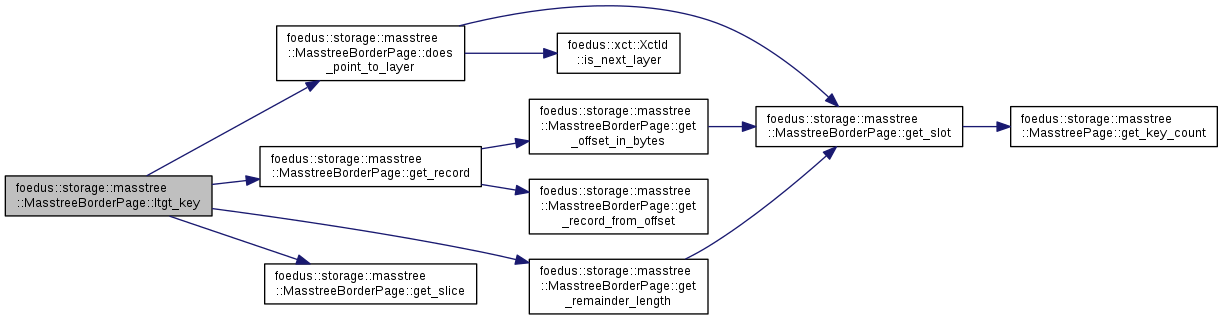

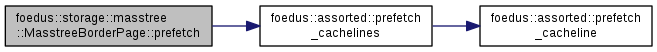

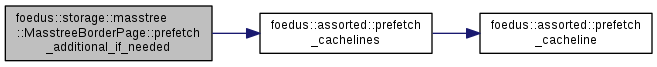

| void | prefetch () const __attribute__((always_inline)) |

| prefetch upto 256th bytes. More... | |

| void | prefetch_additional_if_needed (SlotIndex key_count) const __attribute__((always_inline)) |

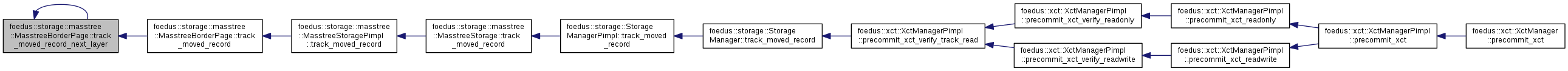

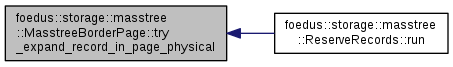

| bool | try_expand_record_in_page_physical (PayloadLength payload_count, SlotIndex record_index) |

| A physical-only method to expand a record within this page without any logical change. More... | |

| void | initialize_as_layer_root_physical (VolatilePagePointer page_id, MasstreeBorderPage *parent, SlotIndex parent_index) |

| A physical-only method to initialize this page as a volatile page of a layer-root pointed from the given parent record. More... | |

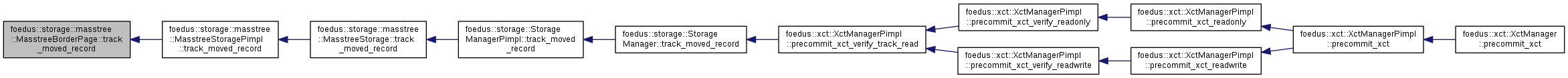

| xct::TrackMovedRecordResult | track_moved_record (Engine *engine, xct::RwLockableXctId *old_address, xct::WriteXctAccess *write_set) |

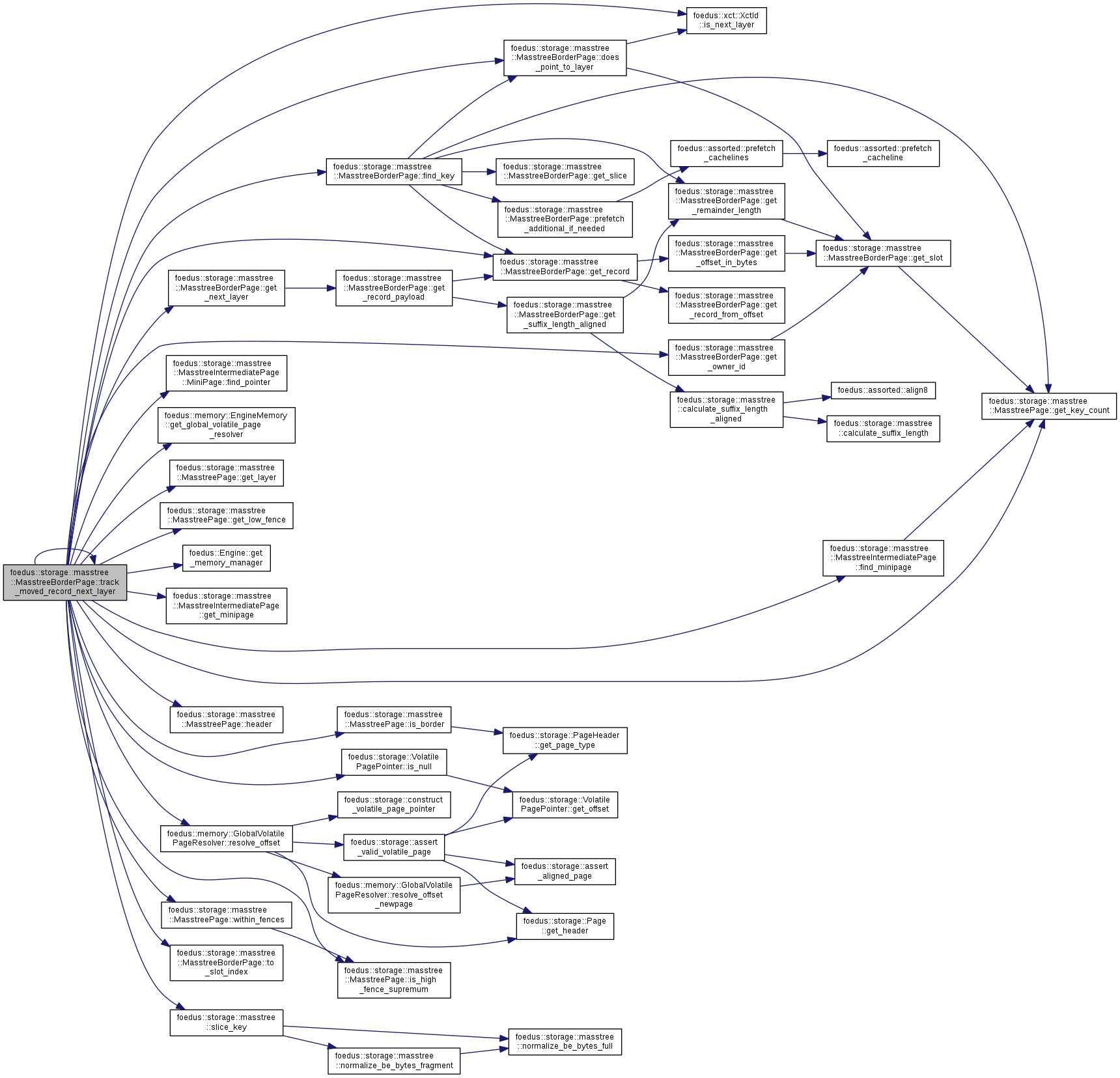

| xct::TrackMovedRecordResult | track_moved_record_next_layer (Engine *engine, xct::RwLockableXctId *old_address) |

| This one further tracks it to next layer. More... | |

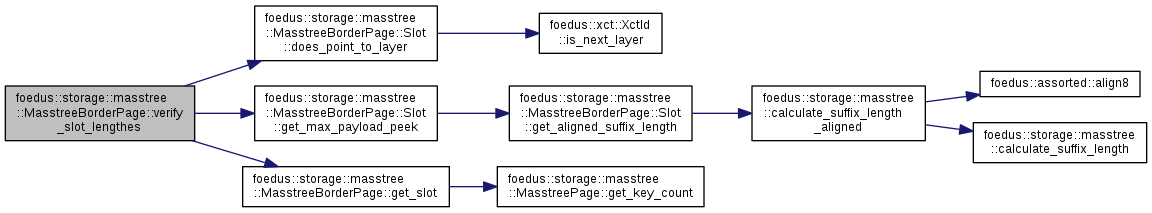

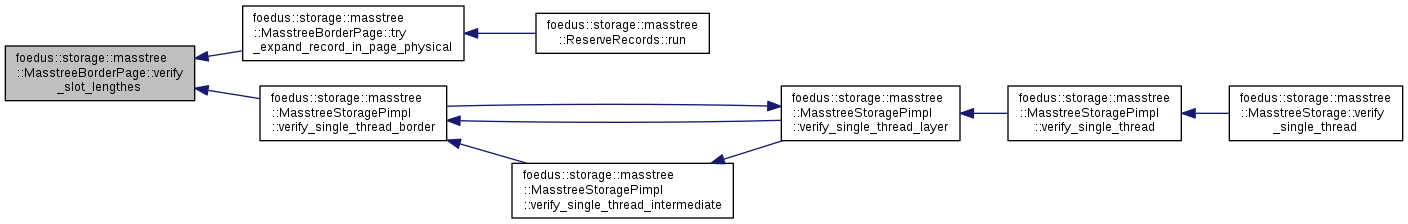

| bool | verify_slot_lengthes (SlotIndex index) const |

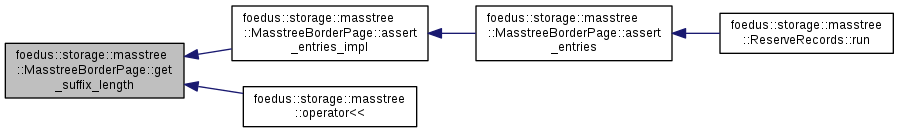

| void | assert_entries () __attribute__((always_inline)) |

| void | assert_entries_impl () const |

| defined in masstree_page_debug.cpp. More... | |

Public Member Functions inherited from foedus::storage::masstree::MasstreePage

| Return type | Member | Description |
|---|---|---|
| | MasstreePage ()=delete | |
| | MasstreePage (const MasstreePage &other)=delete | |
| MasstreePage & | operator= (const MasstreePage &other)=delete | |
| PageHeader & | header () | |
| const PageHeader & | header () const | |
| VolatilePagePointer | get_volatile_page_id () const | |
| SnapshotPagePointer | get_snapshot_page_id () const | |
| bool | is_border () const __attribute__((always_inline)) | |
| bool | is_empty_range () const __attribute__((always_inline)) | An empty-range page, either intermediate or border, never has any entries. |
| KeySlice | get_low_fence () const __attribute__((always_inline)) | |
| KeySlice | get_high_fence () const __attribute__((always_inline)) | |
| bool | is_high_fence_supremum () const __attribute__((always_inline)) | |
| bool | is_low_fence_infimum () const __attribute__((always_inline)) | |
| bool | is_layer_root () const __attribute__((always_inline)) | |
| KeySlice | get_foster_fence () const __attribute__((always_inline)) | |
| bool | is_foster_minor_null () const __attribute__((always_inline)) | |
| bool | is_foster_major_null () const __attribute__((always_inline)) | |
| VolatilePagePointer | get_foster_minor () const __attribute__((always_inline)) | |
| VolatilePagePointer | get_foster_major () const __attribute__((always_inline)) | |
| void | set_foster_twin (VolatilePagePointer minor, VolatilePagePointer major) | |
| void | install_foster_twin (VolatilePagePointer minor, VolatilePagePointer major, KeySlice foster_fence) | |
| bool | within_fences (KeySlice slice) const __attribute__((always_inline)) | |
| bool | within_foster_minor (KeySlice slice) const __attribute__((always_inline)) | |
| bool | within_foster_major (KeySlice slice) const __attribute__((always_inline)) | |
| bool | has_foster_child () const __attribute__((always_inline)) | |
| uint8_t | get_layer () const __attribute__((always_inline)) | Layer-0 stores the first 8-byte slice, Layer-1 the next 8 bytes... |
| uint8_t | get_btree_level () const __attribute__((always_inline)) | Used only in masstree. |
| SlotIndex | get_key_count () const __attribute__((always_inline)) | Physical key count (those keys might be deleted) in this page. |
| void | set_key_count (SlotIndex count) __attribute__((always_inline)) | |
| void | increment_key_count () __attribute__((always_inline)) | |
| void | prefetch_general () const __attribute__((always_inline)) | Prefetches up to the keys/separators, whether this page is border or interior. |
| const PageVersion & | get_version () const __attribute__((always_inline)) | |
| PageVersion & | get_version () __attribute__((always_inline)) | |
| const PageVersion * | get_version_address () const __attribute__((always_inline)) | |
| PageVersion * | get_version_address () __attribute__((always_inline)) | |
| xct::McsWwLock * | get_lock_address () __attribute__((always_inline)) | |
| bool | is_locked () const __attribute__((always_inline)) | |
| bool | is_moved () const __attribute__((always_inline)) | |
| bool | is_retired () const __attribute__((always_inline)) | |
| void | set_moved () __attribute__((always_inline)) | |
| void | set_retired () __attribute__((always_inline)) | |
| void | release_pages_recursive_common (const memory::GlobalVolatilePageResolver &page_resolver, memory::PageReleaseBatch *batch) | |
| void | set_foster_major_offset_unsafe (memory::PagePoolOffset offset) __attribute__((always_inline)) | As the name suggests, this should be used only by the composer. |
| void | set_high_fence_unsafe (KeySlice high_fence) __attribute__((always_inline)) | As the name suggests, this should be used only by the composer. |

Static Public Member Functions | |

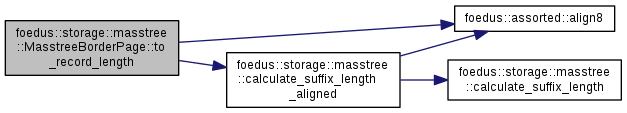

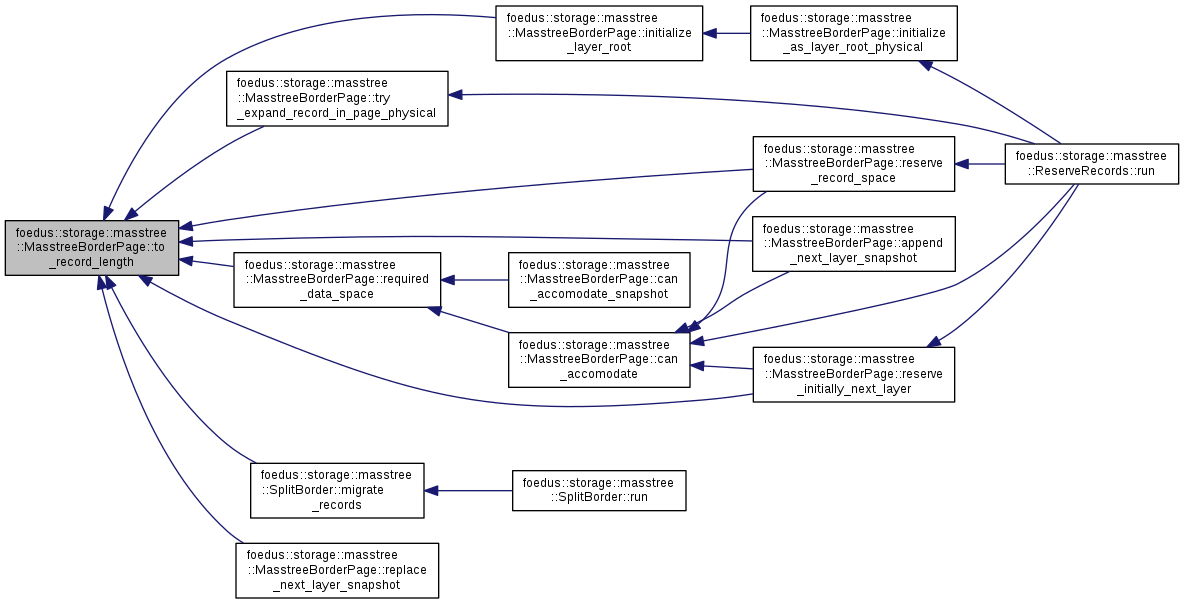

| static DataOffset | to_record_length (KeyLength remainder_length, PayloadLength payload_length) |

| returns minimal physical_record_length_ for the given remainder/payload length. More... | |

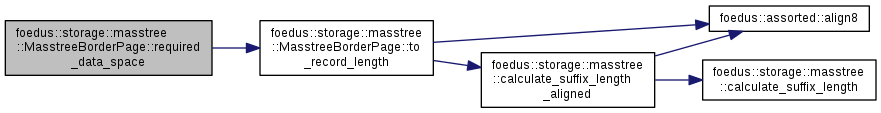

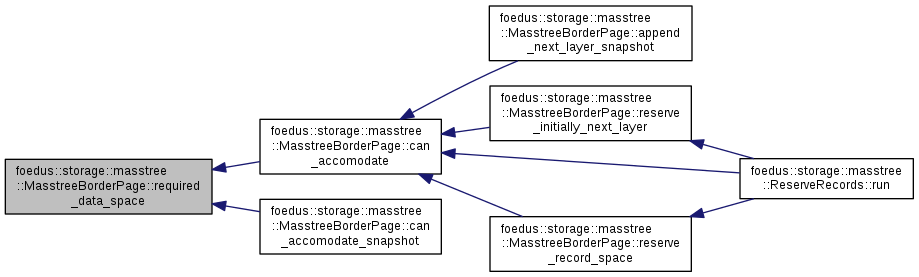

| static DataOffset | required_data_space (KeyLength remainder_length, PayloadLength payload_length) |

| returns the byte size of required contiguous space in data_ to insert a new record of the given remainder/payload length. More... | |

Friends

| Type | Member | Description |
|---|---|---|
| struct | SplitBorder | |
| std::ostream & | operator<< (std::ostream &o, const MasstreeBorderPage &v) | Defined in masstree_page_debug.cpp. |

Additional Inherited Members

Protected Member Functions inherited from foedus::storage::masstree::MasstreePage

| Return type | Member | Description |
|---|---|---|
| void | initialize_volatile_common (StorageId storage_id, VolatilePagePointer page_id, PageType page_type, uint8_t layer, uint8_t level, KeySlice low_fence, KeySlice high_fence) | |
| void | initialize_snapshot_common (StorageId storage_id, SnapshotPagePointer page_id, PageType page_type, uint8_t layer, uint8_t level, KeySlice low_fence, KeySlice high_fence) | |

Protected Attributes inherited from foedus::storage::masstree::MasstreePage

| Type | Member | Description |
|---|---|---|
| PageHeader | header_ | |
| KeySlice | low_fence_ | Inclusive low fence of this page. |
| KeySlice | high_fence_ | Inclusive high fence of this page. |
| KeySlice | foster_fence_ | Inclusive low fence of the foster child. |
| VolatilePagePointer | foster_twin_ [2] | Points to foster children, or tentative child pages. |

enum foedus::storage::masstree::MasstreeBorderPage::MatchType

Used in FindKeyForReserveResult.

| Enumerator | |
|---|---|
| kNotFound | |
| kExactMatchLocalRecord | |
| kExactMatchLayerPointer | |
| kConflictingLocalRecord | |

Definition at line 585 of file masstree_page_impl.hpp.

foedus::storage::masstree::MasstreeBorderPage::MasstreeBorderPage () [delete]

foedus::storage::masstree::MasstreeBorderPage::MasstreeBorderPage (const MasstreeBorderPage & other) [delete]

|

inline |

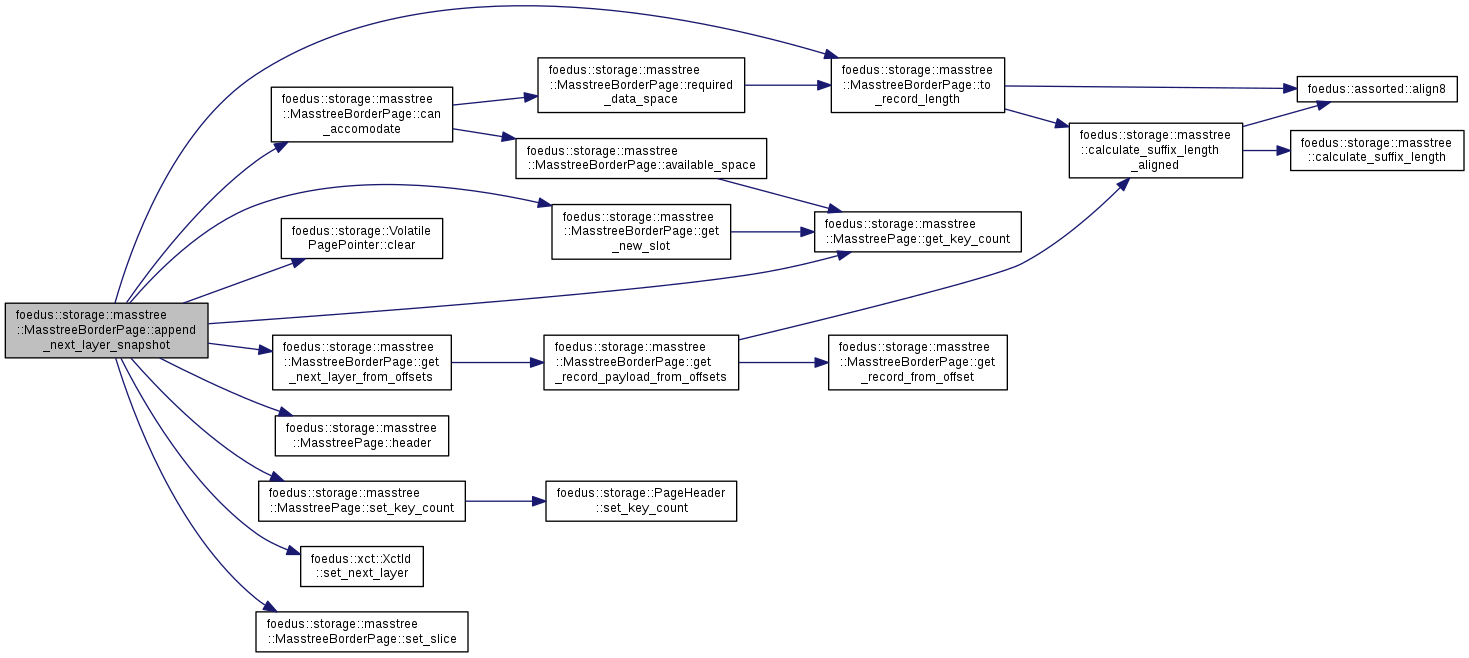

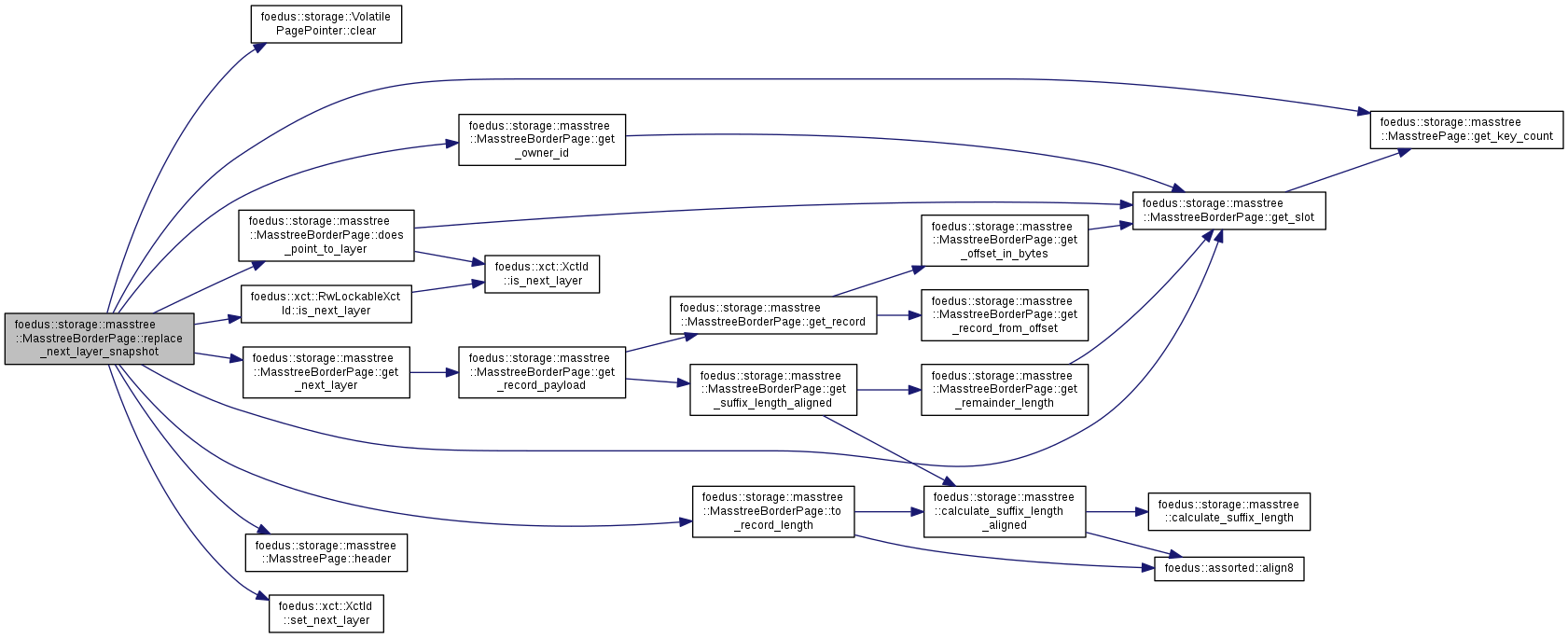

Installs a next layer pointer.

This is used only by the snapshot composer, so there is no race.

Definition at line 1417 of file masstree_page_impl.hpp.

References ASSERT_ND, can_accomodate(), foedus::storage::VolatilePagePointer::clear(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreePage::get_key_count(), get_new_slot(), get_next_layer_from_offsets(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::storage::masstree::MasstreePage::set_key_count(), foedus::xct::XctId::set_next_layer(), set_slice(), foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::unused_, foedus::storage::DualPagePointer::volatile_pointer_, and foedus::xct::RwLockableXctId::xct_id_.

void foedus::storage::masstree::MasstreeBorderPage::assert_entries () [inline]

Definition at line 988 of file masstree_page_impl.hpp.

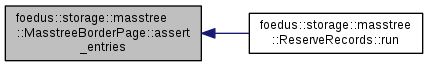

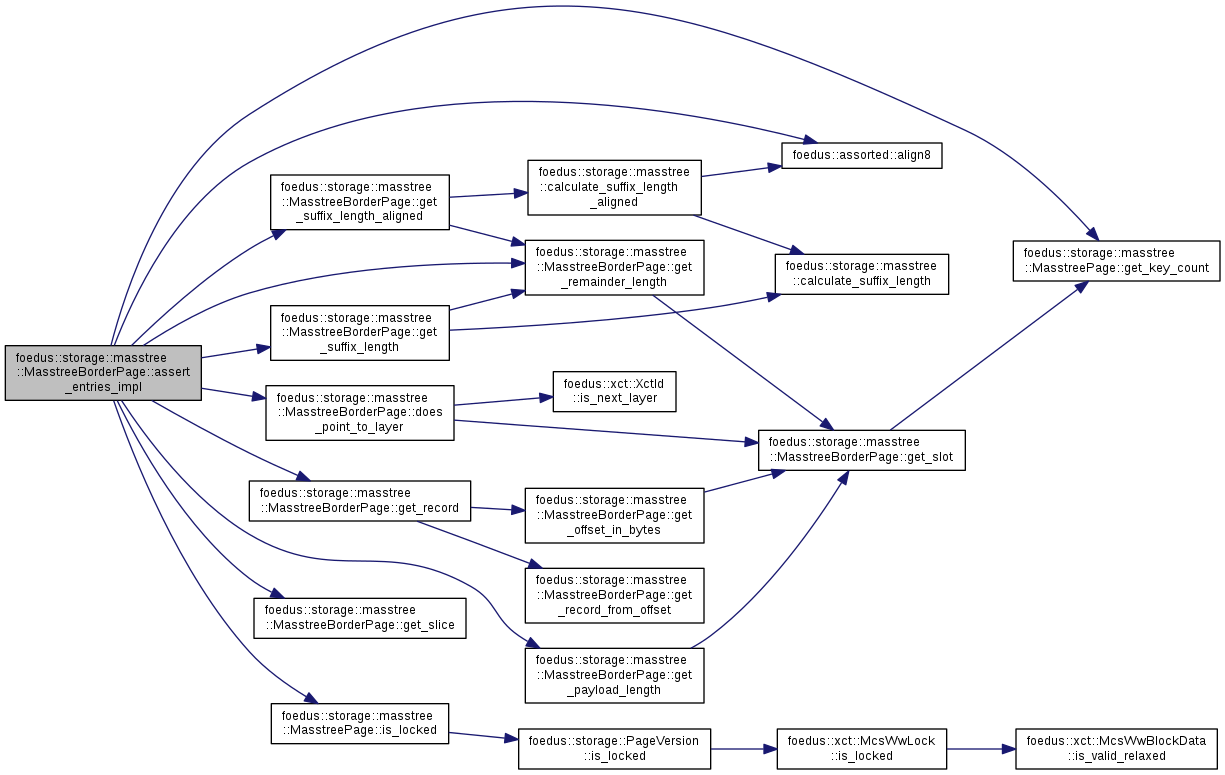

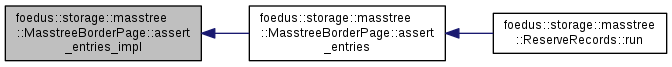

References assert_entries_impl().

Referenced by foedus::storage::masstree::ReserveRecords::run().

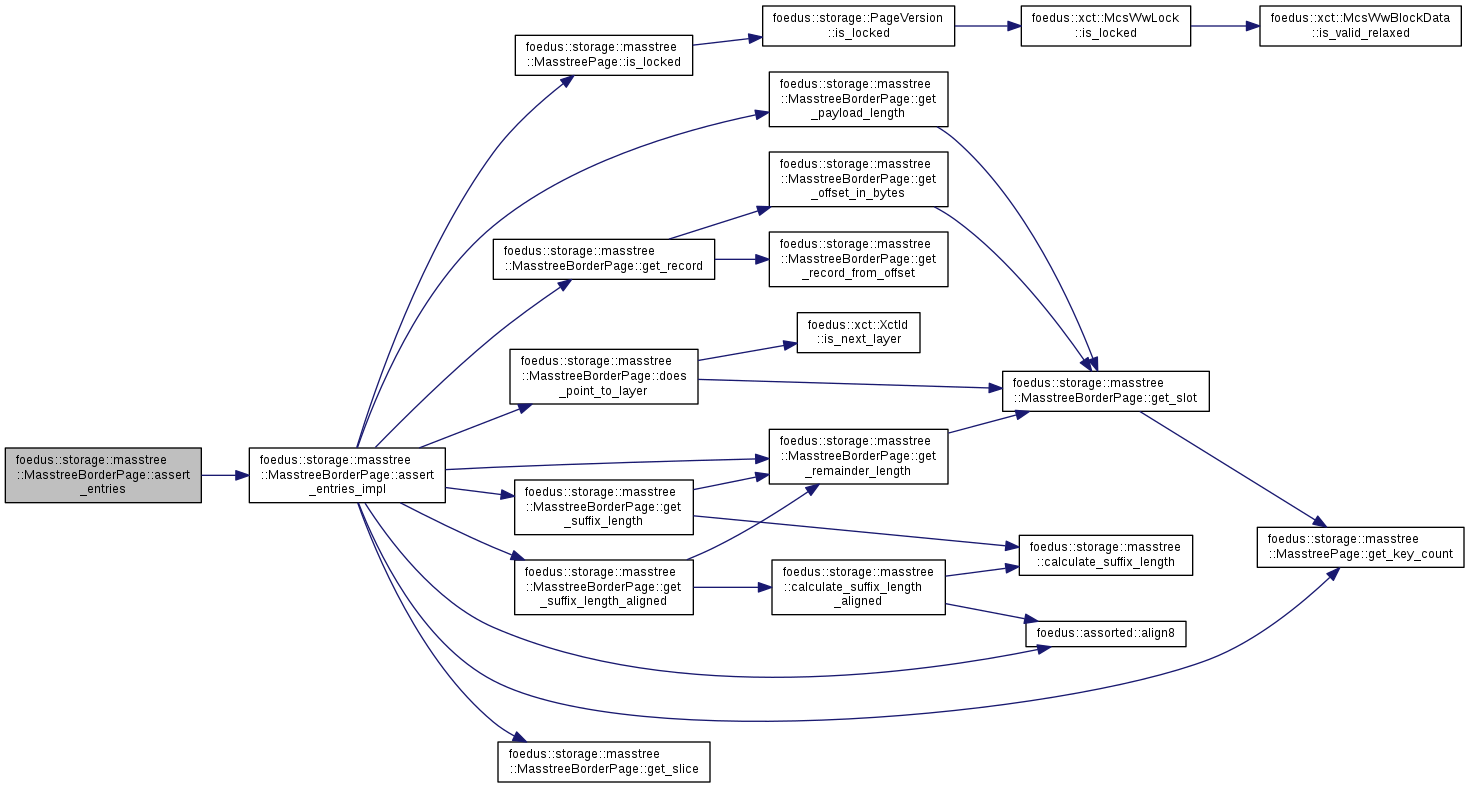

| void foedus::storage::masstree::MasstreeBorderPage::assert_entries_impl | ( | ) | const |

defined in masstree_page_debug.cpp.

Definition at line 114 of file masstree_page_debug.cpp.

References foedus::assorted::align8(), ASSERT_ND, does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), get_payload_length(), get_record(), get_remainder_length(), get_slice(), get_suffix_length(), get_suffix_length_aligned(), foedus::storage::masstree::MasstreePage::header_, foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::kBorderPageMaxSlots, and foedus::storage::PageHeader::snapshot_.

Referenced by assert_entries().

|

inline |

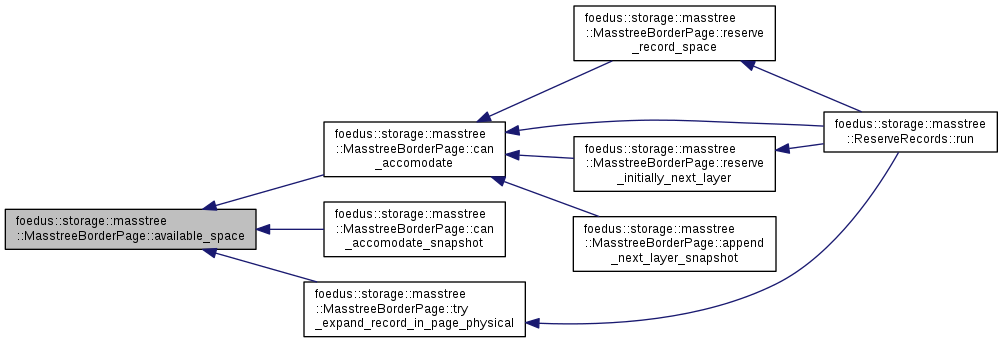

Returns usable data space in bytes.

Definition at line 660 of file masstree_page_impl.hpp.

References ASSERT_ND, and foedus::storage::masstree::MasstreePage::get_key_count().

Referenced by can_accomodate(), can_accomodate_snapshot(), and try_expand_record_in_page_physical().

|

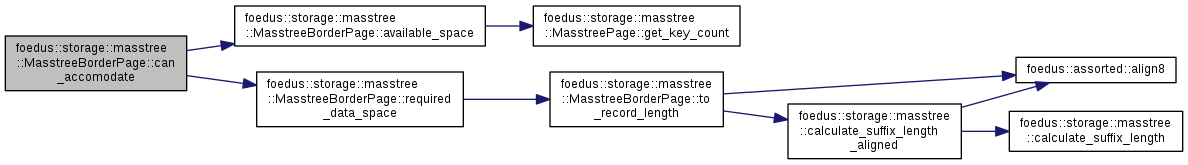

inline |

Definition at line 1570 of file masstree_page_impl.hpp.

References ASSERT_ND, available_space(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::kMaxKeyLength, foedus::storage::masstree::kMaxPayloadLength, and required_data_space().

Referenced by append_next_layer_snapshot(), reserve_initially_next_layer(), reserve_record_space(), and foedus::storage::masstree::ReserveRecords::run().
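The References list suggests that can_accomodate() combines a slot-count bound, key and payload length limits, and a comparison of required_data_space() against available_space(). The sketch below models that check under loudly stated assumptions: the limits are hypothetical values, a record's length is assumed to be the 8-byte-aligned key suffix plus the 8-byte-aligned payload, the new record's 32-byte slot is assumed to count toward the required space, available space is passed in explicitly, and the kInitiallyNextLayer special case is omitted.

```cpp
#include <cassert>
#include <cstdint>

namespace sketch {
// Hypothetical limits for illustration; FOEDUS defines its own
// kBorderPageMaxSlots, kMaxKeyLength and kMaxPayloadLength.
constexpr uint32_t kMaxSlots = 64;
constexpr uint32_t kMaxKeyLength = 1024;
constexpr uint32_t kMaxPayloadLength = 1024;
constexpr uint32_t kSlotSize = 32;     // one slot per record, as in the layout table
constexpr uint32_t kKeySliceSize = 8;  // bytes held in the slot's key slice

// Rounds n up to the next multiple of 8.
inline uint32_t align8(uint32_t n) { return (n + 7U) & ~7U; }

// Key bytes beyond the 8-byte slice (assumed definition of the suffix).
inline uint32_t suffix_length(uint32_t remainder_length) {
  return remainder_length > kKeySliceSize ? remainder_length - kKeySliceSize : 0;
}

// Assumed minimal record length: aligned suffix followed by aligned payload.
inline uint32_t to_record_length(uint32_t remainder_length, uint32_t payload_length) {
  return align8(suffix_length(remainder_length)) + align8(payload_length);
}

// Assumed: a new record also consumes one slot from the shared unused region.
inline uint32_t required_data_space(uint32_t remainder_length, uint32_t payload_length) {
  return to_record_length(remainder_length, payload_length) + kSlotSize;
}

// available_space is a parameter here; the member function reads it from the page.
inline bool can_accomodate(uint32_t new_index, uint32_t remainder_length,
                           uint32_t payload_length, uint32_t available_space) {
  if (new_index >= kMaxSlots) return false;
  if (remainder_length > kMaxKeyLength || payload_length > kMaxPayloadLength) {
    return false;
  }
  return required_data_space(remainder_length, payload_length) <= available_space;
}
}  // namespace sketch
```

For example, a 13-byte remainder with an 8-byte payload needs 8 + 8 bytes of record data plus a 32-byte slot, so it fits in exactly 48 free bytes and not in 47.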

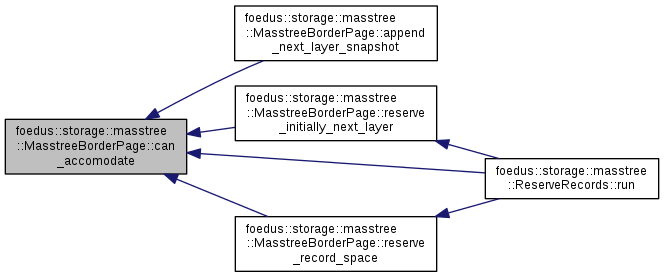

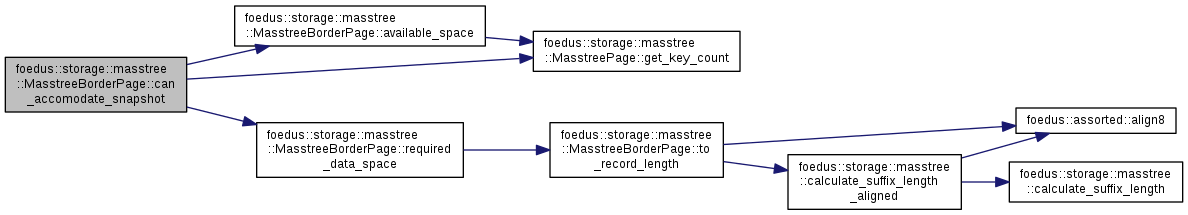

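bool foedus::storage::masstree::MasstreeBorderPage::can_accomodate_snapshot (KeyLength remainder_length, PayloadLength payload_count) const [inline]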

Slightly different from can_accomodate() as follows:

Definition at line 1585 of file masstree_page_impl.hpp.

References ASSERT_ND, available_space(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::header_, foedus::storage::masstree::kBorderPageMaxSlots, required_data_space(), and foedus::storage::PageHeader::snapshot_.

|

inline |

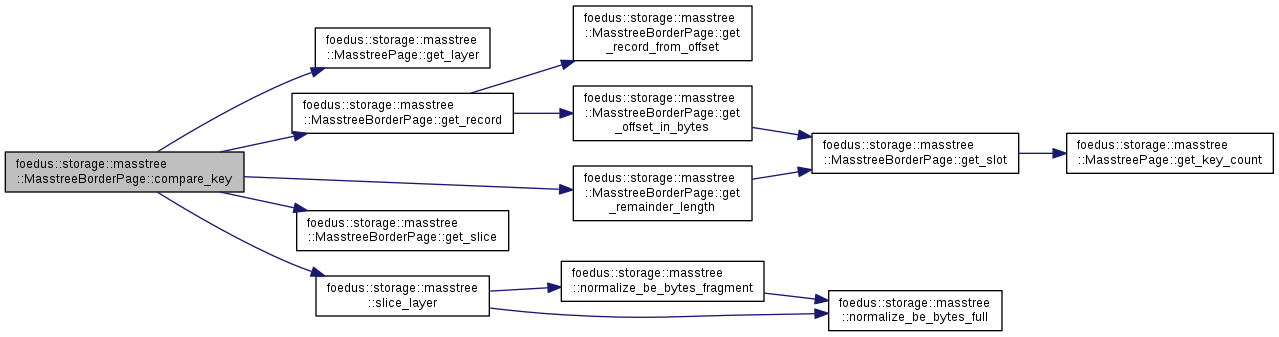

Actually, this method should be renamed to equal_key...

Definition at line 1600 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), get_record(), get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, and foedus::storage::masstree::slice_layer().

Referenced by equal_key().
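Masstree compares keys 8 bytes at a time: at layer L the relevant slice is bytes 8L through 8L+7 of the big-endian key, read as an integer so that integer order matches byte order (compare get_layer(): "Layer-0 stores the first 8 byte slice"). The sketch below shows one plausible shape of slice_layer() and of the equality test that compare_key()/equal_key() perform according to their References (layer, slice, remainder length, record suffix). The names in `namespace sketch`, the zero-padding of short tails, and the suffix definition (remainder bytes beyond the slice) are assumptions, not FOEDUS code.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

namespace sketch {
// Reads up to 8 bytes of a big-endian key starting at layer * 8 and packs them
// into a native integer, zero-padding a short tail, so that comparing slices as
// integers agrees with comparing the key bytes with memcmp.
inline uint64_t slice_layer(const void* be_key, uint32_t key_length, uint8_t layer) {
  const unsigned char* bytes = static_cast<const unsigned char*>(be_key);
  uint32_t skip = static_cast<uint32_t>(layer) * 8U;
  assert(key_length >= skip);
  uint32_t remainder = key_length - skip;
  uint32_t n = remainder < 8U ? remainder : 8U;
  uint64_t slice = 0;
  for (uint32_t i = 0; i < 8U; ++i) {
    slice <<= 8;
    if (i < n) slice |= bytes[skip + i];
  }
  return slice;
}

// Equality of a stored record (slice, remainder length, suffix) with a full key.
inline bool equal_key_sketch(uint64_t record_slice, uint32_t record_remainder,
                             const char* record_suffix, uint8_t layer,
                             const char* be_key, uint32_t key_length) {
  uint32_t skip = static_cast<uint32_t>(layer) * 8U;
  if (key_length < skip || key_length - skip != record_remainder) return false;
  if (slice_layer(be_key, key_length, layer) != record_slice) return false;
  if (record_remainder <= 8U) return true;  // whole key fits in the slice
  return std::memcmp(record_suffix, be_key + skip + 8U, record_remainder - 8U) == 0;
}
}  // namespace sketch
```

With the 10-byte key "abcdefghij", layer 0 yields the slice 0x6162636465666768 and a 2-byte suffix "ij"; layer 1 yields 0x696A000000000000.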

bool foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer (SlotIndex index) const [inline]

Definition at line 796 of file masstree_page_impl.hpp.

References ASSERT_ND, get_slot(), foedus::xct::XctId::is_next_layer(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by assert_entries_impl(), foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_recurse(), find_key(), find_key_for_reserve(), find_key_for_snapshot(), foedus::storage::masstree::MasstreeStoragePimpl::follow_layer(), foedus::storage::masstree::MasstreeStoragePimpl::hcc_reset_all_temperature_stat_recurse(), foedus::storage::masstree::MasstreeStoragePimpl::locate_record(), foedus::storage::masstree::MasstreeStoragePimpl::locate_record_normalized(), ltgt_key(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized_recurse(), release_pages_recursive(), replace_next_layer_snapshot(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), foedus::storage::masstree::ReserveRecords::run(), track_moved_record(), track_moved_record_next_layer(), foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border(), and will_contain_next_layer().

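bool foedus::storage::masstree::MasstreeBorderPage::equal_key (SlotIndex index, const void * be_key, KeyLength key_length) const [inline]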

Let's gradually migrate from compare_key() to this.

Definition at line 1624 of file masstree_page_impl.hpp.

References compare_key().

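SlotIndex foedus::storage::masstree::MasstreeBorderPage::find_key (KeySlice slice, const void * suffix, KeyLength remainder) const [inline]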

Navigates a search key-slice to one of the records in this page.

Definition at line 1120 of file masstree_page_impl.hpp.

References ASSERT_ND, does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), get_record(), get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kMaxKeyLength, LIKELY, and prefetch_additional_if_needed().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::locate_record(), track_moved_record(), and track_moved_record_next_layer().
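As a rough model of find_key(), the sketch below scans the physical records linearly, since the find_key_for_snapshot() description implies that only snapshot pages are guaranteed to be fully sorted. The `Record` struct, the `kNotFoundIndex` sentinel, and the matching rules (a next-layer record matches any key that extends beyond the 8-byte slice; a local record needs an identical remainder length and suffix) are simplifying assumptions for illustration. The real function works on raw page memory and also prefetches.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

namespace sketch {
constexpr uint32_t kSliceSize = 8;
constexpr uint32_t kNotFoundIndex = UINT32_MAX;  // hypothetical sentinel

// Simplified view of one record: its 8-byte slice, the key length remaining
// from this layer, the key bytes beyond the slice, and the next-layer flag.
struct Record {
  uint64_t slice;
  uint32_t remainder;
  std::string suffix;
  bool next_layer;
};

// Linear scan over the physical records in this page.
inline uint32_t find_key(const std::vector<Record>& records, uint64_t slice,
                         const std::string& suffix, uint32_t remainder) {
  for (uint32_t i = 0; i < records.size(); ++i) {
    const Record& r = records[i];
    if (r.slice != slice) continue;
    if (r.next_layer) {
      // The rest of a longer key would be searched one layer down.
      if (remainder > kSliceSize) return i;
      continue;
    }
    // A local record must match exactly; short keys have no suffix.
    if (r.remainder == remainder && (remainder <= kSliceSize || r.suffix == suffix)) {
      return i;
    }
  }
  return kNotFoundIndex;
}
}  // namespace sketch
```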

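FindKeyForReserveResult foedus::storage::masstree::MasstreeBorderPage::find_key_for_reserve (SlotIndex from_index, SlotIndex to_index, KeySlice slice, const void * suffix, KeyLength remainder) const [inline]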

This is for the case where we are looking for either the matching slot or the slot we will modify.

Definition at line 1198 of file masstree_page_impl.hpp.

References ASSERT_ND, does_point_to_layer(), get_record(), get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, kConflictingLocalRecord, kExactMatchLayerPointer, kExactMatchLocalRecord, foedus::storage::masstree::kMaxKeyLength, kNotFound, LIKELY, and prefetch_additional_if_needed().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), and foedus::storage::masstree::ReserveRecords::run().
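The MatchType enumerators suggest how one existing record relates to the key being reserved. The hedged sketch below classifies a single record; its decision rules are assumptions inferred from the member descriptions, in particular that a conflict arises when two different keys share the 8-byte slice and both extend beyond it, which is what will_conflict() describes as causing creation of a new next layer. The real find_key_for_reserve() additionally scans the slots between from_index and to_index and returns a FindKeyForReserveResult.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

namespace sketch {
// Same enumerator names and values as MasstreeBorderPage::MatchType.
enum MatchType {
  kNotFound = 0,
  kExactMatchLocalRecord = 1,
  kExactMatchLayerPointer = 2,
  kConflictingLocalRecord = 3,
};

constexpr uint32_t kSliceSize = 8;

// Classifies one existing record (rec_*) against the key being reserved.
inline MatchType classify(uint64_t rec_slice, uint32_t rec_remainder,
                          const std::string& rec_suffix, bool rec_next_layer,
                          uint64_t slice, uint32_t remainder,
                          const std::string& suffix) {
  if (rec_slice != slice) return kNotFound;
  if (rec_next_layer) {
    // Keys longer than the slice continue in the next layer.
    return remainder > kSliceSize ? kExactMatchLayerPointer : kNotFound;
  }
  if (rec_remainder == remainder && (remainder <= kSliceSize || rec_suffix == suffix)) {
    return kExactMatchLocalRecord;
  }
  // Same slice, both keys longer than it, different keys: both would have to
  // move into a new next layer.
  if (rec_remainder > kSliceSize && remainder > kSliceSize) {
    return kConflictingLocalRecord;
  }
  return kNotFound;
}
}  // namespace sketch
```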

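FindKeyForReserveResult foedus::storage::masstree::MasstreeBorderPage::find_key_for_snapshot (KeySlice slice, const void * suffix, KeyLength remainder) const [inline]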

This one is used for snapshot pages.

Because keys are fully sorted in snapshot pages, this returns the index of the first record whose key is strictly larger than the given key (key_count if none exists).

Definition at line 1256 of file masstree_page_impl.hpp.

References ASSERT_ND, does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), get_record(), get_remainder_length(), get_slice(), foedus::storage::masstree::MasstreePage::header_, kConflictingLocalRecord, kExactMatchLayerPointer, kExactMatchLocalRecord, foedus::storage::masstree::kMaxKeyLength, kNotFound, and foedus::storage::PageHeader::snapshot_.
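On a fully sorted sequence, "the first record whose key is strictly larger than the given key, or key_count if none exists" is exactly an upper bound. The minimal sketch below expresses it with std::upper_bound over whole keys held as std::string; this is a simplification, since the real function works on slices, suffixes and remainder lengths and also reports a MatchType, and whether FOEDUS itself uses a binary search is not stated here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

namespace sketch {
// Index of the first key strictly greater than `key`, or sorted_keys.size().
// std::string compares characters as unsigned char, which matches the
// byte-wise (memcmp) order of big-endian keys.
inline uint32_t first_greater(const std::vector<std::string>& sorted_keys,
                              const std::string& key) {
  assert(std::is_sorted(sorted_keys.begin(), sorted_keys.end()));
  auto it = std::upper_bound(sorted_keys.begin(), sorted_keys.end(), key);
  return static_cast<uint32_t>(it - sorted_keys.begin());
}
}  // namespace sketch
```

Searching for an existing key ("banana" in {"apple", "banana", "cherry"}) therefore yields the index just past it (2), and a key beyond every record yields the key count (3).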

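SlotIndex foedus::storage::masstree::MasstreeBorderPage::find_key_normalized (SlotIndex from_index, SlotIndex to_index, KeySlice slice) const [inline]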

Specialized version for 8-byte native integer search.

Because such a key never goes to the second layer, this is much simpler.

Definition at line 1179 of file masstree_page_impl.hpp.

References ASSERT_ND, get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, prefetch_additional_if_needed(), and UNLIKELY.

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::locate_record_normalized(), and foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized().
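An 8-byte key is exactly one slice with a remainder length of 8, so neither suffixes nor next-layer pointers come into play, which is why find_key_normalized() can be simpler. A minimal sketch over parallel arrays of slices and remainder lengths follows; treating `to_index` as exclusive and returning the hypothetical `kNotFoundIndex` sentinel are assumptions.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

namespace sketch {
constexpr uint32_t kNotFoundIndex = UINT32_MAX;  // hypothetical sentinel

// Searches slots [from_index, to_index) for an 8-byte key. A record with the
// same slice but a longer remainder (or a next-layer record) is skipped.
inline uint32_t find_key_normalized(const std::vector<uint64_t>& slices,
                                    const std::vector<uint32_t>& remainders,
                                    uint32_t from_index, uint32_t to_index,
                                    uint64_t slice) {
  assert(slices.size() == remainders.size());
  assert(from_index <= to_index && to_index <= slices.size());
  for (uint32_t i = from_index; i < to_index; ++i) {
    if (slices[i] == slice && remainders[i] == 8) return i;
  }
  return kNotFoundIndex;
}
}  // namespace sketch
```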

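PayloadLength foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length (SlotIndex index) const [inline]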

Definition at line 838 of file masstree_page_impl.hpp.

References foedus::storage::masstree::MasstreeBorderPage::Slot::get_max_payload_peek(), and get_slot().

Referenced by initialize_as_layer_root_physical(), foedus::storage::masstree::MasstreeStoragePimpl::insert_general(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record(), foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized(), foedus::storage::masstree::ReserveRecords::run(), try_expand_record_in_page_physical(), and foedus::storage::masstree::MasstreeStoragePimpl::upsert_general().

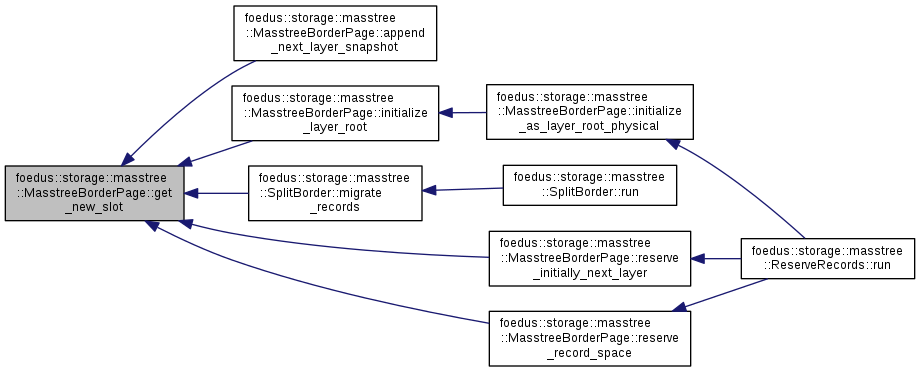

Definition at line 643 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_key_count(), and foedus::storage::masstree::kBorderPageMaxSlots.

Referenced by append_next_layer_snapshot(), initialize_layer_root(), foedus::storage::masstree::SplitBorder::migrate_records(), reserve_initially_next_layer(), and reserve_record_space().

DualPagePointer * foedus::storage::masstree::MasstreeBorderPage::get_next_layer (SlotIndex index) [inline]

Definition at line 750 of file masstree_page_impl.hpp.

References get_record_payload().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_recurse(), foedus::storage::masstree::MasstreeStoragePimpl::follow_layer(), foedus::storage::masstree::MasstreeStoragePimpl::hcc_reset_all_temperature_stat_recurse(), initialize_as_layer_root_physical(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized_recurse(), release_pages_recursive(), replace_next_layer_snapshot(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), foedus::storage::masstree::ReserveRecords::run(), track_moved_record_next_layer(), and foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border().

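const DualPagePointer * foedus::storage::masstree::MasstreeBorderPage::get_next_layer (SlotIndex index) const [inline]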

Definition at line 753 of file masstree_page_impl.hpp.

References get_record_payload().

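DualPagePointer * foedus::storage::masstree::MasstreeBorderPage::get_next_layer_from_offsets (DataOffset record_offset, KeyLength remainder_length) [inline]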

Definition at line 782 of file masstree_page_impl.hpp.

References get_record_payload_from_offsets().

Referenced by append_next_layer_snapshot(), and reserve_initially_next_layer().

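const DualPagePointer * foedus::storage::masstree::MasstreeBorderPage::get_next_layer_from_offsets (DataOffset record_offset, KeyLength remainder_length) const [inline]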

Definition at line 788 of file masstree_page_impl.hpp.

References get_record_payload_from_offsets().

DataOffset foedus::storage::masstree::MasstreeBorderPage::get_next_offset () const [inline]

Definition at line 625 of file masstree_page_impl.hpp.

Referenced by foedus::storage::masstree::SplitBorder::run(), and try_expand_record_in_page_physical().

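DataOffset foedus::storage::masstree::MasstreeBorderPage::get_offset_in_bytes (SlotIndex index) const [inline]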

Definition at line 809 of file masstree_page_impl.hpp.

References foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, get_slot(), foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, and foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_.

Referenced by get_record(), and foedus::storage::masstree::operator<<().

xct::RwLockableXctId * foedus::storage::masstree::MasstreeBorderPage::get_owner_id (SlotIndex index) [inline]

Definition at line 813 of file masstree_page_impl.hpp.

References get_slot(), and foedus::storage::masstree::MasstreeBorderPage::Slot::tid_.

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::follow_layer(), initialize_layer_root(), foedus::storage::masstree::SplitBorder::lock_existing_records(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::RecordLocation::populate_logical(), foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log(), replace_next_layer_snapshot(), foedus::storage::masstree::GrowNonFirstLayerRoot::run(), foedus::storage::masstree::SplitBorder::run(), foedus::storage::masstree::ReserveRecords::run(), track_moved_record_next_layer(), and foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border().

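const xct::RwLockableXctId * foedus::storage::masstree::MasstreeBorderPage::get_owner_id (SlotIndex index) const [inline]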

Definition at line 816 of file masstree_page_impl.hpp.

References get_slot(), and foedus::storage::masstree::MasstreeBorderPage::Slot::tid_.

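PayloadLength foedus::storage::masstree::MasstreeBorderPage::get_payload_length (SlotIndex index) const [inline]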

Definition at line 832 of file masstree_page_impl.hpp.

References foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, get_slot(), foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, and foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_.

Referenced by assert_entries_impl(), foedus::storage::masstree::MasstreeStoragePimpl::increment_general(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::overwrite_general(), foedus::storage::masstree::MasstreeStoragePimpl::retrieve_general(), foedus::storage::masstree::MasstreeStoragePimpl::retrieve_part_general(), and foedus::storage::masstree::MasstreeStoragePimpl::upsert_general().

char * foedus::storage::masstree::MasstreeBorderPage::get_record (SlotIndex index) [inline]

Definition at line 732 of file masstree_page_impl.hpp.

References ASSERT_ND, get_offset_in_bytes(), get_record_from_offset(), and foedus::storage::masstree::kBorderPageMaxSlots.

Referenced by assert_entries_impl(), compare_key(), find_key(), find_key_for_reserve(), find_key_for_snapshot(), get_record_payload(), ltgt_key(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log(), track_moved_record(), and track_moved_record_next_layer().

const char * foedus::storage::masstree::MasstreeBorderPage::get_record (SlotIndex index) const [inline]

Definition at line 736 of file masstree_page_impl.hpp.

References ASSERT_ND, get_offset_in_bytes(), get_record_from_offset(), and foedus::storage::masstree::kBorderPageMaxSlots.

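char * foedus::storage::masstree::MasstreeBorderPage::get_record_from_offset (DataOffset record_offset) [inline]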

Offset versions: these take a record offset (DataOffset) instead of a slot index.

Definition at line 758 of file masstree_page_impl.hpp.

References ASSERT_ND.

Referenced by get_record(), get_record_payload_from_offsets(), initialize_layer_root(), foedus::storage::masstree::SplitBorder::migrate_records(), reserve_record_space(), and try_expand_record_in_page_physical().

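const char * foedus::storage::masstree::MasstreeBorderPage::get_record_from_offset (DataOffset record_offset) const [inline]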

Definition at line 763 of file masstree_page_impl.hpp.

References ASSERT_ND.

char * foedus::storage::masstree::MasstreeBorderPage::get_record_payload (SlotIndex index) [inline]

Definition at line 740 of file masstree_page_impl.hpp.

References get_record(), and get_suffix_length_aligned().

Referenced by get_next_layer(), foedus::storage::masstree::MasstreeStoragePimpl::increment_general(), initialize_as_layer_root_physical(), initialize_layer_root(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::retrieve_general(), and foedus::storage::masstree::MasstreeStoragePimpl::retrieve_part_general().
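The References list shows get_record_payload() built from get_record() and get_suffix_length_aligned(), which implies that a record starts with the key suffix, padded to an 8-byte boundary, followed by the payload. A minimal sketch of that offset arithmetic, assuming the suffix is the part of the remainder beyond the 8-byte slice (the helper names are hypothetical):

```cpp
#include <cassert>
#include <cstdint>

namespace sketch {
constexpr uint32_t kSliceSize = 8;

// Rounds n up to the next multiple of 8.
inline uint32_t align8(uint32_t n) { return (n + 7U) & ~7U; }

// Key bytes beyond the 8-byte slice kept in the slot (0 for short keys).
inline uint32_t suffix_length(uint32_t remainder_length) {
  return remainder_length > kSliceSize ? remainder_length - kSliceSize : 0;
}

// The payload begins right after the suffix, rounded up to 8 bytes.
inline char* record_payload(char* record, uint32_t remainder_length) {
  assert(record != nullptr);
  return record + align8(suffix_length(remainder_length));
}
}  // namespace sketch
```

Because get_next_layer() is likewise listed as referencing get_record_payload(), a next-layer record appears to keep its DualPagePointer at this same payload position.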

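const char * foedus::storage::masstree::MasstreeBorderPage::get_record_payload (SlotIndex index) const [inline]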

Definition at line 745 of file masstree_page_impl.hpp.

References get_record(), and get_suffix_length_aligned().

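const char * foedus::storage::masstree::MasstreeBorderPage::get_record_payload_from_offsets (DataOffset record_offset, KeyLength remainder_length) const [inline]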

Definition at line 768 of file masstree_page_impl.hpp.

References foedus::storage::masstree::calculate_suffix_length_aligned(), and get_record_from_offset().

Referenced by get_next_layer_from_offsets(), and initialize_layer_root().

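char * foedus::storage::masstree::MasstreeBorderPage::get_record_payload_from_offsets (DataOffset record_offset, KeyLength remainder_length) [inline]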

Definition at line 775 of file masstree_page_impl.hpp.

References foedus::storage::masstree::calculate_suffix_length_aligned(), and get_record_from_offset().

inline

Definition at line 820 of file masstree_page_impl.hpp.

References get_slot(), and foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_.

Referenced by assert_entries_impl(), compare_key(), find_key(), find_key_for_reserve(), find_key_for_snapshot(), find_key_normalized(), get_suffix_length(), get_suffix_length_aligned(), ltgt_key(), foedus::storage::masstree::operator<<(), reserve_record_space(), foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border(), and will_conflict().

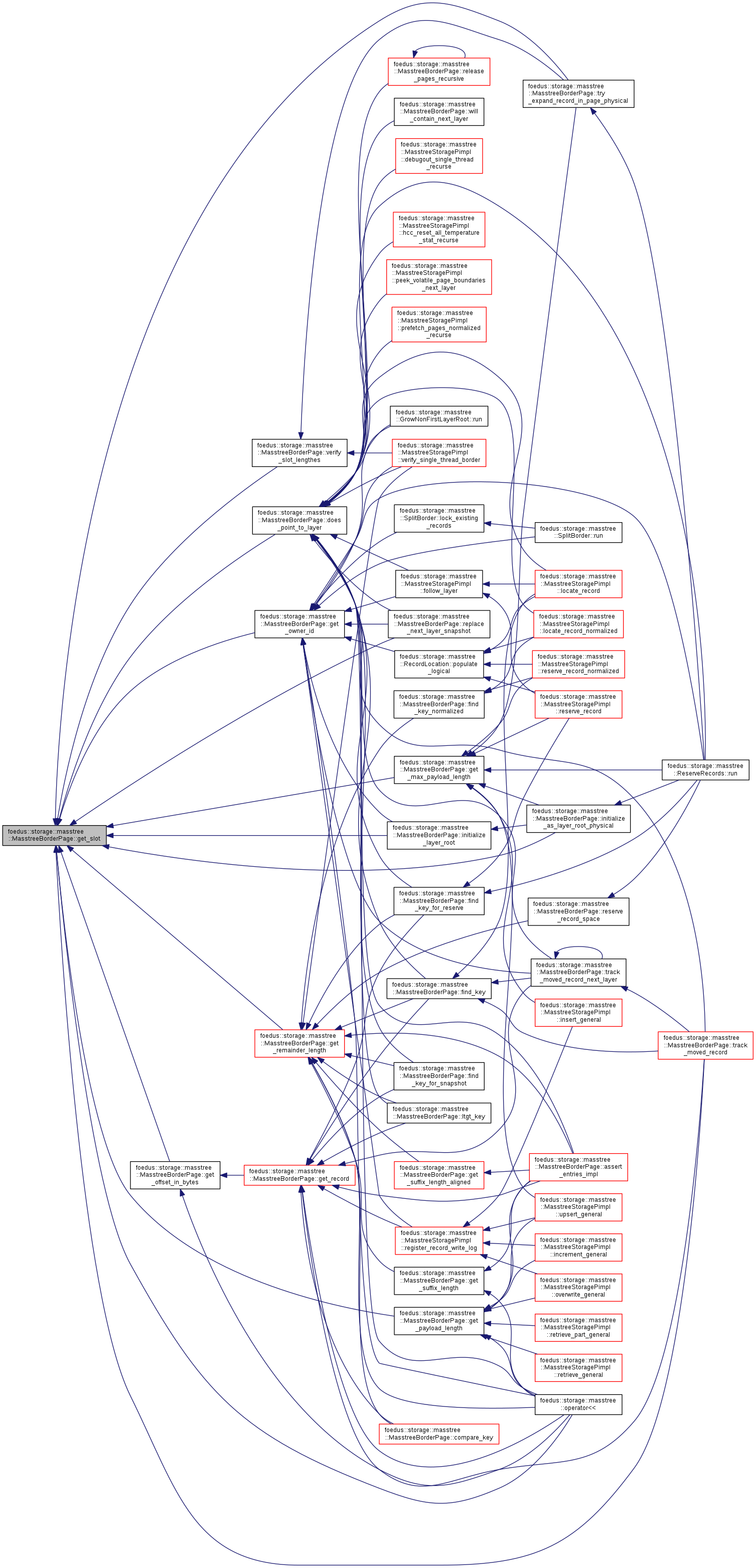

Definition at line 801 of file masstree_page_impl.hpp.

References ASSERT_ND, and foedus::storage::masstree::kBorderPageMaxSlots.

Referenced by assert_entries_impl(), compare_key(), foedus::storage::masstree::SplitBorder::decide_strategy(), find_key(), find_key_for_reserve(), find_key_for_snapshot(), find_key_normalized(), ltgt_key(), foedus::storage::masstree::operator<<(), foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer(), foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized_recurse(), reserve_initially_next_layer(), reserve_record_space(), track_moved_record(), foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border(), will_conflict(), and will_contain_next_layer().

Definition at line 631 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_key_count(), and foedus::storage::masstree::kBorderPageMaxSlots.

Referenced by does_point_to_layer(), get_max_payload_length(), get_offset_in_bytes(), get_owner_id(), get_payload_length(), get_remainder_length(), initialize_as_layer_root_physical(), initialize_layer_root(), foedus::storage::masstree::operator<<(), replace_next_layer_snapshot(), track_moved_record(), try_expand_record_in_page_physical(), and verify_slot_lengthes().

Definition at line 637 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_key_count(), and foedus::storage::masstree::kBorderPageMaxSlots.

inline

Definition at line 823 of file masstree_page_impl.hpp.

References foedus::storage::masstree::calculate_suffix_length(), and get_remainder_length().

Referenced by assert_entries_impl(), and foedus::storage::masstree::operator<<().

inline

Definition at line 827 of file masstree_page_impl.hpp.

References foedus::storage::masstree::calculate_suffix_length_aligned(), and get_remainder_length().

Referenced by assert_entries_impl(), and get_record_payload().

inline

Definition at line 626 of file masstree_page_impl.hpp.

References ASSERT_ND.

Referenced by try_expand_record_in_page_physical().

void foedus::storage::masstree::MasstreeBorderPage::initialize_as_layer_root_physical(VolatilePagePointer page_id, MasstreeBorderPage* parent, SlotIndex parent_index)

A physical-only method that initializes this page as the volatile layer-root page pointed to by the given parent record.

It merely migrates the parent record without any logical change.

Definition at line 431 of file masstree_page_impl.cpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), get_max_payload_length(), get_next_layer(), get_record_payload(), get_slot(), foedus::storage::masstree::MasstreePage::header_, initialize_layer_root(), initialize_volatile_page(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::storage::masstree::MasstreePage::is_retired(), foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::kSupremumSlice, foedus::assorted::memory_fence_release(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::PageHeader::storage_id_, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::storage::masstree::ReserveRecords::run().

void foedus::storage::masstree::MasstreeBorderPage::initialize_layer_root(const MasstreeBorderPage* copy_from, SlotIndex copy_index)

Copies the initial record that will be the only record in a new root page.

This is called when a new layer is created and runs on thread-private memory, so no synchronization is needed.

Definition at line 298 of file masstree_page_impl.cpp.

References foedus::assorted::align8(), ASSERT_ND, foedus::storage::masstree::calculate_suffix_length_aligned(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreePage::get_key_count(), get_new_slot(), get_owner_id(), get_record_from_offset(), get_record_payload(), get_record_payload_from_offsets(), get_slot(), foedus::storage::masstree::MasstreePage::header_, foedus::xct::RwLockableXctId::is_keylocked(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::xct::XctId::is_next_layer(), foedus::storage::PageHeader::key_count_, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::xct::RwLockableXctId::lock_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::xct::McsRwLock::reset(), set_slice(), foedus::storage::masstree::slice_key(), foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::unused_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by initialize_as_layer_root_physical().

void foedus::storage::masstree::MasstreeBorderPage::initialize_snapshot_page(StorageId storage_id, SnapshotPagePointer page_id, uint8_t layer, KeySlice low_fence, KeySlice high_fence)

Definition at line 146 of file masstree_page_impl.cpp.

References foedus::storage::masstree::MasstreePage::initialize_snapshot_common(), and foedus::storage::kMasstreeBorderPageType.

Referenced by foedus::storage::masstree::MasstreeComposer::construct_root().

void foedus::storage::masstree::MasstreeBorderPage::initialize_volatile_page(StorageId storage_id, VolatilePagePointer page_id, uint8_t layer, KeySlice low_fence, KeySlice high_fence)

Definition at line 128 of file masstree_page_impl.cpp.

References foedus::storage::masstree::MasstreePage::initialize_volatile_common(), and foedus::storage::kMasstreeBorderPageType.

Referenced by initialize_as_layer_root_physical(), foedus::storage::masstree::MasstreeStoragePimpl::load_empty(), and foedus::storage::masstree::ReserveRecords::run().

inline

Whether this page is receiving only sequential inserts.

If this is true, a cursor can skip its sorting phase. If this is a snapshot page, this is always true.

Definition at line 623 of file masstree_page_impl.hpp.

Referenced by foedus::storage::masstree::SplitBorder::decide_strategy(), foedus::storage::masstree::SplitBorder::run(), and foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border().
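A minimal sketch of why the flag lets a cursor skip sorting, assuming a page that records slices in physical (insertion) order. `ToyBorderPage` and its `insert()` method are hypothetical and only model the invariant; they are not FOEDUS's actual bookkeeping.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical model: slices are kept in physical (insertion) order, and a
// flag remembers whether every insert so far arrived in ascending order.
struct ToyBorderPage {
  std::vector<uint64_t> slices;     // physical order of records
  bool consecutive_inserts = true;  // true while inserts have been ascending

  void insert(uint64_t slice) {
    if (!slices.empty() && slice < slices.back()) {
      consecutive_inserts = false;  // one out-of-order insert breaks it for good
    }
    slices.push_back(slice);
  }

  // When true, physical order already equals key order, so a cursor can
  // iterate the slots directly instead of sorting them first.
  bool is_consecutive_inserts() const { return consecutive_inserts; }
};
```

Once the flag is cleared it stays cleared in this model, which is the conservative choice: a cursor then falls back to sorting.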

inline

Compares the key.

Returns a negative value, 0, or a positive value when the given key is smaller than, equal to, or larger than the record's key, respectively.

Definition at line 1631 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::kSliceLen, and foedus::storage::masstree::slice_layer().

inline

Overload that receives a slice and a suffix.

Definition at line 1641 of file masstree_page_impl.hpp.

References ASSERT_ND, does_point_to_layer(), get_record(), get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kMaxKeyLength, and foedus::storage::masstree::kSliceLen.
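The comparison above splits each key into an 8-byte slice and a suffix. The sketch below illustrates that idea on plain byte strings, assuming the slice is the first 8 bytes read as a zero-padded big-endian integer. `make_slice()` and `compare_keys()` are hypothetical helpers; the real functions work on page records and per-layer slices (`slice_layer()`), which this sketch omits.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>

constexpr std::size_t kSliceLen = 8;  // assumed: bytes per slice

// Hypothetical helper: first 8 bytes of `key` as a big-endian integer,
// zero-padding keys shorter than 8 bytes.
uint64_t make_slice(const std::string& key) {
  uint64_t slice = 0;
  for (std::size_t i = 0; i < kSliceLen; ++i) {
    const uint8_t byte = i < key.size() ? static_cast<uint8_t>(key[i]) : 0;
    slice = (slice << 8) | byte;
  }
  return slice;
}

// Returns negative, 0, or positive when `a` is smaller than, equal to, or
// larger than `b` in byte-wise lexicographic order, using slice + suffix.
int compare_keys(const std::string& a, const std::string& b) {
  const uint64_t slice_a = make_slice(a);
  const uint64_t slice_b = make_slice(b);
  if (slice_a != slice_b) {
    return slice_a < slice_b ? -1 : 1;  // big-endian integer order == byte order
  }
  if (a.size() <= kSliceLen || b.size() <= kSliceLen) {
    // Same slice and at most one key has a suffix: the shorter key sorts first.
    if (a.size() == b.size()) return 0;
    return a.size() < b.size() ? -1 : 1;
  }
  // Both keys have suffixes: compare them byte-wise, then by length.
  const std::size_t len_a = a.size() - kSliceLen;
  const std::size_t len_b = b.size() - kSliceLen;
  const int cmp = std::memcmp(a.data() + kSliceLen, b.data() + kSliceLen,
                              len_a < len_b ? len_a : len_b);
  if (cmp != 0) return cmp;
  if (len_a == len_b) return 0;
  return len_a < len_b ? -1 : 1;
}
```

Most comparisons end at the single 64-bit integer comparison; the suffix is touched only when two keys share a whole slice.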

delete

inline

Prefetches up to the 256th byte.

Definition at line 934 of file masstree_page_impl.hpp.

References foedus::assorted::prefetch_cachelines().
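A minimal sketch of prefetching the first 256 bytes of a page. FOEDUS calls `assorted::prefetch_cachelines()`; this sketch substitutes the GCC/Clang builtin `__builtin_prefetch` and assumes 64-byte cachelines. `prefetch_page_head()` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kCachelineSize = 64;   // assumption: 64-byte cachelines
constexpr std::size_t kPrefetchBytes = 256;  // "up to the 256th byte"

// Hypothetical helper: issue a read prefetch for each cacheline in the first
// 256 bytes of `page` (4 lines at 64 bytes each). Returns the line count.
std::size_t prefetch_page_head(const void* page) {
  const char* base = static_cast<const char*>(page);
  std::size_t lines = 0;
  for (std::size_t off = 0; off < kPrefetchBytes; off += kCachelineSize) {
    __builtin_prefetch(base + off, 0 /* read */, 3 /* keep in all cache levels */);
    ++lines;
  }
  return lines;
}
```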

inline

Definition at line 937 of file masstree_page_impl.hpp.

References foedus::storage::masstree::kBorderPageAdditionalHeaderSize, foedus::assorted::kCachelineSize, foedus::storage::masstree::kCommonPageHeaderSize, and foedus::assorted::prefetch_cachelines().

Referenced by find_key(), find_key_for_reserve(), find_key_normalized(), and foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer().

void foedus::storage::masstree::MasstreeBorderPage::release_pages_recursive(const memory::GlobalVolatilePageResolver& page_resolver, memory::PageReleaseBatch* batch)

Definition at line 266 of file masstree_page_impl.cpp.

References ASSERT_ND, does_point_to_layer(), foedus::storage::masstree::MasstreePage::foster_twin_, foedus::storage::masstree::MasstreePage::get_key_count(), get_next_layer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::header_, foedus::storage::PageVersion::is_moved(), foedus::storage::VolatilePagePointer::is_null(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::PageHeader::page_id_, foedus::storage::PageHeader::page_version_, foedus::memory::PageReleaseBatch::release(), release_pages_recursive(), foedus::storage::masstree::MasstreePage::release_pages_recursive_common(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::DualPagePointer::volatile_pointer_, and foedus::storage::VolatilePagePointer::word.

Referenced by release_pages_recursive(), and foedus::storage::masstree::MasstreePage::release_pages_recursive_common().

inline

Same as above, except that this transforms the existing record at the end into a next-layer pointer.

Unlike set_next_layer, this shrinks the payload part.

Definition at line 1447 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::VolatilePagePointer::clear(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), get_next_layer(), get_owner_id(), get_slot(), foedus::storage::masstree::MasstreePage::header(), foedus::xct::RwLockableXctId::is_next_layer(), foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::xct::XctId::set_next_layer(), foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), foedus::storage::DualPagePointer::volatile_pointer_, and foedus::xct::RwLockableXctId::xct_id_.

inline static

Returns the byte size of the contiguous space in data_ required to insert a new record with the given remainder/payload length.

This includes the Slot size. Slices are not included because they are placed statically anyway.

Definition at line 687 of file masstree_page_impl.hpp.

References to_record_length().

Referenced by can_accomodate(), and can_accomodate_snapshot().
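The space arithmetic can be sketched as follows. This is a hedged reconstruction, assuming that the suffix is the part of the key remainder beyond the 8-byte slice, that suffix and payload are each padded to 8 bytes, and that a Slot is 32 bytes (the page layout gives 32 bytes per slot). The special case of a record that is initially a next-layer pointer (`kInitiallyNextLayer`) is omitted, and all helpers here are stand-ins rather than FOEDUS's own definitions.

```cpp
#include <cassert>
#include <cstdint>

// Assumed constants for this sketch (not taken from FOEDUS headers).
constexpr uint32_t kSliceLen = 8;   // bytes held in a record's slice
constexpr uint32_t kSlotSize = 32;  // per-record Slot size from the page layout

// Round n up to the next multiple of 8.
constexpr uint32_t align8(uint32_t n) { return (n + 7U) & ~7U; }

// Suffix: the part of the key remainder that does not fit in the 8-byte slice.
constexpr uint32_t suffix_length_aligned(uint32_t remainder_length) {
  return align8(remainder_length > kSliceLen ? remainder_length - kSliceLen : 0U);
}

// Physical record length: aligned suffix followed by aligned payload.
constexpr uint32_t to_record_length(uint32_t remainder_length, uint32_t payload_length) {
  return suffix_length_aligned(remainder_length) + align8(payload_length);
}

// Contiguous bytes needed in data_ for a new record, including its Slot.
constexpr uint32_t required_data_space(uint32_t remainder_length, uint32_t payload_length) {
  return to_record_length(remainder_length, payload_length) + kSlotSize;
}

// Worked example: a 20-byte remainder has a 12-byte suffix (aligned to 16),
// and a 10-byte payload aligns to 16, so the record takes 32 bytes and the
// reservation needs 32 + 32 = 64 bytes.
static_assert(to_record_length(20, 10) == 32, "16 + 16");
static_assert(required_data_space(20, 10) == 64, "record + Slot");
```

A key that fits entirely in its slice with an empty payload still costs one Slot (32 bytes) under these assumptions.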

inline

For creating a record that is initially a next-layer pointer.

Definition at line 1375 of file masstree_page_impl.hpp.

References ASSERT_ND, can_accomodate(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreePage::get_key_count(), get_new_slot(), get_next_layer_from_offsets(), get_slice(), foedus::storage::masstree::MasstreePage::header(), foedus::xct::XctId::is_deleted(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::xct::XctId::is_next_layer(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::xct::RwLockableXctId::lock_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::xct::McsRwLock::reset(), set_slice(), foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::unused_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by foedus::storage::masstree::ReserveRecords::run().

inline

Installs a new physical record that does not exist logically (its delete bit is on).

This sets 1) the slot, 2) the suffix key, and 3) the XctId. The payload is not set yet. This is executed as a system transaction.

Definition at line 1322 of file masstree_page_impl.hpp.

References foedus::assorted::align8(), ASSERT_ND, foedus::storage::masstree::calculate_suffix_length(), can_accomodate(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreePage::get_key_count(), get_new_slot(), get_record_from_offset(), get_remainder_length(), get_slice(), foedus::storage::masstree::MasstreePage::header(), foedus::xct::XctId::is_deleted(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::kMaxKeyLength, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::xct::RwLockableXctId::lock_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::xct::McsRwLock::reset(), set_slice(), foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::unused_, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by foedus::storage::masstree::ReserveRecords::run().
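To make the reservation step concrete, here is a toy model of the data region, assuming record data grows forward from offset 0 while 32-byte slots grow backward from the end, as in the page layout. `ToyDataRegion`, `ToySlot`, and `reserve()` are hypothetical; the real method also writes the suffix key and XctId and runs as a system transaction, none of which is modeled here beyond a `deleted` flag standing in for the delete bit.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical slot: where a record lives and whether it is logically absent.
struct ToySlot {
  uint32_t offset;         // start of the record in the data region
  uint32_t record_length;  // physical record length in bytes
  bool deleted;            // stands in for the delete bit on the XctId
};

class ToyDataRegion {
 public:
  static constexpr uint32_t kSlotSize = 32;

  explicit ToyDataRegion(uint32_t capacity) : capacity_(capacity) {}

  // Free bytes between the end of record data and the start of the slot area.
  uint32_t available_space() const {
    return capacity_ - next_offset_ - static_cast<uint32_t>(slots_.size()) * kSlotSize;
  }

  // A new record needs its own bytes plus one more slot.
  bool can_accommodate(uint32_t record_length) const {
    return record_length + kSlotSize <= available_space();
  }

  // Reserves a record that exists physically but not logically (delete bit
  // on); the payload would be filled in later. Returns the slot index, or -1.
  int reserve(uint32_t record_length) {
    if (!can_accommodate(record_length)) return -1;
    slots_.push_back({next_offset_, record_length, /*deleted=*/true});
    next_offset_ += record_length;  // data grows forward
    return static_cast<int>(slots_.size()) - 1;
  }

  const ToySlot& slot(int index) const { return slots_.at(static_cast<std::size_t>(index)); }

 private:
  uint32_t capacity_;
  uint32_t next_offset_ = 0;
  std::vector<ToySlot> slots_;  // logically occupies the tail of the region
};
```

Because both areas grow toward each other, a single `available_space()` check covers the record bytes and the new slot at once.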

inline

Definition at line 805 of file masstree_page_impl.hpp.

References ASSERT_ND, and foedus::storage::masstree::kBorderPageMaxSlots.

Referenced by append_next_layer_snapshot(), initialize_layer_root(), foedus::storage::masstree::SplitBorder::migrate_records(), reserve_initially_next_layer(), and reserve_record_space().

inline static

Returns the minimal physical_record_length_ for the given remainder/payload length.

Definition at line 674 of file masstree_page_impl.hpp.

References foedus::assorted::align8(), and foedus::storage::masstree::calculate_suffix_length_aligned().

Referenced by append_next_layer_snapshot(), initialize_layer_root(), foedus::storage::masstree::SplitBorder::migrate_records(), replace_next_layer_snapshot(), required_data_space(), reserve_initially_next_layer(), reserve_record_space(), and try_expand_record_in_page_physical().

|

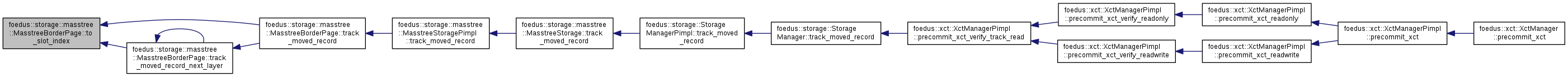

inline

Definition at line 649 of file masstree_page_impl.hpp.

References ASSERT_ND.

Referenced by track_moved_record(), and track_moved_record_next_layer().

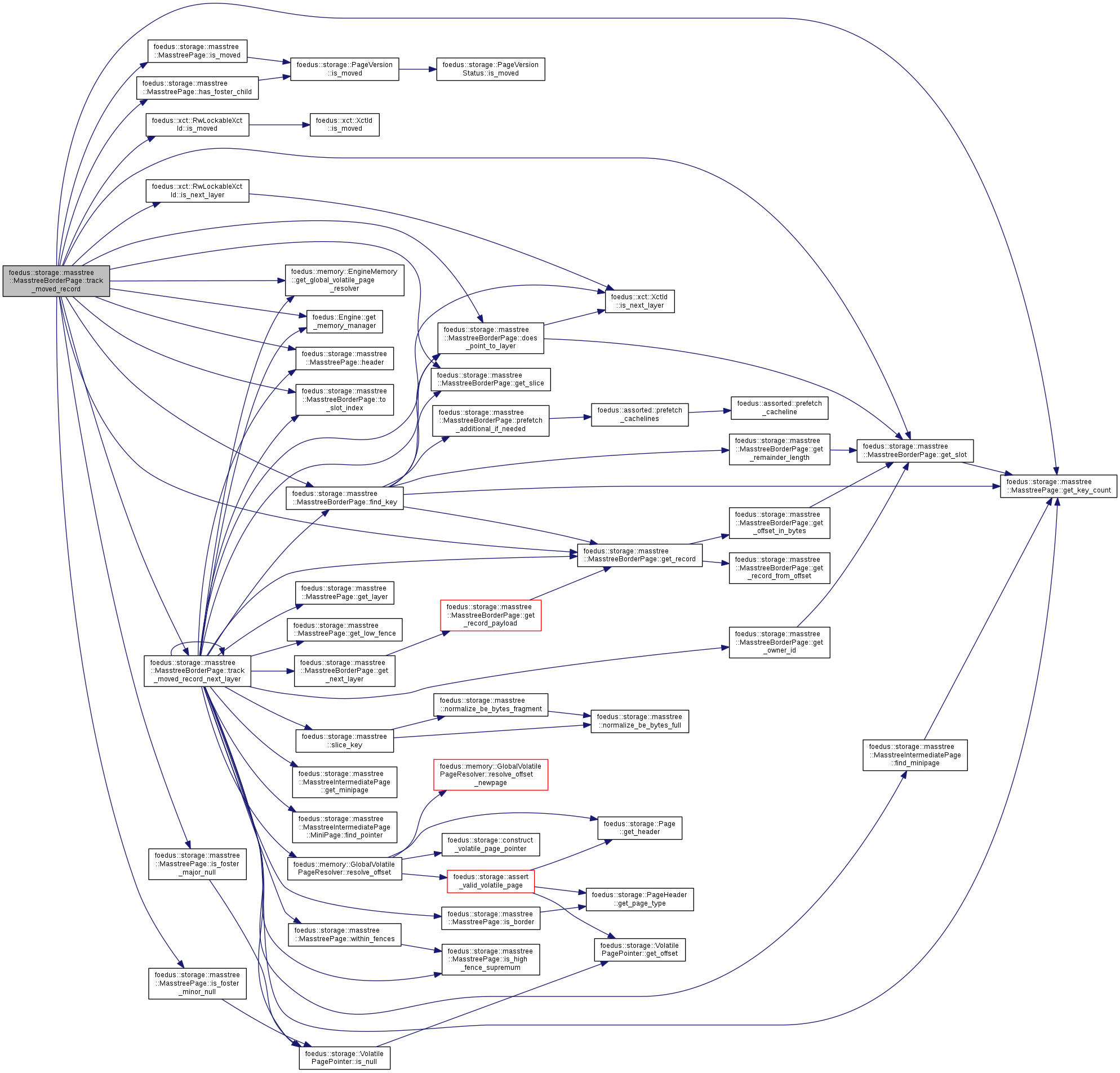

xct::TrackMovedRecordResult foedus::storage::masstree::MasstreeBorderPage::track_moved_record(Engine* engine, xct::RwLockableXctId* old_address, xct::WriteXctAccess* write_set)

Definition at line 534 of file masstree_page_impl.cpp.

References ASSERT_ND, does_point_to_layer(), find_key(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::Engine::get_memory_manager(), get_record(), get_slice(), get_slot(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::is_foster_major_null(), foedus::storage::masstree::MasstreePage::is_foster_minor_null(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::xct::RwLockableXctId::is_moved(), foedus::xct::RwLockableXctId::is_next_layer(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::kMasstreeBorderPageType, foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_slot_index(), and track_moved_record_next_layer().

Referenced by foedus::storage::masstree::MasstreeStoragePimpl::track_moved_record().

xct::TrackMovedRecordResult foedus::storage::masstree::MasstreeBorderPage::track_moved_record_next_layer(Engine* engine, xct::RwLockableXctId* old_address)

This one further tracks the record into the next layer.

Instead it requires a non-null write_set.

Definition at line 609 of file masstree_page_impl.cpp.

References ASSERT_ND, does_point_to_layer(), find_key(), foedus::storage::masstree::MasstreeIntermediatePage::find_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::find_pointer(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::Engine::get_memory_manager(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), get_next_layer(), get_owner_id(), get_record(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_high_fence_supremum(), foedus::xct::XctId::is_next_layer(), foedus::storage::VolatilePagePointer::is_null(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::kMasstreeBorderPageType, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::masstree::slice_key(), to_slot_index(), track_moved_record_next_layer(), UNLIKELY, foedus::storage::DualPagePointer::volatile_pointer_, foedus::storage::masstree::MasstreePage::within_fences(), and foedus::xct::RwLockableXctId::xct_id_.

Referenced by track_moved_record(), and track_moved_record_next_layer().

bool foedus::storage::masstree::MasstreeBorderPage::try_expand_record_in_page_physical(PayloadLength payload_count, SlotIndex record_index)

A physical-only method to expand a record within this page without any logical change.

Definition at line 360 of file masstree_page_impl.cpp.

References ASSERT_ND, available_space(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreeBorderPage::Slot::does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), get_max_payload_length(), get_next_offset(), get_record_from_offset(), get_slot(), foedus::storage::masstree::MasstreePage::header_, increase_next_offset(), foedus::xct::RwLockableXctId::is_keylocked(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::xct::RwLockableXctId::is_moved(), foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::assorted::memory_fence_release(), foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::Slot::read_lengthes_oneshot(), foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_, foedus::storage::PageHeader::snapshot_, foedus::storage::masstree::MasstreeBorderPage::Slot::tid_, to_record_length(), verify_slot_lengthes(), and foedus::storage::masstree::MasstreeBorderPage::Slot::write_lengthes_oneshot().

Referenced by foedus::storage::masstree::ReserveRecords::run().
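The expansion relies on reading and writing a slot's lengths in one shot (`Slot::read_lengthes_oneshot()` and `Slot::write_lengthes_oneshot()`). A minimal sketch of that pattern follows, assuming the four `SlotLengthPart` fields are 16 bits each so they fit in one 64-bit word. FOEDUS uses `SlotLengthUnion` for this; the sketch instead packs the fields with `std::memcpy` into a `std::atomic<uint64_t>`, and `LengthPart` and `OneShotLengths` are hypothetical names.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical mirror of SlotLengthPart, assuming four 16-bit fields.
struct LengthPart {
  uint16_t offset_;
  uint16_t unused_;
  uint16_t physical_record_length_;
  uint16_t payload_length_;
};
static_assert(sizeof(LengthPart) == sizeof(uint64_t), "must fit in one word");

// Stores all lengths as one 64-bit word, so a concurrent reader observes
// either the old values or the new values, never a half-written mix.
class OneShotLengths {
 public:
  void write(const LengthPart& part) {
    uint64_t word;
    std::memcpy(&word, &part, sizeof(word));
    word_.store(word, std::memory_order_release);
  }

  LengthPart read() const {
    const uint64_t word = word_.load(std::memory_order_acquire);
    LengthPart part;
    std::memcpy(&part, &word, sizeof(part));
    return part;
  }

 private:
  std::atomic<uint64_t> word_{0};
};
```

In this pattern, a writer would first copy the record into new space and then publish the new offset and lengths with a single `write()`, so a reader can never pair a new offset with an old length.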

bool foedus::storage::masstree::MasstreeBorderPage::verify_slot_lengthes(SlotIndex index) const

Definition at line 708 of file masstree_page_impl.cpp.

References ASSERT_ND, foedus::storage::masstree::MasstreeBorderPage::SlotLengthUnion::components, foedus::storage::masstree::MasstreeBorderPage::Slot::does_point_to_layer(), foedus::storage::masstree::MasstreeBorderPage::Slot::get_max_payload_peek(), get_slot(), foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::MasstreeBorderPage::Slot::lengthes_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_offset_, foedus::storage::masstree::MasstreeBorderPage::Slot::original_physical_record_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::payload_length_, foedus::storage::masstree::MasstreeBorderPage::SlotLengthPart::physical_record_length_, and foedus::storage::masstree::MasstreeBorderPage::Slot::remainder_length_.

Referenced by try_expand_record_in_page_physical(), and foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border().

inline

Returns whether inserting the key will cause creation of a new next layer.

This is mainly used for assertions.

Definition at line 1690 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::kSliceLen, and foedus::storage::masstree::slice_layer().

inline

Overload that receives a slice.

Definition at line 1699 of file masstree_page_impl.hpp.

References ASSERT_ND, get_remainder_length(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, and foedus::storage::masstree::kSliceLen.
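The conflict check can be sketched as follows, assuming (as the references to `get_slice()`, `get_remainder_length()`, and `kSliceLen` suggest) that a new layer is needed only when the existing record and the new key share a slice and both continue past it. `will_conflict_sketch()` is a hypothetical stand-alone function; an exact match of the same long key would presumably be ruled out by the caller first.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kSliceLen = 8;  // assumed: bytes per key slice

// Hypothetical stand-alone check: a key whose slice equals an existing
// record's slice forces a new next layer only if both keys have bytes
// beyond the slice (remainder longer than 8 bytes). If either key ends
// within the slice, this sketch reports no conflict.
bool will_conflict_sketch(uint64_t existing_slice, uint32_t existing_remainder,
                          uint64_t new_slice, uint32_t new_remainder) {
  if (existing_slice != new_slice) {
    return false;  // different slices never collide
  }
  return existing_remainder > kSliceLen && new_remainder > kSliceLen;
}
```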

inline

Returns whether the record is a next-layer pointer that would contain the key.

Definition at line 1714 of file masstree_page_impl.hpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::kSliceLen, and foedus::storage::masstree::slice_layer().

inline

Definition at line 1723 of file masstree_page_impl.hpp.

References ASSERT_ND, does_point_to_layer(), get_slice(), foedus::storage::masstree::kBorderPageMaxSlots, and foedus::storage::masstree::kSliceLen.
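Similarly, here is a hedged sketch of the containment test. It assumes a record contains the key's next layer when the record already points to a layer, has the same slice, and the key extends past that slice. `will_contain_next_layer_sketch()` is hypothetical and takes plain values instead of a slot index.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kSliceLen = 8;  // assumed: bytes per key slice

// Hypothetical stand-alone check: the key belongs to the record's next layer
// when the record is a layer pointer, the slices match, and the key still has
// bytes left after this slice.
bool will_contain_next_layer_sketch(bool record_points_to_layer,
                                    uint64_t record_slice,
                                    uint64_t key_slice,
                                    uint32_t key_remainder) {
  return record_points_to_layer && record_slice == key_slice &&
         key_remainder > kSliceLen;
}
```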

friend

Defined in masstree_page_debug.cpp.

Definition at line 80 of file masstree_page_debug.cpp.

friend

Definition at line 446 of file masstree_page_impl.hpp.