
libfoedus-core

FOEDUS Core Library



A system transaction to split a border page in Master-Tree.

When a border page becomes full or close to full, we split the page into two border pages. The new pages are placed as tentative foster twins of the page.
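The following toy model sketches what splitting into foster twins means. Records below a chosen mid slice go to a new minor twin, the rest go to a new major twin, and the original page is flagged as moved so that readers follow the twins. The types and function (`ToyBorderPage`, `split_into_foster_twins`) are hypothetical, and the half-open fence convention `[low, high)` is an assumption of this sketch, not a statement about FOEDUS's page layout.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical toy page, NOT FOEDUS's MasstreeBorderPage: it holds sorted key
// slices and (by assumption) covers the half-open range [low_fence, high_fence).
struct ToyBorderPage {
  uint64_t low_fence = 0;
  uint64_t high_fence = UINT64_MAX;
  std::vector<uint64_t> slices;                // kept sorted
  bool moved = false;                          // set once the page is split
  std::unique_ptr<ToyBorderPage> minor_twin;   // covers [low_fence, mid)
  std::unique_ptr<ToyBorderPage> major_twin;   // covers [mid, high_fence)
};

// Splits `page` at `mid`: slices below `mid` go to the minor twin, the rest to
// the major twin. The twins are attached to the original page, which is then
// marked moved. In this toy, the original page's slices are left in place.
void split_into_foster_twins(ToyBorderPage* page, uint64_t mid) {
  auto minor_page = std::make_unique<ToyBorderPage>();
  auto major_page = std::make_unique<ToyBorderPage>();
  minor_page->low_fence = page->low_fence;
  minor_page->high_fence = mid;
  major_page->low_fence = mid;
  major_page->high_fence = page->high_fence;
  for (uint64_t s : page->slices) {
    (s < mid ? minor_page : major_page)->slices.push_back(s);
  }
  page->minor_twin = std::move(minor_page);
  page->major_twin = std::move(major_page);
  page->moved = true;
}
```

Keeping the original page around (only flagged as moved) lets concurrent readers that already hold a pointer to it find their way to the twins instead of seeing a page vanish.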

This does nothing and returns kErrorCodeOk in the following cases:

Locks taken in this sysxct (in order of taking):

At least after releasing the enclosing user transaction's locks, there is no chance of deadlocks or even of any conditional locks. Hence, max_retries=2 should be enough in run_nested_sysxct().
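A rough sketch of why a small retry budget can suffice: a hypothetical driver runs the system transaction and retries only when a lock could not be taken. Assuming the first failure comes from a conditional lock attempted while user-transaction locks were still held, and that the next attempt cannot fail that way, two attempts are enough. `ToyError` and `run_with_retries` are illustrative names only; the real run_nested_sysxct() signature and error codes are not shown on this page.

```cpp
#include <cassert>
#include <functional>

// Hypothetical error codes for this sketch; FOEDUS has its own ErrorCode enum.
enum class ToyError { kOk, kLockFailed };

// Hypothetical retry driver in the spirit of run_nested_sysxct(): run the
// system transaction, and retry while it fails only because a lock could not
// be taken, for at most `max_retries` attempts in total.
ToyError run_with_retries(const std::function<ToyError(int attempt)>& sysxct,
                          int max_retries) {
  ToyError result = ToyError::kLockFailed;
  for (int attempt = 0; attempt < max_retries; ++attempt) {
    result = sysxct(attempt);
    if (result != ToyError::kLockFailed) {
      break;  // success, or an error that retrying cannot fix
    }
    // Assumption: by the next attempt, the enclosing user transaction's locks
    // are no longer held, so the retry cannot fail on a conditional lock.
  }
  return result;
}
```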

Definition at line 51 of file masstree_split_impl.hpp.

#include <masstree_split_impl.hpp>

Classes

| struct | SplitStrategy |

Public Member Functions

| | SplitBorder (thread::Thread *context, MasstreeBorderPage *target, KeySlice trigger, bool disable_no_record_split=false, bool piggyback_reserve=false, KeyLength piggyback_remainder_length=0, PayloadLength piggyback_payload_count=0, const void *piggyback_suffix=nullptr) | |

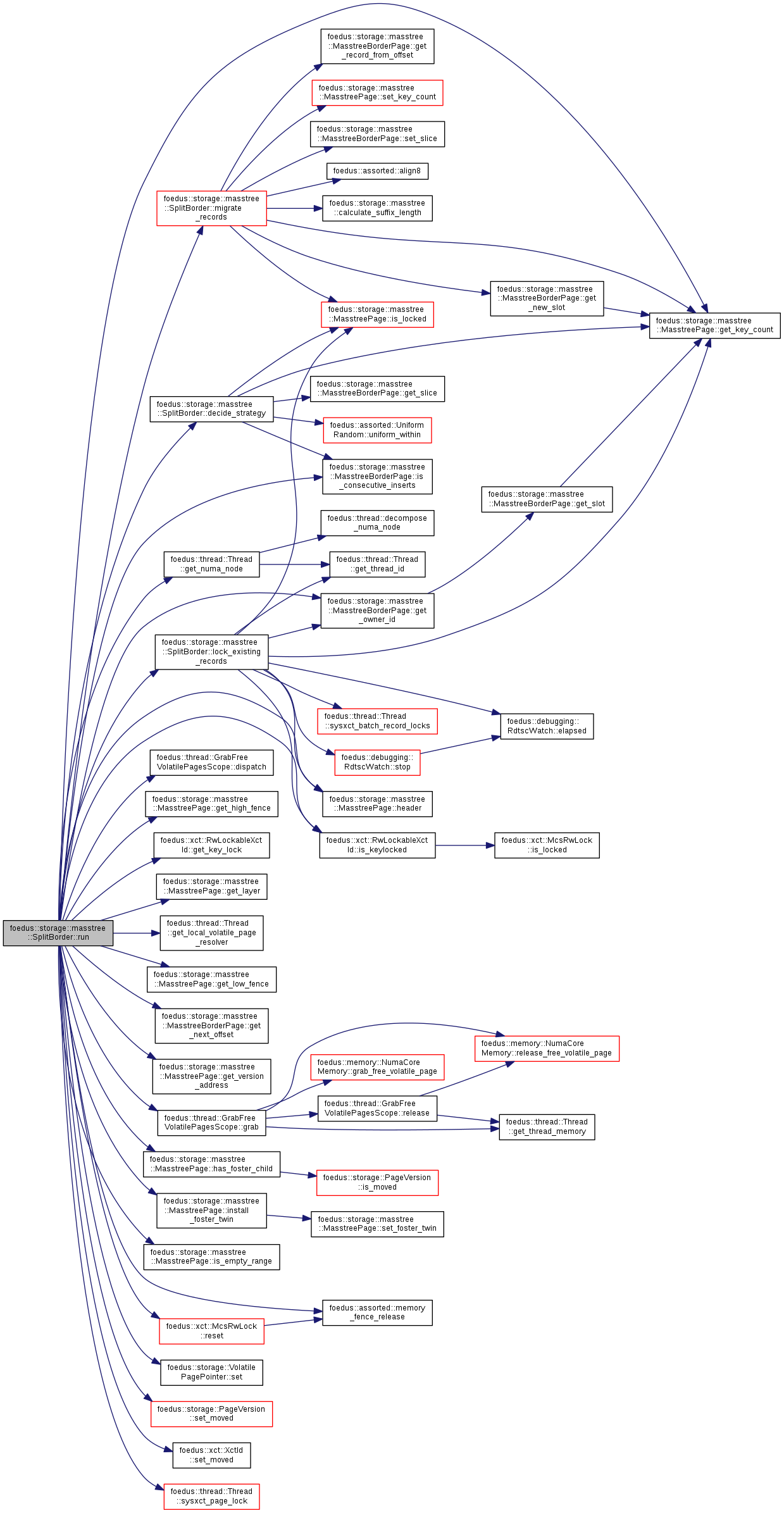

| virtual ErrorCode | run (xct::SysxctWorkspace *sysxct_workspace) override | Border node's split. |

| void | decide_strategy (SplitStrategy *out) const | Subroutine to decide how we will split this page. |

| ErrorCode | lock_existing_records (xct::SysxctWorkspace *sysxct_workspace) | Subroutine to lock existing records in target_. |

| void | migrate_records (KeySlice inclusive_from, KeySlice inclusive_to, MasstreeBorderPage *dest) const | Subroutine to construct a new page. |

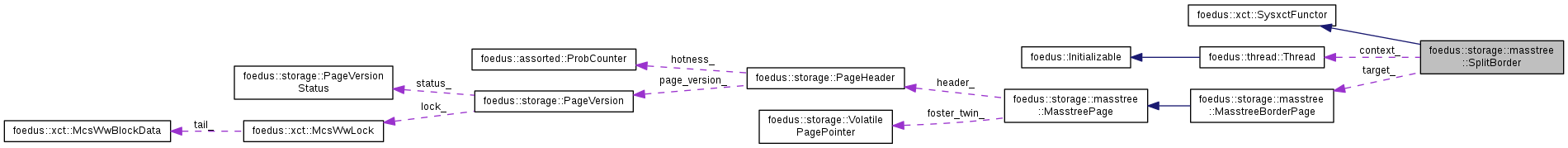

Public Attributes

| thread::Thread *const | context_ | Thread context. |

| MasstreeBorderPage *const | target_ | The page to split. |

| const KeySlice | trigger_ | The key that triggered this split. |

| const bool | disable_no_record_split_ | If true, we never do no-record-split (NRS). |

| const bool | piggyback_reserve_ | An optimization to also make room for a record. |

| const KeyLength | piggyback_remainder_length_ | |

| const PayloadLength | piggyback_payload_count_ | |

| const void * | piggyback_suffix_ | |

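foedus::storage::masstree::SplitBorder::SplitBorder(thread::Thread *context, MasstreeBorderPage *target, KeySlice trigger, bool disable_no_record_split = false, bool piggyback_reserve = false, KeyLength piggyback_remainder_length = 0, PayloadLength piggyback_payload_count = 0, const void *piggyback_suffix = nullptr) [inline]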

Definition at line 82 of file masstree_split_impl.hpp.

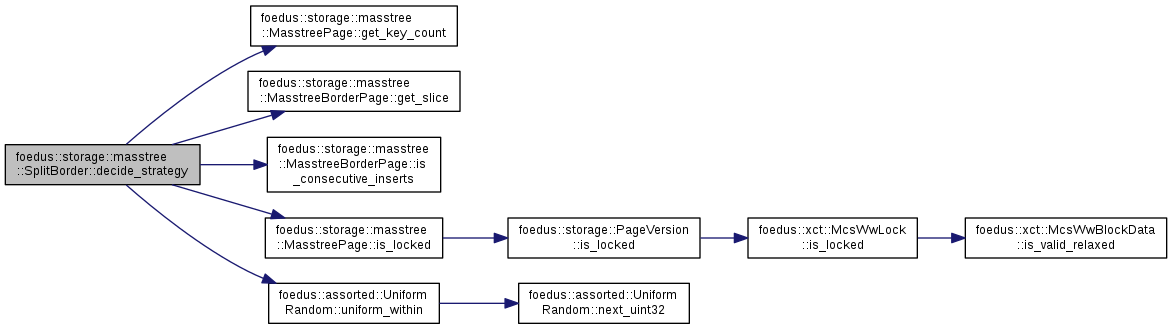

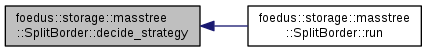

void foedus::storage::masstree::SplitBorder::decide_strategy(SplitBorder::SplitStrategy *out) const

Subroutine to decide how we will split this page.

Definition at line 146 of file masstree_split_impl.cpp.

References ASSERT_ND, disable_no_record_split_, foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_slice(), foedus::storage::masstree::MasstreeBorderPage::is_consecutive_inserts(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::SplitBorder::SplitStrategy::largest_slice_, foedus::storage::masstree::SplitBorder::SplitStrategy::mid_slice_, foedus::storage::masstree::SplitBorder::SplitStrategy::no_record_split_, foedus::storage::masstree::SplitBorder::SplitStrategy::original_key_count_, foedus::storage::masstree::SplitBorder::SplitStrategy::smallest_slice_, target_, trigger_, and foedus::assorted::UniformRandom::uniform_within().

Referenced by run().
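A simplified sketch of the kind of decision decide_strategy() makes, filling fields named like the SplitStrategy members it references. The rules are assumptions for illustration: NRS is chosen when inserts have been consecutive and the trigger lies beyond the largest existing slice (trigger_ is documented as a hint for NRS). Otherwise, the median slice is used, adjusted so that both twins are non-empty. `ToySplitStrategy` and `toy_decide_strategy` are hypothetical, and FOEDUS's actual logic (which also references UniformRandom) may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Field names mirror SplitBorder::SplitStrategy; the rules below are assumed.
struct ToySplitStrategy {
  uint64_t smallest_slice_ = 0;
  uint64_t largest_slice_ = 0;
  uint64_t mid_slice_ = 0;
  bool no_record_split_ = false;
  std::size_t original_key_count_ = 0;
};

// Returns false if there is nothing sensible to split (empty page, or all
// records share one slice so no mid slice can separate them).
bool toy_decide_strategy(const std::vector<uint64_t>& slices, uint64_t trigger,
                         bool consecutive_inserts, bool disable_nrs,
                         ToySplitStrategy* out) {
  if (slices.empty()) return false;
  std::vector<uint64_t> sorted(slices);
  std::sort(sorted.begin(), sorted.end());
  out->original_key_count_ = sorted.size();
  out->smallest_slice_ = sorted.front();
  out->largest_slice_ = sorted.back();
  // Assumed NRS rule: keys arrive in increasing order and the trigger lies
  // beyond every existing slice, so all records stay in the minor twin and
  // the major twin starts empty. (trigger > largest rules out overflow.)
  if (!disable_nrs && consecutive_inserts && trigger > out->largest_slice_) {
    out->no_record_split_ = true;
    out->mid_slice_ = out->largest_slice_ + 1;
    return true;
  }
  out->no_record_split_ = false;
  if (out->smallest_slice_ == out->largest_slice_) return false;
  // Pick the median; if it equals the smallest slice, move to the next larger
  // slice so the minor twin [smallest, mid) is non-empty.
  uint64_t mid = sorted[sorted.size() / 2];
  if (mid == out->smallest_slice_) {
    mid = *std::upper_bound(sorted.begin(), sorted.end(), mid);
  }
  out->mid_slice_ = mid;
  return true;
}
```

With sequential inserts, NRS leaves the full page's records together and gives the next appends an empty page, instead of leaving two half-full pages of which the lower one will never be filled again.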

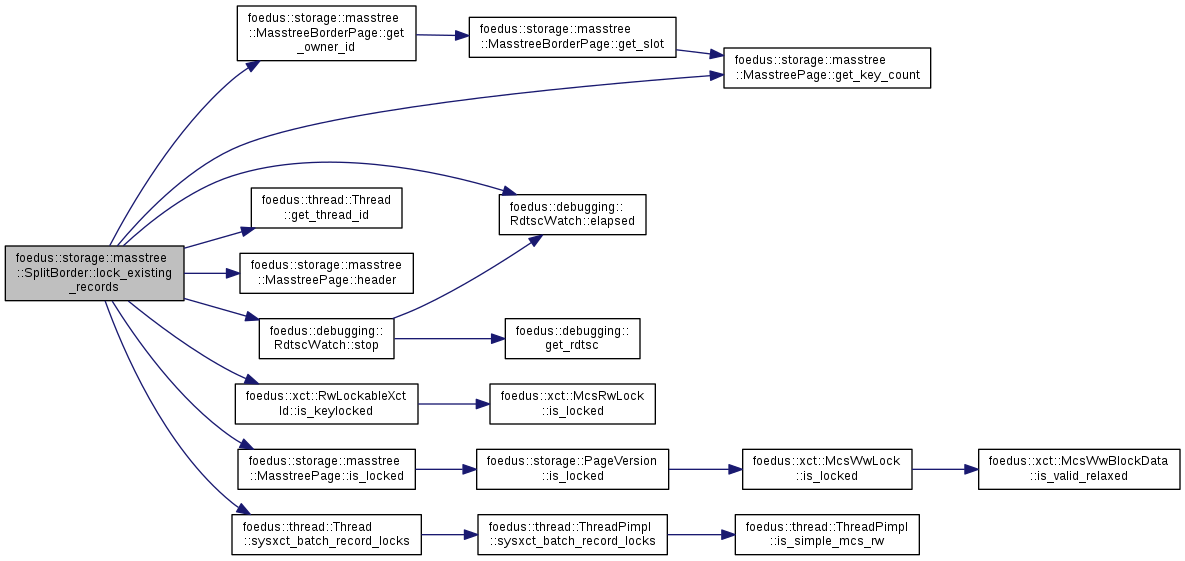

ErrorCode foedus::storage::masstree::SplitBorder::lock_existing_records(xct::SysxctWorkspace *sysxct_workspace)

Subroutine to lock existing records in target_.

Definition at line 304 of file masstree_split_impl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, context_, foedus::debugging::RdtscWatch::elapsed(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::thread::Thread::get_thread_id(), foedus::storage::masstree::MasstreePage::header(), foedus::xct::RwLockableXctId::is_keylocked(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::storage::PageHeader::page_id_, foedus::debugging::RdtscWatch::stop(), foedus::storage::PageHeader::storage_id_, foedus::thread::Thread::sysxct_batch_record_locks(), and target_.

Referenced by run().
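lock_existing_records() hands the record locks of target_ to sysxct_batch_record_locks() as one batch. The sketch below shows the general batch-locking idea with `std::mutex`: gather the locks, sort them into one canonical order (ascending address here), and acquire them in that order so that two threads locking overlapping sets cannot deadlock. The ordering rule and the `ToyRecord` type are assumptions; FOEDUS's record locks are its own reader-writer locks (RwLockableXctId), not `std::mutex`.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <mutex>
#include <vector>

// Hypothetical stand-in for a record that carries its own lock.
struct ToyRecord {
  std::mutex lock;
  int value = 0;
};

// Locks every record in one batch, in ascending address order. Any two
// threads that follow the same global order cannot wait on each other in a
// cycle. The caller is responsible for releasing the returned locks.
std::vector<std::mutex*> batch_lock_records(std::vector<ToyRecord>& records) {
  std::vector<std::mutex*> locks;
  locks.reserve(records.size());
  for (ToyRecord& r : records) locks.push_back(&r.lock);
  std::sort(locks.begin(), locks.end(), std::less<std::mutex*>());
  for (std::mutex* m : locks) m->lock();
  return locks;
}

// Releases the locks in reverse acquisition order.
void batch_unlock(const std::vector<std::mutex*>& locks) {
  for (auto it = locks.rbegin(); it != locks.rend(); ++it) (*it)->unlock();
}
```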

void foedus::storage::masstree::SplitBorder::migrate_records(KeySlice inclusive_from, KeySlice inclusive_to, MasstreeBorderPage *dest) const

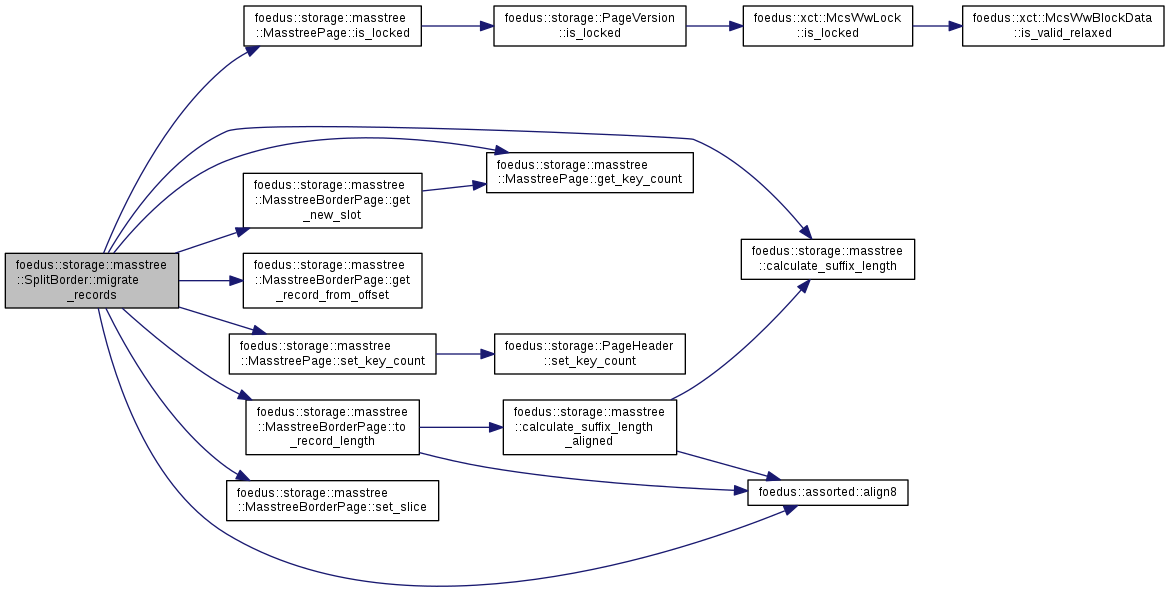

Subroutine to construct a new page.

Definition at line 345 of file masstree_split_impl.cpp.

References foedus::assorted::align8(), ASSERT_ND, foedus::storage::masstree::calculate_suffix_length(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_new_slot(), foedus::storage::masstree::MasstreeBorderPage::get_record_from_offset(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::storage::masstree::kInitiallyNextLayer, foedus::storage::masstree::kMaxKeyLength, foedus::storage::masstree::kSupremumSlice, foedus::storage::masstree::MasstreePage::set_key_count(), foedus::storage::masstree::MasstreeBorderPage::set_slice(), target_, and foedus::storage::masstree::MasstreeBorderPage::to_record_length().

Referenced by run().
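migrate_records() fills dest with those records of target_ whose slices fall in [inclusive_from, inclusive_to]. Below is a minimal sketch over a hypothetical flat record representation; FOEDUS instead packs slices, suffixes, and payloads into slots and an aligned data region, so `ToyEntry`, `ToyPage`, and `toy_migrate_records` are illustrative only.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical simplified record.
struct ToyEntry {
  uint64_t slice;
  std::string suffix;
  std::string payload;
};

struct ToyPage {
  std::vector<ToyEntry> entries;  // key count == entries.size()
};

// Copies every record whose slice lies in [inclusive_from, inclusive_to]
// from `source` into `dest`, preserving their relative order.
void toy_migrate_records(const ToyPage& source, uint64_t inclusive_from,
                         uint64_t inclusive_to, ToyPage* dest) {
  for (const ToyEntry& e : source.entries) {
    if (e.slice >= inclusive_from && e.slice <= inclusive_to) {
      dest->entries.push_back(e);
    }
  }
}
```

Making both bounds inclusive lets a range end at the largest representable slice (compare kSupremumSlice in the references above) without needing a value one past it; this is a reading of the signature rather than a documented rationale.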

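virtual ErrorCode foedus::storage::masstree::SplitBorder::run(xct::SysxctWorkspace *sysxct_workspace) [override, virtual]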

Border node's split.

Implements foedus::xct::SysxctFunctor.

Definition at line 39 of file masstree_split_impl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, context_, decide_strategy(), disable_no_record_split_, foedus::thread::GrabFreeVolatilePagesScope::dispatch(), foedus::storage::masstree::MasstreePage::get_high_fence(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::xct::RwLockableXctId::get_key_lock(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::thread::Thread::get_local_volatile_page_resolver(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreeBorderPage::get_next_offset(), foedus::thread::Thread::get_numa_node(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::storage::masstree::MasstreePage::get_version_address(), foedus::thread::GrabFreeVolatilePagesScope::grab(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::install_foster_twin(), foedus::storage::masstree::MasstreeBorderPage::is_consecutive_inserts(), foedus::storage::masstree::MasstreePage::is_empty_range(), foedus::xct::RwLockableXctId::is_keylocked(), foedus::kErrorCodeOk, foedus::storage::masstree::SplitBorder::SplitStrategy::largest_slice_, lock_existing_records(), foedus::assorted::memory_fence_release(), foedus::storage::masstree::SplitBorder::SplitStrategy::mid_slice_, migrate_records(), foedus::storage::masstree::SplitBorder::SplitStrategy::no_record_split_, foedus::xct::McsRwLock::reset(), foedus::storage::VolatilePagePointer::set(), foedus::storage::PageVersion::set_moved(), foedus::xct::XctId::set_moved(), foedus::storage::masstree::SplitBorder::SplitStrategy::smallest_slice_, foedus::storage::PageHeader::snapshot_, foedus::storage::PageHeader::storage_id_, foedus::thread::Thread::sysxct_page_lock(), target_, and foedus::xct::RwLockableXctId::xct_id_.

thread::Thread* const foedus::storage::masstree::SplitBorder::context_

Thread context.

Definition at line 53 of file masstree_split_impl.hpp.

Referenced by lock_existing_records(), and run().

const bool foedus::storage::masstree::SplitBorder::disable_no_record_split_

If true, we never do no-record-split (NRS).

This is useful, for example, when we want to make room for a record expansion. Otherwise, we could get stuck: the record expansion causes a page split that is eligible for NRS, and an NRS, which moves no existing records, does not make room for the expanding record.

Definition at line 66 of file masstree_split_impl.hpp.

Referenced by decide_strategy(), and run().
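A toy capacity model of the stuck case described above. It assumes, for illustration, that an NRS keeps all existing records together in one twin while a regular split moves about half of them to the other twin, and it measures the free space left next to a given record. The function name and the byte-count model are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the free bytes left in the twin that keeps the record at `index`,
// given per-record sizes and a page `capacity` in bytes. Assumes the records
// fit in the page (used <= capacity), as they did before the split.
std::size_t free_space_for_record(const std::vector<std::size_t>& sizes,
                                  std::size_t capacity, std::size_t index,
                                  bool no_record_split) {
  // NRS (assumed): every existing record stays together in one twin.
  // Regular split (assumed): the upper half moves to the other twin.
  std::size_t begin = 0;
  std::size_t end = sizes.size();
  if (!no_record_split) {
    std::size_t mid = sizes.size() / 2;
    if (index < mid) {
      end = mid;
    } else {
      begin = mid;
    }
  }
  std::size_t used = 0;
  for (std::size_t i = begin; i < end; ++i) used += sizes[i];
  return capacity - used;
}
```

In this model a full page stays full after an NRS, so a record that needs to grow still has no room, which is why disabling NRS is useful in that situation.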

const PayloadLength foedus::storage::masstree::SplitBorder::piggyback_payload_count_

Definition at line 79 of file masstree_split_impl.hpp.

const KeyLength foedus::storage::masstree::SplitBorder::piggyback_remainder_length_

Definition at line 78 of file masstree_split_impl.hpp.

const bool foedus::storage::masstree::SplitBorder::piggyback_reserve_

An optimization to also make room for a record.

The piggyback parameters specify whether to do so (piggyback_reserve_), the remainder key length (piggyback_remainder_length_), the payload length (piggyback_payload_count_), and the key suffix (piggyback_suffix_). In this case, trigger_ is implicitly the slice of the key to reserve.

This optimization is best-effort. The caller must check afterwards whether the space was actually reserved. For example, a concurrent thread might have reserved space for the same key (possibly too small) right before the call.

Definition at line 77 of file masstree_split_impl.hpp.
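Because the piggyback reservation is best-effort, the caller needs a re-check along the following lines. The slot layout and `reservation_usable()` are hypothetical; the point is that a slot for the exact key (same slice and suffix) must exist and its payload capacity must be at least the requested payload length.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical record slot, not FOEDUS's actual slot format.
struct ToySlot {
  uint64_t slice;
  std::string suffix;
  std::size_t payload_capacity;
};

// Returns true only if a slot for exactly this key exists and can hold
// `payload_count` bytes. A slot reserved concurrently by another thread may
// exist but be too small, in which case the caller takes the normal path.
bool reservation_usable(const std::vector<ToySlot>& slots, uint64_t slice,
                        const std::string& suffix, std::size_t payload_count) {
  for (const ToySlot& s : slots) {
    if (s.slice == slice && s.suffix == suffix) {
      return s.payload_capacity >= payload_count;
    }
  }
  return false;  // nothing was reserved for this key
}
```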

const void* foedus::storage::masstree::SplitBorder::piggyback_suffix_

Definition at line 80 of file masstree_split_impl.hpp.

MasstreeBorderPage* const foedus::storage::masstree::SplitBorder::target_

The page to split.

Definition at line 58 of file masstree_split_impl.hpp.

Referenced by decide_strategy(), lock_existing_records(), migrate_records(), and run().

const KeySlice foedus::storage::masstree::SplitBorder::trigger_

The key that triggered this split.

It serves as a hint for NRS.

Definition at line 60 of file masstree_split_impl.hpp.

Referenced by decide_strategy().