|

libfoedus-core

FOEDUS Core Library

|

|

libfoedus-core

FOEDUS Core Library

|

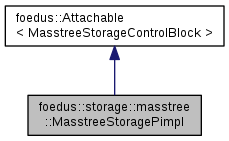

Pimpl object of MasstreeStorage. More...

Pimpl object of MasstreeStorage.

A private pimpl object for MasstreeStorage. Do not include this header from a client program unless you know what you are doing.

Definition at line 98 of file masstree_storage_pimpl.hpp.

#include <masstree_storage_pimpl.hpp>
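The pimpl arrangement described above can be sketched as follows. This is a generic illustration of the idiom, not FOEDUS code: `Widget`, `WidgetPimpl`, `value()`, and `add()` are hypothetical names, and the real MasstreeStoragePimpl additionally derives from Attachable< MasstreeStorageControlBlock > instead of owning heap state.

```cpp
#include <cassert>
#include <memory>

// Public-facing class: client programs include only this declaration.
class Widget {
 public:
  explicit Widget(int initial);
  ~Widget();
  int value() const;
  void add(int delta);

 private:
  class WidgetPimpl;                    // hypothetical private implementation type
  std::unique_ptr<WidgetPimpl> pimpl_;  // all state lives behind this pointer
};

// Private implementation: normally kept in a header that clients never include.
class Widget::WidgetPimpl {
 public:
  explicit WidgetPimpl(int initial) : value_(initial) {}
  int value_;
};

Widget::Widget(int initial) : pimpl_(new WidgetPimpl(initial)) {}
Widget::~Widget() = default;  // defined here, where WidgetPimpl is a complete type
int Widget::value() const { return pimpl_->value_; }
void Widget::add(int delta) { pimpl_->value_ += delta; }
```

The public class forwards every call to the private object, so the private header can change without recompiling client code. That is why the warning above discourages including the pimpl header directly.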

Public Member Functions | |

| MasstreeStoragePimpl () | |

| MasstreeStoragePimpl (MasstreeStorage *storage) | |

| ErrorStack | create (const MasstreeMetadata &metadata) |

| ErrorStack | load (const StorageControlBlock &snapshot_block) |

| ErrorStack | load_empty () |

| ErrorStack | drop () |

| Storage-wide operations, such as drop, create, etc. More... | |

| bool | exists () const |

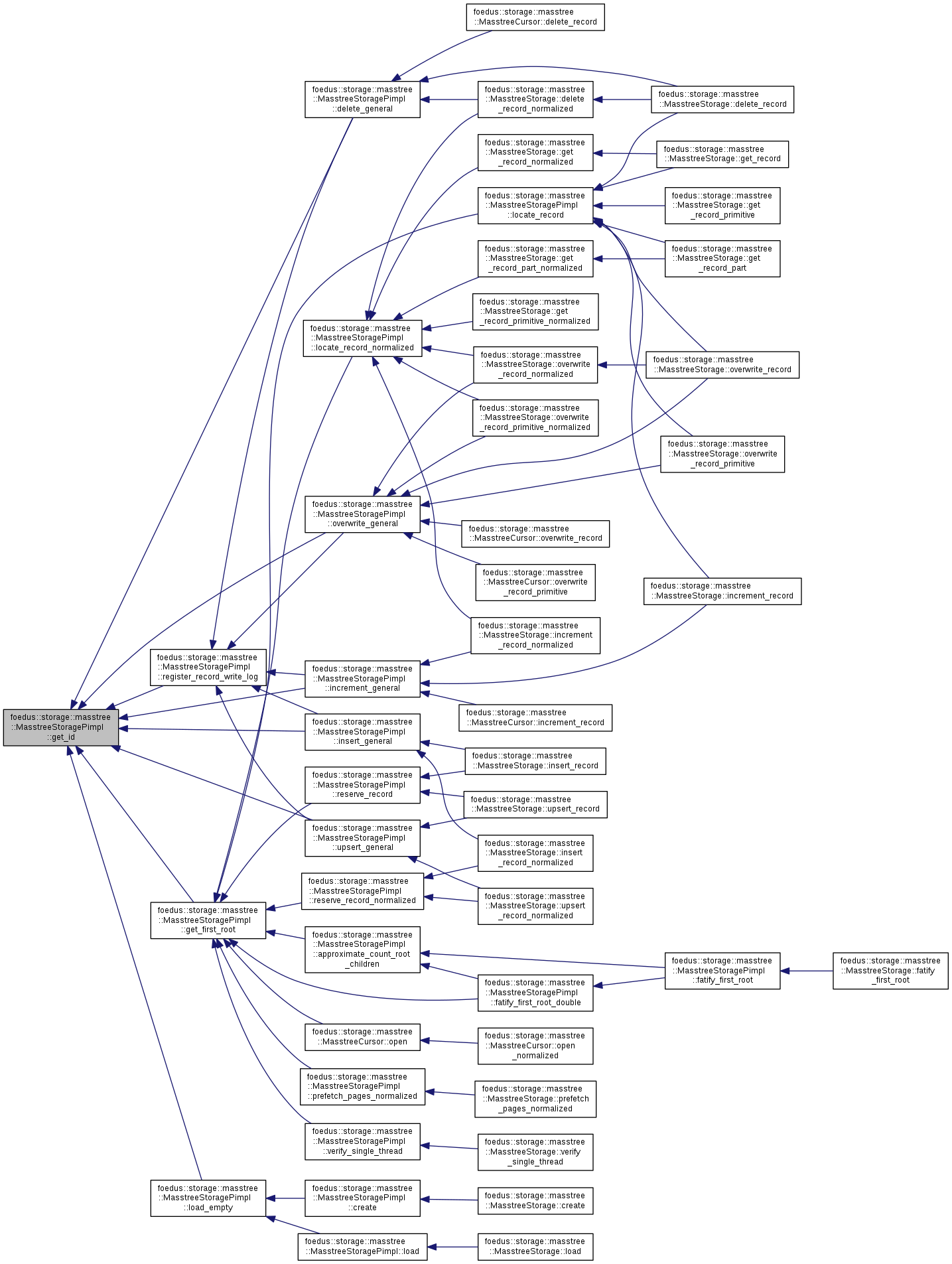

| StorageId | get_id () const |

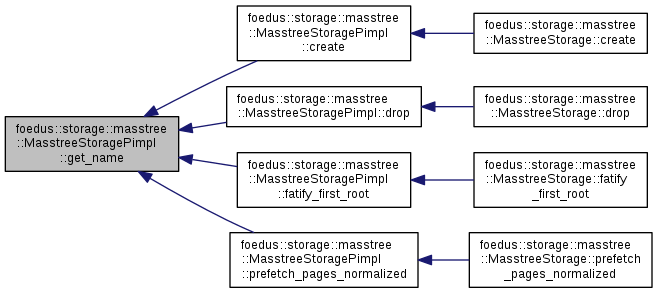

| const StorageName & | get_name () const |

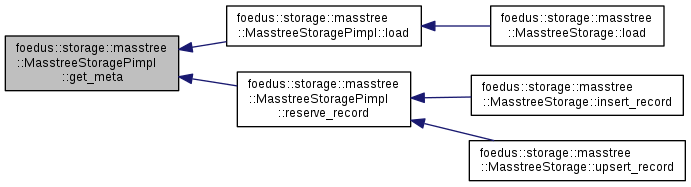

| const MasstreeMetadata & | get_meta () const |

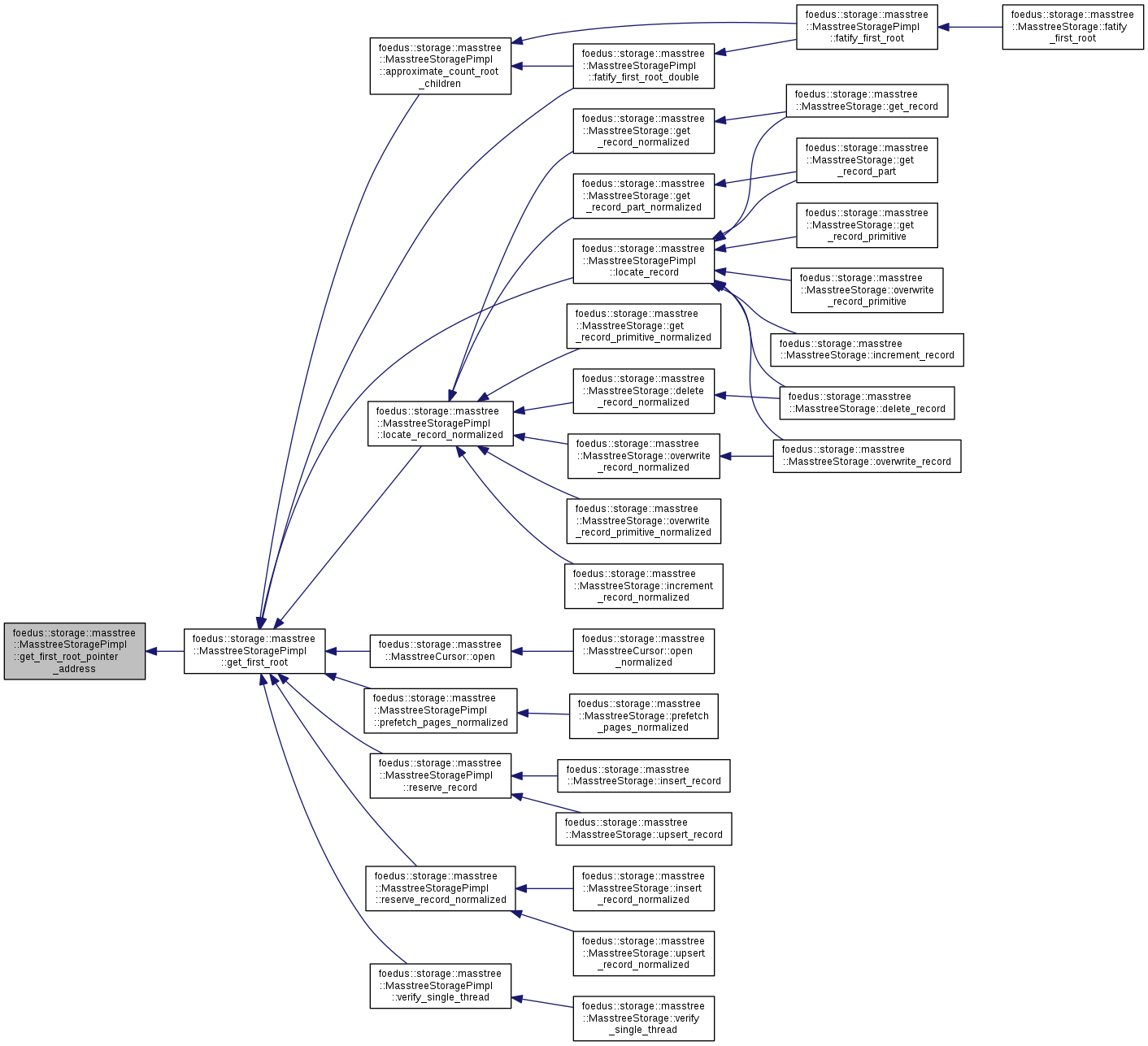

| const DualPagePointer & | get_first_root_pointer () const |

| DualPagePointer * | get_first_root_pointer_address () |

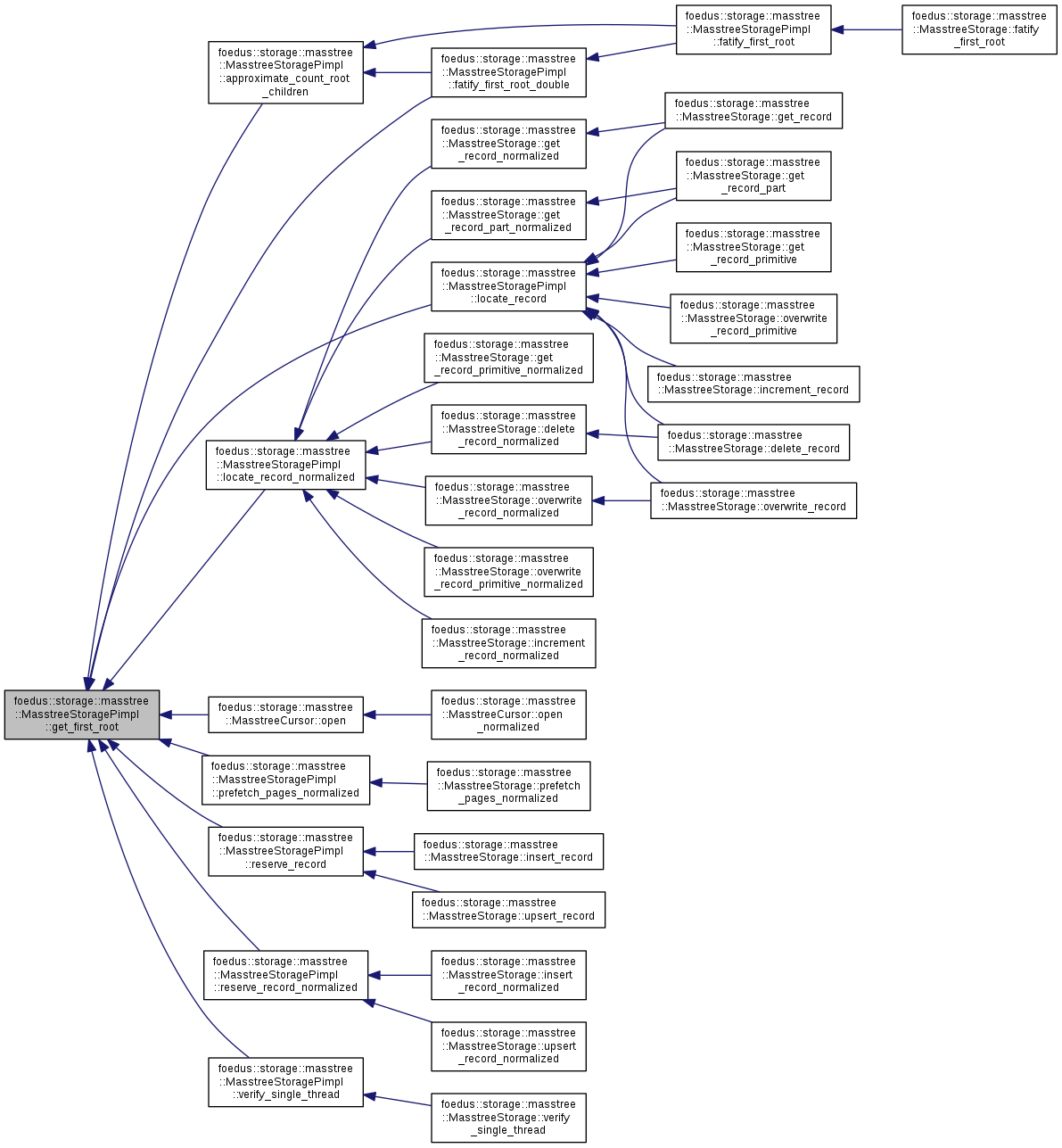

| ErrorCode | get_first_root (thread::Thread *context, bool for_write, MasstreeIntermediatePage **root) |

| Root-node related, such as a method to retrieve 1st-root, to grow, etc. More... | |

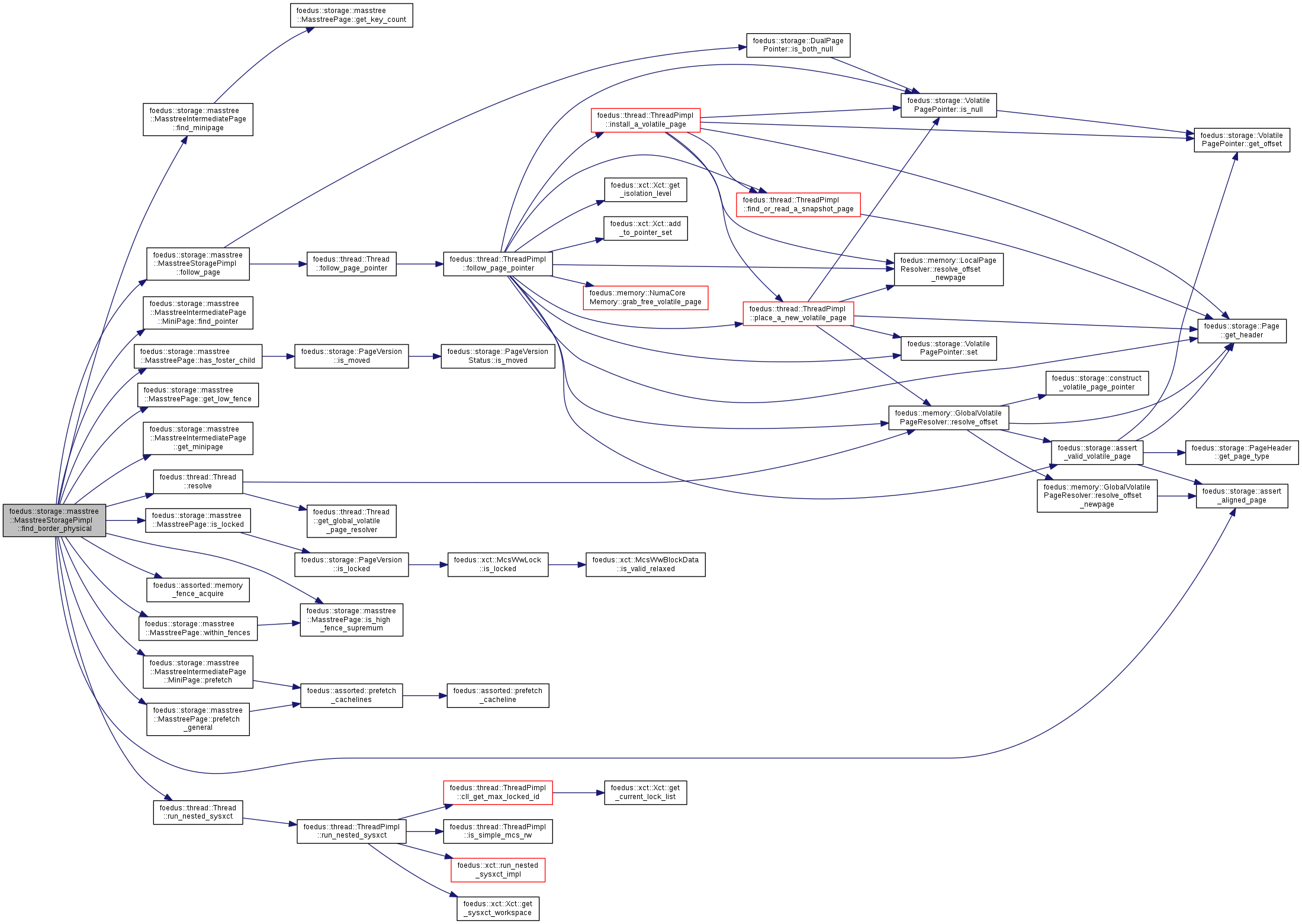

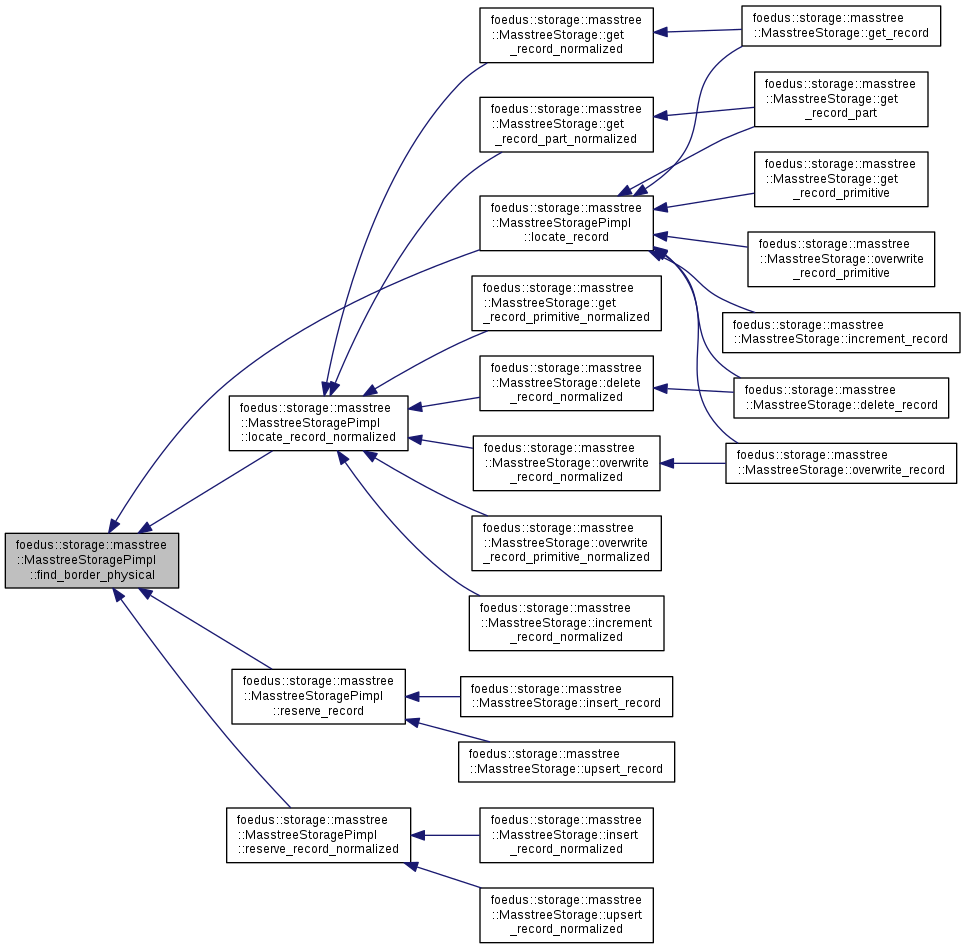

| ErrorCode | find_border_physical (thread::Thread *context, MasstreePage *layer_root, uint8_t current_layer, bool for_writes, KeySlice slice, MasstreeBorderPage **border) __attribute__((always_inline)) |

| Find a border node in the layer that corresponds to the given key slice. More... | |

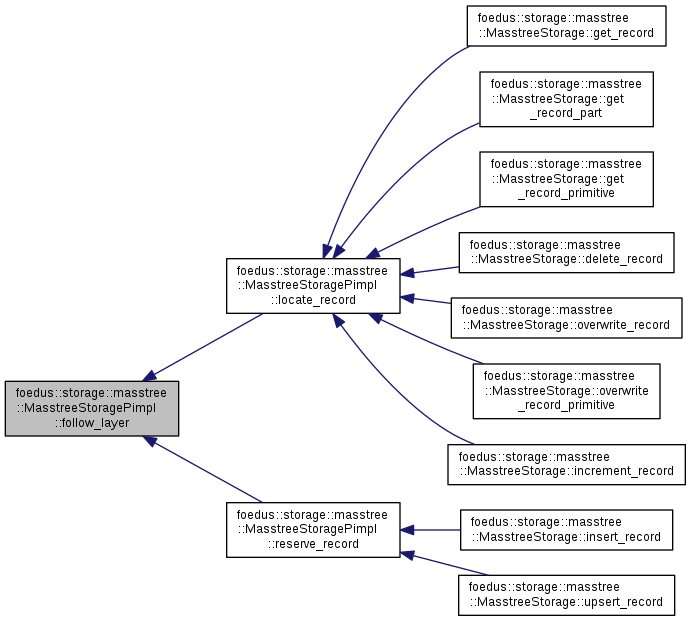

| ErrorCode | locate_record (thread::Thread *context, const void *key, KeyLength key_length, bool for_writes, RecordLocation *result) |

| Identifies page and record for the key. More... | |

| ErrorCode | locate_record_normalized (thread::Thread *context, KeySlice key, bool for_writes, RecordLocation *result) |

| Identifies page and record for the normalized key. More... | |

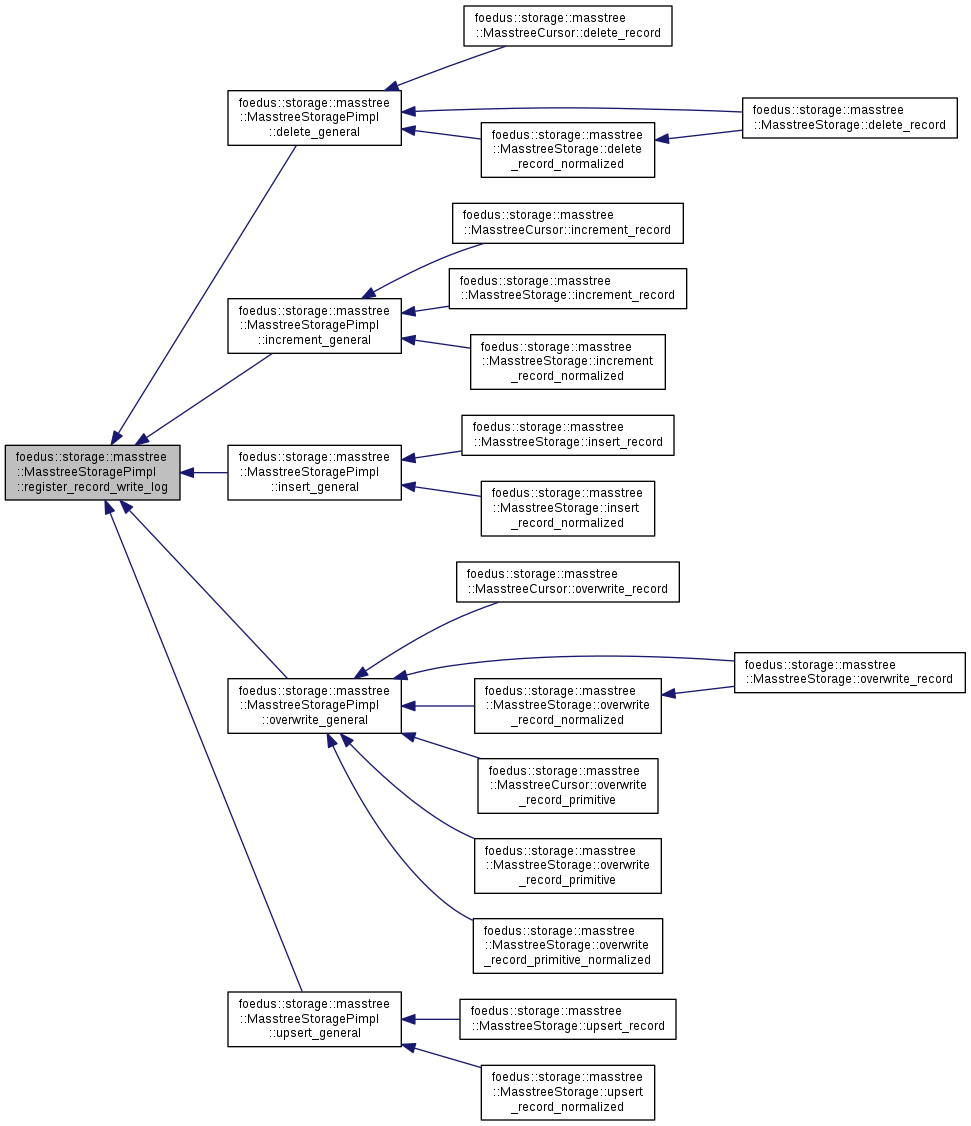

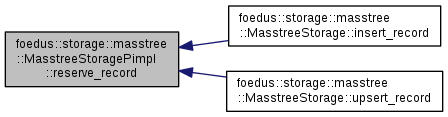

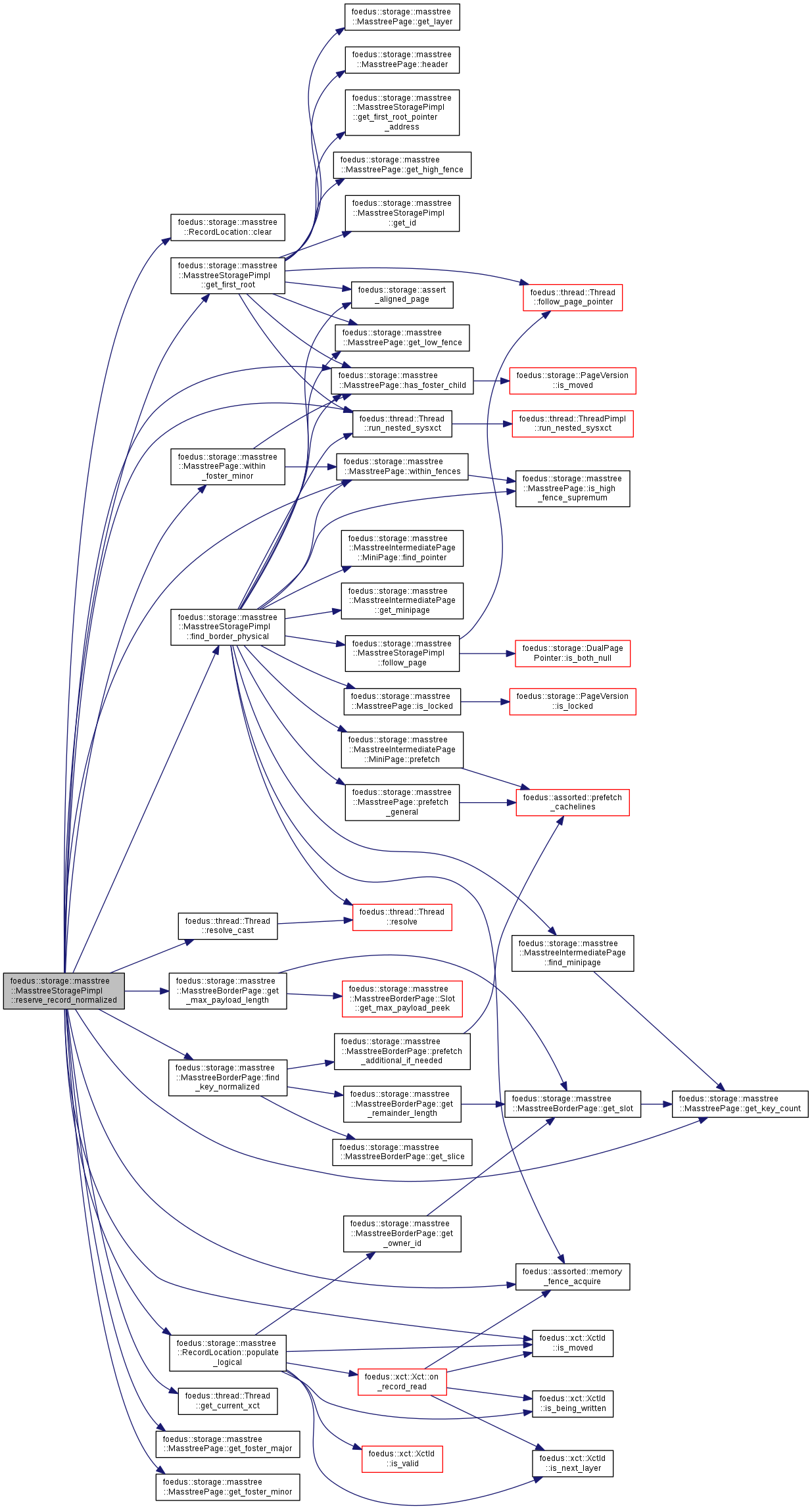

| ErrorCode | reserve_record (thread::Thread *context, const void *key, KeyLength key_length, PayloadLength payload_count, PayloadLength physical_payload_hint, RecordLocation *result) |

| Like locate_record(), this is also a logical operation. More... | |

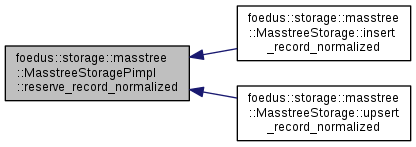

| ErrorCode | reserve_record_normalized (thread::Thread *context, KeySlice key, PayloadLength payload_count, PayloadLength physical_payload_hint, RecordLocation *result) |

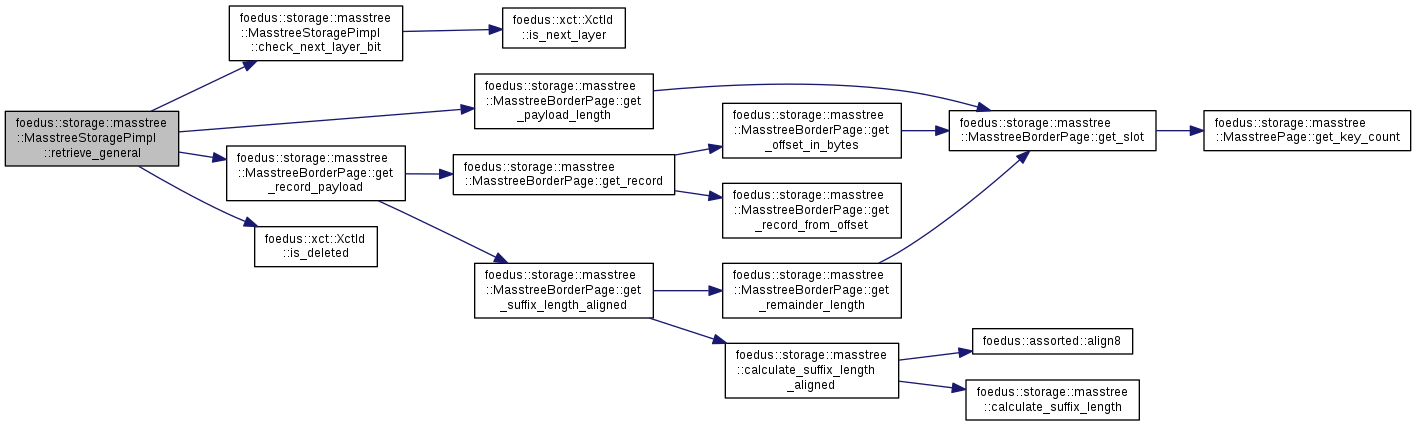

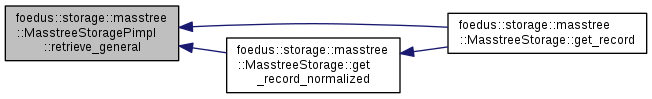

| ErrorCode | retrieve_general (thread::Thread *context, const RecordLocation &location, void *payload, PayloadLength *payload_capacity) |

| implementation of get_record family. More... | |

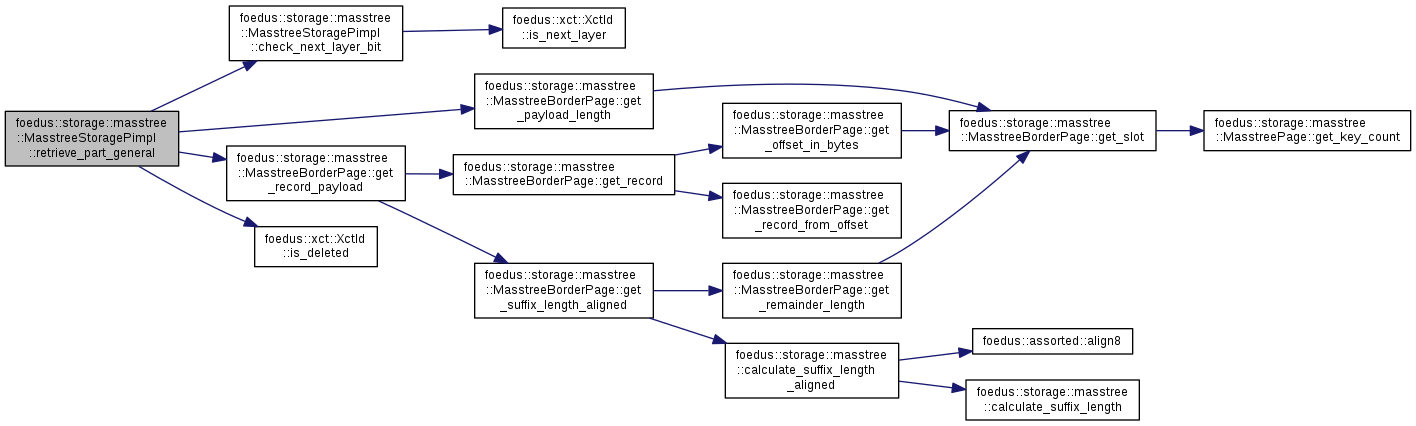

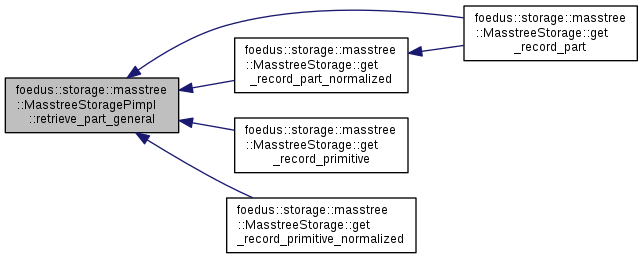

| ErrorCode | retrieve_part_general (thread::Thread *context, const RecordLocation &location, void *payload, PayloadLength payload_offset, PayloadLength payload_count) |

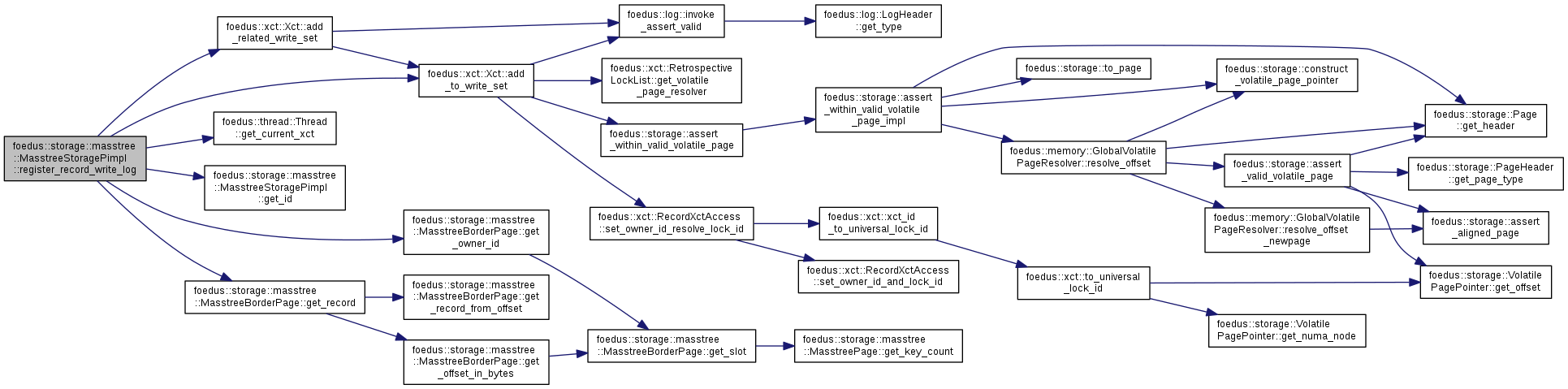

| ErrorCode | register_record_write_log (thread::Thread *context, const RecordLocation &location, log::RecordLogType *log_entry) |

| Used in the following methods. More... | |

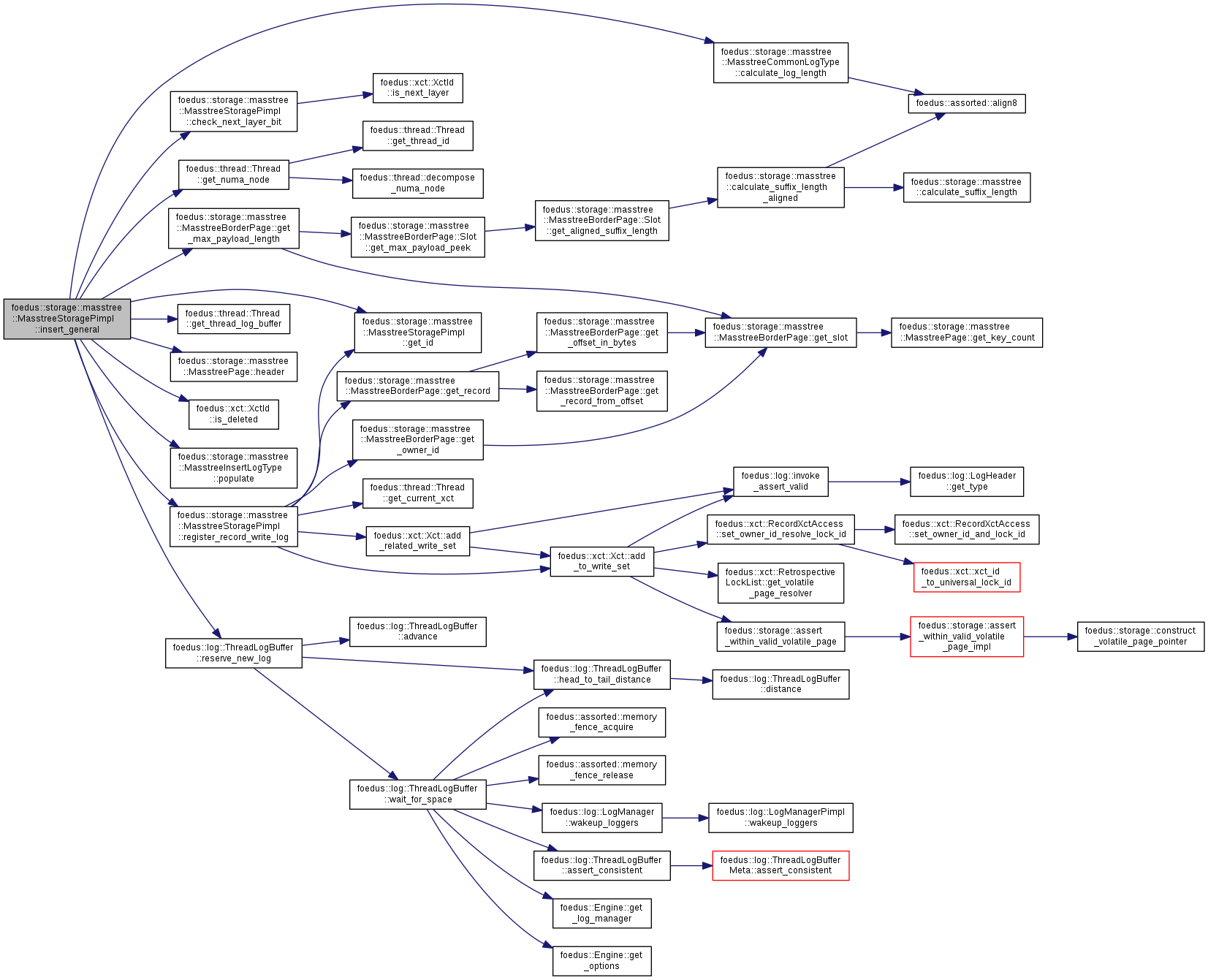

| ErrorCode | insert_general (thread::Thread *context, const RecordLocation &location, const void *be_key, KeyLength key_length, const void *payload, PayloadLength payload_count) |

| implementation of insert_record family. More... | |

| ErrorCode | delete_general (thread::Thread *context, const RecordLocation &location, const void *be_key, KeyLength key_length) |

| implementation of delete_record family. More... | |

| ErrorCode | upsert_general (thread::Thread *context, const RecordLocation &location, const void *be_key, KeyLength key_length, const void *payload, PayloadLength payload_count) |

| implementation of upsert_record family. More... | |

| ErrorCode | overwrite_general (thread::Thread *context, const RecordLocation &location, const void *be_key, KeyLength key_length, const void *payload, PayloadLength payload_offset, PayloadLength payload_count) |

| implementation of overwrite_record family. More... | |

| template<typename PAYLOAD > | |

| ErrorCode | increment_general (thread::Thread *context, const RecordLocation &location, const void *be_key, KeyLength key_length, PAYLOAD *value, PayloadLength payload_offset) |

| implementation of increment_record family. More... | |

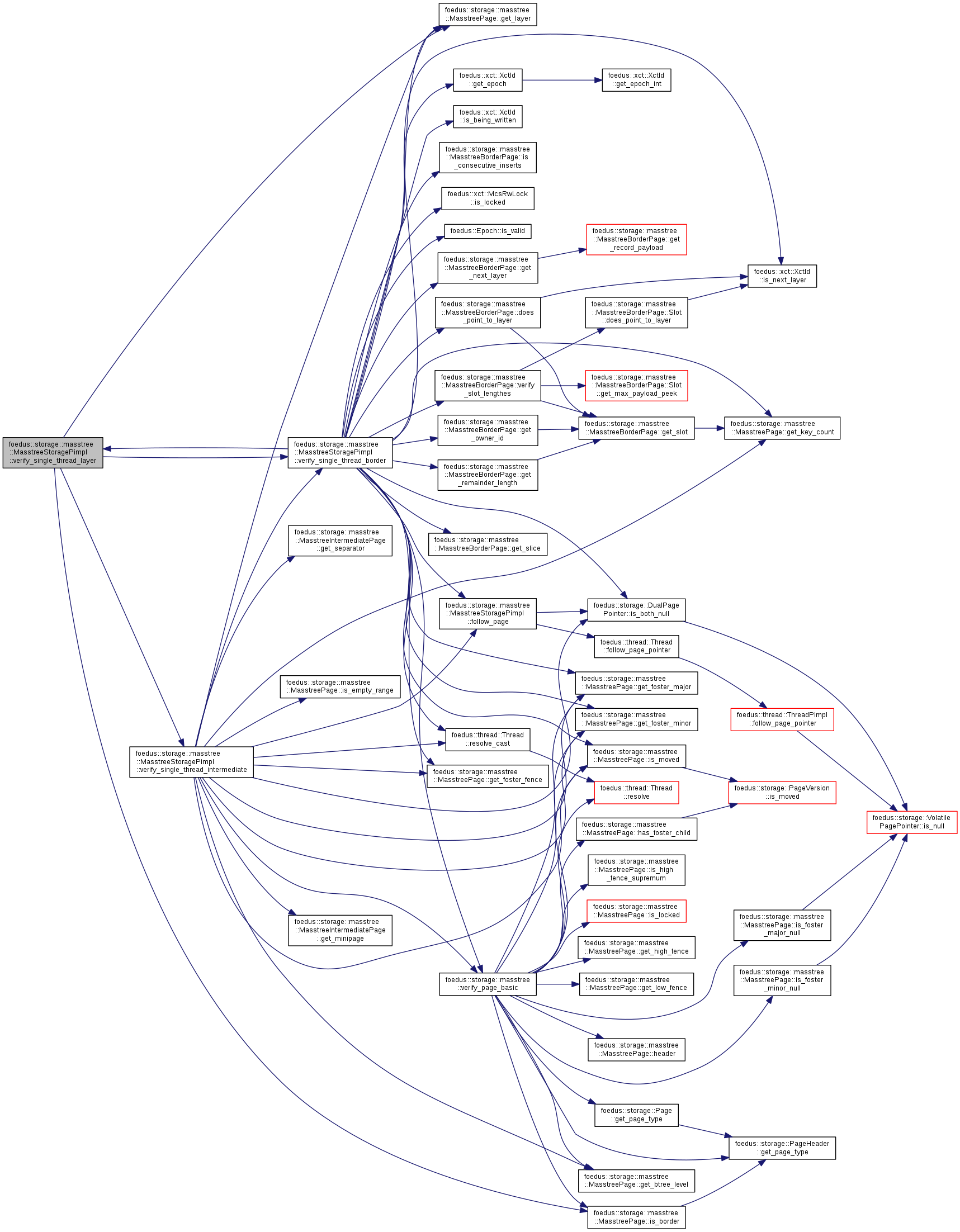

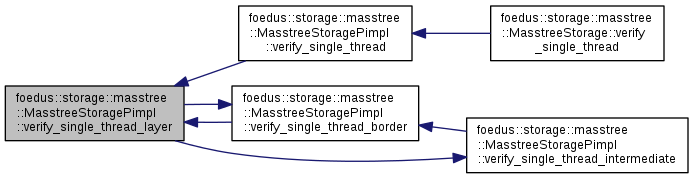

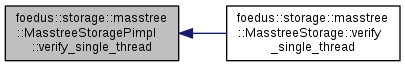

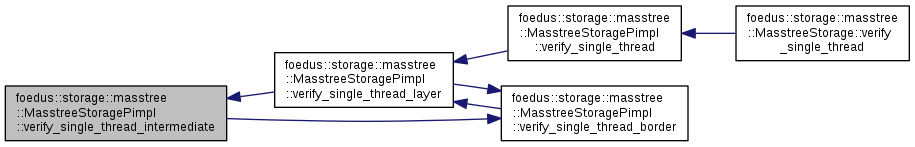

| ErrorStack | verify_single_thread (thread::Thread *context) |

| These are defined in masstree_storage_verify.cpp. More... | |

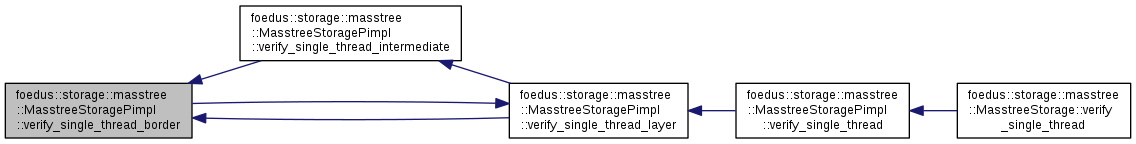

| ErrorStack | verify_single_thread_layer (thread::Thread *context, uint8_t layer, MasstreePage *layer_root) |

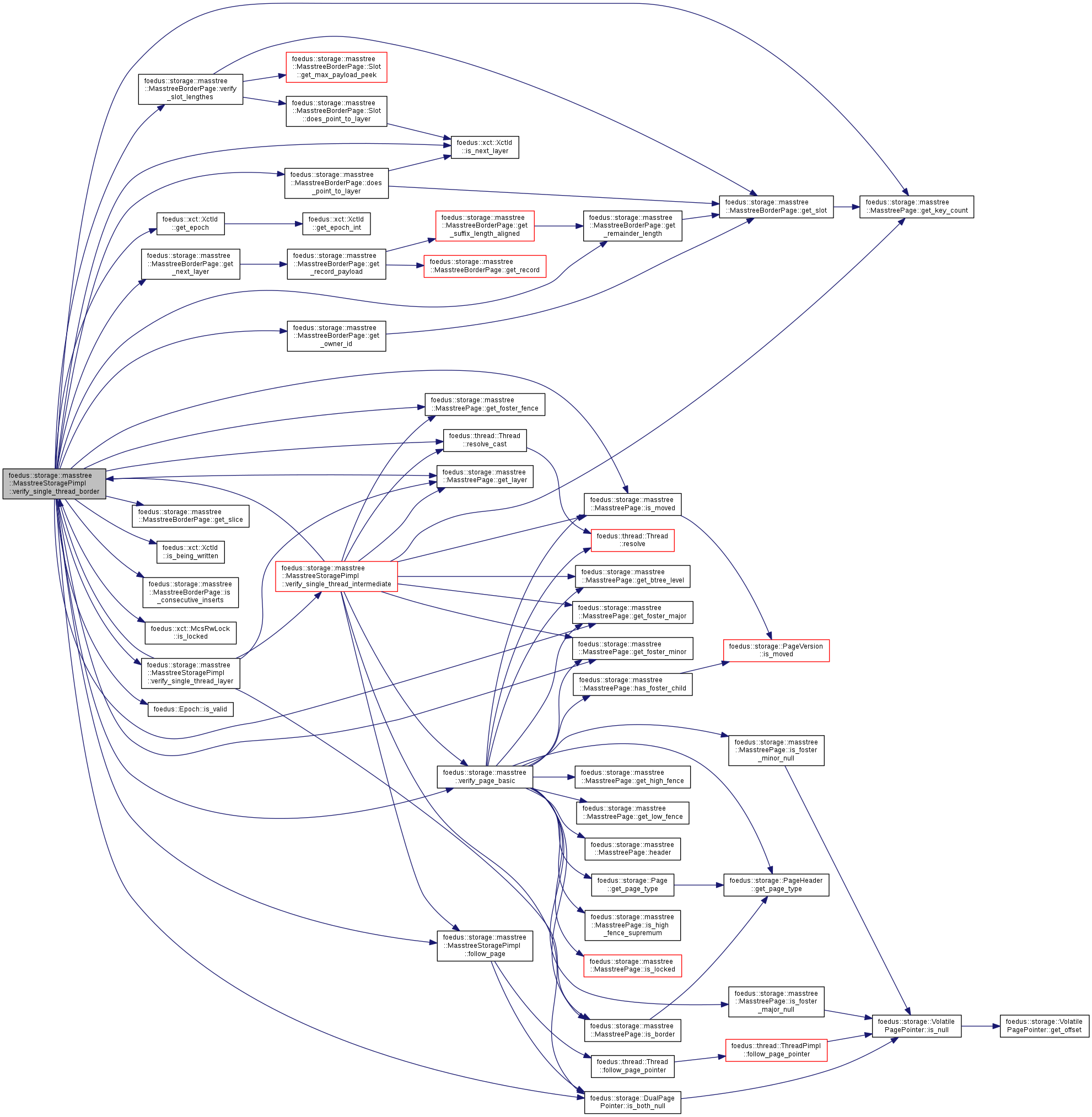

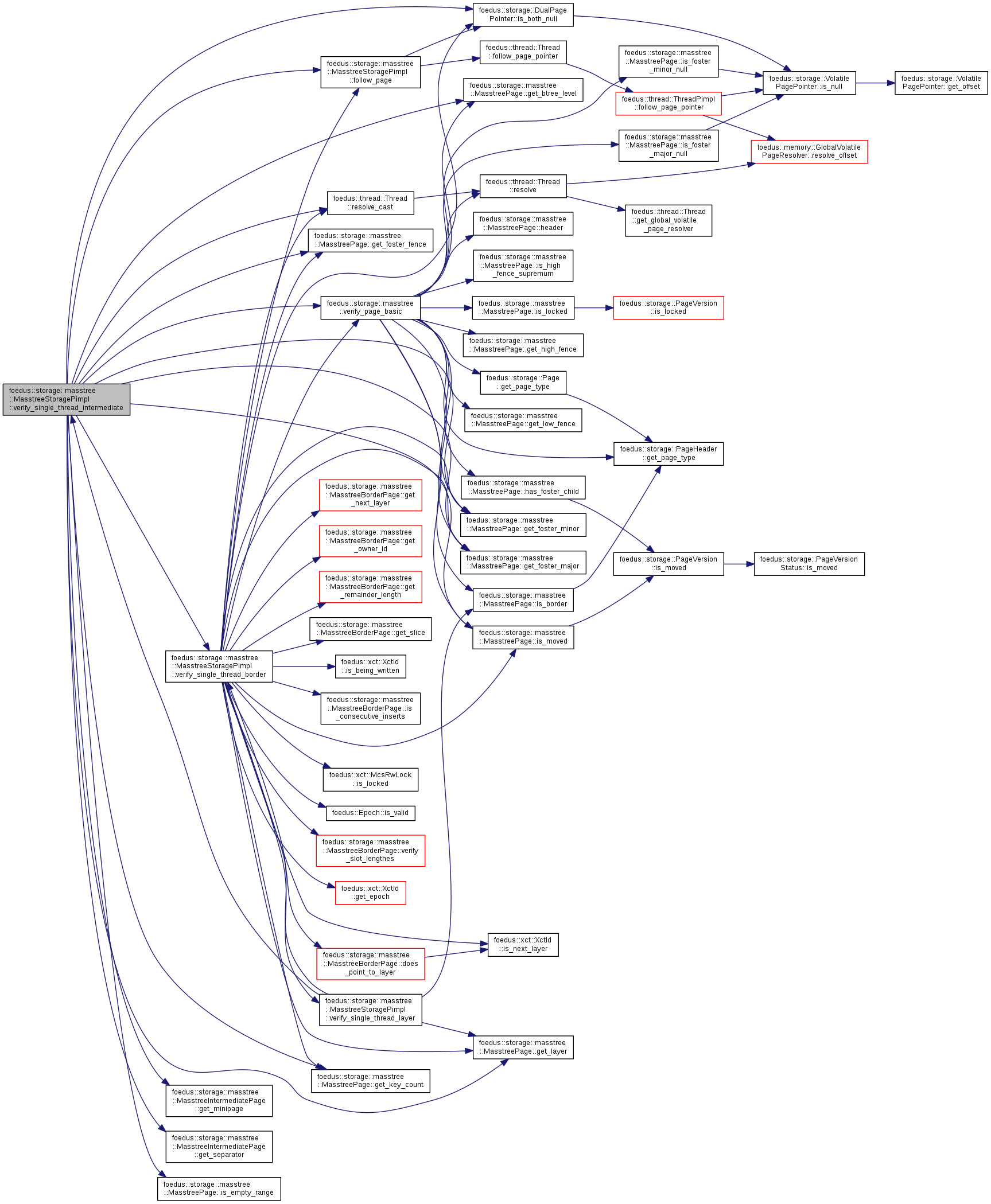

| ErrorStack | verify_single_thread_intermediate (thread::Thread *context, KeySlice low_fence, HighFence high_fence, MasstreeIntermediatePage *page) |

| ErrorStack | verify_single_thread_border (thread::Thread *context, KeySlice low_fence, HighFence high_fence, MasstreeBorderPage *page) |

| ErrorStack | debugout_single_thread (Engine *engine, bool volatile_only, uint32_t max_pages) |

| These are defined in masstree_storage_debug.cpp. More... | |

| ErrorStack | debugout_single_thread_recurse (Engine *engine, cache::SnapshotFileSet *fileset, MasstreePage *parent, bool follow_volatile, uint32_t *remaining_pages) |

| ErrorStack | debugout_single_thread_follow (Engine *engine, cache::SnapshotFileSet *fileset, const DualPagePointer &pointer, bool follow_volatile, uint32_t *remaining_pages) |

| ErrorStack | hcc_reset_all_temperature_stat () |

| For stupid reasons (I'm lazy!) these are defined in _debug.cpp. More... | |

| ErrorStack | hcc_reset_all_temperature_stat_recurse (MasstreePage *parent) |

| ErrorStack | hcc_reset_all_temperature_stat_follow (VolatilePagePointer page_id) |

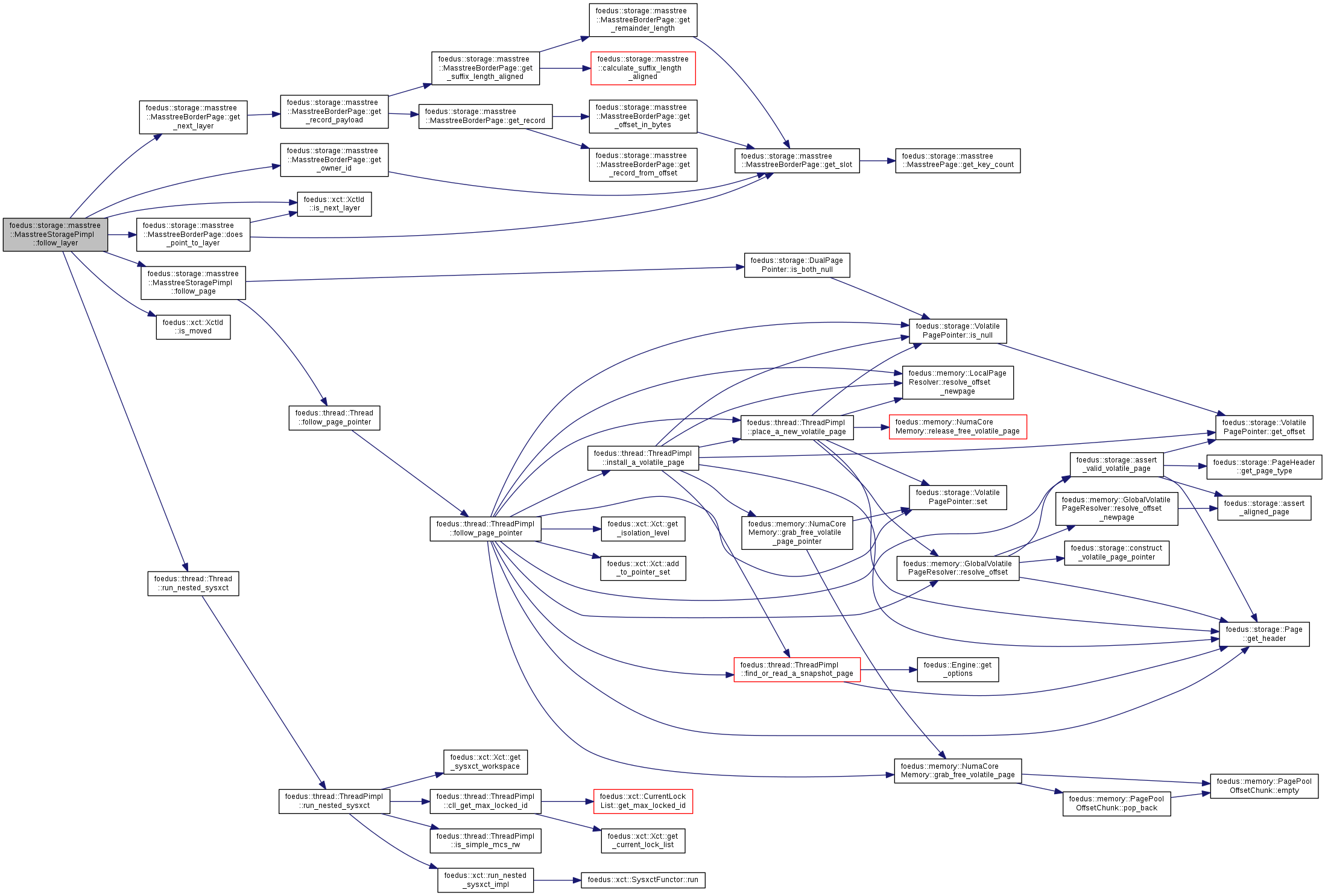

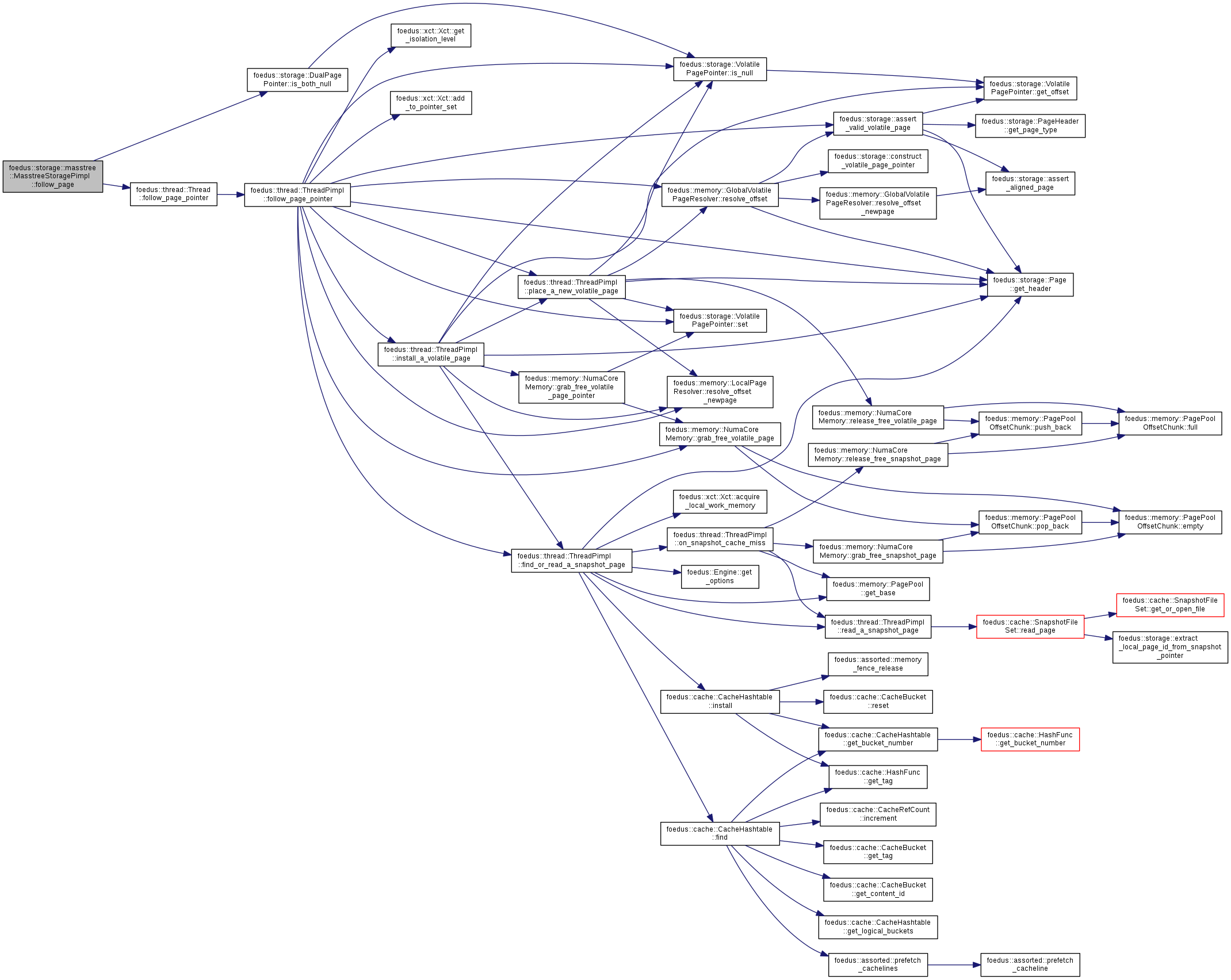

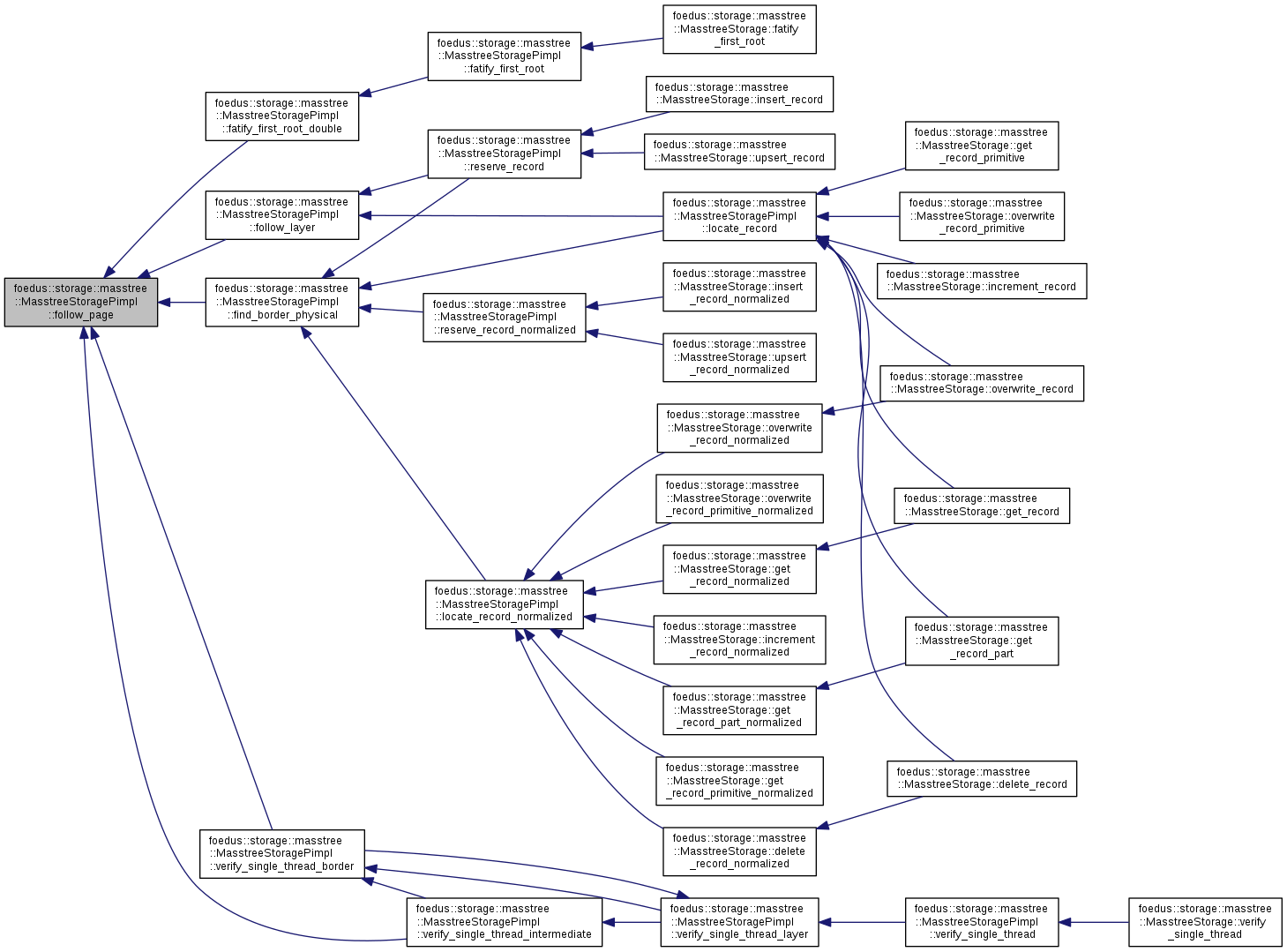

| ErrorCode | follow_page (thread::Thread *context, bool for_writes, storage::DualPagePointer *pointer, MasstreePage **page) |

| Thread::follow_page_pointer() for masstree. More... | |

| ErrorCode | follow_layer (thread::Thread *context, bool for_writes, MasstreeBorderPage *parent, SlotIndex record_index, MasstreePage **page) __attribute__((always_inline)) |

| Follows to next layer's root page. More... | |

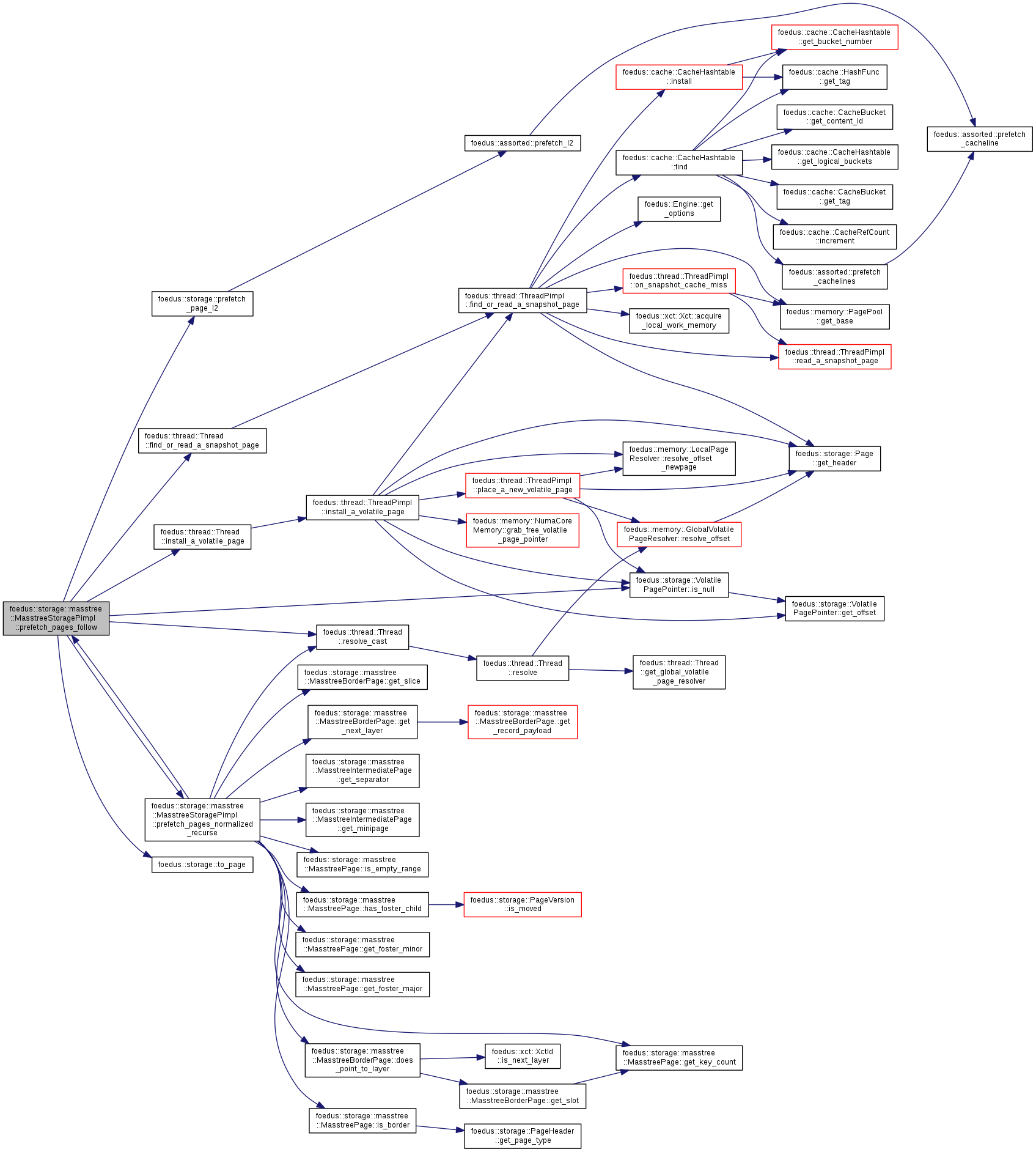

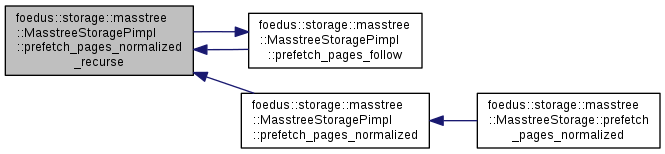

| ErrorCode | prefetch_pages_normalized (thread::Thread *context, bool install_volatile, bool cache_snapshot, KeySlice from, KeySlice to) |

| defined in masstree_storage_prefetch.cpp More... | |

| ErrorCode | prefetch_pages_normalized_recurse (thread::Thread *context, bool install_volatile, bool cache_snapshot, KeySlice from, KeySlice to, MasstreePage *page) |

| ErrorCode | prefetch_pages_follow (thread::Thread *context, DualPagePointer *pointer, bool vol_on, bool snp_on, KeySlice from, KeySlice to) |

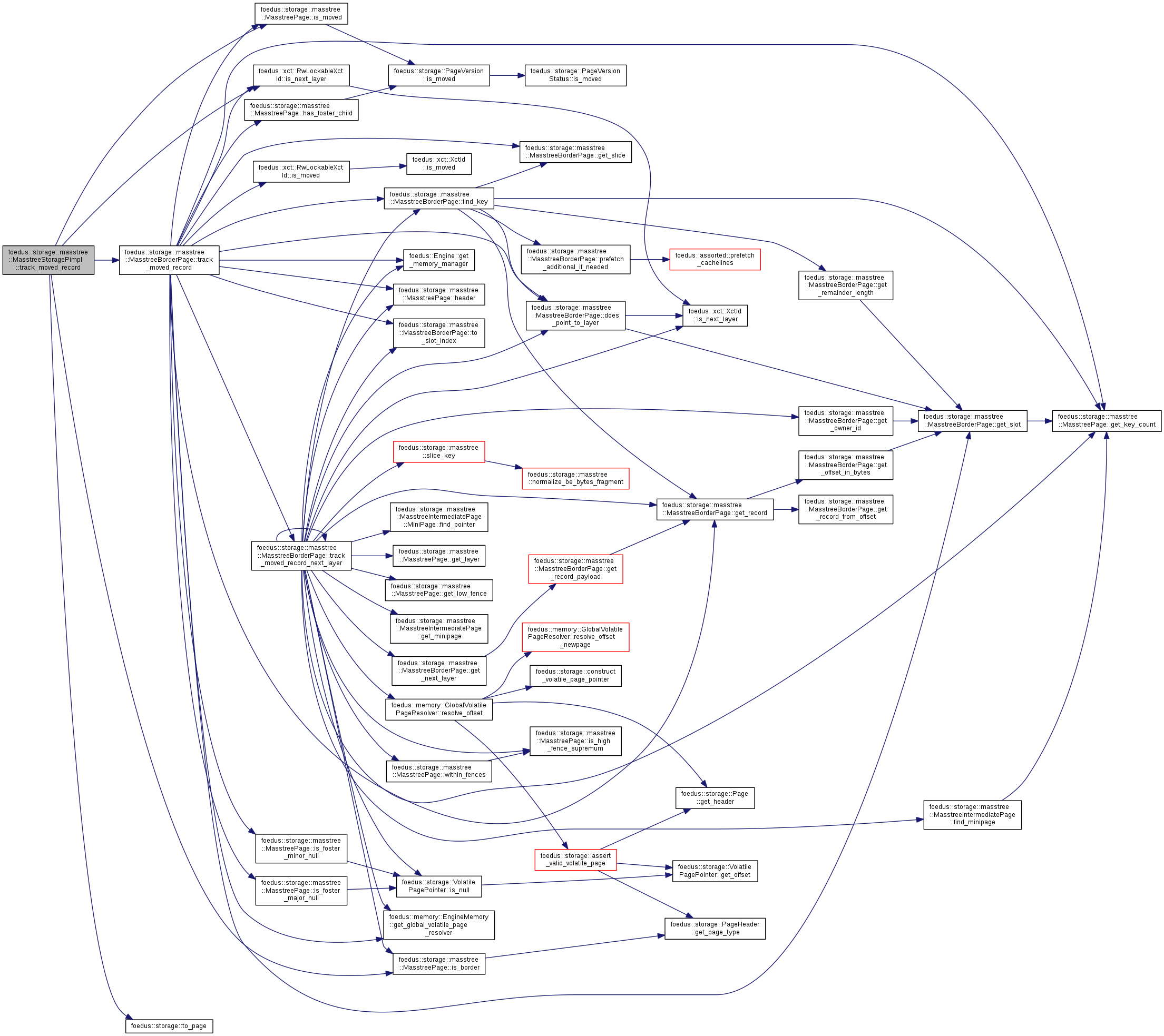

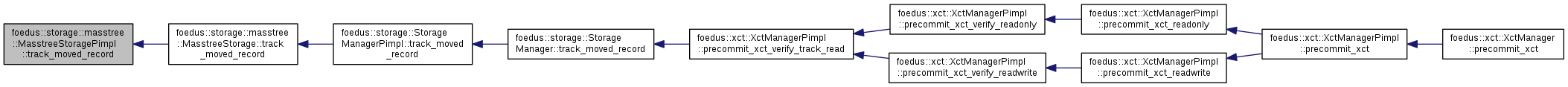

| xct::TrackMovedRecordResult | track_moved_record (xct::RwLockableXctId *old_address, xct::WriteXctAccess *write_set) __attribute__((always_inline)) |

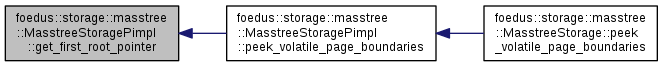

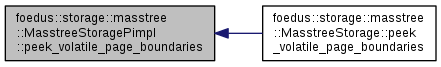

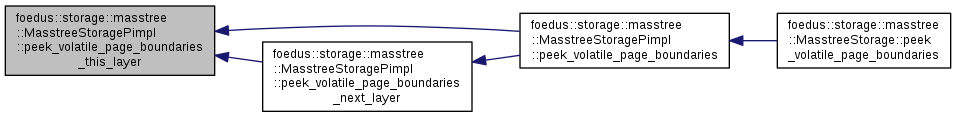

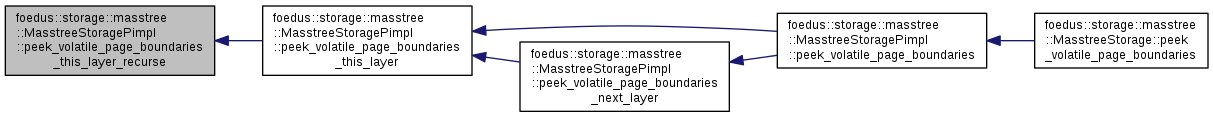

| ErrorCode | peek_volatile_page_boundaries (Engine *engine, const MasstreeStorage::PeekBoundariesArguments &args) |

| Defined in masstree_storage_peek.cpp. More... | |

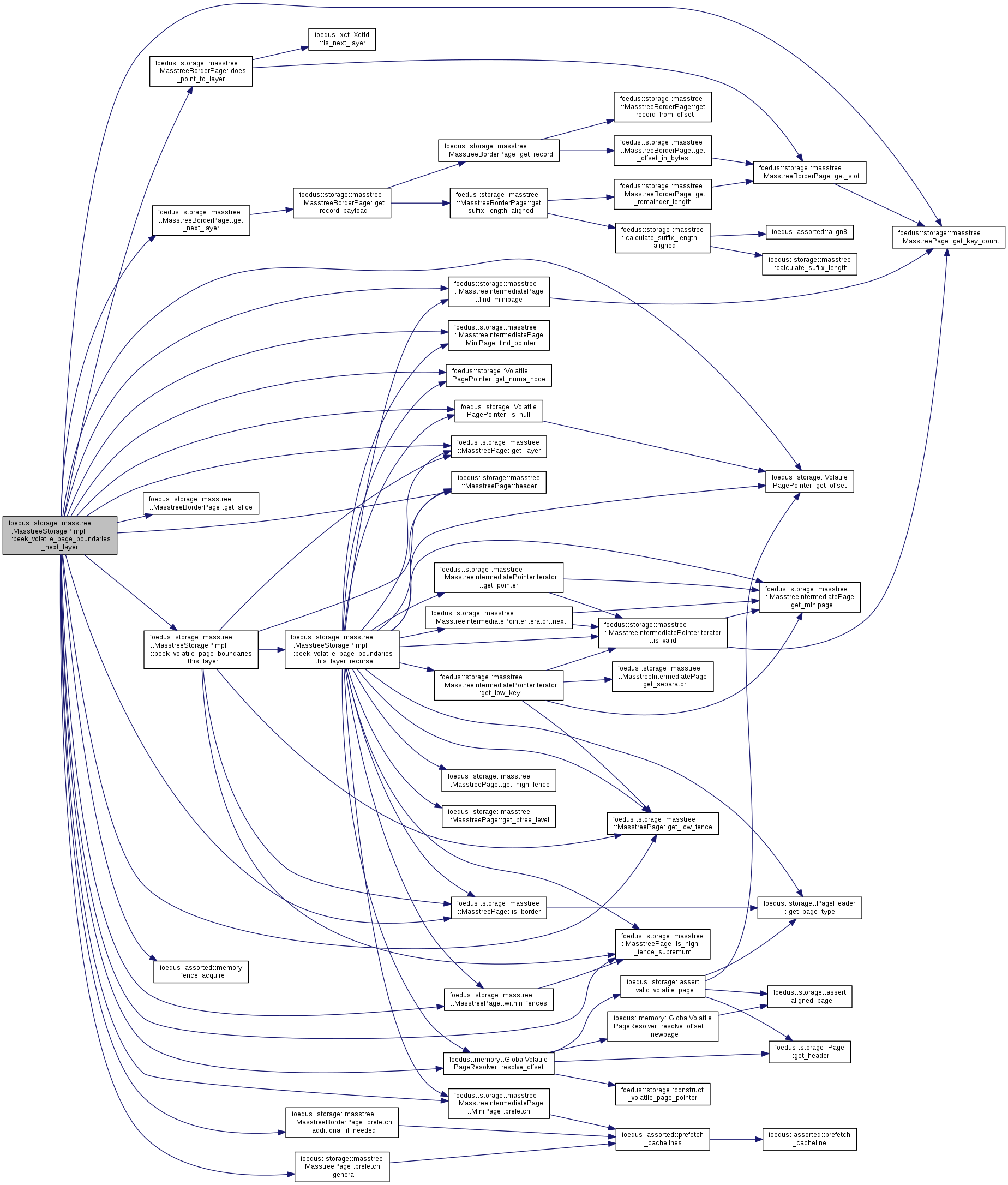

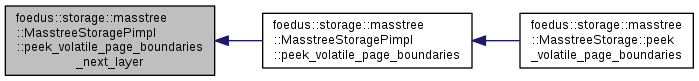

| ErrorCode | peek_volatile_page_boundaries_next_layer (const MasstreePage *layer_root, const memory::GlobalVolatilePageResolver &resolver, const MasstreeStorage::PeekBoundariesArguments &args) |

| ErrorCode | peek_volatile_page_boundaries_this_layer (const MasstreePage *layer_root, const memory::GlobalVolatilePageResolver &resolver, const MasstreeStorage::PeekBoundariesArguments &args) |

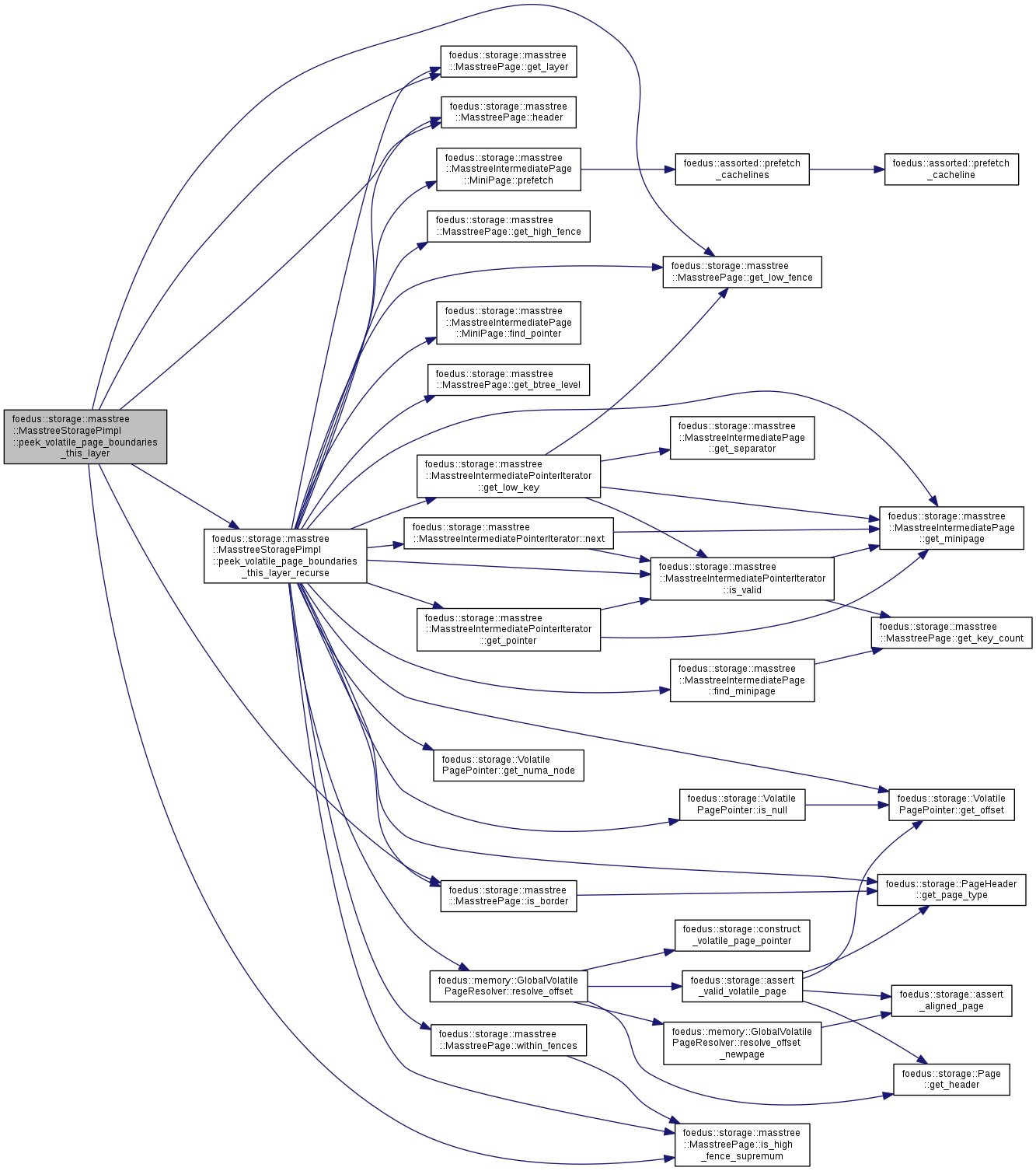

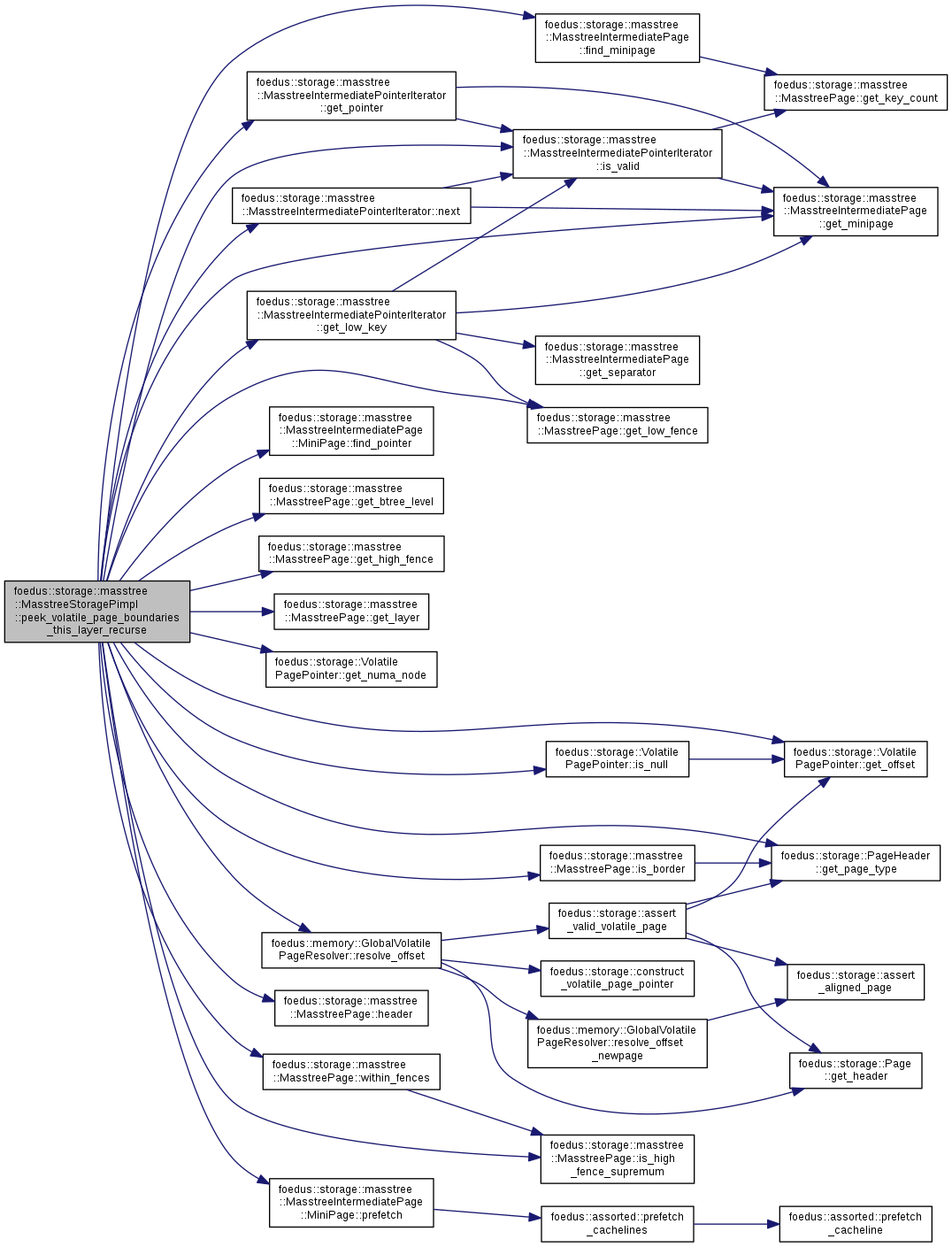

| ErrorCode | peek_volatile_page_boundaries_this_layer_recurse (const MasstreeIntermediatePage *cur, const memory::GlobalVolatilePageResolver &resolver, const MasstreeStorage::PeekBoundariesArguments &args) |

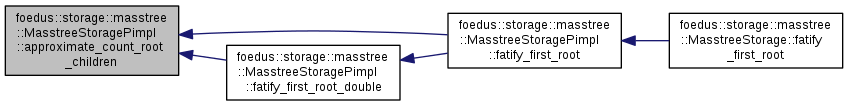

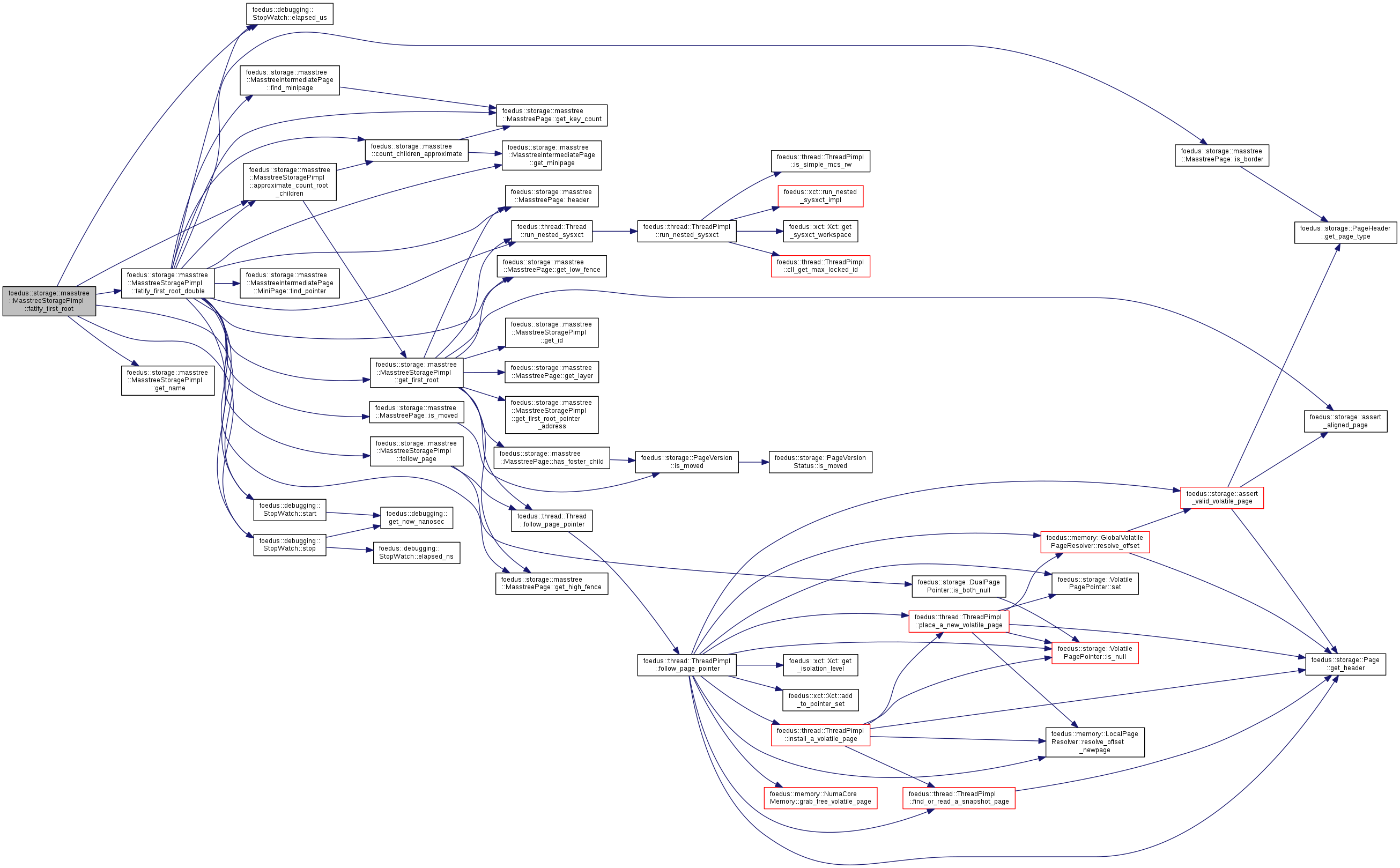

| ErrorStack | fatify_first_root (thread::Thread *context, uint32_t desired_count, bool disable_no_record_split) |

| Defined in masstree_storage_fatify.cpp. More... | |

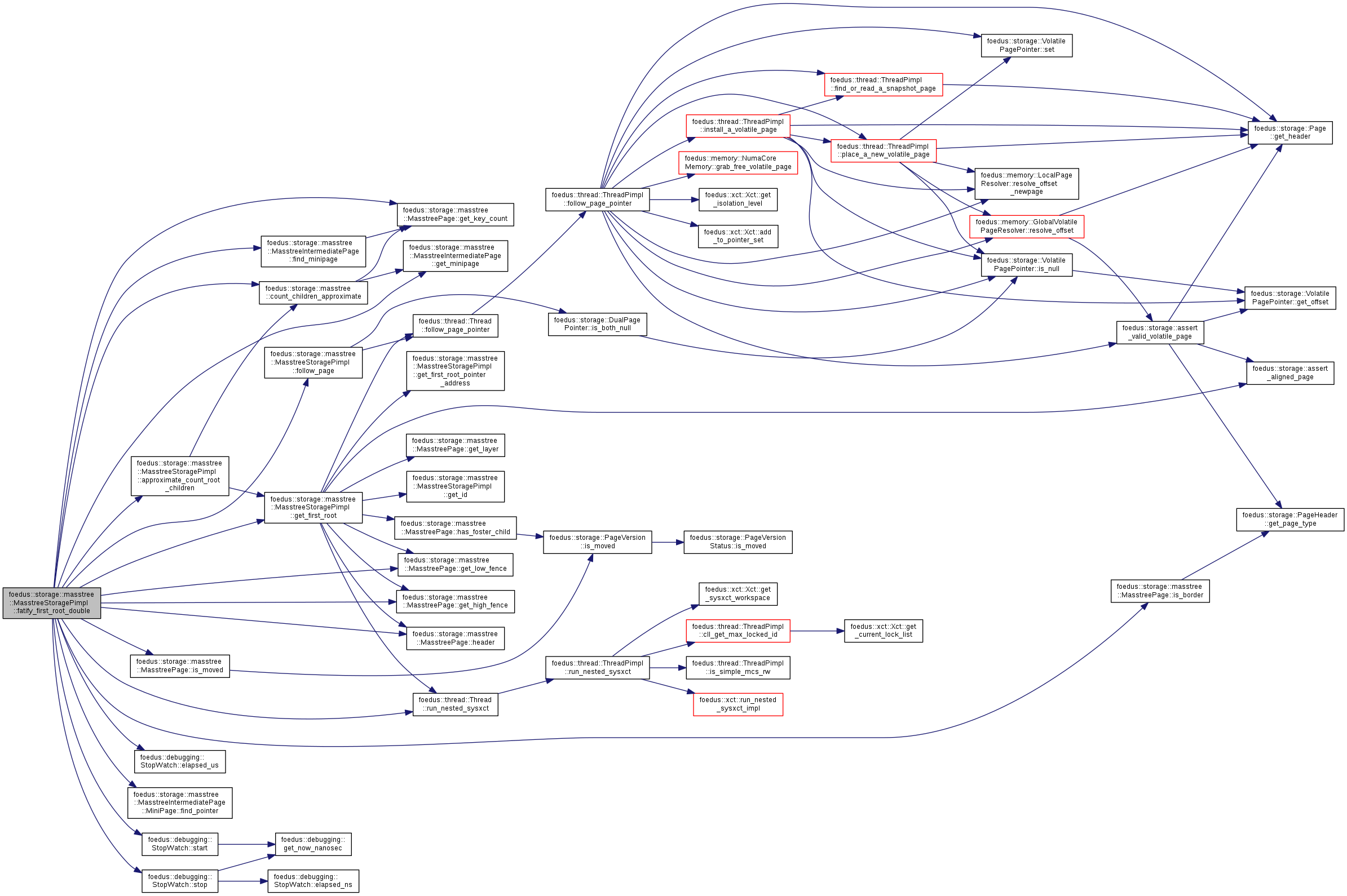

| ErrorStack | fatify_first_root_double (thread::Thread *context, bool disable_no_record_split) |

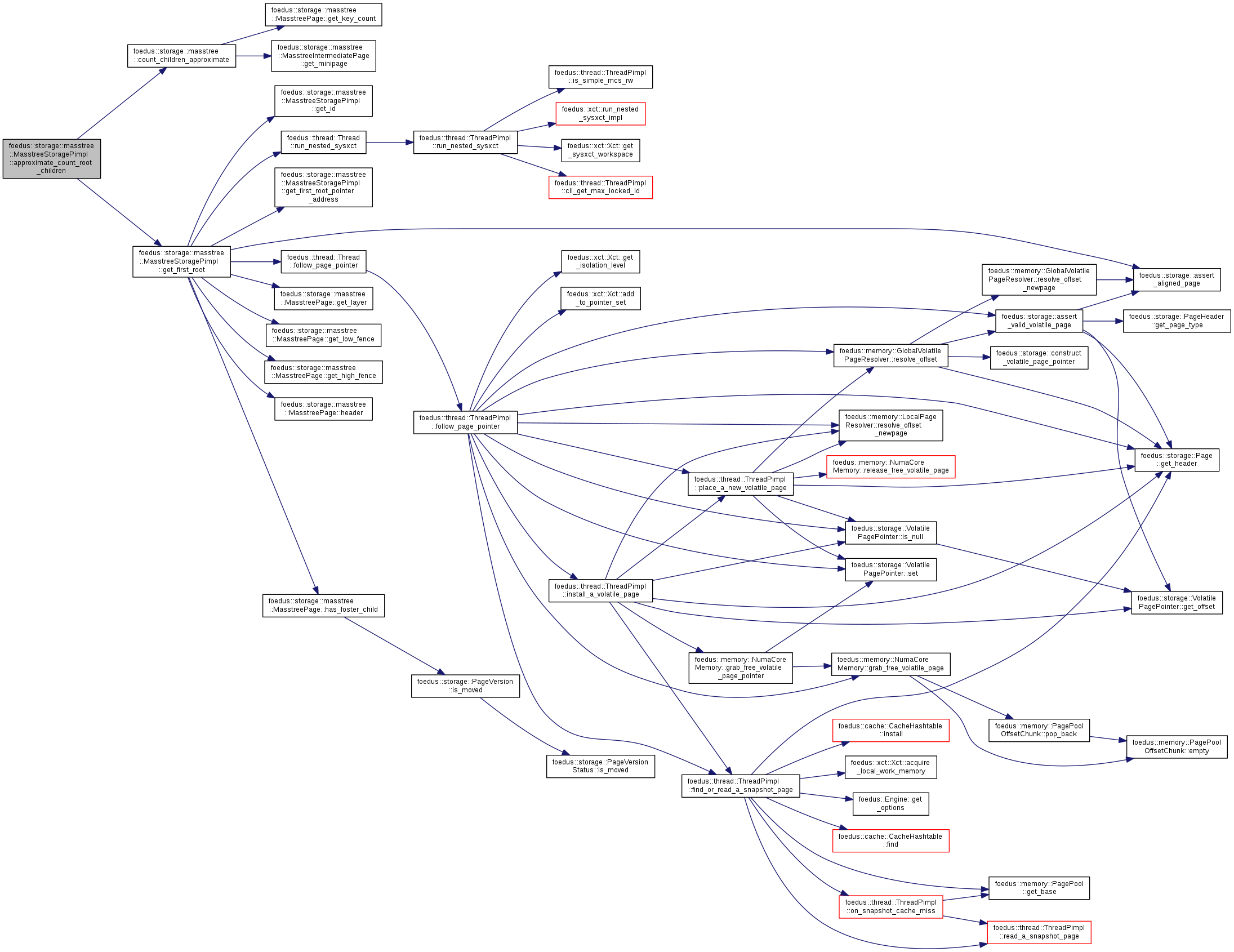

| ErrorCode | approximate_count_root_children (thread::Thread *context, uint32_t *out) |

| Returns the count of direct children in the first-root node. More... | |

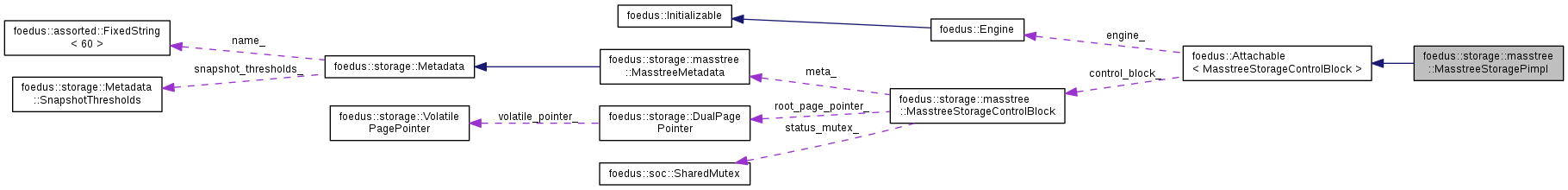

Public Member Functions inherited from foedus::Attachable< MasstreeStorageControlBlock > Public Member Functions inherited from foedus::Attachable< MasstreeStorageControlBlock > | |

| Attachable () | |

| Attachable (Engine *engine) | |

| Attachable (Engine *engine, MasstreeStorageControlBlock *control_block) | |

| Attachable (MasstreeStorageControlBlock *control_block) | |

| Attachable (const Attachable &other) | |

| virtual | ~Attachable () |

| Attachable & | operator= (const Attachable &other) |

| virtual void | attach (MasstreeStorageControlBlock *control_block) |

| Attaches to the given shared memory. More... | |

| bool | is_attached () const |

| Returns whether the object has been already attached to some shared memory. More... | |

| MasstreeStorageControlBlock * | get_control_block () const |

| Engine * | get_engine () const |

| void | set_engine (Engine *engine) |

Static Public Member Functions | |

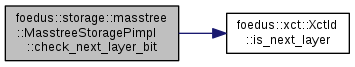

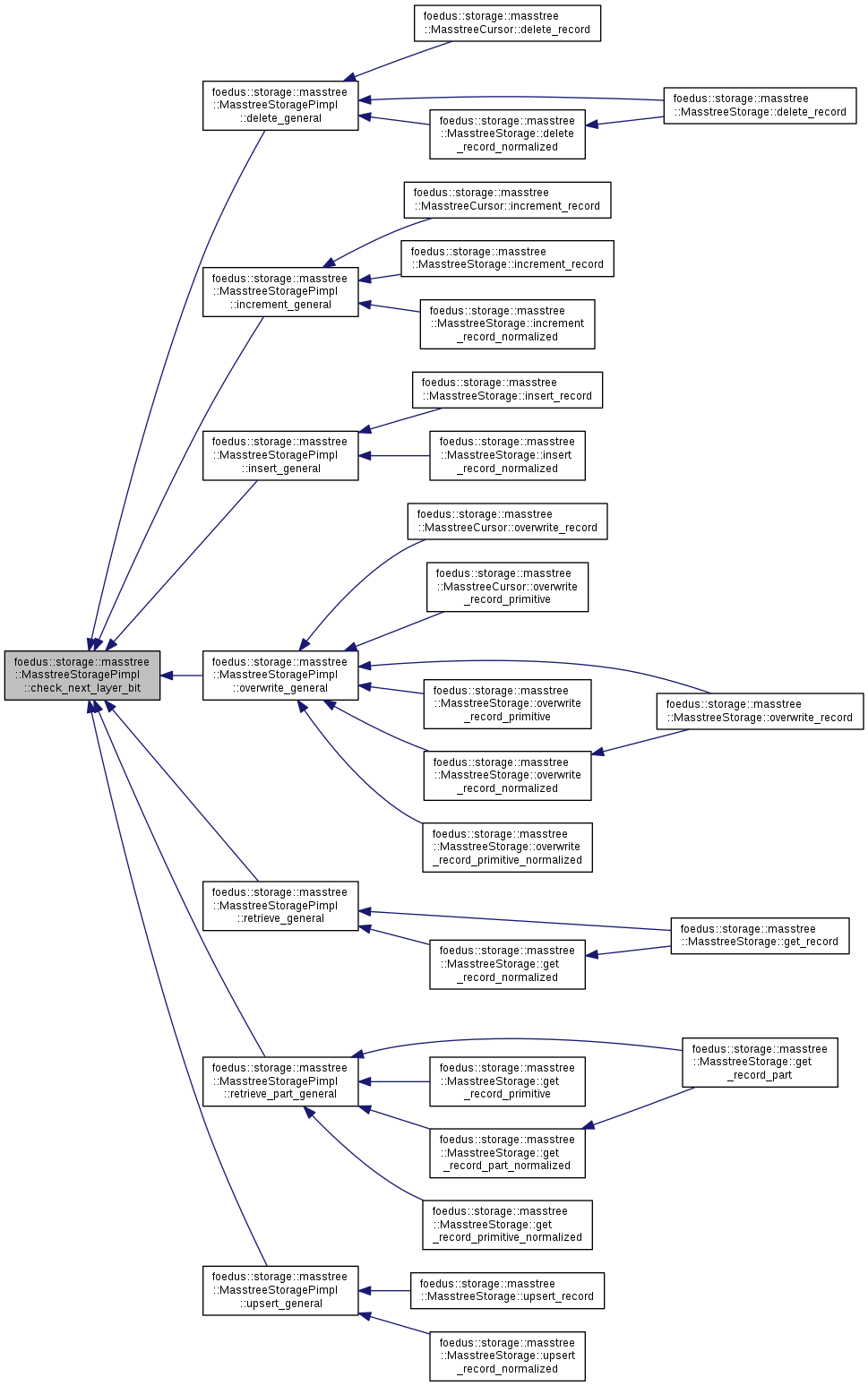

| static ErrorCode | check_next_layer_bit (xct::XctId observed) __attribute__((always_inline)) |

Additional Inherited Members | |

Protected Attributes inherited from foedus::Attachable< MasstreeStorageControlBlock > Protected Attributes inherited from foedus::Attachable< MasstreeStorageControlBlock > | |

| Engine * | engine_ |

| Most attachable object stores an engine pointer (local engine), so we define it here. More... | |

| MasstreeStorageControlBlock * | control_block_ |

| The shared data on shared memory that has been initialized in some SOC or master engine. More... | |

| foedus::storage::masstree::MasstreeStoragePimpl::MasstreeStoragePimpl | ( | ) | inline |

Definition at line 100 of file masstree_storage_pimpl.hpp.

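| foedus::storage::masstree::MasstreeStoragePimpl::MasstreeStoragePimpl | ( | MasstreeStorage * | storage | ) | inline explicit |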

Definition at line 101 of file masstree_storage_pimpl.hpp.

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::approximate_count_root_children | ( | thread::Thread * | context, |

| uint32_t * | out | ||

| ) |

Returns the count of direct children in the first-root node.

This method reads the count without taking a lock, so the result might not be accurate.

Definition at line 42 of file masstree_storage_fatify.cpp.

References CHECK_ERROR_CODE, foedus::storage::masstree::count_children_approximate(), get_first_root(), and foedus::kErrorCodeOk.

Referenced by fatify_first_root(), and fatify_first_root_double().
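A lock-free approximate read of this kind might look like the sketch below. It is illustrative only: `RootPageSketch`, `approximate_count()`, and `needs_more_children()` are hypothetical stand-ins, and the real method obtains the page through get_first_root() and counts children with count_children_approximate().

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a root page whose child count is updated by writers.
struct RootPageSketch {
  std::atomic<uint32_t> child_count{0};
};

// Reads the count without taking the page lock. A concurrent split may change
// the value right after this returns, so callers must treat it as a hint.
inline uint32_t approximate_count(const RootPageSketch& root) {
  return root.child_count.load(std::memory_order_relaxed);
}

// A caller that tolerates staleness: it only decides whether more work is
// worth attempting, and is expected to re-check after doing that work.
inline bool needs_more_children(const RootPageSketch& root, uint32_t desired) {
  return approximate_count(root) < desired;
}
```

Because the value is only a hint, it suits decisions that are re-evaluated later, such as whether another round of root growth is worthwhile.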

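| static ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::check_next_layer_bit | ( | xct::XctId | observed | ) | inline static |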

Definition at line 589 of file masstree_storage_pimpl.cpp.

References foedus::xct::XctId::is_next_layer(), foedus::kErrorCodeOk, foedus::kErrorCodeXctRaceAbort, and UNLIKELY.

Referenced by delete_general(), increment_general(), insert_general(), overwrite_general(), retrieve_general(), retrieve_part_general(), and upsert_general().
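Judging only from the references listed above (XctId::is_next_layer(), kErrorCodeOk, kErrorCodeXctRaceAbort), a plausible reading is that the check rejects an observed XctId whose next-layer bit is set. The sketch below encodes that assumption with hypothetical, simplified types. The bit position and all names ending in `Sketch` are invented for illustration and are not the FOEDUS definitions.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical error codes standing in for kErrorCodeOk / kErrorCodeXctRaceAbort.
enum ErrorCodeSketch { kOkSketch = 0, kRaceAbortSketch = 1 };

// Illustrative bit position only; the real XctId layout is not shown on this page.
constexpr uint64_t kNextLayerBitSketch = 1ULL << 62;

struct XctIdSketch {
  uint64_t bits;
  bool is_next_layer() const { return (bits & kNextLayerBitSketch) != 0; }
};

// Assumed behavior: a record that now points to a next layer no longer holds
// the payload the caller located, so the caller should abort and retry.
inline ErrorCodeSketch check_next_layer_bit_sketch(XctIdSketch observed) {
  if (observed.is_next_layer()) {
    return kRaceAbortSketch;
  }
  return kOkSketch;
}
```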

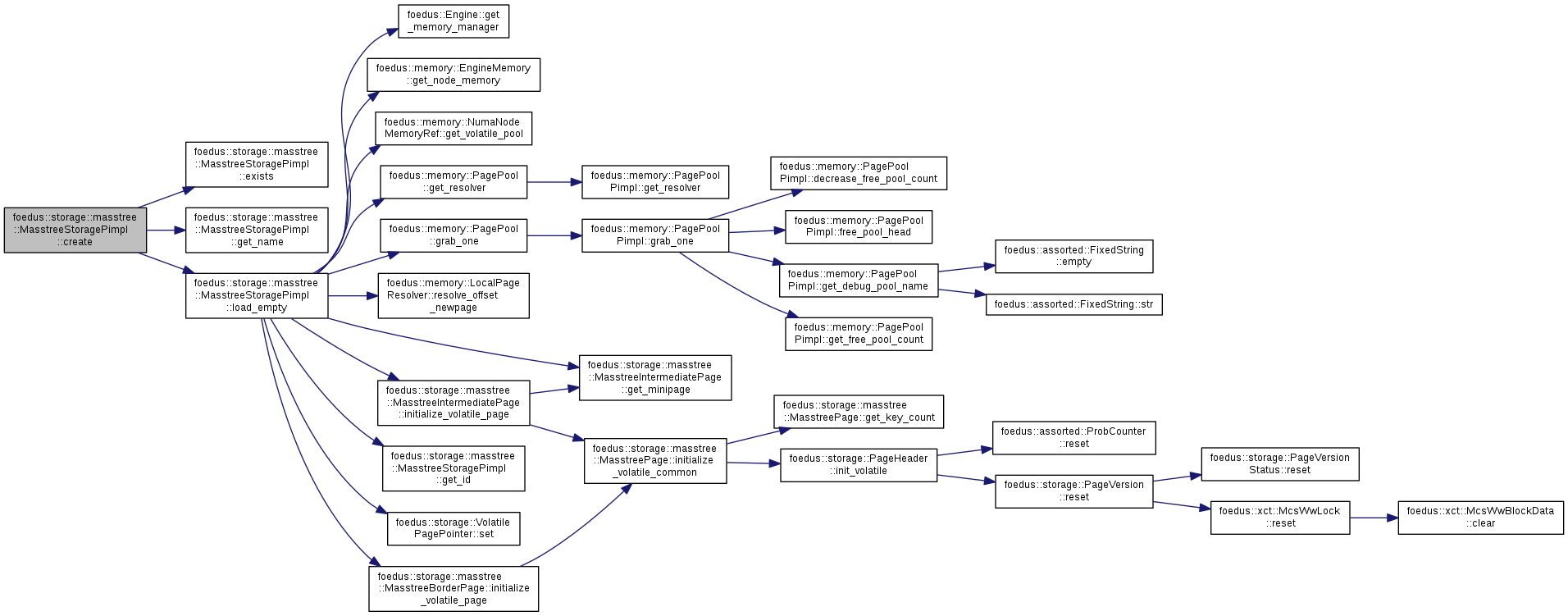

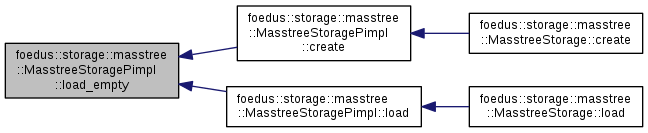

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::create | ( | const MasstreeMetadata & | metadata | ) |

Definition at line 164 of file masstree_storage_pimpl.cpp.

References CHECK_ERROR, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, ERROR_STACK, exists(), get_name(), foedus::kErrorCodeStrAlreadyExists, foedus::storage::kExists, foedus::kRetOk, and load_empty().

Referenced by foedus::storage::masstree::MasstreeStorage::create().

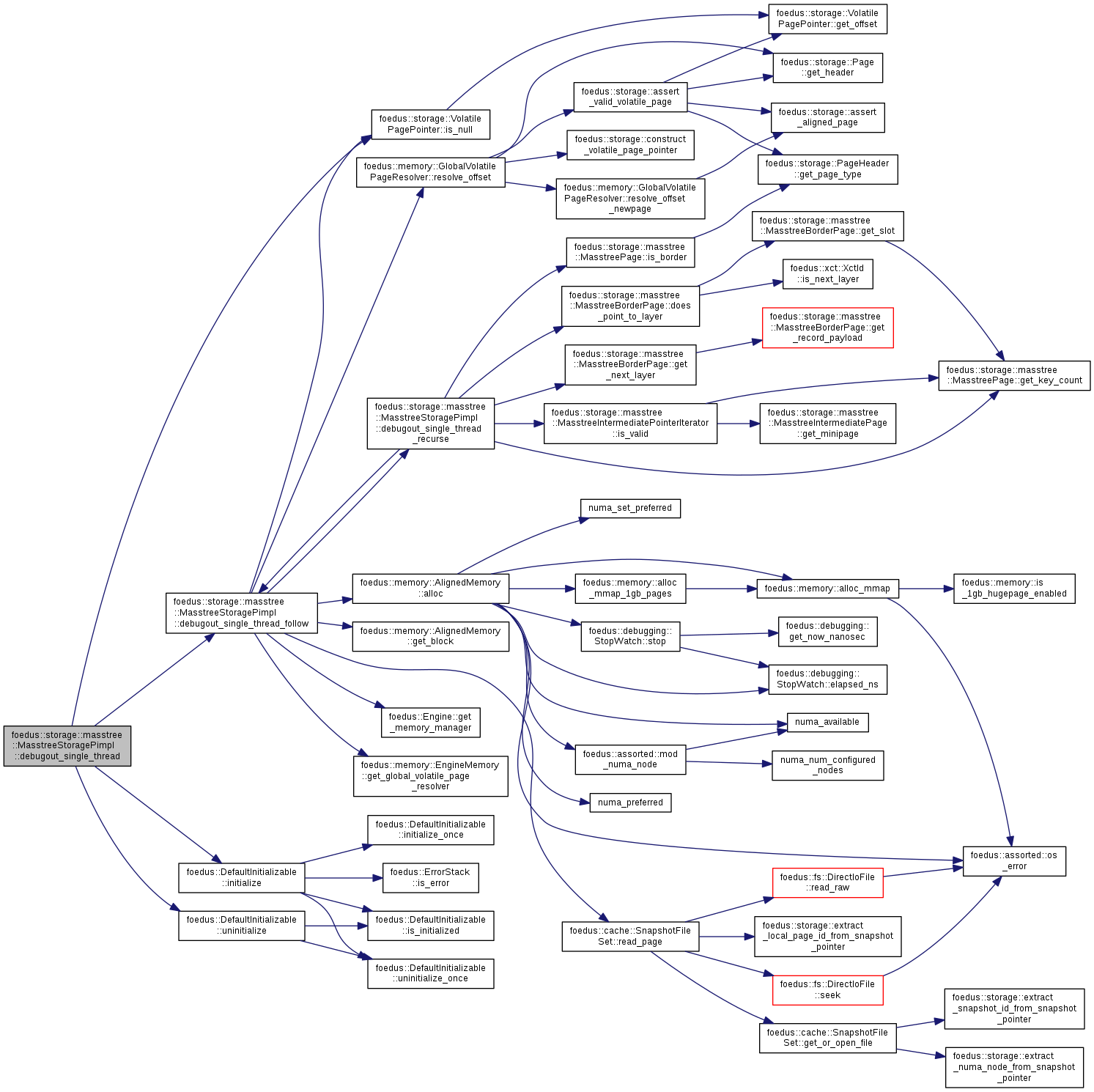

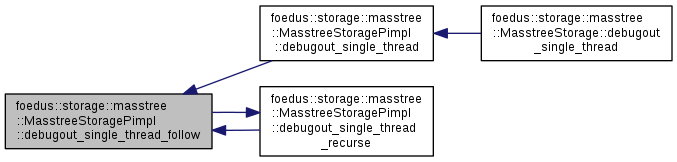

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread | ( | Engine * | engine, |

| bool | volatile_only, | ||

| uint32_t | max_pages | ||

| ) |

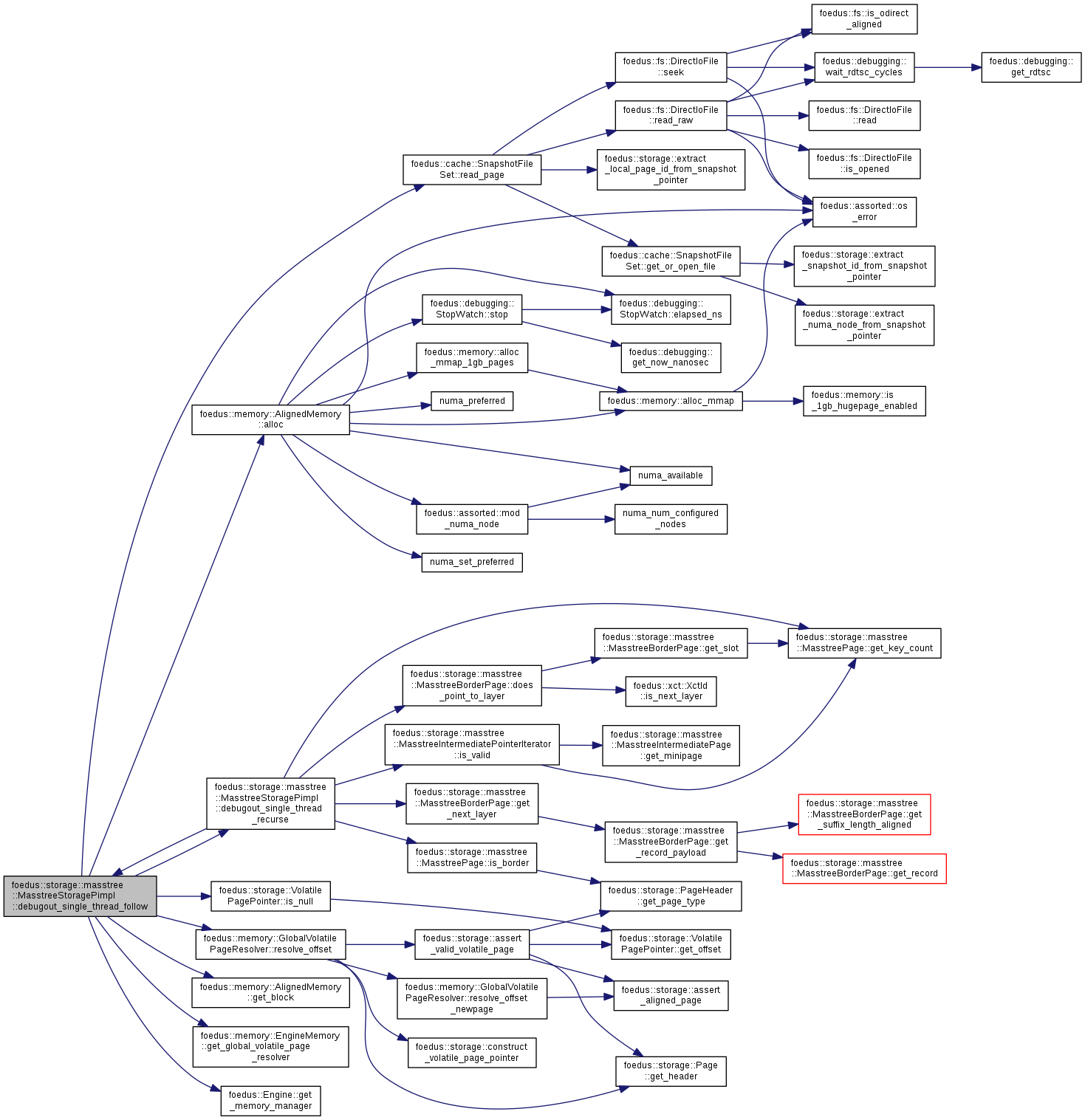

These are defined in masstree_storage_debug.cpp.

Definition at line 36 of file masstree_storage_debug.cpp.

References CHECK_ERROR, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, debugout_single_thread_follow(), foedus::Attachable< MasstreeStorageControlBlock >::engine_, foedus::DefaultInitializable::initialize(), foedus::storage::VolatilePagePointer::is_null(), foedus::kRetOk, foedus::UninitializeGuard::kWarnIfUninitializeError, foedus::storage::DualPagePointer::snapshot_pointer_, foedus::DefaultInitializable::uninitialize(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::storage::masstree::MasstreeStorage::debugout_single_thread().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_follow | ( | Engine * | engine, |

| cache::SnapshotFileSet * | fileset, | ||

| const DualPagePointer & | pointer, | ||

| bool | follow_volatile, | ||

| uint32_t * | remaining_pages | ||

| ) |

Definition at line 122 of file masstree_storage_debug.cpp.

References foedus::memory::AlignedMemory::alloc(), CHECK_ERROR, debugout_single_thread_recurse(), foedus::memory::AlignedMemory::get_block(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::Engine::get_memory_manager(), foedus::storage::VolatilePagePointer::is_null(), foedus::memory::AlignedMemory::kNumaAllocOnnode, foedus::kRetOk, foedus::cache::SnapshotFileSet::read_page(), foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::DualPagePointer::volatile_pointer_, and WRAP_ERROR_CODE.

Referenced by debugout_single_thread(), and debugout_single_thread_recurse().

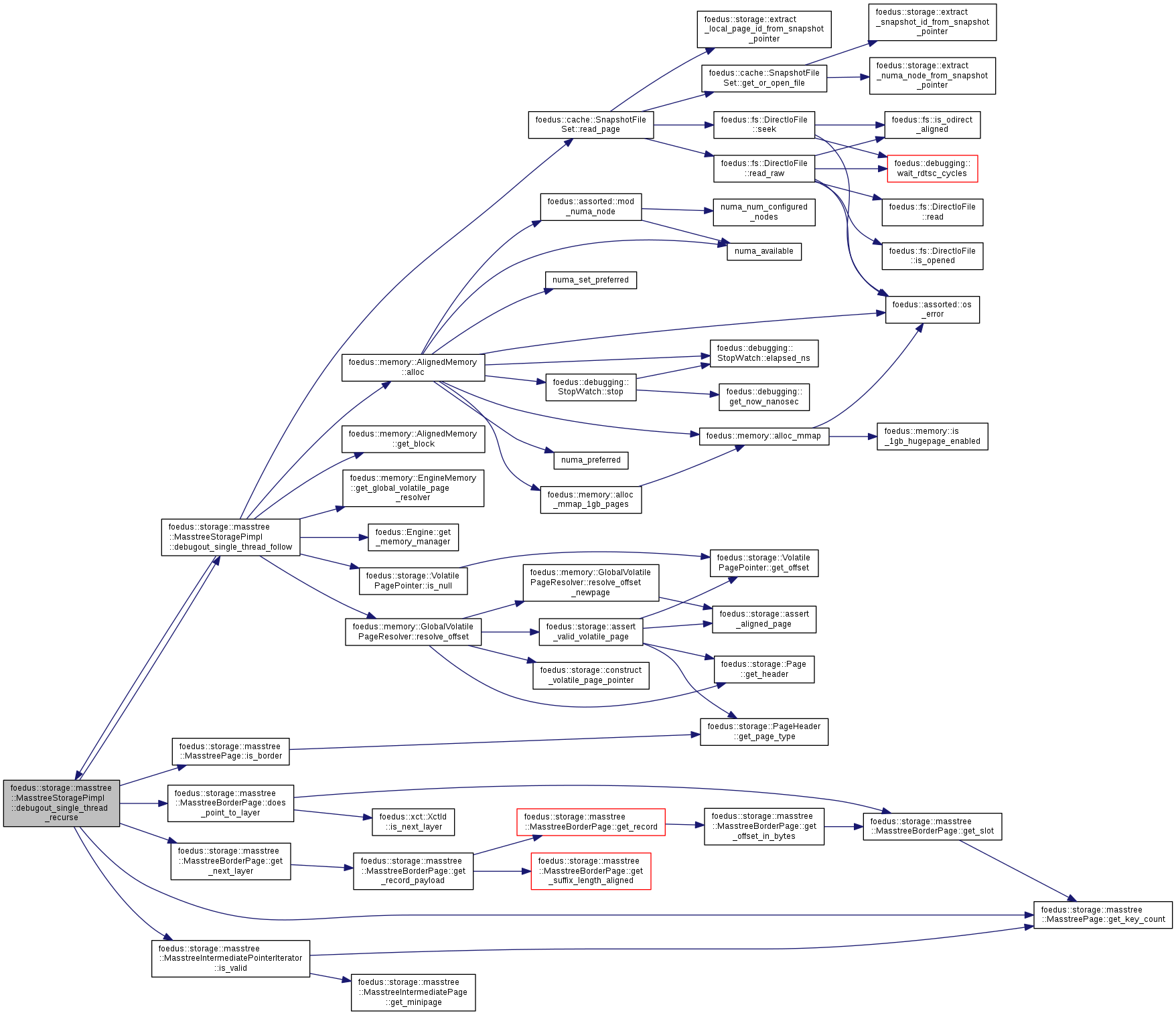

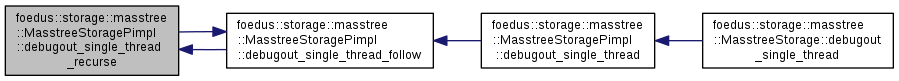

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::debugout_single_thread_recurse | ( | Engine * | engine, |

| cache::SnapshotFileSet * | fileset, | ||

| MasstreePage * | parent, | ||

| bool | follow_volatile, | ||

| uint32_t * | remaining_pages | ||

| ) |

Definition at line 75 of file masstree_storage_debug.cpp.

References CHECK_ERROR, debugout_single_thread_follow(), foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::is_valid(), and foedus::kRetOk.

Referenced by debugout_single_thread_follow().

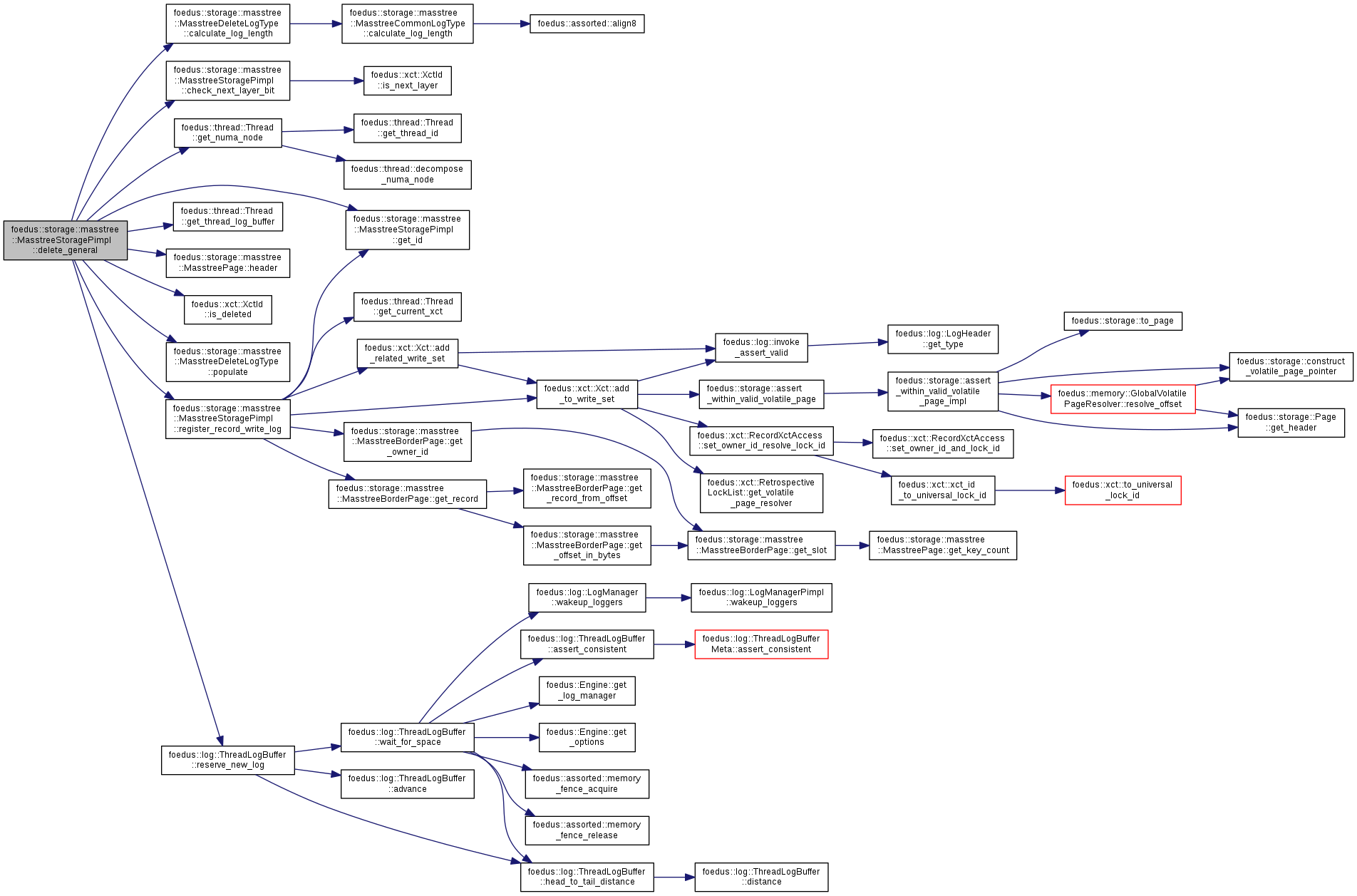

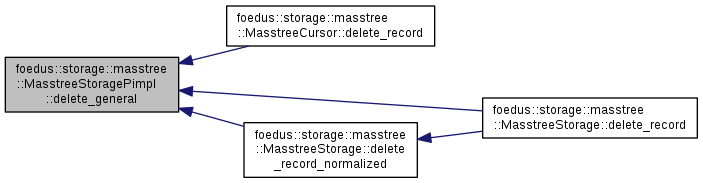

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::delete_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| const void * | be_key, | ||

| KeyLength | key_length | ||

| ) |

Implementation of delete_record family.

Use with locate_record(), which produces the RecordLocation passed as location.

Definition at line 691 of file masstree_storage_pimpl.cpp.

References foedus::storage::masstree::MasstreeDeleteLogType::calculate_log_length(), CHECK_ERROR_CODE, check_next_layer_bit(), get_id(), foedus::thread::Thread::get_numa_node(), foedus::thread::Thread::get_thread_log_buffer(), foedus::storage::masstree::MasstreePage::header(), foedus::xct::XctId::is_deleted(), foedus::kErrorCodeStrKeyNotFound, foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::RecordLocation::page_, foedus::storage::masstree::MasstreeDeleteLogType::populate(), register_record_write_log(), foedus::log::ThreadLogBuffer::reserve_new_log(), and foedus::storage::PageHeader::stat_last_updater_node_.

Referenced by foedus::storage::masstree::MasstreeCursor::delete_record(), foedus::storage::masstree::MasstreeStorage::delete_record(), and foedus::storage::masstree::MasstreeStorage::delete_record_normalized().
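The locate-then-operate pattern used by the *_general methods can be sketched with an in-memory stand-in. Everything here is hypothetical (`StorageSketch`, `LocationSketch`, the `write_log` vector). The sketch only mirrors the shape suggested by the references above: the location step records what was observed, and the delete step rejects an already-deleted record and otherwise registers a write log entry.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

enum CodeSketch { kOkSketch, kKeyNotFoundSketch };

struct RecordSketch {
  std::string payload;
  bool deleted = false;
};

// What the locate step hands to the operation step.
struct LocationSketch {
  RecordSketch* record = nullptr;
  bool observed_deleted = false;  // state seen at locate time
};

struct StorageSketch {
  std::map<std::string, RecordSketch> records;
  std::vector<std::string> write_log;  // keys whose deletion would apply at commit

  // Step 1: find the record and remember its observed state.
  CodeSketch locate_record(const std::string& key, LocationSketch* result) {
    auto it = records.find(key);
    if (it == records.end()) return kKeyNotFoundSketch;
    result->record = &it->second;
    result->observed_deleted = it->second.deleted;
    return kOkSketch;
  }

  // Step 2: validate the observation, then register the log entry.
  CodeSketch delete_general(const LocationSketch& location, const std::string& key) {
    if (location.observed_deleted) return kKeyNotFoundSketch;
    write_log.push_back(key);
    return kOkSketch;
  }
};

// How a public delete_record-style wrapper would chain the two steps.
inline CodeSketch delete_record_sketch(StorageSketch* storage, const std::string& key) {
  LocationSketch location;
  CodeSketch code = storage->locate_record(key, &location);
  if (code != kOkSketch) return code;
  return storage->delete_general(location, key);
}
```

Splitting the work this way lets several public entry points (by key, by normalized key, through a cursor) share one operation body once they have a location.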

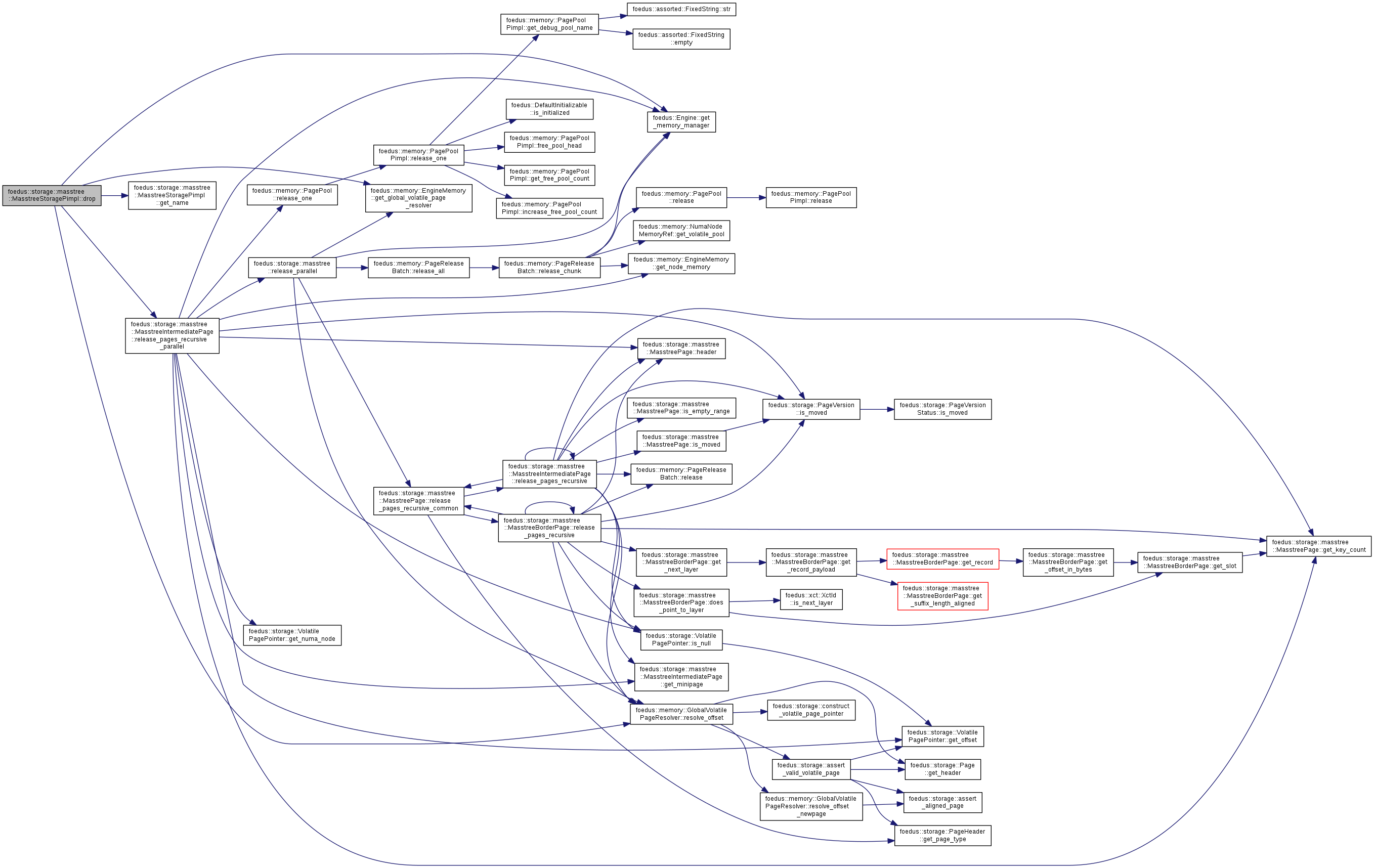

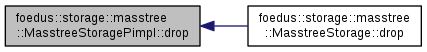

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::drop | ( | ) |

Storage-wide operations, such as drop, create, etc.

Definition at line 105 of file masstree_storage_pimpl.cpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_, foedus::Attachable< MasstreeStorageControlBlock >::engine_, foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::Engine::get_memory_manager(), get_name(), foedus::kRetOk, foedus::storage::masstree::MasstreeIntermediatePage::release_pages_recursive_parallel(), and foedus::memory::GlobalVolatilePageResolver::resolve_offset().

Referenced by foedus::storage::masstree::MasstreeStorage::drop().

| bool foedus::storage::masstree::MasstreeStoragePimpl::exists | ( | ) | const | inline |

Definition at line 111 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by create().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::fatify_first_root | ( | thread::Thread * | context, |

| uint32_t | desired_count, | ||

| bool | disable_no_record_split | ||

| ) |

Defined in masstree_storage_fatify.cpp.

Definition at line 54 of file masstree_storage_fatify.cpp.

References approximate_count_root_children(), CHECK_ERROR, foedus::debugging::StopWatch::elapsed_us(), fatify_first_root_double(), get_name(), foedus::storage::masstree::kIntermediateAlmostFull, foedus::kRetOk, foedus::debugging::StopWatch::start(), foedus::debugging::StopWatch::stop(), and WRAP_ERROR_CODE.

Referenced by foedus::storage::masstree::MasstreeStorage::fatify_first_root().
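The references above (approximate_count_root_children() and fatify_first_root_double()) suggest a loop that keeps doubling the root's child count until it reaches the desired count. The sketch below shows that control flow under this assumption. `RootSketch`, its `capacity` field, and both functions are hypothetical, and the real method also involves kIntermediateAlmostFull and timing via StopWatch.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical root node: just a child count and an upper bound.
struct RootSketch {
  uint32_t children;
  uint32_t capacity;
};

// Stand-in for one doubling round, capped at the node's capacity.
inline void fatify_double_sketch(RootSketch* root) {
  uint32_t doubled = root->children * 2U;
  root->children = doubled > root->capacity ? root->capacity : doubled;
}

// Repeats doubling rounds until the target is met or no progress is possible.
inline uint32_t fatify_sketch(RootSketch* root, uint32_t desired_count) {
  uint32_t target = desired_count > root->capacity ? root->capacity : desired_count;
  while (root->children < target) {
    uint32_t before = root->children;
    fatify_double_sketch(root);
    if (root->children <= before) {
      break;  // a round that adds nothing would otherwise loop forever
    }
  }
  return root->children;
}
```

Starting from 2 children with a desired count of 10, the loop runs three rounds (4, 8, 16) and stops at 16, which shows that the result can overshoot the request.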

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::fatify_first_root_double | ( | thread::Thread * | context, |

| bool | disable_no_record_split | ||

| ) |

Definition at line 94 of file masstree_storage_fatify.cpp.

References approximate_count_root_children(), ASSERT_ND, foedus::storage::masstree::count_children_approximate(), foedus::debugging::StopWatch::elapsed_us(), foedus::storage::masstree::MasstreeIntermediatePage::find_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::find_pointer(), follow_page(), get_first_root(), foedus::storage::masstree::MasstreePage::get_high_fence(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::storage::masstree::kInfimumSlice, foedus::kRetOk, foedus::storage::masstree::kSupremumSlice, foedus::thread::Thread::run_nested_sysxct(), foedus::storage::PageHeader::snapshot_, foedus::debugging::StopWatch::start(), foedus::debugging::StopWatch::stop(), and WRAP_ERROR_CODE.

Referenced by fatify_first_root().

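| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::find_border_physical | ( | thread::Thread * | context, |

| MasstreePage * | layer_root, | ||

| uint8_t | current_layer, | ||

| bool | for_writes, | ||

| KeySlice | slice, | ||

| MasstreeBorderPage ** | border | ||

| ) | inline |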

Find a border node in the layer that corresponds to the given key slice.

Record-wise or page-wise operations.

Definition at line 213 of file masstree_storage_pimpl.cpp.

References foedus::storage::assert_aligned_page(), ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::MasstreeIntermediatePage::find_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::find_pointer(), follow_page(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreePage::is_high_fence_supremum(), foedus::storage::masstree::MasstreePage::is_locked(), foedus::kErrorCodeOk, foedus::storage::masstree::kInfimumSlice, LIKELY, foedus::assorted::memory_fence_acquire(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::prefetch(), foedus::storage::masstree::MasstreePage::prefetch_general(), foedus::thread::Thread::resolve(), foedus::thread::Thread::run_nested_sysxct(), UNLIKELY, and foedus::storage::masstree::MasstreePage::within_fences().

Referenced by locate_record(), locate_record_normalized(), reserve_record(), and reserve_record_normalized().

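| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::follow_layer | ( | thread::Thread * | context, |

| bool | for_writes, | ||

| MasstreeBorderPage * | parent, | ||

| SlotIndex | record_index, | ||

| MasstreePage ** | page | ||

| ) | inline |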

Follows to next layer's root page.

Definition at line 384 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), follow_page(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::xct::XctId::is_moved(), foedus::xct::XctId::is_next_layer(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::thread::Thread::run_nested_sysxct(), UNLIKELY, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by locate_record(), and reserve_record().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::follow_page | ( | thread::Thread * | context, |

| bool | for_writes, | ||

| storage::DualPagePointer * | pointer, | ||

| MasstreePage ** | page | ||

| ) |

Thread::follow_page_pointer() for masstree.

Because of how masstree works, this method never creates a completely new page. In other words, !pointer->is_both_null() always holds. It either reads an existing volatile or snapshot page, or creates a volatile page from an existing snapshot page.

Definition at line 367 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, foedus::thread::Thread::follow_page_pointer(), and foedus::storage::DualPagePointer::is_both_null().

Referenced by fatify_first_root_double(), find_border_physical(), follow_layer(), verify_single_thread_border(), and verify_single_thread_intermediate().
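The behavior described above can be sketched with simplified stand-ins. `PageSketch`, `DualPointerSketch`, `PagePoolSketch`, and `follow_page_sketch()` are hypothetical. Whether the real Thread::follow_page_pointer() prefers the volatile or the snapshot page for reads depends on settings this page does not describe, so the read policy below is an assumption.

```cpp
#include <cassert>
#include <memory>
#include <vector>

struct PageSketch {
  int payload;
  bool is_snapshot;
};

// Simplified dual pointer: either side may be null, but never both.
struct DualPointerSketch {
  PageSketch* volatile_page = nullptr;
  const PageSketch* snapshot_page = nullptr;
  bool is_both_null() const { return !volatile_page && !snapshot_page; }
};

// Owns the volatile pages created on demand.
struct PagePoolSketch {
  std::vector<std::unique_ptr<PageSketch>> pages;
  PageSketch* copy_of(const PageSketch& src) {
    pages.emplace_back(new PageSketch{src.payload, false});
    return pages.back().get();
  }
};

inline const PageSketch* follow_page_sketch(PagePoolSketch* pool, bool for_writes,
                                            DualPointerSketch* pointer) {
  assert(!pointer->is_both_null());  // never asked to create a brand-new page
  if (pointer->volatile_page) {
    return pointer->volatile_page;  // existing volatile page
  }
  if (for_writes) {
    // Writers need a mutable page: install a volatile copy of the snapshot.
    pointer->volatile_page = pool->copy_of(*pointer->snapshot_page);
    return pointer->volatile_page;
  }
  return pointer->snapshot_page;  // read-only access can use the snapshot
}
```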

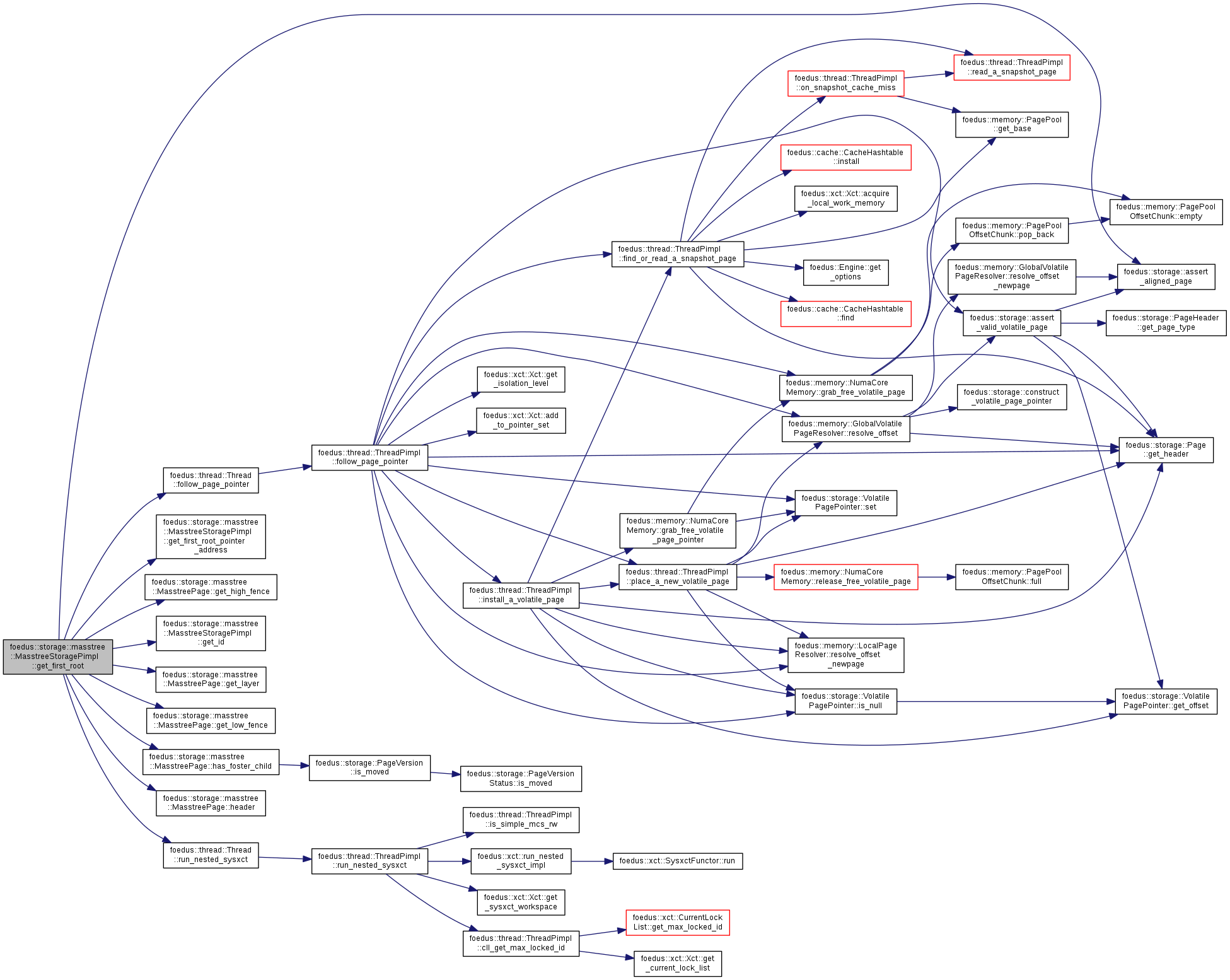

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::get_first_root | ( | thread::Thread * | context, |

| bool | for_write, | ||

| MasstreeIntermediatePage ** | root | ||

| ) |

Root-node related, such as a method to retrieve 1st-root, to grow, etc.

Definition at line 58 of file masstree_storage_pimpl.cpp.

References foedus::storage::assert_aligned_page(), ASSERT_ND, CHECK_ERROR_CODE, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, foedus::thread::Thread::follow_page_pointer(), get_first_root_pointer_address(), foedus::storage::masstree::MasstreePage::get_high_fence(), get_id(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreePage::header(), foedus::kErrorCodeOk, foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::kSupremumSlice, foedus::thread::Thread::run_nested_sysxct(), foedus::storage::PageHeader::snapshot_, and UNLIKELY.

Referenced by approximate_count_root_children(), fatify_first_root_double(), locate_record(), locate_record_normalized(), foedus::storage::masstree::MasstreeCursor::open(), prefetch_pages_normalized(), reserve_record(), reserve_record_normalized(), and verify_single_thread().

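| const DualPagePointer& foedus::storage::masstree::MasstreeStoragePimpl::get_first_root_pointer | ( | ) | const | inline |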

Definition at line 115 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by peek_volatile_page_boundaries().

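| DualPagePointer* foedus::storage::masstree::MasstreeStoragePimpl::get_first_root_pointer_address | ( | ) | inline |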

Definition at line 118 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by get_first_root().

| StorageId foedus::storage::masstree::MasstreeStoragePimpl::get_id | ( | ) | const | inline |

Definition at line 112 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by delete_general(), get_first_root(), increment_general(), insert_general(), load_empty(), overwrite_general(), register_record_write_log(), and upsert_general().

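| const MasstreeMetadata& foedus::storage::masstree::MasstreeStoragePimpl::get_meta | ( | ) | const | inline |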

Definition at line 114 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by load(), and reserve_record().

| const StorageName& foedus::storage::masstree::MasstreeStoragePimpl::get_name | ( | ) | const | inline |

Definition at line 113 of file masstree_storage_pimpl.hpp.

References foedus::Attachable< MasstreeStorageControlBlock >::control_block_.

Referenced by create(), drop(), fatify_first_root(), and prefetch_pages_normalized().

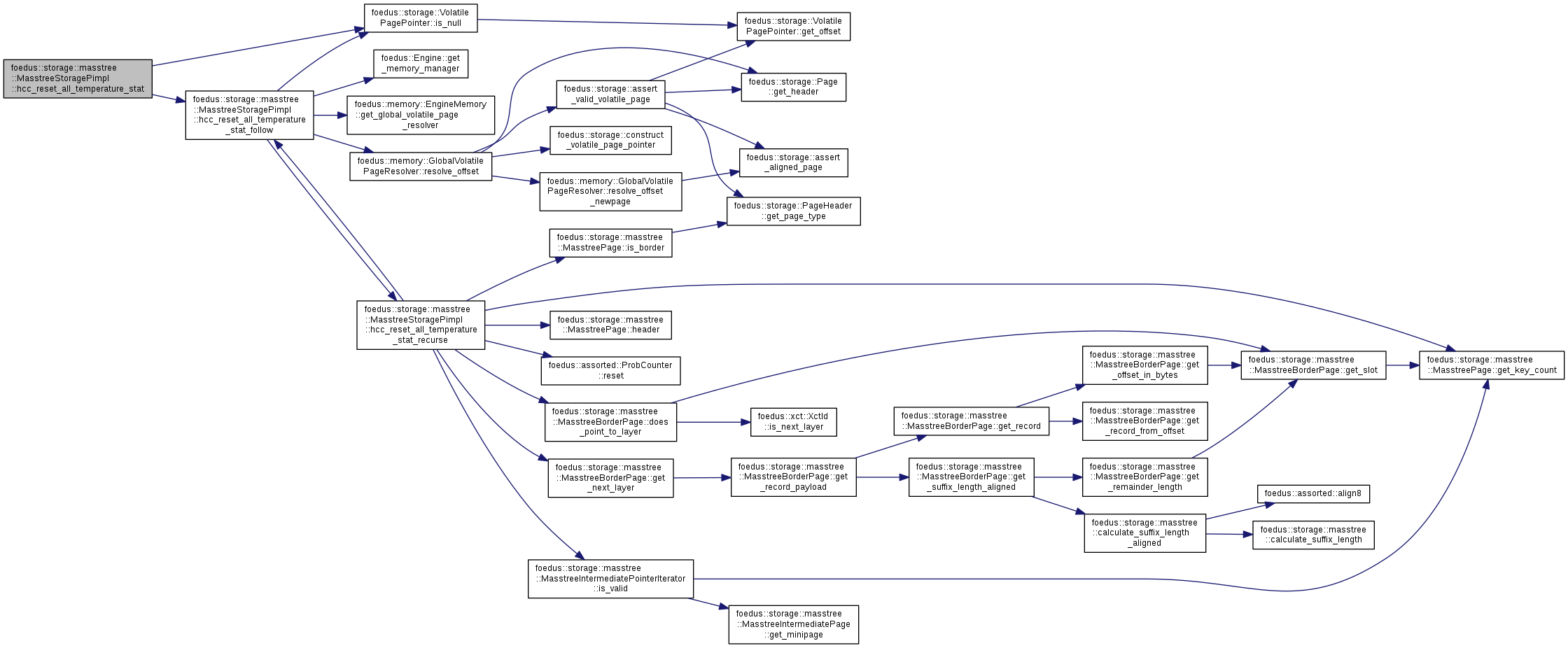

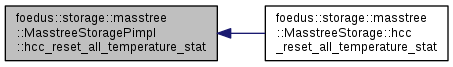

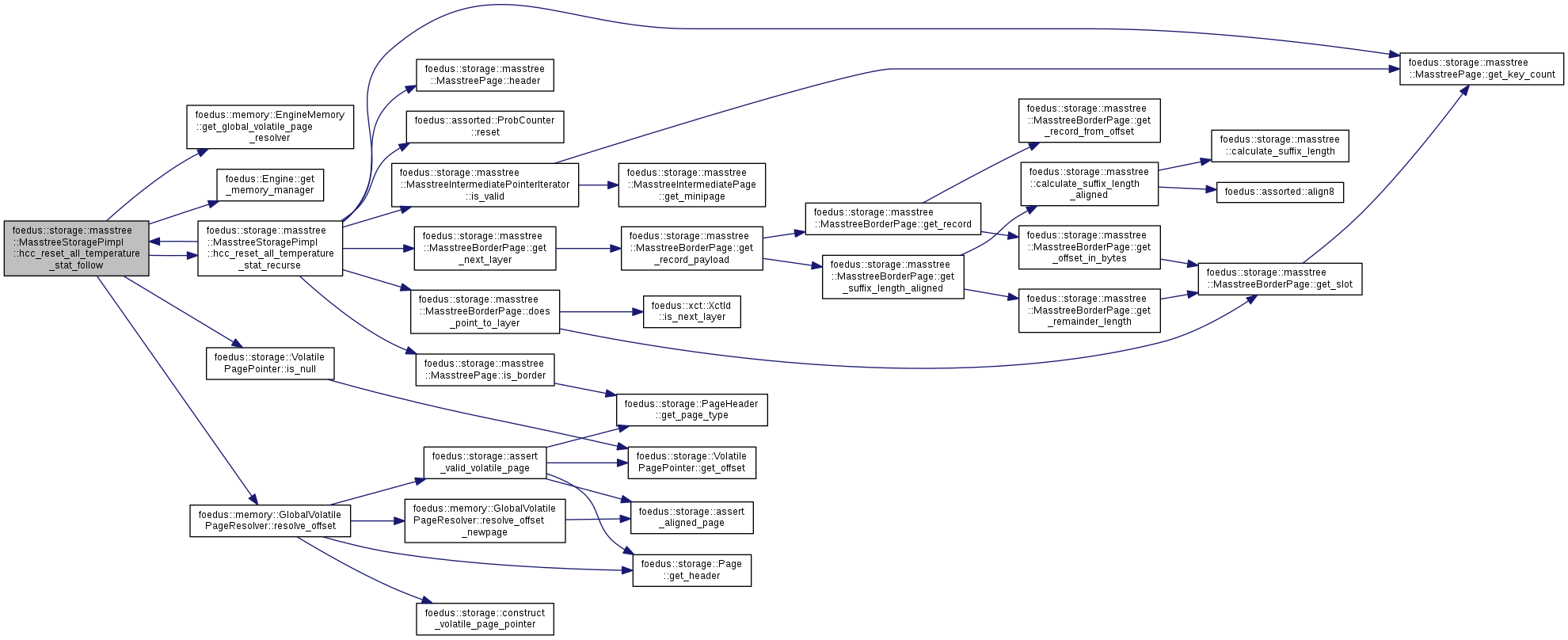

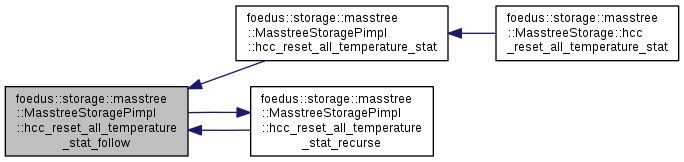

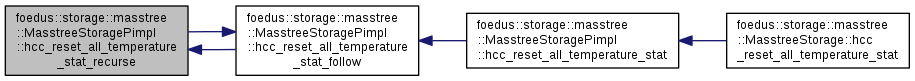

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::hcc_reset_all_temperature_stat | ( | ) |

For convenience, these are defined in masstree_storage_debug.cpp.

Definition at line 151 of file masstree_storage_debug.cpp.

References CHECK_ERROR, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, foedus::Attachable< MasstreeStorageControlBlock >::engine_, hcc_reset_all_temperature_stat_follow(), foedus::storage::VolatilePagePointer::is_null(), foedus::kRetOk, and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::storage::masstree::MasstreeStorage::hcc_reset_all_temperature_stat().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::hcc_reset_all_temperature_stat_follow | ( | VolatilePagePointer | page_id | ) |

Definition at line 192 of file masstree_storage_debug.cpp.

References CHECK_ERROR, foedus::Attachable< MasstreeStorageControlBlock >::engine_, foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::Engine::get_memory_manager(), hcc_reset_all_temperature_stat_recurse(), foedus::storage::VolatilePagePointer::is_null(), foedus::kRetOk, and foedus::memory::GlobalVolatilePageResolver::resolve_offset().

Referenced by hcc_reset_all_temperature_stat(), and hcc_reset_all_temperature_stat_recurse().

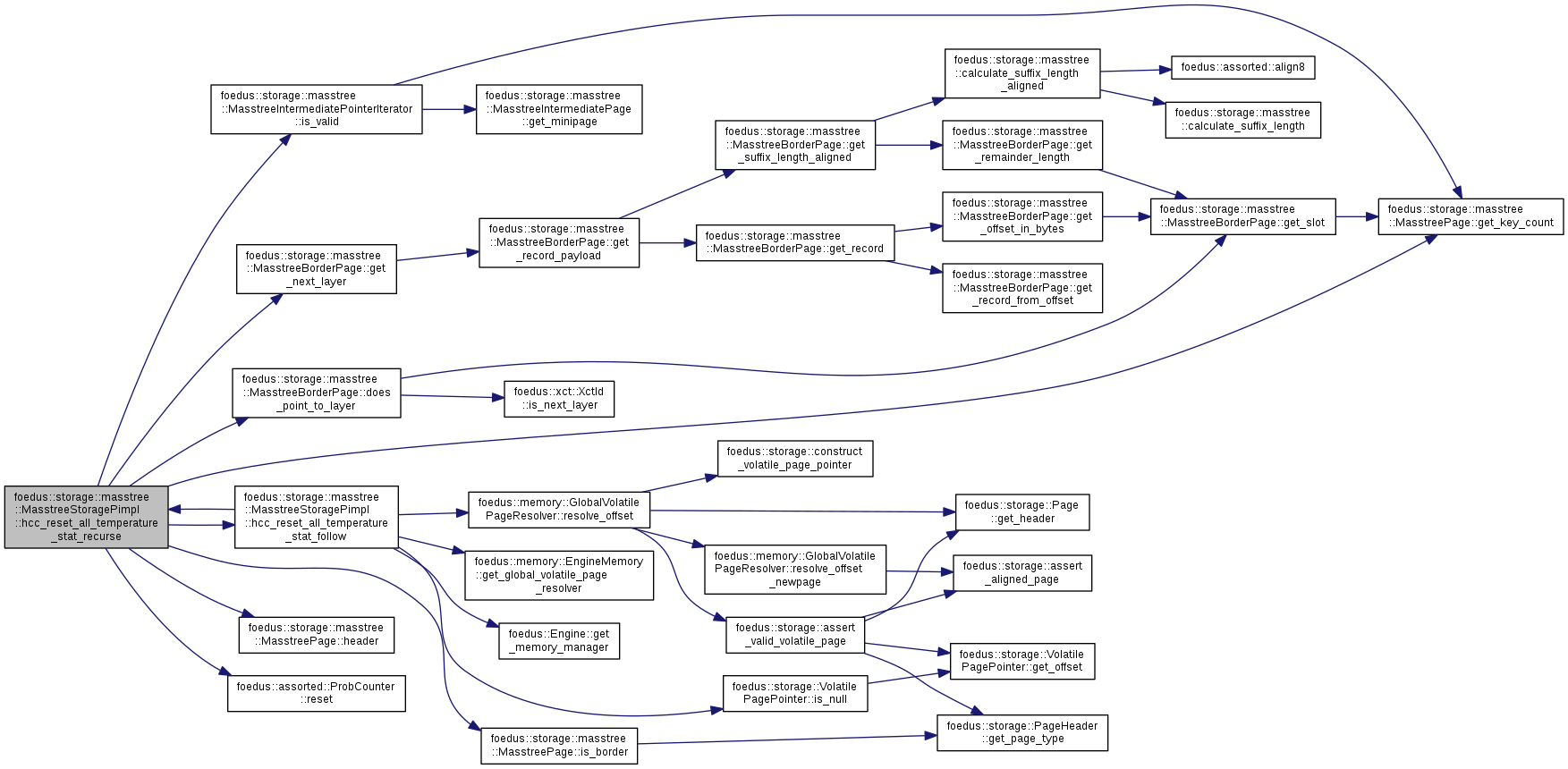

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::hcc_reset_all_temperature_stat_recurse | ( | MasstreePage * | parent | ) |

Definition at line 170 of file masstree_storage_debug.cpp.

References CHECK_ERROR, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), hcc_reset_all_temperature_stat_follow(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::PageHeader::hotness_, foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::is_valid(), foedus::kRetOk, foedus::assorted::ProbCounter::reset(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by hcc_reset_all_temperature_stat_follow().

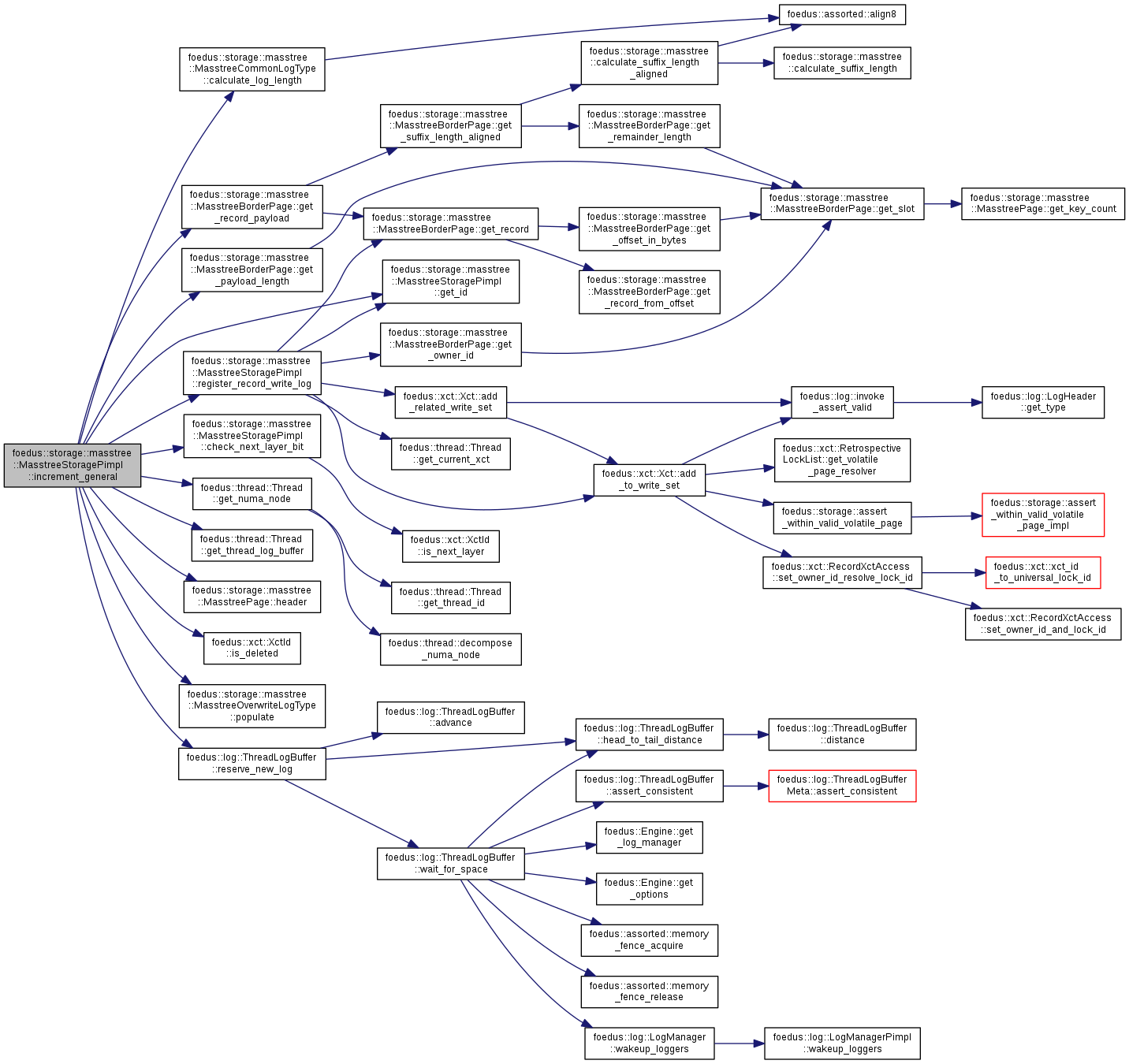

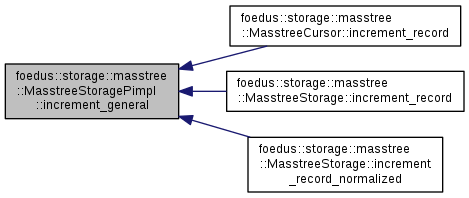

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::increment_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| const void * | be_key, | ||

| KeyLength | key_length, | ||

| PAYLOAD * | value, | ||

| PayloadLength | payload_offset | ||

| ) |

Implementation of increment_record family.

Use with locate_record(), which produces the RecordLocation passed as location.

Definition at line 806 of file masstree_storage_pimpl.cpp.

References foedus::storage::masstree::MasstreeCommonLogType::calculate_log_length(), CHECK_ERROR_CODE, check_next_layer_bit(), get_id(), foedus::thread::Thread::get_numa_node(), foedus::storage::masstree::MasstreeBorderPage::get_payload_length(), foedus::storage::masstree::MasstreeBorderPage::get_record_payload(), foedus::thread::Thread::get_thread_log_buffer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::kErrorCodeStrKeyNotFound, foedus::kErrorCodeStrTooShortPayload, foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::RecordLocation::page_, foedus::storage::masstree::MasstreeOverwriteLogType::populate(), register_record_write_log(), foedus::log::ThreadLogBuffer::reserve_new_log(), and foedus::storage::PageHeader::stat_last_updater_node_.

Referenced by foedus::storage::masstree::MasstreeCursor::increment_record(), foedus::storage::masstree::MasstreeStorage::increment_record(), and foedus::storage::masstree::MasstreeStorage::increment_record_normalized().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::insert_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| const void * | be_key, | ||

| KeyLength | key_length, | ||

| const void * | payload, | ||

| PayloadLength | payload_count | ||

| ) |

Implementation of insert_record family.

Use with reserve_record(), which produces the RecordLocation passed as location.

Definition at line 661 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, foedus::storage::masstree::MasstreeCommonLogType::calculate_log_length(), CHECK_ERROR_CODE, check_next_layer_bit(), get_id(), foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length(), foedus::thread::Thread::get_numa_node(), foedus::thread::Thread::get_thread_log_buffer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::kErrorCodeStrKeyAlreadyExists, foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::RecordLocation::page_, foedus::storage::masstree::MasstreeInsertLogType::populate(), register_record_write_log(), foedus::log::ThreadLogBuffer::reserve_new_log(), and foedus::storage::PageHeader::stat_last_updater_node_.

Referenced by foedus::storage::masstree::MasstreeStorage::insert_record(), and foedus::storage::masstree::MasstreeStorage::insert_record_normalized().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::load | ( | const StorageControlBlock & | snapshot_block | ) |

Definition at line 176 of file masstree_storage_pimpl.cpp.

References CHECK_ERROR, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, foedus::Attachable< MasstreeStorageControlBlock >::engine_, foedus::Engine::get_memory_manager(), get_meta(), foedus::DefaultInitializable::initialize(), foedus::storage::kExists, foedus::kRetOk, foedus::UninitializeGuard::kWarnIfUninitializeError, load_empty(), foedus::memory::EngineMemory::load_one_volatile_page(), foedus::storage::StorageControlBlock::meta_, foedus::storage::Metadata::root_snapshot_page_id_, and foedus::DefaultInitializable::uninitialize().

Referenced by foedus::storage::masstree::MasstreeStorage::load().

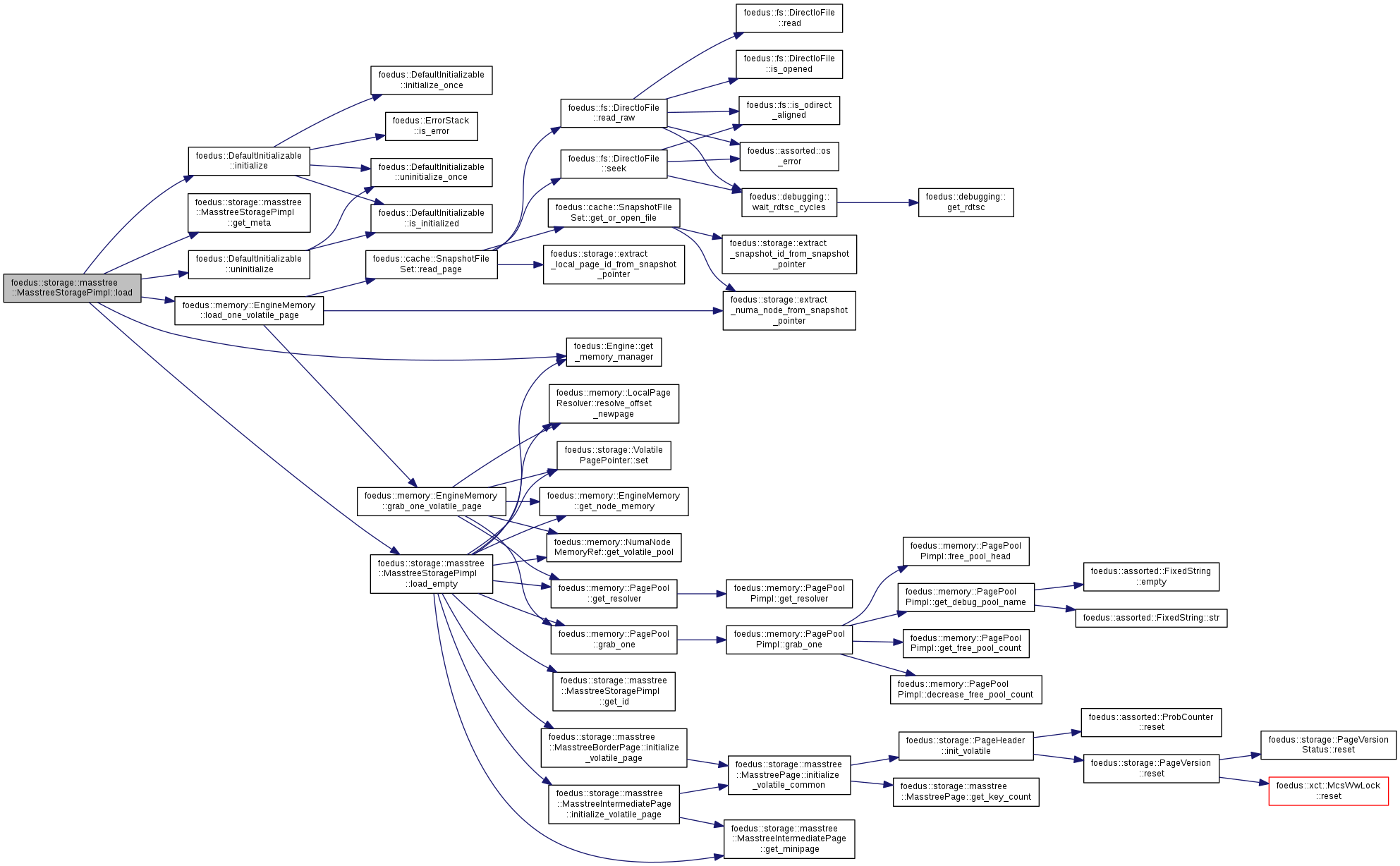

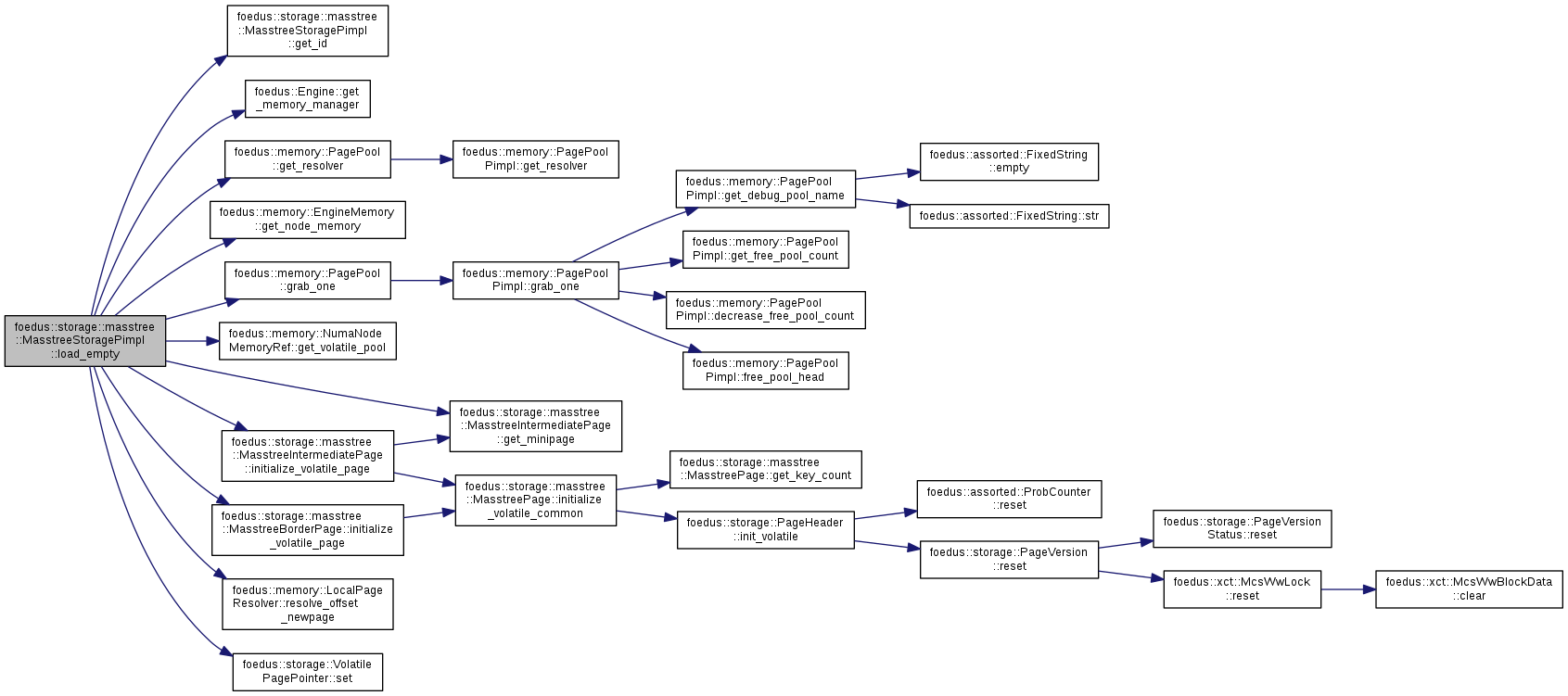

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::load_empty | ( | ) |

Definition at line 122 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, foedus::Attachable< MasstreeStorageControlBlock >::control_block_, foedus::Attachable< MasstreeStorageControlBlock >::engine_, get_id(), foedus::Engine::get_memory_manager(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::memory::EngineMemory::get_node_memory(), foedus::memory::PagePool::get_resolver(), foedus::memory::NumaNodeMemoryRef::get_volatile_pool(), foedus::memory::PagePool::grab_one(), foedus::storage::masstree::MasstreeIntermediatePage::initialize_volatile_page(), foedus::storage::masstree::MasstreeBorderPage::initialize_volatile_page(), foedus::storage::masstree::kInfimumSlice, foedus::kRetOk, foedus::storage::masstree::kSupremumSlice, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, foedus::memory::LocalPageResolver::resolve_offset_newpage(), foedus::storage::VolatilePagePointer::set(), foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::DualPagePointer::volatile_pointer_, and WRAP_ERROR_CODE.

Referenced by create(), and load().

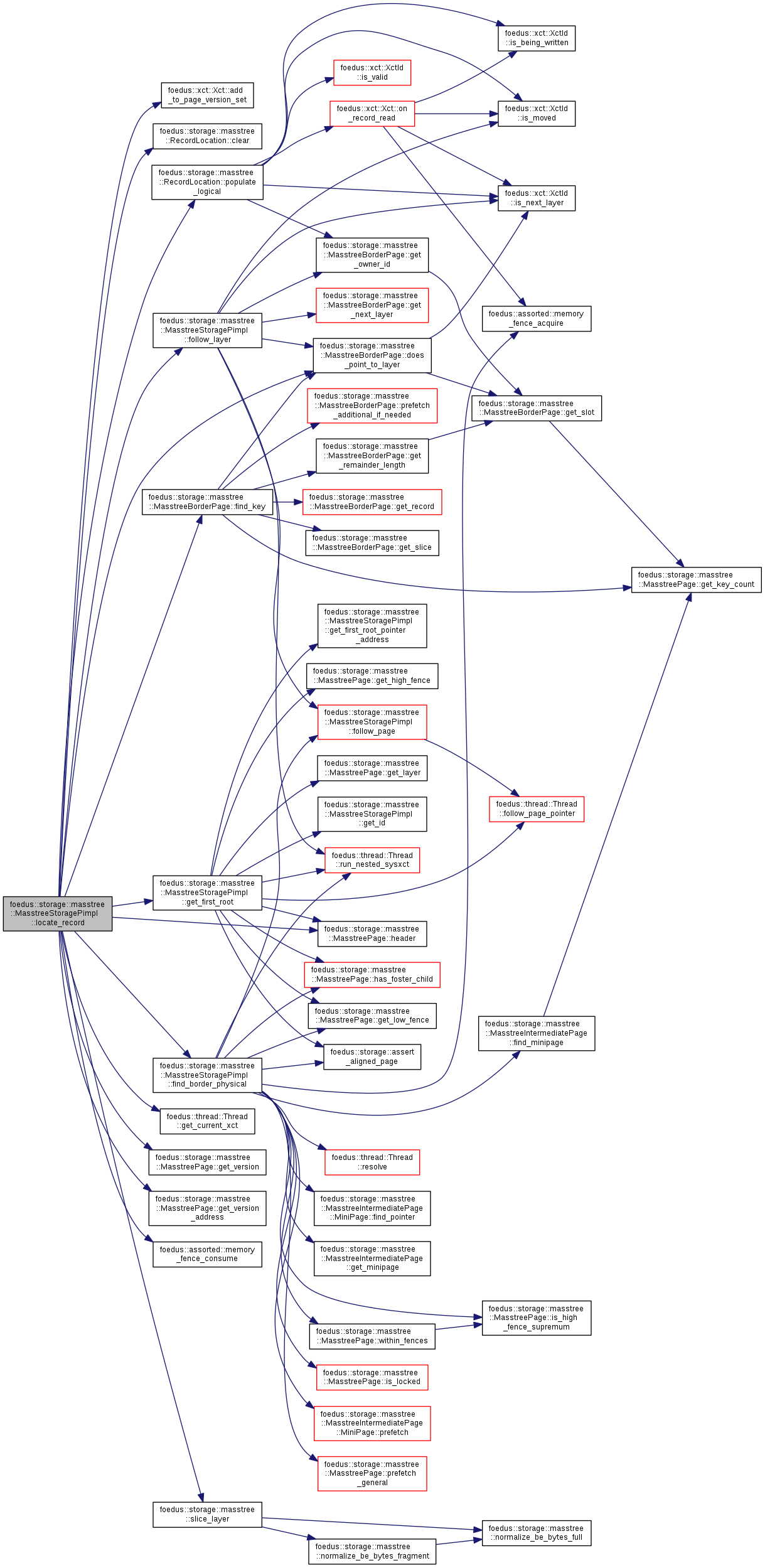

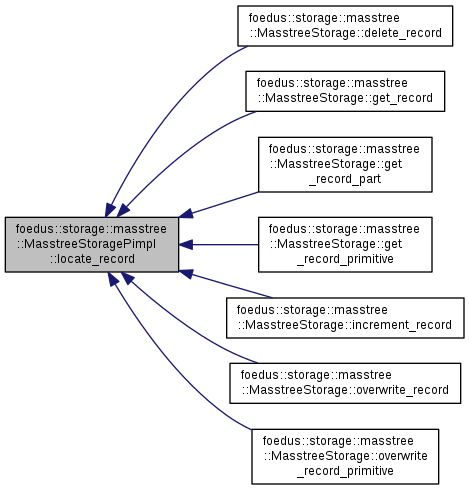

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::locate_record | ( | thread::Thread * | context, |

| const void * | key, | ||

| KeyLength | key_length, | ||

| bool | for_writes, | ||

| RecordLocation * | result | ||

| ) |

Identifies page and record for the key.

Definition at line 283 of file masstree_storage_pimpl.cpp.

References foedus::xct::Xct::add_to_page_version_set(), ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::RecordLocation::clear(), foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), find_border_physical(), foedus::storage::masstree::MasstreeBorderPage::find_key(), follow_layer(), foedus::thread::Thread::get_current_xct(), get_first_root(), foedus::storage::masstree::MasstreePage::get_version(), foedus::storage::masstree::MasstreePage::get_version_address(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::kErrorCodeStrKeyNotFound, foedus::storage::masstree::kMaxKeyLength, foedus::assorted::memory_fence_consume(), foedus::storage::masstree::RecordLocation::populate_logical(), foedus::storage::masstree::slice_layer(), foedus::storage::PageHeader::snapshot_, and foedus::storage::PageVersion::status_.

Referenced by foedus::storage::masstree::MasstreeStorage::delete_record(), foedus::storage::masstree::MasstreeStorage::get_record(), foedus::storage::masstree::MasstreeStorage::get_record_part(), foedus::storage::masstree::MasstreeStorage::get_record_primitive(), foedus::storage::masstree::MasstreeStorage::increment_record(), foedus::storage::masstree::MasstreeStorage::overwrite_record(), and foedus::storage::masstree::MasstreeStorage::overwrite_record_primitive().

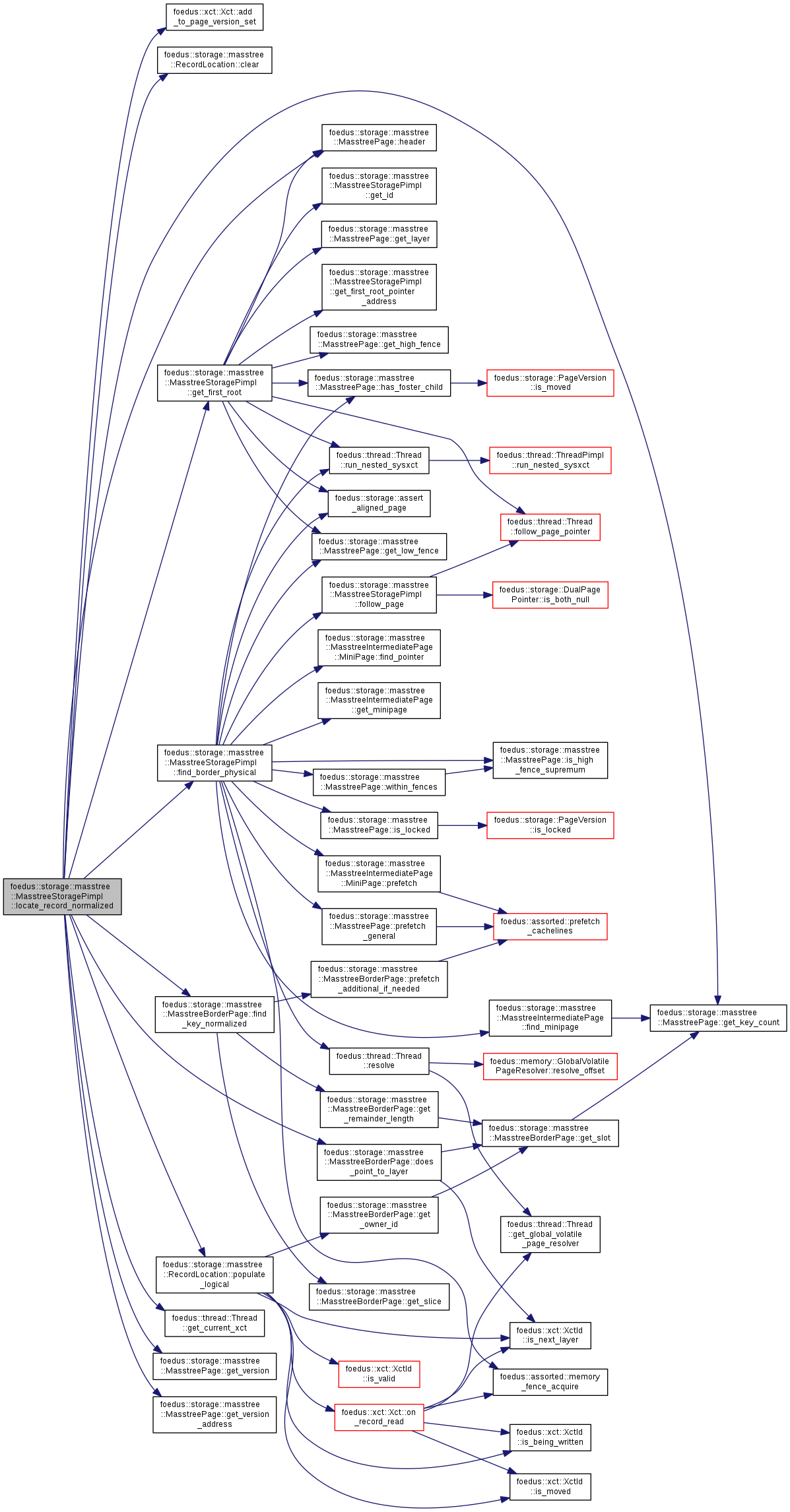

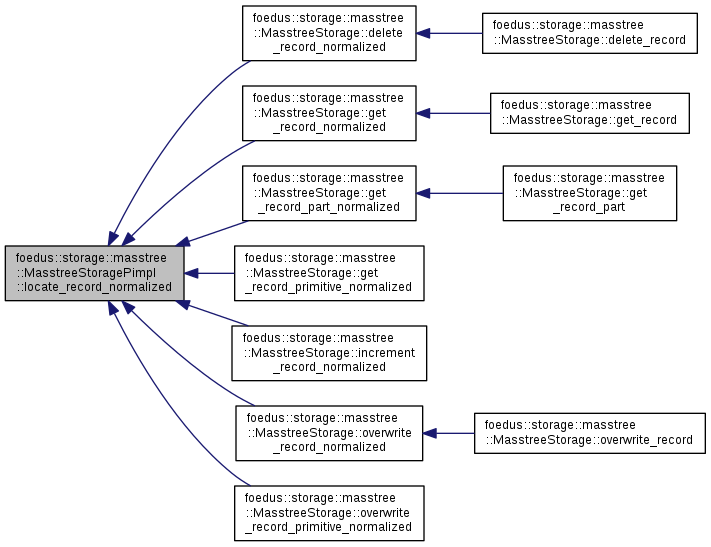

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::locate_record_normalized | ( | thread::Thread * | context, |

| KeySlice | key, | ||

| bool | for_writes, | ||

| RecordLocation * | result | ||

| ) |

Identifies page and record for the normalized key.

Definition at line 336 of file masstree_storage_pimpl.cpp.

References foedus::xct::Xct::add_to_page_version_set(), ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::RecordLocation::clear(), foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), find_border_physical(), foedus::storage::masstree::MasstreeBorderPage::find_key_normalized(), foedus::thread::Thread::get_current_xct(), get_first_root(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_version(), foedus::storage::masstree::MasstreePage::get_version_address(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::kErrorCodeStrKeyNotFound, foedus::storage::masstree::RecordLocation::populate_logical(), foedus::storage::PageHeader::snapshot_, and foedus::storage::PageVersion::status_.

Referenced by foedus::storage::masstree::MasstreeStorage::delete_record_normalized(), foedus::storage::masstree::MasstreeStorage::get_record_normalized(), foedus::storage::masstree::MasstreeStorage::get_record_part_normalized(), foedus::storage::masstree::MasstreeStorage::get_record_primitive_normalized(), foedus::storage::masstree::MasstreeStorage::increment_record_normalized(), foedus::storage::masstree::MasstreeStorage::overwrite_record_normalized(), and foedus::storage::masstree::MasstreeStorage::overwrite_record_primitive_normalized().

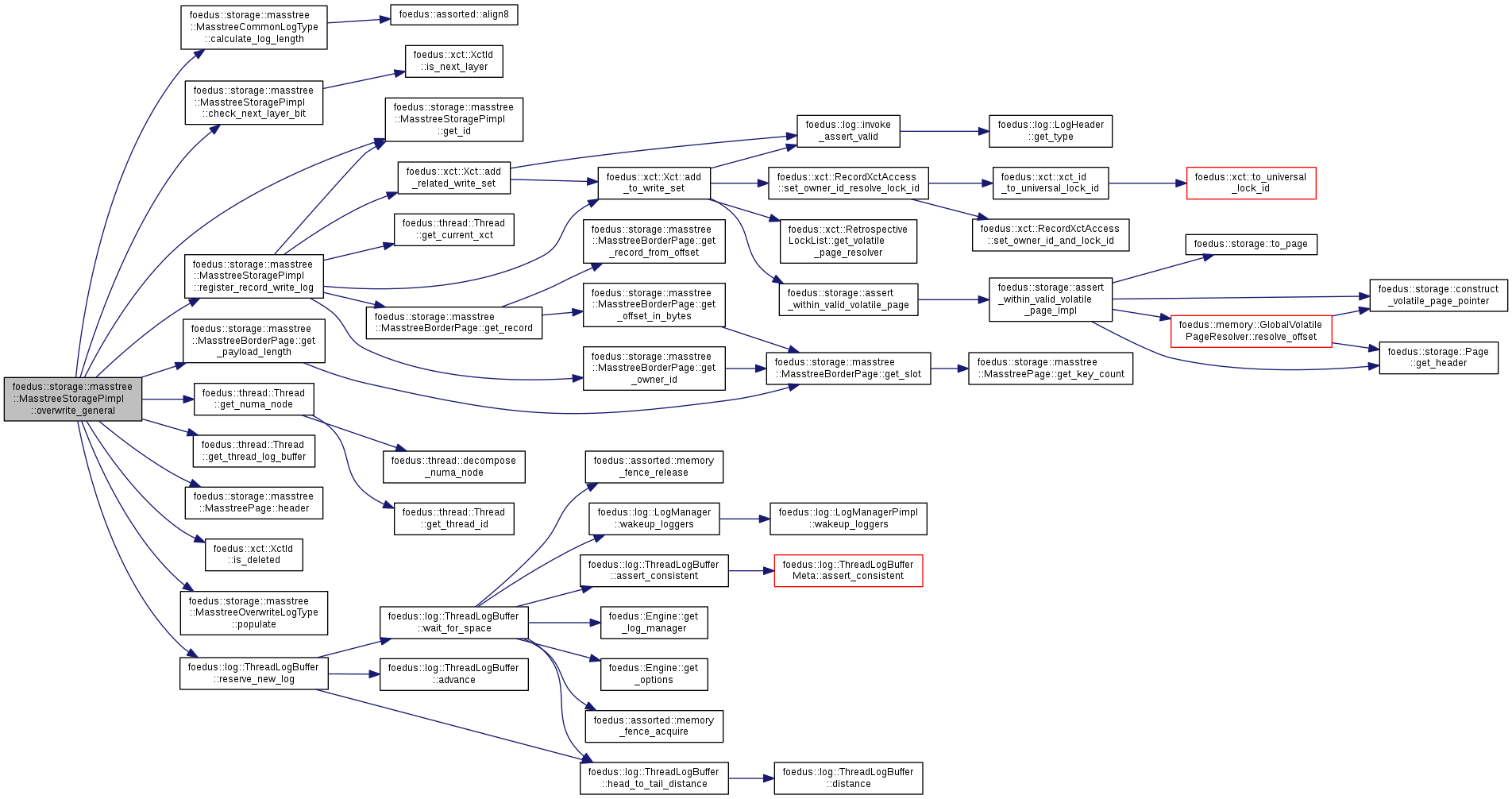

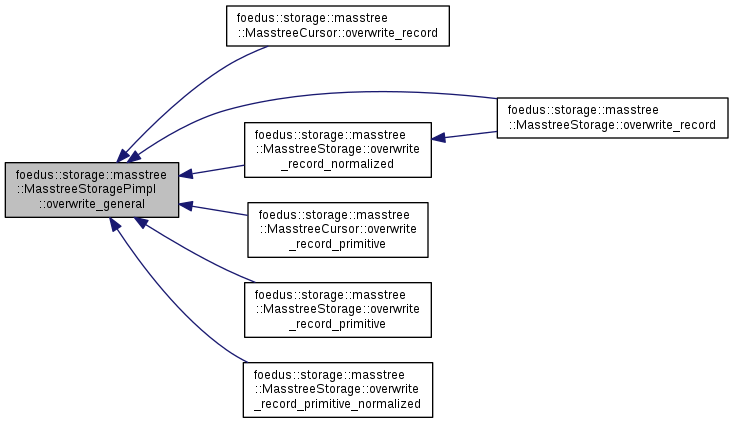

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::overwrite_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| const void * | be_key, | ||

| KeyLength | key_length, | ||

| const void * | payload, | ||

| PayloadLength | payload_offset, | ||

| PayloadLength | payload_count | ||

| ) |

Implementation of the overwrite_record family.

Use with locate_record().

Definition at line 772 of file masstree_storage_pimpl.cpp.

References foedus::storage::masstree::MasstreeCommonLogType::calculate_log_length(), CHECK_ERROR_CODE, check_next_layer_bit(), get_id(), foedus::thread::Thread::get_numa_node(), foedus::storage::masstree::MasstreeBorderPage::get_payload_length(), foedus::thread::Thread::get_thread_log_buffer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::kErrorCodeStrKeyNotFound, foedus::kErrorCodeStrTooShortPayload, foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::RecordLocation::page_, foedus::storage::masstree::MasstreeOverwriteLogType::populate(), register_record_write_log(), foedus::log::ThreadLogBuffer::reserve_new_log(), and foedus::storage::PageHeader::stat_last_updater_node_.

Referenced by foedus::storage::masstree::MasstreeCursor::overwrite_record(), foedus::storage::masstree::MasstreeStorage::overwrite_record(), foedus::storage::masstree::MasstreeStorage::overwrite_record_normalized(), foedus::storage::masstree::MasstreeCursor::overwrite_record_primitive(), foedus::storage::masstree::MasstreeStorage::overwrite_record_primitive(), and foedus::storage::masstree::MasstreeStorage::overwrite_record_primitive_normalized().

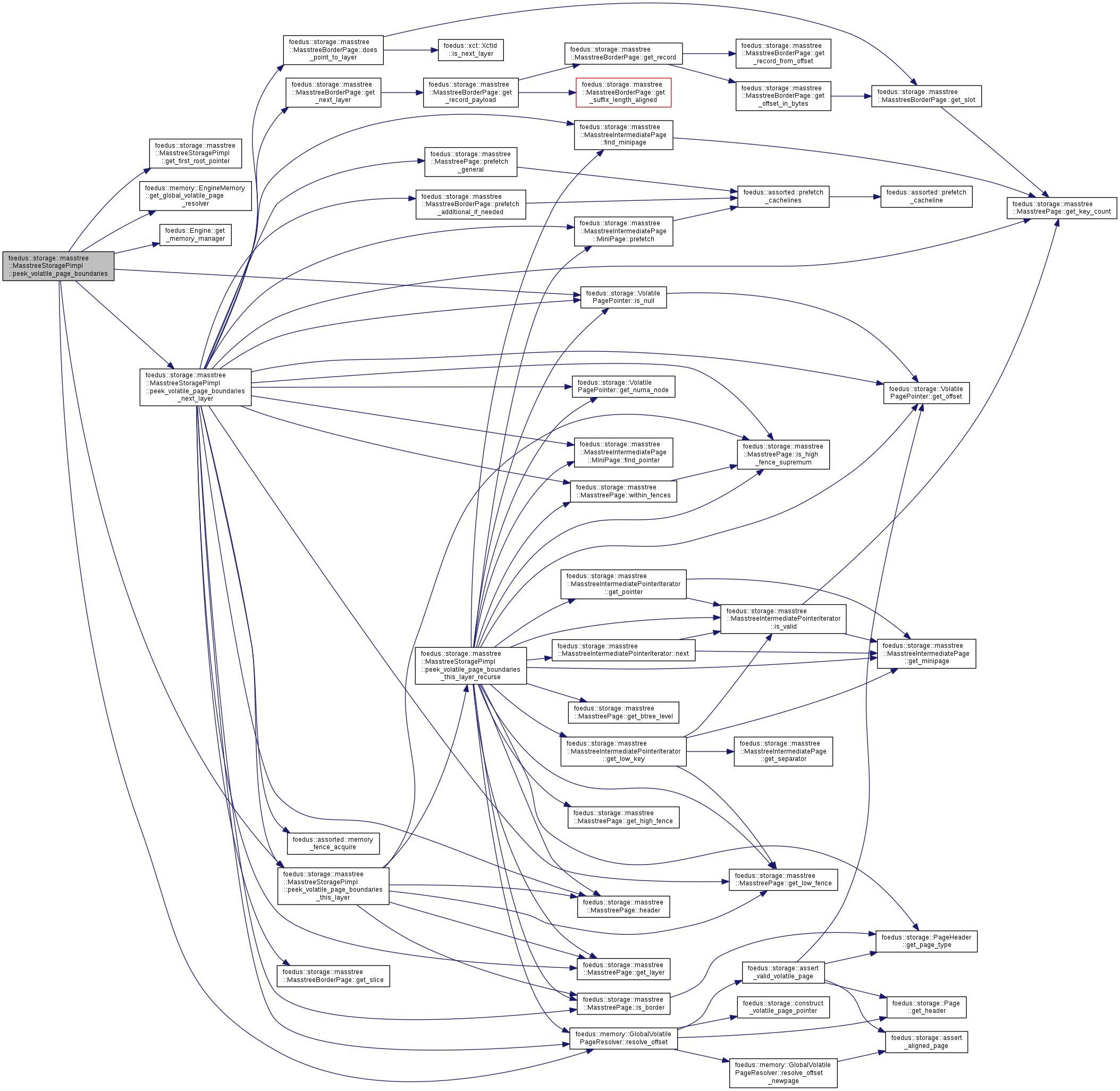
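The pairing of locate_record() with overwrite_general() is a two-step pattern: first resolve the key to a record location, then apply the write to that location. The sketch below illustrates only that shape with a toy in-memory store. `ToyError`, `ToyLocation`, `ToyStore`, `toy_locate`, and `toy_overwrite` are hypothetical names, not FOEDUS types, and the bounds check is an assumption suggested by the kErrorCodeStrTooShortPayload entry in the reference list above. Locking, logging, and transactional bookkeeping are deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration; none of these are FOEDUS types.
enum class ToyError { kOk, kKeyNotFound, kTooShortPayload };

struct ToyLocation {
  std::vector<char>* record = nullptr;  // stays nullptr until a key is located
};

using ToyStore = std::map<std::string, std::vector<char>>;

// Step 1: resolve the key to a record location.
ToyError toy_locate(ToyStore& store, const std::string& key, ToyLocation* result) {
  assert(result != nullptr);
  result->record = nullptr;  // clear any previous result first
  auto it = store.find(key);
  if (it == store.end()) return ToyError::kKeyNotFound;
  result->record = &it->second;
  return ToyError::kOk;
}

// Step 2: overwrite bytes [offset, offset + count) of the located record.
ToyError toy_overwrite(const ToyLocation& location, const void* payload,
                       std::size_t offset, std::size_t count) {
  assert(location.record != nullptr);
  // Assumed rule: the write must fit inside the existing payload.
  if (offset + count > location.record->size()) return ToyError::kTooShortPayload;
  if (count > 0) std::memcpy(location.record->data() + offset, payload, count);
  return ToyError::kOk;
}
```

Splitting the lookup from the write lets several write flavors (overwrite, increment, delete) share one lookup routine, which matches how the "Referenced by" lists on this page are organized.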

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries | ( | Engine * | engine, |

| const MasstreeStorage::PeekBoundariesArguments & | args | ||

| ) |

Defined in masstree_storage_peek.cpp.

Definition at line 37 of file masstree_storage_peek.cpp.

References foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::found_boundary_count_, get_first_root_pointer(), foedus::memory::EngineMemory::get_global_volatile_page_resolver(), foedus::Engine::get_memory_manager(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeOk, peek_volatile_page_boundaries_next_layer(), peek_volatile_page_boundaries_this_layer(), foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::prefix_slice_count_, foedus::memory::GlobalVolatilePageResolver::resolve_offset(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by foedus::storage::masstree::MasstreeStorage::peek_volatile_page_boundaries().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_next_layer | ( | const MasstreePage * | layer_root, |

| const memory::GlobalVolatilePageResolver & | resolver, | ||

| const MasstreeStorage::PeekBoundariesArguments & | args | ||

| ) |

Definition at line 61 of file masstree_storage_peek.cpp.

References ASSERT_ND, foedus::memory::GlobalVolatilePageResolver::begin_, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), foedus::memory::GlobalVolatilePageResolver::end_, foedus::storage::masstree::MasstreeIntermediatePage::find_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::find_pointer(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::storage::VolatilePagePointer::get_numa_node(), foedus::storage::VolatilePagePointer::get_offset(), foedus::storage::masstree::MasstreeBorderPage::get_slice(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_high_fence_supremum(), foedus::storage::VolatilePagePointer::is_null(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::storage::masstree::kInfimumSlice, LIKELY, foedus::assorted::memory_fence_acquire(), foedus::memory::GlobalVolatilePageResolver::numa_node_count_, peek_volatile_page_boundaries_this_layer(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::prefetch(), foedus::storage::masstree::MasstreeBorderPage::prefetch_additional_if_needed(), foedus::storage::masstree::MasstreePage::prefetch_general(), foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::prefix_slice_count_, foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::prefix_slices_, foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::PageHeader::snapshot_, UNLIKELY, foedus::storage::DualPagePointer::volatile_pointer_, and foedus::storage::masstree::MasstreePage::within_fences().

Referenced by peek_volatile_page_boundaries().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_this_layer | ( | const MasstreePage * | layer_root, |

| const memory::GlobalVolatilePageResolver & | resolver, | ||

| const MasstreeStorage::PeekBoundariesArguments & | args | ||

| ) |

Definition at line 144 of file masstree_storage_peek.cpp.

References ASSERT_ND, foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_high_fence_supremum(), foedus::kErrorCodeOk, foedus::storage::masstree::kInfimumSlice, peek_volatile_page_boundaries_this_layer_recurse(), foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::prefix_slice_count_, and foedus::storage::PageHeader::snapshot_.

Referenced by peek_volatile_page_boundaries(), and peek_volatile_page_boundaries_next_layer().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::peek_volatile_page_boundaries_this_layer_recurse | ( | const MasstreeIntermediatePage * | cur, |

| const memory::GlobalVolatilePageResolver & | resolver, | ||

| const MasstreeStorage::PeekBoundariesArguments & | args | ||

| ) |

Definition at line 161 of file masstree_storage_peek.cpp.

References ASSERT_ND, foedus::memory::GlobalVolatilePageResolver::begin_, CHECK_ERROR_CODE, foedus::memory::GlobalVolatilePageResolver::end_, foedus::storage::masstree::MasstreeIntermediatePage::find_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::find_pointer(), foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::found_boundaries_, foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::found_boundary_capacity_, foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::found_boundary_count_, foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::from_, foedus::storage::masstree::MasstreePage::get_btree_level(), foedus::storage::masstree::MasstreePage::get_high_fence(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::get_low_fence(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::get_low_key(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::storage::VolatilePagePointer::get_numa_node(), foedus::storage::VolatilePagePointer::get_offset(), foedus::storage::PageHeader::get_page_type(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::get_pointer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::index_, foedus::storage::masstree::MasstreeIntermediatePointerIterator::index_mini_, foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_high_fence_supremum(), foedus::storage::VolatilePagePointer::is_null(), foedus::storage::masstree::MasstreeIntermediatePointerIterator::is_valid(), foedus::kErrorCodeOk, foedus::storage::masstree::kInfimumSlice, foedus::storage::kMasstreeIntermediatePageType, LIKELY, foedus::storage::masstree::MasstreeIntermediatePointerIterator::next(), foedus::memory::GlobalVolatilePageResolver::numa_node_count_, 
foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::prefetch(), foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::prefix_slice_count_, foedus::memory::GlobalVolatilePageResolver::resolve_offset(), foedus::storage::PageHeader::snapshot_, foedus::storage::masstree::MasstreeStorage::PeekBoundariesArguments::to_, UNLIKELY, foedus::storage::DualPagePointer::volatile_pointer_, and foedus::storage::masstree::MasstreePage::within_fences().

Referenced by peek_volatile_page_boundaries_this_layer().

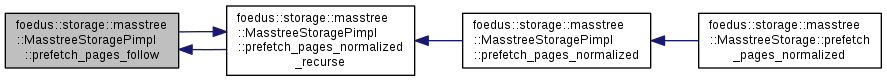

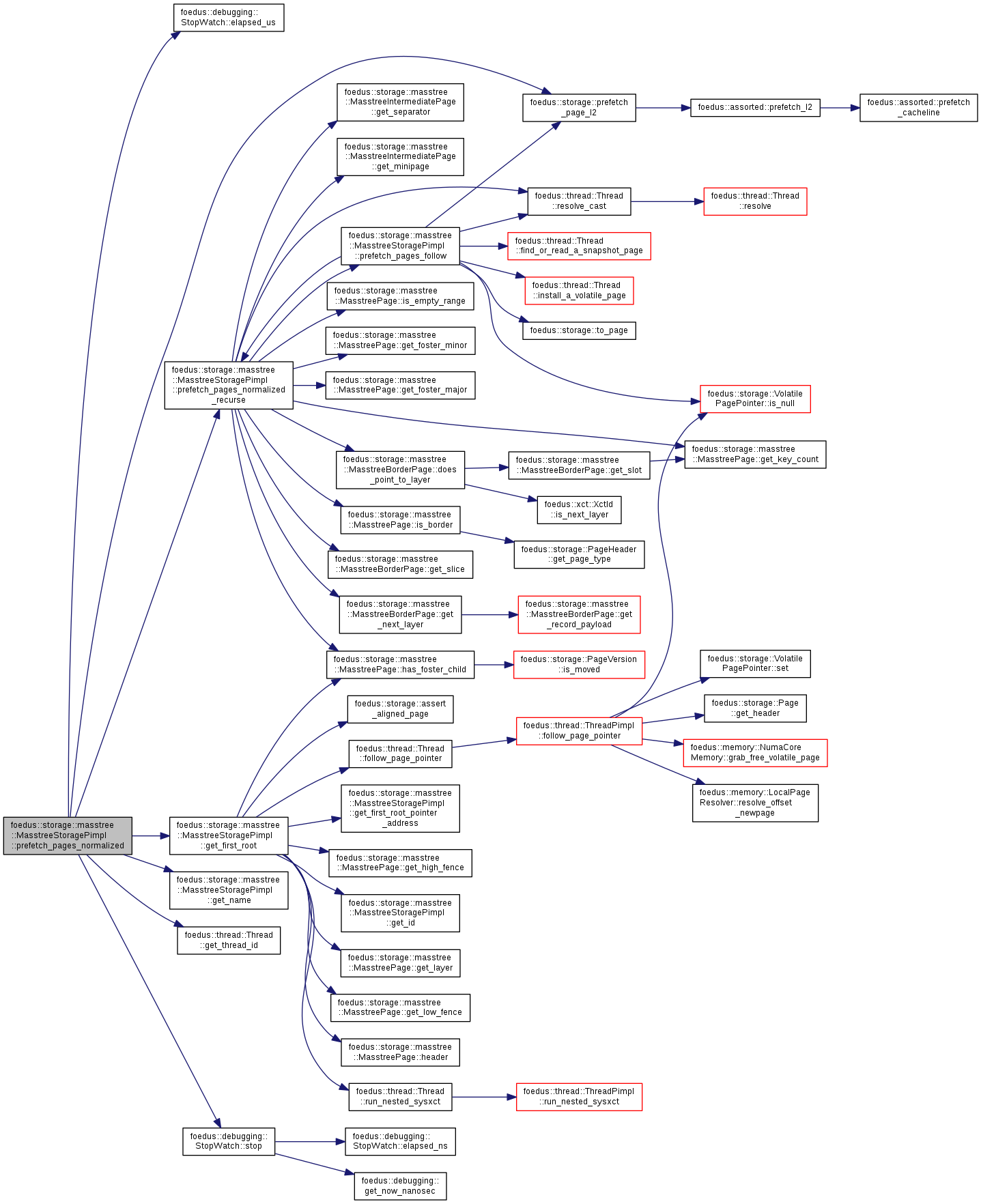

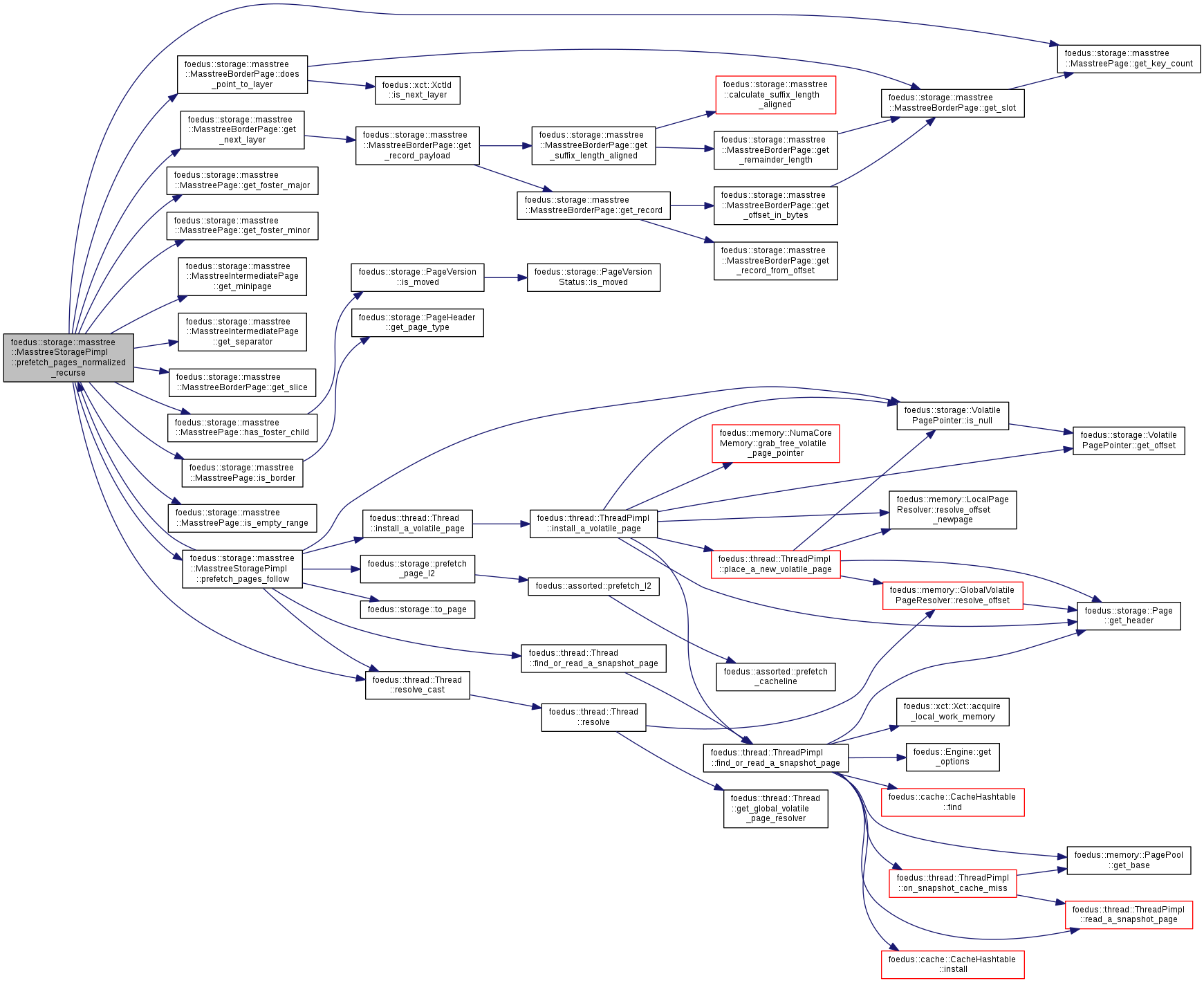

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_follow | ( | thread::Thread * | context, |

| DualPagePointer * | pointer, | ||

| bool | vol_on, | ||

| bool | snp_on, | ||

| KeySlice | from, | ||

| KeySlice | to | ||

| ) |

Definition at line 112 of file masstree_storage_prefetch.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, foedus::thread::Thread::find_or_read_a_snapshot_page(), foedus::thread::Thread::install_a_volatile_page(), foedus::storage::VolatilePagePointer::is_null(), foedus::kErrorCodeOk, foedus::storage::prefetch_page_l2(), prefetch_pages_normalized_recurse(), foedus::thread::Thread::resolve_cast(), foedus::storage::DualPagePointer::snapshot_pointer_, foedus::storage::to_page(), and foedus::storage::DualPagePointer::volatile_pointer_.

Referenced by prefetch_pages_normalized_recurse().

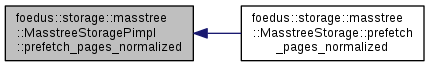

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized | ( | thread::Thread * | context, |

| bool | install_volatile, | ||

| bool | cache_snapshot, | ||

| KeySlice | from, | ||

| KeySlice | to | ||

| ) |

Defined in masstree_storage_prefetch.cpp.

Definition at line 30 of file masstree_storage_prefetch.cpp.

References CHECK_ERROR_CODE, foedus::debugging::StopWatch::elapsed_us(), get_first_root(), get_name(), foedus::thread::Thread::get_thread_id(), foedus::kErrorCodeOk, foedus::storage::prefetch_page_l2(), prefetch_pages_normalized_recurse(), and foedus::debugging::StopWatch::stop().

Referenced by foedus::storage::masstree::MasstreeStorage::prefetch_pages_normalized().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::prefetch_pages_normalized_recurse | ( | thread::Thread * | context, |

| bool | install_volatile, | ||

| bool | cache_snapshot, | ||

| KeySlice | from, | ||

| KeySlice | to, | ||

| MasstreePage * | page | ||

| ) |

Definition at line 51 of file masstree_storage_prefetch.cpp.

References CHECK_ERROR_CODE, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), foedus::storage::masstree::MasstreePage::get_foster_major(), foedus::storage::masstree::MasstreePage::get_foster_minor(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::storage::masstree::MasstreeIntermediatePage::get_separator(), foedus::storage::masstree::MasstreeBorderPage::get_slice(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_empty_range(), foedus::kErrorCodeOk, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::key_count_, foedus::storage::masstree::kInfimumSlice, foedus::storage::masstree::kSupremumSlice, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, prefetch_pages_follow(), foedus::thread::Thread::resolve_cast(), and foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::separators_.

Referenced by prefetch_pages_follow(), and prefetch_pages_normalized().

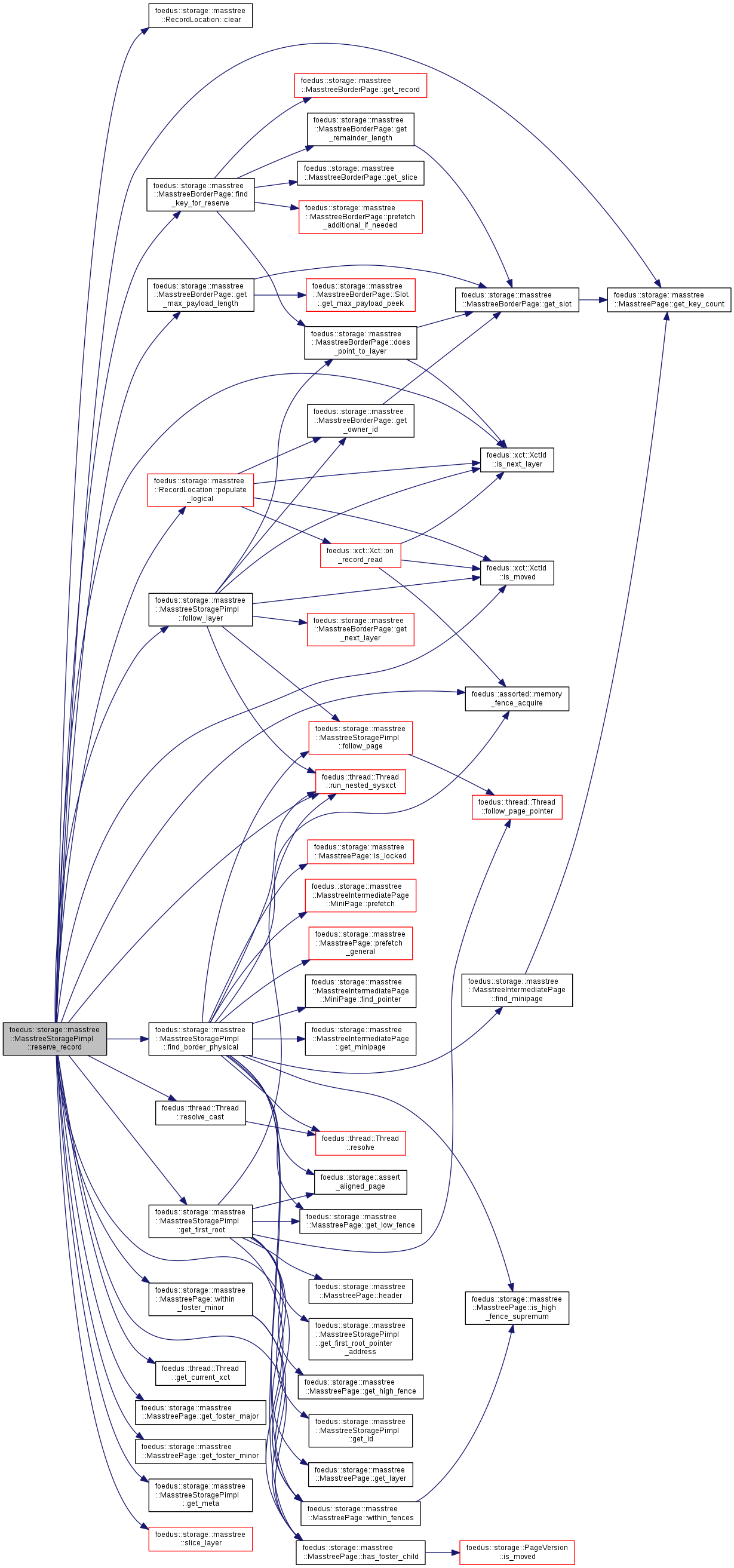
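The reference lists above show prefetch_pages_normalized_recurse() and prefetch_pages_follow() calling each other, with a `from`/`to` key range passed down at every step. The following is a minimal sketch of that mutual recursion on a toy tree, not the FOEDUS implementation. `ToyNode`, `toy_prefetch_follow`, and `toy_prefetch_recurse` are hypothetical names, the inclusive treatment of `to` is an assumption, and the "prefetch" is reduced to counting the nodes that would be touched.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical toy page: a leaf has no children; an inner node has
// separators.size() + 1 children.
struct ToyNode {
  std::vector<std::uint64_t> separators;  // ascending
  std::vector<ToyNode> children;
};

std::size_t toy_prefetch_recurse(const ToyNode& node, std::uint64_t low,
                                 std::uint64_t high, std::uint64_t from,
                                 std::uint64_t to);

// Follows a child only if its key range [low, high) overlaps the requested
// range [from, to] (inclusive 'to' is an assumption of this sketch).
std::size_t toy_prefetch_follow(const ToyNode& child, std::uint64_t low,
                                std::uint64_t high, std::uint64_t from,
                                std::uint64_t to) {
  if (high <= from || low > to) return 0;  // no overlap, skip the subtree
  return toy_prefetch_recurse(child, low, high, from, to);
}

// Touches this node, then descends into every overlapping child.
// Returns the number of nodes touched.
std::size_t toy_prefetch_recurse(const ToyNode& node, std::uint64_t low,
                                 std::uint64_t high, std::uint64_t from,
                                 std::uint64_t to) {
  std::size_t touched = 1;
  if (node.children.empty()) return touched;
  assert(node.children.size() == node.separators.size() + 1);
  for (std::size_t i = 0; i < node.children.size(); ++i) {
    const std::uint64_t child_low = (i == 0) ? low : node.separators[i - 1];
    const std::uint64_t child_high =
        (i == node.separators.size()) ? high : node.separators[i];
    touched += toy_prefetch_follow(node.children[i], child_low, child_high, from, to);
  }
  return touched;
}
```

Pruning by range at the pointer-following step keeps the traversal proportional to the requested range rather than to the whole tree.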

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::register_record_write_log | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| log::RecordLogType * | log_entry | ||

| ) |

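Shared helper used by the write methods delete_general(), increment_general(), insert_general(), overwrite_general(), and upsert_general().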

Definition at line 645 of file masstree_storage_pimpl.cpp.

References foedus::xct::Xct::add_related_write_set(), foedus::xct::Xct::add_to_write_set(), foedus::thread::Thread::get_current_xct(), get_id(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::storage::masstree::MasstreeBorderPage::get_record(), foedus::storage::masstree::RecordLocation::index_, foedus::storage::masstree::RecordLocation::page_, and foedus::storage::masstree::RecordLocation::readset_.

Referenced by delete_general(), increment_general(), insert_general(), overwrite_general(), and upsert_general().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::reserve_record | ( | thread::Thread * | context, |

| const void * | key, | ||

| KeyLength | key_length, | ||

| PayloadLength | payload_count, | ||

| PayloadLength | physical_payload_hint, | ||

| RecordLocation * | result | ||

| ) |

Like locate_record(), this is also a logical operation.

Definition at line 412 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::RecordLocation::clear(), find_border_physical(), foedus::storage::masstree::MasstreeBorderPage::find_key_for_reserve(), follow_layer(), foedus::thread::Thread::get_current_xct(), get_first_root(), foedus::storage::masstree::MasstreePage::get_foster_major(), foedus::storage::masstree::MasstreePage::get_foster_minor(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length(), get_meta(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::storage::masstree::MasstreeBorderPage::FindKeyForReserveResult::index_, foedus::xct::XctId::is_moved(), foedus::xct::XctId::is_next_layer(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::storage::masstree::MasstreeBorderPage::kExactMatchLayerPointer, foedus::storage::masstree::MasstreeBorderPage::kExactMatchLocalRecord, foedus::storage::masstree::kMaxKeyLength, foedus::storage::masstree::MasstreeBorderPage::kNotFound, foedus::storage::masstree::MasstreeBorderPage::FindKeyForReserveResult::match_type_, foedus::assorted::memory_fence_acquire(), foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::ReserveRecords::out_split_needed_, foedus::storage::masstree::RecordLocation::populate_logical(), foedus::thread::Thread::resolve_cast(), foedus::thread::Thread::run_nested_sysxct(), foedus::storage::masstree::slice_layer(), foedus::storage::masstree::MasstreePage::within_fences(), and foedus::storage::masstree::MasstreePage::within_foster_minor().

Referenced by foedus::storage::masstree::MasstreeStorage::insert_record(), and foedus::storage::masstree::MasstreeStorage::upsert_record().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::reserve_record_normalized | ( | thread::Thread * | context, |

| KeySlice | key, | ||

| PayloadLength | payload_count, | ||

| PayloadLength | physical_payload_hint, | ||

| RecordLocation * | result | ||

| ) |

Definition at line 515 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, CHECK_ERROR_CODE, foedus::storage::masstree::RecordLocation::clear(), find_border_physical(), foedus::storage::masstree::MasstreeBorderPage::find_key_normalized(), foedus::thread::Thread::get_current_xct(), get_first_root(), foedus::storage::masstree::MasstreePage::get_foster_major(), foedus::storage::masstree::MasstreePage::get_foster_minor(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length(), foedus::storage::masstree::MasstreePage::has_foster_child(), foedus::xct::XctId::is_moved(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::kErrorCodeOk, foedus::assorted::memory_fence_acquire(), foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::ReserveRecords::out_split_needed_, foedus::storage::masstree::RecordLocation::populate_logical(), foedus::thread::Thread::resolve_cast(), foedus::thread::Thread::run_nested_sysxct(), foedus::storage::masstree::MasstreePage::within_fences(), and foedus::storage::masstree::MasstreePage::within_foster_minor().

Referenced by foedus::storage::masstree::MasstreeStorage::insert_record_normalized(), and foedus::storage::masstree::MasstreeStorage::upsert_record_normalized().

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::retrieve_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| void * | payload, | ||

| PayloadLength * | payload_capacity | ||

| ) |

Implementation of the get_record family.

Use with locate_record().

Definition at line 599 of file masstree_storage_pimpl.cpp.

References CHECK_ERROR_CODE, check_next_layer_bit(), foedus::storage::masstree::MasstreeBorderPage::get_payload_length(), foedus::storage::masstree::MasstreeBorderPage::get_record_payload(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::kErrorCodeOk, foedus::kErrorCodeStrKeyNotFound, foedus::kErrorCodeStrTooSmallPayloadBuffer, foedus::storage::masstree::RecordLocation::observed_, and foedus::storage::masstree::RecordLocation::page_.

Referenced by foedus::storage::masstree::MasstreeStorage::get_record(), and foedus::storage::masstree::MasstreeStorage::get_record_normalized().
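Because `payload_capacity` is passed as a pointer, a plausible reading is that it acts as an in/out parameter: the caller passes the buffer size and receives the actual payload length back. The sketch below shows that convention in isolation. It is an assumption about the contract rather than a statement of FOEDUS behavior, and `ToyError` and `toy_retrieve` are hypothetical names. The too-small-buffer branch mirrors the kErrorCodeStrTooSmallPayloadBuffer entry in the reference list above.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical error codes for illustration only.
enum class ToyError { kOk, kTooSmallPayloadBuffer };

// Copies the whole record into 'payload'.
// In:  *payload_capacity is the size of the caller's buffer.
// Out: *payload_capacity is the record's actual length, even on failure,
//      so the caller can retry with a large enough buffer.
ToyError toy_retrieve(const std::vector<char>& record, void* payload,
                      std::uint16_t* payload_capacity) {
  assert(payload != nullptr && payload_capacity != nullptr);
  const std::uint16_t capacity = *payload_capacity;
  const std::uint16_t length = static_cast<std::uint16_t>(record.size());
  *payload_capacity = length;
  if (capacity < length) return ToyError::kTooSmallPayloadBuffer;
  if (length > 0) std::memcpy(payload, record.data(), length);
  return ToyError::kOk;
}
```

Reporting the required length on failure saves the caller a separate size query before retrying.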

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::retrieve_part_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| void * | payload, | ||

| PayloadLength | payload_offset, | ||

| PayloadLength | payload_count | ||

| ) |

Definition at line 625 of file masstree_storage_pimpl.cpp.

References CHECK_ERROR_CODE, check_next_layer_bit(), foedus::storage::masstree::MasstreeBorderPage::get_payload_length(), foedus::storage::masstree::MasstreeBorderPage::get_record_payload(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::kErrorCodeOk, foedus::kErrorCodeStrKeyNotFound, foedus::kErrorCodeStrTooShortPayload, foedus::storage::masstree::RecordLocation::observed_, and foedus::storage::masstree::RecordLocation::page_.

Referenced by foedus::storage::masstree::MasstreeStorage::get_record_part(), foedus::storage::masstree::MasstreeStorage::get_record_part_normalized(), foedus::storage::masstree::MasstreeStorage::get_record_primitive(), and foedus::storage::masstree::MasstreeStorage::get_record_primitive_normalized().

| foedus::storage::masstree::MasstreeStoragePimpl::track_moved_record | inline |

Definition at line 848 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, foedus::Attachable< MasstreeStorageControlBlock >::engine_, foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::xct::RwLockableXctId::is_next_layer(), foedus::storage::to_page(), and foedus::storage::masstree::MasstreeBorderPage::track_moved_record().

Referenced by foedus::storage::masstree::MasstreeStorage::track_moved_record().

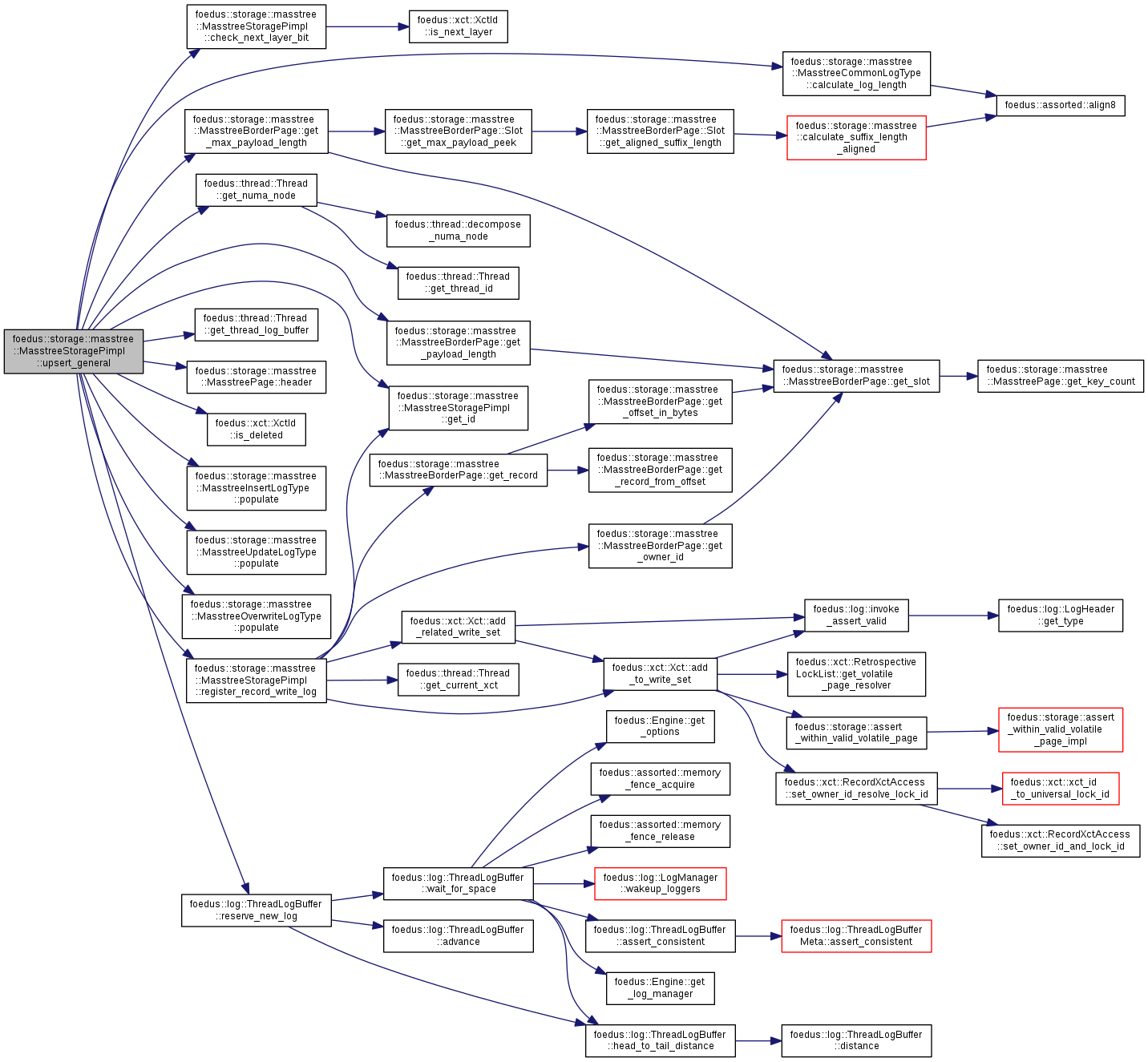

| ErrorCode foedus::storage::masstree::MasstreeStoragePimpl::upsert_general | ( | thread::Thread * | context, |

| const RecordLocation & | location, | ||

| const void * | be_key, | ||

| KeyLength | key_length, | ||

| const void * | payload, | ||

| PayloadLength | payload_count | ||

| ) |

Implementation of the upsert_record family.

Use with reserve_record().

Definition at line 711 of file masstree_storage_pimpl.cpp.

References ASSERT_ND, foedus::storage::masstree::MasstreeCommonLogType::calculate_log_length(), CHECK_ERROR_CODE, check_next_layer_bit(), get_id(), foedus::storage::masstree::MasstreeBorderPage::get_max_payload_length(), foedus::thread::Thread::get_numa_node(), foedus::storage::masstree::MasstreeBorderPage::get_payload_length(), foedus::thread::Thread::get_thread_log_buffer(), foedus::storage::masstree::MasstreePage::header(), foedus::storage::masstree::RecordLocation::index_, foedus::xct::XctId::is_deleted(), foedus::storage::masstree::RecordLocation::observed_, foedus::storage::masstree::RecordLocation::page_, foedus::storage::masstree::MasstreeInsertLogType::populate(), foedus::storage::masstree::MasstreeUpdateLogType::populate(), foedus::storage::masstree::MasstreeOverwriteLogType::populate(), register_record_write_log(), foedus::log::ThreadLogBuffer::reserve_new_log(), and foedus::storage::PageHeader::stat_last_updater_node_.

Referenced by foedus::storage::masstree::MasstreeStorage::upsert_record(), and foedus::storage::masstree::MasstreeStorage::upsert_record_normalized().

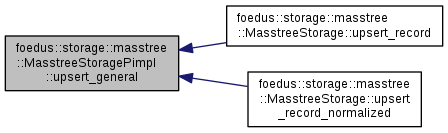

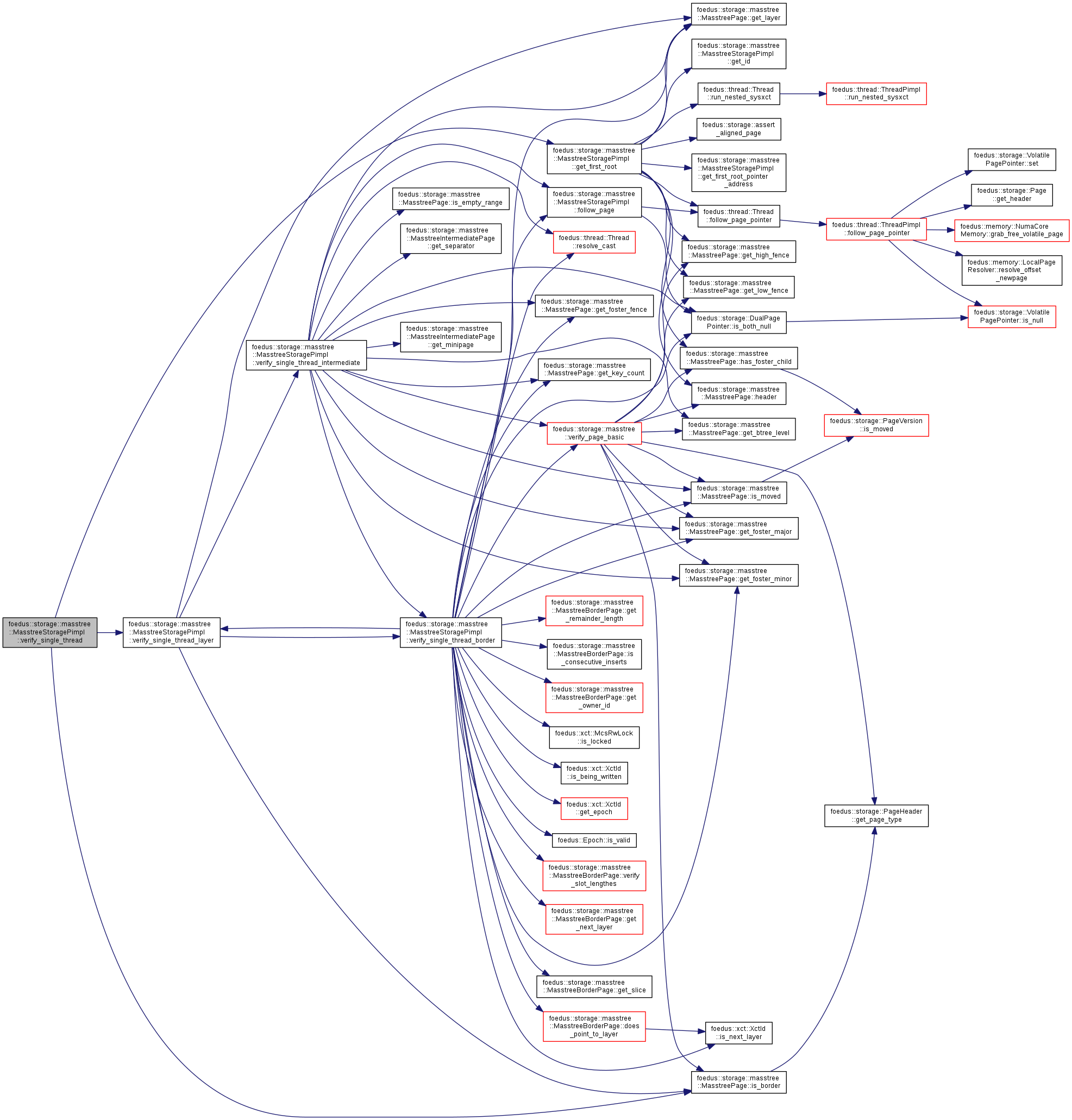

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread | ( | thread::Thread * | context | ) |

These are defined in masstree_storage_verify.cpp.

Definition at line 39 of file masstree_storage_verify.cpp.

References CHECK_AND_ASSERT, CHECK_ERROR, get_first_root(), foedus::storage::masstree::MasstreePage::is_border(), foedus::kRetOk, verify_single_thread_layer(), and WRAP_ERROR_CODE.

Referenced by foedus::storage::masstree::MasstreeStorage::verify_single_thread().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_border | ( | thread::Thread * | context, |

| KeySlice | low_fence, | ||

| HighFence | high_fence, | ||

| MasstreeBorderPage * | page | ||

| ) |

Definition at line 201 of file masstree_storage_verify.cpp.

References CHECK_AND_ASSERT, CHECK_ERROR, foedus::storage::masstree::MasstreeBorderPage::does_point_to_layer(), follow_page(), foedus::xct::XctId::get_epoch(), foedus::storage::masstree::MasstreePage::get_foster_fence(), foedus::storage::masstree::MasstreePage::get_foster_major(), foedus::storage::masstree::MasstreePage::get_foster_minor(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreeBorderPage::get_next_layer(), foedus::storage::masstree::MasstreeBorderPage::get_owner_id(), foedus::storage::masstree::MasstreeBorderPage::get_remainder_length(), foedus::storage::masstree::MasstreeBorderPage::get_slice(), foedus::xct::XctId::is_being_written(), foedus::storage::DualPagePointer::is_both_null(), foedus::storage::masstree::MasstreeBorderPage::is_consecutive_inserts(), foedus::xct::McsRwLock::is_locked(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::xct::XctId::is_next_layer(), foedus::Epoch::is_valid(), foedus::storage::masstree::kBorderPageMaxSlots, foedus::storage::kMasstreeBorderPageType, foedus::kRetOk, foedus::storage::masstree::kSupremumSlice, foedus::xct::RwLockableXctId::lock_, foedus::thread::Thread::resolve_cast(), foedus::storage::masstree::HighFence::supremum_, foedus::storage::masstree::verify_page_basic(), verify_single_thread_layer(), foedus::storage::masstree::MasstreeBorderPage::verify_slot_lengthes(), WRAP_ERROR_CODE, and foedus::xct::RwLockableXctId::xct_id_.

Referenced by verify_single_thread_intermediate(), and verify_single_thread_layer().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_intermediate | ( | thread::Thread * | context, |

| KeySlice | low_fence, | ||

| HighFence | high_fence, | ||

| MasstreeIntermediatePage * | page | ||

| ) |

Definition at line 103 of file masstree_storage_verify.cpp.

References CHECK_AND_ASSERT, CHECK_ERROR, follow_page(), foedus::storage::masstree::MasstreePage::get_btree_level(), foedus::storage::masstree::MasstreePage::get_foster_fence(), foedus::storage::masstree::MasstreePage::get_foster_major(), foedus::storage::masstree::MasstreePage::get_foster_minor(), foedus::storage::masstree::MasstreePage::get_key_count(), foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreeIntermediatePage::get_minipage(), foedus::storage::masstree::MasstreeIntermediatePage::get_separator(), foedus::storage::DualPagePointer::is_both_null(), foedus::storage::masstree::MasstreePage::is_empty_range(), foedus::storage::masstree::MasstreePage::is_moved(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::key_count_, foedus::storage::kMasstreeIntermediatePageType, foedus::storage::masstree::kMaxIntermediateMiniSeparators, foedus::storage::masstree::kMaxIntermediateSeparators, foedus::kRetOk, foedus::storage::masstree::kSupremumSlice, foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::pointers_, foedus::thread::Thread::resolve_cast(), foedus::storage::masstree::MasstreeIntermediatePage::MiniPage::separators_, foedus::storage::masstree::HighFence::slice_, foedus::storage::masstree::HighFence::supremum_, foedus::storage::masstree::verify_page_basic(), verify_single_thread_border(), and WRAP_ERROR_CODE.

Referenced by verify_single_thread_layer().

| ErrorStack foedus::storage::masstree::MasstreeStoragePimpl::verify_single_thread_layer | ( | thread::Thread * | context, |

| uint8_t | layer, | ||

| MasstreePage * | layer_root | ||

| ) |

Definition at line 47 of file masstree_storage_verify.cpp.

References CHECK_AND_ASSERT, CHECK_ERROR, foedus::storage::masstree::MasstreePage::get_layer(), foedus::storage::masstree::MasstreePage::is_border(), foedus::storage::masstree::kInfimumSlice, foedus::kRetOk, foedus::storage::masstree::kSupremumSlice, verify_single_thread_border(), and verify_single_thread_intermediate().

Referenced by verify_single_thread(), and verify_single_thread_border().
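The verify_single_thread_* methods form a recursive descent: the layer entry point starts from the widest fence range (kInfimumSlice to kSupremumSlice in the reference list) and dispatches to a border or intermediate check, each of which receives a `low_fence` and a `high_fence`. The sketch below shows the general idea of fence-based verification on a toy tree. It is an illustration under simplifying assumptions, not the FOEDUS checks: `ToyHighFence`, `ToyPage`, `toy_below`, and `toy_verify` are hypothetical names, and foster children, layers, and lock state are ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical high fence: either a concrete slice or "supremum" (no upper bound).
struct ToyHighFence {
  std::uint64_t slice;
  bool supremum;
};

// Hypothetical toy page. For a border page 'keys' are record slices;
// for an intermediate page they are separators with keys.size() + 1 children.
struct ToyPage {
  bool border;
  std::vector<std::uint64_t> keys;
  std::vector<ToyPage> children;
};

bool toy_below(std::uint64_t slice, ToyHighFence high) {
  return high.supremum || slice < high.slice;
}

// Returns true if every key lies in [low, high), keys are strictly ascending,
// and every child satisfies the same property for its narrowed fence range.
bool toy_verify(const ToyPage& page, std::uint64_t low, ToyHighFence high) {
  for (std::size_t i = 0; i < page.keys.size(); ++i) {
    if (page.keys[i] < low || !toy_below(page.keys[i], high)) return false;
    if (i > 0 && page.keys[i - 1] >= page.keys[i]) return false;
  }
  if (page.border) return page.children.empty();
  if (page.children.size() != page.keys.size() + 1) return false;
  for (std::size_t i = 0; i < page.children.size(); ++i) {
    // Each child inherits a fence range bounded by the adjacent separators.
    const std::uint64_t child_low = (i == 0) ? low : page.keys[i - 1];
    const ToyHighFence child_high =
        (i == page.keys.size()) ? high : ToyHighFence{page.keys[i], false};
    if (!toy_verify(page.children[i], child_low, child_high)) return false;
  }
  return true;
}
```

Passing the fences down as arguments means each page is checked against the range its parent promised, so a misplaced key is caught at the page that holds it.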